IBM Platform Cluster Manager - Advanced Edition (PCM-AE) for technical cloud computing

This chapter describes aspects of PCM-AE related to technical cloud computing.

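This chapter includes the following sections:

•Overview

•Platform Cluster Manager - Advanced Edition capabilities and benefits

•Architecture and components

•PCM-AE managed clouds support

•PCM-AE: a cloud-oriented perspective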

5.1 Overview

A characteristic of a cloud environment is that it allows users to serve themselves when it comes to resource provisioning. You can have, for example, an IBM SmartCloud Entry environment that manages a set of IBM Power or x86 servers so that users can create their own environments (logical partitions or virtual machines) to run their workloads. Clouds automate day-to-day tasks such as system deployment, leaving IT specialists time for more challenging work such as designing solutions to business problems in the company.

If resource provisioning can be done at the level of a single system, how about automating whole cluster deployments? Moreover, how about automating provisioning tasks for highly demanding and specialized cluster environments such as high performance computing (HPC)? How much time can you save your IT administration team by having users trigger the deployment of HPC clusters dynamically? You can do all of this by implementing IBM Platform Cluster Manager - Advanced Edition (PCM-AE).

Deployment automation and a self-service consumption model are just a small part of the advantages that PCM-AE can provide. For more information about all of the PCM-AE capabilities and the benefits you get from them, see 5.2, “Platform Cluster Manager - Advanced Edition capabilities and benefits”.

IBM Platform Cluster Manager - Advanced Edition is a product that manages the provisioning of complex HPC clusters and cluster resources in a self-service and flexible fashion.

This chapter is not intended to be a comprehensive description of PCM-AE. Rather, it focuses on PCM-AE aspects that are of interest for technical computing cloud environments. For information about how to implement a cloud environment managed with PCM-AE, see IBM Platform Computing Solutions, SG24-8073. For a detailed administration guide, see Platform Cluster Manager Advanced Edition Administering¹, SC27-4760-01.

5.2 Platform Cluster Manager - Advanced Edition capabilities and benefits

PCM-AE can greatly simplify the IT management of complex HPC clusters and be the foundation of building HPC environments as a service. The following are the capabilities and benefits of PCM-AE:

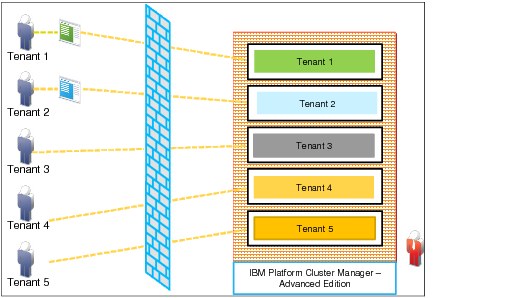

•Manage multi-tenancy HPC environments

PCM-AE can create and manage isolated HPC environments running on your server farm. You can use it to deploy multiple HPC workloads, each running a particular application and used by a different group of users as shown in Figure 5-1. Each environment can be accounted for individually in terms of resource usage limits and resource usage reporting.

Figure 5-1 Multi-tenancy characteristics of IBM PCM-AE

•Support for multiple HPC products

PCM-AE can help manage IBM HPC products such as IBM Platform Symphony, IBM Platform LSF, and IBM InfoSphere BigInsights to name a few. PCM-AE also supports third-party products such as Grid Engine, PBS Pro, and Hadoop. These characteristics allow you to consolidate multiple workload types under the same hardware infrastructure as depicted in Figure 5-2.

Figure 5-2 Consolidating multiple HPC clusters under a PCM-AE managed environment

•On-demand and self-service provisioning

Users can dynamically create clusters within PCM-AE based on cluster definitions that are published by PCM-AE cloud administrators. This process requires no deep understanding of the cluster setup process. Also, there is little or no need for paperwork and approval flows to deploy a cluster because resource usage limits and policies are defined on a per-tenant basis. Users can help themselves freely according to the rules and limits established for them. This helps reduce operational costs.

•Use of physical and virtual resources

You can choose whether to deploy an HPC cluster with underlying physical servers composing your cluster infrastructure, or you can provision on top of a virtualized layer, or use a mixed approach. Some larger servers with generous amounts of processor and memory can be good candidates for hosting clusters in a virtual fashion with the use of virtual machines. You can maximize the consolidation level of your infrastructure as a whole with virtualization, or you can truly isolate workloads by engaging only physical servers. Either way, PCM-AE can serve your goals.

•Increased server consolidation

Having multiple technical computing cluster infrastructures that are isolated as silos might result in a considerable amount of idle capacity when looking at overall resources. With PCM-AE, these silos’ infrastructure can be managed in a centralized, integrated way that allows you to use these little islands of capacity that alone would not be sufficient for deploying a new cluster.

•Fast and automated provisioning

With a self-service based approach and predefined cluster definitions, all of the provisioning process can be automated. This reduces the deployment time from hours (or days) to minutes.

•Cluster scaling

As workloads increase or decrease, you can dynamically adjust the amount of resources that are assigned to your cluster, as depicted in Figure 5-3. This feature is commonly called cluster flexing, and can happen automatically based on utilization thresholds that you define for a particular cluster. This allows you to optimize your server utilization. For more information, see 5.5.3, “Cluster flexing”. Temporary cluster deployments and recurring cluster deployments that are based on scheduled workload processing are also supported.

Figure 5-3 Dynamic resizing of clusters

•Shared HPC cloud services

PCM-AE can use other HPC cloud infrastructures to handle peak demands. If you face unpredictably high resource utilization in your PCM-AE cloud environment and need to extend it, you can use the IBM SmartCloud public cloud infrastructure to add extra resources to your environment to meet your peak demand.

In addition, during low utilization periods, you can lease excess capacity to other entities. This is a good use case for universities that sparsely consume their HPC cloud resources, and is depicted in Figure 5-4. You can even have a completely isolated PCM-AE environment to serve external consumers.

Figure 5-4 Leasing excess capacity with PCM-AE

5.3 Architecture and components

PCM-AE has its own internal software component architecture, and it uses other software components to create a manageable cloud infrastructure environment. Figure 5-5 depicts the hardware and software components of a PCM-AE environment. They can be classified into three distinct groups: hardware, PCM-AE external software components, and PCM-AE internal software components.

Figure 5-5 PCM-AE software components architecture

This section describes the architecture of Figure 5-5 in a bottom-up approach.

5.3.1 Hardware

The components with a blue background are the hardware: Servers, switches, and storage units. As explained in 5.2, “Platform Cluster Manager - Advanced Edition capabilities and benefits”, servers can be used as physical boxes or can be further virtualized by software at upper layers.

5.3.2 External software components

The components in orange are PCM-AE external components that can be used with PCM-AE to provide resource provisioning. They manage machine or virtual machine allocation, network definition, and storage area definition. As Figure 5-5 illustrates, you can use xCAT to perform bare metal provisioning of servers with Linux along with dynamic VLAN configuration. Also, KVM is an option as a hypervisor host managed by PCM-AE.

Another external software component, IBM General Parallel File System (GPFS), can be used to manage storage area network disks that can be used, for example, to host virtual machines that are created within the environment. GPFS plays an important role when it comes to Technical Computing clouds because of its capabilities as explained in 6.1, “Overview”. Parallel access to data with good performance makes GPFS a good file system for many HPC applications. Also, the ability of GPFS to access remote network shared disks by using the NSD protocol provides flexibility in the management of the cloud.

PCM-AE can also manage and provision clouds using the Unified Fabric Manager platform with InfiniBand. PCM-AE can be used to provide a private network among the servers while maintaining multi-tenant cluster isolation by using VLANs.

PCM-AE is a platform that supports the integration of other provisioning software, including any custom adapters that you might already have or need in your existing environment.

5.3.3 Internal software components

The components in green in Figure 5-5 are PCM-AE’s internal software components.

Besides the resource integrations layer that allows PCM-AE to use external software components, PCM-AE includes these software components:

•Software to control the multi-tenancy characteristic of a PCM-AE based cloud (user accounts)

•A service level agreement component that defines the rules for dynamic cluster growth or shrinking

•Allocation engine software that manages resource plans, prioritization and how the hardware pieces are interconnected

•Accounting and reporting software to provide feedback on cluster utilization and resources consumed by tenants

•Software that handles operational management of the clouds (cluster support and operational management). This allows you to define, deploy and modify clusters, and also to visualize existing clusters and the servers within them.

5.4 PCM-AE managed clouds support

PCM-AE supports many computing and analytics workloads. If the cloud hardware and the provisioned cluster operating system can handle the workload requirements, you can have your workload managed by PCM-AE.

Table 5-1 shows a list of supported hardware and operating systems for cluster deployment in a PCM-AE managed cloud.

Table 5-1 PCM-AE hardware and software support, and environment provisioning support

| Infrastructure hardware and software support | |
| --- | --- |
| Hardware support | IBM System x iDataPlex; IBM Intelligent Cluster™; Other rack-based servers; Non-IBM x86-64 servers |
| Supported provisioning software for bare-metal servers | xCAT 2.7.6; IBM support for xCAT V2 (suggested) |
| Supported provisioning software for virtual servers | KVM on RHEL 6.3 (x86 64-bit); IBM SmartCloud Provisioning 2.1; vSphere 5.0 with ESXi 5.0 |
| Network infrastructure support | IBM RackSwitch G8000, G8124, G8264; Mellanox InfiniBand Switch System IS5030, SX6036, SX6512; Cisco Catalyst 2960 and 3750 switches |
| PCM-AE Master node OS and software requirements | RHEL 6.3 (x86 64-bit); MySQL, stand-alone 5.1.64 or Oracle 11g Release 2 or Oracle 11g XE |

| Environment provisioning support | |
| --- | --- |
| Bare-metal-provisioned systems (by xCAT) | RHEL 6.3 (x86 64-bit); KVM on RHEL 6.3 (x86 64-bit); CentOS 5.8 (x86 64-bit) |
| Virtually provisioned systems (by KVM, SmartCloud, vSphere) | RHEL 6.3 (x86 64-bit); Microsoft Windows 2008 (64-bit) |
| Provisioned storage clients | IBM GPFS V3.5 client node |

PCM-AE supports most of today’s workload managers, including:

•IBM Platform LSF 8.3, or later

•IBM Platform Application Center 8.3

•IBM Platform Symphony 5.2, or later

•IBM InfoSphere BigInsights 1.4, or later

5.5 PCM-AE: a cloud-oriented perspective

This section provides an overview of some of PCM-AE’s common operations such as cluster definition, cluster deployment, cluster flexing, and cluster metrics. This section is intended to present and demonstrate the product from a practical user point of view. It is not a comprehensive user administration guide.

5.5.1 Cluster definition

PCM-AE cluster provisioning is based on a self-service approach. This means that a big data consumer can create a cluster and start using an analytics application for report generation. This consumer knows how to operate the analytics application, but might not know how to build the cluster infrastructure that the application requires.

To address this challenge and ensure that tenants can allocate clusters in a self-service manner without infrastructure knowledge, PCM-AE isolates the complexity of cluster internals from the tenants. So, as a tenant, you can create only clusters for which there is a published cluster definition inside your PCM-AE environment. If the environment has a published IBM InfoSphere BigInsights cluster definition, you can create a BigInsights cluster for your use and access BigInsights directly. If you need to run an ANSYS workload but your PCM-AE environment has no definition for this type of workload, the PCM-AE administrator must first create a cluster definition before PCM-AE can provision it for you.

A cluster definition is an information set that establishes these settings:

•Which types of nodes compose the cluster (master, compute, custom nodes)

•Which operating system is to be installed on each type of node

•Minimum and maximum amounts of resources allowed (processor and memory, number of nodes)

•How to assign IP addresses

•Pre-installation and post-installation scripts

•Custom user variables to guide the scripts (for instance, where to get the applications from for installation, or in which directory to install them)

•Storage placement

•Other information that is pertinent to the provisioning of the cluster itself.
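To make the list above more concrete, the following is a minimal Python sketch of the kind of information a cluster definition carries. It is not PCM-AE's actual definition format: the class names, field names, script names, and the `INSTALL_DIR` variable are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class NodeType:
    """One kind of node in the cluster (hypothetical field names)."""
    name: str                 # for example "master" or "compute"
    operating_system: str     # operating system installed on this node type
    min_nodes: int            # fewest nodes of this type allowed
    max_nodes: int            # most nodes of this type allowed
    post_install_scripts: List[str] = field(default_factory=list)


@dataclass
class ClusterDefinition:
    """Illustrative stand-in for a published cluster definition."""
    name: str
    node_types: List[NodeType]
    ip_assignment: str = "dhcp"  # assumed option name
    user_variables: Dict[str, str] = field(default_factory=dict)

    def node_limits(self) -> Tuple[int, int]:
        """Smallest and largest total node count the definition allows."""
        low = sum(t.min_nodes for t in self.node_types)
        high = sum(t.max_nodes for t in self.node_types)
        return low, high


definition = ClusterDefinition(
    name="BigInsights with Symphony",
    node_types=[
        NodeType("master", "RHEL 6.2", 1, 1, ["master_setup.sh"]),
        NodeType("compute", "RHEL 6.2", 1, 3, ["compute_setup.sh"]),
    ],
    user_variables={"INSTALL_DIR": "/opt/ibm"},
)
print(definition.node_limits())
```

Keeping the limits on each node type, rather than on the cluster as a whole, mirrors the idea that a definition can fix the number of master nodes while letting the number of compute nodes vary.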

Figure 5-6 shows a definition for an IBM InfoSphere BigInsights 2.1 with a Platform Symphony 6.1 cluster.

Figure 5-6 Definition for an IBM BigInsights 2.1 and Platform Symphony 6.1 cluster

Figure 5-6 shows that this cluster definition is composed of two node types: Master and compute nodes. A BigInsights cluster has one master node and can have multiple compute nodes for processing. Therefore, the administrator creates a cluster definition with both of these node types. As you can also see in Figure 5-6, the operating system to be used on the master node (its selection is highlighted on the picture) is Red Hat Enterprise Linux 6.2.

In this particular cluster definition, the administrator used post-installation scripts to install IBM InfoSphere BigInsights (layer 2, master setup and compute setup steps). After its installation was completed on both nodes and synchronized (SignalComplete and WaitForSignal, which are custom scripts based on sleep-verify checks), the installation of Platform Symphony took place (SymphonyMaster and SymphonyCompute scripts). Finally, the integration scripts were called.
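The sleep-verify synchronization mentioned above can be sketched as follows. This is not the SignalComplete or WaitForSignal script from the example; it is a hypothetical Python equivalent that assumes the two sides communicate through a flag file (which, across nodes, would have to live on storage that both nodes can see).

```python
import os
import tempfile
import time


def signal_complete(flag_path: str) -> None:
    """Mark a step as finished by creating an empty flag file."""
    with open(flag_path, "w"):
        pass


def wait_for_signal(flag_path: str, interval: float = 1.0,
                    timeout: float = 60.0) -> bool:
    """Verify, then sleep, until the flag file exists or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(flag_path):
            return True
        time.sleep(interval)
    # One last check so a flag written during the final sleep is not missed.
    return os.path.exists(flag_path)


# Hypothetical flag name; a temporary directory stands in for shared storage.
flag = os.path.join(tempfile.mkdtemp(), "biginsights_master.done")
print(wait_for_signal(flag, interval=0.01, timeout=0.05))  # flag not written yet
signal_complete(flag)
print(wait_for_signal(flag, interval=0.01, timeout=0.05))  # flag now present
```

A timeout is included so that a failed installation on one node does not leave the other node waiting forever.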

Figure 5-7 shows the custom script that was used for installing BigInsights on the master node in the cluster definition example.

Figure 5-7 Example of a custom script in a cluster definition inside PCM-AE

PCM-AE cluster definitions give you a flexible way to define a thorough provisioning of your cluster. If your cluster software installation and software setup can be scripted, you can probably manage its deployment with PCM-AE.

5.5.2 Cluster deployment

After a cluster definition is available, users can serve themselves by instantiating a cluster based on it. The process is as simple as going through a guided wizard, selecting the number of compute nodes (or other custom node types depending on the cluster definition), and choosing how much memory and processor to assign to the cluster.

Figure 5-8 shows the initial cluster provisioning steps in PCM-AE.

Figure 5-8 Creating a cluster inside PCM-AE

The numbers in Figure 5-8 denote the sequence of actions to provision a cluster with PCM-AE:

1. Go to the Clusters view in PCM-AE’s navigation area.

2. Select the Cockpit area to visualize the active clusters.

3. Click New to start the cluster creation wizard.

4. Select a cluster definition for your cluster. In this example, create a streams cluster from the definition on the list of published cluster definitions.

5. Click Instantiate to go to the next and last step of the creation wizard.

After you complete these steps, select the amount of resources for your cluster as shown in Figure 5-9.

Figure 5-9 Resource assignment in cluster creation wizard

6. Edit the cluster properties as needed such as the cluster name and description, and select which definition version to use².

7. Define the cluster lifetime. You can provision the cluster for a limited amount of time (start - end), make it a recurring provisioning (suits workloads that run at specific times on a repeating schedule), or make it a lifetime cluster (no expiration).

8. Select the resources to assign to your new cluster. In this streams cluster, the cluster definition allows you to select the number of nodes, and the amount of processor and memory per node.

9. Click Create, and the wizard starts provisioning the new cluster.

This process is straightforward and does not require any specific knowledge of cluster infrastructure.
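The choices collected by the wizard can be pictured as a small record. The sketch below is a hypothetical Python model, not a PCM-AE interface: the `ClusterRequest` class and its fields are assumptions, and only the limited-time and no-expiration lifetimes are modeled (recurring provisioning is left out for brevity).

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class ClusterRequest:
    """Hypothetical record of the choices made in the creation wizard."""
    name: str
    definition: str
    nodes: int
    cpus_per_node: int
    memory_gb_per_node: int
    start: Optional[datetime] = None  # None: available immediately
    end: Optional[datetime] = None    # None: no expiration

    def is_active(self, now: datetime) -> bool:
        """True when 'now' falls inside the requested lifetime."""
        if self.start is not None and now < self.start:
            return False
        if self.end is not None and now >= self.end:
            return False
        return True


request = ClusterRequest(
    name="streams-test", definition="Streams", nodes=2,
    cpus_per_node=4, memory_gb_per_node=8,
    start=datetime(2013, 6, 1), end=datetime(2013, 6, 8),
)
print(request.is_active(datetime(2013, 6, 3)))  # inside the window
print(request.is_active(datetime(2013, 6, 9)))  # after the end date
```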

5.5.3 Cluster flexing

After a cluster is up and running, it might not always be at its peak utilization. Computations have their minimums, averages, and maximums. PCM-AE provides cluster flexing to make better use of the cloud infrastructure for technical computing.

Clusters can be flexed up (node addition) or down (node removal) as long as the resulting number of nodes stays within the minimum and maximum node numbers in the cluster definition used with your particular cluster.

There are two ways to perform cluster flexing: Manually or automatically. A manual cluster flexing is done through PCM-AE’s Clusters → Cockpit view in the main navigation area by selecting Modify → Add or Remove Machines as depicted in Figure 5-10.

Figure 5-10 IBM PCM-AE main navigation window

Then, change the number of on-demand nodes as shown in Figure 5-11.

Figure 5-11 Flexing of an active cluster in PCM-AE

The cluster definition used in this example imposes a minimum of one node and a maximum of four nodes in the cluster. Therefore, you can set the number of “on-demand” nodes to any value from 0 to 3.
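The arithmetic behind that range is simple: assuming the minimum number of nodes is always present, the on-demand nodes are whatever can be added on top of it without exceeding the maximum. The helper below is a hypothetical illustration of that calculation, not part of PCM-AE.

```python
def on_demand_range(min_nodes: int, max_nodes: int) -> range:
    """On-demand node counts allowed when the minimum nodes are always present."""
    if min_nodes < 0 or max_nodes < min_nodes:
        raise ValueError("expected 0 <= min_nodes <= max_nodes")
    return range(0, max_nodes - min_nodes + 1)


allowed = on_demand_range(min_nodes=1, max_nodes=4)
print(list(allowed))  # [0, 1, 2, 3]
```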

It is also possible to configure PCM-AE to flex the cluster based on thresholds that you define. A cluster policy must be in place for that to happen. Policies can be predefined in cluster definitions, or can be created later.

Figure 5-12 shows a streams cluster definition with flexing threshold policies. In this case, the cluster is flexed up by one node (action parameters) if average processor utilization reaches 95%, or is flexed down by one node if average processor utilization gets below 15%. It is possible to send notification emails when cluster auto-flexing occurs.

Figure 5-12 Flexing threshold policy definition in cluster definition
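The threshold policy described above can be expressed as a small decision function. This is a sketch of the logic only, under the assumption that the policy is evaluated against a single average processor utilization value; the function name and parameters are hypothetical and do not reflect how PCM-AE implements policies internally.

```python
def flex_decision(avg_cpu_percent: float, current_nodes: int,
                  min_nodes: int, max_nodes: int,
                  up_threshold: float = 95.0, down_threshold: float = 15.0,
                  step: int = 1) -> int:
    """Return the node count after applying the threshold policy once."""
    if avg_cpu_percent >= up_threshold:
        # Flex up, but never beyond the maximum in the cluster definition.
        return min(current_nodes + step, max_nodes)
    if avg_cpu_percent < down_threshold:
        # Flex down, but never below the minimum in the cluster definition.
        return max(current_nodes - step, min_nodes)
    return current_nodes


print(flex_decision(97.0, current_nodes=1, min_nodes=1, max_nodes=4))  # 2
print(flex_decision(10.0, current_nodes=2, min_nodes=1, max_nodes=4))  # 1
print(flex_decision(50.0, current_nodes=2, min_nodes=1, max_nodes=4))  # 2
```

Clamping the result to the definition's limits keeps automatic flexing consistent with the rule that applies to manual flexing.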

Figure 5-13 shows the graphs of a simple one-node cluster that is deployed with the cluster definition from Figure 5-12. After the cluster is created, the processor workload is increased on the node. When utilization reaches the 95% threshold, the cluster flexes up. Notice that the second node is counted in the statistics from the beginning of the flex-up process, but is available for processing (and thus appears as ready) only after its whole deployment process is complete. At the end, the load is removed and the cluster flexes itself back down to one node. The flex down happens much more quickly, as you can verify in Figure 5-13.

Figure 5-13 Example of automatic cluster flexing

5.5.4 Users and accounts

To manage a self-service cloud environment for Platform Computing, HPC, and analytics clusters, PCM-AE offers mechanisms to control user access and define accounts.

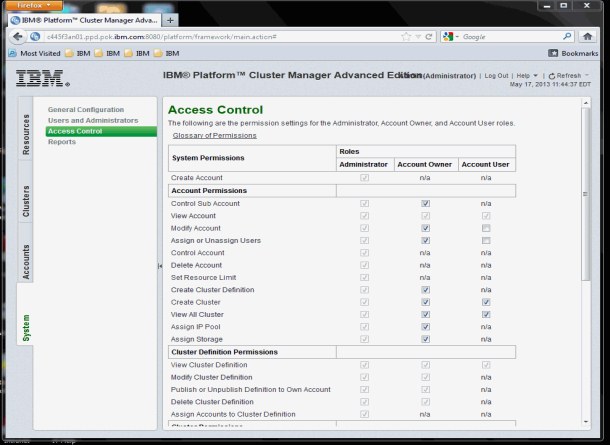

Users

A user in PCM-AE is the entity that represents a person who logs in to the environment. The user holds information for user authentication and other ordinary data such as email, phone number, department name, location, first and surnames, and business unit. The operations that a user can perform in a PCM-AE environment are based on its classification. Users can be classified as administrators, account owners, or account users. Each user type is granted permission to perform a set of operations. These permissions can be customized.
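The relationship between user types and customizable permissions can be sketched as a lookup table. The permission names and their assignment to user types below are hypothetical; the real permission list is the one shown in PCM-AE's access control view and is not reproduced here.

```python
from copy import deepcopy

# Hypothetical permission names and assignments, for illustration only.
DEFAULT_PERMISSIONS = {
    "administrator": {"publish_definition", "create_cluster",
                      "flex_cluster", "manage_accounts"},
    "account_owner": {"create_cluster", "flex_cluster", "manage_account_users"},
    "account_user": {"create_cluster", "flex_cluster"},
}


def is_allowed(permissions: dict, user_type: str, operation: str) -> bool:
    """Check whether a user type is granted an operation."""
    return operation in permissions.get(user_type, set())


# Customizing: start from the defaults and restrict one user type.
custom = deepcopy(DEFAULT_PERMISSIONS)
custom["account_user"].discard("flex_cluster")

print(is_allowed(DEFAULT_PERMISSIONS, "account_user", "flex_cluster"))
print(is_allowed(custom, "account_user", "flex_cluster"))
```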

To understand more about or to customize the permissions that are granted to user types, check PCM-AE’s access control description in its System → Access Control view as depicted in Figure 5-14.

Figure 5-14 PCM-AE user and account management permissions

The default permissions are the least restrictive set of permissions. This helps the self-service approach of cloud use described throughout this book.

PCM-AE can be integrated with Lightweight Directory Access Protocol (LDAP) user databases and with Windows Active Directory databases for authentication. For more information, see the Platform Cluster Manager Advanced Edition Administering guide, SC27-4760-01 at:

http://www.ibm.com/e-business/linkweb/publications/servlet/pbi.wss?CTY=US&FNC=SRX&PBL=SC27-4760-01

Accounts

Accounts are an administrative layer on top of the user layer. Users can be grouped in an account. Whichever resources and resource limits the account grants to its users, all of the users under that account are subject to these definitions. This is how you can control your PCM-AE cloud environment with a multi-tenant approach. A company’s departments can be accounts. Also, a university department can be an account. This not only isolates users into groups, but also allows the cloud administrator to provide different service levels to different accounts.

Hint: All of the resources that a cluster uses are reported and tracked against the account of the user who created it.
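The tracking rule in the hint can be illustrated with a short aggregation. The users, accounts, cluster names, and node-hour figures below are made-up sample data, and the function is a hypothetical sketch rather than PCM-AE's accounting code.

```python
from collections import defaultdict

# Made-up sample data: which account each user belongs to.
user_account = {"alice": "Physics", "bob": "Physics", "carol": "Finance"}

# Made-up sample data: clusters and the user who created each one.
clusters = [
    {"name": "lsf-01", "created_by": "alice", "node_hours": 120.0},
    {"name": "symphony-01", "created_by": "bob", "node_hours": 80.0},
    {"name": "biginsights-01", "created_by": "carol", "node_hours": 40.0},
]


def usage_by_account(clusters: list, user_account: dict) -> dict:
    """Charge each cluster's usage to the account of the user who created it."""
    totals = defaultdict(float)
    for cluster in clusters:
        account = user_account[cluster["created_by"]]
        totals[account] += cluster["node_hours"]
    return dict(totals)


print(usage_by_account(clusters, user_account))
```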

In PCM-AE’s Accounts view, you can view existing accounts, view cluster definitions available for use by an account, view clusters along with their systems or virtual machines deployed by users of a particular account, and trigger resource consumption by clusters within an account. Figure 5-15 shows which clusters are deployed under the PCM-AE default account “SampleAccount”.

Figure 5-15 Clusters that are deployed under a particular account in PCM-AE

5.5.5 Cluster metrics

As with any cloud environment, administrators must know how much of the resources are in use. This allows for capacity planning of the cloud. It also helps you decide whether to stay within the peak capacity of the cloud or to go beyond it and temporarily expand the cloud capacity by using a shared HPC cloud service as explained in 5.2, “Platform Cluster Manager - Advanced Edition capabilities and benefits”.

In addition, cloud administrators might want to identify how much of the resources of the cloud are in use or have been consumed by a particular cluster, or by a particular tenant who might own several clusters. Whether the technical computing cloud environment is private or public, this data is useful for charging tenants. Charging is a somewhat obvious concept in a public cloud because the consumers are all external. However, private clouds might also benefit if, for example, the IT department provides cloud infrastructure services for multiple departments within the company or institution. You might want to charge these internal consumers, allowing the IT department to provide the tenants different service levels that are based on the charges.

Reports can be generated by using the System → Reports view inside of PCM-AE as illustrated in Figure 5-16.

Figure 5-16 Accessing the reports view in PCM-AE

Figure 5-17 shows a capacity report for PCM-AE managed hardware. It shows the data by resource groups. This example shows a resource group of physical systems managed by xCAT, and another for virtual machines managed by KVM. The results within each resource group show the percentage of the total capacity that was used during the report period. It is possible to create custom reports for specific resource groups and for specific date ranges.

Figure 5-17 PCM-AE cloud capacity report
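One plausible way to arrive at a "percentage of total capacity used" figure is to divide the machine-hours that were allocated by the machine-hours that were available in the report period. The text does not state how PCM-AE computes the value, so the formula, function name, and sample numbers below are assumptions for illustration.

```python
def capacity_used_percent(allocated_machine_hours: float,
                          machines: int, period_hours: float) -> float:
    """Share of a resource group's capacity that was allocated in the period."""
    total = machines * period_hours
    if total <= 0:
        raise ValueError("resource group must have capacity in the period")
    return 100.0 * allocated_machine_hours / total


# Sample numbers: 10 machines over a 168-hour week, 840 machine-hours allocated.
print(capacity_used_percent(840.0, machines=10, period_hours=168.0))  # 50.0
```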

If you need a different reporting view, you can use the cloud resource allocation by cluster report, as shown in Figure 5-18. The view shows the number of hours that each resource group was allocated to each cluster. On-demand hours are also displayed in the report.

Figure 5-18 PCM-AE resource allocation report by cluster
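The shape of such a report can be sketched as a grouping of allocation records by cluster and resource group, with on-demand hours kept as a separate column. The record layout, names, and numbers below are made-up sample data, not output from PCM-AE.

```python
from collections import defaultdict

# Made-up sample records: (cluster, resource group, hours, is on-demand).
allocations = [
    ("streams-01", "xCAT physical", 48.0, False),
    ("streams-01", "xCAT physical", 6.0, True),
    ("biginsights-01", "KVM virtual", 24.0, False),
]


def hours_by_cluster(allocations: list) -> dict:
    """Total and on-demand hours per (cluster, resource group) pair."""
    report = defaultdict(lambda: {"total": 0.0, "on_demand": 0.0})
    for cluster, group, hours, on_demand in allocations:
        row = report[(cluster, group)]
        row["total"] += hours
        if on_demand:
            row["on_demand"] += hours
    return dict(report)


for key, row in hours_by_cluster(allocations).items():
    print(key, row)
```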

In addition, reports based on single users or group accounts are available. Figure 5-19 depicts a user consumption report of the example PCM-AE cloud. This information can be used to account for tenants’ use of the cloud in both public and private clouds.

Figure 5-19 PCM-AE resource allocation report by user or account

So far, only metrics that are useful from a cloud administration point of view have been covered. However, it is also possible to measure a cluster’s instantaneous processor and memory usage, and to check the number of active nodes over time (useful for reviewing the flexing actions taken on the cluster). You can do this by checking the cluster Performance tab in the Clusters → Cockpit view as seen in Figure 5-20.

Figure 5-20 Cluster performance metrics: Processor, memory utilization, and number of active nodes

¹ http://www.ibm.com/e-business/linkweb/publications/servlet/pbi.wss?CTY=US&FNC=SRX&PBL=SC27-4760-01

² Cluster definitions can use version tracking as the administrators make changes, enhance, or provide different architecture definitions for the same cluster type.
