A wide range of security technologies exists that provides solutions for securing network access and data transport mechanisms within the corporate network infrastructure. Many of the technologies overlap in solving problems that relate to ensuring user or device identity, data integrity, and data confidentiality.

NOTE

Throughout this book, authentication, authorization, and access control are incorporated into the concept of identity. Although these concepts are distinct, they all pertain to each individual user of the network—be it a person or device. Each person or device is a distinct entity that has separate abilities within the network and is allowed access to resources based on who they are. Although in the purest sense, identity really pertains only to authentication, in many cases, it makes sense to discuss the entities' authorization and access control at the same time.

Authentication refers to the process of validating the claimed identity of an end user or a device (such as clients, servers, switches, routers, firewalls, and so on). Authorization refers to the process of granting access rights to a user, groups of users, or specified system; access control refers to limiting the flow of information from the resources of a system to only the authorized persons or systems in the network. In most of the cases studied here, authorization and access control are subsequent to successful authentication.

This chapter describes security technologies commonly used to establish identity (authentication, authorization, and access control) and to ensure some degree of data integrity and confidentiality in a network. Data integrity ensures that any alteration or destruction of data by people who are not explicitly intended to modify it is detected; data confidentiality ensures that only the entities allowed to see the data see it in a usable format.

The intent is to develop a basic understanding of how these technologies can be implemented in corporate networks and to identify their strengths and weaknesses. The following categories have been selected in an attempt to group the protocols according to shared attributes:

Identity technologies

Application layer security protocols

Transport layer security protocols

Network layer security

Link layer security protocols

Public key infrastructure and distribution models

NOTE

Many of the technologies discussed here either have been or are in the process of being standardized by the IETF. For information on more technical details and the latest developments, refer to Appendix A, “Sources of Technical Information.” Appendix A contains pointers to the IETF working groups that produce the RFCs and drafts relating to the technologies discussed here.

This section describes the primary technologies used to establish identity for a host, an end user, or both. Authentication is an extremely critical element because everything is based on who you are. In many corporate networks, you would not grant access to specific parts of the network before establishing who is trying to reach restricted resources. Another consideration is who needs to authenticate whom. In some instances, the initiator of a communication must authenticate; in others, it is the responder. Sometimes mutual authentication is required. To complicate things further, you must decide whether to authenticate only the end device or also the actual user, and possibly to associate the two. In many instances, multiple end users use the same device and may need different privileges across the network. Also, the proliferation of laptops makes it easier for people to travel and connect back to corporate offices from anywhere on the globe. However, if a laptop is stolen and an automated authentication scheme grants access privileges based only on the device identity, the security implications are serious.

How foolproof an authentication method is depends on both the technology used and how foolproof the surrounding procedures are.

Authentication methods can loosely be categorized as those where there is local control and those where you provide authentication verification through a trusted third party.

One of the potential weaknesses in some authentication methods is who you trust. Many authentication methods rely on a third party to verify someone's identity. The strength of this verification is the limiting factor in the strength of the authentication. When using a third party to authenticate an end user or device, ask yourself, “What is the likelihood that the third party I'm counting on to provide the authentication verification has been compromised?”

The technologies discussed in this section include variants of secure passwords, which provide varying degrees of security and are offered by most vendors today. Many protocols authorize some form of connection setup after authentication has been successfully verified. In dialup environments, a peer-to-peer, link-level connection is established; additional access control mechanisms can sometimes be applied at higher levels of the protocol stack, such as permitting only hosts with certain IP addresses to access specific applications. This discussion covers different protocols that often use an initial authentication process to then grant authorization and access control.

NOTE

You can use digital certificates as an authentication method, as discussed in detail in the “Public Key Infrastructure and Distribution Models” section later in this chapter.

Although passwords are often used as proof for authenticating a user or device, they can easily be compromised if they are easy to guess, are not changed often enough, or are transmitted in cleartext across a network. Passwords can be made more secure by encrypting them or by modifying the encryption so that the encrypted value changes each time. The latter is the approach taken by most one-time password schemes, the most common being the S/Key protocol and the token password authentication schemes.

The S/Key One-Time Password System, released by Bellcore and defined in RFC 1760, is a one-time password generation scheme based on MD4 and MD5. The S/Key protocol is designed to counter a replay attack when a user is attempting to log in to a system. A replay attack in the context of login is when someone eavesdrops on a network connection to get the login ID and password of a legitimate user and later uses them to gain access to the network.

The operation of the S/Key protocol is client/server-based; the client is typically a PC, and the server is some flavor of UNIX. Initially, both the client and the server must be configured with the same pass phrase and an iteration count. The iteration count specifies how many times a given input will be applied to the hash function. The client initiates the S/Key exchange by sending an initialization packet; the server responds with a sequence number and seed, as shown in Figure 2-1.

The client then computes the one-time password, a process that involves three distinct steps: a preparatory step, a generation step, and an output function. (See Figure 2-2.)

In the preparatory step, the client enters a secret pass phrase. This pass phrase is concatenated with the seed that was transmitted from the server in cleartext.

The generation step applies the secure hash function multiple times, producing a 64-bit final output.

The output function takes the 64-bit one-time password and displays it in readable form.

The last phase is for the client to pass the one-time password to the server, where it can be verified. (See Figure 2-3.)

The server has a file (on the UNIX reference implementation, it is /etc/skeykeys) containing, for each user, the one-time password from the last successful login. To verify an authentication attempt, the authentication server passes the received one-time password through the secure hash function once. If the result of this operation matches the stored previous one-time password, the authentication is successful and the accepted one-time password is stored for future use.
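The verification rule is small enough to show directly. In this sketch, `SKeyVerifier` is a hypothetical in-memory stand-in for one user's entry in a file such as /etc/skeykeys, and `hash_once` assumes the same MD5-and-fold step as the client (XOR of the digest halves). Because the server only ever hashes forward, a password that has already been accepted fails the check if it is replayed.

```python
import hashlib


def hash_once(otp: bytes) -> bytes:
    """One application of the secure hash: MD5, folded to 64 bits."""
    digest = hashlib.md5(otp).digest()
    return bytes(a ^ b for a, b in zip(digest[:8], digest[8:]))


class SKeyVerifier:
    """Holds, for one user, the one-time password from the last successful login."""

    def __init__(self, last_otp: bytes) -> None:
        self.last_otp = last_otp

    def verify(self, candidate: bytes) -> bool:
        # Hash the received password once and compare it with the stored value.
        if hash_once(candidate) == self.last_otp:
            # The accepted password becomes the reference for the next login.
            self.last_otp = candidate
            return True
        return False
```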

Because the client applies the hash function one fewer time for each successive login, every login uses a different password from the sequence. However, the sequence is eventually exhausted, so the user must re-initialize the system before that happens to avoid being unable to log in again. The system is re-initialized using the keyinit command, which allows the secret pass phrase, the iteration count, and the seed to be changed.

When computing the S/Key password on the client side, the client pass phrase can be of any length—more than eight characters is recommended. The use of the nonsecret seed allows a client to use the same secret pass phrase on multiple machines (using different seeds) and to safely recycle secret pass phrases by changing the seed.

NOTE

Many implementations require the generated one-time password to be entered either by cutting and pasting or manually. For manual entry, the one-time password is converted to, and accepted as, a sequence of six short (one- to four-letter) English words. Each word is chosen from a dictionary of 2048 words, so each word carries 11 bits; six words provide 66 bits, enough to encode any 64-bit one-time password plus a 2-bit checksum. Interoperability requires that all S/Key system hosts and calculators use the same dictionary.
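The arithmetic behind the six-word format can be sketched as follows. The function computes only the six 11-bit dictionary indices; the 2048-word dictionary itself is not reproduced. It assumes the checksum is the low two bits of the sum of the password's 32 two-bit groups, appended after the 64 password bits, which is how we understand the reference implementation. `otp_to_word_indices` is a hypothetical name.

```python
from typing import List


def otp_to_word_indices(otp: int) -> List[int]:
    """Split a 64-bit OTP plus a 2-bit checksum into six 11-bit dictionary indices."""
    if not 0 <= otp < 2**64:
        raise ValueError("one-time password must be a 64-bit value")
    # Checksum (assumed rule): sum the 32 two-bit groups, keep the low 2 bits.
    checksum = sum((otp >> shift) & 0b11 for shift in range(0, 64, 2)) & 0b11
    value = (otp << 2) | checksum  # 66 bits in total
    # Take 11 bits at a time, most significant first.
    return [(value >> (55 - 11 * i)) & 0x7FF for i in range(6)]
```

Each index would then select one word from the shared dictionary, which is why every host and calculator must use the same list.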

S/Key is an alternative to simple passwords. Free as well as commercial implementations are widely available.

Token authentication systems generally require the use of a special card (called a token card), although some implementations are done using software to alleviate the problem of losing the token card. These types of authentication mechanisms are based on one of two alternative schemes: challenge-response and time-synchronous authentication.

The challenge-response approach is shown in Figure 2-4. The following steps carry out the authentication exchange:

The user dials in to an authentication server, which then issues a prompt for a user ID.

The user provides the ID to the server, which then issues a challenge—a random number that appears on the user's screen.

The user enters that challenge number into the token card, a credit card type of device, which then encrypts the challenge with the user's encryption key and displays a response.

The user types this response and sends it to the authentication server. While the user is obtaining a response from the token, the authentication server calculates what the appropriate response should be based on its database of user keys.

When the server receives the user's response, it compares that response with the one it has calculated.

If the two responses match, the user is granted access to the network. If they don't match, access is denied.
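The exchange above can be modeled in a few lines. Real token cards used vendor-specific encryption; this sketch substitutes HMAC-SHA256 from the Python standard library as the keyed function and reduces the result to eight decimal digits so that it can be typed. `token_response` and `ChallengeResponseServer` are hypothetical names, and the eight-digit challenge format is an assumption.

```python
import hashlib
import hmac
import secrets
from typing import Dict


def token_response(user_key: bytes, challenge: str) -> str:
    """What the token card displays: a keyed function of the challenge.

    HMAC-SHA256 stands in for the card's encryption; the result is reduced to
    eight decimal digits so that the user can type it.
    """
    mac = hmac.new(user_key, challenge.encode("ascii"), hashlib.sha256).digest()
    return f"{int.from_bytes(mac[:4], 'big') % 10**8:08d}"


class ChallengeResponseServer:
    """Hypothetical authentication server holding a database of user keys."""

    def __init__(self, user_keys: Dict[str, bytes]) -> None:
        self.user_keys = user_keys
        self.pending: Dict[str, str] = {}  # outstanding challenge per user ID

    def issue_challenge(self, user_id: str) -> str:
        challenge = f"{secrets.randbelow(10**8):08d}"  # random number shown to the user
        self.pending[user_id] = challenge
        return challenge

    def check_response(self, user_id: str, response: str) -> bool:
        challenge = self.pending.pop(user_id, None)  # each challenge is used only once
        key = self.user_keys.get(user_id)
        if challenge is None or key is None:
            return False
        expected = token_response(key, challenge)  # the server's own calculation
        return hmac.compare_digest(expected.encode(), response.encode())
```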

Figure 2-5 shows the time-synchronous authentication scheme. In this scheme, a proprietary algorithm executes in the token and on the server to generate identical numbers that change over time. The user dials in to the authentication server, which issues a prompt for an access code. The user enters a personal identification number (PIN) on the token card, resulting in digits displayed at that moment on the token. These digits represent the one-time password and are sent to the server. The server compares this entry with the sequence it generated; if they match, it grants the user access to the network.
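Because the token algorithm described here is proprietary, the sketch below uses an openly specified stand-in, the time-based one-time password (TOTP) algorithm of RFC 6238 built on HOTP (RFC 4226). It shows the token and server independently deriving the same number from a shared key and the current time. It omits the PIN step, and the window of one time slot on either side, which tolerates clock drift between token and server, is an assumption rather than part of the text.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """Code a token would display at time `at` (seconds since the Unix epoch)."""
    counter = int(at // step)  # token and server agree on the current time slot
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # RFC 4226 dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return f"{code % 10 ** digits:0{digits}d}"


def server_accepts(secret: bytes, entered: str, now: Optional[float] = None,
                   window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Compare the entry with the codes the server generates for nearby time slots."""
    now = time.time() if now is None else now
    return any(
        hmac.compare_digest(totp(secret, now + slot * step, step, digits).encode(),
                            entered.encode())
        for slot in range(-window, window + 1)
    )
```

With the RFC 6238 sample key `12345678901234567890`, `totp(key, 59)` yields `287082`, the last six digits of the RFC's eight-digit test value `94287082`.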

A popular variant is to require a password prompt in addition to the PIN to increase the amount of information required to compromise the identity. In some cases, time-synchronous token systems are set up so that the user does not need to enter a PIN on the token card to obtain the present access code. Administrators choose this setup because they have found that employees etch or write the PIN directly onto the token card. However, a card that requires no PIN is easily abused if it is misplaced or stolen, so this configuration, in which the user is not required to enter any information on the token card, is strongly discouraged.

Use of either the challenge-response or time-synchronous token password authentication scheme generally requires the user to carry a credit card type of device to provide authentication credentials. This can be a burden to some users because they have to remember to carry the device, but it has the flexibility to allow fairly secure authenticated access from anywhere in the world. It is extremely useful for mobile users who frequently log in from remote sites. If the mobile users have their own laptop, the token can be installed as software, which relieves the burden of remembering to carry an additional device. These schemes are very robust and scalable from a centralized database point of view.

NOTE

A one-time password scheme protects you from replay attacks only during the initial login to the site. If you then log in to other machines at the campus site, the password will be sent in the clear. If protection is required for more than the initial login sequence, it is best to combine one-time passwords with some form of confidentiality (encryption) technique.

Passwords are incorporated into many protocols that provide authentication services. For dial-in connections, the Point-to-Point Protocol (PPP) is most often used to establish a dial-in connection over serial lines or ISDN. PPP authentication mechanisms include the Password Authentication Protocol (PAP), the Challenge Handshake Authentication Protocol (CHAP), and the Extensible Authentication Protocol (EAP). In some of these cases, the peer device is being authenticated rather than the user of the device.

NOTE

In the section “Link Layer Security Technologies” later in this chapter, other protocols such as L2TP and PPPoE are discussed; these protocols rely on the PPP authentication mechanisms. They extend the point-to-point link across networks that are not point-to-point. Because the PPP frames may then be forwarded beyond the local loop of a dialup connection, you must also consider the path those frames traverse.

The Password Authentication Protocol (PAP) provides a simple way for a peer to establish its identity to the authenticator using a two-way handshake. This is done only at initial link establishment. Three PAP frame types exist, as shown in Figure 2-7.

After the link establishment phase is completed, the authenticate-request packet is used to initiate the PAP authentication. This packet contains the peer name and password, as shown in Figure 2-8.

This request packet is sent repeatedly until a valid reply packet is received or an optional retry counter expires. If the authenticator receives a Peer-ID/Password pair that is both recognizable and acceptable, it should reply with an Authenticate-Ack (where Ack is short for acknowledge). If the Peer-ID/Password pair is not recognizable or acceptable, the authenticator should reply with an Authenticate-Nak (where Nak is short for negative acknowledge).
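The PAP packet layout defined in RFC 1334 can be sketched with Python's `struct` module. The helper names are hypothetical; the point of the example is that the password bytes appear verbatim in the Authenticate-Request, which is why PAP offers no protection against eavesdropping.

```python
import struct
from typing import Tuple

# PAP Code values: Authenticate-Request, Authenticate-Ack, Authenticate-Nak.
PAP_AUTH_REQUEST, PAP_AUTH_ACK, PAP_AUTH_NAK = 1, 2, 3


def pap_request(identifier: int, peer_id: bytes, password: bytes) -> bytes:
    """Code, Identifier, Length, then Peer-ID and Password, each with a 1-byte length."""
    body = bytes([len(peer_id)]) + peer_id + bytes([len(password)]) + password
    return struct.pack(">BBH", PAP_AUTH_REQUEST, identifier, 4 + len(body)) + body


def pap_reply(identifier: int, accepted: bool, message: bytes = b"") -> bytes:
    """Authenticate-Ack or Authenticate-Nak carrying an optional message."""
    code = PAP_AUTH_ACK if accepted else PAP_AUTH_NAK
    body = bytes([len(message)]) + message
    return struct.pack(">BBH", code, identifier, 4 + len(body)) + body


def parse_pap_request(packet: bytes) -> Tuple[int, bytes, bytes]:
    """Return (identifier, peer_id, password) from an Authenticate-Request."""
    code, identifier, length = struct.unpack(">BBH", packet[:4])
    if code != PAP_AUTH_REQUEST or length > len(packet):
        raise ValueError("not a complete PAP Authenticate-Request")
    id_len = packet[4]
    peer_id = packet[5:5 + id_len]
    pw_len = packet[5 + id_len]
    password = packet[6 + id_len:6 + id_len + pw_len]
    return identifier, peer_id, password
```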

Figure 2-9 shows the sequence of PPP negotiations between a branch router (the peer) trying to authenticate to the network access server (NAS, the authenticator).

PAP is not a strong authentication method. PAP authenticates only the peer, and passwords are sent over the circuit “in the clear.” PAP authentication is only performed once during a session. There is no protection from replay attacks or repeated trial-and-error attacks. The peer is in control of the frequency and timing of the attempts.

The Challenge Handshake Authentication Protocol (CHAP), defined in RFC 1994, is used to periodically verify the identity of a host or end user using a three-way handshake. CHAP is performed at initial link establishment and can be repeated any time after the link has been established. Four CHAP frame types exist, as shown in Figure 2-10.

Figure 2-11 shows a scenario in which a branch router (the peer) is trying to authenticate to the NAS (the authenticator).

CHAP imposes network security by requiring that the peers share a plaintext secret. This secret is never sent over the link. The following sequence of steps is carried out:

After the link establishment phase is complete, the authenticator sends a challenge message to the peer. The challenge consists of an identifier (ID), a random number (the challenge value), and either the host name of the local device or the name of the user on the remote device.

The receiving peer calculates a response value using a one-way hash function; the input to the one-way hash function is the ID, the secret, and the challenge value, concatenated in that order.

The peer sends the challenge response, which consists of the following:

The ID

A response value (the calculated hash value from Step 2)

Either the host name of the remote device, or the name of the user on the remote device

When the authenticator receives the challenge response, it verifies the secret by looking up the name given in the response and performing the same hash calculation. The authenticator checks the response against its own calculation of the expected hash value.

If the values match, the authenticator acknowledges the authentication and sends a success message, and the LCP establishes the link.
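The calculation in the steps above can be sketched as follows, assuming MD5 as the one-way hash function. `ChapAuthenticator` is a hypothetical authenticator holding shared secrets by peer name; the 16-byte random challenge and the one-byte identifier counter are illustrative choices. Each new challenge changes both the identifier and the challenge value, so an old response does not verify.

```python
import hashlib
import hmac
import os
from typing import Dict, Tuple


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Step 2: MD5 over the ID, the shared secret, and the challenge, in that order."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


class ChapAuthenticator:
    """Hypothetical authenticator that looks up shared secrets by peer name."""

    def __init__(self, secrets_by_name: Dict[str, bytes]) -> None:
        self.secrets_by_name = secrets_by_name
        self.identifier = 0
        self.challenge = b""

    def new_challenge(self) -> Tuple[int, bytes]:
        """Step 1: a fresh identifier and random challenge value for every challenge."""
        self.identifier = (self.identifier + 1) % 256
        self.challenge = os.urandom(16)
        return self.identifier, self.challenge

    def verify(self, identifier: int, name: str, response: bytes) -> bool:
        """Step 4: recompute the expected hash for the named peer and compare."""
        secret = self.secrets_by_name.get(name)
        if secret is None or identifier != self.identifier or not self.challenge:
            return False
        expected = chap_response(identifier, secret, self.challenge)
        return hmac.compare_digest(expected, response)
```

Note that the secret itself never appears in any message; only the identifier, challenge, response hash, and names cross the link.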

The secret passwords must be identical on the remote and local devices. These secrets should be agreed on, generated, and exchanged out of band in a secure manner. Because the secret is never transmitted, other devices are prevented from stealing it and gaining illegal access to the system. Without the proper response, the remote device cannot connect to the local device.

CHAP provides protection against playback attack through the use of an incrementally changing identifier and a variable challenge value. The use of repeated challenges is intended to limit the time of exposure to any single attack. The authenticator is in control of the frequency and timing of the challenges.

Either CHAP peer can act as the authenticator; there is no requirement in the specification that authentication must be full duplex or that the same protocol must be used in both directions.

NOTE

Typically, MD5 is used as the CHAP one-way hash function, and the shared secrets are required to be stored in plaintext form. Despite the claim in RFC 1994 to the contrary, however, irreversibly encrypted passwords can be used as long as the password is correctly preprocessed (that is, encrypted) by the peer before being processed by the CHAP algorithm. Microsoft has a variation of CHAP (MS-CHAP and MS-CHAPv2), in which the password is stored encrypted in both the peer and the authenticator. Therefore, MS-CHAP can take advantage of commonly available irreversibly encrypted password databases, whereas standards-based CHAP cannot.

The PPP Extensible Authentication Protocol (EAP) is defined in RFC 2284; a revised Internet-Draft, referred to as RFC 2284bis, also exists. EAP is a general protocol for PPP authentication that supports multiple authentication mechanisms. It provides its own support for duplicate elimination and retransmission. Fragmentation is not supported within EAP itself; however, individual EAP methods may support it.

EAP does not select a specific authentication mechanism at the link control phase; rather, it postpones this until the authentication phase so that the authenticator can request more information before determining the specific authentication mechanism. This arrangement also permits the use of a “back-end” server, which actually implements the various authentication mechanisms, whereas the PPP authenticator merely passes through the authentication exchange. This has the added benefit of not requiring the authenticator to be updated to support each new authentication method.

Figure 2-12 shows the EAP packet format.

Additional code types are specified in the data portion of the EAP request/response packets, as shown in Figure 2-13.

New code types are continually being defined to allow for various authentication mechanisms. The following are some commonly used ones:

Identity type (1): Used to query the identity of the peer. Generally, the authenticator issues this as the initial request. An optional displayable message can be included to prompt the peer when interaction with a user is expected. The peer typically answers with a response of the same type containing the requested information.

Notification type (2): Optionally used to display a message from the authenticator to the peer. For example, it could be a notification that a password is about to expire.

Nak type (response only) (3): Valid only in a response message. It is sent in reply to a request where the desired authentication type is unacceptable.

MD5-Challenge type (4): Analogous to the PPP CHAP protocol (with MD5 as the specified algorithm). The request contains a “challenge” message to the peer. The response sent in reply to the request is either of type 4 (MD5-Challenge) or type 3 (Nak). Note that the use of the Identifier field in the MD5-Challenge type differs from that described in RFC 1994. EAP allows for retransmission of MD5-Challenge request packets, whereas RFC 1994 states that both the Identifier and Challenge fields must change each time a Challenge (the CHAP equivalent of the MD5-Challenge request packet) is sent.

One-Time Password (OTP) type (5): The OTP system is defined in “A One-Time Password System” (RFC 2289) and “OTP Extended Responses” (RFC 2243). The request contains a displayable message containing an OTP challenge. The response sent in reply to the request will be of type 5 (OTP) or type 3 (Nak).

Generic Token Card (GTC) type (6): Defined for use with various token card implementations that require user input. The request contains a displayable message, and the response contains the token card information necessary for authentication. Typically, this is information read by a user from the token card device and entered as ASCII text. The response sent in reply to the request will be of type 6 (GTC) or type 3 (Nak).
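The EAP packet layout and the Type values listed above can be sketched in Python. The header follows RFC 2284 (Code 1 is Request, 2 is Response, 3 is Success, 4 is Failure; only Requests and Responses carry a Type field). The MD5-Challenge response reuses the CHAP calculation (Identifier, secret, and challenge hashed in that order) and places the result after a one-byte Value-Size, followed by the peer name. Helper names are hypothetical, and any secret used with them is a placeholder.

```python
import hashlib
import struct
from typing import Optional, Tuple

EAP_REQUEST, EAP_RESPONSE, EAP_SUCCESS, EAP_FAILURE = 1, 2, 3, 4
TYPE_IDENTITY, TYPE_NOTIFICATION, TYPE_NAK = 1, 2, 3
TYPE_MD5_CHALLENGE, TYPE_OTP, TYPE_GTC = 4, 5, 6


def eap_packet(code: int, identifier: int, eap_type: Optional[int] = None,
               type_data: bytes = b"") -> bytes:
    """Code, Identifier, Length, then Type and Type-Data for Requests/Responses."""
    if code in (EAP_REQUEST, EAP_RESPONSE):
        if eap_type is None:
            raise ValueError("Request and Response packets need a Type")
        payload = bytes([eap_type]) + type_data
    else:
        payload = b""  # Success and Failure carry no Type field
    return struct.pack(">BBH", code, identifier, 4 + len(payload)) + payload


def parse_eap(packet: bytes) -> Tuple[int, int, Optional[int], bytes]:
    """Return (code, identifier, type or None, type data)."""
    code, identifier, length = struct.unpack(">BBH", packet[:4])
    if length > len(packet):
        raise ValueError("truncated EAP packet")
    if code in (EAP_REQUEST, EAP_RESPONSE):
        return code, identifier, packet[4], packet[5:length]
    return code, identifier, None, b""


def md5_challenge_response(identifier: int, secret: bytes, challenge: bytes,
                           name: bytes) -> bytes:
    """MD5-Challenge (type 4) response: Value-Size, Value, then the peer's name."""
    value = hashlib.md5(bytes([identifier]) + secret + challenge).digest()
    type_data = bytes([len(value)]) + value + name
    return eap_packet(EAP_RESPONSE, identifier, TYPE_MD5_CHALLENGE, type_data)
```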

Figure 2-14 shows how PPP EAP works between two network infrastructure devices.

In Figure 2-14, the branch router (the peer) is trying to authenticate to the NAS (the authenticator). The sequence of steps is as follows:

When the link establishment phase is complete, the authenticator sends one or more requests to authenticate the peer. The request has a Type field to indicate what is being requested.

NOTE

Typically, the authenticator sends an initial identity request followed by one or more requests for authentication information. However, an initial identity request is not required and may be bypassed in cases where the identity is presumed (for example, with leased lines, dedicated dialups, and so on).

The peer sends a response packet in reply to each request. As with the request packet, the response packet contains a Type field that corresponds to the Type field of the request.

The authenticator ends the authentication phase with a success or failure packet.

Figure 2-15 shows an example where a RADIUS server is used as a back-end server to actually implement the authentication mechanism. In this case, the client is a telecommuter dialing in to a NAS to access the corporate network. The NAS (PPP authenticator) merely passes through the authentication exchange.

EAP adds more flexibility to PPP authentication and provides the capability to use new technologies—such as digital certificates—when they become widely available. The EAP protocol can support multiple authentication mechanisms without having to prenegotiate a particular one. Devices (for instance, a NAS, switch, or access point) do not have to understand each authentication method and can act as a passthrough agent for a backend authentication server. Although the support for passthrough is optional in the specification, many vendors are implementing it. An authenticator can authenticate local users while at the same time acting as a passthrough for nonlocal users and authentication methods it does not implement locally.

PPP authentication is required for dial-in connectivity. Any of the three standard mechanisms—PAP, CHAP, and EAP—can be used. Most current implementations are taking advantage of the flexibility of EAP, and it is widely available. Table 2-1 gives a summary of the strengths and weaknesses of these mechanisms.

Table 2-1. PPP Authentication Summary

Many protocols require authentication verification before providing authorization and access rights to the user or device. The previous sections of this chapter discussed a variety of authentication methods. This section details the protocols that make use of these authentication mechanisms. TACACS+, RADIUS, Kerberos, DCE, and FORTEZZA are examples of such protocols. TACACS+ and RADIUS are often used in dial-in environments to provide a scalable authentication database and can incorporate a variety of authentication methods. Kerberos is a protocol used in some campus environments to first verify that users and the network services they use are really who and what they claim to be before granting access privileges. For completeness, the Distributed Computing Environment (DCE) and FORTEZZA authentication mechanisms are included in this section, although their use is not widespread.

802.1x is an IEEE specification that enables authentication and key management for IEEE 802 local-area networks. IEEE 802.1x is not a single authentication method; rather, it uses the Extensible Authentication Protocol (EAP) as its authentication framework. This technology is also included because it is widely deployable in many networking scenarios.

The TACACS+ protocol is the latest generation of TACACS. TACACS is a simple UDP-based access control protocol originally developed by BBN for the MILNET. Cisco has enhanced (extended) TACACS several times, and Cisco's implementation, based on the original TACACS, is referred to as XTACACS. The fundamental differences between TACACS, XTACACS, and TACACS+ are given here:

TACACS+ uses TCP for its transport. The server daemon usually listens at port 49, the login port assigned for the TACACS protocol. This port is reserved in the Assigned Numbers RFC for both UDP and TCP. Current TACACS and extended TACACS implementations also use port 49.

TACACS+ is a client/server protocol; the TACACS+ client is typically a NAS, and the TACACS+ server is usually a daemon process running on a UNIX or Windows NT machine. A fundamental design component of TACACS+ is the separation of authentication, authorization, and accounting. Figure 2-16 shows the TACACS+ header format.
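The fixed 12-byte TACACS+ header can be sketched with `struct`. The field layout (a version byte with major version 0xC in the high nibble, then type, sequence number, flags, a 32-bit session ID, and a 32-bit body length) follows the TACACS+ protocol specification as we understand it. `TacacsHeader` is a hypothetical helper, and the flag bits are left uninterpreted.

```python
import struct
from dataclasses import dataclass

TAC_PLUS_MAJOR_VER = 0xC
TAC_PLUS_AUTHEN, TAC_PLUS_AUTHOR, TAC_PLUS_ACCT = 0x01, 0x02, 0x03
HEADER_FORMAT = ">BBBBII"  # version, type, seq_no, flags, session_id, length


@dataclass
class TacacsHeader:
    packet_type: int  # authentication, authorization, or accounting
    seq_no: int
    session_id: int
    length: int  # length of the (encrypted) body that follows the header
    minor_version: int = 0
    flags: int = 0

    def pack(self) -> bytes:
        version = (TAC_PLUS_MAJOR_VER << 4) | self.minor_version
        return struct.pack(HEADER_FORMAT, version, self.packet_type, self.seq_no,
                           self.flags, self.session_id, self.length)

    @classmethod
    def unpack(cls, data: bytes) -> "TacacsHeader":
        version, ptype, seq_no, flags, session_id, length = struct.unpack(
            HEADER_FORMAT, data[:12])
        if version >> 4 != TAC_PLUS_MAJOR_VER:
            raise ValueError("unexpected TACACS+ major version")
        return cls(ptype, seq_no, session_id, length, version & 0x0F, flags)
```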

TACACS+ allows for arbitrary length and content authentication exchanges, which allows any authentication mechanism to be used with TACACS+ clients (including PPP PAP, PPP CHAP, PPP EAP, token cards, and Kerberos). Authentication is not mandatory; it is a site-configured option. Some sites do not require it at all; others require it only for certain services.

TACACS+ authentication has three packet types:

START, which is always sent by the client

CONTINUE, which is always sent by the client

REPLY, which is always sent by the server

Authentication begins when the NAS (TACACS+ client) receives a connection request that needs to be authenticated. The client then sends a START message to the server. The START message describes the type of authentication to be performed (for example, simple cleartext password, PAP, or CHAP), and may contain the username and some authentication data. The START packet is sent only as the first message in a TACACS+ authentication session, or as the packet immediately following a restart. (A restart may be requested by the server in a REPLY packet.) A START packet always has a sequence number equal to 1.

In response to a START packet, the server sends a REPLY, which includes the authentication result. The REPLY message indicates whether the authentication is finished, or whether it should continue. If the REPLY indicates that authentication should continue, the message also indicates what new information is requested. The client gets that information and returns it in a CONTINUE message. This process repeats until all authentication information is gathered, and the authentication process concludes. The server responds to the last CONTINUE message with a REPLY.

The authentication result contained in a TACACS+ server REPLY can be one of the following three messages:

ACCEPT: The user is authenticated, and if authorization is configured on the NAS, the authorization process can start.

REJECT: The user failed to authenticate, in which case the user might be denied further access or be prompted to retry the login sequence.

ERROR: An error occurred sometime during the authentication process, in which case the NAS may try to use an alternative method to authenticate the user.
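The START/REPLY/CONTINUE loop can be sketched from the client's point of view. This is a hypothetical model: there is no networking or encryption, `ToyServer` stands in for the TACACS+ daemon, and the real protocol's numeric status codes are replaced by the result names used in the text plus a `GETDATA` marker meaning that authentication should continue. The sequence-number handling reflects the rule that START carries 1 and that client and server packets alternate.

```python
from typing import Callable, Dict, List, Optional, Tuple

Reply = Tuple[str, str]  # (result, prompt); result is ACCEPT, REJECT, ERROR, or GETDATA


def authenticate(send: Callable[[int, str, dict], Reply],
                 ask_user: Callable[[str], str], start_data: dict) -> str:
    """Client (NAS) side: START, then CONTINUE until the server's REPLY is final."""
    seq_no = 1  # a START packet always has sequence number 1
    result, prompt = send(seq_no, "START", start_data)
    while result == "GETDATA":
        seq_no += 2  # the server's REPLY used the even number in between
        result, prompt = send(seq_no, "CONTINUE", {"user_msg": ask_user(prompt)})
    return result


class ToyServer:
    """Hypothetical in-memory stand-in for a TACACS+ daemon."""

    def __init__(self, passwords: Dict[str, str]) -> None:
        self.passwords = passwords
        self.user: Optional[str] = None
        self.seen_seq: List[int] = []

    def handle(self, seq_no: int, kind: str, data: dict) -> Reply:
        self.seen_seq.append(seq_no)
        if kind == "START" and seq_no == 1:
            self.user = data.get("user")
            return ("GETDATA", "Password: ")  # request more information
        if kind == "CONTINUE" and self.user is not None:
            ok = self.passwords.get(self.user) == data.get("user_msg")
            return ("ACCEPT" if ok else "REJECT", "")
        return ("ERROR", "")
```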

Authorization is the action of determining what a user is allowed to do. Generally, authentication precedes authorization, but this is not required. An authorization request may indicate that the user is not authenticated. (That is, we don't know who they are.) In this case, it is up to the authorization agent to determine whether an unauthenticated user is allowed the services in question.

When authentication is completed (if authentication is used), the client can start the authorization process, if authorization is required. An authorization session is defined as a single pair of messages: a request followed by a response. The authorization Request message contains a fixed set of fields that describe the authenticity of the user or process, and a variable set of arguments that describes the services and options for which authorization is requested.

NOTE

In TACACS+, authorization does not merely provide yes or no answers—it may also customize the service for the particular user. Here are some examples of when authorization would be performed: when a user first logs in and wants to start a shell, and when a user starts PPP and wants to use IP over PPP with a particular IP address. The TACACS+ server daemon might respond to these requests by allowing the service, by placing a time restriction on the login shell, or by requiring IP access lists on the PPP connection.

Accounting is typically the third action after authentication and authorization. Accounting is the action of recording what a user is doing or has done. Accounting in TACACS+ can serve two purposes:

It may be used to account for services used, such as in a billing environment.

It may be used as an auditing tool for security services.

To this end, TACACS+ supports three types of accounting records:

Start records indicate that a service is about to begin.

Stop records indicate that a service has just terminated.

Update records are intermediate notices that indicate that a service is still being performed.

TACACS+ accounting records contain all the information used in the authorization records and also contain accounting-specific information such as start and stop times (when appropriate) and resource usage information.

Transactions between the TACACS+ client and TACACS+ server are authenticated through the use of a shared secret, which is never sent over the network. Typically, the secret is manually configured in both entities. TACACS+ encrypts the body of every packet exchanged between the TACACS+ client and the TACACS+ server daemon; the packet header is sent in the clear. The encryption is done through the use of MD5 and the XOR functionality. (See the following sidebar on XOR.) The following steps are used to create the ciphertext:

A hash (message digest) is calculated by using a concatenation of the session ID, shared secret key, version number, and sequence number as input to the MD5 algorithm. A second hash is then calculated over the same concatenation with the first hash appended (session ID, shared secret key, version number, sequence number, and first hash). This process is repeated, each time appending the previous hash, until enough hash output has been produced to cover the data to be encrypted.

All the calculated hashes are concatenated and then truncated to the length of the data that is to be encrypted, which results in what is termed the pseudo_pad.

A bytewise XOR is done on the pseudo_pad with the data that is to be encrypted, and this produces the resulting ciphertext.

The recipient of the ciphertext can calculate the pseudo_pad on its own. The session ID, version number, and sequence number are carried in the cleartext packet header, and the shared secret is already preconfigured. An XOR of this pseudo_pad with the ciphertext results in the cleartext data.
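The pad construction above can be sketched in a few lines of Python. The sketch assumes the field encodings of the TACACS+ packet header: a 4-byte session ID in network byte order, plus one byte each for the version and the sequence number. The function names and sample values are hypothetical. Because XOR is its own inverse, the same function both encrypts and decrypts the body.

```python
import hashlib
import struct


def pseudo_pad(session_id: int, key: bytes, version: int, seq_no: int, length: int) -> bytes:
    """Chain MD5 hashes until they cover `length` bytes, then truncate."""
    # Fixed input to every hash: session ID, shared secret, version, sequence number.
    base = struct.pack("!I", session_id) + key + struct.pack("!BB", version, seq_no)
    pad, prev = b"", b""
    while len(pad) < length:
        # The first round hashes `base`; later rounds hash `base` plus the previous digest.
        prev = hashlib.md5(base + prev).digest()
        pad += prev
    return pad[:length]


def crypt_body(body: bytes, session_id: int, key: bytes, version: int, seq_no: int) -> bytes:
    """XOR the packet body with the pseudo_pad; applying it twice restores the original."""
    pad = pseudo_pad(session_id, key, version, seq_no, len(body))
    return bytes(b ^ p for b, p in zip(body, pad))


if __name__ == "__main__":
    secret = b"shared-secret"  # hypothetical shared secret
    body = b"example authentication body bytes"
    ct = crypt_body(body, 0x1234ABCD, secret, 0xC0, 1)
    assert crypt_body(ct, 0x1234ABCD, secret, 0xC0, 1) == body
```

The recipient calls `crypt_body` with the same header fields and secret to recover the cleartext. This mirrors the last step described above.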

Figure 2-17 shows the interaction between a dial-in user and the TACACS+ client and server.

The Remote Authentication Dial-In User Service (RADIUS) protocol was developed by Livingston Enterprises, Inc., as an access server authentication and accounting protocol. In June 1996, the RADIUS protocol specification was submitted to the IETF. The RADIUS specification (RFC 2865) is a proposed standard, and the RADIUS accounting specification (RFC 2866) is an informational RFC.

RADIUS uses UDP as its transport. Although some early implementations of RADIUS used port 1645, the official UDP port to use is 1812. Generally, the RADIUS protocol is considered to be a connectionless service. Issues related to server availability, retransmission, and timeouts are handled by the RADIUS-enabled devices rather than by the transmission protocol.

RADIUS is a client/server protocol. The RADIUS client is typically a NAS; the RADIUS server is usually a daemon process running on a UNIX or Windows NT machine. The client is responsible for passing user information to designated RADIUS servers and then acting on the response that is returned. RADIUS servers are responsible for receiving user connection requests, authenticating the user, and then returning all configuration information necessary for the client to deliver the service to the user. A RADIUS server can act as a proxy client to other RADIUS servers or to other kinds of authentication servers. Figure 2-18 shows the RADIUS packet format.

The RADIUS server can support a variety of methods to authenticate a user. When the server is provided with the username and original password given by the user, the server can support PPP PAP or CHAP, UNIX login, and other authentication mechanisms. What is supported depends on what a vendor has implemented.

Typically, a user login consists of a query (access-request) from the NAS to the RADIUS server and a corresponding response (access-accept or access-reject) from the server. The access-request packet contains the username, encrypted password, NAS IP address, and port. The format of the request also provides information about the type of session the user wants to initiate.
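The following minimal sketch assembles an access-request to make the packet layout concrete. It assumes the RADIUS encoding from RFC 2865: a 20-byte header made of Code, Identifier, Length, and a 16-byte Request Authenticator, followed by type-length-value attributes. It also assumes the standard attribute numbers for User-Name, User-Password, NAS-IP-Address, and NAS-Port. The function names are hypothetical, and the already-encrypted password is passed in as opaque bytes.

```python
import ipaddress
import os
import struct

ACCESS_REQUEST = 1                   # RADIUS Code for access-request
USER_NAME, USER_PASSWORD = 1, 2      # attribute type numbers (RFC 2865)
NAS_IP_ADDRESS, NAS_PORT = 4, 5


def attribute(attr_type: int, value: bytes) -> bytes:
    """Encode one attribute: Type (1 byte), Length (1 byte, counts the 2 header bytes), Value."""
    if not 1 <= len(value) <= 253:
        raise ValueError("attribute value must be 1-253 bytes")
    return struct.pack("!BB", attr_type, len(value) + 2) + value


def access_request(identifier: int, authenticator: bytes, username: str,
                   hidden_password: bytes, nas_ip: str, nas_port: int) -> bytes:
    """Assemble an access-request carrying the fields named in the text."""
    if len(authenticator) != 16:
        raise ValueError("Request Authenticator must be 16 bytes")
    attrs = (attribute(USER_NAME, username.encode())
             + attribute(USER_PASSWORD, hidden_password)
             + attribute(NAS_IP_ADDRESS, ipaddress.IPv4Address(nas_ip).packed)
             + attribute(NAS_PORT, struct.pack("!I", nas_port)))
    length = 20 + len(attrs)         # header plus attributes
    return struct.pack("!BBH", ACCESS_REQUEST, identifier, length) + authenticator + attrs


if __name__ == "__main__":
    request_auth = os.urandom(16)    # random Request Authenticator
    pkt = access_request(7, request_auth, "alice", bytes(16), "192.0.2.10", 3)
    print(len(pkt), pkt[:4].hex())
```

The Length field covers the whole packet. This lets the server find where the attribute list ends within the UDP datagram.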

When the RADIUS server receives the access-request packet from the NAS, it searches a database for the username listed. If the username does not exist in the database, either a default profile is loaded or the RADIUS server immediately sends an access-reject message. This access-reject message can be accompanied by an optional text message, which may indicate the reason for the refusal.

In RADIUS, the authentication and authorization functionalities are coupled together. If the username is found and the password is correct, the RADIUS server returns an access-accept response, including a list of attribute-value pairs that describe the parameters to be used for this session. Typical parameters include service type (shell or framed), protocol type, IP address to assign the user (static or dynamic), access list to apply, or a static route to install in the NAS routing table. The configuration information in the RADIUS server defines what will be installed on the NAS.

The accounting features of the RADIUS protocol can be used independently of RADIUS authentication or authorization. The RADIUS accounting functions allow data to be sent at the start and end of sessions, indicating the amount of resources (such as time, packets, bytes, and so on) used during the session. An Internet service provider (ISP) might use RADIUS access control and accounting software to meet special security and billing needs.

Transactions between the RADIUS client and RADIUS server are authenticated through the use of a shared secret, which is never sent over the network. However, communication between the client and server is in the clear except for the user passwords, which are encrypted. The following steps are used to encrypt the user-supplied password:

The 16-byte random number from the Request Authenticator field and the preconfigured shared secret are input to an MD5 hash function, which results in a 16-byte hash.

The user-provided password is padded at the end with nulls to a multiple of 16 bytes. A password of 16 bytes or fewer therefore becomes a single 16-byte block.

The hash from Step 1 is XOR'd with the padded password to create the encrypted password.

The recipient of the encrypted password calculates its own hash because it also has knowledge of the preconfigured shared secret. When this hash is XOR'd with the encrypted password, the result is the password in cleartext.

Figure 2-19 shows the RADIUS login and authentication process.

NOTE

With both TACACS+ and RADIUS, it is important to remember that encryption is performed between the TACACS+/RADIUS client and the TACACS+/RADIUS server. If the TACACS+/RADIUS client is a NAS and not the client PC, any communication between the PC and the NAS is not encrypted. (See Figure 2-20.) In addition, the communication between the NAS and the TACACS+/RADIUS server may traverse networks, which can easily be tapped into and snooped. Therefore, any authentication mechanism using cleartext passwords, such as PPP PAP, should not be used.

Kerberos is a secret-key network authentication protocol, developed at the Massachusetts Institute of Technology (MIT), that uses the Data Encryption Standard (DES) cryptographic algorithm for encryption and authentication. The Kerberos version 5 protocol is an Internet standard specified by RFC 1510.

Kerberos was designed to authenticate user requests for network resources. Kerberos is based on the concept of a trusted third party that performs secure verification of users and services. In the Kerberos protocol, this trusted third party is called the key distribution center (KDC), sometimes also called the authentication server. The primary use of Kerberos is to verify that users and the network services they use are really who and what they claim to be. To accomplish this, a trusted Kerberos server issues “tickets” to users. These tickets have a limited life span and are stored in the user's credential cache. They can later be used in place of the standard username-and-password authentication mechanism.

A number of Kerberos-related terms are defined here. The following definitions will make the subsequent text easier to understand:

Credential—. A general term that refers to authentication tickets, such as ticket-granting tickets (TGTs) and service credentials. Kerberos credentials verify the identity of a user or service. If a network service decides to trust the Kerberos server that issued a ticket, the ticket can be used in place of retyping a username and password. Credentials have a default life span of 8 hours.

Instance—. An authorization-level label for Kerberos principals.

Kerberized—. Applications and services that have been modified to support the Kerberos credential infrastructure.

Kerberos realm—. A domain consisting of users, hosts, and network services that are registered to a Kerberos server. The Kerberos server is trusted to verify a user's or network service's identity to another user or network service. Kerberos realms must always be in uppercase characters. TCP fragmentation must also be defined on the KDC server. The Kerberos realm is also used to map a DNS domain to a Kerberos realm.

Kerberos server—. A daemon running on a network host. Users and network services register their identities with the Kerberos server. Network services query the Kerberos server to authenticate to other network services.

Key distribution center (KDC)—. A Kerberos server and database program running on a network host.

Principal—. Also known as a Kerberos identity, this is who you are or what a service is according to a Kerberos server.

Service credential—. A credential for a network service. When issued from the KDC, this credential is encrypted with the password shared by the network service and the KDC, and with the user's TGT.

SRVTAB—. A password that a network service shares with the KDC. The network service authenticates an encrypted service credential by using the SRVTAB (also known as KEYTAB) to decrypt it.

Ticket-granting ticket (TGT)—. A credential that the KDC issues to authenticate users. When users receive a TGT, they can authenticate to network services within the Kerberos realm represented by the KDC.

Initially, the Kerberos client has knowledge of an encryption key, Kclient, that is known only to the user and the KDC. Similarly, each application server shares an encryption key, Kserver, with the KDC. (See Figure 2-21.)

When the client wants to create an association with a particular application server, the client uses the authentication request and response to first obtain a ticket and a session key from the KDC. (See Figure 2-22.)

The steps are as follows:

The client sends an authentication request to the KDC. This request contains the following information:

Its claimed identity

The name of the application server

A requested expiration time for the ticket

A random number that will be used to match the authentication response with the request

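The KDC verifies that the claimed identity exists in the Kerberos database and creates an authentication response. If pre-authentication is used, the KDC first verifies that the client knows Kclient before responding (for example, by checking a time stamp that the client encrypted with that key).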

The KDC returns the response to the client. The authentication response contains the following information:

The session key, Ksession

The assigned expiration time

The random number from the request

The name of the application server

Other information from the ticket

This information is all encrypted with Kclient, the key derived from the user's password that was registered with the authentication server. The KDC also returns a Kerberos ticket containing the random session key, Ksession, which will be used for authentication of the client to the application server; the name of the client to whom the session key was issued; and an expiration time after which the session key is no longer valid. The Kerberos ticket is encrypted using Kserver.

When the client receives the authentication reply, it prompts the user for the password. The key derived from this password, Kclient, is used to decrypt the reply and recover the session key, Ksession.

Now the client is ready to communicate with the application server.
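The authentication request and response can be modeled in a short, self-contained sketch. This is a teaching model only, and it rests on several stated assumptions. Real Kerberos uses ASN.1 messages and standardized encryption types. Here, `seal` and `unseal` are a toy authenticated cipher built from SHA-256 and HMAC, and `string_to_key` uses PBKDF2 as a stand-in for Kerberos's password-to-key function. The realm, the principal names, and all function names are hypothetical. What the sketch does preserve is the structure described above: the reply is readable only with Kclient, and the ticket only with Kserver.

```python
import hashlib
import hmac
import json
import os
import time

REALM = "EXAMPLE.COM"  # hypothetical realm


def string_to_key(password: str, principal: str) -> bytes:
    """Stand-in for Kerberos string-to-key: derive Kclient from the password (PBKDF2 here)."""
    salt = (REALM + principal).encode()
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 1000)


def _stream(key: bytes, nonce: bytes, n: int) -> bytes:
    out, ctr = b"", 0
    while len(out) < n:
        out += hashlib.sha256(key + nonce + ctr.to_bytes(8, "big")).digest()
        ctr += 1
    return out[:n]


def _subkeys(key: bytes):
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def seal(key: bytes, obj: dict) -> bytes:
    """TOY encrypt-then-MAC of a JSON object. Not a real Kerberos encryption type."""
    enc_key, mac_key = _subkeys(key)
    data, nonce = json.dumps(obj).encode(), os.urandom(16)
    ct = bytes(a ^ b for a, b in zip(data, _stream(enc_key, nonce, len(data))))
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def unseal(key: bytes, blob: bytes) -> dict:
    """Verify the MAC, then decrypt; a wrong key raises ValueError."""
    enc_key, mac_key = _subkeys(key)
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("wrong key or modified data")
    return json.loads(bytes(a ^ b for a, b in zip(ct, _stream(enc_key, nonce, len(ct)))))


def kdc_authenticate(db: dict, client: str, server: str, nonce: int, lifetime: int = 8 * 3600):
    """KDC side: return (reply sealed with Kclient, ticket sealed with Kserver)."""
    k_session = os.urandom(32).hex()
    expires = int(time.time()) + lifetime
    reply = seal(db[client], {"session_key": k_session, "expires": expires,
                              "nonce": nonce, "server": server})
    ticket = seal(db[server], {"session_key": k_session, "client": client, "expires": expires})
    return reply, ticket


def client_read_reply(password: str, client: str, reply: bytes, nonce: int) -> bytes:
    """Client side: derive Kclient from the password, decrypt, and match the random number."""
    part = unseal(string_to_key(password, client), reply)
    if part["nonce"] != nonce:
        raise ValueError("reply does not match this request")
    return bytes.fromhex(part["session_key"])
```

The client never sees inside the ticket. It simply forwards the ticket to the application server, which opens it with Kserver and finds the same Ksession.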

NOTE

Kclient is used as the bootstrap mechanism, but in subsequent communication between the KDC and the client, a short-term client key, Kclient-session, is used. Kclient-session is created by having the KDC convert the user's password to the short-term client key. The KDC sends the short-term client key, Kclient-session, encrypted with the user's password, to the client. The user decrypts the short-term client key, and subsequent KDC-to-client communication uses Kclient-session.

The application request and response is the exchange in which a client proves to an application server that it knows the session key embedded in a Kerberos ticket. Figure 2-23 shows the exchange.

The steps in the application request and response are as follows:

The client sends two things to the application server as part of the application request:

The Kerberos ticket (described earlier)

An authenticator, which includes the following (among other fields):

The current time

A checksum

An optional encryption key

These elements are all encrypted with the session key, Ksession, from the accompanying ticket.

After receiving the application request, the application server decrypts the ticket with Kserver, extracts the session key, Ksession, and uses the session key to decrypt the authenticator.

If the same key was used to encrypt the authenticator as was used to decrypt it, the checksum will match, and the verifier can assume that the authenticator was generated by the client named in the ticket and to whom the session key was issued. By itself, this check is not sufficient for authentication because an attacker can intercept an authenticator and replay it later to impersonate the user. For this reason, the verifier also checks the time stamp. If the time stamp is within a specified window (typically 5 minutes), centered around the current time on the verifier, and if the time stamp has not been seen on other requests within that window, the verifier accepts the request as authentic.
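The verifier-side checks in this step can be sketched as follows, taking the authenticator as already decrypted. The sketch interprets the 5-minute figure as a tolerance of up to 300 seconds on either side of the verifier's clock. It keys the replay cache on client name and time stamp only; real implementations record more fields. The class and method names are hypothetical.

```python
import time


class AuthenticatorCheck:
    """Time-window and replay checks applied to a decrypted authenticator."""

    def __init__(self, max_skew: float = 300.0):
        self.max_skew = max_skew  # allowed clock difference in seconds
        self.seen = set()         # (client, time stamp) pairs already accepted

    def accept(self, ticket_client: str, auth_client: str, auth_time: float, now=None) -> bool:
        now = time.time() if now is None else now
        if auth_client != ticket_client:
            return False          # authenticator not from the ticket's owner
        if abs(now - auth_time) > self.max_skew:
            return False          # outside the acceptance window
        # Forget entries too old to pass the window check; they can no longer be replayed.
        self.seen = {e for e in self.seen if e[1] >= now - self.max_skew}
        if (auth_client, auth_time) in self.seen:
            return False          # replay of an earlier authenticator
        self.seen.add((auth_client, auth_time))
        return True
```

The replay cache only needs to remember authenticators that still fall inside the window. Anything older is already rejected by the time-stamp check.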

At this point, the identity of the client has been verified by the server. For some applications, the client also wants to be sure of the server's identity. If such mutual authentication is required, a third step is required.

The application server generates an application response by extracting the client's time from the authenticator and returns it to the client together with other information, all encrypted using the session key, Ksession.

The basic Kerberos authentication protocol allows a client with knowledge of the user's password to obtain a ticket and session key and to prove its identity to any verifier (usually an application server) registered with the KDC. The user's password must be presented each time the user performs authentication with a new verifier. A system should support single sign-on, where the user logs in to the system once and provides the password at that time; subsequent authentication occurs automatically.

Caching the user's password on the workstation, the obvious way to avoid repeated prompts, is dangerous. Although a Kerberos ticket and the key associated with it are valid for only a short time, an intruder knowing the user's password can obtain valid tickets and impersonate the user until the password is changed. This is why the short-term client key, Kclient-session, is used in place of the user's actual password in all but the initial bootstrap communication. The Kerberos approach is to cache only tickets and encryption keys (collectively called credentials) that will work for a limited time period.

The ticket-granting exchange of the Kerberos protocol allows a user to obtain tickets and encryption keys using such short-lived credentials, without re-entering the user's password. When the user first logs in, an authentication request is issued, and a ticket and the client session key for the ticket-granting service are returned by the KDC. This ticket, called a ticket-granting ticket (TGT), has a relatively short life (typically on the order of 8 hours). The response is decrypted, the ticket and session key are saved, and the user's password is forgotten. Subsequently, when the user wants to prove its identity to a new verifier, a new ticket is requested from the KDC using the ticket-granting exchange.
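The following rough sketch shows the caching behavior. The cache keeps only the TGT and service credentials, never the password. It requests a new service ticket through the ticket-granting exchange only when none is cached or the cached one has expired. The `tgs_exchange` callback stands in for the network exchange with the KDC. The callback, the class names, and the field names are all hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Credential:
    """A ticket plus its session key and expiration time (no password is stored)."""
    ticket: bytes
    session_key: bytes
    expires: float


class CredentialCache:
    """Hypothetical client-side cache: reuse service credentials until they expire."""

    def __init__(self, tgt: Credential,
                 tgs_exchange: Callable[[Credential, str], Credential]):
        self.tgt = tgt
        self.tgs_exchange = tgs_exchange  # performs the ticket-granting exchange
        self.service_creds: Dict[str, Credential] = {}

    def get(self, service: str, now: Optional[float] = None) -> Credential:
        now = time.time() if now is None else now
        if self.tgt.expires <= now:
            # Only now would the user need to log in again (for example, with kinit).
            raise PermissionError("TGT expired; a new initial login is required")
        cred = self.service_creds.get(service)
        if cred is None or cred.expires <= now:
            cred = self.tgs_exchange(self.tgt, service)  # uses the TGT, not the password
            self.service_creds[service] = cred
        return cred
```

Repeated access to the same service within a ticket's lifetime involves no KDC traffic. A new verifier costs one ticket-granting exchange and no password prompt.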

NOTE

The ticket-granting exchange is identical to the authentication exchange except that the ticket-granting request has embedded within it an application request (authenticating the client to the authentication server), and the ticket-granting response is encrypted using the client session key from the ticket-granting ticket rather than from the user's password.

Multiple realms, or domains, are supported in Kerberos to allow for scalable implementations. Assume that a corporation has implemented a Kerberos system with two separate realms, Italy and Hungary. When a client in Italy's realm connects to a server in Hungary's realm, Italy's KDC authenticates the client to Hungary's KDC. Hungary's KDC authenticates the client to Hungary's server. Multi-KDC chaining is not allowed, and trust for KDC chaining should go back only one level.

Several utility programs must be installed on the workstation to allow users to obtain Kerberos credentials (kinit), destroy credentials (kdestroy), list credentials (klist), and change their Kerberos passwords (kpasswd). Some sites choose to integrate the Kerberos login tool, kinit, with the workstation login program so that users do not have to type their password twice. This makes the use of Kerberos nearly transparent; users may not even be aware they are using Kerberos.

NOTE

Client/server applications must be modified to use Kerberos for authentication; such Kerberos-aware applications are said to be Kerberized.

Because Kerberos relies on time stamps, all participating systems also need accurate, synchronized clocks. A dependable mechanism for obtaining time is required; most likely, the use of NTP is warranted.

The Distributed Computing Environment (DCE) is a set of functional specifications from the Open Software Foundation (OSF), found at http://www.opengroup.org/. DCE is a set of distributed computing technologies that provides security services to protect and control access to data; name services that make it easy to find distributed resources; and a highly scalable model for organizing widely scattered users, services, and data.

DCE has a modular design and supports authentication and authorization. The implemented authentication part is Kerberos version 5 (although, in theory, another mechanism can be substituted). The authorization part works in a manner similar to Kerberos but is implemented by privilege servers and registration servers. In practice, these are usually delivered with the KDC. The registration server ties the KDC with the user's privileges, which are found in the privilege server. The privilege server combines the universal unique ID (UUID) and the groups into a Kerberos ticket for secure transmission. Kerberos uses usernames (which may not always be consistent or unique across the enterprise). DCE uses the UUIDs, which are 128 bits long. On most systems, the user ID (UID) and group ID (GID) fields are 32 bits each.

In practice, a user can authenticate from any workstation with a username and password. The TGT is issued by the KDC. The workstation then uses the session key from the TGT to form a session with the privilege server. The UUID and access control list (ACL) information is then passed to the workstation through a privilege ticket-granting ticket (PTGT) from the privilege server. The session key encrypted in the PTGT is used. The UUID and the group information are then used as the authorization information to allow or disallow access to services and resources.
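The final authorization decision can be pictured as a lookup of the UUIDs carried in the privilege ticket against a resource's ACL. This is a simplified sketch under assumed semantics. Here an ACL simply maps a principal or group UUID to a set of permission names, whereas real DCE ACLs define several entry types and masks. All names are hypothetical. Python's `uuid` module supplies the 128-bit identifiers.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, FrozenSet, Set


@dataclass(frozen=True)
class PrivilegeAttributes:
    """Authorization data vouched for by the privilege server (simplified)."""
    principal: uuid.UUID
    groups: FrozenSet[uuid.UUID] = frozenset()


def is_allowed(priv: PrivilegeAttributes, acl: Dict[uuid.UUID, Set[str]], permission: str) -> bool:
    """Grant access if the principal's UUID or any of its group UUIDs holds the permission."""
    for ident in (priv.principal, *priv.groups):
        if permission in acl.get(ident, set()):
            return True
    return False
```

Because the checks use UUIDs rather than usernames, two users who happen to share a name on different systems cannot be confused.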

NOTE

The DCE effort has not produced the groundswell effect its supporters hoped for. Today, some organizations have embraced it, but it is manpower-intensive to support (as is Kerberos) because it is fairly complex and relies on several other DCE services being implemented. Therefore, it is not found in use very often.

Multilevel Information Systems Security Initiative (MISSI) is a network security initiative, under the leadership of the National Security Agency (NSA). MISSI provides a framework for the development and evolution of interoperable, complementary security products to provide flexible, modular security for networked information systems across the Defense Information Infrastructure (DII) and the National Information Infrastructure (NII). These MISSI building blocks share a common network security infrastructure and are based on common security protocols and standards. Flexible solutions are tailored from these building blocks to meet a system's security requirements and may easily evolve, as future MISSI components provide additional backward-compatible security services and assurance.

Although some MISSI components result from government-contracted developments, most components are offered by commercial vendors as off-the-shelf products. The MISSI building blocks include the following:

FORTEZZA and FORTEZZA Plus

Firewalls

Guards

Inline encryptors

Trusted computing

FORTEZZA, combined with FORTEZZA-enabled applications, provides security services appropriate for protecting sensitive-but-unclassified (SBU) data. FORTEZZA provides the following features:

FORTEZZA Plus supports users of classified information with strong encryption methods. FORTEZZA Plus is an upgraded version of FORTEZZA that can be used to encrypt classified information up through Top Secret information. FORTEZZA Plus must be used in conjunction with a high assurance guard such as the secure network server (SNS), which ensures that the encryption of information is invoked. The use of FORTEZZA Plus to process classified information at different levels can be affected by the security limitations of other components in the system.

The FORTEZZA card is a cryptographic token in a PCMCIA form factor that provides encryption/decryption and digital signature functions. It uses DSA and SHA for signature and message digests but NSA-designed key agreement and encryption algorithms. The key agreement algorithm is a variant of Diffie-Hellman called Key Exchange Algorithm (KEA), and the encryption algorithm is a block cipher called SKIPJACK. The card also stores certificates that include individualized key material used by the cryptographic and signature functions. The software on the workstation (PC, UNIX, and so on) exchanges commands and data with the FORTEZZA card to encrypt and sign messages before it sends them. It likewise uses the card to decrypt and verify the signatures of received messages. Each time the card is inserted into a workstation, the owner must unlock the card by entering a PIN. FORTEZZA card PINs can range from 4 to 12 characters. PINs may be a combination of alpha and numeric characters.

To perform application functions for the user, FORTEZZA must interoperate with FORTEZZA-enabled applications. These applications are either government developed or COTS applications (such as e-mail) that have been modified to interface with and use FORTEZZA security features. A large variety of such applications exist; more are being added as they are developed and tested.

Major types of FORTEZZA-enabled applications include these:

Electronic messaging—. FORTEZZA can secure e-mail, electronic data interchange (EDI), electronic commerce, and facsimile to provide message encryption, authentication, and data integrity.

World Wide Web (WWW)—. FORTEZZA can protect secure web transactions using strong identification and authentication and Secure Sockets Layer (SSL) interactions. Netscape has built a FORTEZZA-enabled version of its browser that links SSL with FORTEZZA.

File and media encryptors—. These encryptors are applications written to enable FORTEZZA to secure user files on storage media.

Identification and authentication—. After the FORTEZZA card has been installed in the workstation and the PIN has been correctly entered, the identity of the user is known and trusted. Access to other devices across a network can be authorized by exchanging this identification and authentication information in a trusted manner.

NOTE

FORTEZZA was originally designed by the NSA to provide strong cryptography while allowing the NSA to incorporate key escrow into the device. This was done using the Law Enforcement Access Field (LEAF), which contained the session key used to encrypt the transmitted traffic. The NSA could decrypt a communication by recovering the session key from the LEAF. The LEAF was embedded in the IV (initialization vector), a block of data used to randomize encryption so that identical plaintexts do not produce identical ciphertexts. However, because of the widespread controversy over key escrow, the NSA removed the LEAF in 1997. A dummy LEAF is now used for the IV for backward compatibility.

802.1x is the standard developed by the IEEE that enables authentication and key management for IEEE 802 local area networks. It allows devices to be authenticated and authorized before they can logically connect to a port on a switch. In the case of Ethernet or Token Ring, these ports are physical entities that the device plugs into. In the case of wireless networks, however, these ports are logical entities known as associations.

The 802.1x standard defines three main logical entities (which may or may not reside on separate devices) as illustrated in Figure 2-24:

Supplicant—. A device that needs access to a LAN (for example, a laptop or workstation).

Authenticator—. A device that is responsible for initiating the authentication process and subsequently acts as a relay between the actual authentication server and the supplicant.

Authentication server—. A device that is responsible for performing the actual authentication and authorization on behalf of the authenticator. It contains profile information for all the users on the network and can use that information to authenticate and authorize users to connect to the ports on the authenticator.

The authentication data between the three entities is exchanged using EAP packets, which are carried by a different protocol on each leg of the exchange. An encapsulation mechanism known as EAP over LANs (EAPOL) is defined in 802.1x to allow communication between the supplicant and the authenticator. EAPOL is defined separately for both Ethernet and Token Ring. The EAP messages are thus encapsulated using the EAPOL frames for transport between the supplicant and the authenticator. Upon receiving the EAPOL frame, the authenticator strips off the headers and, if the authenticator and authentication server reside on different devices, forwards the EAP message using another protocol. Communication between the authenticator and the authentication server is typically done via TACACS+ or RADIUS. RADIUS is generally preferred because it has EAP encapsulation extensions built into it. An example of the 802.1x transaction for authenticating a workstation to a LAN switch is shown in Figure 2-25. A RADIUS server is used as the authentication server.
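The relay step performed by the authenticator can be sketched as follows. The sketch assumes the EAPOL header layout from 802.1x: a protocol version, a packet type, and a 2-byte body length, carried in frames with EtherType 0x888E. It also assumes the RADIUS EAP-Message attribute (type 79), which holds at most 253 bytes, so larger EAP packets are split across several attributes. The function names are hypothetical. A real RADIUS request would also need a Message-Authenticator attribute and the other RADIUS fields, which are omitted here.

```python
import struct

ETH_P_PAE = 0x888E        # EtherType used for EAPOL frames
EAPOL_EAP_PACKET = 0      # EAPOL packet type that carries an EAP packet
RADIUS_EAP_MESSAGE = 79   # RADIUS attribute type for EAP-Message


def eap_from_eapol(ethertype: int, payload: bytes) -> bytes:
    """Strip the 4-byte EAPOL header and return the encapsulated EAP packet."""
    if ethertype != ETH_P_PAE:
        raise ValueError("not an EAPOL frame")
    _version, ptype, body_len = struct.unpack("!BBH", payload[:4])
    if ptype != EAPOL_EAP_PACKET:
        raise ValueError(f"EAPOL packet type {ptype} carries no EAP packet")
    eap = payload[4:4 + body_len]
    if len(eap) != body_len:
        raise ValueError("truncated EAPOL body")
    return eap


def eap_to_radius_attributes(eap: bytes) -> bytes:
    """Wrap the EAP packet in one or more EAP-Message attributes (253-byte value limit)."""
    out = b""
    for i in range(0, len(eap), 253):
        chunk = eap[i:i + 253]
        out += struct.pack("!BB", RADIUS_EAP_MESSAGE, len(chunk) + 2) + chunk
    return out
```

As a usage example, an EAP-Response/Identity for the user "alice" is `struct.pack("!BBHB", 2, 1, 10, 1) + b"alice"` (Code 2 = Response, Type 1 = Identity). The authenticator would extract it from the EAPOL frame and forward it in a single EAP-Message attribute.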

This section focuses on technologies designed to address security needs for specific application protocols. The intent of this chapter is to familiarize the reader with most of the underlying security technologies available today, which form the basis for securing the network infrastructure. Some of the application layer security protocols are used as security solutions for specific environments, such as securing Voice over IP networks, as discussed in the next chapter.

The application layer pertains to the details of a particular application such as Telnet, FTP, or HTTP, and doesn't concern itself with the details of the movement of data across a network. This layer uses end-to-end protocols, in which end systems are responsible for providing security for the application protocol. Not many security protocols are specifically designed for individual applications. There are too many applications to make such an approach scalable. However, a few merit mentioning. Because the World Wide Web has become one of the fastest growing applications on the Internet, a specific security protocol was designed to be used for secure web transactions: Secure HyperText Transfer Protocol (SHTTP). The Secure Multipurpose Internet Mail Extensions (S/MIME) protocol was designed to build security functionality on top of the MIME protocol to be easily integrated into e-mail and messaging products. Both are detailed here.

SHTTP is a secure message-oriented communications protocol designed to be used for securing messages using the HTTP protocol. The protocol preserves the characteristics of HTTP while allowing request and reply messages to be signed, authenticated, encrypted, or any combination of these (including no protection). SHTTP clients can communicate with servers that do not support SHTTP (although in these cases, the security features of SHTTP would not be applied).

Multiple key-management mechanisms are supported, including manually shared password-style secrets and public key exchange. If some hosts do not have a public key pair, it is possible to use pre-arranged symmetric session keys to send confidential messages. These would usually be provided out of band.

Secure HTTP can verify message integrity and sender authenticity by computing a message authentication code (MAC) for a message. The MAC is computed as a keyed hash over the document using a shared secret.
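Python's standard `hmac` module can illustrate a keyed hash of this kind. This sketch shows only the general idea. It uses HMAC-SHA256 as the keyed hash, which is an assumption rather than SHTTP's exact MAC construction or negotiated algorithm. The function names are hypothetical.

```python
import hashlib
import hmac


def compute_mac(document: bytes, shared_secret: bytes) -> str:
    """Keyed hash over the document; only holders of the secret can produce it."""
    return hmac.new(shared_secret, document, hashlib.sha256).hexdigest()


def verify_mac(document: bytes, shared_secret: bytes, received_mac: str) -> bool:
    """Recompute the MAC and compare in constant time."""
    return hmac.compare_digest(compute_mac(document, shared_secret), received_mac)
```

Any change to the document, or use of a different secret, yields a different MAC. A successful check therefore shows both that the message is intact and that it came from a holder of the shared secret.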

SHTTP uses option negotiation to allow clients and servers to agree on the following:

The main benefit of using an application-specific protocol such as SHTTP is that very specific security needs can be met. Consider these examples:

The application could deal with a message containing digital signatures by several different agents and make decisions based on who signed what.

Cryptographic security measures could be defined for individual web pages such that individually encrypted web pages could be published on any web server but could only be read by those with authorized keys.

In practice, SHTTP has achieved limited use. Transport layer security implementations are more easily available and more often used for web security.

S/MIME is short for Secure Multipurpose Internet Mail Extensions. The specification was designed to be easily integrated into e-mail and messaging products. S/MIME builds security on top of the industry-standard MIME protocol according to an equally important set of cryptographic standards, the Public Key Cryptography Standards (PKCS). Public key technologies are discussed later in this chapter. MIME was an enhancement to the original messaging specification defined in RFC 822, which restricted the message body to ASCII text. MIME added other forms of content by providing specifications for binary data.

S/MIME was originally developed by RSA Data Security, Inc., and was based on the PKCS #7 data format for messages and the X.509v3 format for certificates. PKCS #7 only supported RSA for key exchange, which meant that S/MIMEv2 only had support for RSA. The S/MIME working group was created in the IETF to add support for other key exchange and signature algorithms.

The S/MIMEv3 standard consists of five parts:

An additional protocol, Enhanced Security Services for S/MIME (RFC 2634), is a set of extensions to S/MIME to allow signed receipts, security labels, and secure mailing lists. The first two of these extensions work with either S/MIMEv2 or S/MIMEv3; secure mailing lists only work with S/MIMEv3.

NOTE

S/MIMEv2 is not an IETF standard. S/MIMEv2 requires the use of RSA key exchange, which is encumbered by U.S. patents held by RSA Data Security, Inc.; further, S/MIMEv2 requires the use of weak cryptography (40-bit keys). Both of these issues have prevented the protocol from being accepted as an IETF standard.

S/MIME provides a consistent way to send and receive secure MIME data by adding cryptographic signature and encryption services. It provides the following cryptographic security services for electronic messaging applications: authentication, message integrity and nonrepudiation of origin (using digital signatures), and privacy and data security (using encryption).

Separate requirements and recommendations are made for how receiving agents handle incoming messages and for how sending agents create outgoing messages. In general, they are based on the following strategy: Be liberal in what you receive and conservative in what you send. The separation of requirements for receiving agents and sending agents derives from the likelihood that there will be S/MIME systems that involve software other than traditional Internet mail clients. S/MIME is not restricted to mail; it can be used with any transport mechanism that transports MIME data, such as HTTP. Further, S/MIME can be used in automated message transfer agents that use cryptographic security services that do not require any human intervention, such as the signing of software-generated documents and the encryption of fax messages sent over the Internet.

The mandatory features required for S/MIME communication are as follows:

A sender may, of course, support other encryption algorithms. The sender is able to advertise the user's capabilities and preference for a choice of encryption algorithms.

The specification leaves it up to implementers and users whether to sign a message first or to encrypt it first for confidentiality. When a message is signed first, the signatories are securely obscured by the encryption. When it is encrypted first, the signatories are exposed, but signatures can be verified without removing the privacy protection. This may be useful in an environment where automatic signature verification is desired, because no private key material is required to verify a signature.

There are security ramifications to choosing whether to sign first or encrypt first. A recipient of a message that is encrypted and then signed can validate that the encrypted block was unaltered, but cannot determine any relationship between the signer and the unencrypted contents of the message. A recipient of a message that is signed and then encrypted can assume that the signed message itself has not been altered, but that a careful attacker may have changed the unauthenticated portions of the encrypted message.

Several characteristics of S/MIME make it a very flexible security solution for a variety of messaging applications:

Multiple signers: Because CMS supports multiple signatures on a single message, S/MIME supports having a message signed by multiple senders.

Multiple recipients: It is possible to send the same message securely to multiple recipients by encrypting the message once with a single content-encryption key (CEK) and then encrypting the CEK individually for each recipient, typically with that recipient's public key.

Receipt: The ability to provide a receipt allows a sender to be sure that the recipient received a message and that it wasn't altered in transit. However, the recipient is not required to generate a receipt, so the lack of a receipt does not indicate that the recipient did not receive the message.

Forwarding: Messages can be forwarded from one recipient to another while leaving the message signature intact and verifiable. This is possible because S/MIME uses a digital signature that covers the whole message.

Transport independence: S/MIME provides end-to-end security at the application layer and is independent of any underlying transport. Therefore, in IP networks it can run over either TCP or UDP.

S/MIMEv3 is becoming widely deployed and is an underlying requirement for providing security services in Session Initiation Protocol (SIP)-based telephony networks, discussed in the next chapter.

The transport layer provides the details of moving the flow of data between two hosts. The following sections describe the security protocols that operate over TCP/IP or some other reliable but insecure transport. They are categorized as transport layer security protocols because their intent is to secure the transport layer and to provide methods for implementing privacy, authentication, and integrity above the transport layer. This layer uses end-to-end protocols, in which end systems are responsible for providing security for the transport protocol.

The Secure Sockets Layer (SSL)/Transport Layer Security (TLS) protocol specifies a mechanism for providing data security layered between application protocols (such as HTTP, Telnet, NNTP, or FTP) and TCP/IP. It provides data encryption, server authentication, message integrity, and optional client authentication for a TCP/IP connection.

The original proprietary SSLv1 specification was designed by Netscape. It was subsequently modified into SSLv2, which was publicly released in 1994. While SSLv2 was being deployed, Microsoft was developing its own incompatible variant, Private Communication Technology (PCT). SSLv2 contained a number of security flaws, some of which PCT corrected, and these flaws ultimately led to the design of SSLv3. SSLv2 used RC4 for encryption and the MD5 algorithm for message authentication. RSA keys were used for both authentication and key exchange. At the time the SSLv2 specification came out, U.S. export laws limited the key length of exported cryptographic algorithms to 40 bits, and many implementations supported this length. SSLv3 added support for DSS for authentication and DH for key agreement. The IETF Transport Layer Security (TLS) working group was formed in 1996 to standardize an SSL-like protocol. TLS is based on SSLv3. TLS 1.0 (RFC 2246) makes DSS authentication, DH key agreement, and 3DES encryption mandatory to implement through its required cipher suite, TLS_DHE_DSS_WITH_3DES_EDE_CBC_SHA. In the following text, any reference to SSL means SSLv3. Only the SSL operation is described because TLS is essentially the same.

NOTE

Although the TLS protocol operation is very similar to that of SSLv3, the key expansion and message authentication computations are incompatible. Therefore, TLS and SSLv3 will not necessarily interoperate.

SSL assumes that the underlying packet delivery mechanism is reliable, and although in theory there are a number of transport protocols that could provide this service, SSL nearly always uses TCP as its transport.

The primary goal of SSL is to provide privacy and reliability between two communicating applications. SSL is a layered protocol consisting of the record protocol, which provides the envelope and security services for the four content layer protocols (handshake, alert, change cipher spec, and application). These are described as follows:

The record protocol: This protocol is used to exchange application layer data. Application messages are fragmented into manageable blocks, optionally compressed, and protected with a MAC (message authentication code). In SSL the MAC is a keyed hash: a message digest computed over the data together with a secret known only to the two endpoints. The result is encrypted and transmitted. The recipient decrypts the received data, verifies the MAC, decompresses and reassembles the data, and delivers the result to the application protocol.

The handshake protocol: This protocol negotiates the cryptographic parameters to be used for a session between the client and the server. When an SSL client and server first start communicating, they agree on a protocol version, select cryptographic algorithms, optionally authenticate each other, and use public key encryption techniques to generate shared secrets.

The alert protocol: This protocol is used to indicate when errors have occurred or when a session between two hosts is being terminated. Each alert is a one-way message that carries a severity level (warning or fatal) and a description of the condition.

The change cipher spec protocol: This protocol signals a change in the encryption and authentication of records. It consists of a single one-way message, sent by each side near the end of the handshake. The message indicates that subsequent records will be protected with the newly negotiated cipher suite and keys.

The application protocols: These are the client/server protocols that run over SSL and have their own assigned ports, for example POP3S (995), NNTPS (563), HTTPS (443), LDAPS (636), and IRCS (994).
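The record-protocol processing described above can be sketched in a few lines of Python. This is a minimal illustration, not a real implementation. It assumes the TLS 1.0 (RFC 2246) MAC construction, which is an HMAC over an implicit 8-byte sequence number, the record type, version, and length, and the fragment. It also assumes NULL compression and a sequence number starting at 0. Encryption is deliberately left out because the Python standard library has no block or stream ciphers. The function names and the key value are hypothetical.

```python
import hashlib
import hmac
import struct

MAX_FRAGMENT = 2 ** 14   # largest plaintext fragment allowed in an SSLv3/TLS record
APPLICATION_DATA = 23    # record content type for application data
TLS_1_0 = (3, 1)         # version bytes carried in every TLS 1.0 record header


def fragment(message: bytes, size: int = MAX_FRAGMENT) -> list:
    """Split an application message into blocks of at most `size` bytes."""
    return [message[i:i + size] for i in range(0, len(message), size)]


def record_mac(mac_key: bytes, seq_num: int, content_type: int, data: bytes) -> bytes:
    """TLS 1.0-style MAC: HMAC-SHA1 over seq_num, type, version, length, and data."""
    header = struct.pack("!QBBBH", seq_num, content_type, *TLS_1_0, len(data))
    return hmac.new(mac_key, header + data, hashlib.sha1).digest()


def protect(mac_key: bytes, message: bytes) -> list:
    """Sender side: fragment, apply NULL compression, and attach a MAC to each block.

    A real implementation would then encrypt each (fragment + MAC) pair."""
    records = []
    for seq_num, block in enumerate(fragment(message)):
        compressed = block  # NULL compression leaves the fragment unchanged
        records.append((compressed, record_mac(mac_key, seq_num, APPLICATION_DATA, compressed)))
    return records


def unprotect(mac_key: bytes, records: list) -> bytes:
    """Receiver side: verify every MAC in sequence, then reassemble the message."""
    blocks = []
    for seq_num, (block, mac) in enumerate(records):
        expected = record_mac(mac_key, seq_num, APPLICATION_DATA, block)
        if not hmac.compare_digest(mac, expected):
            raise ValueError(f"bad record MAC on record {seq_num}")
        blocks.append(block)
    return b"".join(blocks)
```

The MAC covers the sequence number, which is never transmitted. As a result, a reordered or replayed record fails verification even when its contents are identical to another record.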

NOTE

Although the SSLv3 and TLS specifications allow for compression, the only compression currently stipulated is NULL. Therefore, compression is not commonly used.

Figure 2-26 shows a diagram of an SSL/TLS record. The content type specifies the content protocol used to process the plaintext fragment. A fragment is the portion of the data stream that is being transmitted. The protocol version is 3.0 for SSL and 3.1 for TLS 1.0. The record length is the length of the fragment, in bytes. These three fields make up the header and are sent in the clear. The rest of the record is encrypted. It consists of the data being sent, the computed MAC, and possibly some padding. Padding is required when using block ciphers such as DES, because a block cipher requires the data to be encrypted to be a multiple of the block length.
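The clear-text header and the padding rule described above can be made concrete with Python's `struct` module. This is a sketch, assuming the SSLv3/TLS 1.0 byte layout: a one-byte content type, two version bytes, and a two-byte big-endian length. It also assumes a DES-style 8-byte cipher block. The padding function follows the TLS 1.0 rule, in which every padding byte holds the padding length. SSLv3 fixes only the final length byte, so SSLv3 padding can differ. The helper names are hypothetical.

```python
import struct

# Content-type and version values defined by the SSLv3 and TLS 1.0 specifications.
CONTENT_TYPES = {20: "change_cipher_spec", 21: "alert", 22: "handshake", 23: "application_data"}
VERSIONS = {(3, 0): "SSL 3.0", (3, 1): "TLS 1.0"}
HEADER = struct.Struct("!BBBH")  # type (1 byte), major and minor (1 byte each), length (2 bytes)


def build_header(content_type: int, version: tuple, length: int) -> bytes:
    """Pack the clear-text record header."""
    return HEADER.pack(content_type, version[0], version[1], length)


def parse_header(record: bytes) -> dict:
    """Decode the first five bytes of a record."""
    content_type, major, minor, length = HEADER.unpack(record[:HEADER.size])
    return {
        "type": CONTENT_TYPES.get(content_type, "unknown"),
        "version": VERSIONS.get((major, minor), "unknown"),
        "length": length,
    }


def tls10_cbc_padding(data_len: int, mac_len: int, block_size: int = 8) -> bytes:
    """Minimal TLS 1.0 CBC padding: every pad byte, including the final
    padding-length byte, holds the padding length, and data + MAC + padding
    fills a whole number of cipher blocks."""
    pad_len = (block_size - (data_len + mac_len + 1) % block_size) % block_size
    return bytes([pad_len] * (pad_len + 1))
```

For example, `parse_header(bytes([22, 3, 0, 0, 5]))` returns `{'type': 'handshake', 'version': 'SSL 3.0', 'length': 5}`. `tls10_cbc_padding(13, 20)` returns seven bytes of value 6, bringing 13 data bytes plus a 20-byte SHA-1 MAC up to 40 bytes, or five DES blocks.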

The SSL handshake protocol provides connection security that has three basic properties:

The peer's identity can be authenticated using asymmetric, or public key, cryptography (for instance, RSA, DSS, and so on). This authentication can be made optional, but is generally required for at least one of the peers.

The negotiation of a shared secret is secure—the negotiated secret is unavailable to eavesdroppers, and for any authenticated connection the secret cannot be obtained, even by an attacker who can place himself in the middle of the connection.

The negotiation is reliable—no attacker can modify the negotiation communication without being detected by the parties to the communication.

Consider an example using a web client and server. The web client initiates an SSL session by connecting to an SSL-capable server. A typical SSL-capable web server accepts SSL connection requests on a different port (port 443 by default) than standard HTTP requests (port 80 by default). When the client connects to this port, it initiates a handshake that establishes the SSL session. After the handshake finishes, communication is encrypted using the negotiated shared session key and message integrity checks are performed until the SSL session expires. SSL creates a session during which the handshake must happen only once.
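For comparison with this web example, the following sketch uses Python's standard `ssl` module to act as the client. It is an illustration under stated assumptions. `make_client_context` and `fetch_status_line` are hypothetical helper names, and `www.example.com` is a placeholder host. The context refuses anything older than TLS 1.2, because SSLv2, SSLv3, TLS 1.0, and TLS 1.1 have since been deprecated.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client context that verifies the server certificate and host name."""
    # create_default_context() loads the system's trusted CA certificates and
    # enables certificate-chain and host name checking.
    ctx = ssl.create_default_context()
    # Refuse SSLv2, SSLv3, TLS 1.0, and TLS 1.1.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def fetch_status_line(host: str, port: int = 443):
    """Connect over TCP, run the handshake, and send one HTTP request."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw:
        # wrap_socket() performs the handshake immediately on a connected socket
        # and raises ssl.SSLError (or a subclass) if it fails.
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            negotiated = (tls.version(), tls.cipher()[0])
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls.sendall(request.encode("ascii"))
            status_line = tls.recv(1024).split(b"\r\n", 1)[0]
    return negotiated, status_line
```

Calling `fetch_status_line("www.example.com")` requires network access. It returns the negotiated protocol version and cipher suite name together with the first line of the HTTP response, all carried over the port 443 connection.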

Figure 2-27 shows the SSL handshake process. (See the section “Public Key Infrastructure and Distribution Models” later in this chapter for more information about digital certificates.) The steps in the process are as follows:

The SSL client connects to the SSL server and requests the server to authenticate itself. It also includes the cipher suites it supports.

The server identifies itself by sending its digital certificate, which contains its public key. This exchange may optionally include an entire certificate chain, up to some root certificate authority (CA).

Certificates are verified by checking validity dates and verifying that the certificate bears the signature of a trusted CA. The certificate is signed but not encrypted.

The server also returns the cipher suite it has selected for the initial secure tunnel.

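The client generates a random secret key, encrypts it with the server's public key, and sends it to the SSL server. Only a server that holds the matching private key can recover this secret, and this is what ultimately authenticates the server. From this point on, communication is protected with keys derived from the shared secret, using the selected cipher suite.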

The message encryption algorithm and the hash function for integrity are negotiated for the session. Usually the client presents a list of all the algorithms it supports, and the server selects one from that list, typically the strongest that both sides support.

The client and server generate the session keys by following these steps:

The client and the server each generate random data and exchange it in their initial hello messages. These random values are sent in cleartext.

The client generates a further random value, the premaster secret, and sends it to the server encrypted with the server's public key (obtained from the server's certificate), so that only the server can recover it.

The encryption and MAC keys are generated from the premaster secret and both random values using hash functions.

The handshake process is complete and a secure session tunnel is created.

NOTE

Mutual authentication can be carried out by using the optional client-based authentication mechanism. This would occur after Step 3 in the preceding example. However, SSL only supports certificate-based client authentication, which is not often used due to the lack of a public key infrastructure. Instead, many application protocols incorporate their own client authentication mechanism, such as username/password or a one-time password technology such as S/Key. These authentication mechanisms are more secure when run over SSL.
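The cipher suite negotiation in the handshake steps above can be sketched as a simple selection function. The SSL and TLS specifications leave the selection policy to the server. This sketch assumes one hypothetical policy, in which the server ranks its suites from strongest to weakest and picks its highest-ranked suite that the client also offered. The suite names are real TLS 1.0 identifiers, but the preference order is only an example.

```python
def select_cipher_suite(client_offer: list, server_preference: list) -> str:
    """Return the server's most preferred suite that the client also offered."""
    offered = set(client_offer)
    for suite in server_preference:  # server_preference is ordered strongest first
        if suite in offered:
            return suite
    # With no suite in common, a real server aborts with a handshake_failure alert.
    raise ValueError("handshake_failure: no cipher suite in common")
```

Example: a client offering `["TLS_RSA_EXPORT_WITH_RC4_40_MD5", "TLS_RSA_WITH_RC4_128_MD5", "TLS_RSA_WITH_3DES_EDE_CBC_SHA"]` to a server that prefers `TLS_DHE_DSS_WITH_3DES_EDE_CBC_SHA`, then `TLS_RSA_WITH_3DES_EDE_CBC_SHA`, then `TLS_RSA_WITH_RC4_128_MD5` ends up with `TLS_RSA_WITH_3DES_EDE_CBC_SHA`. The 40-bit export suite is never chosen because the server does not list it.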

The advantage of the SSL protocol is that it provides connection security that has three basic properties:

The connection is private. After an initial handshake establishes a secret key, symmetric cryptography (for example, DES or RC4) is used to encrypt the data.

The peer's identity can be authenticated using asymmetric, or public key, cryptography (for example, RSA and DSS).

The connection is reliable. Message transport includes a message integrity check using a keyed MAC. Secure hash functions (such as SHA and MD5) are used for MAC computations.
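The handshake step in which keys are "generated from random data using hash functions", and the use of MD5 and SHA listed above, can be made concrete with the TLS 1.0 pseudorandom function (PRF) from RFC 2246. The sketch below assumes RSA key exchange and the TLS_RSA_WITH_3DES_EDE_CBC_SHA suite, which uses 20-byte MAC secrets, 24-byte 3DES keys, and 8-byte IVs. The function names are hypothetical. SSLv3 uses a different derivation, which is one reason the two protocols do not interoperate directly.

```python
import hashlib
import hmac


def p_hash(hash_name: str, secret: bytes, seed: bytes, length: int) -> bytes:
    """P_hash from RFC 2246: concatenates HMAC(secret, A(i) + seed) for i = 1, 2, ..."""
    output = b""
    a = seed                                          # A(0) = seed
    while len(output) < length:
        a = hmac.new(secret, a, hash_name).digest()   # A(i) = HMAC(secret, A(i-1))
        output += hmac.new(secret, a + seed, hash_name).digest()
    return output[:length]


def tls10_prf(secret: bytes, label: bytes, seed: bytes, length: int) -> bytes:
    """TLS 1.0 PRF: P_MD5(S1, label + seed) XOR P_SHA-1(S2, label + seed)."""
    half = (len(secret) + 1) // 2
    s1, s2 = secret[:half], secret[len(secret) - half:]  # halves share a byte if odd
    md5_stream = p_hash("md5", s1, label + seed, length)
    sha_stream = p_hash("sha1", s2, label + seed, length)
    return bytes(x ^ y for x, y in zip(md5_stream, sha_stream))


def derive_session_keys(pre_master: bytes, client_random: bytes, server_random: bytes):
    """Derive the master secret and the TLS_RSA_WITH_3DES_EDE_CBC_SHA key block."""
    master = tls10_prf(pre_master, b"master secret", client_random + server_random, 48)
    # Key expansion uses the two random values in the opposite order.
    sizes = [("client_mac", 20), ("server_mac", 20), ("client_key", 24),
             ("server_key", 24), ("client_iv", 8), ("server_iv", 8)]
    block = tls10_prf(master, b"key expansion", server_random + client_random,
                      sum(n for _, n in sizes))
    keys, offset = {}, 0
    for name, n in sizes:
        keys[name] = block[offset:offset + n]
        offset += n
    return master, keys
```

Both endpoints run the same computation on the same three inputs. Only the premaster secret is kept private, and the two random values travel in the clear. As a result, the client and server arrive at identical keys without ever sending them.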

SSL/TLS is widely used with HTTP traffic. Other protocols are also starting to use SSL/TLS and have specific port numbers defined. Refer to http://www.iana.org/assignments/port-numbers for a list of officially assigned application port numbers. Table 2-2 lists some applications that are starting to use TLS to provide application-specific security. Note that most of these port numbers are specified for TCP and UDP.

The Secure Shell (SSH) is a protocol for secure remote login and other secure network services over an insecure network. It provides support for secure remote login, secure file transfer, and the secure forwarding of TCP/IP and X Window System traffic. It can automatically encrypt, authenticate, and compress transmitted data. The SSH protocol is designed to provide strong security against cryptanalysis and protocol attacks, to work reasonably well without a global key management or certificate infrastructure, and to use existing certificate infrastructures (such as DNSSEC and X.509) when available.

The SSH protocol consists of three major components:

The transport layer protocol, which provides server authentication, confidentiality, and integrity with perfect forward secrecy. Optionally, it may also provide compression.

The user authentication protocol, which authenticates the client to the server.

The connection protocol, which multiplexes the encrypted tunnel into several logical channels.

The SSH transport layer is a secure low-level transport protocol. It provides strong encryption, cryptographic host authentication, and integrity protection. Authentication at this layer is host based; the transport layer protocol does not perform user authentication. User authentication is handled by a higher-level protocol, such as the SSH user authentication protocol, running on top of it.

The protocol has been designed to be simple and flexible enough to allow parameter negotiation and to minimize the number of round trips. The key exchange method, the public key algorithm, the symmetric encryption algorithm, the message authentication algorithm, and the hash algorithm are all negotiated.
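One reason SSH needs few round trips is that each side sends its algorithm lists once, and both sides apply the same deterministic rule. RFC 4253 states the rule for the encryption, MAC, and compression algorithms: the chosen algorithm is the first one on the client's comma-separated name-list that also appears on the server's list. Key-exchange selection adds extra conditions about host key compatibility, which are omitted here. The function name below is hypothetical.

```python
def ssh_choose(client_list: str, server_list: str) -> str:
    """Pick the first algorithm on the client's name-list that the server also supports."""
    server_algs = set(server_list.split(","))
    for alg in client_list.split(","):  # the client's order decides, not the server's
        if alg in server_algs:
            return alg
    raise ValueError("no matching algorithm; the connection must be dropped")
```

For example, `ssh_choose("aes128-cbc,3des-cbc", "3des-cbc,aes128-cbc")` returns `"aes128-cbc"` because the client's preference wins. This contrasts with SSL, where the server picks from the client's offer.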

Data integrity is protected by including with each packet a MAC computed from a shared secret, a packet sequence number, and the contents of the packet.
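The per-packet MAC described above can be sketched with the standard library. This is a minimal sketch, assuming the RFC 4253 binary packet layout (packet_length, padding_length, payload, random padding), the required `hmac-sha1` MAC algorithm, and an 8-byte cipher block size. The helper names and key are hypothetical. Encryption itself is not shown.

```python
import hashlib
import hmac
import os
import struct


def build_packet(payload: bytes, block_size: int = 8) -> bytes:
    """RFC 4253 binary packet: packet_length, padding_length, payload, random padding."""
    # Padding must be at least 4 bytes and bring the packet to a multiple of block_size.
    pad_len = block_size - (5 + len(payload)) % block_size
    if pad_len < 4:
        pad_len += block_size
    packet_length = 1 + len(payload) + pad_len  # excludes itself and the MAC
    return struct.pack("!IB", packet_length, pad_len) + payload + os.urandom(pad_len)


def packet_mac(mac_key: bytes, seq_num: int, packet: bytes) -> bytes:
    """hmac-sha1 over the implicit 32-bit sequence number and the unencrypted packet."""
    seq = struct.pack("!I", seq_num % 2 ** 32)  # sequence numbers wrap after 2^32 packets
    return hmac.new(mac_key, seq + packet, hashlib.sha1).digest()


def mac_ok(mac_key: bytes, seq_num: int, packet: bytes, received_mac: bytes) -> bool:
    """Receiver check; compare_digest avoids leaking timing information."""
    return hmac.compare_digest(received_mac, packet_mac(mac_key, seq_num, packet))
```

In the protocol, the packet is then encrypted, and the MAC is appended after it without encryption. Each side tracks the sequence number independently, and the number is never sent. A dropped, replayed, or reordered packet therefore produces a MAC mismatch.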

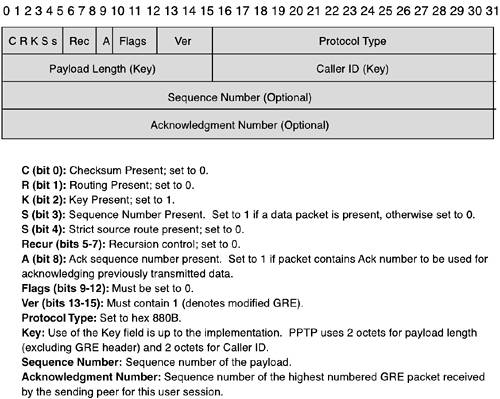

SSH implementations can be found for UNIX, Windows, and Macintosh systems. It is a widely accepted protocol that uses well-known and well-established encryption, integrity, and public key algorithms.