Neural style transfer is the process of applying the style of a reference image to a specific target image, such that the original content of the target image remains unchanged. Here, style is defined as colors, patterns, and textures present in the reference image, while content is defined as the overall structure and higher-level components of the image.

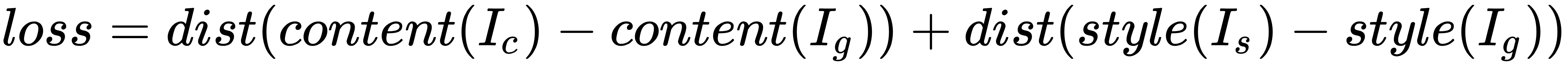

The main objective is to retain the content of the original target image while superimposing the style of the reference image on it. To define this concept mathematically, consider three images: the original content image (denoted as c), the reference style image (denoted as s), and the generated image (denoted as g). We need a way to measure how different images c and g are in terms of their content. Likewise, the generated image should differ as little as possible from the style image in terms of its style features. Formally, the objective function for neural style transfer can be formulated as follows:

$$L_{total}(c, s, g) = \alpha \, L_{content}(c, g) + \beta \, L_{style}(s, g)$$

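Here, α and β are weights used to control the impact of the content and style components on the overall loss. This formulation can be expressed more concretely as follows:

$$L = \alpha \cdot \mathrm{dist}\big(\mathrm{content}(I_c) - \mathrm{content}(I_g)\big) + \beta \cdot \mathrm{dist}\big(\mathrm{style}(I_s) - \mathrm{style}(I_g)\big)$$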

Here, we can define the following components from the preceding formula:

- dist is a norm function; for example, the L2 norm distance

- style(...) is a function to compute representations of style for the reference style and generated images

- content(...) is a function to compute representations of content for the original content and generated images

- Ic, Is, and Ig are the content, style, and generated images respectively
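To make these components concrete, here is a minimal NumPy sketch of the loss. It rests on several assumptions that the text above does not fix: the inputs are arrays of layer activations with shape (height, width, channels) rather than raw images, `dist` is the squared L2 norm, `content` returns the activations unchanged, and `style` uses the Gram matrix of channel correlations, which is the style representation proposed by Gatys et al. The function names and default weights are illustrative only.

```python
import numpy as np

def dist(diff):
    """Squared L2 norm of a difference array (one possible choice of dist)."""
    return float(np.sum(diff ** 2))

def content(features):
    """Content representation: the activations themselves (assumption)."""
    return features

def style(features):
    """Style representation: Gram matrix of channel correlations (assumption)."""
    channels = features.shape[-1]
    flat = features.reshape(-1, channels)      # (positions, channels)
    return flat.T @ flat / flat.shape[0]       # (channels, channels)

def style_transfer_loss(f_c, f_s, f_g, alpha=1.0, beta=1.0):
    """Weighted sum of the content and style distances for activations
    of the content (f_c), style (f_s), and generated (f_g) images."""
    content_term = dist(content(f_c) - content(f_g))
    style_term = dist(style(f_s) - style(f_g))
    return alpha * content_term + beta * style_term

# Random arrays stand in for real layer activations.
rng = np.random.default_rng(0)
f_c = rng.random((4, 4, 3))
f_s = rng.random((4, 4, 3))
print(style_transfer_loss(f_c, f_s, f_c))  # content term is zero, style term is not
```

Because the Gram matrix sums over all spatial positions, it discards where a pattern occurs and keeps only which channels fire together, which is why it is a reasonable proxy for texture and color statistics.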

Thus, minimizing this loss drives style(Ig) toward style(Is) and content(Ig) toward content(Ic), which is exactly what effective style transfer requires. The loss function we will minimize consists of three parts: the content loss, the style loss, and the total variation loss, which we will discuss shortly. The key idea is to retain the content of the original target image while adopting the style of the reference image. Also, remember the following in the context of neural style transfer:

- Style can be defined as the color palettes, specific patterns, and textures present in the reference image

- Content can be defined as the overall structure and higher-level components of the original target image
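The total variation loss mentioned above acts as a smoothness regularizer on the generated image. As a rough sketch, one common form sums the squared differences between neighboring pixels; this particular definition and the function name are assumptions, and other variants exist (for example, using absolute differences or a different exponent).

```python
import numpy as np

def total_variation_loss(image):
    """Sum of squared differences between vertically and horizontally
    adjacent pixels of an image with shape (height, width, channels)."""
    dh = image[1:, :, :] - image[:-1, :, :]   # vertical neighbors
    dw = image[:, 1:, :] - image[:, :-1, :]   # horizontal neighbors
    return float(np.sum(dh ** 2) + np.sum(dw ** 2))

flat = np.full((8, 8, 3), 0.5)                         # perfectly smooth image
noisy = np.random.default_rng(0).random((8, 8, 3))     # pixel-level noise
print(total_variation_loss(flat), total_variation_loss(noisy))
```

A constant image scores zero, while noisy images score high, so adding this term to the objective penalizes grainy output.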

By now, we know that the true power of deep learning for computer vision lies in leveraging deep Convolutional Neural Network (CNN) models, which can be used to extract the right image representations when building these loss functions. In this chapter, we will apply the principles of transfer learning to build our neural style transfer system, using a pretrained model to extract features. We have already discussed pretrained models for computer vision tasks in previous chapters, and we will use the popular VGG-16 model again in this chapter as a feature extractor. The main steps for performing neural style transfer are as follows:

- Leverage VGG-16 to compute layer activations for the style, content, and generated images

- Use these activations to define the specific loss functions mentioned earlier

- Finally, use gradient descent to minimize the overall loss
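The three steps above can be sketched end to end on toy data. To keep the example runnable without downloading pretrained weights, a fixed random linear map (equivalent to a single 1x1 convolution) stands in for VGG-16, and the gradients are derived by hand instead of using a deep learning framework's automatic differentiation. The Gram-matrix style loss, the weights `alpha` and `beta`, the learning rate, and all function names are assumptions made for illustration; this is not the implementation used later in the chapter.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for VGG-16: a fixed random linear map applied at
# every pixel, taking 3 input channels to 4 feature channels.
W = rng.normal(scale=0.5, size=(4, 3))

def features(x):
    """Step 1: map pixels of shape (3, N) to activations of shape (4, N)."""
    return W @ x

def gram(f):
    """Gram matrix of activations, averaged over the N positions."""
    return f @ f.T / f.shape[1]

def loss_and_grad(g, f_c, gram_s, alpha, beta):
    """Step 2: content + style loss, with its gradient w.r.t. the pixels g."""
    f_g = features(g)
    n = f_g.shape[1]
    content_diff = f_g - f_c
    style_diff = gram(f_g) - gram_s
    loss = alpha * np.sum(content_diff ** 2) + beta * np.sum(style_diff ** 2)
    # Gradient w.r.t. the activations, then chain rule through the linear map.
    grad_f = 2 * alpha * content_diff + (4 * beta / n) * (style_diff @ f_g)
    return float(loss), W.T @ grad_f

def transfer(c, s, alpha=1.0, beta=10.0, lr=0.01, steps=200):
    """Step 3: plain gradient descent on the pixels of the generated image."""
    f_c = features(c)
    gram_s = gram(features(s))
    g = c.copy()                     # start from the content image
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(g, f_c, gram_s, alpha, beta)
        history.append(loss)
        g -= lr * grad
    return g, history

c = rng.random((3, 64))              # toy "content image": 3 channels, 64 pixels
s = rng.random((3, 64))              # toy "style image"
g, history = transfer(c, s)
print(history[0], history[-1])       # the loss should decrease over the run
```

Note that the optimization updates the pixels of the generated image while the feature extractor stays fixed, which mirrors how a pretrained network is used here: only as a source of activations, never as something to train.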

If you want to dive deeper into the core principles and theoretical concepts behind neural style transfer, we recommend you read up on the following papers:

- A Neural Algorithm of Artistic Style, by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge (https://arxiv.org/abs/1508.06576)

- Perceptual Losses for Real-Time Style Transfer and Super-Resolution, by Justin Johnson, Alexandre Alahi, and Li Fei-Fei (https://arxiv.org/abs/1603.08155)