0-9

1-10-100 rule – See cost of quality.

3Ds – The idea that an evaluation of a potential automation project should consider automating tasks that are dirty, dangerous, or dull.

The picture at the right is the PackBot EOD robot from the iRobot Corporation, designed to assist bomb squads with explosive ordnance disposal. This is a good example of the second “D” (dangerous).

See automation.

3Gs – A lean management practice based on the three Japanese words gemba, genbutsu, and genjitsu, which translate into “actual place,” “actual thing,” and “actual situation” or “real data.”

• Gemba (or genba) – The actual place where work takes place and value is created.

• Gembutsu (or genbutsu) – The actual things (physical items) in the gemba, such as tools, machines, materials, and defects.

• Genjitsu – The real data and facts that describe the situation.

In Japanese, Genchi Gembutsu means to “go and see” and suggests that the only way to understand a situation is to go to the gemba, which is the place where work is done.

See gemba, lean thinking, management by walking around, waste walk.

3PL – See Third Party Logistics (3PL) provider.

5 Whys – The practice of asking “why” many times to get beyond the symptoms and uncover the root cause (or causes) of a problem.

Here is a simple example:

• Why did the ink-jet label system stop printing? The head clogged with ink.

• Why did the head clog with ink? The compressed air supply had moisture in it.

• Why did the compressed air supply have moisture in it? The desiccant media was saturated.

• Why was the desiccant media saturated? The desiccant was not changed prior to expiration.

• Why was the desiccant not changed prior to expiration? A change procedure does not exist for the compressed air desiccant.

Galley (2008) and Gano (2007) argue persuasively that problems rarely have only one cause and that assuming a problem has only a single root cause can prevent investigators from finding the best solution.

The focus of any type of root cause analysis should be on finding and fixing the system of causes for the problem rather than finding someone to blame. In other words, use the 5 Whys rather than the 5 Who’s.

See Business Process Re-engineering (BPR), causal map, error proofing, impact wheel, kaizen workshop, Root Cause Analysis (RCA).

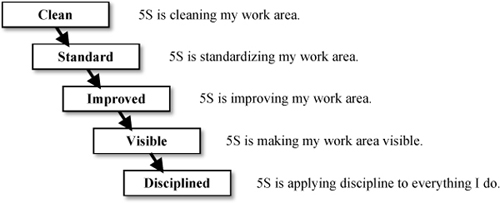

5S – A lean methodology that helps organizations simplify, clean, and sustain a productive work environment.

The 5S methodology originated in Japan and is based on the simple idea that the foundation of a good production system is a clean and safe work environment. Translated from Japanese words that begin with the letter “S,” the closest English equivalents normally used are Sort, Set in order, Shine, Standardize, and Sustain. The following list is a combination of many variants of the 5S list found in various publications:

• Sort (separate, scrap, sift) – Separate the necessary from the unnecessary and get rid of the unnecessary.

• Set in order (straighten, store, simplify) – Organize the work area (red tag, shadow boards, etc.) and put everything in its place.

• Shine (scrub, sweep) – Sweep, wash, clean, and shine everything around the work area.

• Standardize – Use standard methods to maintain the work area at a high level so it is easy to keep everything clean for a constant state of readiness.

• Sustain (systematize, self-discipline) – Ensure that all 5S policies are followed through the entire organization by means of empowerment, commitment, and accountability.

Some lean practitioners add a sixth “S” for Safety. They use this “S” to establish safety procedures in and around the process. However, most organizations include safety as a normal part of the set in order step.

The benefits of a 5S program include reduced waste and improved visibility of problems, safety, morale, productivity, quality, maintenance, leadtimes, impression on customers, and sense of ownership of the workspace. More fundamentally, a 5S program can help the firm develop a new sense of discipline and order that carries over to all activities.

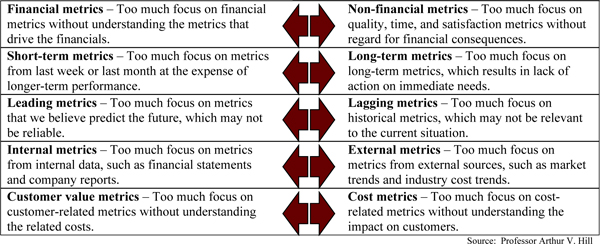

Five stages of understanding the benefits of a 5S program

Source: Professor Arthur V. Hill

Awareness of the benefits of a 5S program goes through five stages, as depicted in the figure.

• Stage 1: Clean – People tend to assume initially that 5S is just cleaning up the work area. Cleaning a work area is a good practice, but this is only the beginning of 5S. (Some students joke that 5S is just “Mom telling me to clean up my room.”)

• Stage 2: Standard – People understand that 5S is about making this clean work process more standard. This makes it easy to find things because everything is always in the same place.

• Stage 3: Improved – People begin to understand that 5S is about continually improving how work is done. 5S challenges people to always be looking for better ways to organize their work areas, to make the work simple, visible, error-proof, and wasteless.

• Stage 4: Visible – People understand that 5S is about making work more visible so workers can focus on their work and so anything out of place “screams” for immediate attention. A visual work area provides cues that help workers and supervisors know the current status of the system and quickly identify if anything needs immediate attention.

• Stage 5: Disciplined – People wholeheartedly embrace the 5S disciplined mindset for how work is done and apply the discipline to everything they do.

Some practical implementation guidelines for a 5S program include:

• Take pictures before and after to document and encourage improvement.

• Practice the old slogan, “A place for everything and everything in its place.”

• Place tools and instruction manuals close to the point of use.

• Design storage areas with a wide entrance and a shallow depth.

• Lay out the storage area along the wall to save space.

• Place items where they are easy to see and access.

• Store similar items together and different items in separate rows.

• Do not stack items together. Use racks or shelves when possible.

• Use small bins to organize small items.

• Use color for quickly identifying items.

• Clearly label items and storage areas to improve visibility.

• Use see-through/transparent covers and doors for visibility.

• Remove unnecessary doors, walls, and other barriers to visibility, movement, and travel.

• Use carts to organize, move, and store tools, jigs, and measuring devices.

The Japanese characters for 5S are on the right (source: http://net1.ist.psu.edu/chu/wcm/5s/5s.htm, November 7, 2004).

See 8 wastes, facility layout, kaizen workshop, lean thinking, multiplication principle, point of use, red tag, shadow board, standardized work, Total Productive Maintenance (TPM), visual control.

6Ps – The acronym for “Prior Planning Prevents Painfully Poor Performance,” which emphasizes the need for planning ahead.

Wikipedia’s 7Ps entry includes several other variants. Apparently, the phrase originated in the British Army, but it is also popular in the U.S. Army. The U.S. Army replaces the word “painfully” with a coarse word.

One somewhat humorous way to write this expression is as P⁶.

See personal operations management, project management.

7 wastes – See 8 wastes.

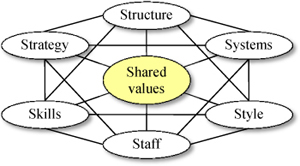

7S Model – A framework developed by McKinsey to help organizations evaluate and improve performance.

The McKinsey 7S Model (Waterman, Peters, & Phillips 1980) can be used to help organizations evaluate and improve their performance. The elements of the 7S Model (with simplified explanations) are as follows:

• Strategy – How to gain competitive advantage.

The McKinsey 7S Model

• Structure – How the organization’s units are interrelated. Options include centralized, functional (top-down), decentralized, matrix, network, or holding.

• Systems – The procedures and processes that define how the work is done.

• Staff – The employees and their attributes.

• Style – The type of leadership practiced.

• Skills – The employee capabilities.

• Shared values – The organization’s beliefs and attitudes. This is the center of McKinsey’s model and is often presented first in the list.

These seven elements need to be aligned for an organization to perform well. The model can be used to help identify which elements need to be realigned to improve performance. The hard elements (strategy, structure, and systems) are easy to define and can be influenced directly by management. The soft elements (skills, style, staff, and shared values) are less tangible and harder to define, but are just as important as the hard elements.

See operations strategy.

8 wastes – Seven original forms of waste identified by Taiichi Ohno, plus one widely used in North America.

Taiichi Ohno, the father of the Toyota Production System, defined seven categories of waste (Ohno 1978). Waste (“muda”) includes any activity that does not add value to the customer. More recently, Bodek (2009) defined the eighth waste and called it “underutilized talents of workers.” Liker (2004) used the similar phrase “unused employee creativity.” Most sources now label this “waste of human potential.” The 8 wastes include:

1. Overproduction – Producing more than what is needed or before it is needed.

2. Waiting – Any time spent waiting for tools, parts, raw material, packaging, inspection, repair, etc.

3. Transportation – Any transportation of parts, finished goods, raw materials, packaging, etc. Waste is particularly apparent here when materials are moved into and out of storage or are handled more than once.

4. Excess processing – Doing more work than necessary (e.g., providing higher quality than needed, performing unneeded operations, or watching a machine run).

5. Inventory – Maintaining excess inventory of raw materials, in-process parts, or finished goods.

6. Excessive motion – Any wasted motion or poor ergonomics, especially when picking up or stacking parts, walking to look for items, or walking to look for people.

7. Defects (correction) – Repair, rework, recounts, re-packing, and any other situation where the work is not done right the first time.

8. Unused human potential – Unused employee minds and creativity.

One of the best approaches for eliminating these wastes is to implement a 5S program. The lean thinking entry also suggests many specific approaches for eliminating each of these wastes.

Macomber and Howell (2004) identified several additional wastes, including too much information, complexity, design of goods and services that do not meet users’ needs, providing something the customer does not value, not listening, not speaking, assigning people to roles that they are not suited for, not supporting people in their roles, and high turnover.

Many experts distinguish between necessary waste and unnecessary waste (also known as pure waste). Unnecessary waste is any activity that adds no direct value to the customer, to the team making the product, or to other activities that add direct value to the customer. In contrast, necessary waste is any activity that does not add value directly to the customer, but is still necessary for the team or for another step that does add value. Necessary waste supports the best process known at the current time, but will ideally be eliminated sometime in the future. Examples of necessary waste might include planning meetings and preventive maintenance.

See 5S, efficiency, Lean Enterprise Institute (LEI), lean thinking, muda, overproduction, rework, subtraction principle, waste walk.

80-20 rule – See ABC classification, Pareto’s Law.

A

A3 Report – A lean term for a concise document that combines a project charter and progress report on a single large sheet of paper.

The A3 Report is named after the A3 paper size, which is used in most countries outside North America. An A3 sheet is equivalent to two side-by-side A4 pages and measures 297 × 420 mm (about 11.7 × 16.5 inches). In the U.S., most organizations use two side-by-side 8.5 × 11 inch pages (11 × 17 inches), which is about the same size as an A3.
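The relationship between A3 and A4 follows from the ISO 216 A-series, in which each size is half of the one before it. The minimal sketch below assumes the usual description of that series (start from A0 at 841 × 1189 mm and halve the longer side, rounding down to whole millimeters); the function name is hypothetical.

```python
MM_PER_INCH = 25.4


def iso_a_size(n: int) -> tuple[int, int]:
    """Return (short side, long side) in mm for ISO A-series size A<n>.

    Assumes A0 = 841 x 1189 mm and that each next size halves the longer
    side of the previous one, rounded down to a whole millimeter.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    short, long_ = 841, 1189
    for _ in range(n):
        # The old short side becomes the new long side; the old long side is halved.
        short, long_ = long_ // 2, short
    return short, long_


if __name__ == "__main__":
    a3, a4 = iso_a_size(3), iso_a_size(4)
    print("A3 (mm):", a3)  # (297, 420)
    print("A4 (mm):", a4)  # (210, 297)
    # Two portrait A4 sheets placed side by side (sharing a 297 mm edge) form one A3 sheet.
    print("2 x A4 side by side:", (a4[1], 2 * a4[0]))  # (297, 420)
    print("A3 (in): %.1f x %.1f" % (a3[0] / MM_PER_INCH, a3[1] / MM_PER_INCH))  # 11.7 x 16.5
```

The halving rule is why an A3 sheet holds exactly two A4 pages, and why the inch equivalent is close to, but not exactly, 11 × 17.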

Although many types of A3 Reports are used in practice, the A3 is most often used as a combination of a parsimonious project charter, project status report, and project archive. A3 Reports are often organized so that they tell a “story,” where the left side is a description and analysis of the current problem and the right side presents countermeasures (solutions) and an implementation plan for the solutions. The A3 Report defines the problem, root causes, and corrective actions and often includes sketches, graphics, simple value stream maps, and other visual descriptions of the current condition and future state. The logical flow from left to right, the short two-page format, and the practice of posting A3s on the wall help develop process thinking and process discipline.

Some lean consultants insist that A3 Reports be done by hand to avoid wasted time in making “pretty” graphs and figures. Although many lean experts in North America insist that A3 problem solving is essential to lean thinking, other lean experts in North America do not use it at all.

See kaizen workshop, lean thinking, project charter, value stream map.

ABAP (Advanced Business Application Programming) – The name of the proprietary object-oriented programming language used by SAP, which is the world’s largest ERP software firm.

See SAP.

ABC – See Activity-Based Costing (ABC).

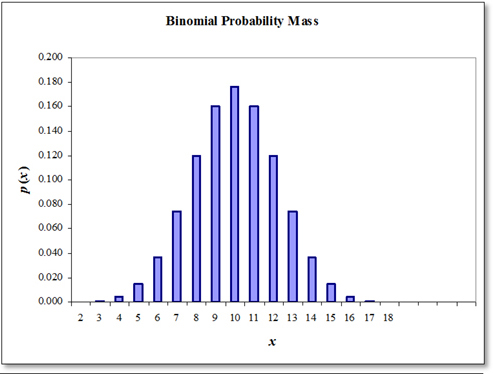

ABC classification – A method for prioritizing items in an inventory system, where A-items are considered the most important; also called ABC analysis, ABC stratification, distribution by value, 80-20 rule, and Pareto analysis.

The ABC classification is usually implemented based on the annual dollar volume, which is the product of the annual unit sales and unit cost (the annual cost of goods sold). High annual dollar volume items are classified as A-items and low annual dollar volume items are classified as C-items. Based on Pareto’s Law, the ABC classification system calls for more careful management of A-items: these items are ordered more often, counted more often, located closer to the door, and forecasted more carefully.

In contrast, C-items are not as important from an investment point of view and therefore should be ordered and counted less frequently. Some firms classify obsolete or non-moving items as D-items.

One justification for this approach is based on the economic order quantity model. Higher dollar volume items are ordered more often and therefore have a higher transaction volume, which means that they are more likely to have data accuracy problems.
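The economic order quantity argument can be made concrete. With annual demand D units, unit cost c, ordering cost S per order, and annual carrying charge i, the EOQ is Q* = sqrt(2DS/(ic)), so the number of orders per year is D/Q* = sqrt(Dci/(2S)). Order frequency, and therefore transaction volume, grows with the square root of the annual dollar volume Dc. The sketch below assumes every item shares the same S and i; the parameter values in the example are hypothetical.

```python
import math


def eoq_orders_per_year(annual_units: float, unit_cost: float,
                        order_cost: float, carrying_rate: float) -> float:
    """Orders per year D/Q* under the basic EOQ model.

    Q* = sqrt(2*D*S / (i*c)), which simplifies to D/Q* = sqrt(D*c*i / (2*S)).
    """
    q_star = math.sqrt(2 * annual_units * order_cost / (carrying_rate * unit_cost))
    return annual_units / q_star


if __name__ == "__main__":
    # Hypothetical items sharing S = $50 per order and i = 25% per year.
    high = eoq_orders_per_year(10_000, 20.0, 50.0, 0.25)  # $200,000 annual dollar volume
    low = eoq_orders_per_year(1_000, 2.0, 50.0, 0.25)     # $2,000 annual dollar volume
    print(f"high-volume item: {high:.1f} orders/year")  # 22.4
    print(f"low-volume item:  {low:.1f} orders/year")   # 2.2
```

In this example, a 100-fold difference in annual dollar volume produces a 10-fold difference in order frequency.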

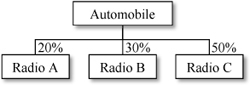

The first step in the ABC analysis is to create a ranked list of items by cost of goods sold (annual dollar volume). The top 20% of the items are labeled A-items. The next 30% of the items in the list are labeled B-items and the remaining 50% are labeled C-items. Of course, these percentages can vary depending upon the needs of the firm. A-items will likely make up roughly 80% of the total annual dollar volume, B-items will likely make up about 15%, and C-items will likely make up about 5%.
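The ranking and labeling step can be sketched in a few lines of Python. This is a minimal illustration, assuming the 20%/30%/50% cutoffs from the text, rounding the cutoff counts to the nearest whole item, and using hypothetical item data; real implementations differ in how they handle ties and items near the class boundaries.

```python
def abc_classify(items: dict[str, tuple[float, float]],
                 a_share: float = 0.20, b_share: float = 0.30):
    """Label items A, B, or C by rank of annual dollar volume.

    items maps an item id to (annual unit sales, unit cost). The top a_share of
    items by count are A-items, the next b_share are B-items, and the rest are C-items.
    Returns (labels, dollar_volume) dictionaries keyed by item id.
    """
    volume = {item: units * cost for item, (units, cost) in items.items()}
    ranked = sorted(volume, key=volume.get, reverse=True)  # largest volume first
    n_a = round(a_share * len(ranked))               # number of A-items
    n_ab = round((a_share + b_share) * len(ranked))  # number of A- plus B-items
    labels = {}
    for rank, item in enumerate(ranked):
        labels[item] = "A" if rank < n_a else ("B" if rank < n_ab else "C")
    return labels, volume


if __name__ == "__main__":
    # Hypothetical items: id -> (annual unit sales, unit cost in dollars)
    items = {
        "P01": (1000, 40.0), "P02": (500, 50.0), "P03": (2000, 3.0),
        "P04": (300, 10.0), "P05": (100, 25.0), "P06": (400, 5.0),
        "P07": (50, 30.0), "P08": (200, 4.0), "P09": (100, 5.0),
        "P10": (20, 10.0),
    }
    labels, volume = abc_classify(items)
    total = sum(volume.values())
    for cls in "ABC":
        share = sum(v for item, v in volume.items() if labels[item] == cls) / total
        print(cls, f"{share:.1%}")  # A 79.8%, B 14.1%, C 6.1%
```

With these made-up numbers, the two A-items carry about 80% of the annual dollar volume, close to the rough split described above.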

A Lorenz Curve is used to graph the ABC distribution, where the x-axis is the percentage of items and the y-axis is the percentage of total annual dollar usage. The graph on the right shows that the first 20% of the items represent about 80% of the annual dollar usage. Items must be first sorted by annual dollar volume to create this graph. See the Lorenz Curve entry for information on how to create this graph.
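The points for this graph can be computed directly from the ranked list. The sketch below sorts items from largest to smallest annual dollar volume, so that the curve rises steeply at the left as in the graph described here, and returns cumulative percentages; the dollar volumes in the example are hypothetical.

```python
def lorenz_points(dollar_volumes: list[float]) -> list[tuple[float, float]]:
    """Cumulative (% of items, % of annual dollar volume) pairs for an ABC graph.

    Items are ranked from largest to smallest annual dollar volume, and the
    curve starts at the origin (0, 0).
    """
    ranked = sorted(dollar_volumes, reverse=True)
    total = sum(ranked)
    n = len(ranked)
    points = [(0.0, 0.0)]
    running = 0.0
    for i, volume in enumerate(ranked, start=1):
        running += volume
        points.append((100.0 * i / n, 100.0 * running / total))
    return points


if __name__ == "__main__":
    # Hypothetical annual dollar volumes for ten items.
    volumes = [40000, 25000, 6000, 3000, 2500, 2000, 1500, 800, 500, 200]
    for pct_items, pct_dollars in lorenz_points(volumes):
        print(f"{pct_items:5.1f}% of items -> {pct_dollars:5.1f}% of dollar volume")
```

Plotting these pairs with the percentage of items on the x-axis gives the curve; the point at 20% of items shows what share of dollar volume the A-items carry.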

Some firms use other variables for prioritizing items in the ABC classification such as unit sales, annual sales (instead of cost of goods sold), profit margin, stockout cost (such as medical criticality), shelf life, and cubes (space requirements).

Note that the ABC inventory classification has nothing to do with Activity Based Costing.

See bill of material (BOM), cost of goods sold, cycle counting, Economic Order Quantity (EOQ), inventory management, Lorenz Curve, obsolete inventory, Pareto Chart, Pareto’s Law, warehouse, Warehouse Management System (WMS).

absorption costing – An accounting practice for allocating overhead to measure product and job costs.

With absorption costing, product costs include the direct cost (i.e., labor and materials) and indirect (fixed) costs (e.g., administrative overhead). Overhead costs from each workcenter are assigned to products as they pass through the workcenter. Traditionally, the overhead (indirect) cost is assigned to the product based on the number of direct labor hours. With Activity Based Costing systems, overhead is assigned to products based on cost drivers, such as machine hours, number of orders per year, number of inspections, and product complexity.

Absorption costing is often criticized because it tends to drive operations managers to produce more inventory in order to absorb more overhead. This is contrary to lean thinking and is in the best interests of shareholders only when capacity is costly and inventory is cheap. Throughput accounting, developed by Goldratt (Noreen, Smith, and Mackey 1995), is a form of variable costing that ignores overhead.
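A small numeric sketch shows why absorption costing can reward overproduction. It assumes a single product, no beginning inventory, and fixed overhead spread evenly over the units produced in the period; all figures are hypothetical. Producing more units than are sold lowers the absorbed cost per unit and moves part of the period’s overhead into inventory, which raises reported profit even though sales are unchanged.

```python
def absorbed_unit_cost(variable_cost: float, fixed_overhead: float,
                       units_produced: int) -> float:
    """Absorption cost per unit: variable cost plus fixed overhead spread over units produced."""
    return variable_cost + fixed_overhead / units_produced


def reported_profit(price: float, variable_cost: float, fixed_overhead: float,
                    units_produced: int, units_sold: int) -> float:
    """Period profit under absorption costing, assuming no beginning inventory.

    Only the units sold are expensed; unsold units carry their share of
    fixed overhead onto the balance sheet as inventory.
    """
    if units_produced <= 0 or not 0 <= units_sold <= units_produced:
        raise ValueError("need units_produced > 0 and 0 <= units_sold <= units_produced")
    unit_cost = absorbed_unit_cost(variable_cost, fixed_overhead, units_produced)
    return (price - unit_cost) * units_sold


if __name__ == "__main__":
    # Hypothetical: price $100, variable cost $40/unit, fixed overhead $50,000, sales 1,000 units.
    for produced in (1_000, 2_000):
        profit = reported_profit(100.0, 40.0, 50_000.0, produced, 1_000)
        print(f"produce {produced}: reported profit ${profit:,.0f}")
    # produce 1000 -> $10,000; produce 2000 -> $35,000
```

The $25,000 difference is exactly the overhead carried by the 1,000 unsold units ($25 each), not any improvement in operations.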

See Activity Based Costing (ABC), cost center, lean thinking, overhead, standard cost, Theory of Constraints (TOC), throughput accounting, variable costing.

absorptive capacity – The ability of an organization to recognize the value of new external information, integrate and assimilate that information, and apply the information to make money.

Absorptive capacity can be examined at multiple levels (individual, group, firm, and national), but it is usually studied in the context of a firm. Absorptive capacity can also refer to any type of external information, but is usually applied in the context of research and development (R&D) activities. The theory involves organizational learning, industrial economics, the resource-based view of the firm, and dynamic capabilities. Organizations can build absorptive capacity by conducting R&D projects internally rather than outsourcing them.

The term “absorptive capacity” was first introduced in an article by Cohen and Levinthal (1990). According to the ISI Web of Science, this article has been cited more than 1500 times. This entire article can be found at http://findarticles.com/p/articles/mi_m4035/is_n1_v35/ai_8306388 (May 10, 2010).

Adapted from http://en.wikipedia.org/wiki/Absorptive_capacity and http://economics.about.com/cs/economicsglossary/g/absorptive_cap.htm, May 10, 2010.

See capacity, empowerment, human resources, New Product Development (NPD), organizational design, outsourcing, workforce agility.

Acceptable Quality Level (AQL) – The maximum percentage defective that can be considered satisfactory as a process average.

When deciding whether to accept a batch, a sample of n parts is taken from the batch and a decision is made to accept the batch if the percentage of defects is less than the AQL. The AQL is the highest proportion defective that is considered acceptable as a long-run average for the process.

For example, if 4% nonconforming product is acceptable to both the producer and consumer (i.e., AQL = 4.0), the producer agrees to produce an average of no more than 4% nonconforming product.

See acceptance sampling, consumer’s risk, incoming inspection, Lot Tolerance Percent Defective (LTPD), producer’s risk, quality management, Statistical Process Control (SPC), Statistical Quality Control (SQC), zero defects.

acceptance sampling – Methods used to make accept/reject decisions for each lot (batch) based on inspecting a limited number of units.

With attribute sampling plans, accept/reject decisions are based on a count of the number of units in the sample that are defective or the number of defects per unit. In contrast, with variable sampling plans, accept/reject decisions are based on measurements. Plans requiring only a single sample set are known as single sampling plans; double, multiple, and sequential sampling plans may require additional samples.

For example, an attribute single sampling plan with a sample size n = 50 and an accept number a = 1 requires that a sample of 50 units be inspected. If the number of defectives in that sample is one or zero, the lot is accepted. Otherwise, it is rejected. Ideally, when a sampling plan is used, all bad lots will be rejected and all good lots will be accepted. However, because accept/reject decisions are based on a sample of the lot, the probability of making an incorrect decision is greater than zero. The behavior of a sampling plan can be described by its operating characteristic curve, which plots the percentage defective against the corresponding probabilities of acceptance.
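The probability of acceptance for this plan, and hence its operating characteristic curve, can be computed from the binomial distribution. The sketch below assumes the lot is large relative to the sample, so the binomial is a reasonable approximation (the hypergeometric distribution is exact for a finite lot); the function name is hypothetical.

```python
from math import comb


def prob_accept(p: float, n: int = 50, a: int = 1) -> float:
    """P(accept lot) for a single sampling plan (n, a) when the lot fraction defective is p.

    The lot is accepted when the sample contains at most `a` defectives,
    so P(accept) = sum over k = 0..a of C(n, k) * p^k * (1 - p)^(n - k).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(a + 1))


if __name__ == "__main__":
    # Points on the operating characteristic curve for n = 50, a = 1.
    for pct in (0, 1, 2, 5, 10):
        print(f"{pct:>3}% defective -> P(accept) = {prob_accept(pct / 100):.3f}")
```

For this plan, the probability of acceptance is about 0.91 when the lot is 1% defective, about 0.28 at 5%, and about 0.03 at 10%, which illustrates why a sampling plan cannot guarantee that every good lot is accepted and every bad lot is rejected.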

See Acceptable Quality Level (AQL), attribute, consumer’s risk, incoming inspection, inspection, Lot Tolerance Percent Defective (LTPD), operating characteristic curve, producer’s risk, quality management, sampling, Statistical Process Control (SPC), Statistical Quality Control (SQC).

Accounts Payable (A/P) – The money owed to suppliers for goods and services purchased on credit; a current liability; also used as the name of the department that pays suppliers.

Analysts look at the relationship between accounts payable and purchases for indications of sound financial management. Working capital is controlled by managing accounts payable, accounts receivable, and inventory.

See Accounts Receivable (A/R), invoice, purchase order (PO), purchasing, supplier, terms.

Accounts Receivable (A/R) – The money customers owe an organization for products and services provided on credit; a current asset on the balance sheet; also used as the name of the department that applies cash received from customers against open invoices.

A sale is treated as an account receivable after the customer is sent an invoice. Accounts receivable may also include an allowance for bad debts. Working capital is controlled by managing accounts payable, accounts receivable, and inventory.

See Accounts Payable (A/P), invoice, purchase order (PO), purchasing, supplier, terms.

acquisition – A contracting term used when an organization takes possession of a product, technology, equipment, or another organization.

In a mergers and acquisitions context, acquisition refers to one firm buying another firm. In a learning context, learning is often called acquisition of new knowledge, skills, or behaviors. In a marketing context, the customer acquisition cost is the cost of finding and winning new customers and is sometimes measured as the advertising cost plus other marketing costs targeted toward new customers divided by the number of new customers added during the time period.
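The customer acquisition cost measure described above is a simple ratio. A minimal sketch, with hypothetical figures in the example:

```python
def customer_acquisition_cost(advertising_cost: float, other_marketing_cost: float,
                              new_customers: int) -> float:
    """Cost per new customer for one period:
    (advertising + other marketing aimed at new customers) / new customers added."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return (advertising_cost + other_marketing_cost) / new_customers


if __name__ == "__main__":
    # Hypothetical quarter: $120,000 advertising, $30,000 other acquisition marketing, 500 new customers.
    print(customer_acquisition_cost(120_000, 30_000, 500))  # 300.0
```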

See due diligence, e-procurement, forward buy, mergers and acquisitions (M&A), purchasing, service recovery.

active item – Any inventory item that has been used or sold in the recent past (e.g., the last year).

It is common for a retailer to have 100,000 items in its item master, but only 20,000 active items.

See inventory management, part number.

Activity Based Costing (ABC) – An accounting practice that identifies the cost drivers (variables) that have the most influence on the product (or service) cost and then allocates overhead cost to products and services based on these cost drivers.

Allocating overhead (particularly manufacturing overhead) is an important activity for many firms. Overhead allocation is needed to estimate product costs for product profitability analysis and for important decisions about pricing, product rationalization, and marketing and sales efforts.

Traditional standard costing systems usually allocate overhead cost based on direct labor. For example, consider a product that requires one hour of labor and $30 of materials. If the direct labor wage rate (without overhead) is $20 per hour and the overhead burden rate is $200 per direct labor hour, the standard cost for the product is direct materials ($30), direct labor ($20), and allocated overhead ($200), for a total cost of $250.
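The arithmetic of this traditional allocation is easy to reproduce. The sketch below uses the figures from the example ($30 of materials, one direct labor hour at $20 per hour, and a burden rate of $200 per direct labor hour) and shows how large a share of the total the labor-driven overhead becomes; the function name is hypothetical.

```python
def standard_cost(material_cost: float, labor_hours: float,
                  wage_rate: float, burden_rate: float) -> dict:
    """Traditional standard cost with overhead allocated per direct labor hour."""
    labor = labor_hours * wage_rate
    overhead = labor_hours * burden_rate  # overhead follows direct labor hours
    return {
        "materials": material_cost,
        "labor": labor,
        "overhead": overhead,
        "total": material_cost + labor + overhead,
    }


if __name__ == "__main__":
    cost = standard_cost(material_cost=30.0, labor_hours=1.0, wage_rate=20.0, burden_rate=200.0)
    print(cost)  # {'materials': 30.0, 'labor': 20.0, 'overhead': 200.0, 'total': 250.0}
    print(f"overhead share: {cost['overhead'] / cost['total']:.0%}")  # 80%
```

Here the overhead ($200) is ten times the direct labor cost ($20) used to allocate it.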

One common criticism of traditional standard costing systems is that it does not make sense to allocate the largest cost (the overhead) based on the smallest cost (the direct labor cost). (Overhead is often the largest component of the standard cost and direct labor cost is often the smallest component.) Traditional standard costing systems assume that the only resource related to overhead is direct labor and that all other resources and activities required to create the product or service cannot be related to overhead.

In contrast, Activity Based Costing begins by identifying the major activities and resources required in the process of creating a product or service. ABC then identifies the “cost pools” (overhead cost) for each activity or resource. Finally, ABC defines an equitable way of allocating (assigning) the overhead cost from the cost pools to the products and services based on a variable called a “cost driver.”

A cost driver should reflect the amount of the cost pool (resource) consumed in the process of creating the product or service. Cost drivers might include the number of setups (for a shared setup team), direct materials cost (for allocating purchasing overhead), direct labor time (for allocating labor-related overhead), total throughput time (for allocating manufacturing overhead), inspection time (for allocating quality control overhead), and space used (for allocating building related overhead).
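The pool-and-driver logic can be sketched as follows. This is an illustration only, assuming each cost pool has a single cost driver and that overhead is charged at the pool cost divided by the total driver volume across all products; the pools, drivers, products, and dollar amounts in the example are hypothetical.

```python
def allocate_overhead(cost_pools: dict[str, float],
                      driver_usage: dict[str, dict[str, float]]) -> dict[str, float]:
    """Assign overhead from activity cost pools to products via cost drivers.

    cost_pools:   {activity: overhead dollars in that pool}
    driver_usage: {product: {activity: cost-driver units the product consumes}}
    Each pool's rate is pool dollars / total driver units across all products.
    """
    rates = {}
    for activity, pool in cost_pools.items():
        total_units = sum(use.get(activity, 0.0) for use in driver_usage.values())
        if total_units <= 0:
            raise ValueError(f"no driver usage recorded for {activity!r}")
        rates[activity] = pool / total_units
    return {
        product: sum(rates[a] * use.get(a, 0.0) for a in cost_pools)
        for product, use in driver_usage.items()
    }


if __name__ == "__main__":
    pools = {"setups": 60_000.0, "inspection": 40_000.0}
    usage = {
        "X": {"setups": 100, "inspection": 200},  # number of setups, inspection hours
        "Y": {"setups": 200, "inspection": 600},
    }
    print(allocate_overhead(pools, usage))  # {'X': 30000.0, 'Y': 70000.0}
```

The rates work out to $200 per setup and $50 per inspection hour, so product Y, which consumes more of both activities, absorbs most of the overhead, and the two allocations sum to the full $100,000 in the pools.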

Activity Based Management (ABM) is the use of the Activity Based Costing tools by process owners to control and improve their operations. Building an Activity Based Costing model requires a process analysis, which requires management to have a deep understanding of the business and evaluate value-added and nonvalue-added activities. The cost analysis and the process understanding that is derived from an ABC system can provide strong support for important managerial decisions, such as outsourcing, insourcing, capacity expansion, and other important “what-if” issues.

Some argue that all manufacturing overhead cost should be allocated based on direct labor (or some other arbitrary cost driver), even if the cost is not traceable to any production activity. However, most experts agree that the sole purpose of an ABC system is to provide management with information that is helpful for decision making. Arbitrary allocation of overhead cost does not support decision making in any way. Even with Activity Based Costing, certain costs related to a business are included in overhead without being allocated to the product.

See absorption costing, burden rate, cost center, customer profitability, hidden factory, outsourcing, overhead, product proliferation, standard cost, throughput accounting, variable costing, what-if analysis.

Activity Based Management (ABM) – See Activity Based Costing (ABC).

ad hoc committee – See committee.

addition principle – Combining two tasks and assigning them to one resource (person, machine, etc.).

Love (1979) defines the addition principle for improving a process as combining two or more process steps so one resource (person, machine, contractor, etc.) does all of them. This strategy has many potential advantages, including reducing cost, reducing cycle time, reducing the number of queues, reducing the number of handoffs, reducing lost customer information, reducing customer waiting time, improving customer satisfaction, improving quality, improving job design, accelerating learning, developing people, and improving accountability.

The addition principle is an application of job enlargement, where a worker takes on some of a co-worker’s job, and job enrichment, where a worker takes on part of the boss’s job. It is closely related to the queuing theory concept of pooling.

The application of the addition principle is particularly effective in the service context, where it can impact customer waiting time and put more of a “face” on the service process. For example, many years ago, Citibank reduced the number of handoffs in its international letter of credit operation from about 14 to 1. Instead of 14 different people handling 14 small steps, one person handled all 14 steps. This change dramatically reduced customer leadtime, improved quality, and improved process visibility. Citibank required workers to be bilingual, which also improved service quality. The visibility of the new process allowed them to further improve the process and prepared the way for automating parts of the process. However, implementing this new process was not without problems. Many of the people in the old process had to be replaced by people with broader skill sets and the new process increased risk because it eliminated some of the checks and balances in the old process.

See customer leadtime, handoff, human resources, job design, job enlargement, multiplication principle, pooling, subtraction principle.

ADKAR Model for Change – A five-step model designed to help organizations effect change.

ADKAR, developed by Prosci (Hiatt 2006), is similar to the Lewin/Schein Theory of Change. ADKAR defines five stages that must be realized for an organization or an individual to successfully change:

• Awareness – An individual or organization must know why the change is needed.

• Desire – Either the individual or organizational members must have the motivation and desire to participate in the proposed change or changes.

• Knowledge – Knowing why one must change is not enough; an individual or organization must know how to change.

• Ability – Every individual and organization that truly wants to change must implement new skills and behaviors to implement the necessary changes.

• Reinforcement – Individuals and organizations must be reinforced to sustain the changes and the new behaviors. Otherwise, the individuals and organization will likely revert to their old behaviors.

See change management, control plan, Lewin/Schein Theory of Change.

adoption curve – The major phases in the product life cycle that reflect the market’s acceptance of a new product or technology.

According to the Product Development and Management Association (www.pdma.org), consumers move from (a) a cognitive state (becoming aware of and knowledgeable about a product) to (b) an emotional state (liking and then preferring the product) and finally into (c) a behavioral state (deciding on and then purchasing the product). At the market level, the new product is first purchased by market innovators (roughly 2.5% of the market), followed by early adopters (roughly 13.5% of the market), early majority (34%), late majority (34%), and finally, laggards (16%).
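These percentages are rounded areas under a normal curve. In Everett Rogers’s diffusion research, adopter categories are defined by how many standard deviations a buyer’s adoption time lies from the mean; the sketch below reproduces the shares under that normality assumption.

```python
import math

# Adopter categories as (name, lower bound, upper bound), with bounds measured in
# standard deviations from the mean adoption time (normality is an assumption).
CATEGORIES = [
    ("innovators", -math.inf, -2.0),
    ("early adopters", -2.0, -1.0),
    ("early majority", -1.0, 0.0),
    ("late majority", 0.0, 1.0),
    ("laggards", 1.0, math.inf),
]


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def adopter_shares() -> dict[str, float]:
    """Share of the market falling in each adopter category."""
    return {name: normal_cdf(hi) - normal_cdf(lo) for name, lo, hi in CATEGORIES}


if __name__ == "__main__":
    for name, share in adopter_shares().items():
        print(f"{name:15s} {share:.1%}")
```

The exact shares are about 2.3%, 13.6%, 34.1%, 34.1%, and 15.9%, which round to the figures quoted above.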

See Bass Model, New Product Development (NPD), product life cycle management.

Advanced Planning and Scheduling (APS) – An information system used by manufacturers, distributors, and retailers to assist in supply chain planning and scheduling.

Most APS systems augment ERP system functionality by providing forecasting, inventory planning, scheduling, and optimization tools not historically found in ERP systems. For example, APS systems can calculate optimal safety stocks, create detailed schedules that do not exceed available capacity (finite scheduling), and find the near-optimal assignments of products to plants. In contrast, traditional ERP systems were fundamentally transaction processing systems that implemented user-defined safety stocks, created plans that regularly exceeded available capacity (infinite loading), and did not optimize anything.

The best-known dedicated APS software vendors were i2 Technologies and Manugistics, but they are both now owned by JDA Software. SAP has an APS module called APO, which stands for Advanced Planning and Optimization. According to SAP’s website, “SAP APO is a software solution that enables dynamic supply chain management. It includes applications for detailed planning, optimization, and scheduling, allowing the supply chain to be accurately and globally monitored even beyond enterprise boundaries. SAP APO is a component of mySAP Supply Chain Management.”

The sales script for these APS systems in the past (exaggerated here for sake of emphasis) has been that the big ERP systems (SAP, Oracle, etc.) were “brain dead” and had little intelligence built into them. These big ERP systems were only transaction processing systems and did little in the way of creating detailed schedules, forecasting, or optimization. The promise of the APS systems was that they were “smart” and could make the ERP systems a lot smarter. In recent years, the lines have blurred and nearly all ERP systems offer add-on products that do much of what only APS systems could do in the past.

Many APS users have found that several APS features were hard to implement and maintain, which has led to some negative assessments of APS systems. The three main complaints that this author has heard are (1) massive data requirements (capacity information on almost every workcenter for every hour in the day), (2) complexity (few managers understand the mathematical algorithms used in APS applications), and (3) lack of systems integration (the APS must work alongside the ERP system and must share a common database). The finite scheduling entry discusses some of the needs that motivated the development of APS systems.

See algorithm, back scheduling, closed-loop MRP, Enterprise Resources Planning (ERP), finite scheduling, I2, infinite loading, job shop scheduling, load, load leveling, Manugistics, Materials Requirements Planning (MRP), optimization, SAP.

Advanced Shipping Notification (ASN) – An electronic file sent from a supplier to inform a customer when incoming goods are expected to arrive.

An ASN may be a document, a fax, or electronic communication. However, electronic communication is preferred. ASNs usually include PO numbers, SKU numbers, lot numbers, quantity, pallet or container number, carton number, and other information related to the shipment and to each item in the shipment.

ASN files are typically sent electronically as soon as a trailer (destined for a given receiving facility) leaves a DC. The ASN file should be received by the receiving facility well in advance of the time the trailer arrives. When the trailer (or other shipping container) arrives, the contents of the trailer can be electronically compared to the contents of the ASN file as the trailer is unloaded. Any missing items or unexpected items would be highlighted on the OS&D report. The ASN is typically received and processed by the Transportation Management System (TMS) or Warehouse Management System (WMS) at the receiving facility.

The ASN file serves three important purposes:

• The receiving facility uses the ASN to plan inventory or load movement (interline hauls or ground-route distribution) based on the expected inbound mix of goods. Such planning may include scheduling of other resources (drivers, warehouse personnel) or even advance calls to customers to inform them of their expected delivery time windows.

• The TMS or WMS systems at the receiving facility may use the expected inbound mix of goods to prepare warehouse employees to receive the goods by downloading the information to wireless barcode scanners or alerting warehouse planning staff to the expected incoming volume of goods.

• The TMS or WMS system may ultimately use the expected inbound goods to form the basis of an Over/Short/Damaged (OS&D) report upon actual scanning of the inbound goods.
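The comparison of ASN contents with scanned inbound goods can be sketched in a few lines of Python. This is a minimal illustration, not how any particular TMS or WMS works. It assumes that both the ASN and the scans reduce to quantities per SKU, and the SKU codes and function name are hypothetical. Counting alone can only find the "over" and "short" parts of an OS&D report. Damaged units would have to be flagged separately by the receiver at scan time.

```python
from collections import Counter

def over_short(expected: Counter, scanned: Counter) -> tuple[Counter, Counter]:
    """Compare ASN quantities with scanned quantities, SKU by SKU.

    Counter subtraction keeps only positive counts, so each result lists
    just the SKUs that are short (missing) or over (unexpected).
    """
    shorts = expected - scanned  # on the ASN but not (fully) received
    overs = scanned - expected   # received but not (fully) on the ASN
    return shorts, overs

if __name__ == "__main__":
    asn = Counter({"SKU-100": 10, "SKU-200": 5})
    # One entry per carton scanned while unloading the trailer (hypothetical data)
    scans = Counter(["SKU-100"] * 8 + ["SKU-200"] * 5 + ["SKU-300"] * 2)
    shorts, overs = over_short(asn, scans)
    print("short:", dict(shorts), "over:", dict(overs))
```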

Although commonly used in over-the-road trucking, an ASN can be sent in relation to any shipment, including air, rail, road, and sea shipments. An ASN file is often sent in the agreed-upon EDI 856 (“Ship Notice/Manifest”) format. However, technically, an ASN could be any file format agreed upon by the originating and receiving facilities.

See consignee, cross-docking, distribution center (DC), Electronic Data Interchange (EDI), incoming inspection, manifest, Over/Short/Damaged Report, packing slip, receiving, trailer, Transportation Management System (TMS), Warehouse Management System (WMS).

adverse event – A healthcare term used to describe any unintended and undesirable medical occurrence experienced by a patient due to medical therapy or other intervention, regardless of the cause or degree of severity.

The term “adverse event” is often used in the context of drug therapy and clinical trials. In the drug therapy context, it is also called an adverse reaction or an adverse drug reaction.

Very serious adverse events are usually called sentinel events or never events. However, a few sources treat the terms “adverse event” and “sentinel event” as synonyms. The term “near miss” is used to describe an event that could have harmed the patient, but was avoided through planned or unplanned actions.

Barach and Small (2000) report lessons for healthcare organizations from non-medical near miss reporting systems. This interesting report begins by emphasizing that most near misses and preventable adverse events are not reported and that healthcare systems could be improved significantly if more of these events were reported. The report further argues that healthcare could benefit from what has been learned in other industries. The authors studied reporting systems in aviation, nuclear power technology, petrochemical processing, steel production, military operations, and air transportation as well as in healthcare. They argue that reporting near misses is better than reporting only adverse events, because the greater frequency enables better quantitative analysis and provides more information to process improvement programs. Many of the non-medical industries have developed incident reporting systems that focus on near misses, provide incentives for voluntary reporting (e.g., limited liability, anonymous reporting, and confidentiality), bolster accountability, and implement systems for data collection, analysis, and improvement.

The key to encouraging reporting of near misses and adverse events is to lower the disincentives (costs) of reporting for workers. When people self-report an error or an event, they should not be “rewarded” with disciplinary action or dismissal (at least not the first time). Many organizations allow for anonymous reporting via a website, which makes it possible for the person reporting the event to keep his or her identity confidential. It is also important to make the process easy to use.

See error proofing, Joint Commission, sentinel event.

advertising allowance (ad allowance) – See trade promotion allowance.

affinity diagram – A “bottom-up” group brainstorming methodology designed to help groups generate and organize a large number of ideas into related groups; also known as the KJ Method and KJ Analysis.

Affinity diagrams are a simple yet powerful way to extract qualitative data from a group, help the group cluster similar ideas, and develop a consensus view on a subject. For example, an affinity diagram might be used to clarify the question, “What are the root causes of our quality problems?”

Despite the name, affinity diagrams are not really diagrams. Occasionally, circles are drawn around clusters of similar concepts and lines or trees are drawn to connect similar clusters, but these drawings are not central to the affinity diagramming methodology.

For example, affinity diagrams are often used with Quality Function Deployment (QFD) to sort and organize ideas on customer needs. To do this, the facilitator instructs each individual in a group to identify all known customer needs and write them down on 3M Post-it Notes, with each need on a separate note. The group then shares their ideas one at a time, organizes the notes into clusters, develops a heading for each cluster, and then votes to assign importance to each cluster.

The steps for creating an affinity diagram are essentially the same as those used in the nominal group technique and the KJ Method. See the Nominal Group Technique (NGT) entry for the specific steps. An affinity diagram example can be found at http://syque.com/quality_tools/tools/TOOLS04.htm (April 7, 2011).

See brainstorming, causal map, cluster analysis, Kepner-Tregoe Model, KJ Method, Nominal Group Technique (NGT), parking lot, Quality Function Deployment (QFD), Root Cause Analysis (RCA).

aftermarket – An adjective used to describe parts or products that are purchased to repair or enhance a product.

For example, many people buy cases for their cell phones as an aftermarket accessory.

See service parts.

aggregate inventory management – The analysis of a large set of items in an inventory system with a focus on lotsizing and safety stock policies to study the trade-offs between carrying cost and service levels.

Inventories with thousands of items are difficult to manage because of the amount of data involved. Aggregate inventory management tools allow managers to group items and explore opportunities to reduce inventory and improve service levels by controlling the target service level, carrying charge, and setup cost parameters for each group of items. Aggregate inventory analysis typically applies economic order quantity logic and safety stock equations in light of warehouse space limitations, market requirements, and company strategies. Aggregate inventory analysis often results in a simultaneous reduction in overall inventory and improvement in overall service levels. This is accomplished by reducing the safety stock inventory for those items that have unnecessarily high safety stocks and by increasing the safety stock inventory for those items that have poor service levels.
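The group-level "what-if" analysis described above can be sketched in Python. This sketch assumes the textbook formulas: EOQ = √(2DS/(ic)) for the lotsize, and safety stock = z·σ·√L for normally distributed, independent per-period demand with a constant leadtime. Real aggregate inventory tools may use other models. All class, field, and function names are hypothetical. Changing one group's carrying charge, setup cost, or service level and re-summing average inventory shows the carrying cost versus service level trade-off for that group.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist

@dataclass
class Item:
    sku: str
    annual_demand: float  # D, units per year
    unit_cost: float      # c, dollars per unit
    demand_sd: float      # sigma, standard deviation of demand per period
    leadtime: float       # L, measured in the same periods as demand_sd

@dataclass
class GroupPolicy:
    carrying_charge: float  # i, fraction of unit cost per year
    setup_cost: float       # S, dollars per order
    service_level: float    # target probability of no stockout per order cycle

def eoq(item: Item, policy: GroupPolicy) -> float:
    """Economic order quantity sqrt(2DS / (i c))."""
    holding = policy.carrying_charge * item.unit_cost
    return math.sqrt(2 * item.annual_demand * policy.setup_cost / holding)

def safety_stock(item: Item, policy: GroupPolicy) -> float:
    """z * sigma * sqrt(L), assuming normal, independent per-period demand."""
    z = NormalDist().inv_cdf(policy.service_level)
    return z * item.demand_sd * math.sqrt(item.leadtime)

def average_inventory(items: list[Item], policy: GroupPolicy) -> float:
    """Group total of cycle stock (Q/2) plus safety stock, in units."""
    return sum(eoq(it, policy) / 2 + safety_stock(it, policy) for it in items)

if __name__ == "__main__":
    group = [Item("A-1", 1200, 25.0, 10.0, 4.0), Item("A-2", 300, 80.0, 6.0, 2.0)]
    for level in (0.90, 0.95, 0.99):
        policy = GroupPolicy(carrying_charge=0.24, setup_cost=100.0, service_level=level)
        print(level, round(average_inventory(group, policy), 1))
```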

The Sales and Operations Plan (S&OP), sometimes called the Sales, Inventory, and Operations Plan (Si&OP), is similar to the aggregate inventory plan. However, unlike aggregate inventory management, S&OP rarely uses mathematical models and focuses on building consensus in the organization.

See Economic Order Quantity (EOQ), inventory management, inventory turnover, lotsizing methods, production planning, safety stock, Sales & Operations Planning (S&OP), service level, unit of measure, warehouse.

aggregate plan – See production planning.

aggregate production planning – See production planning.

agile manufacturing – A business strategy for developing the processes, tools, training, and culture for increasing flexibility to respond to customer needs and market changes while still controlling quality and cost.

The terms agile manufacturing, time-based competition, mass customization, and lean are closely related. Some key strategies for agile manufacturing include commonality, lean thinking, modular design, postponement, setup time reduction, and virtual organizations.

See Goldman, Nagel, and Preiss (1995) and Metes, Gundry, and Bradish (1997) for books on the subject.

See commonality, lean thinking, mass customization, modular design (modularity), operations strategy, postponement, Quick Response Manufacturing, resilience, scalability, setup time reduction methods, time-based competition, virtual organization.

agile software development – A software development methodology that promotes quick development of small parts of a project to ensure that the developers meet user requirements; also known as agile modeling.

Agile software development promotes iterative software development with high stakeholder involvement and open collaboration throughout the life of a software development project. It uses small increments with minimal planning. Agile attempts to find the smallest workable piece of functionality, deliver it quickly, and then continue to improve it throughout the life of the project as directed by the user community. This helps reduce the risk that the project will fail to meet user requirements.

In contrast, waterfall scheduling requires “gates” (approvals) for each step of the development process: requirements, analysis, design, coding, and testing. Progress is measured by adherence to the schedule. The waterfall approach, therefore, is much less iterative than the agile process.

See beta test, catchball, cross-functional team, Fagan Defect-Free Process, lean thinking, prototype, scrum, sprint burndown chart, stakeholder, waterfall scheduling.

AGV – See Automated Guided Vehicle.

AHP – See Analytic Hierarchy Process.

AI – See artificial intelligence.

alpha test – See prototype.

alignment – The degree to which people and organizational units share the same goals.

Two or more people or organizational units are said to be “aligned” when they are working together toward the same goals. They are said to be “misaligned” when they are working toward conflicting goals. Alignment is usually driven by recognition and reward systems.

For example, sales organizations often forecast demand higher than the actual demand because sales people tend to be much more concerned about running out of stock (and losing sales) than having too much inventory. In other words, they are prone to “add safety stock to the forecast.” Given that sales organizations are typically rewarded only based on sales, this bias is completely logical. However, this behavior is generally not aligned with the overall objectives of the firm.

See balanced scorecard, forecast bias, hoshin planning.

algorithm – A formal procedure for solving a problem.

An algorithm is usually expressed as a series of steps and implemented in a computer program. For example, some algorithms for solving the Traveling Salesperson Problem can require thousands of lines of computer code. Some algorithms are designed to guarantee an optimal (mathematically best) solution and are said to be exact or optimal algorithms. Other algorithms, known as heuristics or heuristic algorithms, seek to find the optimal solution, but do not guarantee that the optimal solution will be found.
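The difference between an exact algorithm and a heuristic can be illustrated on small Traveling Salesperson Problem instances. The Python sketch below is only an illustration, not a practical TSP solver, and the function names are hypothetical. The exact method enumerates every tour that starts at city 0. That guarantees an optimal tour but requires (n − 1)! evaluations, so it only works for a handful of cities. The nearest-neighbor heuristic always moves to the closest unvisited city. It is fast, but it does not guarantee that the optimal tour will be found. Both functions assume at least one city.

```python
import itertools
import math

Point = tuple[float, float]

def tour_length(tour: list[int], pts: list[Point]) -> float:
    """Length of a closed tour that returns to its starting city."""
    return sum(math.dist(pts[tour[k]], pts[tour[(k + 1) % len(tour)]])
               for k in range(len(tour)))

def exact_tsp(pts: list[Point]) -> list[int]:
    """Exact algorithm: try every ordering of cities 1..n-1 after city 0."""
    best = min(itertools.permutations(range(1, len(pts))),
               key=lambda rest: tour_length([0, *rest], pts))
    return [0, *best]

def nearest_neighbor_tsp(pts: list[Point]) -> list[int]:
    """Heuristic: repeatedly visit the closest unvisited city."""
    unvisited = set(range(1, len(pts)))
    tour = [0]
    while unvisited:
        last = pts[tour[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(last, pts[j]))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour

if __name__ == "__main__":
    cities = [(0, 0), (3, 0), (1, 1), (5, 2), (2, 4), (4, 5)]
    for solver in (exact_tsp, nearest_neighbor_tsp):
        tour = solver(cities)
        print(solver.__name__, tour, round(tour_length(tour, cities), 3))
```

On any instance, the heuristic tour can be no shorter than the exact one. How much longer it is depends on the data.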

See Advanced Planning and Scheduling (APS), Artificial Intelligence (AI), assignment problem, check digit, cluster analysis, Economic Lot Scheduling Problem (ELSP), gamma function, heuristic, job shop scheduling, knapsack problem, linear programming (LP), lotsizing methods, network optimization, operations research (OR), optimization, transportation problem, Traveling Salesperson Problem (TSP), Wagner-Whitin lotsizing algorithm.

alliance – A formal cooperative arrangement with another firm, which could be for almost any purpose, such as new product development, sharing information, entering a new market, etc. Alliances usually involve sharing both risks and rewards.

allocated inventory – A term used by manufacturing and distribution firms to describe the quantity of an item reserved but not yet withdrawn or issued from stock; also called allocated stock, allocated, allocations, committed inventory, committed quantity, quantity allocated, or reserved stock.

The inventory position does not count allocated inventory as available for sale. Allocations do not normally specify which units will go to an order. However, firm allocations will assign specific units to specific orders.

See allocation, backorder, inventory position, issue, Materials Requirements Planning (MRP), on-hand inventory.

allocation – (1) Inventory reserved for a customer. See allocated inventory. (2) A set of rules used to determine what portion of available stock to provide to each customer when demand exceeds supply.
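Sense (2) leaves the actual rules up to the organization. As one hypothetical example, the Python sketch below implements a proportional ("fair share") rule. If supply covers total demand, every customer gets its full request. Otherwise, each customer gets the whole-unit part of its proportional share, and the few leftover units go to the customers with the largest fractional remainders. Other rules, such as customer priority or first come, first served, are equally valid. The function name is an assumption.

```python
import math

def fair_share_allocation(supply: int, demands: dict[str, int]) -> dict[str, int]:
    """Allocate scarce units in proportion to each customer's demand.

    Hypothetical rule: floor of each proportional share, then hand the
    remaining units to the largest fractional remainders (ties keep input order).
    """
    total = sum(demands.values())
    if supply >= total:
        return dict(demands)  # no shortage: everyone gets the full request
    exact = {c: supply * d / total for c, d in demands.items()}
    alloc = {c: math.floor(x) for c, x in exact.items()}
    leftover = supply - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda c: exact[c] - alloc[c], reverse=True)
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc

if __name__ == "__main__":
    print(fair_share_allocation(10, {"A": 6, "B": 4, "C": 10}))
```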

See allocated inventory.

all-time demand – The total of all future requirements (demand) for an item; also called all-time requirement, lifetime requirement, and lifetime demand.

All-time demand is the sum of the demand until the product termination date or until the end of time. Organizations need to forecast the all-time demand for a product or component in the following situations:

• Determine the lotsize for a final purchase (“final buy”) – When an item is near the end of its useful life and the organization needs to make one last purchase, it needs to forecast the all-time demand. Future purchases will be expensive due to the supplier’s cost of finding tooling, skills, and components.

• Determine the lotsize for a final manufacturing lot – When an item is near the end of its useful life and the manufacturer needs to make one last run of the item, it needs to forecast the lifetime demand. Future manufacturing will likely be very expensive.

• Identify the amount of inventory to scrap – When an item is near the end of its useful life, a forecast of the all-time demand can be used to help determine how many units should be scrapped and how many should be kept (the keep stock).

• Identify when to discontinue an item – A forecast of the lifetime demand can help determine the date when an item will be discontinued.

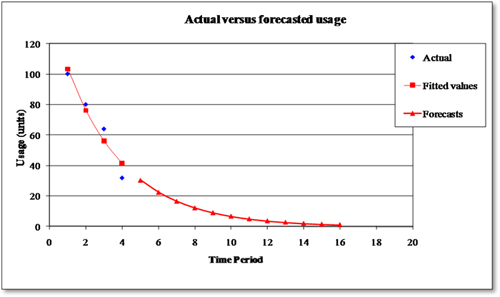

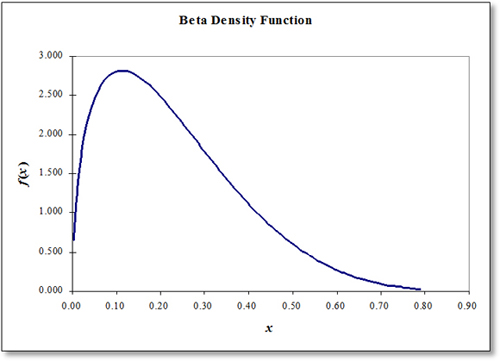

Several empirical studies, such as Hill, Giard, and Mabert (1989), have found that the demand during the end-of-life phase of the product life cycle often decays geometrically. The geometric progression suggests that the demand in any period is a constant times the demand in the previous period (i.e., $d_t = \beta d_{t-1}$), where $0 < \beta < 1$. The beta parameter is called the common ratio, because $\beta = d_1/d_0 = \cdots = d_{t+1}/d_t$. Given that period 0 had demand of $d_0$ units, the forecasted demand for period 1 is $f_1 = \beta d_0$ and for period 2 is $f_2 = \beta f_1 = \beta^2 d_0$. In general, given that period 0 demand is $d_0$ units, the forecasted demand $t$ periods into the future is $f_t = \beta^t d_0$.

The cumulative forecasted demand through the next $T$ periods after period 0 is the sum of the finite geometric progression $F_T = f_1 + f_2 + \cdots + f_T = \beta d_0 + \beta^2 d_0 + \cdots + \beta^T d_0$. Multiplying both sides of this equation by $\beta$ yields $\beta F_T = \beta^2 d_0 + \beta^3 d_0 + \cdots + \beta^{T+1} d_0$, and subtracting this new equation from the first one yields $F_T - \beta F_T = \beta d_0 - \beta^{T+1} d_0$, which simplifies to $F_T(1 - \beta) = d_0\beta(1 - \beta^T)$. Given that $\beta < 1$, it is clear that $1 - \beta \neq 0$, which means that it is possible to divide both sides by $1 - \beta$, which yields $F_T = d_0\beta(1 - \beta^T)/(1 - \beta)$. In the limit, as $T \to \infty$, $\beta^T \to 0$, and the sum of the all-time demand after period 0 is $F_\infty = d_0\beta/(1 - \beta)$. In summary, given that the actual demand for the most recent period (period 0) was $d_0$, the forecast of the cumulative demand over the next $T$ time periods is $F_T = d_0\beta(1 - \beta^T)/(1 - \beta)$, and the cumulative demand from now until the end of time is $F_\infty = d_0\beta/(1 - \beta)$.

The graph on the right shows the geometric decay for four historical data points (100, 80, 64, and 32). With $\beta = 0.737$, the all-time demand forecast is $F_\infty = 116$ units, and a forecast over a $T = 16$ period horizon is 113 units.
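The closed-form results above are easy to check numerically. The Python sketch below is a minimal illustration, and its function names are hypothetical. It computes $F_T$ and $F_\infty$ from $d_0$ and $\beta$, and it also lists the individual period forecasts $f_t = \beta^t d_0$. Summing those forecasts should reproduce the closed form for any finite horizon. The sketch takes $\beta$ as given. It does not show how $\beta$ would be estimated from history.

```python
from typing import Optional

def cumulative_forecast(d0: float, beta: float, horizon: Optional[int] = None) -> float:
    """Cumulative geometric-decay forecast for the periods after period 0.

    F_T   = d0 * beta * (1 - beta**T) / (1 - beta)  for a finite horizon T
    F_inf = d0 * beta / (1 - beta)                  when horizon is None
    """
    if not 0 < beta < 1:
        raise ValueError("the common ratio beta must satisfy 0 < beta < 1")
    if horizon is None:
        return d0 * beta / (1 - beta)
    return d0 * beta * (1 - beta ** horizon) / (1 - beta)

def period_forecasts(d0: float, beta: float, horizon: int) -> list[float]:
    """Individual forecasts f_t = beta**t * d0 for t = 1..horizon."""
    return [d0 * beta ** t for t in range(1, horizon + 1)]

if __name__ == "__main__":
    d0, beta = 40.0, 0.8
    print(sum(period_forecasts(d0, beta, 10)), cumulative_forecast(d0, beta, 10))
    print(cumulative_forecast(d0, beta))  # all-time demand after period 0
```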

See all-time order, autocorrelation, Bass Model, demand, forecast horizon, forecasting, geometric progression, obsolete inventory, product life cycle management, slow moving inventory, termination date.

all-time order – The last order for a particular product in the last phase of its life cycle.

The all-time order is sometimes called the “lifetime buy” or “last buy.” The all-time order should be large enough so the inventory provided will satisfy nearly all expected future demand and balance the cost of a stockout with the cost of carrying inventory.

See all-time demand, product life cycle management.

alternate routing – See routing.

American Society for Quality (ASQ) – A professional association that advances learning, quality improvement, and knowledge exchange to improve business results and create better workplaces and communities worldwide.

ASQ has more than 100,000 individual and organizational members. Founded in 1946, and headquartered in Milwaukee, Wisconsin, the ASQ was formerly known as the American Society for Quality Control (ASQC). Since 1991, ASQ has administered the Malcolm Baldrige National Quality Award, which annually recognizes companies and organizations that have achieved performance excellence. ASQ publishes many practitioner and academic journals, including Quality Progress, Journal for Quality and Participation, Journal of Quality Technology, Quality Engineering, Quality Management Journal, Six Sigma Forum Magazine, Software Quality Professional, and Technometrics. The ASQ website is www.asq.org.

See Malcolm Baldrige National Quality Award (MBNQA), operations management (OM), quality management.

Analysis of Variance (ANOVA) – A statistical procedure used to test if samples from two or more groups come from populations with equal means.

ANOVA is closely related to multiple regression in that both are linear models and both use the F test to test for significance. In fact, a regression with dummy variables can be used to conduct an ANOVA, including exploring multiple-way interaction terms. The test statistic for analysis of variance is the F-ratio.

ANOVA is applicable when the populations of interest are normally distributed, populations have equal standard deviations, and samples are randomly and independently selected from each population.
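For a one-way ANOVA, the F-ratio is the mean square between groups divided by the mean square within groups. The minimal Python sketch below computes it directly so the arithmetic is visible. The function name is hypothetical. Turning F into a p-value requires the F distribution with (k − 1, N − k) degrees of freedom. In practice, a library routine such as SciPy's `scipy.stats.f_oneway` returns both the statistic and the p-value.

```python
from statistics import fmean

def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)
    # Between-group sum of squares: group size times squared deviation of group mean
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: squared deviations from each group's own mean
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within

if __name__ == "__main__":
    print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```

A large F-ratio means the group means differ by more than the within-group variation would lead one to expect if all population means were equal.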

Multivariate Analysis of Variance (MANOVA), an extension of ANOVA, can be used to accommodate more than one dependent variable. MANOVA measures the group differences between two or more metric dependent variables simultaneously, using a set of categorical non-metric independent variables.

See confidence interval, covariance, Design of Experiments (DOE), Gauge R&R, linear regression, sampling, Taguchi methods, t-test, variance.

Analytic Hierarchy Process (AHP) – A structured methodology used to help groups make decisions in a complex environment; also known as Analytical Hierarchy Process.

The AHP methodology, developed by Saaty (2001), can be summarized as follows:

• Model the problem as a hierarchy containing the decision goal, the alternatives for reaching it, and the criteria for evaluating the alternatives.

• Establish priorities among the elements of the hierarchy by making a series of judgments based on pairwise comparisons of the elements.

• Synthesize these judgments to yield a set of overall priorities for the hierarchy.

• Check the consistency of the judgments.

• Come to a final decision based on the results of this process.

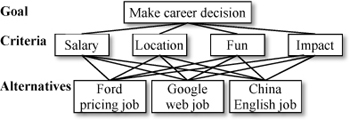

For example, a student has three job offers and needs to select one of them. The student cares about four criteria: salary, location, fun, and impact. The offers include (1) a job setting the price for the Ford Mustang in Detroit, (2) a job as a website developer at Google in San Francisco, and (3) a job teaching English in a remote part of China. The goal, criteria, and alternatives are shown in the figure on the right.

Analytic Hierarchy Process example

The student scores the relative importance of the objectives by comparing each pair of objectives in a table and scoring them on the scale:

1 = Objectives i and j are of equal importance.

3 = Objective i is moderately more important than j.

5 = Objective i is strongly more important than j.

7 = Objective i is very strongly more important than j.

9 = Objective i is absolutely more important than j.

Scores 2, 4, 6, and 8 are intermediate values. The respondent only puts a score in a cell where the row is more important than the column. The remainder of the matrix is then filled out by setting all main diagonal values to 1 (i.e., $a_{ii} = 1$) and setting the cell on the other side of the main diagonal to the reciprocal value (i.e., $a_{ji} = 1/a_{ij}$). In general, participants must score $n(n - 1)/2$ pairs, where $n$ is the number of criteria to be evaluated.

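The next step is to compute the principal (largest) eigenvalue $\lambda$ of this matrix and the corresponding normalized eigenvector. The $n$ values in the normalized eigenvector add to one and serve as the weights for the criteria. The consistency index can then be computed as $CI = (\lambda - n)/(n - 1)$, and the consistency ratio is the consistency index divided by a random index for a matrix of the same size. The principal eigenvalue for this problem is $\lambda = 4.2692$, and the normalized eigenvector is shown in the table above. The consistency ratio is 9.97%, which is considered acceptable (a common rule of thumb is that it should be below 10%). The next step is to evaluate all pairs of the three alternatives on each of the four criteria using the same 1 to 9 scale. As a result, each alternative now has a weight for each criterion. These weights are then multiplied by the criteria weights from the eigenvector and summed, and the resulting overall scores suggest a final decision to the user.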
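The matrix and eigenvector steps can be sketched in plain Python. This is a minimal illustration, not Saaty's software, and the function names are hypothetical. The code fills a reciprocal matrix from the scored pairs, uses power iteration to approximate the principal eigenvector and eigenvalue (power iteration converges for positive matrices like these), and computes the consistency index and ratio with Saaty's published random index values for n up to 9. As a check, `consistency(4.2692, 4)` reproduces the 9.97% consistency ratio reported above.

```python
# Saaty's random consistency index (RI) for matrix sizes n = 1..9
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def reciprocal_matrix(n: int, judgments: dict[tuple[int, int], float]) -> list[list[float]]:
    """Build an n x n AHP matrix from scored pairs {(i, j): a_ij}.

    The diagonal is 1 and each mirrored cell is the reciprocal a_ji = 1 / a_ij.
    """
    a = [[1.0] * n for _ in range(n)]
    for (i, j), value in judgments.items():
        a[i][j] = float(value)
        a[j][i] = 1.0 / value
    return a

def mat_vec(a: list[list[float]], w: list[float]) -> list[float]:
    return [sum(a_ij * w_j for a_ij, w_j in zip(row, w)) for row in a]

def priorities(a: list[list[float]], iterations: int = 100) -> tuple[list[float], float]:
    """Principal eigenvector (normalized to sum to 1) and eigenvalue via power iteration."""
    n = len(a)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = mat_vec(a, w)
        total = sum(v)
        w = [x / total for x in v]
    aw = mat_vec(a, w)
    lam = sum(aw_i / w_i for aw_i, w_i in zip(aw, w)) / n  # estimate of lambda_max
    return w, lam

def consistency(lam: float, n: int) -> tuple[float, float]:
    """Consistency index CI = (lambda - n) / (n - 1) and ratio CR = CI / RI."""
    ci = (lam - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    return ci, (ci / ri if ri > 0 else 0.0)

if __name__ == "__main__":
    a = reciprocal_matrix(3, {(0, 1): 3, (0, 2): 5, (1, 2): 2})  # hypothetical judgments
    w, lam = priorities(a)
    print([round(x, 3) for x in w], round(lam, 4), consistency(lam, 3))
```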

An AHP tutorial can be found at http://people.revoledu.com/kardi/tutorial/AHP/index.html (April 10, 2011). See the Kepner-Tregoe Model and Pugh Matrix entries for other methods for multiple-criteria decision making.

See causal map, conjoint analysis, decision tree, force field analysis, Kano Analysis, Kepner-Tregoe Model, New Product Development (NPD), Pugh Matrix, TRIZ, voice of the customer (VOC).

anchoring – Allowing estimates or thinking to be influenced by some starting information or current conditions; also used to describe the difficulty of changing behavior that is heavily influenced by old habits.

According to the Forecasting Dictionary (Armstrong 2001), the initial value (or anchor) can be based on tradition, previous history, or available data. Anchoring is a significant problem in many operations contexts, including forecasting and subjective estimation of probabilities. For example, when making a subjective forecast, people often anchor on the previous period’s demand.

In one interesting study, Tversky and Kahneman (1974) asked subjects to estimate the percentage of African nations in the United Nations. The experimenters selected an initial value by spinning a wheel in the subject’s presence. The subjects were then asked to revise this number upward or downward to obtain an answer. This information-free initial value had a strong influence on the estimate. Those starting with 10% made predictions averaging 25%. In contrast, those starting with 65% made predictions averaging 45%.

See forecasting, newsvendor model.

andon board – See andon light.

andon light – A lean term (pronounced “Ann-Don”) that refers to a warning light, warning board, or signal on (or near) a machine or assembly line that calls attention to defects or equipment problems; also called an andon board; the Japanese word andon means “lamp.”

An andon is any visual indicator signaling that a team member has found an abnormal situation, such as poor quality, lack of parts, improper paperwork, missing information, or missing tools. When a worker pulls an andon cord (or pushes a button), the red light goes on, the line is stopped, and a supervisor or technician responds immediately to help diagnose and correct the problem. It is important for management to define exactly who is responsible as the support person. The idea here is to have a simple visual system that immediately calls for the right kind of help from the right people at the right time. This is a good example of Rule 5 in the Spear and Bowen (1999) framework.

The number of lights and their possible colors can vary even by workcenter within a plant. Most implementations have three colors: red, yellow, and green (like a stoplight). Red usually means the line is down, yellow means the line is having problems, and green means normal operations. Some firms use other colors to signal other types of issues, such as material shortages or defective components. Some firms use a blinking light to signal that someone is working on the problem.

See assembly line, error proofing, jidoka, lean thinking, visual control.

ANOVA – See Analysis of Variance.

anticipation inventory – Inventory held to (1) satisfy seasonal demand, (2) cope with expected reduced capacity due to maintenance or an anticipated strike, or (3) store seasonal supply to meet level demand throughout the year (for example, a crop that is harvested only once per year).

See production planning, seasonality.

antitrust laws – Government regulations intended to protect and promote competition.

Competition is beneficial because it causes firms to add more value to society. Firms that add value to the market (and society) survive, but those that do not add value go out of business.

The four main antitrust laws in U.S. Federal law are:

• The Sherman Antitrust Act – Passed in 1890, this act outlaws “every contract, combination in the form of trust or otherwise, or conspiracy, in restraint of trade or commerce among the several States, or with foreign nations.” This law makes it illegal to create a monopoly or engage in practices that hurt competition.

• The Clayton Act – Passed in 1914 and revised in 1950, this act keeps prices from skyrocketing due to mergers, acquisitions, or other business practices. By giving the government the authority to challenge large-scale moves made by corporations, this act provides a barrier against monopolistic practices.

• Robinson-Patman Act – Passed in 1936 to supplement the Clayton Act, this act forbids firms engaged in interstate commerce from discriminating in price between different purchasers of the same commodity if the effect would be to lessen competition or create a monopoly. This act protects independent retailers from chain-store competition, but it was also strongly supported by wholesalers who were eager to prevent large chain stores from buying directly from the manufacturers at lower prices.

• The Federal Trade Commission Act of 1914 – Like the Clayton Act, this act is a civil statute. This act established the Federal Trade Commission (FTC), which seeks to maintain competition in interstate commerce.

In addition to these acts, antitrust violators may be found guilty of criminal activity or civil wrongdoing through other laws. Some of the other possible charges include perjury, obstruction of justice, making false statements to the government, mail fraud, and conspiracy.

See bid rigging, bribery, category captain, General Agreement on Tariffs and Trade (GATT), mergers and acquisitions (M&A), predatory pricing, price fixing, purchasing.

APICS (The Association for Operations Management) – A professional society for operations managers, including production, inventory, supply chain, materials management, purchasing, and logistics.

APICS stands for American Production and Inventory Control Society. However, APICS has adopted the name “The Association for Operations Management,” even though the name no longer matches the acronym.

The APICS website (www.apics.org) states, “The Association for Operations Management is the global leader and premier source of the body of knowledge in operations management, including production, inventory, supply chain, materials management, purchasing, and logistics.” Since 1957, individuals and companies have relied on APICS for training, certifications, comprehensive resources, and a worldwide network of accomplished industry professionals. APICS confers the CIRM, CPIM, and CSCP certifications. APICS produces a number of trade publications and a practitioner/research journal, the Production & Inventory Management Journal.

See operations management (OM).

A-plant – See VAT analysis.

Application Service Provider (ASP) – An organization that provides (hosts) remote access to a software application over the Internet.

The ASP owns a license to the software and customers rent the use of the software and access it over the Internet. The ASP may be the software manufacturer or a third-party business. An ASP operates the software at its data center, which customers access online under a service contract. A common example is a website that other websites use for accepting payment by credit card as part of their online ordering systems. The benefits of an ASP are lower upfront costs, quicker implementation, scalability, and lower operating costs. The term “Software as a Service (SaaS)” seems to have diminished the importance of this term.

The unrelated term Active Server Pages (ASP) describes HTML pages that contain embedded scripts.

See cloud computing, service management, Software as a Service (SaaS).

appraisal cost – An expense of measuring quality through inspection and testing.

Many popular quality consultants argue that appraisal costs should be eliminated and that firms should not try to “inspect quality into the product,” but should instead “design quality into the product and process.”

See cost of quality.

APS – See Advanced Planning and Scheduling (APS).

AQL – See Acceptable Quality Level.

arbitrage – Buying something in one market and reselling it at a higher price in another market.

Arbitrage involves a combination of matching deals to exploit the imbalance in prices between two or more markets and profiting from the difference between the market prices. A person who engages in arbitrage is called an arbitrageur.

Arbitrage is a combination of transactions designed to profit from an existing discrepancy among prices, exchange rates, or interest rates in different markets, often without the risk that these prices will change before the transactions are completed. The simplest form of arbitrage is the simultaneous purchase and sale of something in different markets. More complex forms include triangular arbitrage, in which three currencies are exchanged in a loop. To arbitrage is to make a combination of bets such that if one bet loses, another one wins, which gives the arbitrageur an edge at no risk or at least low risk. The term “hedge” has a similar meaning but does not imply having an edge.
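The arithmetic behind triangular arbitrage can be sketched in a few lines of Python. This is a minimal illustration, assuming hypothetical exchange-rate quotes and ignoring transaction costs, bid-ask spreads, and execution delays; the function names are illustrative and do not come from any trading library.

```python
def triangular_gain_factor(rate_ab: float, rate_bc: float, rate_ca: float) -> float:
    """Return how many units of currency A you end with after converting
    1 unit of A -> B -> C -> A.

    rate_ab is units of B received per unit of A, rate_bc is units of C per
    unit of B, and rate_ca is units of A per unit of C. A factor above 1.0
    means the quoted rates are inconsistent and an arbitrage profit exists
    (before transaction costs).
    """
    return rate_ab * rate_bc * rate_ca


def triangular_profit(amount: float, rate_ab: float, rate_bc: float, rate_ca: float) -> float:
    """Profit (in currency A) from running `amount` units of A around the loop."""
    return amount * (triangular_gain_factor(rate_ab, rate_bc, rate_ca) - 1.0)


if __name__ == "__main__":
    # Hypothetical quotes for illustration only, not real market data.
    factor = triangular_gain_factor(0.90, 1.20, 0.95)
    print(f"gain factor: {factor:.4f}")  # slightly above 1.0 here
    print(f"profit on 10,000 units of A: {triangular_profit(10_000, 0.90, 1.20, 0.95):.2f}")
```

With single quotes and no spreads, the reverse loop (A to C to B to A) has a gain factor of 1/factor, so whenever the factor differs from 1.0, exactly one direction of the loop is profitable.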

See hedging.

ARIMA – Autoregressive Integrated Moving Average. See Box-Jenkins forecasting.

ARMA – Autoregressive Moving Average. See Box-Jenkins forecasting.

Artificial Intelligence (AI) – Computer software that uses algorithms that emulate human intelligence.

Many applications of AI have been made in operations management, including decision support systems, scheduling, forecasting, computer-aided design, character recognition, pattern recognition, and speech/voice recognition. One challenge for computer scientists is differentiating AI software from other types of software.

See algorithm, expert system, neural network, robotics.

ASN – See Advanced Shipping Notification (ASN).

AS/RS – See Automated Storage & Retrieval System (AS/RS).

ASQ – See American Society for Quality.

assemble to order (ATO) – A customer interface strategy that stocks standard components and modules that can quickly be assembled into products to meet a wide variety of customer requirements.

ATO allows an organization to produce a large variety of final products with a relatively short customer leadtime. Well-known examples of ATO processes include Burger King, which assembles hamburgers with many options while the customer waits, and Dell Computer, which assembles and ships a wide variety of computers on short notice. ATO systems almost never have any finished goods inventory, but usually stock major components. Pack to order and configure to order systems are special cases of ATO.

See assembly, build to order (BTO), customer leadtime, Final Assembly Schedule (FAS), make to stock (MTS), mass customization, Master Production Schedule (MPS), respond to order (RTO).

assembly – A manufacturing process that brings together two or more parts to create a product or a subassembly that will eventually become part of a product; the result of an assembly process.

A subassembly is an intermediate assembly used in the production of higher-level subassemblies, assemblies, and products.

See assemble to order (ATO), assembly line, manufacturing processes.

assembly line – The organization of a series of workers or machines so discrete units can be moved easily from one station to the next to build a product; also called a production line.

On an assembly line, each worker (or machine) performs one relatively simple task and then moves the product to the next worker (or machine). Assembly lines are best suited for assembling large batches of standard products and therefore require a highly standardized process. Unlike continuous processes for liquids or powders, which can move through pipes, assembly lines are for discrete products and often use conveyor belts to move products between workers. Assembly lines use a product layout, which means the sequence is determined by the product requirements. Some automated assembly lines require substantial capital investment, which makes them hard to change.

One issue with an assembly line is assigning work to workers to balance the line to minimize wasted time. See the line balancing entry.
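Two standard line balancing calculations can be illustrated with a short Python sketch: the required cycle time (available production time divided by demand) and the theoretical minimum number of stations (total task time divided by cycle time, rounded up). The task times and demand below are hypothetical, and the sketch ignores precedence constraints, which a real balancing heuristic must respect.

```python
import math


def required_cycle_time(available_time: float, demand: float) -> float:
    """Longest time each station may spend per unit so the line meets demand."""
    return available_time / demand


def min_stations(task_times: list[float], cycle_time: float) -> int:
    """Theoretical lower bound on the number of stations (ignores precedence)."""
    return math.ceil(sum(task_times) / cycle_time)


def line_efficiency(task_times: list[float], n_stations: int, cycle_time: float) -> float:
    """Share of paid station time spent on productive work; 1 - efficiency is idle time."""
    return sum(task_times) / (n_stations * cycle_time)


if __name__ == "__main__":
    # Hypothetical data: one 8-hour shift (28,800 s) and demand of 400 units.
    cycle = required_cycle_time(28_800, 400)  # 72 s per unit
    tasks = [30, 48, 18, 66, 36]              # task times in seconds
    n = min_stations(tasks, cycle)            # ceil(198 / 72) = 3
    print(cycle, n, round(line_efficiency(tasks, n, cycle), 3))
```

The lower bound of three stations is achievable here only if precedence allows groupings such as {66}, {48, 18}, and {30, 36}, each of which fits within the 72-second cycle time.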

The term “production line” is more general than the term “assembly line.” A production line may include fabrication operations, such as molding and machining, whereas an assembly line only does assembly.

See andon light, assembly, cycle time, discrete manufacturing, fabrication, facility layout, line balancing, manufacturing processes, mixed model assembly, production line, standard products.

asset turnover – A financial ratio that measures the ability of the firm to use its assets to generate sales revenue.

Asset turnover is measured as the ratio of a company’s net sales to its total assets. The assets are often based on an average. Asset turnover is similar to inventory turnover.
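As a worked illustration, the sketch below computes asset turnover using the simple average of beginning and ending total assets. That averaging convention is an assumption here (some analysts use year-end assets or a multi-period average), and all figures are hypothetical.

```python
def asset_turnover(net_sales: float, assets_begin: float, assets_end: float) -> float:
    """Net sales divided by the simple average of beginning and ending total assets."""
    average_assets = (assets_begin + assets_end) / 2
    if average_assets <= 0:
        raise ValueError("average total assets must be positive")
    return net_sales / average_assets


if __name__ == "__main__":
    # Hypothetical figures: $12M annual net sales, total assets of $5M (start) and $7M (end).
    print(asset_turnover(12_000_000, 5_000_000, 7_000_000))  # 2.0 turns per year
```

Inventory turnover follows the same pattern, with cost of goods sold in the numerator and average inventory in the denominator.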

See financial performance metrics, inventory turnover.

assignable cause – See special cause variation.

assignment problem – A mathematical programming problem of matching one group of items (jobs, trucks, etc.) with another group of locations (machines, cities, etc.) to minimize the sum of the costs.

The assignment problem is usually shown as a table or a matrix and requires that exactly one match is found in each row and each column. For example, matching students to seats has N students and N seats and results in an N × N table of possible assignments. Each student must be assigned to exactly one seat and each seat must be assigned to exactly one student. The “cost” of assigning student i to seat j is cij, which may be some measure of the student’s disutility (dislike) for that seat. This problem can be solved efficiently on a computer with special-purpose assignment algorithms, network optimization algorithms, and general-purpose linear programming algorithms. Even though it is an integer programming problem, it can be solved with any general linear programming package and be guaranteed to produce integer solutions, because its constraint matrix is totally unimodular.

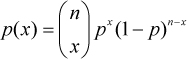

The assignment problem is formulated as the following linear program:

Assignment problem:

$$\text{Minimize} \quad \sum_{i=1}^{N} \sum_{j=1}^{N} c_{ij} x_{ij}$$

subject to

$$\sum_{i=1}^{N} x_{ij} = 1 \ \text{for all } j \quad \text{and} \quad \sum_{j=1}^{N} x_{ij} = 1 \ \text{for all } i,$$

where the decision variables are $x_{ij} \in \{0,1\}$ and $c_{ij}$ is the cost of assigning item i to location j.
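To make the formulation concrete, the sketch below solves a tiny instance by exhaustive enumeration of all N! one-to-one assignments. This is deliberately naive and only practical for very small N; it is not one of the efficient assignment or linear programming algorithms. The cost matrix is hypothetical.

```python
from itertools import permutations


def solve_assignment(cost: list[list[float]]) -> tuple[list[int], float]:
    """Return (assignment, total_cost) minimizing the sum of c_ij.

    assignment[i] is the location j given to item i. Every permutation of
    locations is a feasible solution (one match per row and per column),
    so checking all of them finds the optimum.
    """
    n = len(cost)
    if any(len(row) != n for row in cost):
        raise ValueError("cost matrix must be N x N")
    best_perm: tuple[int, ...] = tuple(range(n))
    best_cost = float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_cost:
            best_perm, best_cost = perm, total
    return list(best_perm), best_cost


if __name__ == "__main__":
    # Hypothetical costs: rows are students, columns are seats.
    c = [[4, 1, 3],
         [2, 0, 5],
         [3, 2, 2]]
    print(solve_assignment(c))  # ([1, 0, 2], 5)
```

For realistic sizes, the Hungarian algorithm or a linear programming solver is the practical choice; for example, SciPy provides `scipy.optimize.linear_sum_assignment` for this problem.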

See algorithm, integer programming (IP), linear programming (LP), network optimization, operations research (OR), transportation problem, Traveling Salesperson Problem (TSP).

Association for Manufacturing Excellence (AME) – A practitioner-based professional society dedicated to cultivating understanding, analysis, and exchange of productivity methods and their successful application in the pursuit of excellence.

Founded in 1985, AME was the first major professional society in North America to promote lean manufacturing principles. AME sponsors events and workshops that focus on hands-on learning. AME publishes the Target magazine and puts on several regional and national events each year.

The AME website is www.ame.org.

See operations management (OM).

assortment – A retailer’s selection of merchandise to display; also known as “merchandise assortment” and “product assortment.”

The target customer base and physical product characteristics determine the depth and breadth of an assortment and the length of time it is carried.

See category captain, category management, planogram, product proliferation.

ATO – See assemble to order.

ATP – See Available-to-Promise (ATP).

attribute – A quality management term used to describe a zero-one (binary) property of a product by which its quality will be judged by some stakeholder.

Inspection can be performed by attributes or by variables. Inspection by attributes is usually for lot control (acceptance sampling) and is performed with a p-chart (to control the percent defective) or a c-chart (to control the number of defects). Inspection by variables is usually done for process control and is performed with an x-bar chart (to control the mean) or an r-chart (to control the range or variance).
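The conventional three-sigma control limits for the two attribute charts named above can be sketched as follows. This assumes a constant sample size for the p-chart and a constant inspection unit for the c-chart; the sample data are hypothetical, and negative lower limits are truncated to zero by convention.

```python
import math


def p_chart_limits(defectives: list[int], sample_size: int) -> tuple[float, float, float]:
    """Return (LCL, center line p-bar, UCL) for the fraction defective."""
    p_bar = sum(defectives) / (len(defectives) * sample_size)
    sigma = math.sqrt(p_bar * (1 - p_bar) / sample_size)
    return max(0.0, p_bar - 3 * sigma), p_bar, min(1.0, p_bar + 3 * sigma)


def c_chart_limits(defect_counts: list[int]) -> tuple[float, float, float]:
    """Return (LCL, center line c-bar, UCL) for the number of defects per unit."""
    c_bar = sum(defect_counts) / len(defect_counts)
    sigma = math.sqrt(c_bar)  # Poisson model: variance equals the mean
    return max(0.0, c_bar - 3 * sigma), c_bar, c_bar + 3 * sigma


if __name__ == "__main__":
    # Hypothetical data: five samples of 100 units each, and defect counts on five units.
    print(p_chart_limits([4, 6, 5, 3, 7], 100))  # center line 0.05, LCL truncated to 0
    print(c_chart_limits([3, 5, 4, 6, 2]))       # (0.0, 4.0, 10.0)
```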

See acceptance sampling, c-chart, inspection, p-chart, quality management, Statistical Process Control (SPC), Statistical Quality Control (SQC).

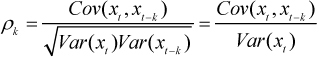

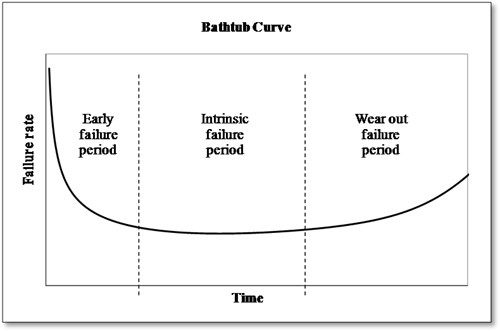

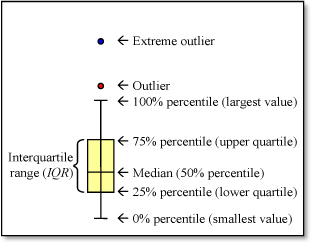

autocorrelation – A measure of the strength of the relationship between a time series variable in periods t and t − k; also called serial correlation.

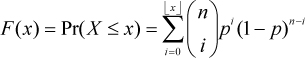

Autocorrelation measures the correlation between a variable in period t and period t − k (i.e., correlation between xt and xt−k). The autocorrelation at lag k is then defined as

$$\rho_k = \frac{\operatorname{Cov}(x_t, x_{t-k})}{\sqrt{\operatorname{Var}(x_t)\,\operatorname{Var}(x_{t-k})}} = \frac{\operatorname{Cov}(x_t, x_{t-k})}{\operatorname{Var}(x_t)},$$

where Var(xt) = Var(xt−k) for a weakly stationary process.

Testing for autocorrelation is one way to check for randomness in time series data. The Durbin-Watson test can be used to test for first-order (i.e., k = 1) autocorrelation. The runs test can also be used to test for serial independence.

The Box-Jenkins forecasting method uses the autocorrelation structure in the time series to create forecasts.

Excel can be used to estimate the autocorrelation at lag k using CORREL(range1, range2), where range1 includes the first T − k values and range2 includes the last T − k values of a time series with T values (for lag 1, the first and last T − 1 values).
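The CORREL approach can be mirrored in Python for any lag k by correlating the first T − k values of a series with its last T − k values. The sketch also computes the Durbin-Watson statistic for a series of regression residuals. Note that this overlapping-segment estimate differs slightly from the textbook sample autocorrelation function, which uses the mean and variance of the full series; the data below are hypothetical.

```python
import math


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation of two equal-length sequences (what Excel's CORREL computes)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def lag_autocorrelation(series: list[float], k: int) -> float:
    """Correlate x_t with x_{t-k} using the overlapping T - k pairs."""
    if not 1 <= k <= len(series) - 2:
        raise ValueError("need 1 <= k <= T - 2 so at least two pairs remain")
    return pearson(series[k:], series[:-k])


def durbin_watson(residuals: list[float]) -> float:
    """Durbin-Watson statistic: near 2 suggests no first-order autocorrelation,
    near 0 suggests positive and near 4 suggests negative autocorrelation."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den


if __name__ == "__main__":
    # Hypothetical series that alternates up and down around its mean.
    x = [10, 14, 9, 15, 11, 13, 8, 16]
    print(round(lag_autocorrelation(x, 1), 3))    # strongly negative
    print(durbin_watson([1.0, -1.0, 1.0, -1.0]))  # 3.0
```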

See all-time demand, Box-Jenkins forecasting, correlation, Durbin-Watson statistic, learning curve, runs test, safety stock, time series forecasting.

Automated Data Collection (ADC) – Information systems used to collect and process data with little or no human interaction; also called data capture, Automated Identification and Data Capture (AIDC), and Auto-ID.

Automated Data Collection is based on technologies, such as barcodes, Radio Frequency Identification (RFID), biometrics, magnetic stripes, Optical Character Recognition (OCR), smart cards, and voice recognition. Most Warehouse Management Systems and Manufacturing Execution Systems are integrated with ADC systems.

See barcode, Manufacturing Execution System (MES), Optical Character Recognition (OCR), part number, quality at the source, Radio Frequency Identification (RFID), Warehouse Management System (WMS).

Automated Guided Vehicle (AGV) – Unmanned, computer-controlled vehicle equipped with a guidance and collision-avoidance system; sometimes known as an Automated Guided Vehicle System (AGVS).

AGVs typically follow a path defined by wires embedded in the floor to transport materials and tools between workstations. Many firms have found AGVs to be inefficient and unreliable.

See automation, robotics.

Automated Identification and Data Capture (AIDC) – See Automated Data Collection (ADC).

Automated Storage & Retrieval System (AS/RS) – A computer-controlled robotic device used for storing and retrieving items from storage locations; also called ASRS.

Automated Storage and Retrieval Systems are a combination of equipment, controls, and information systems that automatically handle, store, and retrieve materials, components, tools, raw material, subassemblies, or products with great speed and accuracy. Consequently, they are used in many manufacturing and warehousing applications. An AS/RS includes one or more of the following technologies: horizontal carousels, vertical carousels, vertical lift modules (VLM), and the traditional crane-in-aisle storage and retrieval systems that use storage retrieval (SR) cranes.

See automation, batch picking, carousel, warehouse, zone picking.

Automatic Call Distributor (ACD) – A computerized phone system that responds to the caller with a voice menu and then routes the caller to an appropriate agent; also known as Automated Call Distribution.

ACDs are the core technology in call centers and are used for order entry, direct sales, technical support, and customer service. All ACDs provide some sort of routing function for calls. Some ACDs use sophisticated systems that distribute calls equally to agents or identify and prioritize a high-value customer based on the calling number. Some ACDs recognize the calling number via Automatic Number Identification (ANI) or Caller ID, consult a database, and then route the call accordingly. ACDs can also incorporate “skills-based routing” that routes callers along with appropriate data files to the agent who has the appropriate knowledge and language skills to handle the call. Some ACDs can also route e-mail, faxes, Web-initiated calls, and callback requests.
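Skills-based routing can be sketched as a filter followed by a selection rule. In this minimal Python illustration, the hypothetical `Agent` record and the "longest-idle qualified agent" rule are assumptions chosen for clarity, not the logic of any particular ACD product; a real ACD would also manage queues, priorities, and overflow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    """Hypothetical agent record: skills plus availability state."""
    name: str
    skills: frozenset[str]
    available: bool = True
    idle_since: float = 0.0  # timestamp when the agent last became free


def route_call(required_skills: set[str], agents: list[Agent]) -> Optional[Agent]:
    """Pick an available agent who has every required skill.

    Among qualified agents, choose the one idle the longest (earliest
    idle_since) to spread work fairly. Return None if nobody qualifies,
    in which case the caller would wait in queue.
    """
    qualified = [a for a in agents if a.available and required_skills <= a.skills]
    if not qualified:
        return None
    return min(qualified, key=lambda a: a.idle_since)


if __name__ == "__main__":
    staff = [
        Agent("Ana", frozenset({"billing", "spanish"}), idle_since=10.0),
        Agent("Ben", frozenset({"billing"}), idle_since=5.0),
        Agent("Cy", frozenset({"tech"}), idle_since=1.0),
    ]
    print(route_call({"billing"}, staff).name)             # Ben (idle longest among billing agents)
    print(route_call({"billing", "spanish"}, staff).name)  # Ana
    print(route_call({"french"}, staff))                   # None
```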

The business benefits of an ACD include both customer benefits (less average waiting time and higher customer satisfaction) and service provider benefits (more efficient service, better use of resources, and less need for training). However, some customers intensely dislike ACDs because they can be impersonal and confusing.

See automation, call center, customer service.

automation – The practice of developing machines to do work that was formerly done manually.

Automation is often a good approach for reducing variable cost, improving the conformance quality of a process, and reducing manufacturing run time per unit. However, automation requires capital expense, managerial and technical expertise to install, and technical expertise to maintain. Additionally, automation often reduces product mix flexibility (highly automated equipment is usually dedicated to a narrow range of products), decreases volume flexibility (the firm must have enough volume to justify the capital cost), and increases risk (the automation becomes worthless when the process or product technology becomes obsolete or when the market demand for products requiring the automation declines).
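The volume-flexibility point can be illustrated with a simple break-even calculation: automation pays off only if the variable-cost savings per unit, multiplied by volume, cover the capital cost. The sketch below assumes hypothetical costs and ignores the time value of money, maintenance, and obsolescence risk.

```python
def breakeven_volume(capital_cost: float, manual_cost_per_unit: float,
                     automated_cost_per_unit: float) -> float:
    """Units needed before automation's variable-cost savings repay its capital cost."""
    savings_per_unit = manual_cost_per_unit - automated_cost_per_unit
    if savings_per_unit <= 0:
        raise ValueError("automation must lower the variable cost per unit to break even")
    return capital_cost / savings_per_unit


if __name__ == "__main__":
    # Hypothetical: $500,000 of equipment cuts variable cost from $12 to $7 per unit.
    print(breakeven_volume(500_000, 12.0, 7.0))  # 100000.0 units
```

If expected lifetime volume falls well short of this figure, or the product may become obsolete before the volume is reached, the investment is hard to justify.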

Automation is best used in situations where the work is dangerous, dirty, or dull (“the 3Ds”). For example, welding is dangerous, cleaning a long underground sewer line is dirty, and inserting transistors on a printed circuit board is dull. All three of these tasks can and should be automated when possible. Repetitive (dull) work often results in poor quality work, so automated equipment is more likely to produce defect-free results.