Chapter 16. Keypoints and Descriptors

Keypoints and the Basics of Tracking

This chapter is all about informative feature points in images. We will begin by describing what are called corners and exploring their definition in the subpixel domain. We will then learn how to track such corners with optical flow. Historically, the tracking of corners evolved into the theory of keypoints, to which we will devote the remainder of this chapter, including extensive discussion of keypoint feature detectors and descriptors implemented in the OpenCV library for you to use.1

The concept of corners, as well as that of keypoints, is based on the intuition that it would be useful in many applications to be able to represent an image or object in an invariant form that will be the same, or at least very similar, in other similar images of the same scene or object. Corner and keypoint representations are powerful methods for doing this. A corner is a small patch of an image that is rich in local information and therefore likely to be recognized in another image. A keypoint is an extension of this concept that encodes information from a small local patch of an image such that the keypoint is highly recognizable and, at least in principle, largely unique. The descriptive information about a keypoint is summarized in the form of its descriptor, which is typically much lower-dimensional than the pixel patch that formed the keypoint. The descriptor represents that patch so as to make it much easier to recognize that patch when it appears in another, different image.

From an intuitive point of view, you can think of a keypoint like a piece from a jigsaw puzzle. When you begin the puzzle, some pieces are easily recognized: the handle of a door, a face, the steeple of a church. When you go to assemble the puzzle, you can immediately relate these keypoints to the image on the puzzle box and know immediately where to place them. In addition, if you and a friend had two unassembled puzzles and you wanted to know if they were, for example, both different images of the beautiful Neuschwanstein Castle, you could assemble both puzzles and compare, but you could also just pick out the most salient pieces from each puzzle and compare them. In this latter case, it would take only a few matches before you were convinced that they were either literally the same puzzle, or two puzzles made from two different images of the same castle.

In this chapter, we will start by building up the basics of the theory of keypoints by discussing the earliest ancestor of the modern keypoint: the Harris corner. From there we will discuss the concept of optical flow for such corners, which captures the basic idea of tracking such features from one frame to another in a video sequence. After that we will move on to more modern keypoints and their descriptors and discuss how OpenCV helps us find them as well as how the library will help us match them between frames. Finally, we will look at a convenient method that allows us to visualize keypoints overlaid on top of the images in which they were detected.

Corner Finding

There are many kinds of local features that you can track. It is worth taking a moment to consider what exactly constitutes such a feature. Obviously, if we pick a point on a large blank wall, then it won’t be easy to find that same point in the next frame of a video.

If all points on the wall are identical or even very similar, then we won’t have much luck tracking that point in subsequent frames. On the other hand, if we choose a point that is unique, then we have a pretty good chance of finding that point again. In practice, the point or feature we select should be unique, or nearly unique, and should be parameterizable such that it can be compared to other points in another image (see Figure 16-1).

Returning to our intuition from the large blank wall, we might be tempted to look for points that have some significant change in them—for example, a strong derivative. It turns out that this is not quite enough, but it’s a start. A point to which a strong derivative is associated may be on an edge of some kind, but it still may look like all of the other points along that same edge (see the aperture problem diagrammed in Figure 16-8 and discussed in the section “Introduction to Optical Flow”).

Figure 16-1. The points marked with circles here are good points to track, whereas those marked with boxes—even the ones that are sharply defined edges—are poor choices

However, if strong derivatives are observed nearby in two different directions, then we can hope that this point is more likely to be unique. For this reason, many trackable features are called corners. Intuitively, corners—not edges—are the points that contain enough information to be picked out from one frame to the next.

The most commonly used definition of a corner was provided by Harris [Harris88]. This definition captures the intuition of the previous paragraph in a mathematically specific form. We will look at the details of this method shortly, but for the moment, what is important to know is that you can ask OpenCV to simply find the points in the image that are good candidates for being tracked, and it will use Harris’s method to identify them for you.

Finding corners using cv::goodFeaturesToTrack()

The cv::goodFeaturesToTrack() routine implements Harris’s method and a slight improvement credited to Shi and Tomasi [Shi94]. This function conveniently computes the necessary derivative operators, analyzes them, and returns a list of the points that meet our definition of being good for tracking:

void cv::goodFeaturesToTrack( cv::InputArray image, // Input, CV_8UC1 or CV_32FC1 cv::OutputArray corners, // Output vector of corners int maxCorners, // Keep this many corners double qualityLevel, // (fraction) rel to best double minDistance, // Discard corner this close cv::InputArray mask = noArray(), // Ignore corners where mask=0 int blockSize = 3, // Neighborhood used bool useHarrisDetector = false, // false='Shi Tomasi metric' double k = 0.04 // Used for Harris metric );

The input image can be any 8-bit or 32-bit (i.e., 8U or 32F), single-channel image. The output corners will be a vector or array (depending on what you provide) containing all of the corners that were found. If it is a vector<>, it should be a vector of cv::Point2f objects. If it is a cv::Mat, it will have one row for every corner and two columns for the x and y locations of the points. You can limit the number of corners that will be found with maxCorners, the quality of the returned points with qualityLevel (typically between 0.10 and 0.01, and never greater than 1.0), and the minimum separation between adjacent corners with minDistance.

If the argument mask is supplied, it must be the same dimension as image, and corners will not be generated anywhere mask is 0. The blockSize argument indicates how large an area is considered when a corner is computed; a typical value is 3 but, for high-resolution images, you may want to make this slightly larger. The useHarrisDetector argument, if set to true, will cause cv:: goodFeaturesToTrack() to use an exact corner strength formula of Harris’s original algorithm; if set to false, Shi and Tomasi’s method will be used. The parameter k is used only by Harris’s algorithm, and is best left at its default value.2

Subpixel corners

If you are processing images for the purpose of extracting geometric measurements, as opposed to extracting features for recognition, then you will normally need more resolution than the simple pixel values supplied by cv::goodFeaturesToTrack(). Another way of saying this is that such pixels come with integer coordinates whereas we sometimes require real-valued coordinates—for example, a pixel location of (8.25, 117.16).

If you imagine looking for a particular small object in a camera image, such as a distant star, you would invariably be frustrated by the fact that the point’s location will almost never be in the exact center of a camera pixel element. Of course, in this circumstance, some of the light from the object will appear in the neighboring pixels as well. To overcome this, you might try to fit a curve to the image values and then use a little math to find where the peak occurred between the pixels. Subpixel corner detection techniques all rely on approaches of this kind (for a review and newer techniques, see Lucchese [Lucchese02] and Chen [Chen05]). Common uses of such measurements include tracking for three-dimensional reconstruction, calibrating a camera, warping partially overlapping views of a scene to stitch them together in the most natural way, and finding an external signal such as the precise location of a building in a satellite image.

One of the most common tricks for subpixel refinement is based on the mathematical observation that the dot product between a vector and an orthogonal vector is 0; this situation occurs at corner locations, as shown in Figure 16-2.

Figure 16-2. Finding corners to subpixel accuracy: (a) the image area around the point p is uniform and so its gradient is 0; (b) the gradient at the edge is orthogonal to the vector q-p along the edge; in either case, the dot product between the gradient at p and the vector q-p is 0 (see text)

In Figure 16-2, we assume a starting corner location q that is near the actual subpixel corner location. We examine vectors starting at point q and ending at p. When p is in a nearby uniform or “flat” region, the gradient there is 0. On the other hand, if the vector q-p aligns with an edge, then the gradient at p on that edge is orthogonal to the vector q-p. In either case, the dot product between the gradient at p and the vector q-p is 0. We can assemble many such pairs of the gradient at a nearby point p and the associated vector q-p, set their dot product to 0, and solve this assemblage as a system of equations; the solution will yield a more accurate subpixel location for q, the exact location of the corner.

The function that does subpixel corner finding is cv::cornerSubPix():

void cv::cornerSubPix( cv::InputArray image, // Input image cv::InputOutputArray corners, // Guesses in, and results out cv::Size winSize, // Area is NXN; N=(winSize*2+1) cv::Size zeroZone, // Size(-1,-1) to ignore cv::TermCriteria criteria // When to stop refinement );

The input image is the original image from which your corners were computed. The corners array contains the integer pixel locations, such as those obtained from routines like cv::goodFeaturesToTrack(), which are taken as the initial guesses for the corner locations.

As described earlier, the actual computation of the subpixel location uses a system of dot-product expressions that express the combinations that should sum to zero (see Figure 16-2). Each of these equations arises from considering a single pixel in the region around p. The parameter winSize specifies the size of window from which these equations will be generated. This window is centered on the original integer corner location and extends outward in each direction by the number of pixels specified in winSize (e.g., if winSize.width = 4, then the search area is actually 4 + 1 + 4 = 9 pixels wide). These equations form a linear system that can be solved by the inversion of a single autocorrelation matrix.3 In practice, this matrix is not always invertible owing to small eigenvalues arising from the pixels very close to p. To protect against this, it is common to simply reject from consideration those pixels in the immediate neighborhood of p. The parameter zeroZone defines a window (analogously to winSize, but always with a smaller extent) that will not be considered in the system of constraining equations and thus the autocorrelation matrix. If no such zero zone is desired, then this parameter should be set to cv::Size(-1,-1).

Once a new location is found for q, the algorithm will iterate using that value as a starting point and will continue until the user-specified termination criterion is reached. Recall that this criterion can be of type cv::TermCriteria::MAX_ITER or of type cv::TermCriteria::EPS (or both) and is usually constructed with the cv::TermCriteria() function. Using cv::TermCriteria::EPS will effectively indicate the accuracy you require of the subpixel values. Thus, if you specify 0.10, then you are asking for subpixel accuracy down to one-tenth of a pixel.

Introduction to Optical Flow

The optical flow problem involves attempting to figure out where many (and possibly all) points in one image have moved to in a second image—typically this is done in sequences of video, for which it is reasonable to assume that most points in the first frame can be found somewhere in the second. Optical flow can be used for motion estimation of an object in the scene, or even for ego-motion of the camera relative to the scene as a whole. In many applications, such as video security, it is motion itself that indicates that a portion of the scene is of specific interest, or that something interesting is going on. Optical flow is illustrated in Figure 16-3.

Figure 16-3. Optical flow: target features (left) are tracked over time and their movement is converted into velocity vectors (right); original images courtesy of Jean-Yves Bouguet

The ideal output of an optical flow algorithm would be the association of some estimate of velocity for each and every pixel in a frame pair or, equivalently, a displacement vector for every pixel in one image that indicates the relative location of that pixel in the other image. Such a construction, when it applies to every pixel in the image, is usually referred to as dense optical flow. There is an alternative class of algorithms, called sparse optical flow algorithms, that track only some subset of the points in the image. These algorithms are often fast and reliable because they restrict their attention to specific points in the image that will be easier to track. OpenCV has many ways of helping us identify points that are well suited for tracking, with the corners introduced earlier being only one among a long list. For many practical applications, the computational cost of sparse tracking is so much less than dense tracking that the latter is relegated to only academic interest.4 In this section, we will look at one sparse optical flow technique. Later, we will look at more powerful tools for sparse optical flow, and then finally move on to dense optical flow.

Lucas-Kanade Method for Sparse Optical Flow

The Lucas-Kanade (LK) algorithm [Lucas81], as originally proposed in 1981, was an attempt to produce dense optical flow (i.e., flow for every pixel). Yet, because the method is easily applied to a subset of the points in the input image, it has become an important technique for sparse optical flow. The algorithm can be applied in a sparse context because it relies only on local information that is derived from some small window surrounding each point of interest. The disadvantage of using small local windows in Lucas-Kanade is that large motions can move points outside of the local window and thus become impossible for the algorithm to find. This problem led to development of the “pyramidal” LK algorithm, which tracks starting from highest level of an image pyramid (lowest detail) and working down to lower levels (finer detail). Tracking over image pyramids allows large motions to be caught by local windows.5

Because this is an important and effective technique, we will go into some mathematical detail; readers who prefer to forgo such details can skip to the function description and code. However, it is recommended that you at least scan the intervening text and figures, which describe the assumptions behind Lucas-Kanade optical flow, so that you’ll have some intuition about what to do if tracking isn’t working well.

How Lucas-Kanade works

The basic idea of the LK algorithm rests on three assumptions:

- Brightness constancy

- A pixel from the image of an object in the scene does not change in appearance as it (possibly) moves from frame to frame. For grayscale images (LK can also be done in color), this means we assume that the brightness of a pixel does not change as it is tracked from frame to frame.

- Temporal persistence, or “small movements”

- The image motion of a surface patch changes slowly in time. In practice, this means the temporal increments are fast enough relative to the scale of motion in the image that the object does not move much from frame to frame.

- Spatial coherence

- Neighboring points in a scene belong to the same surface, have similar motion, and project to nearby points on the image plane.

We now look at how these assumptions, illustrated in Figure 16-4, lead us to an effective tracking algorithm. The first requirement, brightness constancy, is just the requirement that pixels in one tracked patch look the same over time, defining:

![Assumptions behind Lucas-Kanade optical flow: for a patch being tracked on an object in a scene, the patch’s brightness doesn’t change (left); motion is slow relative to the frame rate (center); and neighboring points stay neighbors (right). (Component images courtesy of Michael Black [Black92].)](http://images-20200215.ebookreading.net/24/4/4/9781491937983/9781491937983__learning-opencv-3__9781491937983__assets__lcv3_1604.png)

Figure 16-4. Assumptions behind Lucas-Kanade optical flow: for a patch being tracked on an object in a scene, the patch’s brightness doesn’t change (left); motion is slow relative to the frame rate (center); and neighboring points stay neighbors (right); component images courtesy of Michael Black [Black92]

The requirement that our tracked pixel intensity exhibits no change over time can simply be expressed as:

The second assumption, temporal persistence, essentially means that motions are small from frame to frame. In other words, we can view this change as approximating a derivative of the intensity with respect to time (i.e., we assert that the change between one frame and the next in a sequence is differentially small). To understand the implications of this assumption, first consider the case of a single spatial dimension.

In this case, we can start with our brightness consistency equation, substitute the definition of the brightness f(x, t) while taking into account the implicit dependence of x on t, I(x(t)t), and then apply the chain rule for partial differentiation. This yields:

where Ix is the spatial derivative across the first image, It is the derivative between images over time, and v is the velocity we are looking for. We thus arrive at the simple equation for optical flow velocity in the simple one-dimensional case:

Let’s now try to develop some intuition for this one-dimensional tracking problem. Consider Figure 16-5, which shows an “edge”—consisting of a high value on the left and a low value on the right—that is moving to the right along the x-axis. Our goal is to identify the velocity v at which the edge is moving, as plotted in the upper part of Figure 16-5. In the lower part of the figure, we can see that our measurement of this velocity is just “rise over run,” where the rise is over time and the run is the slope (spatial derivative). The negative sign corrects for the slope of x.

Figure 16-5. Lucas-Kanade optical flow in one dimension: we can estimate the velocity of the moving edge (upper panel) by measuring the ratio of the derivative of the intensity over time divided by the derivative of the intensity over space

Figure 16-5 reveals another aspect to our optical flow formulation: our assumptions are probably not quite true. That is, image brightness is not really stable; and our time steps (which are set by the camera) are often not as fast relative to the motion as we’d like. Thus, our solution for the velocity is not exact. However, if we are “close enough,” then we can iterate to a solution. Iteration is shown in Figure 16-6 where we use our first (inaccurate) estimate of velocity as the starting point for our next iteration and then repeat. Note that we can keep the same spatial derivative in x as computed on the first frame because of the brightness constancy assumption—pixels moving in x do not change. This reuse of the spatial derivative already calculated yields significant computational savings. The time derivative must still be recomputed each iteration and each frame, but if we are close enough to start with, then these iterations will converge to near exactitude within about five iterations. This is known as Newton’s method. If our first estimate was not close enough, then Newton’s method will actually diverge.

Figure 16-6. Iterating to refine the optical flow solution (Newton’s method): using the same two images and the same spatial derivative (slope) we solve again for the time derivative; convergence to a stable solution usually occurs within a few iterations

Now that we’ve seen the one-dimensional solution, let’s generalize it to images in two dimensions. At first glance, this seems simple: just add in the y-coordinate. Slightly changing notation, we’ll call the y-component of velocity v and the x-component of velocity u; then we have:

This is often written as a single vector equation:

where:

Unfortunately, for this single equation there are two unknowns for any given pixel. This means that measurements at the single-pixel level are underconstrained and cannot be used to obtain a unique solution for the two-dimensional motion at that point. Instead, we can solve only for the motion component that is perpendicular or “normal” to the line described by our flow equation. Figure 16-7 illustrates the geometry.

Figure 16-7. Two-dimensional optical flow at a single pixel: optical flow at one pixel is underdetermined and so can yield at most motion, which is perpendicular (“normal”) to the line described by the flow equation (figure courtesy of Michael Black)

Normal optical flow results from the aperture problem, which arises when you have a small aperture or window in which to measure motion. When motion is detected with a small aperture, you often see only an edge, not a corner. But an edge alone is insufficient to determine exactly how (i.e., in what direction) the entire object is moving; see Figure 16-8.

Figure 16-8. Aperture problem: a) An object is moving to the right and down. (b) Through a small aperture, we see an edge moving to the right but cannot detect the downward part of the motion

So then how do we get around this problem that, at one pixel, we cannot resolve the full motion? We turn to the last optical flow assumption for help. If a local patch of pixels moves coherently, then we can easily solve for the motion of the central pixel by using the surrounding pixels to set up a system of equations. For example, if we use a 5 × 56 window of brightness values (you can simply triple this for color-based optical flow) around the current pixel to compute its motion, we can then set up 25 equations as follows:

We now have an overconstrained system for which we can solve provided it contains more than just an edge in that 5 × 5 window. To solve for this system, we set up a least-squares minimization of the equation, whereby ![]() is solved in standard form as:

is solved in standard form as:

From this relation we obtain our u and v motion components. Writing this out in more detail yields:

The solution to this equation is then:

When can this be solved? When (ATA) is invertible. And (ATA) is invertible when it has full rank (2), which occurs when it has two large eigenvectors. This will happen in image regions that include texture running in at least two directions. In this case, (ATA) will have the best properties when the tracking window is centered over a corner region in an image. This ties us back to our earlier discussion of the Harris corner detector. In fact, those corners were “good features to track” (see our previous remarks concerning cv::goodFeaturesToTrack()) for precisely the reason that (ATA) had two large eigenvectors there! We’ll see shortly how all this computation is done for us by the cv::calcOpticalFlowPyrLK() function.

The reader who understands the implications of our assuming small and coherent motions will now be bothered by the fact that, for most video cameras running at 30 Hz, large and noncoherent motions are commonplace. In fact, Lucas-Kanade optical flow by itself does not work very well for exactly this reason: we want a large window to catch large motions, but a large window too often breaks the coherent motion assumption! To circumvent this problem, we can track first over larger spatial scales using an image pyramid and then refine the initial motion velocity assumptions by working our way down the levels of the image pyramid until we arrive at the raw image pixels.

Hence, the recommended technique is first to solve for optical flow at the top layer and then to use the resulting motion estimates as the starting point for the next layer down. We continue going down the pyramid in this manner until we reach the lowest level. Thus we minimize the violations of our motion assumptions and so can track faster and longer motions. This more elaborate function is known as pyramid Lucas-Kanade optical flow and is illustrated in Figure 16-9. The OpenCV function that implements Pyramid Lucas-Kanade optical flow is cv::calcOpticalFlowPyrLK(), which we examine next.

Figure 16-9. Pyramid Lucas-Kanade optical flow: running optical flow at the top of the pyramid first mitigates the problems caused by violating our assumptions of small and coherent motion; the motion estimate from the preceding level is taken as the starting point for estimating motion at the next layer down

Pyramid Lucas-Kanade code: cv::calcOpticalFlowPyrLK()

We come now to OpenCV’s algorithm that computes Lucas-Kanade optical flow in a pyramid, cv::calcOpticalFlowPyrLK(). As we will see, this optical flow function makes use of “good features to track” and also returns indications of how well the tracking of each point is proceeding.

void cv::calcOpticalFlowPyrLK(

cv::InputArray prevImg, // Prior image (t-1), CV_8UC1

cv::InputArray nextImg, // Next image (t), CV_8UC1

cv::InputArray prevPts, // Vector of 2d start points (CV_32F)

cv::InputOutputArray nextPts, // Results: 2d end points (CV_32F)

cv::OutputArray status, // For each point, found=1, else=0

cv::OutputArray err, // Error measure for found points

cv::Size winSize = Size(15,15), // size of search window

int maxLevel = 3, // Pyramid layers to add

cv::TermCriteria criteria = TermCriteria( // How to end search

cv::TermCriteria::COUNT | cv::TermCriteria::EPS,

30,

0.01

),

int flags = 0, // use guesses, and/or eigenvalues

double minEigThreshold = 1e-4 // for spatial gradient matrix

);

This function has a lot of inputs, so let’s take a moment to figure out what they all do. Once we have a handle on this routine, we can move on to the problem of which points to track and how to compute them. The basic plan is simple, however: you supply the images, list the points you want to track in prevPts, and call the routine. When the routine returns, you check the status array to see which points were successfully tracked and then check nextPts to find the new locations of those points. Now let’s proceed into the details.

The first two arguments of cv::calcOpticalFlowPyrLK(), prevImg and nextImg, are the initial and final images. Both should be the same size and have the same number of channels.7 The next two arguments, prevPts and nextPts, are the input list of features from the first image, and the output list to which matched points in the second image will be written. These can be either N × 2 arrays or vectors of points. The arrays status and err will be filled with information to tell you how successful the matching was. In particular, each entry in status will tell whether the corresponding feature in prevPts was found at all (status[i] will be nonzero if and only if prevPts[i] was found in nextImg). Similarly, err[i] will indicate an error measure for any point prevPts[i] that was found in nextImg (if point i was not found, then err[i] is not defined).

The window used for computing the local coherent motion is given by winSize. Because we are constructing an image pyramid, the argument maxLevel is used to set the depth of the stack of images. If maxLevel is set to 0, then the pyramids are not used. The argument criteria is used to tell the algorithm when to quit searching for matches; recall that cv::TermCriteria is the structure used by many OpenCV algorithms that iterate to a solution:

struct cv::TermCriteria(

public:

enum {

COUNT = 1,

MAX_ITER = COUNT,

EPS = 2

};

TermCriteria();

TermCriteria( int _type, int_maxCount, double _epsilon );

int type, // one of the enum types above

int max_iter,

double epsilon

);

The default values will be satisfactory for most situations. As is often the case, however, if your image is unusually large, you may want to slightly increase the maximum allowed number of iterations.

The argument flags can have one or both of the following values:

cv::OPTFLOW_LK_GET_MIN_EIGENVALS- Set this flag for a somewhat more detailed error measure. The default error measure for the

erroroutput is the average per-pixel change in intensity between the window around the previous corner and the window around the new corner. With this flag set totrue, that error is replaced with the minimum eigenvalue of the Harris matrix associated with the corner.8 cv::OPTFLOW_USE_INITIAL_FLOW- Use when the array

nextPtsalready contains an initial guess for the feature’s coordinates when the routine is called. (If this flag is not set, then the initial guesses will just be the point locations inprevPts.)

The final argument, minEigThreshold, is used as a filter for removing points that are, in fact, not such good choices to track after all. In effect, it is somewhat analogous to the qualityLevel argument to cv::goodFeaturesToTrack, except its exact method of computation is different. The default value of 10–4 is a good choice; it can be increased in order to throw away more points.

A worked example

Putting this all together, we now know how to find features that are good ones to track, and we know how to track them. We can obtain good results by using the combination of cv::goodFeaturesToTrack() and cv::calcOpticalFlowPyrLK(). Of course, you can also use your own criteria to determine which points to track.

Let’s now look at a simple example (Example 16-1) that uses both cv::goodFeaturesToTrack() and cv::calcOpticalFlowPyrLK(); see also Figure 16-10.

Example 16-1. Pyramid Lucas-Kanade optical flow code

// Pyramid L-K optical flow example

//

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace std;

static const int MAX_CORNERS = 1000;

void help( argv ) {

cout << "Call: " <<argv[0] <<" [image1] [image2]" << endl;

cout << "Demonstrates Pyramid Lucas-Kanade optical flow." << endl;

}

int main(int argc, char** argv) {

if( argc != 3 ) { help( char** argv ); exit( -1 ); }

// Initialize, load two images from the file system, and

// allocate the images and other structures we will need for

// results.

//

cv::Mat imgA = cv::imread(argv[1], cv::LOAD_IMAGE_GRAYSCALE);

cv::Mat imgB = cv::imread(argv[2], cv::LOAD_IMAGE_GRAYSCALE);

cv::Size img_sz = imgA.size();

int win_size = 10;

cv::Mat imgC = cv::imread(argv[2], cv::LOAD_IMAGE_UNCHANGED);

// The first thing we need to do is get the features

// we want to track.

//

vector< cv::Point2f > cornersA, cornersB;

cv::goodFeaturesToTrack(

imgA, // Image to track

cornersA, // Vector of detected corners (output)

MAX_CORNERS, // Keep up to this many corners

0.01, // Quality level (percent of maximum)

5, // Min distance between corners

cv::noArray(), // Mask

3, // Block size

false, // true: Harris, false: Shi-Tomasi

0.04 // method specific parameter

);

cv::cornerSubPix(

imgA, // Input image

cornersA, // Vector of corners (input and output)

cv::Size(win_size, win_size), // Half side length of search window

cv::Size(-1,-1), // Half side length of dead zone (-1=none)

cv::TermCriteria(

cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,

20, // Maximum number of iterations

0.03 // Minimum change per iteration

)

);

// Call the Lucas Kanade algorithm

//

vector<uchar> features_found;

cv::calcOpticalFlowPyrLK(

imgA, // Previous image

imgB, // Next image

cornersA, // Previous set of corners (from imgA)

cornersB, // Next set of corners (from imgB)

features_found, // Output vector, elements are 1 for tracked

cv::noArray(), // Output vector, lists errors (optional)

cv::Size( win_size*2+1, win_size*2+1 ), // Search window size

5, // Maximum pyramid level to construct

cv::TermCriteria(

cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,

20, // Maximum number of iterations

0.3 // Minimum change per iteration

)

);

// Now make some image of what we are looking at:

// Note that if you want to track cornersB further, i.e.

// pass them as input to the next calcOpticalFlowPyrLK,

// you would need to "compress" the vector, i.e., exclude points for which

// features_found[i] == false.

for( int i = 0; i < (int)cornersA.size(); i++ ) {

if( !features_found[i] )

continue;

line(imgC, cornersA[i], cornersB[i], Scalar(0,255,0), 2, cv::LINE_AA);

}

cv::imshow( "ImageA", imgA );

cv::imshow( "ImageB", imgB );

cv::imshow( "LK Optical Flow Example", imgC );

cv::waitKey(0);

return 0;

}

Generalized Keypoints and Descriptors

Two of the essential concepts that we will need to understand tracking, object detection, and a number of related topics are keypoints and descriptors. Our first task is to understand what these two things are and how they differ from one another.

At the highest level of abstraction, a keypoint is a small portion of an image that, for one reason or another, is unusually distinctive, and which we believe we might be able to locate in another related image. A descriptor is some mathematical construction, typically (but not always) a vector of floating-point values, which somehow describes an individual keypoint, and which can be used to determine whether—in some context—two keypoints are “the same.”9

Historically, one of the first important keypoint types was the Harris corner, which we encountered at the beginning of this chapter. Recall that the basic concept behind the Harris corner was that any point in an image that seemed to have a strong change in intensity along two different axes was a good candidate for matching another related image (for example, an image in a subsequent frame of a video stream).

Take a look at the images in Figure 16-10; the image is of text from a book.10 You can see that the Harris corners are found at the locations where lines that make up the individual text characters begin or end, or where there are intersections of lines (such as the middle of an h or b. You will notice that “corners” do not appear along the edges of long lines in the characters, only at the end. This is because a feature found on such an edge looks very much like any other feature found anywhere on that edge. It stands to reason that if something is not unique in the current image, it will not be unique in another image, so such pixels do not qualify as good features.

Figure 16-10. Two images containing text, with the Harris corners shown on the right as white circles; note that in the portion of the image that is slightly out of focus, no corners are detected at all

To our human eyes, each feature will look somewhat different than any other. The question of feature descriptors addresses the problem of how to have the computer make such associations. We mentioned that the three-way intersections that appear in an h or a b make good “features,” but how would the computer tell the difference between them? This is what feature descriptors do.

We could construct a feature descriptor any way we would like—for example, we might make a vector from the intensity values of the 3 × 3 area around the keypoint. A problem with such a prescription is that the descriptor can have different values if the keypoint is seen from even a slightly different angle. In general, rotational invariance11 is a desirable property for a feature descriptor. Of course, whether or not you need a rotationally invariant descriptor depends on your application. When detecting and tracking people, gravity plays a strong role in creating an asymmetric world in which people’s heads are usually at the top and their feet are usually at the bottom. In such applications, a descriptor lacking rotational symmetry may not be a problem. In contrast, aerial imagery of the ground “rotates” when the aircraft travels in a different direction, and so the imagery may appear in what seems to be a random orientation.

Optical Flow, Tracking, and Recognition

In the previous section, we discussed optical flow in the context of the Lucas Kanade algorithm. In that case, OpenCV provided us with a single high-level tool that would take a list of keypoints and try to locate the best matches for those points in a new frame. These points did not have a lot of structure or identity, just enough to make them locatable from one frame to the next in two very similar frames. Generalized keypoints can have associated descriptors that are very powerful and will allow those points to be matched not only in sequential frames of video, but even in completely different images. This can allow us to locate known objects in new environments, or to track an object through complex changing scenery, as well as many other applications.

The three major categories of tasks for which keypoints (and their descriptors) are useful are tracking, object recognition, and stereoscopy.

Tracking is the task of following the motion of something as the scene evolves in a sequential image stream. Tracking comes up in two major subcategories: the first is tracking objects in a stationary scene, and the other is tracking the scene itself for the purpose of estimating the motion of the camera. Usually, the term tracking alone refers to the former, and the latter is referred to as visual odometry. Of course, it is very common to want to do both of these things at once.

The second task category is object recognition. In this case, one is looking at a scene and attempting to recognize the presence of one or more known objects. The idea here is to associate certain keypoint descriptors with each object, with the reasoning that if you were to see enough keypoints associated with a particular object, you could reasonably conclude that this object was present in the scene.

Finally, there is stereoscopy. In this case, we are interested in locating corresponding points in two or more camera views of the same scene or object. Combining these locations with information about the camera locations and the optical properties of the cameras themselves, we can compute the location, in three dimensions, of the individual points we were able to match.

OpenCV provides methods for handling many types of keypoints and many kinds of keypoint descriptors. It also provides methods for matching them, either between pairs of frames (in the manner of sparse optical flow, tracking and visual odometry, or stereoscopy) or between a frame and a database of images (for object recognition).

How OpenCV Handles Keypoints and Descriptors, the General Case

When you are doing tracking, as well as many other kinds of analysis for which keypoints and their descriptors are useful, there are typically three things you would like to do. The first is to search an image and find all of the keypoints that are in that image, according to some keypoint definition. The second is to create a descriptor for every keypoint you found. The third is to compare those keypoints you found, by means of their descriptors, to some existing set of descriptors, and see whether you can find any matches. In tracking applications, this last step involves looking for features in one frame of a sequence and trying to match those with features in the previous frame. In object-detection applications, one is often searching for features in some (potentially vast) database of “known” features that are “known” to be associated with either individual objects or object classes.

For each of these layers, OpenCV provides a generalized mechanism that follows the “classes that do stuff” (functor) model. Thus, for each of these stages, there is an abstract base class that defines a common interface for a family of objects that are derived from it; each derived class implements a particular algorithm.

The cv::KeyPoint object

Of course, if we are going to find keypoints, we will need some way to represent them. Recall that the keypoint is not the feature descriptor, so most of what we need to know when we have a keypoint is where it is located. After that, there are some secondary features that some keypoints have and some do not. Here is the actual definition of the cv::KeyPoint class:

class cv::KeyPoint {

public:

cv::Point2f pt; // coordinates of the keypoint

float size; // diameter of the meaningful keypoint neighborhood

float angle; // computed orientation of the keypoint (-1 if none)

float response; // response for which the keypoints was selected

int octave; // octave (pyramid layer) keypoint was extracted from

int class_id; // object id, can be used to cluster keypoints by object

cv::KeyPoint(

cv::Point2f _pt,

float _size,

float _angle=-1,

float _response=0,

int _octave=0,

int _class_id=-1

);

cv::KeyPoint(

float x,

float y,

float _size,

float _angle=-1,

float _response=0,

int _octave=0,

int _class_id=-1

);

...

};

As you can see, every keypoint has a cv::Point2f member that just tells us where it is located.12 The concept of size tells us something about the region around the keypoint that either was somehow included in the determination that the keypoint existed in the first place, or is going to play a role in that keypoint’s descriptor. The angle of a keypoint is meaningful only for some keypoints. Many keypoints achieve rotational symmetry not by actually being invariant in the strictest sense, but by having some kind of natural orientation that you can take into account when comparing two descriptors. (This is not a complicated idea; if you were looking at two images of pencils, rotation obviously matters, but if you wanted to compare them, you could easily visualize them both in the same orientation before making the comparison.)

The response is used for detectors that can respond “more strongly” to one keypoint than another. In some cases, this can be interpreted as a probability that the feature is in fact present. The octave is used when the keypoint was found in an image pyramid. In these cases, it is important to know which scale a keypoint was found at because, in most cases, we would expect to find matches at the same or similar scale in new images. Finally, there is the class ID. You use class_id when constructing keypoint databases to distinguish the keypoints that are associated with one object from those that are associated with another (we will return to this point when we discuss the keypoint matching interface in the section “The (abstract) keypoint matching class: cv::DescriptorMatcher”).

The cv::KeyPoint object has two constructors, which are essentially the same; their only difference is that you can set the location of the keypoint either with two floating-point numbers or with a single cv::Point2f object. In fact, though, unless you are writing your own keypoint finder, you will not tend to use these functions. If you are using the detection, descriptor construction, and comparison functions available to you from the library, you will typically never even look inside the keypoint objects.

The (abstract) class that finds keypoints and/or computes descriptors for them: cv::Feature2D

For finding keypoints or computing descriptors (or performing both these tasks simultaneously), OpenCV provides the cv::Feature2D class. There are classes called cv::FeatureDetector and cv::DescriptorExtractor that used to be separate classes for pure feature detection or descriptor extraction algorithms, but since OpenCV 3.x they are all synonyms of cv::Feature2D. This abstract class has just a few methods, described next. Derived classes add some more methods to set and retrieve various properties, as well as static methods that construct the algorithm instance and return a smart pointer to it. There are two methods for actually asking the cv::Feature2D class to detect keypoints, functions that allow you to save and restore from disk, and a handy (static) function that allows you to create a feature detector–derived class by providing the name of the detector type (as a string). The two provided detection methods (as well as the two provided “compute” methods) differ only in that one operates on a single image, while the other operates on a set of images. There is some amount of efficiency to be gained by operating on many images at once (this is more true for some detectors than for others). Here is the relevant excerpt from the class description of cv::Feature2D:

class cv::Feature2D : public cv::Algorithm {

public:

virtual void detect(

cv::InputArray image, // Image on which to detect

vector< cv::KeyPoint >& keypoints, // Array of found keypoints

cv::InputArray mask = cv::noArray()

) const;

virtual void detect(

cv::InputArrayOfArrays images, // Images on which to detect

vector<vector< cv::KeyPoint > >& keypoints, // keypoints for each image

cv::InputArrayOfArrays masks = cv::noArray ()

) const;

virtual void compute(

cv::InputArray image, // Image where keypoints are located

std::vector<cv::KeyPoint>& keypoints, // input/output vector of keypoints

cv::OutputArray descriptors ); // computed descriptors, M x N matrix,

// where M is the number of keypoints

// and N is the descriptor size

virtual void compute(

cv::InputArrayOfArrays image, // Images where keypoints are located

std::vector<std::vector<cv::KeyPoint> >& keypoints, //I/O vec of keypnts

cv::OutputArrayOfArrays descriptors ); // computed descriptors,

// vector of (Mi x N) matrices, where

// Mi is the number of keypoints in

// the i-th image and N is the

// descriptor size

virtual void detectAndCompute(

cv::InputArray image, // Image on which to detect

cv::InputArray mask, // Optional region of interest mask

std::vector<cv::KeyPoint>& keypoints, // found or provided keypoints

cv::OutputArray descriptors, // computed descriptors

bool useProvidedKeypoints=false ); // if true,

// the provided keypoints are used,

// otherwise they are detected

virtual int descriptorSize() const; // size of each descriptor in elements

virtual int descriptorType() const; // type of descriptor elements

virtual int defaultNorm() const; // the recommended norm to be used

// for comparing descriptors.

// Usually, it's NORM_HAMMING for

// binary descriptors and NORM_L2

// for all others.

virtual void read( const cv::FileNode& );

virtual void write( cv::FileStorage& ) const;

...

};

The actual implementation may implement just cv::Feature2D::detect(), if it’s a pure keypoint detection algorithm (like FAST); just cv::Feature2D::compute(), if it’s a pure feature description algorithm (like FREAK); or cv::Feature2D::detectAndCompute() in the case of “complete solution” algorithm, like SIFT, SURF, ORB, BRISK, and so on, in which case detect() and compute() will call it implicitly:

-

detect(image, keypoints, mask) ~ detectAndCompute(image, mask, keypoints, noArray(), false) -

compute(image, keypoints, descriptors) ~ detectAndCompute(image, noArray(), keypoints, descriptors, true)

cv::Feature2D::detect() methods are the ones that do the basic work of finding keypoints for you—directly or through a call to detectAndCompute(). The first takes an image, a vector of keypoints, and an optional mask. It then searches the image (or the portion corresponding to the mask, if you provided one) for keypoints, and places whatever it finds in the vector you provided. The second variant does exactly the same thing, except that it expects a vector of images, a vector of masks (or none at all), and a vector of vectors of keypoints. The number of images, the number of masks (if not zero), and the number of keypoint vectors must all be equal. Then every image will be searched, and the corresponding keypoint vector in keypoints will be filled with the found keypoints.

The actual method used to find the keypoints is, of course, different for each of the many available derived classes from cv::Feature2D. We will get to the details of these shortly. In the meantime, it is important to keep in mind that the actual keypoints detected may be different (if and how some of the internal parameters are used) from one detector to another. This will mean that keypoints detected by one method may not be universally usable by every kind of feature descriptor.

Once you have found your keypoints, the next thing to do is to compute descriptors for them. As was mentioned earlier, these descriptors will allow you to compare keypoints to one another (based on their appearance, as opposed to by their location). This capability can then serve as the basis for tracking or object recognition.

The descriptors are computed by the compute() methods (that may also use detectAndCompute()). The first compute() method requires an image, a list of keypoints (presumably produced by the detect() method of the same class, but possibly a different one), and the output cv::Mat (passed as cv::OutputArray) that is used by compute() as a place to put the computed features. All descriptors generated by objects derived from cv::Feature2D are representable as vectors of fixed length. As a result, it’s possible to arrange them all into an array. The convention used is that each row of descriptors is a separate descriptor, and the number of such rows is equal to the number of elements in keypoints.

The second compute() method is used when you have several images you would like to process at once. In this case the images argument expects an STL vector containing all the images you would like to process. The keypoints argument expects a vector containing vectors of keypoints, and the descriptors argument expects a vector containing arrays that can be used to store all of the resulting descriptors. This method is a companion to the multiple-image detect() method and expects the images as you would have given them to that detect() method, and the keypoints as you would have received them from that method.

Depending on when the algorithm was implemented, many of the keypoint feature detectors and extractors are bundled together into a single object. In this case the method cv::Feature2D::detectAndCompute() is implemented. Whenever a certain algorithm provides detectAndCompute(), it’s strongly recommended to use it instead of subsequent calls to detect() and then to compute(). The obvious reason for that is a better performance: normally such algorithms require special image representation (called a scale-space representation), and it may be quite expensive to compute. When you find keypoints and compute descriptors in two separate steps, the scale-space representation is basically computed twice.

In addition to the main compute methods, there are the methods descriptorSize(), descriptorType(), and defaultNorm(). The first of these, descriptorSize(), tells us the length of the vector representation of the descriptor,13 The descriptorType() method returns information about the specific type of the elements of the vector descriptor (e.g., CV_32FC1 for 32-bit floating-point numbers, CV_8UC1 for 8-bit descriptors or binary descriptors, etc.).14 The defaultNorm() basically tells you how to compare the descriptors. In the case of binary descriptors, it’s NORM_HAMMING, and you would rather use it. In the case of descriptors like SIFT or SURF, it’s NORM_L2 (e.g., Euclidean distance), but you can obtain equally good or even better results by using NORM_L1.

The cv::DMatch object

Before we get deep into the topic of how matchers work, we need to know how matches are expressed. In general, a matcher will be an object that tries to match keypoints in one image with either a single other image or a collection of other images called a dictionary. When matches are found, OpenCV describes them by generating lists (STL vectors) of cv::DMatch objects. Here is the class definition for the cv::DMatch object:

class cv::DMatch {

public:

DMatch(); // sets this->distance

// to std::numeric_limits<float>::max()

DMatch( int _queryIdx, int _trainIdx, float _distance );

DMatch( int _queryIdx, int _trainIdx, int _imgIdx, float _distance );

int queryIdx; // query descriptor index

int trainIdx; // train descriptor index

int imgIdx; // train image index

float distance;

bool operator<( const DMatch &m ) const; // Comparison operator

// based on 'distance'

}

The data members of cv::DMatch are queryIdx, trainIdx, imgIdx, and distance. The first two identify the keypoints that were matched relative to the keypoint lists in each image. The convention used by the library is to always refer to these two images as the query image (the “new” image) and the training image (the “old” image), respectively.15 imgIdx is used to identify the particular image from which the training image came in such cases that a match was sought between an image and a dictionary. The last member, distance, is used to indicate the quality of the match. In many cases, this is something like a Euclidean distance between the two keypoints in the many-dimensional vector space in which they live. Though this is not always the metric, it is guaranteed that if two different matches have different distance values, the one with the lower distance is the better match. To facilitate such comparisons (particularly for the purpose of sorting), the operator cv::DMatch::operator<() is defined, which allows two cv::DMatch objects to be compared directly with the meaning that it is their distance members that are actually compared.

The (abstract) keypoint matching class: cv::DescriptorMatcher

The third stage in the process of detect-describe-match is also implemented through a family of objects that are all derived from a common abstract base class whose interface they all share. This third layer is based on the cv::DescriptorMatcher class.

Before we get deep into how the interface works, it is important to understand that there are two basic situations in which you would want to use a matcher: object recognition and tracking, as described earlier in the chapter. In the object recognition case, one first compiles keypoints associated with a variety of objects into a kind of database called a dictionary. Thereafter, when a new scene is presented, the keypoints in that image are extracted and compared to the dictionary to estimate what objects from the dictionary might be present in the new scene. In the tracking case, the goal is to find all the keypoints in some image, typically from a video stream, and then to look for all of those keypoints in another image, typically the prior or next image in that video stream.

Because there are these two different situations, the matching methods in the class have two corresponding variations. For the object recognition case, we need to first train the matcher with a dictionary of descriptors, and then be able to present a single descriptor list to the matcher and have it tell us which (if any) keypoints that the matcher has stored are matches with the ones in the list we provided. For the tracking case, we would like to provide two lists of descriptors and have the matcher tell us where the matches are between them. The cv:DescriptorMatcher class interface provides three functions, match(), knnMatch(), and radiusMatch(); and for each, there are two different prototypes—one for recognition (takes one list of features and uses the trained dictionary) and another for tracking (takes two lists of features).

Here is the part of the class definition relevant to us for the generic descriptor matcher base class:

class cv::DescriptorMatcher {

public:

virtual void add( InputArrayOfArrays descriptors ); // Add train descriptors

virtual void clear(); // Clear train descriptors

virtual bool empty() const; // true if no descriptors

void train(); // Train matcher

virtual bool isMaskSupported() const = 0; // true if supports masks

const vector<cv::Mat>& getTrainDescriptors() const; // Get train descriptors

// methods to match descriptors from one list vs. "trained" set (recognition)

//

void match(

InputArray queryDescriptors,

vector<cv::DMatch>& matches,

InputArrayOfArrays masks = noArray ()

);

void knnMatch(

InputArray queryDescriptors,

vector< vector<cv::DMatch> >& matches,

int k,

InputArrayOfArrays masks = noArray (),

bool compactResult = false

);

void radiusMatch(

InputArray queryDescriptors,

vector< vector<cv::DMatch> >& matches,

float maxDistance,

InputArrayOfArrays masks = noArray (),

bool compactResult = false

);

// methods to match descriptors from two lists (tracking)

//

// Find one best match for each query descriptor

void match(

InputArray queryDescriptors,

InputArray trainDescriptors,

vector<cv::DMatch>& matches,

InputArray mask = noArray ()

) const;

// Find k best matches for each query descriptor (in increasing

// order of distances)

void knnMatch(

InputArray queryDescriptors,

InputArray trainDescriptors,

vector< vector<cv::DMatch> >& matches,

int k,

InputArray mask = noArray(),

bool compactResult = false

) const;

// Find best matches for each query descriptor with distance less

// than maxDistance

void radiusMatch(

InputArray queryDescriptors,

InputArray trainDescriptors,

vector< vector<cv::DMatch> >& matches,

float maxDistance,

InputArray mask = noArray (),

bool compactResult = false

) const;

virtual void read( const FileNode& ); // Reads matcher from a file node

virtual void write( FileStorage& ) const; // Writes matcher to a file storage

virtual cv::Ptr<cv::DescriptorMatcher> clone(

bool emptyTrainData=false

) const = 0;

static cv::Ptr<cv::DescriptorMatcher> create(

const string& descriptorMatcherType

);

...

};

The first set of methods is used to match an image against prestored set of descriptors, one array per image. The purpose is to build up a keypoint dictionary that can be referenced when novel keypoints are provided. The first method is the add() method, which expects an STL vector of sets of descriptors, each of which is in the form of a cv::Mat object. Each cv::Mat object should have N rows and D columns, where N is the number of descriptors in the set, and D is the dimensionality of each descriptor (i.e., each “row” is a separate descriptor of dimension D). The reason that add() accepts an array of arrays (which is usually represented as std::vector<cv::Mat>) is that in practice, one often computes a set of descriptors from each image in a set of images.16 That set of images was probably presented to a cv::Feature2D-based class as a vector of images, and returned as a vector of sets of keypoint descriptors.

Once you have added some number of keypoint descriptor sets, if you would like to access them, you may do so with the (constant) methods getTrainDescriptors(), which will return the descriptors to you in the same way you first provided them (i.e., as a vector of descriptor sets, each of type cv::Mat, with each row of each cv::Mat being a single descriptor). If you would like to clear the added descriptors, you may do so with the clear() method, and if you would like to test whether a matcher has descriptors stored in it, you may do so with the empty() method.

Once you have loaded all of the keypoint descriptors you would like to load, you may need to call train(). Only some implementations require the train() operation (i.e., some classes derived from cv::DescriptorMatcher). This method’s purpose is to tell the matcher that you are done loading images, and that it can proceed to precompute any internal information that it will need in order to perform matches on the provided keypoints. By way of example, if a matcher performs matches using only the Euclidean distance between a provided new keypoint and those in the existing dictionary, it would be prudent to construct a quad-tree or similar data structure to greatly accelerate the task of finding the closest dictionary keypoint to the provided keypoint. Such data structures can require substantial effort to compute, and are computed only once after all of the dictionary keypoints are loaded. The train() method tells the matcher to take the time to compute these sorts of adjunct internal data structures. Typically, if a train() method is provided, you must call it before calling any matching method that uses the internal dictionary.

The next set of methods is the set of matching methods used in object recognition. They each take a list of descriptors, called a query list, which they compare with the descriptors in the trained dictionary. Within this set, there are three methods: match(), knnMatch(), and radiusMatch(). Each of these methods computes matches in a slightly different way.

The match() method expects a single list of keypoint descriptors, queryDescriptors, in the usual cv::Mat form. In this case, recall that each row represents a single descriptor, and each column is one dimension of that descriptor’s vector representation. match() also expects an STL vector of cv::DMatch objects that it can fill with the individual detected matches. In the case of the match() method, each keypoint on the query list will be matched to the “best match” from the train list.

The match() method also supports an optional mask argument. Unlike most mask arguments in OpenCV, this mask does not operate in the space of pixels, but rather in the space of descriptors. The type of mask, however, should still be CV_8U. The mask argument is an STL-vector of cv::Mat objects. Each entire matrix in that vector corresponds to one of the training images in the dictionary.17 Each row in a particular mask corresponds to a row in queryDescriptors (i.e., one descriptor). Each column in the mask corresponds to one descriptor associated with the dictionary image. Thus, masks[k].at<uchar>(i,j) should be nonzero if descriptor j from image (object) k should be compared with descriptor i from the query image.

The next method is the knnMatch() function, which expects the same list descriptors as match(). In this case, however, for each descriptor in the query list, it will find a specific number of best matches from the dictionary. That number is given by the k integer argument. (The “knn” in the function name stands for k-nearest neighbors.) The vector of cv::DMatch objects from match() is replaced by a vector of vectors of cv::DMatch objects called matches in the knnMatch() method. Each element of the top-level vector (e.g., matches[i]) is associated with one descriptor from queryDescriptors. For each such element, the next-level element (e.g., matches[i][j]) is the jth best match from the descriptors in trainDescriptors.18 The mask argument to knnMatch() has the same meaning as for match(). The final argument for knnMatch() is the Boolean compactResult. If compactResult is set to the default value of false, the matches vector of vectors will contain one vector entry for every entry in queryDescriptors—even those entries that have no matches (for which the corresponding vector of cv::DMatch objects is empty). If, however, compactResult is set to true, then such noninformative entries will simply be removed from matches.

The third matching method is radiusMatch(). Unlike k-nearest neighbor matching, which searches for the k best matches, radius matching returns all of the matches within a particular distance of the query descriptor.19 Other than the substitution of the integer k for the maximum distance maxDistance, the arguments and their meanings for radiusMatch() are the same as those for knnMatch().

Note

Don’t forget that in the case of matching, “best” is determined by the individually derived class that implements the cv::DescriptorMatcher interface, so the exact meaning of “best” may vary from matcher to matcher. Also, keep in mind that there is typically no “optimal assignment” being done, so one descriptor on the query list could match several on the train list, or vice versa.

The next three methods—the alternate forms of match(), knnMatch(), and radiusMatch()—support two lists of descriptors. These are typically used for tracking. Each of these has the same inputs as their aforementioned counterparts, with the addition of the trainDescriptors argument. These methods ignore any descriptors in the internal dictionary, and instead compare the descriptors in the queryDescriptors list only with the provided trainDescriptors.20

After these six matching methods, there are some methods that you will need for general handling of matcher objects. The read() and write() methods require a cv::FileNode and cv::FileStorage object, respectively, and allow you to read and write a matcher from or to disk. This is particularly important when you are dealing with recognition problems in which you have “trained” the matcher by loading information in from what might be a very large database of files. This saves you from needing to keep the actual images around and reconstruct the keypoints and their descriptors from every image every time you run your code.

Finally, the clone() and create() methods allow you to make a copy of a matcher or create a new one by name, respectively. The first method, clone(), takes a single Boolean, emptyTrainData, which, if true, will create a copy with the same parameter values (for any parameters accepted by the particular matcher implementation) but without copying the internal dictionary. Setting emptyTrainData to false is essentially a deep copy, which copies the dictionary in addition to the parameters. The create() method is a static method, which will accept a single string from which a particular derived class can be constructed. The currently available values for the descriptorMatcherType argument to create() are given in Table 16-1. (The meaning of the individual cases will be described in the next section.)

| descriptorMatcherType string | Matcher type |

|---|---|

"FlannBased" |

FLANN (Fast Library for Approximate Nearest Neighbors) method; L2 norm will be used by default |

"BruteForce" |

Element-wise direct comparison using L2 norm |

"BruteForce-SL2" |

Element-wise direct comparison using squared L2 norm |

"BruteForce-L1" |

Element-wise direct comparison using L1 norm |

"BruteForce-Hamming" |

Element-wise direct comparison using Hamming distancea |

"BruteForce-Hamming(2)" |

Element-wise direct comparison using Multilevel Hamming distance (two levels) |

a All the Hamming distance methods can be applied only to binary descriptors that are encoded with the | |

Core Keypoint Detection Methods

In the last 10 years, there has been tremendous progress in tracking and image recognition. Within this space, one very important theme has been the development of keypoints that, as you now know, are small fragments of an image that contain the highest density of information about the image and its contents. One of the most important features of the keypoint concept is that it allows an image to be “digested” into a finite number of essential elements, even as the resolution of the image becomes very high. In this sense, keypoints offer a way to get from an image in a potentially very high dimensionality pixel representation into a more compact representation whose quality increases with image size, but whose actual size does not. It is thought that the human visual cortex “chunks” individual retinal responses (essentially pixels) up into higher-level blocks of information, at least some of which are analogous to the kind of information contained in a keypoint.

Early work that focused on concepts like corner detection (which we saw earlier) gave way to increasingly sophisticated keypoints with increasingly expressive descriptors (the latter we will visit in the next section), which exhibit a variety of desirable characteristics—such as rotational or scale invariance, or invariance to small affine transformations—that were not present in the earlier keypoint detectors.

The current state of the art, however, is that there is a large number of keypoint-detection algorithms (and keypoint descriptors), none of which is “clearly better” than the others. As a result, the approach of the OpenCV library has been to provide a common interface to all of the detectors, with the hope of encouraging and facilitating experimentation and exploration of their relative merits within your individual context. Some are fast, and some are comparatively quite slow. Some find features for which very rich descriptors can be extracted, and some do not. Some exhibit one or more useful invariance properties, of which some might be quite necessary in your application, and some might actually work against you.

In this section, we will look at each keypoint detector in turn, discussing its relative merits and delving into the actual science of each detector, at least deeply enough that you will get a feel for what each one is for and what it offers that may be different than the others. As we have learned, for each descriptor type, there will be a detector that locates the keypoints and a descriptor extractor. We will cover each of these as we discuss each detection algorithm.

Note

In general, it is not absolutely necessary to use the feature extractor that is historically associated with a particular keypoint detector. In most cases, it is meaningful to find keypoints using any detector and then proceed to characterize those keypoints with any feature extractor. In practice, however, these two layers are usually developed and published together, and so the OpenCV library uses this pattern as well.

The Harris-Shi-Tomasi feature detector and cv::GoodFeaturesToTrackDetector

The most commonly used definition of a corner was provided by Harris [Harris88], though others proposed similar definitions even earlier. Such corners, known as Harris corners, can be thought of as the prototypical keypoint.21 Figure 16-11 shows the Harris corners on a pair of images that we will continue to use for other keypoints in this section (for convenient visual comparison). Their definition relies on a notion of autocorrelation between the pixels in a small neighborhood. In plain terms, this means “if the image is shifted a small amount (Δx, Δy), how similar is it to its original self?”

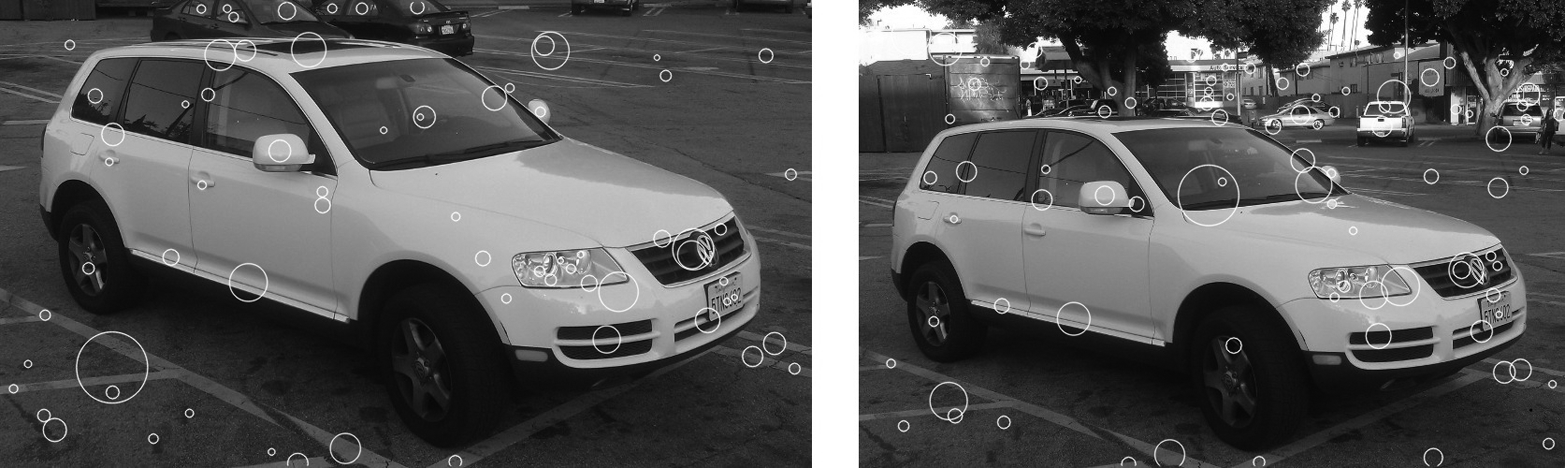

Figure 16-11. Two images of the same vehicle; in each image are the 1,000 strongest Harris-Shi-Tomasi corners. Notice that, in the image on the right, the corners in the background are stronger than those on the car, and so most of the corners in that image originate from there

Harris began with the following autocorrelation function, for image intensity pixels I(x, y):

This is just a weighted sum over a small window around a point (x,y) of the squared difference between the image at some point (i,j) in the window and some other point displaced by (Δx, Δy). (The weighting factor wi, j is a Gaussian weighting that makes the differences near the center of the window contribute more strongly than those farther away from the center.)

What follows in Harris’s derivation is a small amount of algebra, and the approximation that because Δx and Δy are assumed to be small, the term I(i + Δx, j + Δy) can be estimated by I(i, j) + Ix (i, j)Δx + Iy (i, j)Δy) (here Ix and Iy are the first-order partial derivatives of I(x,y) in x and y, respectively).22 The result is the re-expression of the autocorrelation in the form:

Where M(x, y) is the symmetric autocorrelation matrix defined by:

Corners, by Harris’s definition, are places in the image where the autocorrelation matrix has two large eigenvalues. In essence, this means that moving a small distance in any direction will change the image.23 This way of looking at things has the advantage that, when we consider only the eigenvalues of the autocorrelation matrix, we are considering quantities that are invariant also to rotation, which is important because objects that we are tracking might rotate as well as translate.

Note

In this case these two eigenvalues of the Harris corner do more than determine whether a point is a good feature to track (i.e., a keypoint); they also provide an identifying signature for the point (i.e., a keypoint descriptor). It is a common, though by no means universal, feature of keypoints that they are intimately tied to their descriptors in this way. In many cases, the keypoint is, in essence, any point for which the associated descriptor (in this case the two eigenvalues of M(x, y)) meets some threshold criteria. At the same time, it is also noteworthy that Harris’s original threshold criterion was not the same as that later proposed by Shi and Tomasi; the latter turns out to be superior for most tracking applications.

Harris’s original definition involved taking the determinant of M(x, y) and subtracting its squared trace (with some weighting coefficient):

One then found the “corners” (what we now call keypoints) by searching for local maxima of this function (and often also comparing this function to a predetermined threshold). This function H, known as the Harris measure, effectively compares the eigenvalues of M (which we refer to as λ1 and λ2 in our definition of H) without requiring their explicit computation. This comparison implicitly contains the parameter κ, termed the sensitivity, which can be set meaningfully to any value between 0 and 0.24, but is typically set to about 0.04.24 Figure 16-12 shows an image in which the regions around some individual keypoint candidates are shown enlarged.

Figure 16-12. In a classic image (a), keypoints found by the Shi-Tomasi method are shown as black dots. Below that are three images that are enlargements of a small subsection of the original. On the left (b) are shown (as Xs) points that are not keypoints. These points have small eigenvalues in both dimensions. In the center (c) are shown (as Xs) points that are also not keypoints; these are edges, and have one small eigenvalue and one large eigenvalue associated with them. On the right (d) are actual found keypoints; for these points, both eigenvalues are large. The ovals visualize the inverse of these eigenvalues

It was later found by Shi and Tomasi [Shi94] that good corners resulted as long as the smaller of the two eigenvalues was greater than a minimum threshold. Shi and Tomasi’s method was not only sufficient, but in many cases gave more satisfactory results than Harris’s method. The OpenCV implementation of cv::GFTTDetector, as a default, uses Shi and Tomasi’s measure, but other keypoint detectors we will discuss later often use either Harris’s original measure or a variation of it.

Keypoint finder

The Harris-Shi-Tomasi corner detector is also the simplest implementation of the cv::Feature2D (the detector part) interface:

class cv::GFTTDetector : public cv::Feature2D {

public:

static Ptr<GFTTDetector> create(

int maxCorners = 1000, // Keep this many corners

double qualityLevel = 0.01, // fraction of largest eigenvalue

double minDistance = 1, // Discard corners if this close

int blockSize = 3, // Neighborhood used

bool useHarrisDetector = false, // If false, use Shi Tomasi

double k = 0.04 // Used for Harris metric

);

...

};

The constructor for cv::GFTTDetector takes arguments that set all of the basic runtime parameters for the algorithm. The maxCorners parameter indicates the maximum number of points that you would like returned.25 The parameter qualityLevel indicates the minimal acceptable lower eigenvalue for a point to be included as a corner. The actual minimal eigenvalue used for the cutoff is the product of the qualityLevel and the largest lower eigenvalue observed in the image. Hence, the qualityLevel should not exceed 1 (a typical value might be 0.10 or 0.01). Once these candidates are selected, a further culling is applied so that multiple points within a small region need not be included in the response. In particular, the minDistance guarantees that no two returned points are within the indicated number of pixels.

The blockSize is the region around a given pixel that is considered when you are computing the autocorrelation matrix of derivatives. It turns out that in almost all cases you will get superior results if you sum these derivatives over a small window than if you simply compute their value at only a single point (i.e., at a blockSize of 1).

If useHarris is true, then the Harris corner definition is used rather than the Shi-Tomasi definition, and the value k is the weighting coefficient used to set the relative weight given to the trace of the autocorrelation matrix Hessian compared to the determinant of the same matrix.

Of course, when you want to actually compute keypoints, you do that with the detect() method, which cv::GFTTDetector inherits from the cv::Feature2D base class.

Additional functions