Chapter 21. StatModel: The Standard Model for Learning in OpenCV

In the previous chapter we discussed machine learning broadly and looked at just a few basic algorithms that were implemented in the library long ago. In this chapter we will look at several more modern techniques that will prove to be of very wide application. Before we start on those, however, we will introduce cv::ml::StatModel, which forms the basis of the implementation for the interfaces to all of the more advanced algorithms we will see in this chapter. Once armed with an understanding of cv::ml::StatModel, we will spend the remainder of the chapter looking at various learning algorithms available in the OpenCV library. The algorithms are presented here in an approximately chronological order relative to their introduction into the computer vision community.1

Common Routines in the ML Library

The contemporary routines in the ML library are implemented within classes that are derived from the common base class cv::ml::StatModel. This base class defines the interface methods that are universal to all of the available algorithms. Some of the methods are declared in the base class cv::Algorithm, from which cv::ml::StatModel itself is derived. Here is the (somewhat abbreviated) cv::ml::StatModel base class definition straight from the machine learning (ML) library:

// Somewhere above...
// namespace cv {
// namespace ml {

class StatModel : public cv::Algorithm {

public:

  /** Predict options */
  enum Flags {
    UPDATE_MODEL       = 1,
    RAW_OUTPUT         = 1,
    COMPRESSED_INPUT   = 2,
    PREPROCESSED_INPUT = 4
  };

  virtual int  getVarCount() const = 0;  // number of variables (features) in training samples
  virtual bool empty() const;            // true if no data loaded
  virtual bool isTrained() const = 0;    // true if the model is trained
  virtual bool isClassifier() const = 0; // true if the model is a classifier

  virtual bool train(
    const cv::Ptr<cv::ml::TrainData>& trainData, // training data
    int                               flags = 0  // (depends on model)
  );

  // Trains the statistical model
  //
  virtual bool train(
    InputArray samples,   // training samples
    int        layout,    // layout: see ml::SampleTypes
    InputArray responses  // responses associated with the training samples
  );

  // Predicts response(s) for the provided sample(s)
  //
  virtual float predict(
    InputArray  samples,                 // input samples, float matrix
    OutputArray results = cv::noArray(), // optional output results matrix
    int         flags   = 0              // (model-dependent)
  ) const = 0;

  // Computes error on the training or test dataset
  //
  virtual float calcError(
    const Ptr<TrainData>& data, // training samples
    bool                  test, // true: compute over test set
                                // false: compute over training set
    cv::OutputArray       resp  // the optional output responses
  ) const;

  // In addition, each class must implement static `create()` method with no
  // parameters or with all default parameter values.
  //
  // example:
  // static Ptr<SVM> SVM::create();
};

You will notice that cv::ml::StatModel inherits from cv::Algorithm. Though we will not include everything from that class here, these are a few salient methods that are likely to come up often in the context of cv::ml::StatModel and your usage of it:

// Somewhere above
// namespace cv {

class Algorithm {
  ...
public:

  virtual void save(
    const String& filename
  ) const;

  // Calling example: Ptr<SVM> svm = Algorithm::load<SVM>("my_svm_model.xml");
  //
  template<typename _Tp> static Ptr<_Tp> load(
    const String& filename,
    const String& objname = String()
  );

  virtual void clear();
  ...
};

These methods of cv::Algorithm, which cv::ml::StatModel inherits, provide mechanisms for reading and writing trained models from and to disk, and a method for clearing the data in the model. These three actions are essentially universal.2 On the other hand, the routines for training the algorithms and for applying them for prediction vary in interface from algorithm to algorithm. This is natural because the training and prediction aspects of the different algorithms have different capabilities and, at the very least, require different parameters to be configured.

Training and the cv::ml::TrainData Structure

The training and prediction methods shown in the cv::ml::StatModel prototype will naturally vary from one learning technique to the next. In this section we will look at how those methods are structured and how they are used.

Recall that there were two methods called train() in the cv::ml::StatModel prototype. The first train() method takes the training data in the form of a cv::ml::TrainData structure pointer and various algorithm-dependent training flags. The second method is a shortcut variant that constructs that same training data structure using the provided samples and the ground-truth responses directly. As we will see, the cv::ml::TrainData interface allows us to prepare the data in some useful ways, so it is generally the more expressive way of training a model.

Constructing cv::ml::TrainData

The cv::ml::TrainData class allows you to package up your data, along with some instructions about how it is to be interpreted and used in training. In practice, this additional information is extremely useful. Here is the create() method used to generate a new cv::ml::TrainData object:

// Construct training data from the specified matrix of data points and responses.
// It's possible to use a subset of features (a.k.a. variables) and/or subset of
// samples; it's possible to assign weights to individual samples.
//
static cv::Ptr<cv::ml::TrainData> cv::ml::TrainData::create(
  cv::InputArray samples,                       // Array of samples (CV_32F)
  int            layout,                        // row/col (see ml::SampleTypes)
  cv::InputArray responses,                     // Array of responses (CV_32F or CV_32S)
  cv::InputArray varIdx        = cv::noArray(), // Specifies training variables
  cv::InputArray sampleIdx     = cv::noArray(), // Specifies training samples
  cv::InputArray sampleWeights = cv::noArray(), // Optional sample wts (CV_32F)
  cv::InputArray varType       = cv::noArray()  // Optional, types for each
                                                // input and output
                                                // variable (CV_8U)
);

This method constructs training data from the preallocated arrays of training samples and the associated responses. The matrix of samples must be of type CV_32FC1 (32-bit, floating-point, and single-channel). Though the cv::Mat class is clearly capable of representing multichannel images, the machine learning algorithms take only a single channel—that is, just a two-dimensional array of numbers. Typically, this array is organized as rows of data points, where each “point” is represented as a vector of features. Hence, the columns contain the individual features for each data point and the data points are stacked to yield the 2D single-channel training matrix. To belabor the topic: the typical data matrix is thus composed of (rows, columns) = (data points, features).

Some of the algorithms can handle transposed matrices directly. The parameter layout specifies how the data is stored:

layout = cv::ml::ROW_SAMPLE: means that the feature vectors are stored as rows (this is the most common layout)

layout = cv::ml::COL_SAMPLE: means that the feature vectors are stored as columns
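To make the two layouts concrete, here is a small plain C++ sketch that converts a dense row-major buffer from COL_SAMPLE layout to ROW_SAMPLE layout. The helper colSampleToRowSample() is hypothetical, not an OpenCV function; in practice you would simply pass the appropriate layout flag, or transpose a cv::Mat with cv::transpose(). The sketch only shows which element moves where.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of OpenCV): converts a dense, row-major
// matrix stored in COL_SAMPLE layout (one feature vector per column, so
// nFeatures rows by nSamples columns) into ROW_SAMPLE layout
// (nSamples rows by nFeatures columns).
std::vector<float> colSampleToRowSample(const std::vector<float>& colData,
                                        std::size_t nFeatures,
                                        std::size_t nSamples) {
    assert(colData.size() == nFeatures * nSamples);
    std::vector<float> rowData(nSamples * nFeatures);
    for (std::size_t f = 0; f < nFeatures; ++f) {
        for (std::size_t s = 0; s < nSamples; ++s) {
            // Element (f, s) of the input becomes element (s, f) of the output.
            rowData[s * nFeatures + f] = colData[f * nSamples + s];
        }
    }
    return rowData;
}
```

For example, two features measured on three samples, stored as {1, 2, 3, 10, 20, 30} in column layout, become {1, 10, 2, 20, 3, 30} in row layout: each row now holds one complete feature vector.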

You may well ask: What if my training data is not floating-point numbers but instead is letters of the alphabet or integers representing musical notes or names of plants? The answer is: Fine, just turn them into unique 32-bit floating-point numbers when you fill the cv::Mat. If you have letters as features or labels, you can cast the ASCII character to floats when filling the data array. The same applies to integers. As long as the conversion is unique, things should work out fine. Remember, however, that some routines are sensitive to widely differing variances among features. It’s generally best to normalize the variance of features, as we saw in the previous section. With the exception of the tree-based algorithms (decision trees, random trees, and boosting) that support both categorical and ordered input variables, all other OpenCV ML algorithms work only with ordered inputs. A popular technique for making ordered-input algorithms work with categorical data is to represent them in “1-radix” or “1-hot” notation; for example, if the input variable color may have seven different values, then it may be replaced by seven binary variables, where one and only one of the variables may be set to 1.3
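As an illustration of the 1-hot idea, the following plain C++ sketch expands integer category codes into rows of binary floating-point columns, the shape a ROW_SAMPLE training matrix of type CV_32FC1 would take. The function oneHotEncode() is hypothetical and not part of OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper (not part of OpenCV): expands one categorical
// variable, given as integer codes 0..numCategories-1, into "1-hot"
// columns. The result is a row-major float matrix with one row per
// sample and numCategories columns.
std::vector<float> oneHotEncode(const std::vector<int>& codes, int numCategories) {
    const std::size_t width = static_cast<std::size_t>(numCategories);
    std::vector<float> out(codes.size() * width, 0.0f);
    for (std::size_t row = 0; row < codes.size(); ++row) {
        const int c = codes[row];
        if (c < 0 || c >= numCategories)
            throw std::out_of_range("category code outside [0, numCategories)");
        // Exactly one of the numCategories entries in this row is set to 1.
        out[row * width + static_cast<std::size_t>(c)] = 1.0f;
    }
    return out;
}
```

For the seven-valued color variable, you would call oneHotEncode(codes, 7) and place the seven resulting columns alongside the other (ordered) features.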

The responses parameter will contain either categorical labels, such as poisonous or nonpoisonous in the case of mushroom identification, or regression values (numbers), such as body temperatures taken with a thermometer. The response values, or “labels,” are usually a one-dimensional vector with one value per data point. One important exception is neural networks, which can have a vector of responses for each data point. For categorical responses, the response value type must be an integer (CV_32SC1); for regression problems, the response should be of 32-bit floating-point type (CV_32FC1). In the special case of neural networks, as alluded to before, it is common to put a little twist on this, and actually perform categorization using a regression framework. Here, the 1-hot encoding mentioned earlier is used to represent the various categories, and floating-point output is used for all of the multiple outputs. In effect, the network is being trained to regress to something like the probability that the input is in each category.

Recall, however, that some algorithms can deal only with classification problems and others only with regression, while still others can handle both. In this last case, the type of output variable is passed either as a separate parameter or through the varType vector. This vector can be either a single column or a single row, and must be of type CV_8UC1 or CV_8SC1. The number of entries in varType is equal to the number of input variables ($N$) plus the number of responses (typically one).4 The first $N$ entries will tell the algorithm the type of the corresponding input feature, while the remainder indicate the types of the output. Each entry in varType should be set to one of the following values:

cv::ml::VAR_CATEGORICAL: means that the values are discrete class labels

cv::ml::VAR_ORDERED (= cv::ml::VAR_NUMERICAL): means that the values are ordered; that is, different values can be compared as numbers, so an ordered output makes this a regression problem

Note

Algorithms of the regression type can handle only ordered-input variables. Sometimes it is possible to make up an ordering for categorical variables as long as the order is kept consistent, but this can sometimes cause difficulties for regression because the pretend “ordered” values may jump around wildly when they have no philosophical basis for their imposed order.

Many models in the ML library may be trained on a selected feature subset and/or on a selected sample subset of the training set. To make this easier for the user, the cv::ml::TrainData::create() method includes the vectors varIdx and sampleIdx as parameters. The varIdx vector can be used to identify specific variables (features) of interest, while sampleIdx can identify specific data points of interest. Either of these may simply be omitted or set to cv::noArray() (the default value) to indicate that you would like to use “all of the features” or “all of the points.” Each vector may be provided either as a list of zero-based indices or as a mask of active variables/samples, where a nonzero value signifies active. In the former case, the vector must be of type CV_32SC1 and may have any length. In the latter case, the array must be of type CV_8UC1 and must have the same length as the number of features or samples (as appropriate). The parameter sampleIdx is particularly helpful when you’ve read in a chunk of data and want to use some of it for training and some of it for testing without having to first break it into two different vectors.
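The index and mask forms describe the same subset. The hypothetical helper below (plain C++, not an OpenCV call) converts a mask of the CV_8UC1 kind into the equivalent list of CV_32SC1-style zero-based indices, assuming the mask has one entry per feature or sample.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of OpenCV): converts a mask (nonzero =
// active) into the equivalent list of zero-based indices. Both forms
// describe the same subset of variables (varIdx) or samples (sampleIdx).
std::vector<std::int32_t> maskToIndices(const std::vector<std::uint8_t>& mask) {
    std::vector<std::int32_t> idx;
    for (std::size_t i = 0; i < mask.size(); ++i)
        if (mask[i] != 0)  // any nonzero value marks the entry as active
            idx.push_back(static_cast<std::int32_t>(i));
    return idx;
}
```

A mask of {1, 0, 0, 7, 1} and an index list of {0, 3, 4} therefore select the same three entries; only the mask has to match the total number of features or samples in length.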

Constructing cv::ml::TrainData from stored data

Often, you will have data already saved on disk. If this data is in CSV (comma-separated value) format, or you can put it into this format, you can create a new cv::ml::TrainData object from that CSV file using cv::ml::TrainData::loadFromCSV().

// Load training data from CSV file; part of each row may be treated as the
// scalar or vector responses; the rest are input values.
//
static cv::Ptr<cv::ml::TrainData> cv::ml::TrainData::loadFromCSV(
  const String& filename,                    // Input file name
  int           headerLineCount,             // Ignore this many lines
  int           responseStartIdx = -1,       // Idx of first out var (-1=last)
  int           responseEndIdx   = -1,       // Idx of last out var plus one
  const String& varTypeSpec      = String(), // Optional, specifies var types
  char          delimiter        = ',',      // Char used to separate values
  char          missch           = '?'       // Used for missing data in CSV
);

The CSV reader skips the first headerLineCount lines and then reads the data. The data is read row by row5 and individual features are separated based on commas. If some other separator is used in the CSV file, the delimiter argument may be used to replace the default comma (e.g., by a space or semicolon). Often, the responses will be found in the leftmost or the rightmost column, but the user may specify any column (or a range of columns) as necessary. The responses will be drawn from the interval [responseStartIdx, responseEndIdx), inclusive of responseStartIdx but exclusive of responseEndIdx. The variable types, if required, are specified via a single compact text string, varTypeSpec. For example:

"ord[0-9,11]cat[10]"

means that the data has 12 columns: the first 10 columns contain ordered values, then there is a column of categorical values, and then there is yet another column of ordered values.
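To make the varTypeSpec format concrete, here is a minimal parser sketch in plain C++. It is not OpenCV's parser: it assumes only the two keywords shown above (ord and cat), comma-separated column indices, and inclusive ranges written as a-b, and it returns one entry of a hypothetical VarKind type per column.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

enum class VarKind { Unspecified, Ordered, Categorical };

// Hypothetical sketch (not OpenCV's parser): expands a spec such as
// "ord[0-9,11]cat[10]" into one VarKind per column.
std::vector<VarKind> parseVarTypeSpec(const std::string& spec) {
    std::vector<VarKind> kinds;
    std::size_t pos = 0;
    while (pos < spec.size()) {
        VarKind kind = VarKind::Unspecified;
        if (spec.compare(pos, 4, "ord[") == 0) kind = VarKind::Ordered;
        else if (spec.compare(pos, 4, "cat[") == 0) kind = VarKind::Categorical;
        else throw std::invalid_argument("expected ord[ or cat[");
        pos += 4;
        const std::size_t close = spec.find(']', pos);
        if (close == std::string::npos) throw std::invalid_argument("missing ]");
        const std::string list = spec.substr(pos, close - pos);

        // Walk the comma-separated items: either "k" or an inclusive range "a-b".
        std::size_t itemStart = 0;
        while (itemStart <= list.size()) {
            std::size_t comma = list.find(',', itemStart);
            if (comma == std::string::npos) comma = list.size();
            const std::string item = list.substr(itemStart, comma - itemStart);
            const std::size_t dash = item.find('-');
            const int first = std::stoi(item.substr(0, dash));
            const int last = (dash == std::string::npos) ? first
                                                         : std::stoi(item.substr(dash + 1));
            if (first < 0 || last < first) throw std::invalid_argument("bad range");
            if (static_cast<std::size_t>(last) + 1 > kinds.size())
                kinds.resize(static_cast<std::size_t>(last) + 1, VarKind::Unspecified);
            for (int c = first; c <= last; ++c) kinds[c] = kind;
            itemStart = comma + 1;
        }
        pos = close + 1;
    }
    return kinds;
}
```

Applied to "ord[0-9,11]cat[10]", the sketch yields 12 entries, with column 10 categorical and all others ordered, matching the reading given above.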

If you do not provide a variable type specification, then the reader tries to “do the right thing” by following a few simple rules. It considers input variables to be ordered (numerical) unless they clearly contain non-numerical values (e.g., "dog", "cat"), in which case they are made categorical. If there is only one output variable, then it will follow pretty much the same rule,6 but if there are multiple, then they are always considered ordered.

It is also possible to specify a special character, using the missch argument, to be used for missing measurements. However, it is important to know that some of the algorithms cannot handle such missing values. In such cases missing points should be interpolated or otherwise handled by the user before training or the corrupted records should be rejected in advance.7
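The following plain C++ sketch imitates how a single numeric CSV line might be split according to these conventions. It is not OpenCV's reader: the names ParsedRow and splitCsvRow() are hypothetical, only numeric fields are handled (no categorical strings), and treating responseEndIdx = -1 as "a single response column at responseStartIdx" is an assumption of this sketch. Missing fields become NaN so that you can interpolate or reject them before training.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical result type: input values and response values of one row.
struct ParsedRow {
    std::vector<float> inputs;
    std::vector<float> responses;
};

// Hypothetical sketch (not OpenCV's reader). Responses come from the
// half-open column range [responseStartIdx, responseEndIdx); a start of -1
// selects the last column, and an end of -1 (assumption) selects a single
// response column. Fields equal to missch become NaN.
ParsedRow splitCsvRow(const std::string& line, int responseStartIdx = -1,
                      int responseEndIdx = -1, char delimiter = ',',
                      char missch = '?') {
    std::vector<std::string> fields;
    std::stringstream ss(line);
    std::string field;
    while (std::getline(ss, field, delimiter))
        fields.push_back(field);

    const int n = static_cast<int>(fields.size());
    const int start = responseStartIdx < 0 ? n - 1 : responseStartIdx;
    const int end = responseEndIdx < 0 ? start + 1 : responseEndIdx;

    ParsedRow row;
    for (int i = 0; i < n; ++i) {
        const float v = (fields[i] == std::string(1, missch))
                            ? std::numeric_limits<float>::quiet_NaN()
                            : std::stof(fields[i]);  // numeric fields only
        if (i >= start && i < end)
            row.responses.push_back(v);
        else
            row.inputs.push_back(v);
    }
    return row;
}
```

With the defaults, "1.5,?,3,7" yields the inputs {1.5, NaN, 3} and the single response 7; with a semicolon delimiter and the range [0, 2), "4;5;6;7" yields the responses {4, 5} and the inputs {6, 7}.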

Note

The problem of missing data comes up in real world problems quite often. For example, when the authors were working with manufacturing data, some measurement features would end up missing during the time that workers took coffee breaks. Sometimes experimental data simply is forgotten, such as forgetting to take a patient’s temperature one day during a medical experiment.

Secret sauce and cv::ml::TrainDataImpl

Were you to go to the source code and look at the class definition for cv::ml::TrainData, you would see that it is full of pure virtual functions. In fact, it is just an interface, from which you can derive and create your own training data containers. This fact immediately leads to two obvious questions: why would you want to do this, and what exactly is going on inside of cv::ml::TrainData::create() if cv::ml::TrainData is an abstract class?

As for the why, training data can be very complex in real-life situations and the data itself can be very large. In many cases it is necessary to implement more sophisticated strategies for managing the data and for storing it. For these reasons, you might want to implement your own training data container that, for example, uses a database for the management of the bulk of the available data. Using the cv::ml::TrainData interface, you can implement your own data container and the available algorithms will run on that data transparently.

For the second question, the how, the answer is that the cv::ml::TrainData::create() method actually creates an object of a different class than it appears to. There is a class called cv::ml::TrainDataImpl that is essentially the default implementation of a data container. This object manages the data just the way you would expect—in the form of a few arrays inside that hold the various things you think you have put in there.

In fact, the existence of this class will be largely invisible to you unless (until) you start looking at the library source code directly. Of course, if you do find yourself wanting to build your own cv::ml::TrainData–derived container class, it will be very useful to look at the implementation of cv::ml::TrainDataImpl in .../opencv/modules/ml/src/data.cpp.

Splitting training data

In practice, when you are training a machine learning system, you don’t want to use all of the data you have to train the algorithm. You will need to hold some back to test the algorithm when it is done. If you don’t do this, you will have no way of estimating how your trained system will behave when presented with novel data. By default, when you construct a new instance of TrainData, all of the data is considered available for training, and none of it is held back for such testing. Using cv::ml::TrainData::setTrainTestSplit(), you can split the data into a training and a test part, and just use the training part for training your model. Using just this training data is the automatic behavior of cv::ml::StatModel::train(), assuming you have marked what data you want it to use.

// Splits the training data into the training and test parts
//
void cv::ml::TrainData::setTrainTestSplit(
  int  count,
  bool shuffle = true
);

void cv::ml::TrainData::setTrainTestSplitRatio(
  double ratio,
  bool   shuffle = true
);

void cv::ml::TrainData::shuffleTrainTest();

The three members of cv::ml::TrainData that will help you out with this are: setTrainTestSplit(), setTrainTestSplitRatio(), and shuffleTrainTest(). The first takes a count argument that specifies how many of the vectors in the data set should be labeled as training data (with the remainder being test data). Similarly the second function does the same thing, but allows you to specify the ratio of points (e.g., 0.90 = 90%) that will be labeled as training data. Finally, the third “shuffle” method will randomly assign the train and test vectors (while keeping the number of each fixed). Either of the first two methods supports a shuffle argument. If true, then the test and train labels will be assigned randomly; otherwise, the train samples will start from the beginning and the test samples will be those vectors thereafter.
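The split behavior just described can be sketched in plain C++ as follows. This is an illustration, not the cv::ml::TrainData implementation: the Split struct and both functions are hypothetical, and rounding the ratio to the nearest whole sample is our assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical container for the two index lists.
struct Split {
    std::vector<int> trainIdx;
    std::vector<int> testIdx;
};

// The first `count` entries of the (optionally shuffled) sample order become
// the train index; the remaining entries become the test index.
Split trainTestSplit(int nSamples, int count, bool shuffle, unsigned seed = 0) {
    std::vector<int> order(nSamples);
    std::iota(order.begin(), order.end(), 0);  // 0, 1, ..., nSamples-1
    if (shuffle) {
        std::mt19937 rng(seed);
        std::shuffle(order.begin(), order.end(), rng);  // random assignment
    }
    count = std::max(0, std::min(count, nSamples));
    Split s;
    s.trainIdx.assign(order.begin(), order.begin() + count);
    s.testIdx.assign(order.begin() + count, order.end());
    return s;
}

// Ratio variant: `ratio` is the fraction used for training (e.g., 0.9).
Split trainTestSplitRatio(int nSamples, double ratio, bool shuffle, unsigned seed = 0) {
    const int count = static_cast<int>(std::lround(ratio * nSamples));
    return trainTestSplit(nSamples, count, shuffle, seed);
}
```

Without shuffling, a 0.9 ratio on 10 samples puts samples 0 through 8 in the train index and sample 9 in the test index; with shuffling, the counts stay the same but the membership is random.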

Note that internally, the default implementation, cv::ml::TrainDataImpl, has three separate indices that do similar things: the sample index, the train index, and the test index. Each is a list of indices into the overall array of samples in the container that indicates which samples are to be used in a particular context. The sample index is an array listing all samples that will be used. The train index and test index are similar, but list which samples are for training and which are for testing. As implemented, these three indices have a relationship that some might find unintuitive.

If the train index is defined, the test index should always be defined as well; this is the natural result of using the functions just described to create these internal indices. When these two indices are defined, they alone determine how train() responds, regardless of anything that might be in the sample index. Only when these two indices are undefined will the sample index be used. In that case, all data indicated by the sample index will be assumed available for training, and no data will be marked as test data.
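The precedence rule can be summarized in a few lines of plain C++. The function below is a hypothetical illustration, not OpenCV code; an empty vector stands for an index that has not been defined.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical sketch (not OpenCV code) of which samples train() sees.
// An empty vector represents an undefined index.
std::vector<int> samplesUsedForTraining(int nTotal,
                                        const std::vector<int>& sampleIdx,
                                        const std::vector<int>& trainIdx) {
    if (!trainIdx.empty())   // a train/test split was set: it wins
        return trainIdx;
    if (!sampleIdx.empty())  // otherwise, every sample in the sample index
        return sampleIdx;
    std::vector<int> all(nTotal);  // otherwise, every sample in the container
    std::iota(all.begin(), all.end(), 0);
    return all;
}
```

Note that a sample index of {1, 3} is ignored as soon as a train index exists, which is exactly the unintuitive case described above.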

Accessing cv::ml::TrainData

Once the training data is constructed, it’s possible to retrieve its parts, with or without preprocessing, using the methods described next. The function cv::ml::TrainData::getTrainSamples() retrieves a matrix only of the training data.

// Retrieve only the active training data into a cv::Mat array.
//
cv::Mat cv::ml::TrainData::getTrainSamples(
  int  layout          = ROW_SAMPLE,
  bool compressSamples = true,
  bool compressVars    = true
) const;

When compressSamples or compressVars are true, the method will retain only rows or columns set by sampleIdx and varIdx, respectively (typically at construction time). The method also transposes the data if the desired layout is different from the original one. If the sample index or the train index is defined, then only the indicated samples will be returned. Recall, however, that, if both are defined, it will be the train index that determines what is returned.

Similarly, the cv::ml::TrainData::getTrainResponses() method extracts only the active response vector elements.

// Return the train responses (for the samples selected using sampleIdx).
//
cv::Mat cv::ml::TrainData::getTrainResponses() const;

As with cv::ml::TrainData::getTrainSamples(), if the sample index or the train index is defined, then only the indicated samples will be returned. Recall, however, that, if both are defined, it will be the train index that determines what is returned.

Similarly, there are two functions—cv::ml::TrainData::getTestSamples() and cv::ml::TrainData::getTestResponses()—that return the analogous arrays constructed from only the test samples. In this case, however, if the test index is not defined, then an empty array will be returned.

Finally, there are accessors that will simply tell you how many of various kinds of samples are in the data container. We list them here.

int getNTrainSamples() const; // Number of samples indicated by train idx
                              // or total samples if samples idx is not defined
int getNTestSamples() const;  // Number of samples indicated by test idx
                              // or zero if test idx is not defined
int getNSamples() const;      // Number of samples indicated by samples idx
                              // or total samples if samples idx is not defined
int getNVars() const;         // Number of features indicated by variable idx
                              // or total features if variable idx is not defined
int getNAllVars() const;      // Number of features total

Prediction

Recall from the prototype that the general form of the predict() method is as follows:

float cv::ml::StatModel::predict(
  cv::InputArray  samples,                 // input samples, float matrix
  cv::OutputArray results = cv::noArray(), // optional output matrix of results
  int             flags   = 0              // (model-dependent)
) const;

This method is used to predict the response for a new input data vector. When you are using a classifier, predict() returns a class label. For the case of regression, this method returns a numerical value. Note that the input sample must have as many components as the samples that were used for training.8 In general, samples will be an input floating-point array, with one sample per row, and results will contain one result per row. When only a single sample is provided, the predicted result will be returned from the predict() function. Keep in mind, however, that in some cases these general behaviors will be slightly different for any particular derived classifier. Additional flags are algorithm-specific and allow for such things as missing feature values in tree-based methods. The function suffix const tells us that prediction does not affect the internal state of the model. This method is thread-safe and can be run in parallel, which is useful for web servers performing image retrieval for multiple clients and for robots that need to accelerate the scanning of a scene.

In addition to being able to generate a prediction, we can compute the error of the model over the training or test data. When the model is being used for classification, this is the percentage of incorrectly classified samples; when we are using the model for regression, this is the mean squared error. The method that does it is called cv::ml::StatModel::calcError():

float cv::ml::StatModel::calcError(
  const cv::Ptr<cv::ml::TrainData>& data, // training samples
  bool                              test, // false: compute over training set
                                          // true: compute over test set
  cv::OutputArray                   resp  // the optional output responses
) const;

In this case, we typically pass in the same cv::ml::TrainData data container that we used for training. We then use the test argument to determine whether we want to know how well the trained algorithm did on the training data used (test set to false) or on the test data that we withheld from the training process (test set to true). Finally, we can use the resp array to collect the responses to the individual vectors tested. Though collecting these responses is optional, the argument itself has no default value: if you are not interested in the output responses, you must pass cv::noArray() here.
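For intuition about what the returned number means, here is a plain C++ sketch of the two error measures described above: the percentage of mismatched labels for a classifier, and the mean squared error for a regressor. The function errorSketch() is hypothetical and is not OpenCV's implementation; comparing labels with a small tolerance is an assumption of the sketch, since labels are stored as floats.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch (not OpenCV's implementation) of the error measures
// described for calcError().
float errorSketch(const std::vector<float>& predicted,
                  const std::vector<float>& truth, bool isClassifier) {
    assert(predicted.size() == truth.size() && !truth.empty());
    double err = 0.0;
    for (std::size_t i = 0; i < truth.size(); ++i) {
        const double d = static_cast<double>(predicted[i]) - truth[i];
        err += isClassifier ? (std::fabs(d) > 1e-6 ? 1.0 : 0.0)  // misclassified?
                            : d * d;                             // squared error
    }
    err /= static_cast<double>(truth.size());
    // Classification: percentage of wrong labels; regression: mean squared error.
    return static_cast<float>(isClassifier ? err * 100.0 : err);
}
```

One wrong label out of four gives 25 (percent), while regression predictions {0, 4} against true values {1, 2} give a mean squared error of (1 + 4) / 2 = 2.5.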

We are now ready to move on to the ML library proper with the normal Bayes classifier, after which we will discuss decision-tree algorithms (decision trees, boosting, random trees, and Haar cascade). For the other algorithms we’ll provide short descriptions and usage examples.

Machine Learning Algorithms Using cv::ml::StatModel

Now that we have a good feel for how the ML library in OpenCV works, we can move on to how to use individual learning methods. This section looks briefly at eight machine learning routines, the latter four of which have recently been added to OpenCV. Each implements a well-known learning technique, by which we mean that a substantial body of literature exists on each of these methods in books, published papers, and on the Internet. In time, it is expected that more new algorithms will appear.

Naïve/Normal Bayes Classifier

Earlier, we looked at some legacy routines from before the machine learning library was systematized; now we will look at a simple classifier that uses the new cv::ml::StatModel interface introduced in this chapter. We’ll begin with OpenCV’s simplest supervised classifier, cv::ml::NormalBayesClassifier, which is alternatively known as a normal Bayes classifier or a naïve Bayes classifier. It’s “naïve” because, in its mathematical implementation, it assumes that all the features we observe are independent of one another (even though this is seldom actually the case). For example, finding one eye usually implies that another eye is lurking nearby; these are not uncorrelated observations. However, it is often possible to ignore this correlation in practice and still get good results. Zhang discusses possible reasons for the sometimes surprisingly good performance of this classifier [Zhang04]. Naïve Bayes is not used for regression, but it is an effective classifier that can handle multiple classes, not just two. This classifier is the simplest possible case of what is now the large and growing field of Bayesian networks, or “probabilistic graphical models.”9

By way of example, consider the case in which we have a collection of images, some of which are images of faces, while others are images of other things (maybe cars and flowers). Figure 21-1 portrays a model in which certain measurable features are caused to exist if the object we are looking at is, in fact, a face. In general, Bayesian networks are causal models. In the figure, facial features in an image are asserted to be caused by (or not caused by) the existence of an object, which may (or may not) be a face. Loosely translated into words, the graph in the figure says, “An object, which may be of type ‘face’ or of some other type, would imply either the truth or falsehood of five additional assertions: ‘there is a left eye,’ ‘there is a right eye,’ etc., for each of five facial features.” In general, such a graph is normally accompanied by additional information that tells us the possible values of each node and the actual probabilities of each value in each bubble as a function of the values of the nodes that have arrows pointing into the bubble. In our case, the node O can take values “face,” “car,” or “flower,” and the other five nodes can take the values present or absent. The probabilities for each feature, given the nature of the object, we will learn from data.

Note

Note that this is precisely where the “uncorrelated” nature of the graph comes in; specifically, the probability that there is a nose depends only on whether the object is a face, and is independent (or at least is asserted to be independent) of whether or not there is a mouth, a hairline, and so on. As a result, there are a lot fewer combinations of cases to learn, because everything we care about essentially factorizes into the question of how each feature is statistically related to the object’s presence. This factorization is the precise meaning of uncorrelated.

Figure 21-1. A (naïve) Bayesian network, where the lower-level features are caused by the presence of an object (such as a face)

In use, the object variable in a naïve Bayes classifier is usually a hidden variable and the features—via image processing operations on the input image—constitute the observed evidence that the value of the object variable is of whatever type (i.e., “face”). Models such as this are called generative models because the object causally generates (or fails to generate) the face features.10 Because it is generative, after training, we could instead start by assuming the value of the object node is “face” and then randomly sample what features are probabilistically generated given that we have assumed a face to exist.11 This top-down generation of data with the same statistics as the learned causal model is a useful capability. For example, one might generate faces for computer graphics display, or a robot might literally “imagine” what it should do next by generating scenes, objects, and interactions. In contrast to Figure 21-1, a discriminative model would have the direction of the arrows reversed.

Bayesian networks, in their generality, are a deep field and initially can be a difficult topic. However, the naïve Bayes algorithm derives from a simple application of Bayes’ rule.12 In this case, the probability (denoted p) that an object is a face, given that the features are found (denoted, left to right in Figure 21-1, by LE, RE, N, M, and H) is:

$$p(O=\text{face} \mid LE, RE, N, M, H) = \frac{p(LE, RE, N, M, H \mid O=\text{face})\, p(O=\text{face})}{p(LE, RE, N, M, H)}$$

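In words, the components of this equation are typically read as:

$$\text{posterior probability} = \frac{\text{likelihood} \times \text{prior probability}}{\text{evidence}}$$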

The significance of this equation is that, in practice, we compute some evidence and then decide what object caused it (not the other way around). Since the computed evidence term is the same for any object, we can ignore that term in comparisons. Said another way, if we have many object types then we need only find the one with the maximum numerator. The numerator is exactly the joint probability of the model with the data: p(O=“face”, LE, RE, N, M, H).

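Up to this point, we have not really used the “naïve” part of the naïve Bayes classifier. So far, these equations would be true for any Bayesian classifier. In order to make use of the assumption that the different features are statistically independent of one another (recall that this is the primary informative content of the graph in Figure 21-1), we now use the chain rule for probability to derive the joint probability:

$$p(O=\text{face}, LE, RE, N, M, H) = p(O=\text{face})\, p(LE \mid O=\text{face})\, p(RE \mid O=\text{face}, LE)\, p(N \mid O=\text{face}, LE, RE)\, p(M \mid O=\text{face}, LE, RE, N)\, p(H \mid O=\text{face}, LE, RE, N, M)$$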

Finally, when we apply our assumption of independence of features, the conditional features drop out. For example, the probability of a nose, given that the object is a face, and that we observe both a left eye and a right eye (i.e., $p(N \mid O=\text{face}, LE, RE)$) is, by our assumption, equal to the probability of a nose given just the fact that a face is present: $p(N \mid O=\text{face})$. Similar logic applies to every term on the righthand side of the preceding equation, with the result that:

$$p(O=\text{face}, LE, RE, N, M, H) = p(O=\text{face})\, p(LE \mid O=\text{face})\, p(RE \mid O=\text{face})\, p(N \mid O=\text{face})\, p(M \mid O=\text{face})\, p(H \mid O=\text{face})$$

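So, generalizing face to “object” and our list of features to “all features,” we obtain the reduced equation:

$$p(\text{object}, \text{all features}) = p(\text{object}) \prod_{i} p(\text{feature}_i \mid \text{object})$$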

To use this as an overall classifier, we learn models for the objects that we want. In run mode we compute the features and find the object that maximizes this equation. Typically, we then test to see whether the probability for that “winning” object is over a given threshold. If it is, then we declare the object to be found; if not, we declare that no object was recognized.
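The decision rule can be sketched in plain C++. This is an illustration under stated assumptions, not OpenCV's implementation. It assumes binary present/absent features and already-learned probabilities. Each object is scored by the log of the reduced equation, since sums of logs avoid floating-point underflow when there are many features. The sketch picks the maximum and rejects the winner if its posterior (its joint probability divided by the sum over all objects, i.e., the evidence) falls below a threshold. The background can simply be modeled as one more object. ObjectModel, logJoint(), and classify() are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical per-object model: a prior p(O) and, for each binary feature,
// p(feature present | O). Probabilities must lie strictly inside (0, 1).
struct ObjectModel {
    double prior;
    std::vector<double> pFeature;
};

// log p(O, f_1, ..., f_n) = log p(O) + sum_i log p(f_i | O)  (naive Bayes).
double logJoint(const ObjectModel& m, const std::vector<bool>& features) {
    assert(features.size() == m.pFeature.size());
    double s = std::log(m.prior);
    for (std::size_t i = 0; i < features.size(); ++i) {
        const double p = m.pFeature[i];
        s += std::log(features[i] ? p : 1.0 - p);
    }
    return s;
}

// Returns the index of the object with the largest joint probability, or -1
// if that object's posterior (its joint divided by the sum of all joints)
// is below `threshold`.
int classify(const std::vector<ObjectModel>& models,
             const std::vector<bool>& features, double threshold) {
    assert(!models.empty());
    std::vector<double> lj(models.size());
    std::size_t best = 0;
    for (std::size_t k = 0; k < models.size(); ++k) {
        lj[k] = logJoint(models[k], features);
        if (lj[k] > lj[best]) best = k;
    }
    // sum_k exp(lj[k] - lj[best]); subtracting the maximum avoids underflow.
    double denom = 0.0;
    for (double v : lj) denom += std::exp(v - lj[best]);
    const double posterior = 1.0 / denom;
    return posterior >= threshold ? static_cast<int>(best) : -1;
}
```

Working in log space does not change which object wins, because the logarithm is monotonic.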

Note

If (as frequently occurs) there is only one object of interest, then you might ask: “The probability I’m computing is the probability relative to what?” In such cases, there is always an implicit second object—namely, the background—which is everything that is not the object of interest that we’re trying to learn and recognize.

In practice, learning the models is easy. We take many images of the objects; we then compute features over those objects and, for each object, compute the fraction of the training set in which each feature occurred. In general, if you don’t have much data, then simple models such as naïve Bayes will tend to outperform more complex models, which will tend to fit the noise in the limited data (variance).
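Learning by counting can be sketched the same way in plain C++ (the function name learnFeatureProbs() is hypothetical). With alpha = 0 it computes exactly the fraction described above. The optional alpha parameter is our addition: Laplace (add-alpha) smoothing. It is a common remedy for features that never (or always) appear in a small training set and would otherwise produce probabilities of exactly 0 or 1.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Learns p(feature i present | object) from that object's training examples
// as the fraction of examples in which feature i was observed. alpha > 0
// applies add-alpha smoothing; alpha = 0 gives the plain fraction.
std::vector<double> learnFeatureProbs(const std::vector<std::vector<bool>>& examples,
                                      double alpha = 0.0) {
    assert(!examples.empty() && alpha >= 0.0);
    const std::size_t nFeatures = examples[0].size();
    std::vector<double> probs(nFeatures, 0.0);
    for (const auto& ex : examples) {
        assert(ex.size() == nFeatures);
        for (std::size_t i = 0; i < nFeatures; ++i)
            if (ex[i]) probs[i] += 1.0;  // count occurrences of feature i
    }
    const double n = static_cast<double>(examples.size());
    for (double& p : probs) p = (p + alpha) / (n + 2.0 * alpha);
    return probs;
}
```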

The naïve/normal Bayes classifier and cv::ml::NormalBayesClassifier

The following is the class definition for the normal Bayes classifier. Note that the class name cv::ml::NormalBayesClassifier is actually another layer of interface definition, while cv::ml::NormalBayesClassifierImpl is the name of the actual class that implements the normal Bayes classifier. For convenience, this definition lists some important inherited methods as comments.

// Somewhere above...
// namespace cv {
// namespace ml {
//
class NormalBayesClassifierImpl : public NormalBayesClassifier {
  // cv::ml::NormalBayesClassifier is derived
  // from cv::ml::StatModel
public:
  ...
  float predictProb(
    InputArray  inputs,
    OutputArray outputs,
    OutputArray outputProbs,
    int         flags = 0
  ) const;
  ...
  // From class NormalBayesClassifier
  //
  // Ptr<NormalBayesClassifier> NormalBayesClassifier::create(); // constructor
};

The training method for the normal Bayes classifier, inherited from cv::ml::StatModel, is:

bool cv::ml::NormalBayesClassifier::train(
  const Ptr<cv::ml::TrainData>& trainData,  // your data
  int flags = 0                             // 0=new data or UPDATE_MODEL=add
);

The flags parameter may be 0 or include the cv::ml::StatModel::UPDATE_MODEL flag, which means that the model needs to be updated using the additional training data rather than retrained from scratch.

The cv::ml::NormalBayesClassifier implements the inherited predict() interface described in cv::ml::StatModel, which computes and returns the most probable class for its input vectors. If more than one input data vector (row) is provided in the samples matrix, the predictions are returned in corresponding rows of the results matrix. If there is only a single input in samples, then the prediction is also returned as the float return value of predict(), and the results array may be set to cv::noArray().

float cv::ml::NormalBayesClassifier::predict(
  cv::InputArray  samples,                  // input samples, float matrix
  cv::OutputArray results = cv::noArray(),  // optional output results matrix
  int             flags   = 0               // (model-dependent)
) const;

Alternatively, the normal Bayes classifier also offers the method predictProb(). This method takes the same arguments as cv::ml::NormalBayesClassifier::predict(), plus the array resultProbs. This is a floating-point matrix of size number_of_samples × number_of_classes, in which the computed probabilities (that the corresponding samples belong to the particular classes) will be stored.13 The format for this prediction method is:

float cv::ml::NormalBayesClassifier::predictProb(  // prob if single sample
  InputArray  samples,      // one sample per row
  OutputArray results,      // predictions, one per row
  OutputArray resultProbs,  // row=sample, column=class
  int         flags = 0     // 0 or StatModel::RAW_OUTPUT
) const;

Though the naïve Bayes classifier is extremely useful for small data sets, it does not generally perform well when the data has a great degree of structure. With this in mind, we move next to a discussion of tree-based classifiers, which can dramatically outperform something as simple as the naïve Bayes classifier, particularly when sufficient data is present.

Binary Decision Trees

We will go through decision trees in detail, since they are highly useful and use most of the functionality in the machine learning library (and thus serve well as an instructional example more generally). Binary decision trees were invented by Leo Breiman and colleagues,14 who named them classification and regression trees (CART). This is the decision tree algorithm that OpenCV implements. The gist of the algorithm is to define what is called an impurity metric relative to the data in every node of a tree of decisions, and to try to minimize the impurity with those decisions. When using CART for regression to fit a function, one often uses the sum of squared differences between the true values and the predicted values; thus, minimizing the impurity means making the predicted function more similar to the data. For categorical labels, one typically defines a measure that is minimal when most values in a node are of the same class. Three common measures to use are entropy, Gini index, and misclassification (all described in this section). Once we have such a metric, a binary decision tree searches through the feature vector to find which feature, combined with which threshold for the value of that feature, most “purifies” the data. By convention, we say that features above the threshold are true and that the data thus classified will branch to the left; the other data points branch right.15 This procedure is then used recursively down each branch of the tree until the data is of sufficient purity at the leaves or until the number of data points in a node reaches a set minimum. Figure 21-2 shows an example.
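Here is a minimal sketch of the split search just described, in plain C++ and using Gini impurity as the metric. It is illustrative only. OpenCV's CART implementation also handles categorical variables, surrogate splits, and pruning. This brute-force version rescans the data for every candidate threshold (O(features × samples²)), whereas a practical implementation would typically sort each feature once. Following the convention in the text, samples whose feature value is above the threshold go left. gini(), Split, and bestSplit() are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <map>
#include <vector>

// Gini impurity 1 - sum_j P(w_j)^2 of a set of integer class labels.
double gini(const std::vector<int>& labels) {
    if (labels.empty()) return 0.0;
    std::map<int, int> counts;
    for (int y : labels) ++counts[y];
    double sumSq = 0.0;
    for (const auto& kv : counts) {
        const double p = static_cast<double>(kv.second) / labels.size();
        sumSq += p * p;
    }
    return 1.0 - sumSq;
}

struct Split {
    int feature = -1;
    double threshold = 0.0;
    double impurity = std::numeric_limits<double>::infinity();
};

// Tries every feature f and every observed value of f as a threshold.
// Samples with x[f] > threshold go left ("true"), the rest go right; the
// score is the children's impurity weighted by their sample counts.
Split bestSplit(const std::vector<std::vector<double>>& X, const std::vector<int>& y) {
    assert(!X.empty() && X.size() == y.size());
    const std::size_t n = X.size();
    Split best;
    for (std::size_t f = 0; f < X[0].size(); ++f) {
        for (std::size_t s = 0; s < n; ++s) {
            const double thr = X[s][f];
            std::vector<int> left, right;
            for (std::size_t i = 0; i < n; ++i)
                (X[i][f] > thr ? left : right).push_back(y[i]);
            if (left.empty() || right.empty()) continue;  // not a real split
            const double imp =
                (left.size() * gini(left) + right.size() * gini(right)) / n;
            if (imp < best.impurity) {
                best.feature = static_cast<int>(f);
                best.threshold = thr;
                best.impurity = imp;
            }
        }
    }
    return best;
}
```

Applying bestSplit() recursively to the left and right subsets, until the nodes are pure enough or too small, gives the tree-growing procedure described above.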

Figure 21-2. In this example, a hypothetical group of 100 laptop computers is analyzed and the primary factors determining failure rate are used to build the classification tree; all 100 computers are accounted for by the leaf nodes of the tree

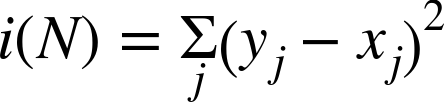

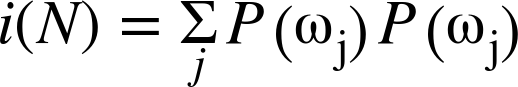

The equations for several available definitions of node impurity i(N) are given next. Different definitions suit different problems, and the appropriate choice also differs between regression and classification.

Classification impurity

For classification, decision trees often use one of three methods: entropy impurity, Gini impurity, or misclassification impurity. For these methods, we use the notation $P(\omega_j)$ to denote the fraction of patterns at node N that are in class $\omega_j$. Each of these impurities has slightly different effects on the splitting decision. Gini is the most commonly used, but all the algorithms attempt to minimize the impurity at a node. Figure 21-3 graphs the impurity measures that we want to minimize. In practice, it is best to try each impurity measure and determine on a validation set which one works best.

Figure 21-3. Decision tree impurity measures

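Entropy impurity:

$$i(N) = -\sum_{j} P(\omega_j) \log P(\omega_j)$$

Gini impurity:

$$i(N) = \sum_{i \neq j} P(\omega_i)\, P(\omega_j) = 1 - \sum_{j} P^2(\omega_j)$$

Misclassification impurity:

$$i(N) = 1 - \max_{j} P(\omega_j)$$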
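The three impurity measures translate directly into code. This sketch uses hypothetical function names. It takes the class fractions P(ω_j) at a node N, which must sum to 1, and uses a base-2 logarithm for entropy. The base only rescales the entropy and does not change which split minimizes it. For two equally populated classes, the sketch gives 1 bit of entropy and Gini and misclassification impurities of 0.5. A pure node scores 0 on all three measures.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Each function takes P(w_j), the fraction of the node's samples in class j.
double entropyImpurity(const std::vector<double>& P) {
    double h = 0.0;
    for (double p : P)
        if (p > 0.0) h -= p * std::log2(p);  // 0 * log 0 is taken as 0
    return h;
}

double giniImpurity(const std::vector<double>& P) {
    double s = 0.0;
    for (double p : P) s += p * p;
    return 1.0 - s;  // equals the sum of P(w_i)P(w_j) over ordered pairs i != j
}

double misclassificationImpurity(const std::vector<double>& P) {
    assert(!P.empty());
    return 1.0 - *std::max_element(P.begin(), P.end());
}
```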

Decision trees are perhaps the most widely used classification technology. This is due to their simplicity of implementation, ease of interpretation of results, flexibility with different data types (categorical, numerical, unnormalized, and mixes thereof), ability to handle missing data through surrogate splits, and natural way of assigning importance to the data features by order of splitting. Decision trees form the basis of other algorithms such as boosting and random trees, which we will discuss shortly.

OpenCV implementation

The following is the abbreviated declaration of cv::ml::DTrees. The train() methods are simply inherited from the base class; most of what we need in the definition is how the parameters to the model are set and loaded.

// Somewhere above...
// namespace cv {
// namespace ml {
//
class DTreesImpl : public DTrees {  // cv::ml::DTrees is derived
                                    // from cv::ml::StatModel
public:

  // (Inherited from cv::ml::DTrees)
  //
  //enum Flags {
  //  PREDICT_AUTO     = 0,
  //  PREDICT_SUM      = (1<<8),
  //  PREDICT_MAX_VOTE = (2<<8),
  //  PREDICT_MASK     = (3<<8)
  //};

  int   getCVFolds() const;             // get num cross validation folds
  int   getMaxCategories() const;       // get max number of categories
  int   getMaxDepth() const;            // get max tree depth
  int   getMinSampleCount() const;      // get min sample count
  Mat   getPriors() const;              // get priors for categories
  float getRegressionAccuracy() const;  // get required regression acc.
  bool  getTruncatePrunedTree() const;  // get whether to truncate pruned trees
  bool  getUse1SERule() const;          // get whether to use 1SE rule in pruning
  bool  getUseSurrogates() const;       // get whether to use surrogates

  void  setCVFolds( int val );               // set num cross validation folds
  void  setMaxCategories( int val );         // set max number of categories
  void  setMaxDepth( int val );              // set max tree depth
  void  setMinSampleCount( int val );        // set min sample count
  void  setPriors( const cv::Mat &val );     // set priors for categories
  void  setRegressionAccuracy( float val );  // set required regression acc.
  void  setTruncatePrunedTree( bool val );   // set whether to truncate pruned trees
  void  setUse1SERule( bool val );           // set whether to use 1SE rule in pruning
  void  setUseSurrogates( bool val );        // set whether to use surrogates
  ...

  // Experts can use these, but non-experts can ignore them.
  //
  const std::vector<Node>&  getNodes() const;
  const std::vector<int>&   getRoots() const;
  const std::vector<Split>& getSplits() const;
  const std::vector<int>&   getSubsets() const;
  ...

  // From class DTrees
  //
  //Ptr<DTrees> DTrees::create();  // The algorithm "constructor",
  //                               // returns Ptr<DTreesImpl>
};

First of all, you might have noticed that the name is plural: DTrees. This is because in computer vision it’s not standalone decision trees that are primarily used, but rather ensembles of decision trees—that is, collections of decision trees that produce some joint decision. Two such popular ensembles, implemented in OpenCV and discussed later in this chapter, are RTrees (random trees) and Boost (boosting). Both share a lot of internal machinery and so DTrees is a sort of base class that, in our current case, can be viewed as an ensemble consisting of a single tree.

The second thing you might have noticed is that again, as we saw with the data container cv::ml::TrainData, these prototypes are mostly pure virtual. The explanation is the same as before. There is actually a hidden class called cv::ml::DTreesImpl that is derived from cv::ml::DTrees and that contains all of the default implementation. The bottom line is that you can effectively ignore the fact that those functions are pure virtual in the preceding class definition. If, however, at some point you would like to go and look at the default implementation, you will need this information in order to find the member functions you want in .../modules/ml/src/tree.cpp.

Once you have constructed a cv::ml::DTrees object using cv::ml::DTrees::create(), you will need to configure the various runtime parameters. The parameters needed to configure the tree are collected in a structure called cv::ml::TreeParams, which you can fill in one of two ways. The salient parts of its definition are given next.

You will notice that you can create the structure either with the form of its constructor that has an argument for every component, or you can just create it using the default constructor—in which case everything will be set to default values—and then use individual accessors to set the values you want to customize.

Table 21-1 contains a brief description of the components of cv::ml::TreeParams(), their default values, and their meanings.

| Params() argument | Value for default constructor | Definition |
|---|---|---|
| maxDepth | INT_MAX | Tree will not exceed this depth, but may be less deep. |
| minSampleCount | 10 | Do not split a node if there are fewer than this number of samples at that node. |
| regressionAccuracy | 0.01f | Stop splitting if difference between estimated value and value in the train samples is less than regressionAccuracy. |
| useSurrogates | false | Allow surrogate splits to handle missing data. [Not yet implemented.] |
| maxCategories | 10 | Limits the number of categorical values before which the decision tree will precluster those categories. |
| CVFolds | 10 | If (CVFolds > 1) then prune the decision tree using K-fold cross-validation where K is equal to CVFolds. |
| use1SERule | true | true for more aggressive pruning. Resulting tree will be smaller, but less accurate. This can help with overfitting, however. |
| truncatePrunedTree | true | If true, remove pruned branches from the tree. |
| priors | cv::Mat() | Sets alternative weights for incorrect answers. |

Two of these arguments warrant a little further investigation. maxCategories limits the number of categorical values before which the decision tree will precluster those categories so that it will have to test no more than $2^{\text{maxCategories}} - 2$ possible value subsets.16 Those variables that have more categories than maxCategories will have their category values clustered down to maxCategories possible values. In this way, decision trees will have to test no more than maxCategories levels at a time, which results in considering no more than $2^{\text{maxCategories}} - 2$ possible decision subsets for each categorical input. This parameter, when set to a low value, reduces computation but at the cost of accuracy.
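The arithmetic behind maxCategories is easy to check. A variable with K category values has 2^K - 2 nonempty proper subsets that could be sent down one branch, so the count grows exponentially with K. The hypothetical helper below computes that count. At the default maxCategories of 10 it is 1,022, and at the value of 15 used in Example 21-1 it is already 32,766.

```cpp
#include <cassert>
#include <cstdint>

// Number of nonempty proper subsets of K category values (2^K - 2): the
// candidate "goes left" sets a split on a categorical variable could test.
std::uint64_t candidateSubsets(unsigned K) {
    assert(K >= 1 && K < 64);
    return (std::uint64_t{1} << K) - 2;
}
```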

The last parameter, priors, sets the relative weight that you give to misclassification. That is, if we build a two-class classifier and if the weight of the first output class is 1 and the weight of the second output class is 10, then each mistake in predicting the second class is equivalent to making 10 mistakes in predicting the first class. In the example we will look at momentarily, we use edible and poisonous mushrooms. In this context, it makes sense to “punish” mistaking a poisonous mushroom for an edible one 10 times more than mistaking an edible mushroom for a poisonous one. The parameter priors is an array of floats with the same number of elements as there are classes. The assigned values are in the same order as the classes themselves.
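One way to see how priors act as misclassification costs is to look at a single leaf. Weight each class's sample count by its prior, then predict the class with the largest weighted count. This is a simplified illustration using a hypothetical function, weightedLeafLabel(), and it is not a description of OpenCV's internals. With priors {1, 10} for edible and poisonous, a leaf holding 80 edible and 10 poisonous samples is labeled poisonous, because 10 × 10 > 80 × 1. In this sketch only an edible majority of at least 10 to 1 is labeled edible (ties go to the lower-indexed class).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Picks a leaf's label as the class whose sample count, multiplied by its
// misclassification weight ("prior"), is largest. Ties keep the earlier class.
int weightedLeafLabel(const std::vector<int>& counts, const std::vector<double>& priors) {
    assert(counts.size() == priors.size() && !counts.empty());
    std::size_t best = 0;
    for (std::size_t j = 1; j < counts.size(); ++j)
        if (counts[j] * priors[j] > counts[best] * priors[best]) best = j;
    return static_cast<int>(best);
}
```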

The train() method is derived directly from cv::ml::StatModel:

// Work directly with decision trees:
//
bool cv::ml::DTrees::train(
  const cv::Ptr<cv::ml::TrainData>& trainData,  // your data
  int flags = 0                                 // use UPDATE_MODEL to add data
);

In the train() method, the data arrives as a cv::ml::TrainData object holding a floating-point sample matrix. With decision trees, you can set the layout to cv::ml::COL_SAMPLE when constructing trainData if you want to arrange your data into columns (the most efficient layout for this algorithm) instead of the usual rows. Example 21-1 details the creation and training of a decision tree.

The function for prediction with a decision tree is the same as for its base class, cv::ml::StatModel:

float cv::ml::DTrees::predict(
  cv::InputArray  samples,
  cv::OutputArray results = cv::noArray(),
  int             flags   = 0
) const;

Here, samples is a floating-point matrix, with one row per sample. In the case of a single input, the return value will be enough and results can be set to cv::noArray(). In the case of multiple vectors to be evaluated, the output results will contain a prediction for each input vector. Finally, flags specifies various possible options. For example, cv::ml::StatModel::PREPROCESSED_INPUT indicates that the values of each categorical variable j are normalized to the range 0..Nj – 1, where Nj is the number of categories for the jth variable. For example, if some variable may take just two values, A and B, after normalization A is converted to 0 and B to 1. This is mainly used in ensembles of trees to speed up prediction. Normalizing categorical values to this range is simply a computational speedup, because the algorithm then knows the bounds within which the data may fluctuate; it has no effect on accuracy. This method returns the predicted value, either normalized (when flags includes cv::ml::StatModel::RAW_OUTPUT) or converted to the original label space (when flags does not include the RAW_OUTPUT flag).
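Here is a sketch of that normalization for a single categorical variable. It assumes, as in the A/B example, that indices are assigned in sorted order of the original values. The helper categoryIndex() is hypothetical; OpenCV maintains its own mapping inside cv::ml::TrainData.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <vector>

// Maps each distinct category value of one variable to an index in
// 0..Nj-1, assigning indices in sorted order of the original values.
std::map<char, int> categoryIndex(std::vector<char> values) {
    std::sort(values.begin(), values.end());
    values.erase(std::unique(values.begin(), values.end()), values.end());
    std::map<char, int> index;
    for (int k = 0; k < static_cast<int>(values.size()); ++k) index[values[k]] = k;
    return index;
}
```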

Most users will only train and use the decision trees, but advanced or research users may sometimes wish to examine and/or modify the tree nodes or the splitting criteria. As stated in the beginning of this section, the information for how to do this is in the ML documentation online at http://docs.opencv.org. The sections of interest for such advanced analysis are the class structure cv::ml::DTrees, the node structure cv::ml::DTrees::Node, and its contained split structure cv::ml::DTrees::Split.

Decision tree usage

We will now explore the details by looking at a specific example. Consider a program whose purpose is to learn to identify poisonous mushrooms. There is a public data set called agaricus-lepiota.data that contains information about some 8,000 different kinds of mushrooms. It lists many features that might distinguish a mushroom visually, such as the color of the cap, the size and spacing of the gills, as well as—and this is very important—whether or not that type of mushroom is poisonous.17 The data file is in CSV format and consists of a label 'p' or 'e' (denoting poisonous or edible, respectively) followed by 22 categorical attributes, each represented by a single letter. It should also be noted that the file contains examples in which certain data is missing (i.e., one or more of the attributes is unknown for that particular type of mushroom). In this case, the entry is a '?' for that feature.

Let’s take the time to look at this program in detail, which will use binary decision trees to learn to recognize poisonous from edible mushrooms based on their various visible attributes (Example 21-1).18

Example 21-1. Creating and training a decision tree

#include <opencv2/opencv.hpp>
#include <iostream>

using namespace std;
using namespace cv;

int main( int argc, char* argv[] ) {

  // If the caller gave a filename, great. Otherwise, use a default.
  //
  const char* csv_file_name = argc >= 2
    ? argv[1]
    : "agaricus-lepiota.data";
  cout <<"OpenCV Version: " <<CV_VERSION <<endl;

  // Read in the CSV file that we were given.
  //
  cv::Ptr<cv::ml::TrainData> data_set = cv::ml::TrainData::loadFromCSV(
    csv_file_name,  // Input file name
    0,              // Header lines (ignore this many)
    0,              // Responses start at this column
    1,              // Responses end before this column (inputs follow)
    "cat[0-22]"     // All 23 columns are categorical
  );                // Use defaults for delimiter (',') and missch ('?')

  // Verify that we read in what we think.
  //
  if( data_set.empty() || data_set->getNSamples() == 0 ) {
    cerr <<"Could not read file: " <<csv_file_name <<endl;
    return -1;
  }
  int n_samples = data_set->getNSamples();
  cout <<"Read " <<n_samples <<" samples from " <<csv_file_name <<endl;

  // Split the data, so that 90% is train data (no shuffling)
  //
  data_set->setTrainTestSplitRatio( 0.90, false );
  int n_train_samples = data_set->getNTrainSamples();
  int n_test_samples  = data_set->getNTestSamples();
  cout <<"Found " <<n_train_samples <<" Train Samples, and "
       <<n_test_samples <<" Test Samples" <<endl;

  // Create a DTrees classifier.
  //
  cv::Ptr<cv::ml::DTrees> dtree = cv::ml::DTrees::create();

  // Set parameters
  //
  // These are the parameters from the old mushrooms.cpp code.
  // Set up priors to penalize "poisonous" 10x as much as "edible".
  //
  float _priors[] = { 1.0f, 10.0f };
  cv::Mat priors( 1, 2, CV_32F, _priors );
  dtree->setMaxDepth( 8 );
  dtree->setMinSampleCount( 10 );
  dtree->setRegressionAccuracy( 0.01f );
  dtree->setUseSurrogates( false /* true */ );
  dtree->setMaxCategories( 15 );
  dtree->setCVFolds( 0 /*10*/ );  // nonzero causes core dump
  dtree->setUse1SERule( true );
  dtree->setTruncatePrunedTree( true );
  dtree->setPriors( priors );     // pass cv::Mat() instead to ignore priors

  // Now train the model
  // NB: we are only using the "train" part of the data set
  //
  dtree->train( data_set );

  // Having trained the model, we can calculate the error (as a percentage)
  // on both the training data and the test data that we held out.
  //
  cv::Mat results;
  float train_error = dtree->calcError(
    data_set,
    false,    // use train data
    results   // or cv::noArray()
  );
  std::vector<cv::String> names;
  data_set->getNames( names );
  Mat flags = data_set->getVarSymbolFlags();

  // Compute some statistics on our own:
  //
  {
    cv::Mat expected_responses = data_set->getResponses();
    int good = 0, bad = 0, total = 0;
    for( int i = 0; i < n_train_samples; ++i ) {
      float received = results.at<float>( i, 0 );
      float expected = expected_responses.at<float>( i, 0 );
      cv::String r_str = names[(int)received];
      cv::String e_str = names[(int)expected];
      cout <<"Expected: " <<e_str <<", got: " <<r_str <<endl;
      if( received == expected ) good++; else bad++;
      total++;
    }
    cout <<"Correct answers: "   <<(100.f * good / total) <<"%" <<endl;
    cout <<"Incorrect answers: " <<(100.f * bad  / total) <<"%" <<endl;
  }

  float test_error = dtree->calcError(
    data_set,
    true,     // use test data
    results   // or cv::noArray()
  );
  cout <<"Error on training data: " <<train_error <<"%" <<endl;
  cout <<"Error on test data: "     <<test_error  <<"%" <<endl;

  return 0;
}

We start out by parsing the command line for a single argument, the CSV file to read; if no argument is given, we read the mushroom file by default. We print the OpenCV version number,19 and then continue on to parse the CSV file. This file has no header, but the responses (the 'p' or 'e' labels) are in the first column, so it is important to specify that. Finally, all of the inputs are categorical, so we state that explicitly. Once we have read the CSV file, we state how many samples were read, which is a good way to verify that the reading went well and that you read the file you thought you were reading.

Next, we set the train-test split. In this case, we do so with setTrainTestSplitRatio(), specifying the fraction we would like to be training data: here, 90% (0.90). Also note that the default behavior of the split is to shuffle the data. If we do not want that shuffling, we need to pass false as the second argument. Once this split is done, we print out how many training samples and how many test samples there are.
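The behavior of setTrainTestSplitRatio() can be sketched as follows: optionally shuffle the sample indices, then assign the first fraction to training and the rest to testing. The names SplitIdx and trainTestSplit(), the seed parameter, and the round-to-nearest choice are assumptions made for illustration. OpenCV's own rounding and random number generator may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

struct SplitIdx {
    std::vector<int> train, test;
};

// Puts the first round(ratio * n) sample indices (optionally shuffled)
// in the training set and the remainder in the test set.
SplitIdx trainTestSplit(int n, double ratio, bool shuffle, unsigned seed = 0) {
    assert(n >= 0 && ratio >= 0.0 && ratio <= 1.0);
    std::vector<int> idx(n);
    std::iota(idx.begin(), idx.end(), 0);  // 0, 1, ..., n-1
    if (shuffle) {
        std::mt19937 rng(seed);
        std::shuffle(idx.begin(), idx.end(), rng);
    }
    const int nTrain = static_cast<int>(ratio * n + 0.5);
    SplitIdx s;
    s.train.assign(idx.begin(), idx.begin() + nTrain);
    s.test.assign(idx.begin() + nTrain, idx.end());
    return s;
}
```

Without shuffling, the last 10% of the file always becomes the test set. This is fine if the file's order carries no meaning, but it can bias the estimate if the file is sorted by class.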

Once the data is all prepared, we can go on and create the cv::ml::DTrees object that we will be training. This object is configured with a series of calls to its various set*() methods. Notably, we pass an array of values to setPriors(). This allows us to set the relative weight of missing a poisonous mushroom as opposed to incorrectly marking an edible mushroom as poisonous. The 1.0 comes first and the 10.0 second because e comes before p in the alphabet (and thus, once converted to ASCII and then to a floating-point number, it comes first numerically).

In this example, we simply train DTrees and then use it to predict results on some test data. In a more realistic application, the decision tree may also be saved to disk via save() and loaded via load() (see the following). In this way, it is possible to train a classifier and then distribute the trained classifier in your code, without having to distribute the data (or make your users retrain every time!). The following code shows how to save and load a tree file called tree.xml.

// To save your trained classifier to disk:
//
dtree->save( "tree.xml" );

// To load a trained classifier from disk:
//
dtree = cv::Algorithm::load<cv::ml::DTrees>( "tree.xml" );

// You can also clear an existing trained classifier.
//
dtree->clear();

Note

Using the .xml extension stores an XML data file; if we used a .yml or .yaml extension, it would store a YAML data file. As with other statistical models in the machine learning module, you cannot store multiple objects in a single .xml or .yml file when using save(); for multiple storage, you need to use cv::FileStorage() and operator<<(). However, load() is a different story: its optional second argument names the object to read, so it can load an object by its name even if there is some other data stored in the file.

Decision tree results

By tinkering with the previous code and experimenting with various parameters, we can learn several things about edible or poisonous mushrooms from the agaricus-lepiota.data file. If we just train a decision tree without pruning and without priors, so that it learns the data perfectly, we might get the tree shown in Figure 21-4. Although the full decision tree may learn the training set of data perfectly, remember the lessons from the section “Diagnosing Machine Learning Problems” in Chapter 20 about variance/overfitting. What’s happened in Figure 21-4 is that the data has been memorized along with its mistakes and noise. Such a tree is unlikely to perform well on real data. For this reason, OpenCV decision trees (and CART type trees generally) typically include the additional step of penalizing complex trees and pruning them back until complexity is in balance with performance.20

Figure 21-5 shows a pruned tree that will still do quite well (but not perfectly) on the training set but will probably perform better on real data because it has a better balance between bias and variance. However, the classifier shown has a serious shortcoming: although it performs well on the data, it now labels poisonous mushrooms as edible 1.23% of the time.

Figure 21-4. Full decision tree for poisonous (p) or edible (e) mushrooms: this tree was built out to full complexity for 0% error on the training set and so would probably suffer from variance problems on test or real data

Figure 21-5. Pruned decision tree for poisonous (p) and edible (e) mushrooms. Despite being pruned, this tree shows low error on the training set and would likely work well on real data

As you might imagine, there are big advantages to a classifier that may label many edible mushrooms as poisonous but nevertheless does not invite us to eat a poisonous mushroom! As we saw previously, we can create such a classifier by intentionally biasing (or adding a cost to) the classifier and/or the data. This is what we did in Example 21-1: we added a higher cost for misclassifying poisonous mushrooms than for misclassifying edible mushrooms. By adjusting the priors vector, we imposed a cost into the classifier and, as a result, changed the weighting of how much a “bad” data point counts versus a “good” one. Alternatively, if one did not, or could not, modify the classifier code to change the prior, one can equivalently impose additional cost by duplicating (or resampling from) “bad” data. Duplicating “bad” data points implicitly gives a higher weight to the “bad” data, a technique that can work with almost any classifier.
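The duplication approach can be sketched generically in plain C++ using a hypothetical function, oversampleClass(). For a classifier that counts every training sample equally, replicating each sample of the costly class factor times has roughly the same effect as the 10× priors in Example 21-1. The price is a larger training set.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Appends (factor - 1) extra copies of every sample whose label is
// costlyLabel, so each such sample now counts factor times in training.
template <typename Sample>
void oversampleClass(std::vector<Sample>& X, std::vector<int>& y,
                     int costlyLabel, int factor) {
    assert(X.size() == y.size() && factor >= 1);
    const std::size_t n = X.size();  // scan only the original samples
    for (std::size_t i = 0; i < n; ++i) {
        if (y[i] != costlyLabel) continue;
        const Sample copy = X[i];  // copy first: push_back may reallocate X
        for (int k = 1; k < factor; ++k) {
            X.push_back(copy);
            y.push_back(costlyLabel);
        }
    }
}
```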

Figure 21-6 shows a tree where a 10× bias was imposed against poisonous mushrooms. This tree makes no mistakes on poisonous mushrooms at a cost of many more mistakes on edible mushrooms, a case of “better safe than sorry.” Confusion matrices for the (pruned) unbiased and biased trees are shown in Figure 21-7.

Figure 21-6. An edible mushroom decision tree with 10× bias against misidentification of poisonous mushrooms as edible; note that the lower-right rectangle, though containing a vast majority of edible mushrooms, does not contain a 10× majority and so would be classified as inedible

Figure 21-7. Confusion matrices for (pruned) edible mushroom decision trees: the unbiased tree yields better overall performance (top panel) but sometimes misclassifies poisonous mushrooms as edible; the biased tree does not perform as well overall (lower panel) but never misclassifies poisonous mushrooms
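Confusion matrices like those in Figure 21-7 are simple to compute from true and predicted labels. In this sketch (hypothetical names Confusion and confusion()), rows are true classes and columns are predicted classes, with 0 = edible and 1 = poisonous. The cell to watch is therefore m[1][0]: poisonous mushrooms predicted edible.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// m[t][p] counts samples whose true class is t and predicted class is p,
// with 0 = edible (e) and 1 = poisonous (p).
using Confusion = std::array<std::array<int, 2>, 2>;

Confusion confusion(const std::vector<int>& truth, const std::vector<int>& pred) {
    assert(truth.size() == pred.size());
    Confusion m{};  // all counts start at zero
    for (std::size_t i = 0; i < truth.size(); ++i) {
        assert((truth[i] == 0 || truth[i] == 1) && (pred[i] == 0 || pred[i] == 1));
        ++m[truth[i]][pred[i]];
    }
    return m;
}
```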

Boosting

Decision trees are extremely useful but, used by themselves, are often not the best-performing classifiers. In this and the next section we present two techniques, boosting and random trees, that use trees in their inner loop and so inherit many of the trees’ useful properties (e.g., being able to deal with mixed and unnormalized data types, categorical or ordered). These techniques typically perform at or near the state of the art; thus they are often the best “out of the box” supervised classification techniques available in the library.21

In the field of supervised learning there is a meta-learning approach called statistical boosting, which grew out of a question posed by Michael Kearns in 1988. Kearns wondered whether it was possible to learn a strong classifier out of many weak classifiers. The output of a “weak classifier” is only weakly correlated with the true classifications, whereas that of a “strong classifier” is strongly correlated with true classifications. Thus, weak and strong are defined in a statistical sense.

The best-known boosting algorithm, AdaBoost, was later formulated by Freund and Schapire [Freund97]. Subsequently, other variations of this original boosting algorithm were developed. OpenCV ships with the four types of boosting listed in Table 21-2.

| Boosting method | OpenCV enum value |
|---|---|
| Discrete AdaBoost | cv::ml::Boost::DISCRETE |
| Real AdaBoost | cv::ml::Boost::REAL |
| LogitBoost | cv::ml::Boost::LOGIT |
| Gentle AdaBoost | cv::ml::Boost::GENTLE |

Of these, one often finds that the (only slightly different) “real” and “gentle” variants of AdaBoost work best. Real AdaBoost is a technique that utilizes confidence-rated predictions and works well with categorical data. Gentle AdaBoost puts less weight on outlier data points and for that reason is often good with regression data. LogitBoost can also produce good regression fits. Because you need only set a flag, there’s no reason not to try all types on a data set and then select the boosting method that works best.22 Here we’ll describe the original AdaBoost. For classification it should be noted that, as implemented in OpenCV, boosting is a two-class (yes or no) classifier,23 unlike the decision tree or random tree classifiers, which can handle multiple classes at once.

One word of warning: though in theory LogitBoost and Gentle AdaBoost (referenced previously, and in the subsection “Boosting code”) can be used to perform regression in addition to binary classification, boosting as currently implemented in OpenCV can only be trained for classification.

AdaBoost

Boosting algorithms are used to train $T$ weak classifiers $h_t$, $t \in \{1, \ldots, T\}$. These classifiers are generally very simple individually. In most cases these classifiers are decision trees with only one split (called decision stumps) or at most a few levels of splits (perhaps up to three). Each of the classifiers is assigned a weighted vote $\alpha_t$ in the final decision-making process for the resulting final strong classifier. We use a labeled data set of input feature vectors $x_i$, each with scalar label $y_i$ (where $i = 1, \ldots, M$ indexes the data points). For AdaBoost the label is binary, $y_i \in \{-1, +1\}$, though it can be any floating-point number in other algorithms. We also initialize a data point weighting distribution $D_t(i)$; this tells the algorithm how much misclassifying a data point will “cost.” The key feature of boosting is that, as the algorithm progresses, this cost will evolve so that weak classifiers trained later will focus on the data points that the earlier trained weak classifiers tended to do poorly on. The algorithm is as follows:

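1. $D_1(i) = 1/m$, $i = 1, \dots, m$.

2. For $t = 1, \dots, T$:

   a. Find the classifier $h_t$ that minimizes the $D_t(i)$ weighted error:

   $$h_t = \arg\min_{h_j \in \mathcal{H}} \varepsilon_j, \quad \text{where} \quad \varepsilon_j = \sum_{i=1}^{m} D_t(i)\,[y_i \neq h_j(x_i)]$$

   Here $\mathcal{H}$ is the set of candidate weak classifiers, and $[\cdot]$ is 1 when its argument is true and 0 otherwise. Keep $h_t$ as long as $\varepsilon_t < 0.5$; else quit.

   b. Set the $h_t$ “voting weight” $\alpha_t = \frac{1}{2}\ln\frac{1 - \varepsilon_t}{\varepsilon_t}$, where $\varepsilon_t$ is the argmin error from Step 2a.

   c. Update the data point weights:

   $$D_{t+1}(i) = \frac{D_t(i)\,\exp\left(-\alpha_t\, y_i\, h_t(x_i)\right)}{Z_t}$$

   Here, $Z_t$ normalizes the equation over all data points $i$, so that $\sum_{i=1}^{m} D_{t+1}(i) = 1$.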

Note that, in Step 2a, if we can’t find a classifier with less than a 50% error rate then we quit; we probably need better features.
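A small worked example shows how Steps 2b and 2c shift attention toward the mistakes. Suppose, purely for illustration, that the best weak classifier in some round has weighted error $\varepsilon_t = 0.2$. The correctly classified points therefore carry total weight $1 - \varepsilon_t = 0.8$ under $D_t$:

```latex
\begin{aligned}
\alpha_t &= \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}
          = \tfrac{1}{2}\ln\frac{0.8}{0.2} = \tfrac{1}{2}\ln 4 = \ln 2 \approx 0.693 \\[4pt]
e^{-\alpha_t y_i h_t(x_i)} &=
  \begin{cases}
    e^{-\ln 2} = \tfrac{1}{2} & \text{if } h_t(x_i) = y_i \text{ (correct)} \\
    e^{\ln 2} = 2             & \text{if } h_t(x_i) \neq y_i \text{ (wrong)}
  \end{cases} \\[4pt]
Z_t &= \underbrace{0.8 \cdot \tfrac{1}{2}}_{\text{correct}}
     + \underbrace{0.2 \cdot 2}_{\text{wrong}} = 0.4 + 0.4 = 0.8 \\[4pt]
\sum_{\text{correct}} D_{t+1}(i) &= \frac{0.4}{0.8} = 0.5, \qquad
\sum_{\text{wrong}} D_{t+1}(i) = \frac{0.4}{0.8} = 0.5
\end{aligned}
```

After reweighting, $h_t$ has a weighted error of exactly 0.5 under $D_{t+1}$. As a result, the next weak classifier gains nothing by simply repeating it.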

When the training algorithm just described is finished, the final strong classifier takes a new input vector $x$ and classifies it using a weighted sum over the learned weak classifiers $h_t$:

$$H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t\, h_t(x)\right)$$

Here, the sign function converts anything positive into a 1 and anything negative into a -1 (zero remains 0). For performance reasons, the leaf values of the just-trained $t$th decision tree are scaled by $\alpha_t$. $H(x)$ then reduces to the sign of the sum of weak classifier responses on $x$.
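To make the training loop and the final weighted vote concrete, here is a minimal, self-contained sketch of discrete AdaBoost in plain C++ (no OpenCV). It uses one-dimensional decision stumps as the weak classifiers. The names Stump, trainAdaBoost, and classify are hypothetical. Two further choices are assumptions of this sketch: the exhaustive threshold search over the training values, and the small floor on $\varepsilon_t$ that keeps $\alpha_t$ finite when a stump is perfect. This is not how cv::ml::Boost is implemented internally.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical one-split decision tree ("stump") on a scalar feature:
// it predicts `polarity` when x < threshold and -polarity otherwise.
struct Stump {
    double threshold = 0.0;
    int polarity = 1;      // +1 or -1
    double alpha = 0.0;    // voting weight alpha_t, set during training
    int predict(double x) const { return x < threshold ? polarity : -polarity; }
};

// Discrete AdaBoost with stumps; labels y[i] must be -1 or +1.
std::vector<Stump> trainAdaBoost(const std::vector<double>& x,
                                 const std::vector<int>& y, int T) {
    const std::size_t m = x.size();
    std::vector<double> D(m, 1.0 / m);             // Step 1: D_1(i) = 1/m
    std::vector<Stump> ensemble;

    for (int t = 0; t < T; ++t) {
        // Step 2a: pick the stump with the smallest D_t-weighted error,
        // trying every training value as a threshold and both polarities.
        Stump best;
        double bestErr = std::numeric_limits<double>::max();
        for (std::size_t c = 0; c < m; ++c) {
            for (int pol : {1, -1}) {
                Stump s;
                s.threshold = x[c];
                s.polarity = pol;
                double err = 0.0;
                for (std::size_t i = 0; i < m; ++i)
                    if (s.predict(x[i]) != y[i]) err += D[i];
                if (err < bestErr) { bestErr = err; best = s; }
            }
        }
        if (bestErr >= 0.5) break;                 // no better than chance: quit

        // Step 2b: voting weight (the floor on the error keeps alpha finite).
        const double eps = std::max(bestErr, 1e-10);
        best.alpha = 0.5 * std::log((1.0 - eps) / eps);

        // Step 2c: reweight the data points, then normalize by Z_t.
        double Z = 0.0;
        for (std::size_t i = 0; i < m; ++i) {
            D[i] *= std::exp(-best.alpha * y[i] * best.predict(x[i]));
            Z += D[i];
        }
        for (double& d : D) d /= Z;

        ensemble.push_back(best);
    }
    return ensemble;
}

// Strong classifier H(x) = sign(sum_t alpha_t * h_t(x)), with sign(0) = 0.
int classify(const std::vector<Stump>& ensemble, double x) {
    double sum = 0.0;
    for (const Stump& s : ensemble) sum += s.alpha * s.predict(x);
    return (sum > 0.0) - (sum < 0.0);
}
```

Consider the toy data x = 1, ..., 6 with labels +1, +1, -1, -1, +1, +1. No single stump can classify it correctly everywhere; the first round settles for the constant +1 classifier. After three rounds, however, the weighted vote classifies all six training points correctly.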

Boosting code

The code for boosting is similar to the code for decision trees, and the cv::ml::Boost class is derived from cv::ml::DTrees with a few extra control parameters. As we have seen elsewhere in the library, when you call cv::ml::Boost::create(), you will get back an object of type cv::Ptr<cv::ml::Boost>, but this object will actually be a pointer to an object of the (generally invisible) cv::ml::BoostImpl class type.

```cpp
// Somewhere above...
// namespace cv {
// namespace ml {
//
class BoostImpl : public Boost {  // cv::ml::Boost is derived from cv::ml::DTrees

public:

  // types of boosting
  // (Inherited from cv::ml::Boost)
  //
  //enum Types {
  //  DISCRETE = 0,
  //  REAL     = 1,
  //  LOGIT    = 2,
  //  GENTLE   = 3
  //};

  // get/set one of boosting types:
  //
  int    getBoostType() const;           // get type: DISCRETE, REAL, LOGIT, GENTLE
  int    getWeakCount() const;           // get the number of weak classifiers
  double getWeightTrimRate() const;      // get the trimming rate, see text

  void   setBoostType(int val);          // set type: DISCRETE, REAL, LOGIT, GENTLE
  void   setWeakCount(int val);          // set the number of weak classifiers
  void   setWeightTrimRate(double val);  // set the trimming rate, see text

  ...

  // from class Boost
  //
  //static Ptr<Boost> create();  // The algorithm "constructor",
  //                             // returns Ptr<BoostImpl>
};
```

The member setWeakCount() sets the number of weak classifiers that will be used to form the final strong classifier. The default value for this number is 100.

The number of weak classifiers is distinct from the maximum complexity that is allowed to each of the individual classifiers. The latter is controlled by setMaxDepth(), which sets the maximum number of layers that an individual weak classifier can have. As mentioned earlier, a value of 1 is common, in which case these little trees are just “stumps” and contain only a single decision.

The next parameter, the weight trim rate, is used to make the computation more efficient and therefore much faster. As training goes on, many data points become unimportant; that is, the weight $D_t(i)$ for the $i$th data point becomes very small. The setWeightTrimRate() function sets a threshold, between 0 and 1 (inclusive), that is implicitly used to throw away some training samples in a given boosting iteration. For example, suppose the weight trim rate is set to 0.95 (the default value). This means that the “heaviest” samples (i.e., samples that have the largest weights) with a total weight of at least 95% are accepted into the next iteration of training. The remaining “lightest” samples, with a total weight of at most 5%, are temporarily excluded from the next iteration. Note the words “from the next iteration”: the samples are not discarded forever. When the next weak classifier is trained, the weights are computed for all samples, so some previously insignificant samples may be returned to the next training set. Typically, because of the trimming, only about 20% of samples or so take part in each individual round of training, and therefore the training accelerates by a factor of 5 or so. To turn this functionality off, call setWeightTrimRate(1.0).
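The selection rule just described can be sketched in a few lines of standard C++. This is a minimal illustration of the rule as stated in the text, assuming the weights are available in a plain vector. The function name trimByWeight is hypothetical, and OpenCV's internal implementation may compute the cutoff differently. The sketch sorts samples from heaviest to lightest and keeps them until their combined weight reaches trimRate times the total.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: returns the indices of the "heaviest" samples whose
// weights add up to at least trimRate * (total weight). The lightest samples
// are left out, but only for the next boosting iteration.
std::vector<std::size_t> trimByWeight(const std::vector<double>& w, double trimRate) {
    // Sample indices, sorted so that the heaviest samples come first.
    std::vector<std::size_t> idx(w.size());
    std::iota(idx.begin(), idx.end(), std::size_t{0});
    std::sort(idx.begin(), idx.end(),
              [&w](std::size_t a, std::size_t b) { return w[a] > w[b]; });

    if (trimRate >= 1.0) return idx;   // a rate of 1.0 disables trimming

    const double target = trimRate * std::accumulate(w.begin(), w.end(), 0.0);
    std::vector<std::size_t> kept;
    double sum = 0.0;
    for (std::size_t i : idx) {
        // Stop once the kept samples carry at least trimRate of the total
        // weight (but always keep at least one sample).
        if (sum >= target && !kept.empty()) break;
        kept.push_back(i);
        sum += w[i];
    }
    return kept;
}
```

For example, take weights {0.0625, 0.5, 0.125, 0.25, 0.0625} and a rate of 0.9. The function keeps four samples, because the three heaviest add up to only 0.875. At a rate of 0.75, the two heaviest samples (0.5 + 0.25) already suffice.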

For other parameters, note that cv::ml::BoostImpl inherits (via cv::ml::Boost) from cv::ml::DTrees, so we may set the parameters that are related to the decision trees themselves through the inherited interface functions. Overall, training of the boosting model and then running prediction is done in precisely the same way as with cv::ml::DTrees or essentially any other StatModel-derived class from the ml module.

The code .../opencv/samples/cpp/letter_recog.cpp from the OpenCV package shows an example of the use of boosting. The training code snippet is shown in Example 21-2. This example uses the classifier to try to recognize a–z characters, starting with a public data set. That data set has 20,000 entries, each with 16 features and one “result.” The features are floating-point numbers and the result is a single character. Because boosting can only be used for two-class discrimination, this program uses the “unrolling” technique that we (briefly) encountered earlier. We will discuss that technique in more detail here.

In unrolling, the data set is essentially expanded from one set of training data to 26 sets, each of which is extended such that what was once the response is now added as a feature. At the same time, the new responses for these extended vectors are now just 1 or 0: true or false. In this way the classifier is being trained to, in effect, answer the question: is the letter $y$ belonging to feature vector $x$ equal to the candidate letter $c$? It does so by learning the relationships $(x, c) \to 1$ when $c = y$ and $(x, c) \to 0$ when $c \neq y$.24 See Example 21-2.
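Independently of Example 21-2, the unrolling transformation itself can be sketched in plain C++. This is a minimal illustration, assuming samples are stored as rows of floats and that labels have already been converted to class indices 0, 1, ..., classCount - 1 (for letters, subtracting 'A' would do this). The names UnrolledSet and unroll are hypothetical. Each original sample becomes classCount rows, and exactly one of those rows gets the response 1.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical container for the unrolled data: each row holds the original
// features plus one candidate class, and the response is 1 only if that
// candidate is the sample's true class.
struct UnrolledSet {
    std::vector<std::vector<float>> rows;
    std::vector<int> responses;
};

UnrolledSet unroll(const std::vector<std::vector<float>>& samples,
                   const std::vector<int>& labels, int classCount) {
    UnrolledSet out;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        for (int c = 0; c < classCount; ++c) {
            std::vector<float> row = samples[i];     // copy the original features
            row.push_back(static_cast<float>(c));    // append the candidate class
            out.rows.push_back(row);
            out.responses.push_back(labels[i] == c ? 1 : 0);
        }
    }
    return out;
}
```

With 26 classes, each sample therefore contributes 1 positive row and 25 negative rows. That 25:1 imbalance is what the priors in the training code compensate for.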

Example 21-2. Training snippet for boosted classifiers

```cpp
...
cv::Mat var_type( 1, var_count + 2, CV_8U );  // var_count is # features (16 here)
var_type.setTo( cv::Scalar::all(cv::ml::VAR_ORDERED) );
var_type.at<uchar>(var_count) = var_type.at<uchar>(var_count+1) = cv::ml::VAR_CATEGORICAL;
...
```

The first thing to do is to create the array var_type that indicates how to treat each feature and the results (Example 21-2). Note that var_type is one entry wider than you might expect. It covers the var_count features from the original data (in this case, 16) and the one extra column for the response. It also covers one more column in between: the extension of the features that holds what was once the alphabet-character response (before the unrolling). Then the training data structure is created:

```cpp
cv::Ptr<cv::ml::TrainData> tdata = cv::ml::TrainData::create(
  new_data,            // extended, 26x as many vectors, each contains y_i
  cv::ml::ROW_SAMPLE,  // feature vectors are stored as rows
  new_responses,       // extended, 26x as many vectors, true or false
  cv::noArray(),       // active variable index, here just "all"
  cv::noArray(),       // active sample index, here just "all"
  cv::noArray(),       // sample weights, here just "all the same"
  var_type             // extended, has 16+2 entries now
);
```

The next thing to do is to construct the classifier. Most of this is pretty usual stuff; the one unusual element is the priors. Note that the cost of getting a wrong answer on a true proposition has been inflated to 25 times the cost of getting a wrong answer on a false one. This is not because some letters are poisonous, but because of the unrolling. What this is saying is that it is 25 times costlier to say that a letter is not something that it is than to say that it is something that it is not. This needs to be done because there are 25× more vectors effectively enforcing the “negative” rules, so the “positive” rules need correspondingly more weight.25

```cpp
std::vector<double> priors(2);
priors[0] = 1;   // For false (0) answers
priors[1] = 25;  // For true (1) answers

cv::Ptr<cv::ml::Boost> model = cv::ml::Boost::create();
model->setBoostType( cv::ml::Boost::GENTLE );
model->setWeakCount( 100 );
model->setWeightTrimRate( 0.95 );
model->setMaxDepth( 5 );
model->setUseSurrogates( false );

std::cout << "Training the classifier (may take a few minutes)... ";
model->setPriors( cv::Mat(priors) );
model->train( tdata );
```

The prediction function for boosting is also similar to that for decision trees, in this case using model->predict(). As described earlier, in the context of boosting, this method computes the weighted sum of weak classifier responses, takes the sign of the sum, and then converts it to the output class label. In some cases, it may be useful to get the actual sum value—for example, to evaluate how confident the decision is. In order to do that, pass the cv::ml::StatModel::RAW_OUTPUT flag to the predict method. In fact, that is what needs to be done in this case. When dealing with unrolled data, it is not so rare to get two (or more) “true” responses. In this case, one typically chooses the most confident answer.

```cpp
cv::Mat temp_sample( 1, var_count + 1, CV_32F );  // An extended sample "proposition"
float* tptr = temp_sample.ptr<float>();           // Pointer to start of proposition
double correct_train_answers = 0, correct_test_answers = 0;

for( int i = 0; i < nsamples_all; i++ ) {
  int    best_class = 0;                    // Strongest proposition found so far
  double max_sum    = -DBL_MAX;             // Strength of current best prop
  const float* ptr  = data.ptr<float>(i);   // Points at current sample

  // Copy features from current sample into temp extended sample
  //
  for( int k = 0; k < var_count; k++ ) tptr[k] = ptr[k];

  // Add class to sample proposition, then make a prediction for this proposition.
  // If this proposition is more true than any previous one, then record this
  // one as the new "best".
  //
  for( int j = 0; j < class_count; j++ ) {
    tptr[var_count] = (float)j;
    float s = model->predict(
      temp_sample, cv::noArray(), cv::ml::StatModel::RAW_OUTPUT
    );
    if( max_sum < s ) { max_sum = s; best_class = j + 'A'; }
  }

  // If the strongest (truest) proposition matched the correct response, then
  // score 1, else 0.
  //
  double r = std::abs( best_class - responses.at<int>(i) ) < FLT_EPSILON ? 1 : 0;

  // If we are still in the train samples, record one more correct train result.
  // Otherwise, record one more correct test result.
  // Hope nobody shuffled the samples!
  //
  if( i < ntrain_samples )
    correct_train_answers += r;
  else
    correct_test_answers += r;
}
```

Of course, this isn’t the fastest or most convenient method of dealing with many-class problems. Random trees may be a preferable solution, and we will consider them next.

Random Trees

OpenCV contains a random trees class, which is implemented following Leo Breiman’s theory of random forests.26 Random trees can learn more than one class at a time simply by collecting the class “votes” at the leaves of each of many trees and selecting the class receiving the maximum votes as the winner. We perform regression by averaging the values across the leaves of the “forest.” Random trees consist of randomly perturbed decision trees and were among the best-performing classifiers on data sets studied while the ML library was being assembled. Random trees also have the potential for parallel implementation, even on nonshared-memory systems, a feature that lends itself to increased use in the future. The basic subsystem on which random trees are built is once again a decision tree. This decision tree is built all the way down until it’s pure. Thus (see the upper-right panel of Figure 20-3), each tree is a high-variance classifier that nearly perfectly learns its training data. To counterbalance the high variance, we average together many such trees (hence the name “random trees”).
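The two aggregation rules described above can be sketched in plain C++. In classification, the class with the most votes wins; in regression, the leaf values are averaged. This is a minimal illustration that treats each tree as already trained and represents it only by its output. The function names majorityVote and averageLeaves are hypothetical and not part of the OpenCV API. The tie-breaking rule (the smallest label wins) is an assumption of this sketch.

```cpp
#include <map>
#include <numeric>
#include <vector>

// Classification: each tree casts one vote (its predicted class label) and
// the label with the most votes wins. Assumes at least one vote; on a tie,
// the smallest label wins because std::map visits labels in ascending order.
int majorityVote(const std::vector<int>& treeVotes) {
    std::map<int, int> counts;                 // class label -> number of votes
    for (int v : treeVotes) ++counts[v];
    int best = 0, bestCount = 0;
    for (const auto& kv : counts) {
        if (kv.second > bestCount) { best = kv.first; bestCount = kv.second; }
    }
    return best;
}

// Regression: average the leaf values returned by the trees (assumes at
// least one tree).
double averageLeaves(const std::vector<double>& treeOutputs) {
    return std::accumulate(treeOutputs.begin(), treeOutputs.end(), 0.0)
           / treeOutputs.size();
}
```

Averaging is what tames the high variance of the individual, fully grown trees. Each tree may fit its own training sample almost perfectly, but their errors tend to cancel in the vote or the mean.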