C H A P T E R 13

Logging and Auditing

Uncontrolled access to data, with no audit trail of activity and no oversight would be going too far.

—US Naval Officer John Poindexter

A couple of key advantages provided by enterprise systems, which are often overlooked until the auditors start poking around, are process logging,auditing, and data lineage. The conceptsare simple: when you move data from system to system and manipulate it along the way,you might need to keep track of where it came from, maintain summary information about data processing, and know which processes touched that data along the way. There are a few reasons for keeping track of this additional information:

- You may need to keep it for troubleshooting purposes, to trace data issues backward through your processes to their source.

- The legal department may have mandated a new legal requirement to document every step of processing in real-time in the event of issues.

- Your business may fall under a regulation such asSarbanes-Oxley Act, SOX, or Health Insurance Portability and Accountability Act ,HIPAA, that requires high levels of security and controls around confidential medical or consumer data.

SSIS provides extensive standard logging functionality that captures runtime information about the status of ETL processing. In addition, it is relatively simple to add auditing capabilities to your enterprise ETL applications with SSIS. In this chapter, you’ll look at ways to take advantage of standard logging and ways to add auditing to your SSIS packages.

Logging

SSIS provides built-in logging capabilities that are easy to enable. In previous chapters, we’ve enabled this feature in some code samples to demonstrate SSIS’s logging capabilities. In this section, we’ll review how to enable and configure logging in your SSIS packages. We’ll also talk about SSIS logging best practices.

Enabling Logging

Logging options in SSIS are accessed at the package level by right-clicking on the control flow and choosing the Logging option from the pop-up context menu, as shown in Figure 13-1.

Figure 13-1. Selecting the Logging option from the pop-up menu

The logging configuration screen allows you to configure SSIS logging options, as shown in Figure 13-2. In this screen, you can set the following logging options:

- The SSIS log provider to use. The most common options are the SSIS log provider for SQL Server, which logs to a SQL Server table, and the SSIS log provider for text files, which outputs logging information to text files. You can, however, choose to log information to the Windows event log, XML files, or SQL Server profiler trace files. The latter options are generally used for specialized troubleshooting tasks.

- The containers for which events should be logged. You can configure logging of specific events at the package, container, and Data Flow task levels. In our example, we’re going to configure at both the package and Data Flow task levels.

- The details you would like logged at the event level. You choose these details from the Details tab.

![]() NOTE: As a general rule, we tend to prefer the SSIS log provider for SQL Server over other logging methods. Becausethis log provider logs to a SQL Server database table, the logged information is easy to query, simplifying troubleshooting exercises. When you designate the SSIS log provider for SQL Server, it will automatically generate the

NOTE: As a general rule, we tend to prefer the SSIS log provider for SQL Server over other logging methods. Becausethis log provider logs to a SQL Server database table, the logged information is easy to query, simplifying troubleshooting exercises. When you designate the SSIS log provider for SQL Server, it will automatically generate the dbo.sysssislog table and dbo.sp_ssis_addlogentry procedure in your database (if they don’t exist), both of which it marks as system objects.

Figure 13-2. SSIS logging configuration screen

To configure logging, choose the package, or the containers or tasks in the package, for which you want logging turned on. Then choose a log provider and click the Add button. In Figure 13-3, we’ve added the SSIS log provider for SQL Server to a package and turned on logging at the package level. For many log providers, you must choose a connection from the Configuration drop-down. In the figure, we’ve selected an OLE DB connection named LOG, which is pointed at a SQL Server database we wish to log to.

![]() NOTE: When we configure the SSIS log provider for SQL Server, we prefer to create an OLE DB Connection Manager specifically for the log provider connection. This keeps the logging information and data connections separate. One of the main reasons to do this is to avoid the occasional “Connection is busy with results for another command” error, which can be difficult to troubleshoot in some cases.

NOTE: When we configure the SSIS log provider for SQL Server, we prefer to create an OLE DB Connection Manager specifically for the log provider connection. This keeps the logging information and data connections separate. One of the main reasons to do this is to avoid the occasional “Connection is busy with results for another command” error, which can be difficult to troubleshoot in some cases.

Figure 13-3. Configuring the SSIS log provider for SQL Server

In our example, we’ve enabled logging at the package level, but we did not change settings for the Data Flow task contained in the package. In this case, all containers and tasks in the package inherit their logging settings from the package as indicated by the grayed-out checkboxes. To configure the events that are logged, choose the Details tab of the logging configuration page. All the events you can log are listed, as shown in Figure 13-4.

Figure 13-4. Selecting events to log

You can also choose the detailed information to log for each event by clicking the Advanced button, as shown in Figure 13-5. The default logs all relevant information for every event. Unless you have a specific reason to eliminate some data from the logs, the default is normally adequate.

Figure 13-5. Selecting data to log for each event

Choosing Log Events

When configuring logging, one of the things to keep in mind is that each logged event consumes some resources. In the case of the SSIS log provider for SQL Server, each event requires a call to a SQL Server stored procedure, which then writes to a table in the database. Logging large quantities of events can slow down packages and require large amounts of storage.

Although all of the SSIS events provide some level of insight into your package executions, some are better saved for specialized debugging situations. Therefore, we recommend limiting the events you log in production settings. There is a performance and storage hit associated with logging too much information, and when troubleshooting, you can encounter “information overload” if you choose to log too much information. The following list indicates the core events we recommend capturing in a production environment. Note that this list is just a starting point, and you may encounter a need to capture other events in your production environments:

Events we recommend avoiding include OnInformation, OnProgress, and Diagnostic, amongothers. Although often useful in debugging and test scenarios, these events in particular log a very high ratio of “noise” to useful information. They tend to clutter up the log and complicate troubleshooting while adversely affecting performance to varying degrees.

On SQL Logging

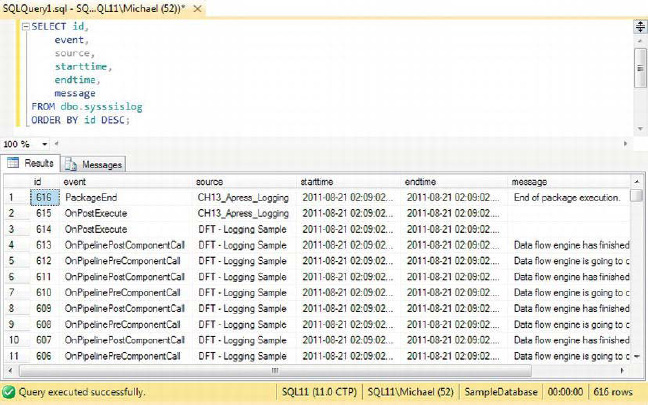

As mentioned previously in this chapter, we tend to handle our SSIS logging needs with the SSIS log provider for SQL Server. This log provider relies on two database objects to log information: a stored procedure named dbo.sp_ssis_addlogentry and a table named dbo.sysssislog, both marked as system objects. SSIS calls the stored procedure every time an event occurs. The procedure, in turn, writes an entry to the dbo.sysssislog table. The SSIS log table can be queried to retrieve relevant information with a query similar to the one shown in Figure 13-6.

Figure 13-6. Querying the SSIS log table

Summary Auditing

Summary auditing encompasses grabbing and storing abridged processing information for the SSIS packages. As part of auditing, we generally store package auditing information that can be grabbed from system variables in the SSIS package, start and end times, and other summary execution information.

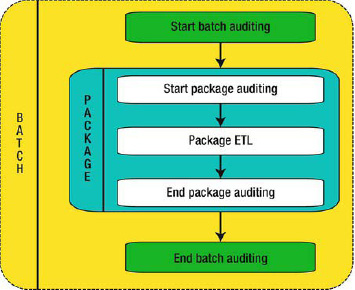

The easiest way to enable auditing is to create and call stored procedures that start and end your auditing processes.In the model the authors normally employ, we like to divide the process into batch-level and package-level auditing. Figure 13-7 is a logical representation of this two-part batch/package auditing process. Note that a batch can encompass one or more packages.

![]() NOTE: Multiple packages can be wrapped in a single batch by using the Execute Package task to implement the parent-child SSIS design pattern.

NOTE: Multiple packages can be wrapped in a single batch by using the Execute Package task to implement the parent-child SSIS design pattern.

Figure 13-7. Logical view of batch/package auditing

Batch-Level Auditing

Batch-level auditing encompasses all of the packages executed during a given run. At the batch level, we record the start time and end time of the entire batch, as well as summary information about the tables in the database. For security purposes, and for simplified management, the implementation of our auditing processes puts its database objects in a new schema. In this instance, we named it Audit:

IF SCHEMA_ID(N'Audit') IS NULL

EXEC(N'CREATE SCHEMA Audit;')

GO

We first create a table named AuditBatch, which contains batch-level auditing information including the start and end times for a given batch. You can use the information stored in the AuditBatch table to determine the performance of an entire batch of packages, and to wrap up log entries that belong to a single batch execution. Here is the code we are executing:

IF OBJECT_ID(N'Audit.AuditBatch') IS NOT NULL

DROP TABLE Audit.AuditBatch;

GO

CREATE TABLE Audit.AuditBatch

(

BatchID BIGINT NOT NULL IDENTITY(1, 1),

BatchStartTime DATETIMEOFFSET,

BatchEndTime DATETIMEOFFSET,

BatchElapsedTimeMS AS (DATEDIFF(MILLISECOND, BatchStartTime, BatchEndTime)),

BatchStatus NVARCHAR(20),

CONSTRAINT PK_AUDIT_AUDITBATCH PRIMARY KEY CLUSTERED

(

BatchID )

);

GO

We also create, in this next example, a batch-level auditing table called AuditTable, which captures state information for tables in the database including the number of rows in each table and how much space each table consumes. The information stored in this table can be used to determine resource usage for a given batch process. You can use this raw data to estimate long-term storage requirements, for instance.

IF OBJECT_ID(N'Audit.AuditTable') IS NOT NULL

DROP TABLE Audit.AuditTable;

GO

CREATE TABLE Audit.AuditTable

(

TableAuditID BIGINT NOT NULL IDENTITY(1, 1),

BatchID BIGINT NOT NULL,

BatchStartFlag BIT NOT NULL,

TableSchema NVARCHAR(128),

TableName NVARCHAR(128),

TableObjectID INT,

TableReservedKB FLOAT,

TableDataKB FLOAT,

TableIndexKB FLOAT,

TableUnusedKB FLOAT,

TableRows INT,

TableDataBytesPerRow INT,

CONSTRAINT PK_AUDIT_AUDITTABLE PRIMARY KEY CLUSTERED

(

TableAuditID

)

);

GO

To simplify our upcoming stored procedure code, we capture the table size data in a view called SummaryTableData. For speed purposes, we use the sys.partitions catalog view to grab row counts from tables:

IF OBJECT_ID(N'Audit.SummaryTableData') IS NOT NULL

DROP VIEW Audit.SummaryTableData;

GO

CREATE VIEW Audit.SummaryTableData

AS

WITH CTE

AS

(

SELECT OBJECT_SCHEMA_NAME(p.object_id) AS table_schema,

OBJECT_NAME(p.object_id) AS table_name,

p.object_id,

SUM(a.total_pages) AS reserved_pages,

SUM(a.used_pages) AS used_pages,

SUM(CASE

WHEN it.internal_type IN (202, 204, 207, 211, 212, 213, 214, 215, 216, 221, 222)

THEN 0

WHEN a.type <> 1 AND p.index_id < 2 THEN a.used_pages

WHEN p.index_id < 2 THEN a.data_pages

ELSE 0

END

) AS pages,

(

SELECT SUM(p1.rows)

FROM sys.partitions p1

WHERE p1.index_id in (0,1)

AND p1.object_id = p.object_id

) AS rows

FROM sys.partitions p

INNER JOIN sys.tables t

ON p.object_id = t.object_id

INNER JOIN sys.allocation_units a

ON p.partition_id = a.container_id

LEFT JOIN sys.internal_tables it

ON p.object_id = it.object_id

GROUP BY OBJECT_SCHEMA_NAME(p.object_id),

OBJECT_NAME(p.object_id),

p.object_id

)

SELECT table_schema,

table_name,

object_id,

reserved_pages * 8192 / 1024.0 AS reserved_kb,

pages * 8192 / 1024.0 AS data_kb,

(used_pages - pages) * 8192 / 1024.0 AS index_kb,

(reserved_pages - used_pages) * 8192 / 1024.0 AS unused_kb,

rows,

pages * 8192 / CASE rows

WHEN 0.0 THEN NULL

ELSE rows

END AS data_bytes_per_row

FROM CTE;

GO

![]() TIP: The row counts in the

TIP: The row counts in the sys.partitions catalog view are technically approximations, but the instances in which they could be incorrect are indicated to be rare. We have it on good authority that if your row count in sys.partitions is off considerably, you should report it to Microsoft because it may be an issue that needs to be investigated. We use it here because it is considerably faster than the SELECT COUNT(*) method of obtaining table row counts.

Our view calculates the space used by a table by using a method that is very similar to the legacy sp_spaceused system stored procedure. Finally, we create two procedures to start and end batch auditing (StartAuditBatch and EndAuditBatch). The initial call to StartAuditBatch returns a unique batch ID number after each call and stores initial state information for the tables in the database. The EndAuditBatch procedure in the following example accepts this previouslygenerated batch ID number and closes out the batch information.

IF OBJECT_ID(N'Audit.StartAuditBatch') IS NOT NULL

DROP PROCEDURE Audit.StartAuditBatch;

GO

CREATE PROCEDURE Audit.StartAuditBatch @BatchID BIGINT OUTPUT

AS

BEGIN

SET NOCOUNT ON;

INSERT INTO Audit.AuditBatch

(

BatchStartTime,

BatchStatus

)

VALUES

(

SYSDATETIMEOFFSET(),

N'STARTED'

);

SET @BatchID = SCOPE_IDENTITY();

INSERT INTO Audit.AuditTable

(

BatchID,

BatchStartFlag,

TableSchema,

TableName,

TableObjectID,

TableReservedKB,

TableDataKB,

TableIndexKB,

TableUnusedKB,

TableRows,

TableDataBytesPerRow

)

SELECT @BatchID,

1,

table_schema,

table_name,

object_id,

reserved_kb,

data_kb,

index_kb,

unused_kb,rows,

data_bytes_per_row

FROM Audit.SummaryTableData;

END;

GO

IF OBJECT_ID(N'Audit.EndAuditBatch') IS NOT NULL

DROP PROCEDURE Audit.EndAuditBatch;

GO

CREATE PROCEDURE Audit.EndAuditBatch @BatchID BIGINT

AS

BEGIN

SET NOCOUNT ON;

UPDATE Audit.AuditBatch

SET BatchStatus = N'COMPLETED',

BatchEndTime = SYSDATETIMEOFFSET()

WHERE BatchID = @BatchID;

INSERT INTO Audit.AuditTable

(

BatchID,

BatchStartFlag,

TableSchema,

TableName,

TableObjectID,

TableReservedKB,

TableDataKB,

TableIndexKB,

TableUnusedKB,

TableRows,

TableDataBytesPerRow

)

SELECT @BatchID,

0,

table_schema,

table_name,

object_id,

reserved_kb,

data_kb,

index_kb,

unused_kb,

rows,

data_bytes_per_row

FROM Audit.SummaryTableData;

END;

GO

Package-Level Auditing

Our package-level auditing functionality records package identification and execution information including an execution GUID that you can use to join back to the SSIS log table. Thisadds context to the SSIS log entries and provides additional information that can be used for troubleshootingon a per-package basis.

To store package audit information, we create an AuditPackage table, which holds package information including name, version, and a GUID that can be used to join the entries in this table to the SSIS log entries (if you use the SSIS log provider for SQL Server). Here’s the code for our table:

IF OBJECT_ID ('Audit.AuditPackage') IS NOT NULL

DROP TABLE Audit.AuditPackage;

GO

CREATE TABLE Audit.AuditPackage

(

AuditPackageID BIGINT NOT NULL IDENTITY(1, 1),

BatchID BIGINT NOT NULL,

PackageName NVARCHAR(128),

ExecutionInstanceGUID UNIQUEIDENTIFIER,

ProductVersion NVARCHAR(20),

MachineName NVARCHAR(128),

VersionMajor INT,

VersionMinor INT,

VersionBuild INT,

LocaleID INT,

PackageStartTime DATETIMEOFFSET,

PackageEndTime DATETIMEOFFSET,

PackageElapsedTimeMS AS (DATEDIFF(MILLISECOND, PackageStartTime, PackageEndTime)),

CONSTRAINT PK_AUDIT_AUDITPACKAGE PRIMARY KEY CLUSTERED

(

AuditPackageID

)

);

GO

We created two procedures for package auditing:StartAuditPackage to start the audit process, and EndAuditPackage to end the audit. The start procedure returns a unique package audit ID number after each call. That same audit ID number is passed into the end procedure to complete the audit entry. The code is as follows:

IF OBJECT_ID('Audit.StartAuditPackage') IS NOT NULL

DROP PROCEDURE Audit.StartAuditPackage;

GO

CREATE PROCEDURE Audit.StartAuditPackage @ExecutionInstanceGUID UNIQUEIDENTIFIER,

@ProductVersion NVARCHAR(20),

@MachineName NVARCHAR(128),

@VersionMajor INT,

@VersionMinor INT,

@VersionBuild INT,

@LocaleID INT,

@PackageName NVARCHAR(128),@BatchID BIGINT,

@AuditPackageID BIGINT OUTPUT

AS

BEGIN

SET NOCOUNT ON;

INSERT INTO Audit.AuditPackage

(

PackageName,

BatchID,

ExecutionInstanceGUID,

ProductVersion,

MachineName,

VersionMajor,

VersionMinor,

VersionBuild,

LocaleID,

PackageStartTime

)

VALUES

(

@PackageName,

@BatchID,

@ExecutionInstanceGUID,

@ProductVersion,

@MachineName,

@VersionMajor,

@VersionMinor,

@VersionBuild,

@LocaleID,

SYSDATETIMEOFFSET()

);

SET @AuditPackageID = SCOPE_IDENTITY();

END;

GO

IF OBJECT_ID('Audit.EndAuditPackage') IS NOT NULL

DROP PROCEDURE Audit.EndAuditPackage;

GO

CREATE PROCEDURE Audit.EndAuditPackage @AuditPackageID BIGINT

AS

BEGIN

SET NOCOUNT ON;

UPDATE Audit.AuditPackage

SETPackageEndTime = SYSDATETIMEOFFSET()

WHERE AuditPackageID = @AuditPackageID;

END;

GO

Adding Auditing to Packages

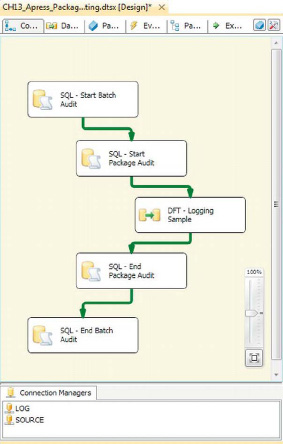

Auditing can be added to your process by including Execute SQL tasks to call the appropriate stored procedures. In our sample package, we created two 64-bit integer (Int64) variables named User::AuditBatchID and User::AuditPackageID. We set up our sample package as shown in Figure 13-8.

Figure 13-8.Sample package with auditing calls

The Data Flow task in this example is wrapped with start and end audit calls, in the following order:

- SQL – Start Batch Audit begins the batch audit process. Calls the start batch audit procedure and returns a unique batch audit ID. This is used to set the variable in the package.

- SQL – Start Package Audit begins the package audit process. Calls the start package audit procedure and returns a unique package audit ID, which sets the appropriate package variable. We pass several system variables to this procedure so they can be saved in the package audit table.

- SQL – End Package Audit ends the package audit process. This procedure closes up the auditing loop at the package level, recording audit finalization information.

- SQL – End Batch Audit completes the batch audit process. Calls the end batch audit procedure to finish auditing at the batch level after all packages are complete. The batch audit information recorded includes the change in table size information.

As we mentioned, the audit information you capture can be joined back to the standard SSIS log tables via the Execution Instance GUID. This GUID will give you all the log messages relevant to any given package execution, using a query such as the following.Sample results are shown in Figure 13-9.

SELECT au.AuditPackageID,

au.BatchID,

au.PackageName,

au.MachineName,

au.PackageStartTime,

au.PackageEndTime,

s.event,

s.source,

s.message

FROM Audit.AuditPackage au

INNER JOIN dbo.sysssislog s

ON au.ExecutionInstanceGUID = s.executionid;

GO

Figure 13-9. Sample results of audit data joined to the SSIS log table

Simple Data Lineage

One of the advantages of an auditing process as we’ve described it in this chapter is that you can easily extend it to include simple data lineage processes. Data lineage is a process by which you can trace data back to its source, essentially reverseengineering the processes by which your data arrived in its current state. In its simplest form, which we demonstrate in this section, you can use data lineage information to determine which ETL processes manipulated your data and determine the precise source from which it is derived.

![]() NOTE: Microsoft introduced a new feature known as Impact and Data Lineage Analysis (IAL) in early prerelease versions of SQL Server Denali. IAL was supposed to provide enterprise data lineage features out of the box. Alas, this feature was pulled prior to Release to Market, RTM, for an “overhaul.” So for now we must implement our own data lineage process until Microsoft’s offering is released. The revamped IAL is expected to be released shortly after SQL Server Denali as part of Project Barcelona (more information is available at

NOTE: Microsoft introduced a new feature known as Impact and Data Lineage Analysis (IAL) in early prerelease versions of SQL Server Denali. IAL was supposed to provide enterprise data lineage features out of the box. Alas, this feature was pulled prior to Release to Market, RTM, for an “overhaul.” So for now we must implement our own data lineage process until Microsoft’s offering is released. The revamped IAL is expected to be released shortly after SQL Server Denali as part of Project Barcelona (more information is available at http://blogs.msdn.com/b/project_barcelona_team_blog/).

The majority of the information we’ll need to record for our simple data lineage solution is already stored in the batch and package auditing tables. This includes the start and stop times, specific package and platform identification information, and related standard log entries. All of this information can be used to reverseengineer your process, but there are a few items missing. After you’ve stepped backward through your process, you need to ultimately determine where your data came from, and you may in fact need additional supporting data that’s not captured by the auditing solution.

For this purpose, we create a simple table that allows you to store key-value pairs related to a specific batch and package execution. Rather than limit you to a specific set of predefined entries, this design lets you store any necessary metadata at any point in your process. The table we created for this purpose is the LineageMetadata table, shown here:

IF OBJECT_ID(N'Audit.LineageMetadata') IS NOT NULL

DROP TABLE Audit.LineageMetadata;

GO

CREATE TABLE Audit.LineageMetadata

(

LineageMetaID int not null identity(1, 1),

BatchID int not null,

AuditPackageID int not null,

LineageKey varchar(1000) not null,

LineageValue varchar(1000),

CONSTRAINT PK_AUDIT_LINEAGEMETADATA PRIMARY KEY CLUSTERED

(

LineageMetaID

)

);

GO

We also provide a simple procedure to add entries to the table on demand, as shown here:

IF OBJECT_ID(N'Audit.AddLineageMetadata') IS NOT NULL

DROP PROCEDURE Audit.AddLineageMetadata;

GOCREATE PROCEDURE Audit.AddLineageMetadata @BatchID int,

@AuditPackageID int,

@LineageKey varchar(1000),

@LineageValue varchar(1000)

AS

BEGIN

INSERT INTO Audit.LineageMetadata

(

BatchID,

AuditPackageID,

LineageKey,

LineageValue

)

VALUES

(

@BatchID,

@AuditPackageID,

@LineageKey,

@LineageValue

);

END;

GO

A sample package that implements our simple data lineage solution extends the previous auditing package to include lineage entities, such as storing the source file name, as shown in Figure 13-10.

Figure 13-10. SSIS package with additional data lineage

To get row-level data lineage, we need to add the batch ID and package ID as columns to our data flow, and store them in the target table with the data. In our example, we’ve done this with a Derived Column transformation, shown in Figure 13-11.

Figure 13-11. Adding lineage data to the data flow with a Derived Column transformation

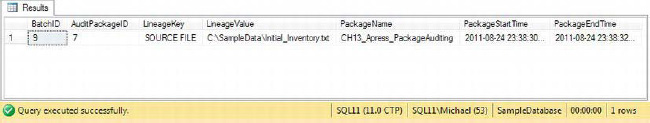

The lineage data can be joined to the auditing data with the BatchID and AuditPackageID, as shown in the following sample query. Sample results from our test run are shown in Figure 13-12.

SELECT lm.BatchID,

lm.AuditPackageID,

lm.LineageKey,

lm.LineageValue,au.PackageName,

au.PackageStartTime,

au.PackageEndTime

FROM Audit.LineageMetadata lm

INNER JOIN Audit.AuditPackage au

ON lm.BatchID = au.BatchID

AND lm.AuditPackageID = au.AuditPackageID;

GO

Figure 13-12. Sample lineage and audit data

As you can see in the results in Figure 13-12, we have captured enough metadata information to trace every row of data from the source file it started in, through the package that manipulated it, and finally to the destination table it ended up in.

![]() NOTE: As we noted previously, this is a simple data lineage solution, but it can be used to build much more complex solutions. The authors have, on occasion, implemented custom data lineage solutions requiring a much finer grain of detail, such as capturing the state of individual rows and columns of data as it is processed. As you can imagine, we’re enthusiastically looking forward to the release of Project Barcelona.

NOTE: As we noted previously, this is a simple data lineage solution, but it can be used to build much more complex solutions. The authors have, on occasion, implemented custom data lineage solutions requiring a much finer grain of detail, such as capturing the state of individual rows and columns of data as it is processed. As you can imagine, we’re enthusiastically looking forward to the release of Project Barcelona.

Summary

In many enterprise systems that are currently in production use around the world—in many industries—logging and auditing features are often overlooked. In some scenarios, lack of proper logging and auditing processes can result in countless hours of reverseengineering thousands of lines of code to uncover and fix even the simplest of errors. In the worst case, failure to implement these enterprise features could end in trouble with auditors and regulatory agencies followed by big fines and people losing their jobs. This chapter presentedSSIS’s built-in logging capabilities and showed you how to extend them to add standardized auditing features to your ETL processes.

In addition, it’s becoming increasingly important to store data lineage information that allows you to track data moving from one system to another as it makes its way to your data warehouses, data marts, and other data repositories. Although SSIS does not currently offer enterprise data lineage facilities out of the box, we demonstrated how to extend your auditing system to implement a basic data lineage solution that will allow you to track data at the row level back through the packages that manipulated it, all the way back to its source.

The next chapter presents ETL in a heterogeneous environment—one in which source data comes in many forms and formats.