CONCLUDING YOUR ACTIVITY

Introduction

In earlier chapters we have presented a variety of user requirements activities to fit your needs. After conducting a user requirements activity, you have to effectively relay the information you have collected to the stakeholders in order for it to impact your product. If your findings are not communicated clearly and successfully, you have wasted your time. There is nothing worse than a report that sits on a shelf, never to be read. In this, the concluding chapter, we show you how to prioritize and report your findings, present your results to stakeholders, and ensure that your results get incorporated into the product.

In addition, we have included a case study from a usability specialist at Sun Microsystems, Inc. Tim McCollum discusses the resistance he encountered at a previous company when planning user requirements activities and promoting the results – and, more importantly, how he overcame that resistance.

Prioritization of Findings

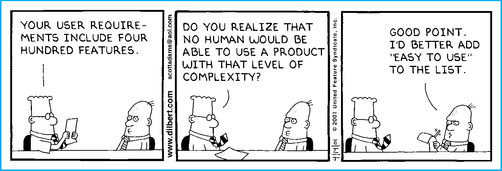

Clearly, you would never want to go to your stakeholders with a flat list of 400 user requirements. It would overwhelm them and they would have no idea where to begin. You must prioritize the issues and requirements you have obtained and then make recommendations based on those priorities. Prioritization conveys your assessment of the impact of the findings uncovered by your user requirements activity. In addition, this prioritization shows the order in which requirements should be addressed by the product team.

It is important to realize that usability prioritization is not suitable for all types of user requirements activities. In methods such as a card sort or task analysis, the findings are presented as a whole rather than as individual recommendations. For example, if you have completed a card sort to help you understand the necessary architecture for your product (see Chapter 10, page 414), the recommendation to the team is typically a whole architecture. As a result, the entire object (the architecture) has high priority. On the other hand, interviews (Chapter 7, page 246), surveys (Chapter 8, page 312), wants and needs analyses (Chapter 9, page 370), focus groups (Chapter 12, page 514), and field studies (Chapter 13, page 562) typically generate data that are conducive to prioritization. For example, if you conducted a focus group to understand users’ issues and new requirements for the current product so that you could use this information to improve the next version, you would likely get a long list of issues and requests, each with varying degrees of support from the participants. Rather than just handing over the list of findings to the product team, you can help the team understand which findings are the most important so that they can allocate their resources effectively. We discuss how to do this below. We also distinguish between usability prioritization and prioritization that takes into consideration not only the usability perspective but also the product development perspective. The typical sequence of the prioritization activities is shown in Figure 14.1.

This section discusses both types of prioritization, but note that the presentation of your findings (discussed later) typically takes place prior to the second prioritization.

First Prioritization: Usability Perspective

There are two key criteria (from a usability perspective) to take into consideration when assigning priority to a recommendation: impact and number.

Impact refers to your judgment of the impact of the usability finding on users. Information about consequence and frequency is used to determine impact. For example, if failing to address a particular user requirement in your product would leave users unable to do their jobs, this would clearly be rated as “high” priority.

Number refers to the number of users who identified the issue or made a particular request. For example, if you conducted 20 interviews and two participants stated they would like (rather than need) a particular feature in the product, it will likely be given a “low” priority. Ideally, you also want to assess how many of your true users this issue will impact. Even if only a few users mentioned it, it may still affect a lot of other users. For example, if two users raised an issue about logging in to the product, this issue – although mentioned by only a small number of users – would impact all users.

The reality is that prioritization tends to be more of an art than a science. Requirement priorities are sometimes gray as opposed to black and white. We do our best to use guidelines to determine these priorities; they help us decide how the various combinations of impact and number translate into a rating of “high,” “medium,” or “low” for a particular user requirement. To allow stakeholders and readers of your report to understand the reasons for a particular priority rating, the description of the requirement should contain information about the impact of the finding (e.g., the issue is severe and recurring, or the requested function is cosmetic) and the number of participants who requested the feature or identified the issue during the requirements activity (e.g., 20 of 25 participants).

Guidelines for the “high,” “medium,” and “low” priorities are as follows (keep in mind that these may not apply to the results of every activity):

High priority

The finding/requirement is extreme. Without the requirement, the majority of users will find the product difficult to use and will likely fail at their tasks.

The finding/requirement is frequent and recurring.

The finding/requirement is broad in scope, has interdependencies, or is symptomatic of an underlying infrastructure issue.

Medium priority

The finding/requirement is moderate. Without it, some participants will find the product difficult to use.

It will be difficult for some participants to complete their work without the requirement.

A majority of participants will experience frustration and confusion when completing their work (but not an inability to get their work done) without the requirement.

The requirement is less broad in scope, and its absence might affect other tasks.

Low priority

A few participants might experience frustration and confusion without the requirement.

The requirement is slight in scope, and its absence will not affect other tasks.

Only a few participants will be affected without the requirement.

It is a cosmetic requirement, mentioned by only a few participants.

If a requirement meets the guidelines for more than one priority rating, then it is typically assigned the highest rating.
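Deciding which guidelines a finding meets is a judgment call, but the bookkeeping around that judgment is mechanical. The following Python sketch assumes the researcher has already recorded every priority level whose guidelines a finding meets; it applies the “highest rating wins” rule and builds a description that states impact and number together, as recommended above. The names `Priority`, `assign_priority`, and `describe`, and the sample finding, are hypothetical and for illustration only.

```python
from enum import IntEnum


class Priority(IntEnum):
    """Usability priority levels; a larger value is more urgent."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def assign_priority(matched_levels: set[Priority]) -> Priority:
    """Return the highest level whose guidelines the finding meets.

    `matched_levels` holds every level whose guidelines the researcher
    judged the finding to satisfy; that judgment itself stays manual.
    """
    if not matched_levels:
        raise ValueError("a finding must meet at least one level's guidelines")
    return max(matched_levels)


def describe(finding: str, impact: str, mentioned_by: int, total: int,
             matched_levels: set[Priority]) -> str:
    """Pair the rating with the impact and number that justify it."""
    if not 0 <= mentioned_by <= total:
        raise ValueError("mentioned_by must be between 0 and total")
    priority = assign_priority(matched_levels)
    return (f"[{priority.name.lower()}] {finding}: {impact} "
            f"({mentioned_by} of {total} participants)")


# Hypothetical finding: raised by only 2 of 20 interviewees, but judged to
# meet both a high-priority guideline (broad in scope) and a medium one.
print(describe("Log-in page rejects valid passwords", "severe and recurring",
               2, 20, {Priority.HIGH, Priority.MEDIUM}))
```

Keeping the matched levels as a set, rather than storing only the final rating, leaves a record of why a finding was rated as it was, which is useful when stakeholders ask.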

Of course, when using these rating guidelines you will also need to consider your domain. If you work in a field where any error can lead to loss of life or an emergency situation (e.g., a nuclear power plant, hospital), you will likely need to modify these guidelines to make them more stringent.

Second Prioritization: Merging Usability and Product Development Priorities

The previous section described how to prioritize recommendations of a user requirements activity from a usability perspective. The focus for this kind of prioritization is, of course, on how each finding impacts the user. In an ideal world, we would like the product development team to make changes to the product by dealing with all of the high-priority issues first, then the medium-priority, and finally the low-priority issues. However, the reality is that factors such as budgets, contract commitments, resource availability, technological constraints, marketplace pressures, and deadlines often prevent product development teams from implementing your recommendations. They may really want to implement all of your recommendations but are simply unable to. Frequently, usability professionals do not have insight into the true cost to implement a given recommendation. By understanding the cost to implement your recommendations and the constraints the product development team is working under, you not only ensure that the essential recommendations are likely to be incorporated, you also earn allies on the team.

As mentioned earlier, this type of prioritization typically occurs after you have presented the findings to the development team. After the presentation meeting (described later), schedule a second meeting to prioritize the findings you have just discussed. You may be able to cover it in the same meeting if time permits, but we find it is best to schedule a separate meeting. At this second meeting you can determine these priorities and also the status of each recommendation (i.e., accepted, rejected, or under investigation). A detailed discussion of recommendation status can be found below (see “Ensuring the Incorporation of Your Findings,” page 660).

It can be a great exercise to work with the product team to take your prioritizations to the next level and incorporate cost. The result is a cost-benefit chart to compare the priority of a usability recommendation to the cost of implementing it.

After you have presented the list of user requirements or issues and their impact on the product development process, ask the team to identify the cost associated with implementing each change. Because developers have substantial technical experience with their product, they are usually able to make this evaluation quickly.

The questions in Figure 14.2 are designed to help the development team determine the cost. These are based on questions developed by MAYA Design (McQuaid 2002).

Average the ratings that each stakeholder gives these questions to come up with an overall cost score for each usability finding/recommendation. The closer the average score is to 7, the greater the cost for that requirement to be implemented. Based on the “cost” assignment for each item in the list and the usability priority you have provided, you can determine the final priority each finding or requirement should be given. The method to do this is described below.
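The averaging step is simple arithmetic. The Python sketch below pools every stakeholder's answers for one finding and averages them into a single cost score on the 1 to 7 scale. Because the cost questions in Figure 14.2 are not reproduced here, the number of questions and the sample ratings are made up, and the function name `cost_score` is hypothetical.

```python
from statistics import mean


def cost_score(ratings_by_stakeholder: dict[str, list[int]]) -> float:
    """Average all stakeholders' 1-7 cost ratings for one finding.

    Each list holds one stakeholder's answers to the cost questions; the
    closer the result is to 7, the costlier the change is to implement.
    """
    all_ratings = [r for ratings in ratings_by_stakeholder.values() for r in ratings]
    if not all_ratings:
        raise ValueError("no ratings supplied")
    if any(not 1 <= r <= 7 for r in all_ratings):
        raise ValueError("every rating must be on the 1-7 scale")
    return mean(all_ratings)


# Hypothetical ratings from two stakeholders on three cost questions.
ratings = {"development manager": [6, 5, 7], "product manager": [4, 5, 6]}
print(cost_score(ratings))
```

Pooling every rating weights each answer equally. If stakeholders answer different numbers of questions, averaging each stakeholder's ratings first and then averaging those means would weight stakeholders equally instead.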

In Figure 14.3, the x-axis represents the importance of the finding from the usability perspective (high, medium, low), while the y-axis represents the difficulty or cost to the product team (rating between 1 and 7). The further to the right you go, the greater the importance to the user. The higher up you go, the greater the difficulty of implementing the recommendation. This particular figure shows a cost-benefit chart for the wants and needs travel example conducted in Chapter 9, Wants and Needs Analyses (see page 400 for the table of results).

FIGURE 14.3 Example of a cost-benefit chart for results obtained during a wants and needs analysis (see the results on page 400; McQuaid 2002).

The chart quadrants

As you can see, there are four quadrants into which your recommendations can fall:

High value. This quadrant contains high-impact issues/recommendations that require the least cost or effort to implement. Recommendations in this quadrant provide the greatest return on investment and should be implemented first.

Strategic. This quadrant contains high-impact issues/recommendations that require more effort to implement. Although these recommendations will require significant resources, their impact on the product and users will be high, so the team should tackle them next.

Targeted. This quadrant contains recommendations with lower impact and lower cost to implement. These may be referred to as “low-hanging fruit”: they are tempting for the product development team to implement because of the low cost; however, because their impact is lower, they should be addressed third, only after the team has tackled the “high-value” and “strategic” recommendations.

Luxuries. This quadrant contains low-impact issues/recommendations that require more effort to implement. It provides the lowest return on investment, and its recommendations should be addressed only after those in the other three quadrants have been completed.

By going through the extra effort of working with the product team to create this chart, you have provided them with a plan of attack. In addition, the development team will appreciate the fact that you have worked with them to take their perspective into account.
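To make the quadrant logic concrete, here is a minimal Python sketch that places findings on the cost-benefit chart and orders them into a plan of attack. It rests on two assumptions not stated in the text: the cost axis is split at 4 (the midpoint of the 1 to 7 scale), and only findings with a “high” usability priority count as high impact, so “medium” and “low” findings both fall into the lower-impact quadrants. The class and function names and the sample findings are hypothetical.

```python
from dataclasses import dataclass

# Assumption: the cost axis is split at the midpoint of the 1-7 scale.
COST_MIDPOINT = 4.0

# Quadrants in the order the team should work through them.
QUADRANT_ORDER = ["High value", "Strategic", "Targeted", "Luxuries"]


@dataclass
class Finding:
    """A hypothetical record pairing a finding with its two ratings."""
    name: str
    usability_priority: str  # "high", "medium", or "low"
    cost: float              # averaged 1-7 cost score from the product team


def quadrant(finding: Finding) -> str:
    """Place a finding in one of the four cost-benefit quadrants."""
    if finding.usability_priority not in ("high", "medium", "low"):
        raise ValueError(f"unknown priority: {finding.usability_priority}")
    # Assumption: only "high" usability priority counts as high impact.
    high_impact = finding.usability_priority == "high"
    low_cost = finding.cost < COST_MIDPOINT
    if high_impact:
        return "High value" if low_cost else "Strategic"
    return "Targeted" if low_cost else "Luxuries"


def plan_of_attack(findings: list[Finding]) -> list[tuple[str, str]]:
    """Order findings by quadrant, cheapest first within each quadrant."""
    ranked = sorted(
        findings,
        key=lambda f: (QUADRANT_ORDER.index(quadrant(f)), f.cost),
    )
    return [(quadrant(f), f.name) for f in ranked]


# Hypothetical findings; these are not the Chapter 9 travel results.
findings = [
    Finding("Finding A", "low", 5.5),
    Finding("Finding B", "high", 2.0),
    Finding("Finding C", "medium", 1.5),
    Finding("Finding D", "high", 6.0),
]
for q, name in plan_of_attack(findings):
    print(f"{q}: {name}")
```

Sorting by cost within a quadrant is a design choice for this sketch; a team might instead order findings within a quadrant by usability priority or by release schedule.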

Presenting Your Findings

Now that you have collected your data and analyzed it, you need to showcase the results to all stakeholders. This presentation will often occur prior to the prioritization exercise with the product development team (i.e., you will have a usability prioritization, but it will not factor in the product team’s priorities yet). The reality is that writing a usability report, posting it to a group website, and e-mailing out the link to the report is just not enough. Usability reports fill an important need (e.g., documenting your findings and archiving the detailed data for future reference), but you must present the data verbally as well.

You really need to have a meeting to discuss your findings. This meeting will likely take one to two hours, and ideally should be done in person. Yes, we realize that the meeting could be conducted remotely using application-sharing tools, but we find that the meeting tends to run smoother with face-to-face interaction. Then there are no technical issues to contend with, and – more importantly – you can see for yourself the reaction of your audience to the results. Do they seem to understand what you are saying? Are they reacting positively with smiles and head nods, or are they frowning and shaking their heads?

Why the Verbal Presentation is Essential

Since your and the stakeholders’ time is valuable, it is important to understand why a meeting to present your results and recommendations is so critical. No one wants unnecessary meetings in his/her schedule. If you do not feel the meeting is essential, neither will your stakeholders. What follows are some reasons for having a meeting and for scheduling it as soon as possible.

Dealing with the issues sooner rather than later

Product teams are very busy. They are typically on a very tight timeline and they are dealing with multiple sources of information. You want to bring your findings to the attention of the team as soon as possible. This is for your benefit and theirs. From your perspective, it is best to discuss the issues while the activity and results are fresh in your mind. From the product team’s perspective, the sooner they know what they need to implement based on your findings, the more likely they are to actually be able to do this.

Ensuring correct interpretation of the findings and recommendations

You may have spent significant time and energy conducting the activity, collecting and analyzing the data, and developing recommendations for the team. The last thing you want is for the team to misinterpret your findings and conclusions. The best way to ensure that everyone is on the same page is to have a meeting and walk through the findings. Make sure that stakeholders clearly understand the implications of the findings and why they are important. The reality is that many issues are too complex to describe effectively in a written format. A face-to-face presentation is key.

Dealing with recommendation modifications

It may happen that one or more of your recommendations are not appropriate. This often occurs because of technical constraints that you were unaware of. Users sometimes request things that are not technically possible to implement. You want to be aware of the constraints and to document them. It may also be the case that the product is implemented in a certain manner because a key customer or contract agreement requires it. By having the stakeholders in the room, you can brainstorm a new recommendation that fulfills the users’ needs and fits within the product or technological constraints. Alternatively, stakeholders may offer better recommendations than the ones you considered. We have to admit that we don’t always have the best solutions, so a meeting with all the stakeholders is a great place to generate them and ensure everyone is in agreement.

Presentation Attendees

Invite all the stakeholders to your presentation. These are typically the key people who are going to benefit from and/or decide what to do with your findings. Do not rely on one person to convey the message to the others, as information will often get lost in the translation. In addition, stakeholders who are not invited may feel slighted, and you do not want to lose allies at this point. We typically meet with the product manager, the development manager, and sometimes the business analysts. The product manager can address any functional, schedule, and budget issues that relate to your recommendations, while the development manager can address issues relating to technical feasibility and the time and effort required to implement your proposals. You may need to hold follow-up meetings with individual developers to work out the technical implementation of your recommendations. Keep the presentation focused on the needs of your audience.

Ingredients of a Successful Presentation

The format and style of the presentation can be as important as the content that you are trying to relay. You need to convey the importance of your recommendations. Do not expect the stakeholders to automatically understand the implications of your findings. The reality is that product teams have demands and requirements to meet from a variety of sources, such as marketing, sales, and customers. User requirements are just one of these many sources. You need to convince them that your user findings are significant to the product’s development. There are a variety of simple techniques that can help you do this.

Keep the presentation focused

You will typically have only one or two hours to meet with the team, so you need to keep your presentation focused. If you need more than two hours, you are probably going into too much detail. If necessary, schedule a second meeting rather than conducting a three-hour meeting (people become tired, irritable, and lose their concentration after two hours).

You may not have time to discuss all of the details, but that’s fine because a detailed usability report can serve this function (discussed later). What you should cover will depend on who you are presenting to. Hopefully, the product team has been involved from the very beginning (refer to Chapter 1, Introduction to User Requirements, “Getting Stakeholder Buy-in for Your Activity” section, page 14), so you will be able to hit the ground running and dive into the findings and recommendations (the meat of your presentation). The team should be aware of the goal of the activity, who participated, and the method used. Review this information at a high level and leave time for questions. If you were not able to get the team involved early on, you will need to provide a bit of background. Provide an “executive summary” of what was done, who participated, and the goal of the study. This information is important to provide context for your results.

The delivery medium

The way that you choose to deliver your presentation can have an effect on its impact. We have found PowerPoint slides can be an effective way to communicate your results. In the past, we used to come with photocopies of our table of recommendations and findings. The problem was that people often had trouble focusing on the current issue being discussed. If we looked around the room, we would find people flipping ahead to read about other issues. This is not what you want. By using slides, you can place one finding per slide so the group is focused on the finding at hand. Also, you are in control so there is no flipping ahead. As the meeting attendees leave, you can hand them the paper summary of findings to take with them.

Start with the good stuff

Start the meeting on a positive note; begin your presentation with positive aspects of your findings. You do not want to be perceived as someone who is there to deliver bad news (e.g., this product stinks or your initial functional specification is incorrect). Your user requirements findings will always uncover things that are perceived as “good news” by the product team.

For example, let’s say you conducted a card sort to determine whether an existing travel website needed to be restructured. If you uncovered that some of the site’s architecture matched users’ expectations and did not need to be changed, while other aspects needed to be restructured, you would start out your discussion talking about the section of the product that can remain unchanged. The team will be thrilled to hear that they do not need to build from scratch! Also, they work hard and they deserve a pat on the back. Obviously, putting the team in a good mood can help soften the potential blow that is to come.

Product teams also always love to hear the positive things that participants have to say. So, when you have quotes that give praise to the product, be sure to highlight them at the beginning of the session.

Use visuals

Visuals can really help to get your points across. Screenshots, photographs, results (e.g., dendrogram from a card sort), proposed designs or architecture are all examples of visuals you can use. Insert them wherever possible to help convey your message. They also make your presentation more visually appealing. Video and highlights tapes can be particularly beneficial. Stakeholders who could not be present at your activities can feel a part of the study when watching video clips or listening to audio highlights. This can take some significant time and resources on your part, so choose when to use these carefully. For example, if the product team holds an erroneous belief about their end users and you have data to demonstrate it, there is nothing better than visual proof to drive the point home (done tactfully, of course).

Prioritize and start at the top

It is best to prioritize your issues from a usability perspective prior to the meeting (refer to “First Prioritization: Usability Perspective,” page 639). It may sound obvious, but you should begin your presentation with the high-priority issues. It is best to address the important issues first because this is the most critical information that you need to convey to the product team. It also tends to be the most interesting. In case you run out of time in the meeting, you want to make sure that the most important information has been discussed thoroughly.

Avoid discussion of implementation or acceptance

The goal of this meeting is to present your findings. At this point you do not want to debate what can and cannot be done. This is an important issue that must be debated, but typically there is simply not enough time in the presentation meeting. It will come in a later meeting as you discuss the status of each recommendation (discussed later). If the team states “No, we can’t do it,” let them know that you would like to find out why, but that discussion will be in the next step. Remind them that you do not expect the user requirements data to replace the data collected from other sources (e.g., marketing and sales), but rather that the data should complement and support those other sources and you will want to have a further discussion of how all the data fits together. A discussion of when and how to determine a status for each recommendation can be found below (see “Ensuring the Incorporation of Your Findings,” page 660).

Avoid jargon

This sounds like a pretty simple rule, but it can be easy to unknowingly break it. It is easy to forget that terms and acronyms that we use on a daily basis are not common vocabulary for everyone else (e.g., “UCD,” “think-aloud protocol,” “transfer of training”). There is nothing worse than starting a presentation with jargon that no one in the room understands. If you make this mistake, you run a serious risk of being perceived as arrogant and condescending. As you finalize your slides, take one last pass over them to make sure that your terminology is appropriate for the audience. If you must use terminology that you think will be new to your audience, define it immediately.

Reporting Your Findings

By this point, you have conducted your activity, analyzed the results, presented the recommendations to the team, and now it is time to archive your work. It is important to compile your results in a written format for communication and archival purposes. After the report is created and finalized, you should post it on the web for easy access (either as a link to the document, or convert the text document to HTML). You do not want to force people to contact you to get the report or to find out where they can get it. The website should be well known to all stakeholders. The more accessible your report is, the more it will be viewed. In addition to making your report accessible from the web, we recommend sending an e-mail to all stakeholders with the executive summary (discussed below) and a link to the full report as soon as it is available.

Report Format

The format of the report should be based on the needs of your audience. Your manager, the product development team, and executives are interested in different information. It is critical to give each reader what he or she needs. In addition, there are different ways to deliver this information (e.g., the web, e-mail, paper). In order for your information to be absorbed, you must take both content and delivery into consideration. There are three major types of report:

The complete report is the most detailed, containing information about the users, the method, the results, the recommendations, and an executive summary. The other “reports” are different or more abbreviated methods of presenting the same information. You can also include items such as educational materials and posters to help supplement the report. These are discussed in the “Report Supplements” section later (see page 659). Table 14.1 provides an “at a glance” view of the different report types, their contents, and audiences.

The Complete Report

Ideally, each user requirements gathering activity that you conduct should have a complete report as one of your deliverables. This is the most detailed of the reports. It should be comprehensive, describing all aspects of the activity (e.g., recruiting, method, data analysis, recommendations, conclusion).

Value of the Complete Report

You may be thinking “No one will read it!” or “It takes too much time.” It really is not much extra work to pull a complete report together once you have a template (discussed later). Plus, the proposal for your activity will supply you with much of the information needed for the report. (Refer to Chapter 5, Preparing for Your User Requirements Activity, “Creating a Proposal” section, page 146.) Even if the majority of people do not read the full report, it still serves some important functions. Also, regulated industries (e.g., drug manufacturers) may be required for legal reasons to document everything they learned and justify the design recommendations that were made.

Archival value

The complete report is important for archival purposes. If you are about to begin work on a product, it is extremely helpful to review detailed reports that pertain to the product and understand exactly what was done for that product in the past. What was the activity? Who participated? What were the findings? Did the product team implement the results? You may not be the only one asking these questions. The product manager, your manager, or other members of the usability group may need these answers as well. Having all of the information documented in one report is the key to finding the answers quickly.

Another benefit is the prevention of repeat mistakes. Over time, stakeholders change. Sometimes when new people come in with a fresh perspective they suggest designs or functionality that have already been investigated and demonstrated as unnecessary or harmful to users. Having reports to review before changes are incorporated can prevent making unnecessary mistakes.

The detail of a formal, archived report is also beneficial to people who have never conducted a particular user requirements activity before. By reading a report for a particular type of activity, they can gain an understanding of how to conduct a similar activity. This is particularly important if they want to replicate your method.

Certain teams want the details

Complete reports are important if the product team that you are working with wants to understand the details of the method and what was done. This is particularly important if they were unable to attend any of the sessions or view the videotapes. They may also want those details if they disagree with your findings and/or recommendations.

Consulting

Experienced usability professionals working in a consulting capacity know that a detailed, complete report is expected. The client wants to ensure that they are getting what they paid for. It would be unprofessional to provide anything less than a detailed report.

Key Sections of the Complete Report

The complete report should contain at least the following sections.

Executive summary:: In this summary, the reader should have a sense of what you did and the most important findings. Try not to exceed one page, or two pages maximum. Try to answer a manager’s simple question: “Tell me what I need to know about what you did and what you found.” This is one of the most important sections of the report and it should be able to stand alone. Key elements include:

Background:: This section should provide background information about the product or domain of interest.

Method:: Describe in precise detail how the activity was conducted and who participated.

Participants. Who participated in the activity? How many participants? How were they recruited? What were their job titles? What skills or requirements did an individual have to meet in order to qualify for participation? Were participants paid? Who contributed to the activity from within your company?

Materials. What materials were used to conduct the session (e.g., survey, cards for a card sort)?

Procedure. Describe in detail the steps that were taken to conduct the activity. Where was the session conducted? How long was it? How were data collected? It is important to disclose any shortcomings of the activity. Did only eight of your 12 participants show up? Were you unable to complete the session(s) due to unforeseen time constraints? Being up-front and honest will help increase the level of trust between you and the team.

Results:: This is where you should delve into the details of your findings and recommendations. It is ideal to begin the results section with an overview – a couple of paragraphs summarizing the major findings. It acts as a “mini” executive summary.

What tools, if any, were used to analyze the data (e.g., EZSort to analyze card sort data)? Show visual representations of the data results, if applicable (e.g., a dendrogram).

Include quotes from the participants. Quotes can have a powerful impact on product teams. If the product manager reads quotes like “I have gone to TravelSmart.com before and will never go back” or “It’s my least favorite travel site,” you will be amazed at how he/she sits up and takes notice.

Present a detailed table of all findings and recommendations. Include a status column that documents which recommendations the team has agreed to and which it has rejected. Also, if appropriate, prioritize your recommendations, as described earlier (see page 638).

Complete Report Template

Appendix H (page 726) provides a report template. This gives a clear sense of the sections of the report and their contents. This template can be easily modified for any user requirements activity.

The Recommendations Report

This is a version of the report that focuses on the findings and recommendations from the activity. This report format is ideal for the audience that is going to be implementing the findings – typically the product manager or development manager. In our experience, developers are not particularly interested in the details of the method we used. They want an action list that tells them what was found and what they need to do about it (i.e., issues and recommendations). To meet this need we simply take the results section of the complete report (discussed above) and save it as a separate document.

We find that visuals such as screen shots or proposed architecture flows, where appropriate, are important for communicating recommendations. A picture is truly worth a thousand words. We find that developers appreciate that we put the information that is important to them in a separate document, saving them the time of having to leaf through a 50-page report. Again, the more accessible you make your information, the more likely it is to be viewed and used. You should also provide your developers with access to the complete report in case they are interested in the details.

The Executive Summary Report

The audience for this report is typically executive vice-presidents and senior management (your direct manager will likely want to see your full report). The reality is that executives do not have time to read a formal usability report; however, we do want to make them aware of the activities that have been conducted for their product(s) and the key findings. The best way to do this is via the executive summary (see page 656). We typically insert the executive summary into an e-mail and send it to the relevant executives, together with a link to the full report. (Your executives still may not take the time to read the full report, but it is important to make it available to them.) This is especially important when the changes you are recommending are significant or politically sensitive. Copy yourself on the e-mail and you now have a record that this information was conveyed. The last thing you want is a product VP coming back to you and saying “Why was I not informed about this?” By sending the information you can say “Actually, I e-mailed you the information on June 10.”

Report Supplements

You can create additional materials to enhance your report. Different people digest information best in different formats, so think of what will work best for your audiences. Educational materials and posters are two ways to help relay your findings in an additional format.

Educational materials

The product manager or development manager may have been involved in your study and attended a presentation of the results, but it is unlikely that every member of the product development team will be similarly aware. To educate team members, including new employees, you can create booklets, handouts, or a website containing a description of the end user(s), the requirements gathering activity, and the findings. This ensures that what you have learned will continue to live on. Ask the team to post links to these materials on the product team’s website.

Posters

Posters are an excellent way to keep your findings visible long after the presentation: they grab stakeholders’ attention and convey important concepts about your work quickly. You can create a different poster for each type of user, activity conducted, or major finding. It obviously depends on the goals of your study and the information you want the stakeholder to walk away with. The poster can contain a photo collage, user quotes, artifacts collected, results from the activity (e.g., dendrogram from a card sort, task flow from group task analysis), and recommendations based on what was learned (see Figure 14.4 on page 661). Display posters in the hallways where the product team works so that people will stop and read them. It is a great way to make sure that everyone is aware of your findings.

Ensuring the Incorporation of Your Findings

You have delivered your results to the stakeholders, and now you want to do everything you can to make sure the findings are acted upon. You want to make sure that the data are incorporated into user profiles, personas, functional documentation, and ultimately the product. As mentioned earlier, presenting the results rather than simply e-mailing a report is one of the key steps in making sure your results are understood and are implemented correctly. There are some key things you can do to help ensure your findings are put to use. These include involving stakeholders from beginning to end, becoming a team player, making friends at the top, and keeping a scorecard.

Stakeholder Involvement

A theme throughout this book has been to get your stakeholders involved from the inception of your activity and to continue their involvement throughout the process. (Refer to Chapter 1, Introduction to User Requirements, “Getting Stakeholder Buy-in for Your Activity” section, page 14.) Their involvement will have the biggest payoff at the recommendations stage. Because of their involvement, they will understand why the activity was needed, the method used, and the users who were recruited. In addition, they should have viewed the session(s) and should not be surprised by the results of your study. When the product team is involved from the beginning, its members feel as though they made the user requirements discoveries with you. They have seen and heard the users’ requirements first hand. Teams that are not involved in the planning and collection processes may feel as though you are trying to shove suspect data down their throats. It can sometimes be a tough sell to get them to believe in your data.

If the team has not been involved in the user requirements process at all, start to include them now – better late than never! Work with them to determine the merged usability and development prioritization (see “Prioritization of Findings,” page 638), and continue to do so as the findings are implemented. Also, be sure to involve them in the planning for future activities.

Be a Virtual Member of the Team

As was mentioned earlier in this book, if at all possible you will want to be an active, recognized member of the product team from the moment you are assigned to the project. (Refer to Chapter 1, Introduction to User Requirements, “Getting Stakeholder Buy-in for Your Activity” section, page 14.) Take the time to become familiar with the product as well as with the product team priorities, schedule, budget, and concerns. You need to do your best to understand the big picture. You should be aware that usability data are only one of the many factors the team must consider when determining the goals and direction of their product. (Refer to Chapter 1, Introduction to User Requirements, “A Variety of Requirements” section, page 8.)

Recognizing this time investment, the product team will trust you and acknowledge you as someone who is familiar with their product and the development issues. This knowledge will not only earn you respect; it will also enable you to make informed recommendations that are feasible to implement, because you are familiar with all the factors that impact the product’s development processes. The team will perceive you as someone who is capable of making realistic recommendations to improve the product. In contrast, if you are viewed as an outsider with the attitude “You must implement all of the users’ requirements and anything less is unacceptable,” you will not get very far.

Obtain a Status for Each Recommendation

Document the team’s response to your recommendations (e.g., accept, reject, needs further investigation). This shows that you are serious about the findings and that you are not simply presenting them as suggestions. We like to include a “status” column in all of our recommendations tables (refer to Appendix H, page 734). After the results presentation meeting (discussed above) we like to hold a second meeting where we can determine the priority of the results in terms of development priorities (see “Second Prioritization: Merging Usability and Product Development Priorities,” page 641) as well as the status of each recommendation. If the team agrees to implement the recommendation, we document the recommendation as “Accepted.” If the recommendation is pending because the product team needs to do further investigation (e.g., resource availability, technical constraints), we note this and state why. No matter how hard you try, there will be a few recommendations that the product development team will reject or not implement. You should expect this; nobody wins all the time. In these cases, we note their rejection and indicate the team’s reasoning. Perhaps they disagree with what the findings have indicated, or they do not have the time to build what you are requesting. Whatever their reason is, document it objectively and move on to the next recommendation. We also like to follow up with the team after the product has been released to do a reality check on the status column. We let them know ahead of time that we will follow up to see how the findings were implemented. Did they implement the recommendations they agreed to? If not, why? Be sure to document what you uncover in the follow-up.

Ensure the Product Team Documents Your Findings

As mentioned in Chapter 1, Introduction to User Requirements, “A Variety of Requirements” section, page 8, there are a variety of different kinds of product requirements (e.g., marketing, business, usability). Typically someone on the product team is responsible for creating a document to track all of these requirements. Make sure your usability requirements get included in this document. Otherwise, they may be forgotten.

As a reader of this book, you may not be a product manager, but encouraging good habits within the team you are working with can make your job easier. The product team should indicate each requirement’s justification, the source(s), and the date it was collected. This information is key to determining the direction of the product, so it is important to have the documentation to justify the decisions made. If certain user requirements are rejected or postponed to a later release, this should also be indicated within the document. By referring to this document you will be able to ensure that the user requirements are acknowledged – and if they are not incorporated, you will understand why. This is similar to the document that you own that tracks the status of each recommendation, but a product team member will own this document and it will include all of the product requirements, not just user requirements. If the team you work with uses a formal enhancement request system, make sure that your findings are entered into the system.

Keep a Scorecard

As discussed above, you should track the recommendations that the product development team has agreed to implement. If you are working as an external consultant, you may not be so concerned about this; but if you are an internal employee, it is important. We formally track this information in what we refer to as “the usability scorecard.” Figure 14.5 illustrates the information that we maintain in our scorecard.

We use scorecard information for a number of purposes. If a product is getting internal or customer complaints regarding usability and the team has worked with us before, we like to refer to our scorecard to determine whether they implemented our recommendations. In some cases they have not, so this information proves invaluable. Executives may ask why the usability group (or you) has not provided support or why your support failed. The scorecard can save you or your group from becoming involved in the blame-game. If the product development team has implemented the recommendations, however, we need to determine what went wrong. The scorecard helps to hold the product team and us accountable.

We also find that the scorecard is a great way to give executives an at-a-glance view of the state of usability for their products. It helps them answer questions such as “Who has received usability support?”, “When and how often?”, and “What has the team done with the results?” We assign a “risk” factor to each of the activities, based on how many of the recommendations have been implemented. When a VP sees the red coding (indicating high risk), he or she quickly picks up the phone and calls the product manager to find out why the product is in that risk category. It is a political tactic to get traction, but it works.
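If you keep your scorecard in a spreadsheet or a small script, the risk coding can be computed directly from the status column. The sketch below is a minimal illustration under stated assumptions: the class names, the added “Implemented” status (recorded at the post-release follow-up), and the 70%/40% color thresholds are all hypothetical. The text says only that risk is based on how many recommendations have been implemented.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    # Hypothetical status values modeled on the "status" column described above.
    ACCEPTED = "Accepted"
    PENDING = "Pending"
    REJECTED = "Rejected"
    IMPLEMENTED = "Implemented"  # assumption: confirmed at the post-release follow-up


@dataclass
class Recommendation:
    description: str
    status: Status
    reason: str = ""  # the team's reasoning when a recommendation is pending or rejected


@dataclass
class Activity:
    product: str
    activity_type: str
    recommendations: list = field(default_factory=list)

    def implementation_rate(self) -> float:
        """Fraction of all recommendations confirmed as implemented."""
        if not self.recommendations:
            return 0.0
        done = sum(r.status is Status.IMPLEMENTED for r in self.recommendations)
        return done / len(self.recommendations)

    def risk(self, green_at: float = 0.7, yellow_at: float = 0.4) -> str:
        """Map the implementation rate to a color code (thresholds are hypothetical)."""
        rate = self.implementation_rate()
        if rate >= green_at:
            return "green"
        if rate >= yellow_at:
            return "yellow"
        return "red"


# Hypothetical scorecard entry for a focus group on a travel website.
study = Activity("Travel website", "focus group", [
    Recommendation("Show total price before checkout", Status.IMPLEMENTED),
    Recommendation("Add saved searches", Status.ACCEPTED),
    Recommendation("Simplify the login flow", Status.PENDING, "technical constraints"),
    Recommendation("Remove pop-up ads", Status.REJECTED, "revenue impact"),
])
print(study.implementation_rate(), study.risk())  # 0.25 red
```

Keeping the thresholds as parameters makes it easy to agree on them with your management before the scorecard is shared, which matters given the political risks discussed next.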

It is important for us to note that this approach has worked well for us, due in large part to the fact that we have worked with a vice-president in charge of usability and UI. If you don’t have a strong VP or a strong CEO who can back you up, a bad score can kill the usability function at a company. Anytime the score is low, it fingers someone as either not doing their job or as ignoring the recommendations of the study. If the person being blamed is powerful, he or she can do real harm to future usability work. If this is a concern at your particular company, use the scorecard simply for your tracking purposes at first, to record the state of things, and make it public only when there is an important need.

Lastly, the reality of many usability departments is that they are understaffed. As a result, you want to track who is making use of your data versus who is using your services but not your data. The scorecard is an ideal way to do this. Obviously, you will want to use your limited resources to help the teams that are utilizing both your services and the data you collect.

Pulling It All Together

In this chapter, we have described the steps to take after you have conducted your user requirements activity and analyzed the data. You may have conducted several activities or only one. In either case, the results and recommendations need to be prioritized based on their impact on users and the cost to the product development team of incorporating them. In addition, we have described various formats for showcasing your data, presenting the results, and documenting your data. It is not enough to collect and analyze user requirements; you must communicate and archive them so they can live on long after the activity is done. Good luck in your user requirements ventures!

Calico Configuration Modeling Workbench

Tim P. McCollum, Sun Microsystems, Inc.

Calico Commerce, Inc. was a privately held company whose core product enabled companies to sell customizable configurations of complex products (e.g., personal computers, hi-tech bicycles, mainframe computers, cellphone plans). By using Calico’s products, companies could greatly reduce their cost of sales and increase customer satisfaction by automating the sales process and eliminating returns resulting from erroneous orders. The company’s founder and core engineering team did an outstanding job creating an approach and a set of features innovative enough to win several Fortune 100 customers and have one of the most successful IPOs in NASDAQ history.

Web-based sales applications built by Calico enabled consumers to create and purchase valid configurations of complex products. For example, if someone wanted to buy a personal computer via the Web, he or she might go to a computer vendor’s website to place the order. The consumer would “build” the PC by selecting from a variety of PC components. The Calico-created website prevented consumers from selecting incompatible components, which eliminated erroneous orders and costly product returns. The website used to configure the PC, and ultimately to place the order, would have been created by Calico professional services using the Calico Configuration Modeling Workbench.

The Challenge

The original Calico modeling workbench was created during a time when graphical user interface (GUI) applications were the predominant paradigm. As the predominant application paradigm shifted to the Web, new competitors were able to develop their architectures from the ground up to better suit Web-based application development.

In order to compete, Calico had to update its core offering quickly or risk becoming out of date and irrelevant. The Calico Workbench needed to address the primary customer values of initial time to market and the cost of maintaining the application following initial deployment.

Our Approach

To successfully update the workbench, the product team (the engineering architect, engineering manager, product marketing, and myself) needed to:

Working closely with the workbench engineering manager and architect, we pored through previous bug reports and lists of feature improvement requests from marketing. The most important questions, however, were how to improve the features and in what priority. These questions required understanding the contexts in which the problems occurred. We therefore planned to conduct a task analysis to identify the workbench shortcomings and their contexts. What we found, however, was significantly more fundamental and important to the overall product strategy.

Resistance in the Proposal Phase

The original task analysis proposal was met with significant resistance for many legitimate reasons:

The plan required several hours from the most productive field service people we had. Their work was measured in billable hours and this was not billable time, so their management was hesitant to free up their time.

Mid/upper level engineering management was already behind schedule and they felt that this activity would take too much time. They were forging ahead, so whatever we found would be irrelevant.

Some marketing factions maintained: “We already have the list of what we need done. Just implement that.”

Some powerful executives believed: “This workbench is good enough. Let’s just attach it to a more modern runtime.”

Field Service People

The professional services billable-hours issue was resolved by utilizing people who were going to be at headquarters anyway. There were times at which they would be “out of the field” for training or organizational meetings. When they came into headquarters, we requested a four-hour time slot while they were in town. This meant the task analysis took a little longer, but we were still able to fully debrief six professional services people over the course of three weeks.

Engineering and Marketing

For the most part, marketing was very supportive. The biggest challenge in getting buy-in for this activity from marketing was overcoming their fear that we might undermine their position of authority regarding product requirements.

As is often the case, the marketing requirements for this release were pretty “clear” but also pretty high-level (e.g. new Web-based architecture, faster run-time, fix the known usability problems with the workbench). The marketing requirements document (MRD) is rarely detailed enough to sufficiently explain what engineering must do to deliver a successful product. Often, when the user interface designer and an engineer start working on a new product feature, they discover that it impacts the user interface of a variety of other existing features/attributes – all of which engineering views as new, un-promised “features” with significant schedule impacts. The set of “in-between” requirements that are both too low-level to show up in the MRD, but big enough for engineering to view them as schedule-impacting features, were the class of requirements we used to illustrate, and justify, the need for “user experience requirements.”

The biggest challenge with engineering management was that they didn’t see the value of the exercise. Many engineering staff members had worked with “usability” people before and, in all honesty, didn’t feel that usability work contributed useful information in a timely fashion. The results came in too late, the importance of the findings was often overstated, and the net result was a lot of noise that could not or should not be acted on. To resolve the marketing and engineering issues, we leveraged the traditional gap that exists between marketing requirements and engineering planning.

Engineering was all too familiar with this scenario and was frustrated at being blamed for late deliveries due to “feature creep” in the development cycle. I made the argument that the real culprit was not feature creep later in the cycle; it was poor planning based upon insufficient design assumptions early in the cycle. I successfully argued that the product is ultimately defined by three primary influences:

In most cases, only two of these influences are accounted for in the planning phases of a project, which leads to seriously slipped schedules and/or products that may meet the letter of the marketing requirements but still not satisfy the true customer needs. In order to deliver a successful product in a realistic timeframe, we needed to run user requirements activities in parallel with marketing and engineering activities.

Successfully articulating this information and committing to provide user requirements in an effective timeframe did several things for us. First, it established user requirements on a level playing field with marketing and engineering requirements. Next, it illustrated that user requirements gathering is related to, but distinct from, marketing requirements and thereby eliminated many of marketing’s political issues. In addition, this level of design detail is too time-consuming for the typical marketer to work through, so marketing saw us as extending their work rather than competing with it. Engineering also saw user requirements as a means for getting the level of detail they needed to make more accurate forecasts. Lastly, because this work would also prioritize user requirements, engineering saw this as an important resource for assessing the costs of slipping a ship date or sticking to the date and cutting features. Having now acquired an understanding of how user requirements fit in and could help solve some existing pain points, the other stakeholders were willing to entertain proposals for user requirements activities.

Resistance in the Planning Phase

The first user requirements activity the product team undertook was a task analysis of workbench users. In this case, I encountered some resistance to my task analysis protocol because I stepped back and took a broader look at the space than many believed was necessary. When designing the interview script, you must make sure the time is filled with useful information gathering, but you also need to be flexible enough to follow lines of discovery not originally anticipated. You have to develop a protocol that allows the story to unfold rather than one that imposes preconceived biases and assumptions.

Issues with the Proposed Execution of the Activity

While we were simply trying to capture the broader perspective in these interviews, we were pressured by others to modify our interview script so it was “more efficient and didn’t cover ground that everyone already knew the answers to.” Most of the other stakeholders were much more comfortable with directly targeted questions like:

Name the top 5 problems you encountered with the workbench.

When do you use feature X and what problems do you encounter when using it?

While these are certainly clear, useful, and efficient questions, they assume that all the problems with the workbench are relatively small and well known. Making this assumption minimizes the opportunity to uncover more fundamental, and potentially more productive, insights about the true nature of the product’s user experience and competitiveness. While we listened to everyone’s feedback and incorporated many of their specific questions into the protocol, we stuck to our guns and framed the interviews within a broader user experience perspective. Instead of simply interviewing with “efficient,” targeted questions, we designed the protocol in two pieces. We started with a much broader perspective by asking questions like:

What projects did you work on in the last 18 months?

What were the customer goals on each project?

Describe the project architecture.

Draw a picture of the project organization (who did what jobs and how they interacted).

The second section of the interview protocol included the specific questions the other stakeholders wanted answered plus quite a few targeted questions of our own. As it turned out, the broader questions proved very effective and we rarely had to utilize the targeted questions to uncover necessary information. Devising the script in this manner ensured that, at a minimum, we would get answers to the specific questions people thought we should be asking. This helped garner initial support for the project, but it also would have enabled us to claim project success even if the broader, more risky, questioning did not yield any directly actionable results.

Issues from Executives and Key Stakeholders

Perhaps even more importantly, modifying our approach to obtain buy-in prior to the investigation was key to successfully “selling” the results to the organization after the study was complete. If this initial step is done correctly, the other stakeholders will feel some ownership for the study’s results and thereby some responsibility for acting upon the findings. One of the last things done in the “buy-in” process before the activity was to show a Microsoft PowerPoint slide of the key findings we would obtain during the study. This was actually a minimal subset of all the things we hoped to accomplish, but we correctly assumed this small list of concrete findings would be sufficient for the other stakeholders to approve the investigation. When the time came to present the findings, we then led the presentation with the same slide and proceeded through the findings. This enabled us to remind people why we did the study, and their part in determining the questions we would answer. It also demonstrated that we had successfully accomplished what we set out to do. Providing a clear simple link between what we said we would do and what we actually delivered established a credibility baseline for any messages we wanted to convey later in the presentation.

It also enabled us to add a little drama by essentially saying: “Not only did we do what we set out to do and find answers to your important questions, but we also found some things we believe are even more important.” This gets people’s attention and enhances the credibility of all the findings.

In sum, setting understandable, achievable expectations, bestowing some ownership of the results upon others during the buy-in phase, and creating continuity between the study proposal and the presentation of findings were critical for obtaining actionable commitments from other stakeholders.

Presenting the Results

The original workbench had a very “panels-based” UI. In the original UI most of the critical model elements were presented in the form of separate dialogs (“panels”) with each containing several data entry fields. This forced users to deal with very small elements of the model in isolation from one another. The panels UI paradigm did not allow users to easily understand how the model worked overall. In addition, the panels-based UI also made it extremely difficult to debug and modify the model because there was no simple way to see how the individual elements of the model affected one another. These combined influences meant that it was unnecessarily difficult to deploy and maintain a sales application using the original Calico Modeling Workbench.

During the task analysis we observed several of the field personnel drawing pictures of how they thought the model elements interacted. Upon further investigation we found that these hand-drawn pictures were often the most used part of a project's documentation. Based upon these critical observations during the task analysis, it became clear that the panels UI model was a huge productivity problem and that we needed a new workbench paradigm that visually depicted the overall model structure and enabled users to directly interact with the model elements.

Convincing Stakeholders and Getting Buy-in for Change

Discovering this insight was not enough. We now had to make the insight relevant to various stakeholders who were already feeling pressure to get an updated product into the market as soon as possible. Fortunately, and in part due to their roles in the task analysis, both the engineering architect and engineering manager immediately understood the importance of the finding. This cleared the way to put together some early concept prototypes of the new model visualization workbench. Of course, getting people to understand that a problem exists and getting their commitment to do something about it are two different things. Even though our proposal had support in many quarters, there was still a significant leadership group who believed that our proposal would introduce too much risk into the schedule. Fortunately, due to the way we handled the buy-in phase and worked successfully with field personnel, we had established some credibility with, and access to, a variety of “influencers.”

In addition to being well-versed in their own skill set, usability professionals must also be well-versed in the language and needs of marketing and engineering. I began working on a concept prototype to show the internal engineering and marketing audiences some of the core advantages of the new model visualization concept. To make our findings even more relevant to marketing, I also translated our design proposal into a pseudomarketing white paper. The intent was, in a light-hearted way, to articulate how the kinds of features we were proposing might be valued by a customer or used in marketing messaging. The “marketing” write-up, the prototype, and the results of the task analysis formed a cohesive package that enabled others to see the power of the ideas for which we were recommending action.

Getting Feedback on Proposed Changes from Customers

There was an opportunity to test-drive our prototype and our value propositions with some real customers. Once internal users saw a tool that allowed them to graphically depict and interact with models, they immediately appreciated how it could positively impact many of their modeling tasks, reduce time-to-market, and lower cost of ownership. Similarly, once customers saw the prototype and understood how it would impact their time-to-market, cost-of-ownership, and maintenance issues, they became very enthusiastic about our proposal. Gaining access to customers with a value proposition and a prototype in hand was a huge step that would not have been possible if we had not established credibility during the user requirements activity. When customers are clearly enthused by something that engineering management considers possible, most serious objections disappear.

Obtaining Buy-in during Development

Obtaining support from marketing, sales, service, and engineering leadership was only part of the user requirements battle; we also needed buy-in from the individual engineers who would implement the requirements-based designs. Translating user requirements into actionable product designs can be a significant bottleneck which, if not handled correctly, can result in an insufficient implementation of the requirement. In my experience, engineers resent being told how to design. The best practice is collaboration, and making them an integral part of the design process.

Low-fidelity prototyping is a great tool for obtaining engineering buy-in. Due to the success of the user requirements and prototyping work, we obtained approval to conduct a three-day low-fidelity prototyping session. After participating in the session, all the engineers had a personal interest in making the designs a reality and a greater appreciation for the time and effort required to create good designs. For the remainder of the project, the engineers and user experience personnel collaborated very effectively to produce a product whose design matched both the letter and the spirit of the user experience requirements.

Lessons Learned

In this case, I wish I had done a couple of things differently. First, I should have initially presented a more conservative task analysis plan that focused more on a small set of important, specific questions rather than one that focused on the process of the technique. While I would have conducted the study in the same manner, I now think that presenting a plan with more nebulous objectives only served to reinforce opinions that this was a fluffy, esoteric usability activity that was unlikely to yield useful results. If I had it to do over, I would have focused on the concrete things we were most likely to uncover and would not have presented so much detail about the process we would use to uncover them. Overcoming this slight misstep required significant time and rework.

In addition, I would have planned to get the resulting design ideas in front of actual customers as soon as possible. Garnering positive customer feedback for the feature set was invaluable in convincing the organization to act on the findings. In all honesty, it was fortune rather than insight that enabled us to get customer feedback. The transition from an old code base (that didn’t make sense to update with new functionality) to a new code base (that wasn’t yet ready to show in a sales situation) had left sales and marketing with little new material to demo. This happenstance, and the “marketing-ese” write-up that we had done, opened the door for marketing to see how the vision painted by our findings and prototype would fly with customers. As more customers and field service personnel continued to express enthusiasm for the design direction, even the most resistant stakeholders adopted an “OK, we’ll try it” stance. Creating ways to obtain further customer feedback and enabling other internal groups to confirm the findings with real customers are the most powerful means for persuading an organization to act on user requirements.

Conclusion

The bottom line is that even important, strongly substantiated results do not speak for themselves. Clear results are ultimately of little value if they are not communicated effectively up, down, and across the organization. Each important stakeholder must often be approached using a different message style or technique to obtain his or her support. Determining how to approach each stakeholder requires understanding the vernacular of that stakeholder's skill set, the sociopolitical context, and the stakeholder's past history with usability groups.

In the end, the user requirements gathering activities provided the foundation for a credible user experience team and established a set of effective user experience design processes that led to significantly better products. This was accomplished by:

- Educating the company about how user experience activities extend and ease the work of marketing and engineering
- Including other stakeholders in the user requirements planning and making them co-owners of the results
- Conducting an effective, collaborative user requirements activity that brought new, meaningful, and actionable insights to the company
- Creating an effective set of user experience processes and deliverables synchronized with engineering and marketing deliverables
- Taking time to make our findings relevant to each audience by trying to talk in their terms (e.g., sketching a valid business model, potential marketing value propositions, and engineering impacts)
- Validating our findings and designs with customers and users in collaboration with other skill sets
- Creating good, mutually beneficial relationships with other customer-facing groups by demonstrating that we could, and would, listen and act.

Insights gained through user requirements activities contributed greatly to Calico’s development of innovative tools that enabled it to win multiple technical bake-offs against competitors. In fact, if imitation is the sincerest form of flattery, then Calico was paid the highest compliment by its competitors as many modified their workbenches and laid claim to similar visualization capabilities in their products within the 12 months following the Visual Workbench’s release.

However, Calico's ultimate fate also provides a cautionary perspective. While it is important to articulate the value of good customer requirements activities, it is just as important not to oversell their ultimate impact. Be certain you know the upper and lower limits of what user requirements activities can deliver, because you must often gain buy-in for them based upon the least you will learn. If you base your justification on more than this, you run the risk of losing credibility during the pitch or, possibly worse, failing to meet expectations once the research is complete. On the other hand, if you don't understand and plan for potential discoveries, you can wind up eliminating potentially important insights before you even start the user requirements activity. In Calico's case, the company released a technically advanced product with innovative features based upon insightful user requirements, but it ultimately failed as a company. The moral of the story: good user requirements can be the competitive difference that enables a company to outperform the competition, but no amount of design or technical superiority can overcome the influences of a poor corporate strategy, failed customer management, and a shrinking economy.