CHAPTER 20

Dense Stereo Disparity from an Artificial Life Standpoint

G. OLAGUE

Centro de Investigación Científica y de Educación Superior de Ensenada, México

F. FERNÁNDEZ

Universidad de Extremadura, Spain

C. B. PÉREZ

Centro de Investigación Científica y de Educación Superior de Ensenada, México

E. LUTTON

Institut National de Recherche en Informatique et en Automatique, France

20.1 INTRODUCTION

Artificial life is devoted to the endeavor of understanding the general principles that govern the living state. The field of artificial life [1] covers a wide range of disciplines, such as biology, physics, robotics, and computer science. One of the first difficulties of studying artificial life is that there is no generally accepted definition of life. Moreover, an analytical approach to the study of life seems to be impossible. If we attempt to study a living system through gradual decomposition of its parts, we observe that the initial property of interest is no longer present. Thus, there appears to be no elementary living thing: Life seems to be a property of a collection of components but not a property of the components themselves. On the other hand, classical scientific fields have proved that the traditional approach of gradual decomposition of the whole is useful in modeling the physical reality. The aim of this chapter is to show that the problem of matching the contents of a stereoscopic system could be approached from an artificial life standpoint. Stereo matching is one of the most active research areas in computer vision. It consists of determining which pair of pixels, projected on at least two images, belong to the same physical three-dimensional point. The correspondence problem has been approached using sparse, quasidense, and dense stereo matching algorithms. Sparse matching has normally been based on sparse points of interest to achieve a three-dimensional reconstruction. Unfortunately, most modeling and visualization applications need dense reconstruction rather than sparse point clouds. To improve the quality of image reconstruction, researchers have turned to the dense surface reconstruction approach.

The correspondence problem has been one of the primary subjects in computer vision, and it is clear that these matching tasks have to be solved by computer algorithms [3]. Currently, there is no general solution to the problem, and it is also clear that successful matching by computer can have a large impact on computer vision [4,5]. The matching problem has been considered the most difficult and most significant problem in computational stereo. The difficulty stems from the ambiguities inherent in acquiring the stereo pair: for example, geometry, noise, lack of texture, occlusions, and saturation. Geometry concerns the shapes and spatial relationships between images and the scene. Noise refers to the inevitable variations of luminosity, which produce errors in image formation from a number of sources, such as quantization, dark current noise, or electrical processing. Texture refers to the properties that represent the surface or structure of an object. Thus, lack of texture refers to the problem of unambiguously describing those surfaces or objects with similar intensity or gray values. Occlusions can be understood as those areas that appear in only one of the two images due to the camera movement. Occlusion is the cause of complicated problems in stereo matching, especially when there are narrow objects with large disparity and optical illusion in the scene. Saturation refers to the problem of quantization beyond the dynamic range in which the image sensor normally works.

Dense stereo matching is considered an ill-posed problem. Traditional dense stereo methods are limited to specific precalibrated camera geometries and closely spaced viewpoints. Dense stereo disparity is a simplification of the problem in which the pair is considered to be rectified and the images are taken on a linear path with the optical axis perpendicular to the camera displacement. In this way the problem of matching two images is evaluated indirectly through a univalued function in disparity space that best describes the shape of the surfaces in the scene. Studying dense stereo matching through the specific case of dense stereo disparity raises one further problem: in general, the quality of the solution is related to the contents of the image pair, so the measured quality of an algorithm depends on the test images. In our previous work we presented a novel matching algorithm based on concepts from artificial life and epidemics that we call the infection algorithm.

The goal of this work is to show that the quality of the algorithm is comparable to the state of the art published in computer vision literature. We decided to test our algorithm with the test images provided at the Middlebury stereo vision web page [11]. However, the problem is very difficult to solve and the comparison is image dependent. Moreover, the natural vision system is an example of a system in which the visual experience is a product of a collection of components but not a property of the components. The infection algorithm presented by Olague et al. [8] uses an epidemic automaton that propagates the pixel matches as an infection over the entire image with the purpose of matching the contents of two images. It looks for the correspondences between real stereo images following a susceptible–exposed–infected–recovered (SEIR) model that leads to fast labeling. SEIR epidemics refer to diseases with incubation periods and latent infection. The purpose of the algorithm is to show that a set of local rules working over a spatial lattice could achieve the correspondence of two images using a guessing process. The algorithm provides the rendering of three-dimensional information permitting visualization of the same scene from novel viewpoints. Those new viewpoints are obviously different from the initial photographs. In our past work, we had four different epidemic automata in order to observe and analyze the behavior of the matching process. The best results that we obtained were those of the automata yielding 47% and 99% computational effort saving. The first case represents geometrically a good image with a moderate percentage of computational effort saving. The second case represents a high percentage of automatically allocated pixels, producing an excellent percentage of computational effort saving, with acceptable image quality.

Our current work aims to improve the results based on a new algorithm that uses concepts from evolution, such as inheritance and mutation. We want to combine the best of both epidemic automata to obtain high computational effort saving with excellent image quality. Thus, we propose to use knowledge based on geometry and texture to decide during execution which epidemic automaton should be applied, given the neighborhood information. The benefit of the new algorithm is shown in Section 20.3 through a comparison with previous results. Both the new and the preceding algorithms use local information such as the zero-mean normalized cross-correlation, geometric constraints (i.e., epipolar geometry, orientation), and a set of rules applied within the neighborhood. Our algorithm manages global information, which is encapsulated through the epidemic cellular automaton and the information about texture and edges, in order to decide which automaton is more appropriate to apply.

The chapter is organized as follows. Section 20.1.1 covers the nature of the correspondence problem. In Section 20.2 we introduce the new algorithm, explaining how evolution was applied to decide between two epidemic automata. Section 20.3 shows the results of the algorithm, illustrating the behavior, performance, and quality of the evolutionary infection algorithm. Finally, in Section 20.4 we state our conclusions.

20.1.1 Problem Statement

Computational stereo studies how to recover the three-dimensional characteristics of a scene from multiple images taken from different viewpoints. A major problem in computational stereo is how to find the corresponding points between a pair of images, which is known as the correspondence problem or stereo matching. The images are taken by a moving camera in which a unique three-dimensional physical point is projected into a unique pair of image points. A pair of points should correspond to each other in both images. A correlation measure can be used as a similarity criterion between image windows of fixed size. The input is a stereo pair of images, $I_l$ (left) and $I_r$ (right). The correlation metric is used by an algorithm that performs a search process in which the correlation gives the measure used to identify the corresponding pixels on both images. In this work the infection algorithm attempts to maximize the similarity criterion within a search region. Let $p_l$, with image coordinates $(x, y)$, and $p_r$, with image coordinates $(x', y')$, be pixels in the left and right image, $2W + 1$ the width (in pixels) of the correlation window, $\bar{I}_l(x, y)$ and $\bar{I}_r(x', y')$ the mean values of the images in the windows centered on $p_l$ and $p_r$, $R(p_l)$ the search region in the right image associated with $p_l$, and $\psi(I_l, I_r)$ a function of both image windows. The $\psi$ function is defined as the zero-mean normalized cross-correlation in order to match the contents of both images:

$$\psi(I_l, I_r) = \frac{\sum_{i,j \in [-W, W]} A\,B}{\sqrt{\sum_{i,j \in [-W, W]} A^2 \; \sum_{i,j \in [-W, W]} B^2}}$$

where

$$A = I_l(x + i, y + j) - \bar{I}_l(x, y), \qquad B = I_r(x' + i, y' + j) - \bar{I}_r(x', y').$$

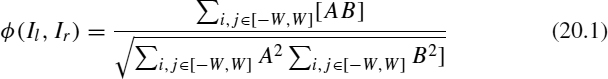
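As an illustration of the similarity criterion, the following is a minimal sketch of a zero-mean normalized cross-correlation and of the search that maximizes it over a set of candidate pixels. It assumes images stored as lists of rows of gray values and windows that lie fully inside both images; the names `window_mean`, `zncc`, and `best_match` are hypothetical and are not taken from the authors' implementation.

```python
import math

def window_mean(img, x, y, w):
    """Mean gray value of the (2w+1) x (2w+1) window centred on (x, y)."""
    vals = [img[y + j][x + i] for j in range(-w, w + 1) for i in range(-w, w + 1)]
    return sum(vals) / len(vals)

def zncc(left, right, pl, pr, w):
    """Zero-mean normalized cross-correlation between two windows.

    left, right: images as lists of rows; pl = (x, y), pr = (x', y').
    Returns a value in [-1, 1]; a constant (textureless) window gives 0.0
    by convention here, because the denominator vanishes.
    """
    (x, y), (xp, yp) = pl, pr
    mean_l = window_mean(left, x, y, w)
    mean_r = window_mean(right, xp, yp, w)
    num = sum_a2 = sum_b2 = 0.0
    for j in range(-w, w + 1):
        for i in range(-w, w + 1):
            a = left[y + j][x + i] - mean_l      # zero-mean left sample
            b = right[yp + j][xp + i] - mean_r   # zero-mean right sample
            num += a * b
            sum_a2 += a * a
            sum_b2 += b * b
    den = math.sqrt(sum_a2 * sum_b2)
    return num / den if den > 0 else 0.0

def best_match(left, right, pl, candidates, w):
    """Candidate pixel of the search region with the highest correlation."""
    return max(candidates, key=lambda pr: zncc(left, right, pl, pr, w))
```

Because the window means are subtracted and the result is normalized, the score is unchanged by a gain and offset applied to one image, which is one reason this measure is commonly preferred over a plain sum of squared differences.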

However, stereo matching has many complex aspects that make the problem intractable. In order to solve the problem, a number of constraints and assumptions are exploited which take into account occlusions, lack of texture, saturation, or field of view. Figure 20.1 shows two images taken at the EvoVisión laboratory that we used in the experiments. The movement between the images is a translation with a small rotation along the x, y, and z axes, respectively: Tx = 4.91 mm, Ty = 114.17 mm, Tz = 69.95 mm, Rx = 0.84°, Ry = 0.16°, Rz = 0.55°. Figure 20.1 also shows five lattices that we have used in the evolutionary infection algorithm. The first two lattices correspond to the images acquired by the stereo rig. The third lattice is used by the epidemic cellular automaton in order to process the information that is being computed. The fourth lattice corresponds to the reprojected image, while the fifth lattice (Canny image) is used as a database in which we save information related to contours and texture. In this work we are interested in providing a quantitative result to measure the benefit of using the infection algorithm. We decided to apply our method to the problem of dense two-frame stereo matching. For a comprehensive discussion of the problem, we refer the reader to the survey by Scharstein and Szeliski [11]. We perform our experiments on the benchmark Middlebury database. This database includes four stereo pairs: Tsukuba, Sawtooth, Venus, and Map. It is important to mention that the Middlebury test is limited to specific camera geometries and closely spaced viewpoints. Dense stereo disparity is a simplification of the problem in which the pair is considered to be rectified, and the images are taken on a linear path with the optical axis perpendicular to the camera displacement. In this way, the problem of matching is evaluated indirectly through a univalued function in disparity space that best describes the shape of the surfaces. The performance of our algorithm has been evaluated with the methodology proposed in [11]: The error is the percentage of pixels whose computed disparity differs from the true disparity by more than one pixel.
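The error measure just described can be sketched as follows. This is only an illustration, assuming disparity maps stored as lists of rows and an optional mask that selects the pixels to evaluate (for example, unoccluded pixels); the function name and parameters are hypothetical.

```python
def bad_pixel_percentage(computed, truth, valid=None, threshold=1.0):
    """Percentage of evaluated pixels whose disparity error exceeds threshold.

    computed, truth: disparity maps as lists of rows of equal size.
    valid: optional mask of the same size; falsy entries are skipped.
    """
    bad = total = 0
    for y, (row_c, row_t) in enumerate(zip(computed, truth)):
        for x, (d_c, d_t) in enumerate(zip(row_c, row_t)):
            if valid is not None and not valid[y][x]:
                continue  # pixel excluded from the statistics
            total += 1
            if abs(d_c - d_t) > threshold:
                bad += 1
    return 100.0 * bad / total if total else 0.0
```

Passing different masks to the same function would give statistics restricted to particular regions of the image.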

Figure 20.1 Relationships between the lattices used by the infection algorithm. A pixel in the left image is related to the right image using a cellular automaton, Canny image, and virtual image during the correspondence process. Gray represents the sick (explored) state, and black represents the healthy (not-explored) state.

20.2 INFECTION ALGORITHM WITH AN EVOLUTIONARY APPROACH

The infection algorithm is based on the concept of natural viruses used to search for correspondences between real stereo images. The purpose is to find all existing corresponding points in stereo images while saving on the number of calculations and maintaining the quality of the data reconstructed. The motivation to use what we called the infection algorithm is based on the following: When we observe a scene, we do not observe everything in front of us. Instead, we focus our attention on some regions that retain our interest in the scene. As a result, it is not necessary to analyze each part of the scene in detail. Thus, we intend to “guess” some parts of the scene through a process of propagation based on artificial epidemics.

The search process of the infection algorithm is based on a set of transition rules that are coded as an epidemic cellular automaton. These rules allow the development of global behaviors. A mathematical description of the infection algorithm has been provided by Olague et al. [9]. In this chapter we introduce the idea of evolution within the infection algorithm using the concepts of inheritance and mutation in order to achieve a balance between exploration and exploitation. As we will see, the idea of evolution is rather different from that in traditional genetic algorithms. Concepts such as an evolving population are not considered in the evolutionary infection algorithm. Instead, we incorporate aspects such as inheritance and mutation to develop a dynamic matching process. To introduce the new algorithm, let us define some notation:

- Cellular automata. A cellular automaton is a continuous map $G: S^{L} \to S^{L}$ which commutes with the shift maps

$$\sigma_i \quad (1 \le i \le d).$$

This definition is not, however, useful for computations. Therefore, we consider an alternative characterization. Given a finite set $S$ and $d$-dimensional shift space $S^{L}$, consider a finite set of transformations, $N \subseteq L$. Given a function $f: S^{N} \to S$, called a local rule, the global cellular automaton map is

$$G_f(c)_{\upsilon} = f(c_{\upsilon + N}),$$

where $\upsilon \in L$, $c \in S^{L}$, and $\upsilon + N$ are the translates of $\upsilon$ by elements in $N$.

- Epidemic cellular automata. Our epidemic cellular automaton can be introduced formally as a quadruple $E = (S, d, N, f)$, where $S$, with $|S| = 5$, is a finite set composed of four states and the wild card ($\ast$), $d = 2$ is a positive integer, $N \subset \mathbb{Z}^{d}$ is a finite set, and $f_i: S^{N} \to S$ is an arbitrary set of (local) functions, where $i \in \{1, \ldots, 14\}$. The global function $G_f: S^{L} \to S^{L}$ is defined by $G_f(c)_{\upsilon} = f(c_{\upsilon + N})$.

It is also useful to note that S is defined by the following sets:

• $S = \{\alpha_1, \phi_2, \beta_3, \varepsilon_0, \ast\}$ is a finite alphabet.

• $S_f = \{\alpha_1, \beta_3\}$ is the set of final output states.

• $S_0 = \{\varepsilon_0\}$ is called the initial input state.

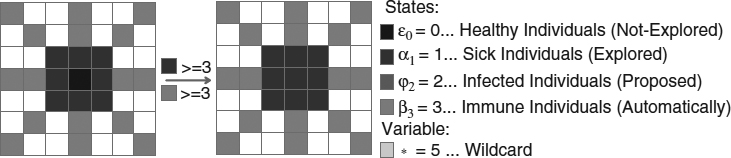

Our epidemic cellular automaton has four states, defined as follows:

• $\alpha_1$ is the explored (sick) state, which represents cells that have been infected by the virus; it refers to pixels that have been computed in order to find their matches.

• $\varepsilon_0$ is the not-explored (healthy) state, which represents cells that have not been infected by the virus; it refers to pixels that remain in the initial state.

• $\beta_3$ is the automatically allocated (immune) state, which represents cells that cannot be infected by the virus because they are immune to the disease; it refers to pixels that have been confirmed by the algorithm in order to allocate a pixel match automatically.

• $\phi_2$ is the proposed (infected) state, which represents cells that have acquired the virus with a probability of recovering from the disease; it refers to pixels that have been “guessed” by the algorithm in order to decide later the best match based on local information.
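To make the notation concrete, the sketch below applies a local rule synchronously to every cell of a two-dimensional lattice, which is one way to read the global map defined above. Everything in it is an assumption made for illustration: the string labels for the four states, the 8-connected neighborhood (the chapter's actual layout is the one in Figure 20.3), the handling of border cells, and the toy rule, which is not one of the 14 rules of the epidemic automaton.

```python
# Hypothetical labels for the four states (healthy, sick, proposed, immune).
HEALTHY, SICK, PROPOSED, IMMUNE = "e0", "a1", "f2", "b3"

# Assumed close neighbourhood: the 8 cells around the centre.
CLOSE = [(dx, dy) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dx, dy) != (0, 0)]

def global_map(lattice, local_rule, offsets=CLOSE):
    """One synchronous step: every cell is updated from the old lattice.

    local_rule(center, neighbours) plays the role of the local function f;
    the centre is passed separately for readability. Neighbours that fall
    outside the lattice are simply omitted (an assumption of this sketch).
    """
    h, w = len(lattice), len(lattice[0])
    nxt = [row[:] for row in lattice]          # leave the input untouched
    for y in range(h):
        for x in range(w):
            neigh = [lattice[y + dy][x + dx]
                     for dx, dy in offsets
                     if 0 <= y + dy < h and 0 <= x + dx < w]
            nxt[y][x] = local_rule(lattice[y][x], neigh)
    return nxt

def toy_rule(center, neigh):
    """Illustrative rule only: a healthy cell touching a sick one is proposed."""
    if center == HEALTHY and SICK in neigh:
        return PROPOSED
    return center
```

For example, starting from a 3 × 3 lattice of healthy cells with a single sick cell in the middle, one call to `global_map(lattice, toy_rule)` leaves the centre sick and turns the eight surrounding cells into proposed cells.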

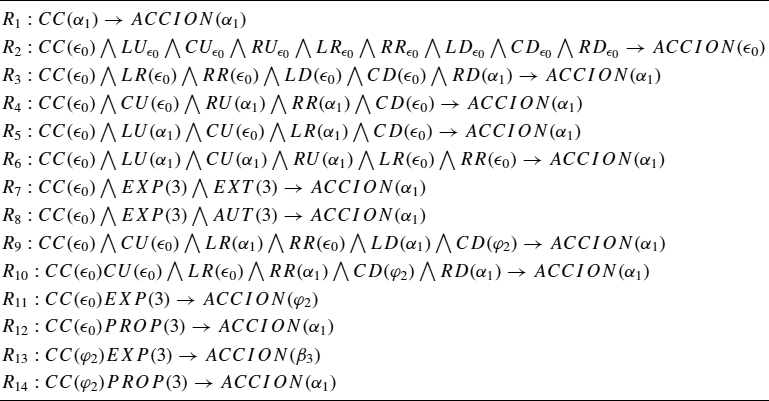

In previous work we defined four different epidemic cellular automata, from which we identified two epidemic graphs that gave distinctive results in our experiments (see Figure 20.2). One epidemic cellular automaton produces 47% effort saving; the other, 99%. These automata use a set of transformations expressed by a set of rules grouped within a single graph. Each automaton transforms a pattern of discrete values over a spatial lattice. Entirely different behavior is achieved by changing the relationships between the four states using the same set of rules. Each rule represents a relationship that produces a transition based on local information. These rules are used by the epidemic graph to control the global behavior of the algorithm. In fact, the evolution of cellular automata is typically governed not by a function expressed in closed form, but by a “rule table” consisting of a list of the discrete states that occur in an automaton together with the values to which these states are to be mapped in one iteration of the algorithm.

The goal of the search process is to achieve a good balance between two rather different epidemic cellular automata in order to combine the benefits of each automaton. Our algorithm not only finds a match within the stereo pair, but provides an efficient and general process using geometric and texture information. It is efficient because the final image combines the best of each partial image within the same amount of time, and it is general because the algorithm could be used with any pair of images with little effort at adaptation.

Our algorithm attempts to provide a remarkable balance between the exploration and exploitation of the matching process. Two cellular automata were selected because each provides a particular characteristic from the exploration and exploitation standpoint. The 47% epidemic cellular automaton, called A, provides a strategy that exploits the best solution. Here, best solution refers to areas where matching is easier to find. The 99% epidemic cellular automaton, called B, provides a strategy that explores the space when matching is difficult to achieve.

Figure 20.2 Evolutionary epidemic graphs used in the infection algorithm to obtain (a) 47% and (b) 99% of computational savings.

The pseudocode for the evolutionary infection algorithm is depicted in Algorithm 20.1. The first step consists of calibrating both cameras. Knowing the calibration for each camera, it is possible to compute the spatial relationship between the cameras. Then two sets of rules, which correspond to the 47% and 99% automata, are coded so that the algorithm can decide which set to use during execution. The sets of rules contain information about the configuration of the pixels in the neighborhood. Next, we build a lattice with the contour and texture information, which we call the Canny left image. We then iterate the algorithm as long as the number of pixels in the immune and sick states differs between times t and t + 1. Each pixel is evaluated according to a decision that is made based on three criteria:

- The decision to use A or B is weighted considering the current pixels evaluated in the neighborhood, so inheritance is incorporated within the algorithm.

- The decision is also made based on the current local information (texture and contour). Thus, environmental adaptation is contemplated as a driving force in the dynamic matching process.

- A probability of mutation that could change the decision as to using A or B is computed. This provides the system with the capability of stochastically adapting the dynamic search process.
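The three criteria can be combined in many ways; the following is only a sketch of one possibility. The weights, the majority threshold, the default mutation probability, and the idea of passing in a precomputed `local_preference` (the automaton favored by the texture and contour information at the current pixel) are all assumptions made for illustration and are not the authors' formulation.

```python
import random

def choose_automaton(neighbour_choices, local_preference, p_mutation=0.05,
                     w_inherit=0.5, w_local=0.5, rng=random):
    """Pick automaton "A" or "B" for the current pixel.

    neighbour_choices: list of "A"/"B" used by already evaluated neighbours.
    local_preference: "A" or "B", derived elsewhere from texture and contour.
    rng: any object with a random() method returning a float in [0, 1).
    """
    # Inheritance: fraction of evaluated neighbours that used automaton A.
    if neighbour_choices:
        inherit_a = neighbour_choices.count("A") / len(neighbour_choices)
    else:
        inherit_a = 0.5                      # no history: no inherited bias
    # Environment: vote of the local texture and contour information.
    local_a = 1.0 if local_preference == "A" else 0.0
    score_a = w_inherit * inherit_a + w_local * local_a
    choice = "A" if score_a >= 0.5 else "B"
    # Mutation: with small probability, flip the decision.
    if rng.random() < p_mutation:
        choice = "B" if choice == "A" else "A"
    return choice
```

With `p_mutation=0.0` the decision is deterministic, which is convenient for checking the inheritance and environment terms in isolation.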

Thus, as long as the number of immune and sick cells keeps changing between times t and t + 1, the algorithm searches for the set of rules that best matches the constraints. An action is then activated which produces a path and sequence around the initial cells. When the algorithm needs to execute a rule to evaluate a pixel, it calculates the corresponding epipolar line using the fundamental matrix information. The correlation window is defined and centered with respect to the epipolar line when the search process is begun. This search process provides a balance between exploration and exploitation. The exploration process occurs when the epidemic cellular automaton analyzes the neighborhood around the current cell in order to decide where there is a good match. Once we find a good match, a process of exploitation occurs to guess as many point matches as possible. Our algorithm not only executes the matching process, but also takes advantage of the geometric and texture information to achieve a balance between the results of the 47% and 99% epidemic cellular automata. This allows a better result in texture quality, as well as geometrical shape, while also saving computational effort. Figure 20.2 shows the two epidemic graphs, 47% and 99%, in which we can appreciate that the differences between the graphs are made by changing the relationships among the four states. Each relationship is represented as a transition based on a local rule, which as a set is able to control the global behavior of the algorithm. Next, we explain how each rule works.
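The epipolar line used to place the correlation window follows from the standard relation between a point and the fundamental matrix: for a left pixel in homogeneous coordinates, the product of the matrix and the point gives the coefficients of a line in the right image. The sketch below assumes the convention in which a right point multiplied by this line gives zero, represents the matrix as a 3 × 3 nested list, and skips near-vertical lines; the function names are hypothetical.

```python
def epipolar_line(F, pl):
    """Coefficients (a, b, c) of the right-image line a*x' + b*y' + c = 0.

    F: 3 x 3 fundamental matrix as nested lists; pl = (x, y) in the left image.
    """
    x, y = pl
    p = (x, y, 1.0)                            # homogeneous coordinates
    return tuple(sum(F[r][k] * p[k] for k in range(3)) for r in range(3))

def candidates_on_line(line, x_min, x_max):
    """Integer pixel positions along the line for x' in [x_min, x_max]."""
    a, b, c = line
    if abs(b) < 1e-12:
        return []                              # near-vertical line: not handled here
    return [(xp, round(-(a * xp + c) / b)) for xp in range(x_min, x_max + 1)]
```

For a rectified pair, the fundamental matrix reduces (up to scale) to `[[0, 0, 0], [0, 0, -1], [0, 1, 0]]`, and the line for a left pixel at row y is simply the same row in the right image, which is the dense stereo disparity setting discussed earlier.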

Algorithm 20.1 Pseudocode for the Evolutionary Infection Algorithm

20.2.1 Transitions of the Epidemic Automata

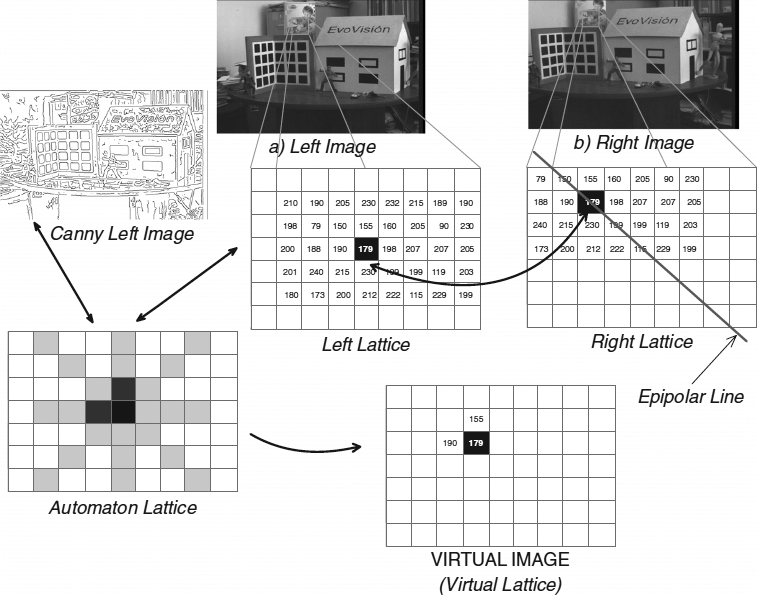

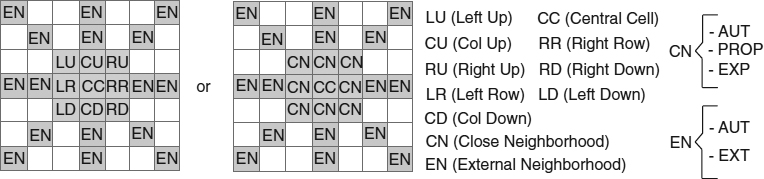

Each epidemic graph has 14 transition rules that we divide into three classes: basic rules, initial structure rules, and complex structure rules. Each rule can be represented as a predicate that encapsulates an action allowing a change of state in the current cell based on its neighborhood information. The basic rules relate the obvious information between the initial and explored states. The initial structure rules consider only the spatial set of relationships within the close neighborhood. The complex structure rules consider not only the spatial set of relationships within the close neighborhood, but also those within the external neighborhood. The transitions of our epidemic automata are based on a set of rules (Table 20.1) coded according to the neighborhood shown in Figure 20.3. The basic rules correspond to rules 1 and 2. The initial structure is formed by rules 3, 4, 5, and 6. Finally, the complex structure rules correspond to the rest of the rules. So far, the basic and initial structure rules have not been changed. The rest of the rules are modified to produce different behaviors and a certain percentage of computational effort saving. The 14 epidemic rules related to the case of 47% are explained as follows.

- Rules 1 and 2. The epidemic transitions of these rules represent two obvious actions (Figures 20.4 and 20.5). First, if the central cell is already sick (explored), no change is produced in the current cell. Second, if no information exists in the close neighborhood, no change is made in the current cell.

- Rules 3 to 6. The infection algorithm begins the process with the nucleus of infection around the entire image (Figure 20.6). The purpose of creating an initial structure in the matching process is to explore the search space in such a way that the information is distributed in several processes. Thus, propagation of the matching is carried out in a higher number of directions from the central cell. We use these rules only at the beginning of the process.

TABLE 20.1 Summary of the 14 Rules Used in the Infection Algorithm

Figure 20.3 Layout of the neighborhood used by the cellular automata.

Figure 20.4 Rule 1: Central cell state does not change if the pixel is already evaluated.

Figure 20.5 Rule 2: Central cell state does not change if there is a lack of information in the neighborhood.

- Rules 7 and 8. These rules ensure the evaluation of the pixels in a region where immune (automatically allocated) individuals exist (Figures 20.7 and 20.8). The figure for rule 8 is similar to that for rule 7. The main purpose is to control the quantity of immune individuals within a set of regions.

- Rules 9 and 10. These transition rules avoid the linear propagation of infected (proposed) individuals (Figure 20.9). Rules 9 and 10 take into account the information of the close neighborhood and one cell of the external neighborhood.

Figure 20.6 Transition of rules 3, 4, 5, and 6, creating the initial structure for the propagation.

Figure 20.7 Rule 7: This transition indicates the necessity to have at least three sick (explored) individuals in the close neighborhood and three immune (automatically allocated) individuals on the external neighborhood to change the central cell.

Figure 20.8 Rule 8: This epidemic transition indicates that it is necessary to have at least three sick (explored) pixels in the close neighborhood and at least three immune pixels in the entire neighborhood to change the central cell.

Figure 20.9 Rules 9 and 10 avoid the linear propagation of the infected pixels.

- Rule 11. This rule generates the infected (proposed) individuals in order to obtain later a higher number of immune (automatically allocated) individuals (Figure 20.10). If the central cell is in the healthy (not-explored) state and there are at least three sick (explored) individuals in the close neighborhood, then the central cell is infected (proposed).

- Rules 12 and 14. The reason for these transitions is to control the infected (proposed) individuals (Figures 20.11 and 20.12). If we have at least three infected individuals in the close neighborhood, the central cell is evaluated.

- Rule 13. This rule is one of the most important epidemic transition rules because it indicates the computational effort saving of individual pixels during the matching process (Figure 20.13). If the central cell is infected (proposed) and there are at least three sick (explored) individuals in the close neighborhood, then we guess automatically the corresponding pixel in the right image without computation. The number of sick (explored) individuals can be changed according to the desired percentage of computational savings.

Figure 20.10 Rule 11: This epidemic transition rule represents the quantity of infected (proposed) individuals necessary to obtain the immune (automatically allocated) individuals. In this case, if there are three sick (explored) individuals in the close neighborhood, the central cell changes to an infected (proposed) state.

Figure 20.11 Rule 12: This transition controls the infected (proposed) individuals within a region. It requires at least three infected pixels around the central cell.

Figure 20.12 Rule 14: This epidemic transition controls the infected (proposed) individuals in different small regions of the image. If the central cell is in an infected (proposed) state, the central cell is evaluated.

Figure 20.13 Rule 13: This transition indicates when the infected (proposed) individuals will change to immune (automatically allocated) individuals.
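As an illustration of how such rules can be written as predicates over the close neighborhood, the sketch below encodes a simplified reading of rules 1 and 2, rule 11, rule 13, and rules 12 and 14 as described above. It is not the rule table of Table 20.1: the state labels, the order in which the conditions are tested, the omission of the external neighborhood, and the parameter names are assumptions of this sketch.

```python
HEALTHY, SICK, PROPOSED, IMMUNE = "healthy", "sick", "proposed", "immune"

def epidemic_rule(center, close, min_sick=3, min_proposed=3):
    """Return (new_state, needs_correlation) for one cell.

    center: state of the central cell; close: states in its close
    neighbourhood. needs_correlation is True when the pixel must be
    evaluated with the correlation search rather than guessed.
    """
    n_sick = close.count(SICK)
    n_prop = close.count(PROPOSED)
    if center == SICK:
        return SICK, False                    # already explored: no change
    if n_sick == 0 and n_prop == 0 and close.count(IMMUNE) == 0:
        return center, False                  # no information nearby: no change
    if center == HEALTHY and n_sick >= min_sick:
        return PROPOSED, False                # rule 11: propose the cell
    if center == PROPOSED and n_sick >= min_sick:
        return IMMUNE, False                  # rule 13: match guessed, no search
    if center == PROPOSED and n_prop >= min_proposed:
        return SICK, True                     # rules 12 and 14: evaluate the cell
    return center, False
```

Lowering `min_sick` makes cells become proposed and then immune more easily, which mirrors the remark that the number of sick individuals required by rule 13 can be changed according to the desired percentage of computational savings.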

20.3 EXPERIMENTAL ANALYSIS

We tested the infection algorithm with an evolutionary approach on a real stereo pair of images. The infection algorithm was implemented under the Linux operating system on an Intel Pentium 4 at 2.0 GHz with 256 MB of RAM. We used VXL (Vision x Libraries), a set of libraries programmed in C++ and designed especially for computer vision. Our aim was to improve the results obtained by the infection algorithm through the implementation of an evolutionary approach using inheritance and mutation operations. The idea was to combine the best of both epidemic automata, 47% and 99%, to obtain high computational effort saving together with excellent image quality. We used knowledge based on geometry and texture to decide during the correspondence process which epidemic automaton should be applied during evolution of the algorithm.

Figure 20.14 Results of different experiments in which the rules were changed to contrast the epidemic cellular automata: (a) final view with 47% savings; (b) final view with 70% savings; (c) final view with 99% savings; (d) final view with evolution of 47% and 99% epidemic automata.

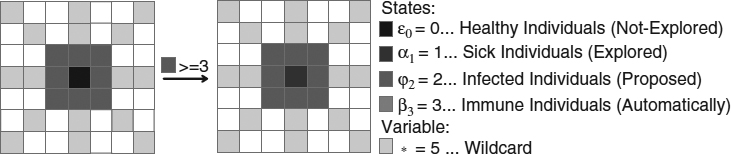

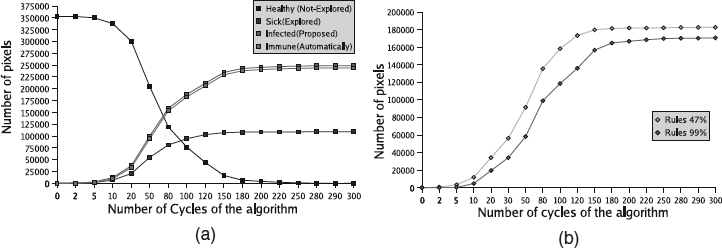

Figure 20.14 shows a set of experiments where the epidemic cellular automaton was changed to modify the behavior of the algorithm and to obtain a better virtual image. Figure 20.14(a) is the result of obtaining 47% computational effort savings, Figure 20.14(b) is the result of obtaining 70% computational effort savings, and Figure 20.14(c) is the result of obtaining 99% computational effort savings. Figure 20.14(d) presents the results that we obtain with the new algorithm. Clearly, the final image shows how the algorithm combines both epidemic cellular automata. We observe that the geometry is preserved with a good texture reconstruction. We also observe that the new algorithm takes about the same time as the 70% epidemic cellular automaton, with a slightly better texture result. Figure 20.15(a) shows the behavior of the evolutionary infection algorithm that corresponds to the final result of Figure 20.14(d). Figure 20.15(b) describes the behavior of the two epidemic cellular automata during execution of the correspondence process.

We decided to test the infection algorithm with the standard test used in the computer vision community [11]. Scharstein and Szeliski have set up test data for use as a test bed for quantitative evaluation and comparison of different stereo algorithms. In general, the results of the algorithms that are available for comparison use subpixel resolution and a global approach to minimize the disparity. The original images can be obtained in gray-scale and color versions. We use the gray-scale images even if this represents a drawback with respect to the final result. Because we try to compute the best possible disparity map, we apply a 0% saving. The results are comparable to other algorithms that make similar assumptions: gray-scale image, window-based approach, and pixel resolution [2,12,15]. In fact, the infection algorithm is officially in the Middlebury database.

Figure 20.15 Evolution of (a) the states and (b) the epidemic cellular automata to solve the dense correspondence matching.

To improve the test results, we decided to enhance the quality of the input image with an interpolation approach [6]. Table 20.2 reports the resulting error statistics, which are collected for all unoccluded image pixels (column all), for all unoccluded pixels in the untextured regions (column untex.), and for all unoccluded image pixels close to a disparity discontinuity (column disc.). The untex. column of the Map image is missing because this image does not have untextured regions. In this way we obtain results showing that the same algorithm could perform better if the resolution of the original images is improved. However, the infection algorithm was developed to explore the field of artificial life using the correspondence problem. Therefore, the final judgment should also be made from the standpoint of the artificial life community. In the future we expect to use the evolutionary infection algorithm in the search for novel vantage viewpoints.

TABLE 20.2 Results on the Middlebury Database

20.4 CONCLUSIONS

In this chapter we have shown that the problems of dense stereo matching and dense stereo disparity could be approached from an artificial life standpoint. We believe that the complexity of the problem reported in this research and its solution should be considered as a rich source of ideas in the artificial life community. A comparison with a standard test bed provides enough confidence that this type of approach can be considered as part of the state of the art. The best algorithms use knowledge currently not used in our implementation. This provides a clue for future research in which some hybrid approaches could be proposed by other researchers in the artificial life community.

Acknowledgments

This research was funded by CONACyT and INRIA under the LAFMI project 634-212. The second author is supported by scholarship 0416442 from CONACyT.

REFERENCES

1. C. Adami. Introduction to Artificial Life. Springer-Verlag, New York, 1998.

2. S. Birchfield and C. Tomasi. Depth discontinuities by pixel-to-pixel stereo. Presented at the International Conference on Computer Vision, 1998.

3. M. Z. Brown, D. Burschka, and G. D. Hager. Advances in computational stereo. IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(8):993–1008, 2003.

4. J. F. Canny. A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 8(6):679–698, 1986.

5. G. Fielding and M. Kam. Weighted matchings for dense stereo correspondence. Pattern Recognition, 33(9):1511–1524, 2000.

6. P. Legrand and J. Lévy-Véhel. Local regularity-based image denoising. In Proceedings of the IEEE International Conference on Image Processing, 2003, pp. 377–380.

7. Q. Luo, J. Zhou, S. Yu, and D. Xiao. Stereo matching and occlusion detection with integrity and illusion sensitivity. Pattern Recognition Letters, 24(9–10):1143–1149, 2003.

8. G. Olague, F. Fernández, C. B. Pérez, and E. Lutton. The infection algorithm: an artificial epidemic approach to dense stereo matching. In X. Yao et al., eds., Parallel Problem Solving from Nature VIII, vol. 3242 of Lecture Notes in Computer Science. Springer-Verlag, New York, 2004, pp. 622–632.

9. G. Olague, F. Fernández, C. B. Pérez, and E. Lutton. The infection algorithm: an artificial epidemic approach for dense stereo matching. Artificial Life, 12(4):593–615, 2006.

10. C. B. Pérez, G. Olague, F. Fernández, and E. Lutton. An evolutionary infection algorithm for dense stereo correspondence. In Proceedings of the 7th European Workshop on Evolutionary Computation in Image Analysis and Signal Processing, vol. 3449 of Lecture Notes in Computer Science. Springer-Verlag, New York, 2005, pp. 294–303.

11. D. Scharstein and R. Szeliski. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. International Journal of Computer Vision, 47(1):7–42, 2002.

12. J. Shao. Combination of stereo, motion and rendering for 3D footage display. Presented at the IEEE Workshop on Stereo and Multi-Baseline Vision, Kauai, Hawaii, 2001.

13. M. Sipper. Evolution of Parallel Cellular Machines. Springer-Verlag, New York, 1997.

14. J. Sun, N. N. Zheng, and H. Y. Shum. Stereo matching using belief propagation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(7):787–800, 2003.

15. C. Sun. Fast stereo matching using rectangular subregioning and 3D maximum-surface techniques. Presented at the IEEE Computer Vision and Pattern Recognition 2001 Stereo Workshop. International Journal of Computer Vision, 47(2):99–117, 2002.

16. C. L. Zitnick and T. Kanade. A cooperative algorithm for stereo matching and occlusion detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(7):675–684, 2000.

Optimization Techniques for Solving Complex Problems, Edited by Enrique Alba, Christian Blum, Pedro Isasi, Coromoto León, and Juan Antonio Gómez

Copyright © 2009 John Wiley & Sons, Inc.