P

pacemaker – (1) In a manufacturing context: The lean manufacturing concept of using a single workcenter to set the pace (speed) for the entire process; also called a pacing process. (2) In a medical context: A medical device used to set the pace of a patient’s heart.

In the lean manufacturing context, the pacemaker should be used to level-load the system over time and to set the pace for the other processes in the system. Ideally, the pace for the pacing process should be determined by the takt time, which is the cycle time determined by the market demand rate. Processes before the pacemaker (upstream) should produce only when they receive a pull signal from the next downstream process or directly from the pacemaker. This helps prevent overproduction and keeps the pacemaker from being starved. Processes after the pacemaker (downstream) should not block the pacemaker, should push materials in small order quantities (possibly using transfer batches), and should not be allowed to have inventories except in supermarkets or finished goods inventory. The pacemaker is normally the assembly process in a make to stock system. In make to order systems, the pacemaker is typically the process step where the product becomes unique.
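This entry does not give a formula for takt time. A commonly used definition is the available production time divided by customer demand over the same period. The minimal Python sketch below assumes that definition; the shift length, break time, and demand figures are hypothetical.

```python
def takt_time(available_time: float, demand: float) -> float:
    """Return takt time: available production time per period divided by demand per period."""
    if demand <= 0:
        raise ValueError("demand must be positive")
    return available_time / demand


# Hypothetical shift: 480 minutes less 30 minutes of breaks, demand of 225 units.
available_minutes = 480 - 30
shift_demand = 225
print(takt_time(available_minutes, shift_demand))  # 2.0 (minutes per unit)
```

In this hypothetical case, the pacemaker would need to complete about one unit every two minutes, and upstream processes would replenish only what the pacemaker pulls.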

Using a pacemaker simplifies scheduling, maintains a level output, focuses on the bottleneck, and prevents overproduction. The pacemaker concept is similar to the Drum-Buffer-Rope concept (Theory of Constraints) and to the CONWIP concept.

See CONWIP, Drum-Buffer-Rope (DBR), gateway workcenter, lean thinking, supermarket, takt time, Theory of Constraints (TOC), transfer batch, upstream.

pacing process – See pacemaker.

Pack to order – A customer interface strategy that collects components and packs them into a box or some other shipping container in response to a customer order.

Pack to order is similar to assemble to order, because it is “assembling” the shipment, which includes one or more products, packaging, and shipping information, in response to a customer order. Boston Scientific’s “pack to demand” process fills orders from inventory by attaching a country- and language-specific label to the box before shipping.

See make to order (MTO), mass customization, respond to order (RTO).

packing slip – The paperwork that accompanies a shipment and describes its contents, including information such as order numbers, item numbers, and quantities.

See Advanced Shipping Notification (ASN), bill of lading, manifest.

Paired-cell Overlapping Loops of Cards with Authorization – See POLCA.

pallet – A portable horizontal, rigid platform used as a base for storing, stacking, and transporting a load of goods.

Pallets are designed to be picked up and moved by a forklift truck. Pallets vary with respect to size, design, strength, and materials. Most pallets are made from wood, but they can also be made from plastic or steel. A two-way pallet allows a forklift truck to enter from the front or the back, whereas a four-way pallet is designed so a forklift can enter the pallet from any side. The most common pallet in the U.S. is the Grocery Manufacturers Association (GMA) grocery pallet, which is a four-way pallet that is 40 inches (101.6 cm) wide, 48 inches (121.92 cm) deep, and 5 inches (12.7 cm) high. A skid is a pallet that does not have bottom deck boards.

See forklift truck, logistics, warehouse.

paradigm – A way of thinking; a frame of thought.

An old paradigm can inhibit creative thinking about a new problem. A paradigm shift (a move to a new paradigm) can provide a powerful new way of thinking about an old problem. For example, in the Soviet factory management paradigm, bigger and heavier machines were usually better. This influenced reward systems and machine design in many negative ways. The quality management field has seen a paradigm shift from thinking that quality and cost are always trade-offs to understanding that improved conformance quality usually leads to lower cost.

See bounded rationality, inductive reasoning.

parent item – See bill of material (BOM).

Pareto analysis – See Pareto Chart.

Pareto Chart – A histogram (bar chart) that helps identify and prioritize the most common sources of errors or defects.

The Pareto Chart, named for Vilfredo Pareto, was popularized as a quality management tool by Joseph M. Juran and Kaoru Ishikawa. The basic concept is based on Pareto’s Law, which teaches that each system has an “important few and a trivial many” (often called the 80-20 principle). A Pareto Chart highlights the important few by displaying the frequencies for the causes of a problem, sorted from highest to lowest.

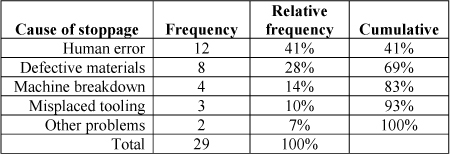

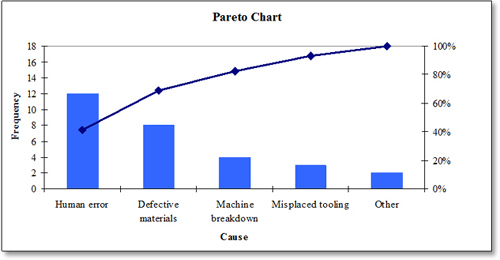

For example, an analysis of work stoppages on a production line is shown in the table on the right, presented here from highest to lowest frequency. These data were graphed to create the Pareto Chart below, which highlights the need to focus on the causes of human errors.

The line shows the cumulative percentage, that is, the percent of all stoppages accounted for by that cause and all causes ranked above it. Fixing the first two causes (human error and defective materials) will remove 69% of the stoppage problems, whereas fixing the last two will remove only 17%.
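The calculation behind a Pareto Chart (sort causes by frequency, then accumulate percentages) can be sketched in a few lines of Python. The stoppage counts below are hypothetical and are not the data in the table; the bars would plot the counts and the line would plot the cumulative percentages.

```python
def pareto_table(counts: dict[str, int]) -> list[tuple[str, int, float]]:
    """Sort causes from highest to lowest frequency and add the cumulative percentage."""
    total = sum(counts.values())
    rows = []
    running = 0
    for cause, count in sorted(counts.items(), key=lambda item: item[1], reverse=True):
        running += count
        rows.append((cause, count, 100.0 * running / total))
    return rows


# Hypothetical stoppage counts, for illustration only.
stoppages = {"Machine failure": 15, "Human error": 40, "Power outage": 10,
             "Defective materials": 25, "Other": 10}
for cause, count, cum_pct in pareto_table(stoppages):
    print(f"{cause:<20}{count:>5}{cum_pct:>8.1f}%")
```

Ties keep their original order because Python's sort is stable, so the chart's ordering among equally frequent causes is arbitrary.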

Pareto Charts can easily be generated in Microsoft Excel. When selecting the chart type, go to “custom types” and select “Line – Column on 2 Axes.”

See ABC classification, bar chart, causal map, checksheet, Failure Mode and Effects Analysis (FMEA), histogram, Pareto’s Law, Root Cause Analysis (RCA), seven tools of quality.

Pareto efficiency – See Pareto optimality.

Pareto optimality – An economics concept developed by the Italian economist Vilfredo Pareto that describes a situation where no one individual can be made better off without making other individuals worse off; also called Pareto efficiency.

Given an initial allocation of goods among a set of individuals, a Pareto improvement is a reallocation that makes at least one individual better off without making any others worse off. An allocation is Pareto optimal (Pareto efficient) when no other Pareto improvements can be made. Pareto efficiency does not necessarily result in a socially desirable distribution of resources and makes no statement about equality or the overall well-being of a society.
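These definitions can be checked mechanically when each allocation is summarized as a vector of individual well-being levels. The Python sketch below assumes such numeric "utility" vectors and a finite list of candidate allocations; both are simplifications made only for illustration, and the names are hypothetical.

```python
Allocation = tuple[float, ...]  # hypothetical utility level of each individual


def is_pareto_improvement(old: Allocation, new: Allocation) -> bool:
    """True if no individual is worse off and at least one is strictly better off."""
    no_one_worse = all(n >= o for o, n in zip(old, new))
    someone_better = any(n > o for o, n in zip(old, new))
    return no_one_worse and someone_better


def pareto_optimal(candidates: list[Allocation]) -> list[Allocation]:
    """Keep the candidate allocations that no other candidate Pareto-improves on."""
    return [a for a in candidates
            if not any(is_pareto_improvement(a, b) for b in candidates)]


options = [(3, 3), (4, 2), (2, 2), (1, 5)]
print(pareto_optimal(options))  # [(3, 3), (4, 2), (1, 5)]
```

The very unequal allocation (1, 5) survives as Pareto optimal, which illustrates that Pareto efficiency makes no statement about equality.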

See economics, Pareto’s Law.

Pareto’s Law – The principle that most systems have a vital few and a trivial many; also called the 80-20 rule or principle.

Pareto’s Law teaches that most of the consequences in a system come from just a few of the causes. Some popular expressions of Pareto’s Law include, “Don’t sweat the small stuff,” “Major on the majors,” and “the vital few and the trivial many.” Juran preferred to say “the vital few and the useful many” to emphasize that the less important causes should not be ignored. The implication of Pareto’s Law is that people need to find and focus on the important few causes (issues, items, people, customers, problems) and not spend too much time on the many causes that do not matter as much. Pareto’s Law is written as two numbers, where the first number represents the percentage of the consequences (e.g., cost, errors) and the second number is the percentage of objects (e.g., causes). Note that the two numbers do not need to add up to 100.
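The two numbers can be computed from data. The Python sketch below takes objects largest first and reports the percentage of consequences and the percentage of objects for the smallest group that reaches a chosen share (80% by default) of the total. The function name and the sales figures are hypothetical.

```python
def pareto_ratio(consequences: list[float], share: float = 0.80) -> tuple[float, float]:
    """Return (percent of consequences, percent of objects) for the smallest group of
    objects, taken largest first, whose consequences reach at least `share` of the total."""
    values = sorted(consequences, reverse=True)
    total = sum(values)
    if total <= 0:
        raise ValueError("total consequences must be positive")
    running = 0.0
    for k, value in enumerate(values, start=1):
        running += value
        if running >= share * total:
            return 100.0 * running / total, 100.0 * k / len(values)
    return 100.0, 100.0  # reached only if share > 1


# Hypothetical annual sales by customer (in $000).
sales = [520, 300, 60, 40, 30, 20, 10, 10, 5, 5]
print(pareto_ratio(sales))  # (82.0, 20.0), an "82-20" pattern
```

Here the two numbers sum to 102, consistent with the point that they need not add up to 100.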

Examples of Pareto’s Law include the ABC classification (manage the high dollar volume items), FMEA (manage the high risk failure modes), Theory of Constraints (manage the bottleneck), dispatching rules (start high-priority jobs first), critical path and critical chain analysis (manage the most important path and tasks in a project), supplier management (just a few suppliers account for most of the spend), customer sales distribution (few customers account for most of the sales), quality control (most of the defects can be attributed to just a few causes), human relations (most of the problems are caused by just a few people), sports (just a few players make most of the points), medicine (most people die from one of a few causes), and international relations (most of the problems in the world are caused by just a few rogue nations).

Vilfredo Pareto was an Italian economist who lived in Switzerland in the early 1900s. In studying the distribution of wealth in Milan (Milano), he found that 20% of the people held about 80% of the wealth. Although developed by the same man, Pareto’s Law is unrelated to the economics concept of Pareto efficiency. A Pareto Chart is a useful way to show a frequency count and highlight the higher priority issues.

See ABC classification, causal map, cycle counting, error proofing, Failure Mode and Effects Analysis (FMEA), Lorenz Curve, Pareto Chart, Pareto optimality, Root Cause Analysis (RCA), Theory of Constraints (TOC).

parking lot – A meeting facilitation tool used to store discussion items not immediately relevant to the agenda.

Meeting participants often raise issues that are not immediately relevant to the current topic. When this happens, the meeting leader and other participants should keep the focus on the current agenda by (1) suggesting adding the item to the parking lot, (2) quickly adding the idea to the parking lot list if others agree, and (3) immediately returning to the current agenda item. The parking lot list is often written on a whiteboard or flip chart. If Post-it Notes are being used to brainstorm ideas, the parking lot can be a set of Post-its on a side wall. Ideally, the facilitator and participants will show respect for the person, show respect for the idea, and capture potentially important ideas for future discussions. Just before the meeting adjourns, the facilitator should ensure that someone records the parking lot issues and that the items will be addressed appropriately. In many cases, parking lot items should be added to the agenda for the next meeting.

See affinity diagram, brainstorming, causal map, Nominal Group Technique (NGT), personal operations management, two-second rule.

Parkinson’s Laws – Laws written by Professor C. Northcote Parkinson in the book Parkinson’s Law (1958).

A 20th century British author and professor of history, Cyril Northcote Parkinson (1909-1993) wrote some sixty books, including his famous satire of bureaucratic institutions. Parkinson’s most famous law is often quoted as “Work expands to fill the time allotted to it”; however, Parkinson’s actual wording was, “Work expands so as to fill the time available for its completion.” Here are some other examples of Parkinson’s laws:

• Expenditure rises to meet income.

• Expansion means complexity, and complexity decay.

• Policies designed to increase production increase employment; policies designed to increase employment do everything but.

• When something goes wrong, do not “try, try again.” Instead, pull back, pause, and carefully work out what organizational shortcomings produced the failure. Then, correct those deficiencies. Only after that, return to the assault.

• Delay is the deadliest form of denial.

• The matters most debated in a deliberative body tend to be the minor ones where everybody understands the issues.

• Deliberative bodies become decreasingly effective after they pass five to eight members.

This author has written a few 21st century operations management corollaries to Parkinson’s Laws:

• Inventory expands to fill the space allotted to it.

• The best way to improve on-time delivery is to reduce cycle time so that customers have taken delivery before they have time to change their minds.

• Lying begets lying. If a firm lies to its customers about expected delivery dates, customers will lie to the firm about the actual need date.

• The later a project becomes, the more unlikely it is that the project manager will inform the customer of the project’s lateness.

• The pi rule for project management: Poorly managed projects require approximately pi (3.1416) times more time than originally planned.

See bullwhip effect, leadtime syndrome, Murphy’s Law, project management.

PARM (Perishable Asset Resource Management) – See yield management.

parsimony – Adoption of the simplest assumption in the formulation of a theory or in the interpretation of data, especially in accordance with the rule of Occam’s Razor.

See Occam’s Razor.

part number – A unique identification number (or alphanumeric string) that defines an item for inventory management purposes; also called Stock Keeping Unit (SKU), item number, material, or product code.

Historically, APICS and other professional societies in North America have used the terms “item number” or “part number.” However, SAP, the most widely used Enterprise Resource Planning (ERP) system, uses the term “material.” Retailers often use the term “Stock Keeping Unit (SKU).” In some cases, a part number is used to identify the “generic” item, and the SKU is used for the inventory count for that part number at a specific location. A serial number is an identifier for a specific unit.

It is better to use all numeric part numbers (0-9) and not mix alpha (A-Z) and numeric characters. This is because many numbers and letters are easily confused in both reading and writing. For example, the number 1 and the letter l, the number 5 and the letter S, and the number 0 and the letter O are easy to confuse on a computer screen and in handwriting. In addition, many letters sound the same. For example, K and A, M and N, and Y and I sound alike. If letters are used, they should not be case-sensitive.

A significant (meaningful) part number uses characters, or groups of characters, coded to describe item attributes, such as the product family, commodity, location, or supplier. A semi-significant part number is one in which only a portion of the identifier is meaningful. Significant part numbers rarely work well because product attributes change over time and because part numbers would need too many characters to be truly meaningful. It is good practice, therefore, to use short (seven digits or fewer), non-meaningful part numbers to reduce data entry time and errors, and to use additional codes for item attributes. The use of Automated Data Collection (ADC) systems, such as barcoding and RFID, has made these issues less important.

Check digits are often added to the base part number to make it possible to conduct a quick validity check. See the check digit entry for more information.
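The check digit entry is not part of this section, so the scheme shown here is an assumption: the Luhn (mod 10) algorithm, one widely used check digit method that catches every single-digit error and most transpositions of adjacent digits. The Python sketch assumes all-numeric part numbers with the check digit appended at the right; the function names are hypothetical.

```python
DIGITS = set("0123456789")


def luhn_check_digit(base: str) -> str:
    """Return the Luhn (mod 10) check digit for an all-numeric base part number."""
    if not base or not set(base) <= DIGITS:
        raise ValueError("base must be a non-empty string of the digits 0-9")
    total = 0
    # Scan right to left; the rightmost base digit is doubled because the
    # check digit will be appended to its right.
    for position, ch in enumerate(reversed(base)):
        d = int(ch)
        if position % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9  # same as adding the two digits of d
        total += d
    return str((10 - total % 10) % 10)


def is_valid(part_number: str) -> bool:
    """True if the last character is the correct Luhn check digit for the rest."""
    if len(part_number) < 2 or not set(part_number) <= DIGITS:
        return False
    return luhn_check_digit(part_number[:-1]) == part_number[-1]


print(luhn_check_digit("7992739871"))  # 3
print(is_valid("79927398713"), is_valid("79927398731"))  # True False
```

The second number swaps the last two digits of the first; the validity check rejects it, which is the kind of keying error check digits are meant to catch.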

In manufacturing, some items might exist for a short period of time in the assembly process. These items are sometimes called phantom items. However, if these items are not inventoried, they should usually not be given part number identifiers.

See active item, Automated Data Collection (ADC), barcode, bill of material (BOM), check digit, Electronic Product Code (EPC), locator system, phantom bill of material, product family, Radio Frequency Identification (RFID), raw materials, traceability, unit of measure, Universal Product Code (UPC), warehouse.

part period balancing – See lotsizing.

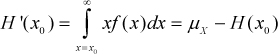

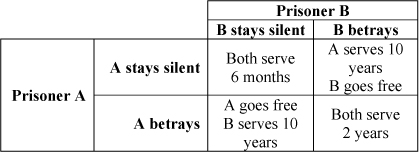

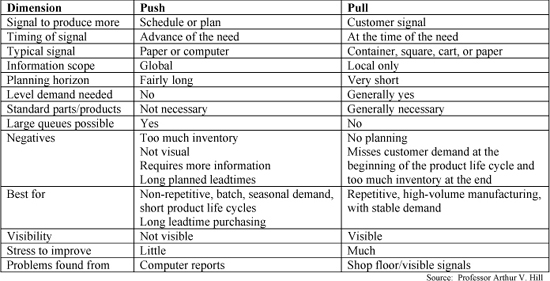

partial expectation – The partial first moment of a random variable; also called partial moment.

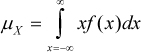

The partial expectation for a probability distribution is an important property used in inventory theory and risk management. The complete expectation (first moment) for continuous random variable X is the expected value  . The partial expectation evaluated at x0 is

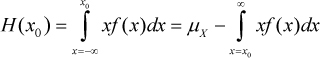

. The partial expectation evaluated at x0 is  . Blumenfeld (2010) and others defined the partial expectation as the weighted tail of the distribution

. Blumenfeld (2010) and others defined the partial expectation as the weighted tail of the distribution  . The partial expectation is related to the conditional expectation with H(x0) = E(X | X < x0)P(X < x0) or H(x0) = μ − E(X | X > x0)(1 − F(x0)).

. The partial expectation is related to the conditional expectation with H(x0) = E(X | X < x0)P(X < x0) or H(x0) = μ − E(X | X > x0)(1 − F(x0)).

The partial expectation functions for several distributions are presented in the table on the right. These equations were derived by the author from the tail conditional expectation functions in Landsman and Valdez (2005). Winkler, Roodman, and Britney (1972) presented closed-form expressions for the partial expectations for several distributions.
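The closed-form expressions in the table are not reproduced here. As a hedged illustration, a standard result for the normal distribution is $H(x_0) = \mu\Phi(z) - \sigma\phi(z)$ with $z = (x_0 - \mu)/\sigma$, where $\Phi$ and $\phi$ are the standard normal cumulative distribution and density functions. The Python sketch below evaluates that expression and checks it against a crude numerical integration of $\int_{-\infty}^{x_0} x f(x)\,dx$; the function names and integration settings are assumptions of this sketch.

```python
import math


def std_normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def std_normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normal_partial_expectation(x0: float, mu: float, sigma: float) -> float:
    """H(x0) = integral of x f(x) dx from -infinity to x0 for X ~ Normal(mu, sigma)."""
    z = (x0 - mu) / sigma
    return mu * std_normal_cdf(z) - sigma * std_normal_pdf(z)


def numeric_partial_expectation(x0: float, mu: float, sigma: float,
                                steps: int = 20_000) -> float:
    """Midpoint-rule check of H(x0); the mass below mu - 10*sigma is ignored."""
    lo = mu - 10.0 * sigma
    if x0 <= lo:
        return 0.0
    h = (x0 - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * h
        total += x * std_normal_pdf((x - mu) / sigma) / sigma
    return total * h


mu, sigma, x0 = 100.0, 15.0, 110.0
print(normal_partial_expectation(x0, mu, sigma))
print(numeric_partial_expectation(x0, mu, sigma))
# The weighted upper tail, integral of x f(x) from x0 to infinity, is mu - H(x0).
print(mu - normal_partial_expectation(x0, mu, sigma))
```

As $x_0$ grows, $H(x_0)$ approaches the complete expectation $\mu$, which is a quick sanity check on any partial expectation formula.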

See gamma distribution, mean, probability density function, safety stock.

partial moment – See partial expectation.

Parts per Million (PPM) – See Defective Parts per Million (DPPM).

pay for performance – See gainsharing.

pay for skill – A compensation policy that rewards employees for developing new capabilities; also called skill-based pay, pay for knowledge, and pay for learning.

The organization provides training on a set of skills and increases the hourly pay rate for workers who can demonstrate that they have acquired new skills. For example, an employee who learns to use a laser welder might be paid an extra $0.25 per hour.

Some of the benefits of a pay for skill program include (1) allowing employers to cover for an absent worker, (2) reducing inventory and cycle time when workers can move to where the work is, (3) reducing boredom and increasing employee engagement, and (4) giving workers a broader perspective of the process, which gives them insights that can be used to improve the process.

On the negative side, pay for skill will not improve performance if the new skill is not needed or not used. Job rotation can achieve many of the same benefits, and in some cases a one-time bonus might make more sense. A similar approach is to create a labor grade (pay grade) system in which employees are promoted to new pay grades after mastering new skills.

See gainsharing, human resources, job design, job enlargement, job rotation, labor grade, piece work.

payback period – The time required to break even on an investment.

Payback ignores the time value of money and is regarded as a crude and imprecise analysis. Much better methods include Net Present Value (NPV) and Economic Value Added (EVA). However, payback is still a commonly used approach for “quick and dirty” investment analysis.
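A minimal Python sketch of the payback calculation, assuming end-of-year cash flows and linear interpolation within the break-even year, with a simple NPV function for comparison. The project figures and the 10% discount rate are hypothetical.

```python
from typing import Optional


def payback_period(investment: float, cash_flows: list[float]) -> Optional[float]:
    """Years until cumulative undiscounted cash inflows recover the investment.
    Linear interpolation is used within the year in which break-even occurs."""
    cumulative = 0.0
    for year, cf in enumerate(cash_flows, start=1):
        if cf > 0 and cumulative + cf >= investment:
            return (year - 1) + (investment - cumulative) / cf
        cumulative += cf
    return None  # the investment is not recovered within the horizon


def npv(rate: float, investment: float, cash_flows: list[float]) -> float:
    """Net present value with end-of-year cash flows discounted at `rate`."""
    return -investment + sum(cf / (1 + rate) ** t
                             for t, cf in enumerate(cash_flows, start=1))


# Two hypothetical projects that each cost $1,000.
a = [500, 500]          # nothing after year 2
b = [500, 500, 5000]    # large cash flow in year 3
print(payback_period(1000, a), payback_period(1000, b))  # 2.0 2.0
print(round(npv(0.10, 1000, a), 2), round(npv(0.10, 1000, b), 2))
```

Both projects have the same two-year payback, yet the second has a far higher NPV, because payback ignores everything after the break-even year as well as the timing of the cash flows.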

See break-even analysis, financial performance metrics.

p-chart – A quality control chart used to monitor the proportion of units produced in a process that are defective; a unit is considered defective if any attribute of the unit does not conform to the standard.

To set up a p-chart, estimate $\bar{p}$, the long-term proportion defective, from a large sample while the process is under control. Then, for samples of size n, set the upper and lower control limits at $UCL = \bar{p} + z\sqrt{\bar{p}(1-\bar{p})/n}$ and $LCL = \max\left(0,\ \bar{p} - z\sqrt{\bar{p}(1-\bar{p})/n}\right)$, where z is the number of standard deviations used for the limits (commonly z = 3).

To use a p-chart, sample n units from the process every so many lots, units, or time periods. The sample proportion is p = x/n, where x is the number of defective units found in the sample. Plot the sample proportion on the p-chart and determine if the process is “under control.”
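The setup and use steps can be combined into a small monitoring routine. This Python sketch assumes limits of $\bar{p} \pm z\sqrt{\bar{p}(1-\bar{p})/n}$ with the conventional z = 3, clips the lower limit at zero because a proportion cannot be negative, and applies the common rule of thumb that the normal approximation is adequate when both $n\bar{p}$ and $n(1-\bar{p})$ are at least 5. The function names and sample data are hypothetical.

```python
import math


def p_chart_limits(p_bar: float, n: int, z: float = 3.0) -> tuple[float, float]:
    """Return (LCL, UCL) = p_bar -/+ z*sqrt(p_bar*(1 - p_bar)/n), clipped to [0, 1]."""
    sigma_p = math.sqrt(p_bar * (1.0 - p_bar) / n)
    return max(0.0, p_bar - z * sigma_p), min(1.0, p_bar + z * sigma_p)


def out_of_control(defectives: list[int], n: int, p_bar: float, z: float = 3.0) -> list[int]:
    """Indexes of samples whose proportion defective x/n falls outside the limits."""
    lcl, ucl = p_chart_limits(p_bar, n, z)
    return [i for i, x in enumerate(defectives) if not lcl <= x / n <= ucl]


def normal_approx_ok(p_bar: float, n: int, minimum: float = 5.0) -> bool:
    """Rule-of-thumb check that n*p_bar and n*(1 - p_bar) are both at least `minimum`."""
    return n * p_bar >= minimum and n * (1.0 - p_bar) >= minimum


# Hypothetical process: long-run 5% defective, samples of n = 200 units.
p_bar, n = 0.05, 200
print(p_chart_limits(p_bar, n))
print(out_of_control([8, 12, 21, 6, 0], n, p_bar))  # [2, 4]
print(normal_approx_ok(p_bar, n))  # True
```

A point below the LCL (sample 4, with no defectives) also signals that the process has changed, possibly for the better, and is worth investigating.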

The p-chart is based on the binomial distribution, which is the distribution of the sum of n independent Bernoulli-distributed binary (0-1) random variables. The sample proportion has mean $\bar{p}$ and standard deviation of the estimate $\sigma_p = \sqrt{\bar{p}(1-\bar{p})/n}$. The normal distribution is a reasonable approximation of the binomial when $n\bar{p} \ge 5$ and $n(1-\bar{p}) \ge 5$.

Whereas the p-chart plots the proportion defective for any sample size, the np-chart plots the number of defective units for a fixed sample size.

See attribute, Bernoulli distribution, binomial distribution, control chart, np-chart, Statistical Process Control (SPC), Statistical Quality Control (SQC).

PDCA (Plan-Do-Check-Act) – A well-known four-step approach for process improvement; also called PDSA (Plan-Do-Study-Act), the Deming Cycle, and the Shewhart Cycle.

The PDCA cycle is made up of four steps:

• PLAN – Recognize an opportunity and plan the change. Plan to improve operations first by finding out what things are going wrong and then generate ideas for solving these problems. Decide what actions might reduce process variation.

• DO – Test the change. Make changes designed to solve the problems on a small or experimental scale first. This minimizes disruptions while testing whether the changes will work.

• CHECK – Review the test, analyze the results, and identify learning. Use data to determine if the change was effective in reducing variation. Check whether the small-scale or experimental changes are achieving the desired result or not. Also, continuously check nominated key activities (regardless of any experimentation going on) to provide information on the quality of the output at all times and to identify new problems that might appear.

• ACT – Take action based on what you learned in the check step. If the change was successful, implement the change and identify opportunities to transfer the learning to other improvement opportunities. Implementation will likely require involvement of other persons and organizations (departments, suppliers, or customers) affected by the change. If the change was not successful, document that plan and go through the cycle again with a different plan.

Upon completion of a PDCA cycle, the cycle is repeated to test another idea. The repeated application of the PDCA cycle to a process is known as continuous quality improvement.

The PDCA cycle was developed by Shewhart (1939). Shewhart said the cycle draws its structure from the notion that constant evaluation of management practices, as well as the willingness of management to adopt and disregard unsupported ideas, is the key to the evolution of a successful enterprise. W. Edwards Deming first coined the term “Shewhart cycle” for PDCA, naming it after his mentor and teacher at Bell Laboratories in New York. Deming promoted PDCA as a primary means of achieving continued process improvement. He also referred to the PDCA cycle as the PDSA cycle (“S” for study) to emphasize the importance of learning in improvement. Deming is credited with encouraging the Japanese to adopt PDCA in the 1950s.

The Japanese eagerly embraced PDCA and other quality concepts, and to honor Deming for his instruction, they refer to the PDCA cycle as the Deming cycle. Many lean thinking programs use PDCA concepts.

Most quality improvement projects today use a similar five-step approach called DMAIC, which comes from the lean sigma movement. Most people (including this author) find DMAIC more intuitive and easier to follow than the PDCA or PDSA approaches.

See DMAIC, hoshin planning, lean sigma, quality management, Total Quality Management (TQM).

PDF – See probability density function.

PDM – See product data management.

PDSA (Plan-Do-Study-Act) – See PDCA.

pegging – The process of identifying the sources of the gross requirements for an item in the MRP materials plan.

Single-level pegging for a gross requirement goes up one level in the bill of material (BOM). Full-level pegging goes all the way up to the top level. Pegging is an important tool for helping production planners identify the impact that a late order might have on higher-level orders and customers. Pegging is like a where-used report, but is only used for those parent items that determine the gross requirements for the item.
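The difference between single-level and full-level pegging can be sketched in Python. To keep the sketch short, it assumes lot-for-lot planning in which each gross requirement has exactly one source and each planned order covers exactly one parent requirement; real MRP pegging can branch to several parents and several lots. All record IDs, items, and quantities are hypothetical.

```python
# Hypothetical lot-for-lot MRP records. Each gross requirement points to its source:
# either a parent's planned order or an independent-demand (customer) order.
gross_requirements = {
    # requirement ID: (item, quantity, source)
    "GR-1": ("bicycle", 10, "CUST-42"),
    "GR-2": ("wheel", 20, "PO-1"),     # 2 wheels per bicycle
    "GR-3": ("spoke", 720, "PO-2"),    # 36 spokes per wheel
}

# Each planned order: (item it makes, gross requirement it was planned to cover).
planned_orders = {
    "PO-1": ("bicycle", "GR-1"),
    "PO-2": ("wheel", "GR-2"),
}


def peg(requirement_id: str, full_level: bool = True) -> list[str]:
    """Return the sources of a gross requirement, walking up the BOM.

    Single-level pegging stops at the immediate parent order; full-level pegging
    continues until it reaches a source that is not a planned order.
    """
    chain: list[str] = []
    while True:
        _item, _qty, source = gross_requirements[requirement_id]
        chain.append(source)
        if not full_level or source not in planned_orders:
            return chain
        _parent_item, requirement_id = planned_orders[source]


print(peg("GR-3", full_level=False))  # ['PO-2']
print(peg("GR-3"))                    # ['PO-2', 'PO-1', 'CUST-42']
```

With full-level pegging, a planner who learns that the spoke requirement will be late can see immediately which customer order is at risk.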

See bill of material (BOM), bill of material implosion, Materials Requirements Planning (MRP), where-used report.

percentage bill of material – See bill of material (BOM).

perfect order fill rate – See fill rate.

performance management system – A set of policies and procedures with a supporting information system used to help create and maintain alignment between the organizational and employee goals.

A good performance management system is built on four main activities:

• Planning – The manager and direct reports collaboratively establish goals, objectives, outcomes, and training requirements for the direct report.

• Coaching – The manager trains, observes, and provides feedback to direct reports to improve performance.

• Appraisal – The manager provides performance feedback to direct reports and documents performance for both the pay and promotion decision processes.

• Rewards – The organization provides rewards in the form of recognition, pay raises, bonuses, and promotions.

Whereas performance measurement focuses only on evaluating performance, performance management takes a broader view, with more emphasis on intentional performance development. A good performance management system will align and coordinate individual behavior with the organization’s strategic objectives.

Culbert (2010) made 12 arguments against performance reviews:

• Focus on finding faults and placing blame.

• Focus on deviations from some ideal as weaknesses.

• Focus on comparing employees.

• Create competition between boss and subordinate.

• Create one-sided accountability and too many boss-dominated monologues.

• Create “thunderbolts from on high,” with the boss speaking for the company.

• Cause the subordinate to suffer if they make a mistake.

• Create an environment that allows the big boss to go on autopilot.

• Focus on scheduled events.

• Give human resource people too much power.

• Do not lead to anything of substance.

• Are hated, and managers and subordinates avoid doing them until they have to.

Culbert (2010) went on to propose a constant, collaborative dialog between the manager and the employee that is built on trust and respect and in which both are responsible for success. Part of this dialog is a “Performance Preview,” which Culbert claimed holds people accountable, gives both managers and employees helpful feedback, and gives the company more of what it needs. He proposed three questions: (1) What are you getting from me that you like and find helpful? (2) What are you getting from me/the company that gets in your way and that you would like to have stopped? (3) What are you not getting from me/the company that you think would make you more effective, and how specifically would that help you do your job better?

Coens and Jenkins (2002) make similar arguments against performance reviews and suggest that managers should (1) provide honest feedback to employees by maintaining daily, two-way communication; (2) empower employees to be responsible for their careers, for receiving feedback, and for holding themselves accountable for the work to be done; (3) have the freedom to choose for themselves the most effective ways of working with people; (4) move away from an individual performance company to an organizational improvement company; and (5) create a culture to support the above.

See financial performance metrics, Management by Objectives (MBO), operations performance metrics, work measurement.

performance quality – See product design quality.

performance rating – A subjective estimate of a worker’s pace of work.

A 120% performance rating means that the observer estimated that the worker was working 20% faster than a normal worker. The performance rating is used to adjust the observed time to compute the normal time.
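In arithmetic terms, the normal time is the observed time multiplied by the performance rating, so a worker rated at 120% who averages 2.0 minutes per cycle would be expected to take about 2.4 minutes at a normal pace. A minimal Python sketch, with hypothetical stopwatch readings:

```python
from statistics import mean


def normal_time(observed_times: list[float], performance_rating: float) -> float:
    """Normal time = average observed time x performance rating (1.20 means 120%)."""
    return mean(observed_times) * performance_rating


# Hypothetical stopwatch readings (minutes) for a worker rated at 120%.
print(round(normal_time([2.0, 2.1, 1.9], 1.20), 3))  # 2.4
```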

See normal time, standard time, time study, work measurement.

performance-based contracting – A legal relationship that allows organizations (usually governmental organizations) to acquire services via contracts that define what is to be achieved rather than how it is to be done.

In many situations, performance-based contracting provides good value products and services. In addition, performance-based contracting gives firms the freedom to bring new approaches to their customers.

See service guarantee, Service Level Agreement (SLA).

period cost – Expenses based on the calendar rather than the number of units produced; also called period expense.

Period costs include selling and administrative costs, depreciation, interest, rent, property taxes, insurance, and other fixed expenses based on time. Period costs are expensed on the income statement in the period in which they are incurred and not included in the cost of goods sold.

See overhead.

Period Order Quantity (POQ) – A simple lotsizing rule that defines the order quantity in terms of periods supply; also known as the Periodic Order Quantity, days supply, weeks supply, and months supply.

The POQ is implemented in MRP systems by setting each lotsize to the sum of the net requirements for the next POQ periods, starting with the first period that has a positive net requirement. The optimal POQ is the Economic Order Quantity (EOQ) divided by the average demand per period. Expressed in days, the POQ is $365\,EOQ/D = 365\sqrt{2S/(icD)}$, where D is the expected annual demand, S is the ordering (or setup) cost, i is the carrying charge, and c is the unit cost.
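A minimal Python sketch of the POQ calculation and rule, assuming $EOQ = \sqrt{2DS/(ic)}$ so that the POQ in days is $EOQ/(D/365) = 365\sqrt{2S/(icD)}$. The `poq_lots` helper is hypothetical: it takes requirements already net of on-hand inventory and shows each lot in the period it is due, whereas an MRP system would also offset planned order releases by the leadtime. The item data are hypothetical.

```python
import math


def eoq(D: float, S: float, i: float, c: float) -> float:
    """Economic Order Quantity: sqrt(2DS / (ic))."""
    return math.sqrt(2.0 * D * S / (i * c))


def poq_days(D: float, S: float, i: float, c: float) -> float:
    """POQ in days: EOQ divided by average daily demand D/365."""
    return eoq(D, S, i, c) / (D / 365.0)


def poq_lots(net_requirements: list[float], periods: int) -> list[float]:
    """Lot due in each period when every lot covers `periods` periods of net
    requirements, starting with the first period that has a positive requirement."""
    lots = [0.0] * len(net_requirements)
    t = 0
    while t < len(net_requirements):
        if net_requirements[t] > 0:
            lots[t] = float(sum(net_requirements[t:t + periods]))
            t += periods
        else:
            t += 1
    return lots


# Hypothetical item: D = 12,000 units/year, S = $50, i = 25%/year, c = $20.
print(round(eoq(12_000, 50, 0.25, 20), 1))       # 489.9
print(round(poq_days(12_000, 50, 0.25, 20), 1))
print(poq_lots([0, 40, 0, 30, 50, 0, 20], periods=2))
```

Unlike a fixed EOQ, the POQ lots vary in size with the requirements, so the rule does not leave remnant inventory when demand is lumpy.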

See Economic Order Quantity (EOQ), lotsizing methods, periods supply, time-varying demand lotsizing problem.

periodic review system – An order-timing rule used for planning inventories; also known as a fixed-time period model, periodic system, fixed-order interval system, and P-model.

A periodic review system evaluates the inventory position every P time periods and considers placing an order. Unlike a reorder point system that triggers an order when the inventory position falls below the reorder point, a periodic review system only considers placing orders at the end of a predetermined time period, the review period (P). The graph below shows the periodic review system through two review periods.

The periodic review system makes good economic sense when the firm has economies of scale in transportation cost. In other words, the periodic review system should be used when the firm can save money by shipping or receiving many different items at the same time. The optimal review period is the period order quantity (POQ), which is EOQ/μD, where EOQ is the economic order quantity and μD is the average demand per period. However, in most situations, the review period is determined by other factors, such as the transportation schedule.

The periodic review system can be implemented with either a fixed order quantity, such as the EOQ, or with an order-up-to lotsizing rule. The order-up-to rule is also known as a base stock system. This rule orders a quantity that brings the inventory position up to a target inventory at the end of each review period. The target inventory level is also called the base stock level. The optimal target inventory is $T = SS + \mu_D(L + P)$, where $\mu_D$ is the average demand per period, L is the replenishment leadtime, and P is the review period. The safety stock is $SS = z\sigma_D\sqrt{L + P}$ units, where $\sigma_D$ is the standard deviation of demand per period and z is the safety factor. The average lotsize is $\mu_D P$, and the average inventory is $SS + \mu_D P/2$. Compared to the reorder point system, the periodic system requires more safety stock inventory, because it must protect against stockouts during the review period plus the replenishment leadtime (L + P) rather than just during the replenishment leadtime (L).
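A minimal Python sketch of the order-up-to (base stock) calculations, using $SS = z\sigma_D\sqrt{L+P}$, $T = SS + \mu_D(L+P)$, an average lotsize of $\mu_D P$, and an average inventory of $SS + \mu_D P/2$. It assumes demand is independent from period to period and the leadtime is constant; the inventory position is taken to be on-hand plus on-order minus backorders. The weekly figures are hypothetical.

```python
import math


def periodic_review_policy(mu_d: float, sigma_d: float, L: float, P: float, z: float):
    """Order-up-to (base stock) policy with review period P and leadtime L, both in
    periods; demand per period has mean mu_d and standard deviation sigma_d."""
    safety_stock = z * sigma_d * math.sqrt(L + P)
    target = safety_stock + mu_d * (L + P)        # T = SS + mu_D (L + P)
    average_lot = mu_d * P
    average_inventory = safety_stock + average_lot / 2
    return safety_stock, target, average_lot, average_inventory


def order_quantity(target: float, inventory_position: float) -> float:
    """At a review, order enough to bring the inventory position up to the target."""
    return max(0.0, target - inventory_position)


# Hypothetical weekly data: mu_D = 100, sigma_D = 30, L = 2 weeks, P = 1 week, z = 1.65.
ss, T, lot, avg_inv = periodic_review_policy(100, 30, L=2, P=1, z=1.65)
print(round(ss, 1), round(T, 1), lot, round(avg_inv, 1))
print(round(1.65 * 30 * math.sqrt(2), 1))  # reorder point safety stock, L only
print(round(order_quantity(T, inventory_position=210), 1))
```

Comparing the first and second printed safety stocks shows the extra protection the periodic system needs to cover the review period P in addition to the leadtime L.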

Most retail chains use a periodic review system to replenish their stores. Each store has a target inventory level (base stock level) for each stock keeping unit (SKU). Every week the stores order enough to bring their inventory positions up to the base stock level, and trucks move the orders from regional warehouses to the stores.

See continuous review system, inventory management, min-max inventory system, order-up-to level, perpetual inventory system, reorder point, safety stock, slow moving inventory, supermarket, warehouse.

periods supply – The “time quantity” for an inventory; also known as days on hand (DOH), days supply, days of inventory, days in inventory (DII), inventory days, inventory period, coverage period, weeks supply, and months supply.

The periods supply is the expected time remaining before the current inventory goes to zero, assuming that the current average demand rate does not change. The periods supply metric is often preferable to the inventory turnover metric, because it is easier to understand and can easily be related to procurement and manufacturing leadtimes.

Periods supply is estimated by taking the current inventory and dividing by some estimate of the current (or future) average demand. The current average demand might be a simple moving average, an exponentially smoothed average, or an exponentially smoothed average with trend. For example, a professor has 100 pounds of candy in his office and is consuming 20 pounds per day. Therefore, the professor has a five-day supply.
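A small Python sketch of this estimate, using two of the demand averages mentioned above. The daily demand history, the smoothing constant α = 0.2, and initializing the smoothed average with the first observation are illustrative assumptions.

```python
def periods_supply(on_hand: float, avg_demand: float) -> float:
    """Periods until on-hand inventory runs out at the current average demand rate."""
    if avg_demand <= 0:
        return float("inf")        # no demand: the inventory never runs out
    return on_hand / avg_demand


def moving_average(history: list[float], n: int) -> float:
    """Simple moving average of the last n periods."""
    window = history[-n:]
    return sum(window) / len(window)


def exponential_smoothing(history: list[float], alpha: float) -> float:
    """Exponentially smoothed average, initialized with the first observation."""
    level = history[0]
    for demand in history[1:]:
        level = alpha * demand + (1 - alpha) * level
    return level


daily_use = [18, 22, 20, 19, 21]    # hypothetical pounds of candy eaten per day
print(periods_supply(100, 20))                                      # 5.0 days
print(periods_supply(100, moving_average(daily_use, 3)))            # 5.0 days
print(round(periods_supply(100, exponential_smoothing(daily_use, 0.2)), 2))
```

The two averages give slightly different answers because exponential smoothing still carries some weight from the low early observations.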

The periods supply metric and the inventory turnover metric measure essentially the same inventory performance. However, periods supply is based on the current average or forecasted demand, and inventory turnover is based on historical actual demand or cost of goods sold over a specified time period. If demand is relatively stable, one can easily be estimated from the other. The relationships between inventory turnover (T) and days on hand are T = 365/DOH and DOH = 365/T. Inventory Dollar Days (IDD) is the unit cost times DOH.
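These conversions are simple enough to sketch directly, assuming a 365-day year as in the formulas above. The unit cost and inventory levels are illustrative.

```python
DAYS_PER_YEAR = 365


def turns_from_doh(doh: float) -> float:
    """Inventory turnover T = 365 / DOH."""
    return DAYS_PER_YEAR / doh


def doh_from_turns(turns: float) -> float:
    """Days on hand DOH = 365 / T."""
    return DAYS_PER_YEAR / turns


def inventory_dollar_days(unit_cost: float, doh: float) -> float:
    """Inventory Dollar Days as defined in this entry: unit cost times DOH."""
    return unit_cost * doh


print(turns_from_doh(73))                   # 5.0 turns per year
print(round(doh_from_turns(12), 1))         # 30.4 days on hand
print(inventory_dollar_days(40.0, 30))      # 1200.0 dollar-days
```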

The days supply for work-in-process (WIP) inventory can also be used as an estimate of the cycle time. For example, a firm with 10-days supply of WIP inventory has a cycle time of about 10 days. This concept is based on Little’s Law (Little 1961), which states that the average inventory is the demand rate times the cycle time. Written in queuing theory terms, this is L = λW, where L is the number in system (the work-in-process), λ is the mean arrival rate (the demand rate), and W is the time in system (the cycle time). Note that when days supply is calculated from financial measures, this estimate of the average cycle time is a dollar-weighted average.
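The sketch below applies Little's Law solved for the cycle time, W = L/λ, and shows why a days-supply figure computed from financial totals (WIP dollars divided by cost of goods sold per day) is a dollar-weighted average of the item cycle times. The two products and their costs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    wip_units: float       # average units in process
    units_per_day: float   # throughput (demand) rate
    unit_cost: float       # dollars per unit


def cycle_time_days(wip_units: float, units_per_day: float) -> float:
    """Little's Law solved for W: W = L / lambda."""
    return wip_units / units_per_day


def dollar_weighted_cycle_time(items: list[Item]) -> float:
    """Days supply of WIP from financial totals: WIP dollars / cost of goods per day."""
    wip_dollars = sum(i.wip_units * i.unit_cost for i in items)
    cogs_per_day = sum(i.units_per_day * i.unit_cost for i in items)
    return wip_dollars / cogs_per_day


items = [Item(100, 10, 10.0), Item(100, 50, 100.0)]   # hypothetical products
print([cycle_time_days(i.wip_units, i.units_per_day) for i in items])   # [10.0, 2.0]
print(round(dollar_weighted_cycle_time(items), 2))                       # 2.16
```

The financial figure (about 2.2 days) sits close to the expensive, fast-moving product's 2 days rather than the simple average of 6 days, which is what a dollar-weighted average means here.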

See cycle time, Inventory Dollar Days (IDD), inventory management, inventory turnover, Little’s Law, lot-for-lot, lotsize, Period Order Quantity (POQ), weighted average.

Perishable Asset Resource Management (PARM) – See yield management.

permutations – See combinations.

perpetual inventory system – An inventory control system that keeps accurate inventory records at all times.

In a perpetual inventory system, records and balances are updated with every receipt, withdrawal, and inventory balance correction. These systems often provide real-time visibility of inventory position (inventory on-hand and inventory on-order). In contrast, an inventory system could update inventory records periodically. However, with modern computers it makes little sense to use a periodic updating system.

See on-hand inventory, on-order inventory, periodic review system, real-time.

personal operations management – A philosophy and set of practices for applying operations management principles to help individuals (particularly knowledge workers) become more productive.

The term “personal operations management” was coined by the author (Hill 2011a), whose book adapts and applies lean principles to personal time and life management.

See 6Ps, delegation, Getting Things Done (GTD), knowledge worker, parking lot, SMART goals, time burglar, two-minute rule, two-second rule, tyranny of the urgent.

PERT – See Project Evaluation and Review Technique.

phantom – See phantom bill of material.

phantom bill of material – A bill of material coding and structuring technique used primarily for transient (non-stocked) subassemblies; phantom items are called blow-through (or blowthrough) or transient items.

A phantom bill of material represents an item that is physically built but rarely stocked before being used in the next level in the bill of material. Materials Requirements Planning (MRP) systems do not create planned orders for phantom items and are said to “blow through” phantom items.
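The following Python sketch illustrates the blow-through idea: the explosion creates planned orders for normal items but passes a phantom's requirement straight through to its components. The bill of material and item names are hypothetical. Several simplifications are assumptions: there are no leadtime offsets and no lot sizing, and only the phantom is netted. Using up any phantom units that happen to be in stock before blowing through is a common convention, but it is assumed here.

```python
from collections import defaultdict

# Hypothetical single-level BOM: parent -> [(component, quantity per parent)]
BOM = {
    "BIKE": [("FRAME_ASSY", 1), ("WHEEL", 2)],
    "FRAME_ASSY": [("FRAME", 1), ("FORK", 1)],   # transient subassembly
}
PHANTOMS = {"FRAME_ASSY"}


def explode(item, qty, on_hand, planned):
    """Explode a requirement, creating planned orders but blowing through phantoms.

    Simplifications (assumptions): no leadtime offsets, no lot sizing, and only
    phantom items are netted against on-hand stock.
    """
    if item in PHANTOMS:
        used = min(on_hand.get(item, 0), qty)   # use up any phantoms that happen to be in stock
        on_hand[item] = on_hand.get(item, 0) - used
        qty -= used                              # no planned order for the phantom itself
    else:
        planned[item] += qty                     # planned order for a normal item
    if qty > 0:
        for component, per in BOM.get(item, []):
            explode(component, qty * per, on_hand, planned)


planned = defaultdict(int)
explode("BIKE", 10, {"FRAME_ASSY": 3}, planned)
print(dict(planned))   # {'BIKE': 10, 'FRAME': 7, 'FORK': 7, 'WHEEL': 20}
```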

See bill of material (BOM), Materials Requirements Planning (MRP), part number.

phase review – A step in the new product development process where approval is required to proceed to the next step; also called stage-gate review and tollgate review.

See the stage-gate process entry for more detail.

See DMAIC, New Product Development (NPD), stage-gate process.

phase-in/phase-out planning – A planning process that seeks to coordinate the introduction of a new product with the discontinuation of an existing product.

New products typically offer updated features and benefits that make the current product obsolete. The phase-in of the new product and the phase-out of the current product is complicated by many factors, such as forecasting the demand for both products, planning the consumption and disposal of the inventory of the current product, filling the distribution channel with the new product, giving proper incentives to the sales force for both the current and new products, coordinating end-of-life policies for all related products and consumables, carefully designing a pricing strategy that maximizes contribution to profit, and last, but not least, creating an effective market communication program. With respect to market communications, some firms have found themselves in trouble when information about a new product becomes public and causes the market demand for the current product to decline rapidly.

For example, the demand for the Apple iPad 2 would be expected to decline rapidly once the Apple iPad 3 was announced. Hill and Sawaya (2004) discuss phase-in/phase-out planning in the context of the medical device industry.

See product life cycle management.

physical inventory – The process of auditing inventory balances by counting all physical inventory on-hand.

A physical inventory is usually done annually or quarterly and usually requires that all manufacturing operations be stopped. Cycle counting is better than the physical inventory count, because it (1) counts important items more often, (2) fixes the record-keeping process rather than just fixing the counts, and (3) maintains record accuracy during the year.

See cycle counting.

pick face – The storage area immediately accessible to the order picker.

pick list – A warehouse term for an instruction to retrieve items from storage; also called a picking list.

A pick list gives stock pickers the information they need to pick the right items, in the right quantities, from the right locations, in the right sequence (route). These items may be for a specific production order, sales order, or interplant order, or, alternatively, they may be for a group of orders. If the pick list is for a group of orders, the orders need to be consolidated (assembled) from the items that are picked.

See backflushing, picking, warehouse.

picking – The process of collecting items from storage locations to meet the requirements of an order.

Warehouses usually have more outgoing shipments (customer orders) than incoming shipments (purchase orders). This is particularly true for warehouses that serve retailers, because these warehouses are often used to break out large orders from a few manufacturers into small orders sent to many retailers. The picking process, therefore, has a significant impact on overall supply chain efficiency. Mispicks (errors in picking) directly impact customers and customer satisfaction.

The ideal picking system will have low purchase and implementation cost, low operating cost, low cycle time per pick, and high accuracy. The efficiency of the picking system is highly dependent upon the warehouse system and policies for locating SKUs in the warehouse.

The type of picking process depends on many factors, such as product characteristics (weight, volume, dimensions, fragility, perishability), number of transactions, number of orders, picks per order, quantity per pick, picks per SKU, total number of SKUs, shipping requirements (piece pick, case pick, or full-pallet loads), and value-added services, such as private labeling, cutting, or packaging. A pick to clear rule selects item locations with the smallest quantities first to empty the bins more quickly. A pick to light system uses LED lights for each bin and uses these lights to guide the picker to the next bin to be picked.
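The pick to clear rule can be sketched as a simple allocation that fills a pick from the bins holding the smallest quantities first. The bin IDs and quantities are hypothetical.

```python
def pick_to_clear(locations, qty_needed):
    """Allocate a pick across bins, smallest on-hand quantity first.

    locations: list of (bin_id, qty_on_hand) for one SKU.
    Returns a list of (bin_id, qty_to_pick) in picking order.
    """
    plan = []
    remaining = qty_needed
    for bin_id, on_hand in sorted(locations, key=lambda loc: loc[1]):
        if remaining <= 0:
            break
        take = min(on_hand, remaining)
        if take > 0:
            plan.append((bin_id, take))
            remaining -= take
    if remaining > 0:
        raise ValueError(f"short by {remaining} units")
    return plan


bins = [("A-01", 40), ("B-07", 5), ("C-12", 12)]   # hypothetical bin IDs
print(pick_to_clear(bins, 20))   # [('B-07', 5), ('C-12', 12), ('A-01', 3)]
```

The sketch ignores travel distance and pick sequence, which a WMS would also weigh. It shows only how the rule empties the two small bins before drawing on the large one.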

Picking is closely connected to the slotting policies, which are the rules used to guide employees to a bin (or bins) when putting materials away. All Warehouse Management Systems (WMS) provide functionality for both slotting and picking rules.

Voice picking (voice recognition) uses speech recognition and speech synthesis technologies to allow workers to communicate with the WMS. Warehouse workers use wireless, wearable computers with headsets and microphones to receive instructions by voice and verbally confirm their actions back to the system. The wearable computer, or voice terminal, communicates with the WMS via a radio frequency local area network (LAN). Directed RF picking uses radio frequency technologies to transmit picking, put away, replenishment, and cycle count instructions to warehouse personnel using either handheld or truck-mounted devices.

See batch picking, carousel, discrete order picking, first pick ratio, flow rack, forward pick area, pick face, pick list, private label, random storage location, reserve storage area, slotting, task interleaving, voice picking, warehouse, Warehouse Management System (WMS), wave picking, zone picking.

picking list – See pick list.

piece work – Pay for performance based on the number of units produced rather than on the number of hours worked or a salary; also called piece rate.

See gainsharing, pay for skill.

pilot test – A method used to test new software or a production process before it is fully implemented; for information systems, a pilot test is also called a conference room pilot.

In the software context, the purpose of the pilot test may be to (1) evaluate for purchase, (2) evaluate for implementation, (3) evaluate whether the database and settings in the software are ready for implementation, and (4) train users on the software. Ideally, the pilot test is conducted with actual data by actual decision makers in conditions as close as possible to actual operating conditions. The pilot uses realistic test data, but the system is not “live,” which means that no data are changed and no decisions are made that affect actual operations. In a manufacturing context, a pilot run is done to test the capabilities of the system before ramping up production.

See beta test, implementation, prototype.

pipeline inventory – The number of units (or dollars) of inventory currently being moved from one location to another.

See supply chain management, Work-in-Process (WIP) inventory.

pitch – The time allowed to make one container of a product.

Pitch is used to check if actual production is keeping up with takt time requirements. Pitch is a multiple of takt time based on the container size. For example, if the container size is 60 units and the takt time is 10 seconds, pitch is 60 × 10 = 600 seconds (or 10 minutes) for each container. Pitch can also be expressed as a rate. For example, if pitch (as a time) is 10 minutes per container, pitch (as a rate) is 6 containers per hour.
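The pitch arithmetic in the example can be written out as follows. Computing takt time as available production time divided by customer demand is the usual definition, assumed here, and the shift length and demand are hypothetical values chosen to give the 10-second takt time used above.

```python
def takt_time(available_seconds: float, demand_units: float) -> float:
    """Takt time: available production time divided by customer demand."""
    return available_seconds / demand_units


def pitch_seconds(takt_seconds: float, container_qty: int) -> float:
    """Pitch as a time: takt time multiplied by the container quantity."""
    return takt_seconds * container_qty


def pitch_per_hour(pitch_sec: float) -> float:
    """Pitch as a rate: containers per hour."""
    return 3600.0 / pitch_sec


takt = takt_time(27_000, 2_700)        # hypothetical 7.5-hour shift, 2,700 units -> 10 s
p = pitch_seconds(takt, 60)            # 600 s, i.e., 10 minutes per container
print(takt, p, pitch_per_hour(p))      # 10.0 600.0 6.0
```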

See cycle time, lean thinking, takt time.

Plan-Do-Check-Act – See PDCA.

Plan-Do-Study-Act – See PDCA.

planned leadtime – See cycle time, leadtime.

planned obsolescence – A strategy of designing products to become obsolete or non-functional after a period of time; also known as built-in obsolescence.

Firms sometimes use planned obsolescence to motivate customers to buy replacement products. Obsolescence of desirability refers to a marketing strategy of trying to make the previous model appear to be obsolete from a psychological standpoint (e.g., automobile style and other fashion goods). Obsolescence of function refers to a strategy of making the product cease to be functional after some period of time or number of uses (e.g., products with built-in, non-replaceable batteries).

See New Product Development (NPD), product life cycle management.

planned order – An MRP term for a recommended purchase order or manufacturing order generated by the planning system.

MRP systems create planned orders to meet the net requirements for higher-level products, assemblies, and subassemblies. Planned orders are deleted and replaced by new planned orders every time MRP recalculates the materials plan. When planners convert a planned order to a firm planned order, it can no longer be changed by the MRP system. Capacity Requirements Planning (CRP) uses planned orders, firm planned orders, and open (released) orders to determine the requirements (load) on each workcenter for each day.

See Capacity Requirements Planning (CRP), manufacturing order, Materials Requirements Planning (MRP), purchase order (PO), time fence.

planning bill of material – See bill of material (BOM).

planning horizon – The span of time that the master schedule extends into the future.

In a manufacturing context, the planning horizon for the master production schedule should extend beyond the cumulative (stacked) leadtime for all components.
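The cumulative (stacked) leadtime is the longest chain of leadtimes from the end item down through its bill of material. A short recursive sketch, using a hypothetical product structure and leadtimes in weeks:

```python
# Hypothetical product structure and leadtimes (weeks)
BOM = {"PUMP": ["MOTOR", "HOUSING"], "MOTOR": ["ROTOR", "WINDING"], "HOUSING": ["CASTING"]}
LEADTIME = {"PUMP": 2, "MOTOR": 3, "HOUSING": 1, "ROTOR": 4, "WINDING": 2, "CASTING": 8}


def cumulative_leadtime(item: str) -> int:
    """Longest chain of leadtimes from this item down to its purchased components."""
    children = BOM.get(item, [])
    return LEADTIME[item] + max((cumulative_leadtime(c) for c in children), default=0)


def horizon_is_long_enough(horizon: int, end_item: str) -> bool:
    """The planning horizon should extend beyond the cumulative (stacked) leadtime."""
    return horizon > cumulative_leadtime(end_item)


print(cumulative_leadtime("PUMP"))          # 11: PUMP(2) + HOUSING(1) + CASTING(8)
print(horizon_is_long_enough(13, "PUMP"))   # True
```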

See cumulative leadtime, time fence.

planning versus forecasting – See forecasting demand.

planogram – A diagram used to specify how products are to be displayed in a retail space; also called plano-gram, plan-o-gram, and POG.

A good planogram allows inexperienced employees to properly maintain the retail shelf stock and appearance. A good planogram system will help the retailer (1) control inventory investment, (2) maximize inventory turnover, (3) minimize labor cost, (4) satisfy customers, (5) maximize sales, (6) maximize profit, and (7) maximize return on investment for the space.

See assortment, category captain, facility layout.

plant stock – An SAP term for on-hand inventory in a particular plant location.

plant-within-a-plant – A relatively autonomous process (“a plant”) located within a facility that allows for more focus and accountability; sometimes called a focused factory.

Each plant-within-a-plant (or focused factory) will likely have unique operations objectives (cost, quality, delivery, etc.) and unique workforce policies, production control methods, accounting systems, etc. This concept was promoted by Professor Wickham Skinner at Harvard Business School in a famous article on the focused factory (Skinner 1974). See the focused factory entry for more details.

See facility layout, focused factory, operations strategy.

platform strategy – A new product development strategy that plans new products around a small number of basic product designs (platforms) and allows for many final products with differing features, functions, and prices.

A platform strategy is commonly used in the automotive industry, where the platform is a chassis/drive-train combination upon which different models are built (e.g., Chevrolet, Buick, Cadillac). This concept is used in many other industries, such as personal computers (e.g., Dell), white goods (e.g., Whirlpool), and medical devices (e.g., Medtronic).

See New Product Development (NPD), white goods.

point of use – The lean manufacturing practice of storing materials, tools, and supplies in a manufacturing facility close to where they are needed in the manufacturing process.

Point of use reduces non-value-added walking and searching time.

See 5S, dock-to-stock, receiving, water spider.

PMI – See Project Management Institute (PMI).

PO – See purchase order.

Point-of-Sale (POS) – A data collection device located where products are sold; usually a scanning and cash register device in a retail store.

POS data collection provides a rich source of data that can be used to (1) provide real-time sales information for the entire supply chain, (2) help maintain accurate inventory records, (3) automatically trigger replenishment orders as required, (4) provide data for detailed sales analysis, and (5) provide in-store information to shoppers.

See category captain, real-time, Universal Product Code (UPC).

Poisson distribution – A discrete probability distribution useful for modeling demand when the average demand is low; also used for modeling the number of arrivals to a system in a fixed time period.

Like the exponential distribution, the Poisson distribution only has one parameter. Unlike the exponential, the Poisson is a discrete distribution, which means that it is only defined for integer values. The mean of the Poisson distribution is λ (lambda), and the variance is equal to the mean. The probability mass function p(x) is the probability of x and is only defined for integer values of x.

Parameter: The Poisson has only one parameter (λ), which is the mean.

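Probability mass and distribution functions: $p(x) = \dfrac{\lambda^x e^{-\lambda}}{x!}$ and $F(x) = \sum_{i=0}^{x} \dfrac{\lambda^i e^{-\lambda}}{i!}$ for $x = 0, 1, 2, \ldots$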

Partial expectation function: The partial expectation for the Poisson distribution is $H(x) = \sum_{i=x}^{\infty}(i - x)\,p(i) = \lambda\,(1 - F(x-2)) - x\,(1 - F(x-1))$ for $x \ge 1$ (Hadley & Whitin 1963). This is a useful tool for inventory models.

Statistics: Range non-negative integers {0, 1, ... }, mean λ (note that λ need not be an integer), variance λ, mode λ − 1 and λ if λ is an integer and $\lfloor \lambda \rfloor$ otherwise, where $\lfloor x \rfloor$ rounds down to the nearest integer. Median $\approx \lfloor \lambda + 1/3 - 0.02/\lambda \rfloor$. Coefficient of variation $1/\sqrt{\lambda}$. Skewness $1/\sqrt{\lambda}$. Kurtosis $3 + 1/\lambda$ (excess kurtosis $1/\lambda$).

Graph: The graph below shows the Poisson probability mass function with mean λ = 3.

Excel: The Excel function for the Poisson probability mass function is POISSON(x,λ, FALSE). The Microsoft Excel function for the cumulative Poisson distribution is POISSON(x,λ, TRUE), which returns the probability that the random variable will be less than or equal to x given that the mean of the Poisson distribution is λ. Excel does not provide an inverse Poisson distribution function.

Excel simulation: In an Excel simulation, it is necessary to use Excel formulas or a VBA function to generate Poisson distributed random variates, because Excel does not have an inverse function for the Poisson distribution. Law and Kelton (2000) presented a simple and fast algorithm, which is implemented in the VBA code below. Law and Kelton (2000) also noted that the inverse transform method with a simple search procedure can perform well. Given that the Poisson distribution is typically used only for distributions with a low mean (e.g., λ < 9), a simple search procedure is reasonably fast.
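For readers working outside Excel, here is a Python sketch of the inverse transform method with a simple sequential search. It starts at F(0) = e^(−λ) and builds each probability from the previous one with p(x) = p(x − 1)·λ/x, stopping at the first x where F(x) ≥ u. This sketch is the search method, not the faster algorithm the text credits to Law and Kelton, and the function names are illustrative.

```python
import math
import random


def poisson_inverse_transform(lam: float, u: float) -> int:
    """Return the smallest x with F(x) >= u by searching upward from x = 0.

    Intended for small means; for very large lam, exp(-lam) underflows and
    this simple search is no longer appropriate.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    x = 0
    p = math.exp(-lam)      # p(0)
    cdf = p                 # F(0)
    while cdf < u and p > 0.0:
        x += 1
        p *= lam / x        # p(x) = p(x - 1) * lam / x
        cdf += p
    return x


def poisson_variate(lam: float, rng: random.Random) -> int:
    """Generate one Poisson random variate from a uniform(0, 1) draw."""
    return poisson_inverse_transform(lam, rng.random())


rng = random.Random(42)
sample = [poisson_variate(3.0, rng) for _ in range(20_000)]
print(sum(sample) / len(sample))   # should be close to 3
```

The `p > 0.0` guard stops the loop if floating-point rounding keeps the running total just below a uniform draw very close to 1.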

Relationships to other distributions: The Poisson and exponential distributions both have only one parameter. If the number of arrivals in a given time interval [0,t] follows the Poisson distribution with mean λt, the interarrival times follow the exponential distribution with mean 1/λ. (See the queuing theory entry.) If X1, X2, ..., Xm are independent Poisson distributed random variables with means λi, then X1 + X2 + ... + Xm is Poisson distributed with mean λ1 + λ2 + ... + λm. The Poisson distribution is a good approximation of the binomial distribution when $n \ge 20$ and $p \le 0.05$ and an excellent approximation when $n \ge 100$ and $np \le 10$. For large values of λ (e.g., λ > 1000), the normal distribution with mean λ and variance λ is an excellent approximation to the Poisson. The normal distribution is a good approximation for the Poisson when $\lambda \ge 10$ if the continuity correction is used (i.e., replace P(x) with P(x + 0.5)). Hadley and Whitin (1963) presented several pages of equations related to the Poisson distribution that are useful for inventory models.
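The following Python sketch compares the exact Poisson distribution function with the normal approximation that uses mean λ, variance λ, and the continuity correction x + 0.5. It uses only the standard library, computing the normal distribution function from `math.erf`. The choice of λ = 20 and the x values printed are illustrative.

```python
import math


def poisson_cdf(x: int, lam: float) -> float:
    """Exact Poisson cumulative probability P(X <= x)."""
    if x < 0:
        return 0.0
    p = math.exp(-lam)
    total = p
    for i in range(1, x + 1):
        p *= lam / i
        total += p
    return total


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def poisson_cdf_normal_approx(x: int, lam: float) -> float:
    """Normal approximation with mean lam, variance lam, and continuity correction."""
    return normal_cdf((x + 0.5 - lam) / math.sqrt(lam))


lam = 20.0
for x in (15, 20, 25):
    print(x, round(poisson_cdf(x, lam), 4), round(poisson_cdf_normal_approx(x, lam), 4))
```

At λ = 20, the two columns typically differ by no more than about 0.015. This residual gap comes from the Poisson's skewness, which shrinks as λ grows.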

History: The French mathematician Siméon Denis Poisson (1781-1840) introduced this distribution.

See bathtub curve, binomial distribution, c-chart, dollar unit sampling, exponential distribution, hypergeometric distribution, negative binomial distribution, newsvendor model, np-chart, probability distribution, probability mass function, queuing theory, slow moving inventory, u-chart.

poka-yoke – See error proofing.

POLCA (Paired-cell Overlapping Loops of Cards with Authorization) – A hybrid push/pull production control system for low-volume manufacturing developed by Professor Rajan Suri at the University of Wisconsin.

Suri and Krishnamurthy (2003, p. 1) describe POLCA as follows: “It is a hybrid push-pull system that combines the best features of card-based pull (Kanban) systems and push (MRP) systems. At the same time, POLCA gets around the limitations of pull systems in high-variety or custom product environments, as well as the drawbacks of standard MRP, which often results in long lead times and high WIP.”

See CONWIP, Drum-Buffer-Rope (DBR), kanban.

Pollaczek-Khintchine formula – A relatively simple queuing formula that relates the standard deviation of the service time to the mean number of customers in queue for a single server queuing system.

The formula itself can be found in the queuing theory entry.

See queuing theory.

POMS – See Production Operations Management Society.

pooling – A practice of combining servers to reduce customer waiting time or combining inventory stocking locations to reduce inventory.

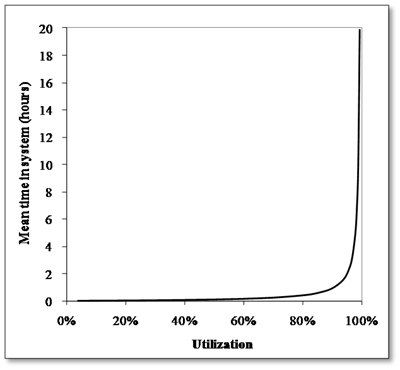

Operations managers often have to decide if it is better to have separate channels (each with its own queue) or to combine them into one queue (waiting line). Similarly, operations managers often have to decide if it is better to hold inventory in separate stocking locations or to combine them into a single stocking location. This is called the pooling problem. A queuing example is used here to explore the benefits of pooling.

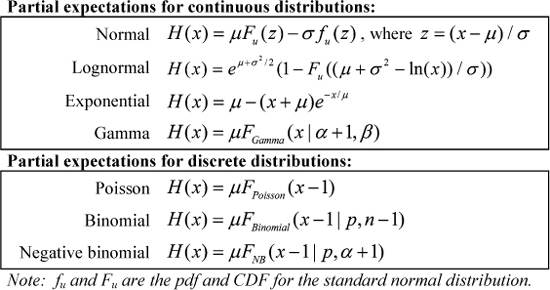

A firm has two technical experts, with one on the East Coast and one on the West Coast. Customers on the West Coast are only allowed to call the West Coast expert, and the same is true for the East Coast. The average interarrival time for customers calling the technical experts is a = 0.4 hours on both coasts. The average service time for the two identical experts is p = 0.3 hours. The utilization for each expert is ρ = p / a = 0.3/0.4 = 75%. (Be careful not to confuse ρ and p.) The coefficient of variation for the interarrival time is 1, and the coefficient of variation for the service time is also 1 (i.e., ca = 1 and cs = 1). Each expert is analyzed separately, which means that the number of servers is s = 1. Using the approximate G/G/s model (see the queuing theory entry), the average queue time is:

$$W_q \approx \left(\frac{c_a^2 + c_s^2}{2}\right)\left(\frac{\rho^{\sqrt{2(s+1)}-1}}{s(1-\rho)}\right)p = \left(\frac{1^2 + 1^2}{2}\right)\left(\frac{0.75^{\sqrt{2(1+1)}-1}}{1(1-0.75)}\right)(0.3) = 0.9 \text{ hours}$$

Therefore, customers will have to wait about 0.9 hours on average for each expert.

If the organization were to combine the two lines to form just one line for the experts, it would “pool” the systems. In this case, the interarrival time for the combined system is half that of the separate systems (i.e., a = 0.2 hours), but the average service time remains the same (p = 0.3 hours), so the utilization is still ρ = p/(sa) = 0.3/(2 × 0.2) = 75%. Again, using the approximate G/G/s model, but with s = 2 servers, the average queue time for this system is:

$$W_q \approx \left(\frac{1^2 + 1^2}{2}\right)\left(\frac{0.75^{\sqrt{2(2+1)}-1}}{2(1-0.75)}\right)(0.3) \approx 0.4 \text{ hours}$$

Therefore, customers in the “pooled” system have to wait about 0.4 hours on average. In this case, pooling reduced the average waiting time by more than half (from 0.9 hours to about 0.4 hours), a very significant difference.
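The pooling comparison can be reproduced in a few lines of Python. This sketch assumes the widely used approximation W_q ≈ ((ca² + cs²)/2)·(ρ^(√(2(s+1)) − 1)/(s(1 − ρ)))·p, with ρ = p/(sa). It is an assumption about the exact form given in the queuing theory entry, but it reproduces the 0.9-hour and 0.4-hour figures in this example.

```python
import math


def ggs_queue_time(a: float, p: float, s: int, ca: float = 1.0, cs: float = 1.0) -> float:
    """Approximate average time in queue for a G/G/s system.

    a: mean interarrival time, p: mean service time, s: number of servers,
    ca, cs: coefficients of variation of interarrival and service times.
    """
    rho = p / (s * a)                       # utilization per server
    if rho >= 1.0:
        raise ValueError("system is unstable (utilization >= 1)")
    variability = (ca ** 2 + cs ** 2) / 2.0
    utilization = rho ** (math.sqrt(2.0 * (s + 1)) - 1.0) / (s * (1.0 - rho))
    return variability * utilization * p


separate = ggs_queue_time(a=0.4, p=0.3, s=1)   # each coast on its own
pooled = ggs_queue_time(a=0.2, p=0.3, s=2)     # one shared line, two experts
print(round(separate, 2), round(pooled, 2))    # 0.9 0.4
```

Both systems run at 75% utilization. The gain comes entirely from the utilization term, because with two servers sharing one line, an idle expert can serve a waiting customer.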

Why is the pooled system so much better? The answer is that in the old system, one expert could be idle while the other had customers waiting in line. In other words, the pooled system makes better use of the experts.

The benefits of pooling are often significant. The main point here is not the queuing model, but rather the fact that many systems can often be improved by pooling resources. Pooled systems can often make better use of resources, reduce the waiting time for customers, and reduce the risk of long waiting times.

Examples of this pooling concept can be found in many contexts:

• The dean of a business school centralized all tech support people for the school into one office instead of having one assigned to each department. The pooled resource provided faster and better support.

• Delta Airlines shares parts with other airlines in Singapore. This reduces the risk of any airline not having a needed part and reduces the required investment.

• Xcel Energy shares expensive power generator parts with many other firms in the Midwestern U.S.

• A large service firm reduced average waiting time by consolidating its call centers in one location.

Pooling also has disadvantages. When a service firm consolidates its call centers, some local customers might not experience service that is culturally sensitive, particularly if the call center is moved to another country. Also, large call centers can experience diseconomies of scale. Putting all operations in one location can be risky from a business continuity standpoint, because a disaster might put the entire business at risk. Finally, in a queuing context, if the mean service times for the customer populations are very different from one another, the coefficient of variation of the pooled service time will increase, and the average time in queue will also increase (Van Dijk & Van Der Sluis 2007).

See addition principle, call center, consolidation, diseconomy of scale, postponement, queuing theory, slow moving inventory.

POQ – See Period Order Quantity.

portal – See corporate portal.

Porter’s Five Forces – See five forces analysis.

POS – See Point-of-Sale.

post-mortem review – See post-project review.

post-project review – The practice of appraising a project after it has been completed to promote learning for (1) the members of the project team, (2) the sponsoring organization, and (3) the wider organization; also called post-mortem review, project retrospective, and post-implementation audit.

Organizations should seek to learn from their successes and failures. Organizations that do not do this are doomed to repeat their mistakes over and over again. This is a critical activity for successful project management. Gene Heupel of GMHeupel Associates recommends that process improvement project teams conduct three activities at the end of a project: (1) create a project completion notice, (2) conduct a post-project review, and (3) create a project closing report. Each of these is discussed briefly below.

The project completion notice serves several purposes, including (1) verifying that the deliverables in the project charter have been completed, (2) defining the plan to sustain the implementation, and (3) releasing the team and establishing the end-of-project activities. Heupel recommends that the project team have the project sponsor sign this document.

The post-project review is a comprehensive review conducted by the project team to ensure that the team and the organization have learned as much as they can from the project. Lessons learned from this review are documented in the project closing report. It is important to remember that the purpose of this review is not to blame people, but rather to help the organization learn from the project experience so that going forward it will retain the good practices and improve the poor ones.

The project closing report contains all of the significant documents related to the project as well as lessons learned from the post-project review. This report becomes an important part of the organization’s knowledge base going forward. The project is not complete until the sponsor has signed off on the project closing report.

In many contexts, the post-project review might also (1) assess the level of user satisfaction, (2) evaluate the degree to which the stated goals were accomplished, and (3) list further actions required.

See deliverables, forming-storming-norming-performing model, implementation, lean sigma, learning organization, project charter, project management, sponsor.

postponement – The principle of delaying differentiation (customization) for a product as long as possible to minimize complexity and inventory; also called delayed differentiation, late customization, and late configuration.

Forecasting the demand for standard products is relatively easy, and the inventory carrying cost for these products is relatively low. However, forecasting demand for products differentiated for a particular channel or customer is much harder, and the carrying cost is often high due to obsolescence. Firms will likely have too much inventory for some differentiated products and too little for others. If a firm can delay the differentiation of the products until after the customer order has been received, the finished goods inventory is eliminated. Postponement is a form of pooling, where the organization pools the inventory as long as possible before the customer order requires differentiation.

Postponement is a foundational principle for mass customization. Postponement principles often allow an organization to change from make to order to assemble to order or configure to order, which allows the firm to reduce customer leadtime or increase customization. The point at which products are customized for customers is called the push-pull boundary.

For example, HP was able to standardize its printers and put all country-specific power management technology in the cord. This allowed for lower inventory and better customer service.

See agile manufacturing, customer leadtime, mass customization, New Product Development (NPD), operations strategy, pooling, push-pull boundary, respond to order (RTO), standard products.

predatory pricing – The practice of selling a product or service at a very low price (even below cost) to drive competitors out of the market and create barriers to entry for potential new competitors.

After a company has driven its competitors out of the market, it can recoup its losses by charging higher prices in a monopoly relationship with its customers. Predatory pricing is against the law in many countries.

See antitrust laws, bid rigging, bribery, price fixing.

predictive maintenance – See maintenance.

premium freight – Additional charges paid to a transportation provider to expedite shipments.

Premium freight can be used for bringing purchased materials into a facility and delivering products to customers. Premium freight is used when the normal freight method cannot provide needed materials in time for a production schedule. An increase in premium freight for purchased materials suggests that the firm should consider freezing more of the master production schedule (i.e., move out the time fence).

Similarly, premium freight is used when the normal freight method cannot deliver finished goods to customers by the promised delivery dates. An increase in premium freight for delivering products to customers suggests that the firm may be over-promising on its delivery dates to its customers.

See logistics, time fence.

prevention – Work to design and improve products and processes so defects are avoided.

Prevention cost is the cost associated with this work and includes product design, process design, worker selection, worker training, and many other costs. The cost of quality suggests that investing in prevention will generally reduce appraisal (inspection) cost, internal failure cost, and external failure cost. However, many firms find that prevention cost is the hardest component of the cost of quality to measure.

See cost of quality, error proofing, Failure Mode and Effects Analysis (FMEA), rework, sentinel event.

preventive maintenance – See maintenance.

price elasticity of demand – See elasticity.

price fixing – Agreement between competitors to set an agreed-upon minimum price.

Price fixing inhibits competition and therefore forces customers to pay more than they would in a competitive environment. Price fixing is an illegal practice in many countries.

See antitrust laws, bid rigging, bribery, predatory pricing, purchasing.

price of non-conformance – See cost of quality.

primacy effect – A concept from psychology and sociology that suggests that people assign disproportionate importance to initial stimuli or observations.

For example, if a subject reads a long list of words, he or she is more likely to remember words read toward the beginning of the list than words read in the middle. The phenomenon is due to the fact that short-term memory at the beginning of a sequence of events is far less “crowded,” because fewer items are being processed in the brain.

The recency effect is a similar concept from psychology and sociology that suggests that people assign disproportionate importance to final stimuli or observations.

In summary, the primacy and recency effects predict that people will remember the items near the beginning and the end of the list. Lawyers scheduling the appearance of witnesses for court testimony and managers scheduling a list of speakers at a conference take advantage of these effects when they put speakers they wish to emphasize at the beginning or end. In measuring customer satisfaction, it is well-known that customers place undue emphasis on their first and most recent customer experiences (“moments of truth”).

See moments of truth, service quality.

primary location – See random storage location.

Principal Component Analysis (PCA) – A statistical tool that transforms a number of possibly correlated variables into a smaller number of uncorrelated variables called principal components.

The first principal component accounts for as much of the variability in the data as possible, and each subsequent component accounts for as much of the remaining variability as possible.
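The idea that the first principal component captures as much of the variability as possible can be sketched in a few lines. This is a minimal pure-Python sketch, assuming a small hypothetical data set: it builds the sample covariance matrix and uses power iteration to find its largest eigenvalue and eigenvector, which are the variance captured by and the direction of the first principal component. The function names are illustrative. Subsequent components would require deflation or a full eigendecomposition, which this sketch does not show.

```python
import math


def covariance_matrix(rows):
    """Sample covariance matrix (n - 1 divisor) of a list of equal-length observations."""
    n, p = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(p)]
    centered = [[row[j] - means[j] for j in range(p)] for row in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(p)]
            for i in range(p)]


def mat_vec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def first_principal_component(cov, max_iter=1000, tol=1e-12):
    """Power iteration for the largest eigenvalue of cov and its unit eigenvector.

    Assumes cov is not the zero matrix and that the start vector is not exactly
    orthogonal to the leading eigenvector.
    """
    p = len(cov)
    v = [1.0 / (i + 1) for i in range(p)]  # arbitrary, asymmetric start vector
    eigenvalue = 0.0
    for _ in range(max_iter):
        w = mat_vec(cov, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
        # The Rayleigh quotient v'Cv estimates the eigenvalue (v has unit length).
        new_eigenvalue = sum(a * b for a, b in zip(v, mat_vec(cov, v)))
        converged = abs(new_eigenvalue - eigenvalue) < tol
        eigenvalue = new_eigenvalue
        if converged:
            break
    # An eigenvector's sign is arbitrary; make its largest component positive.
    if max(v, key=abs) < 0:
        v = [-x for x in v]
    return eigenvalue, v


# Hypothetical data: two strongly correlated measurements per item.
data = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8), (5.0, 5.1)]
cov = covariance_matrix(data)
eigenvalue, pc1 = first_principal_component(cov)
# Share of the total variance (trace of cov) captured by the first component.
share = eigenvalue / sum(cov[i][i] for i in range(len(cov)))
print("first principal component:", pc1)
print(f"share of total variance: {share:.3f}")
```

Because the two variables move together, nearly all of the variability lies along one direction. In practice, a linear algebra library would normally be used instead of hand-written power iteration.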

See cluster analysis, factor analysis.

print on demand – A customer interface strategy of printing books, manuals, and other materials in response to a customer order rather than creating an inventory; also called POD, publish on demand, and print to order.

Print on demand requires a printing process that can efficiently handle small printing batch sizes. Print on demand is a mass customization strategy made possible by computer-based printing technologies. Ideally, print on demand has nearly zero setup time, setup cost, finished goods inventory, and obsolete inventory.

See mass customization, respond to order (RTO).

print to order – See print on demand.

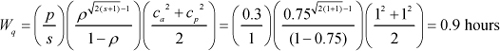

prisoners’ dilemma – A conflict situation (“game”) in which two players can decide to either cooperate or cheat.

The prisoners’ dilemma is a classic scenario in the game theory literature that received its name from the following hypothetical situation (Axelrod 1984). Imagine two criminals, A and B, arrested under suspicion of having committed a crime together. However, the police do not have sufficient proof to convict them. The police separate the prisoners and offer each of them the same deal. If one testifies for the prosecution against the other and the other remains silent, the betrayer goes free, and the silent accomplice receives the full 10-year sentence. If both stay silent, both prisoners are sentenced to only six months in jail for a minor charge due to lack of evidence. If each betrays the other, each receives a two-year sentence. Each prisoner must choose whether to betray the other or remain silent. However, neither prisoner knows what choice the other prisoner will make. The game is summarized in the following table.

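Prisoners’ dilemma (sentences served by prisoners A and B)

| | B stays silent (cooperates) | B betrays A (cheats) |
|---|---|---|
| A stays silent (cooperates) | A: 6 months; B: 6 months | A: 10 years; B: goes free |
| A betrays B (cheats) | A: goes free; B: 10 years | A: 2 years; B: 2 years |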

The gain for mutual cooperation in the prisoners’ dilemma is kept smaller than the gain for one-sided betrayal so that players are always tempted to betray. This economic relationship does not always hold. For example, two wolves working together can kill an animal that is more than twice as large as what either of them could kill alone.

The prisoners’ dilemma is meant to study short-term decision making where the actors do not have any specific expectations about future interactions or collaborations (e.g., in the original situation of the jailed criminals). Synergy usually reaches its full power only after a long-term process of mutual cooperation, such as wolves hunting deer. If two entities repeatedly face a prisoners’ dilemma with each other, a strategy that tends to perform well for each one is called tit for tat: cooperate on the first move, and thereafter copy the other player’s previous move (i.e., if you cheated on the previous move, I’ll cheat on this move; if you cooperated on the previous move, I’ll cooperate on this move).
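The contrast between a single play and repeated play can be illustrated with a small simulation. This is a sketch under stated assumptions: the sentences come from the jailed-criminals example in this entry (fewer years is better). The strategy and function names (`tit_for_tat`, `always_defect`, `play`) are hypothetical. The 10-round horizon is an arbitrary choice. Tit for tat is modeled as cooperating on the first move and then copying the other player’s previous move.

```python
# Prison sentence in years for (my_move, other_move), taken from the entry's
# example; lower is better. "C" = cooperate (stay silent), "D" = defect (betray).
SENTENCE = {
    ("C", "C"): 0.5,   # both stay silent: six months each
    ("C", "D"): 10.0,  # I stay silent, the other betrays: full sentence
    ("D", "C"): 0.0,   # I betray, the other stays silent: I go free
    ("D", "D"): 2.0,   # both betray: two years each
}


def tit_for_tat(my_moves, their_moves):
    """Cooperate on the first move, then copy the other player's previous move."""
    return their_moves[-1] if their_moves else "C"


def always_defect(my_moves, their_moves):
    """Betray on every move regardless of history."""
    return "D"


def play(strategy_a, strategy_b, rounds=10):
    """Repeat the game and return the total years served by A and by B."""
    moves_a, moves_b = [], []
    years_a = years_b = 0.0
    for _ in range(rounds):
        move_a = strategy_a(moves_a, moves_b)
        move_b = strategy_b(moves_b, moves_a)
        years_a += SENTENCE[(move_a, move_b)]
        years_b += SENTENCE[(move_b, move_a)]
        moves_a.append(move_a)
        moves_b.append(move_b)
    return years_a, years_b


# In a single round, betraying is better for each prisoner whatever the other does ...
for other in ("C", "D"):
    assert SENTENCE[("D", other)] < SENTENCE[("C", other)]

# ... but over repeated rounds, two tit-for-tat players do far better than two defectors.
print(play(tit_for_tat, tit_for_tat))      # (5.0, 5.0)
print(play(always_defect, always_defect))  # (20.0, 20.0)
print(play(tit_for_tat, always_defect))    # (28.0, 18.0)
```

Against a player who always cheats, tit for tat loses only the first round and then protects itself by cheating as well.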

See game theory, zero sum game.

private carrier – A shipper that transports its goods in truck fleets that it owns or leases.

See common carrier, logistics.

private label – A product or service created by one organization but sold by another organization under the seller’s brand name.

The most common example is when a large retailer (e.g., Target) contracts with a manufacturer to make a generic version of the manufacturer’s product that is sold under the Target brand. Private label is a common practice in consumer packaged goods and white goods.

See category captain, category management, consumer packaged goods, Original Equipment Manufacturer (OEM), picking, white goods.

privatization – The process of moving from a government owned and controlled organization to a privately owned and controlled for-profit organization; spelled privatisation in most of the world outside of the U.S.

pro bono – To work for the public good without charging a fee; short for the Latin pro bono publico, which means “for the public good.”

When lawyers, consultants, and other professionals work “pro bono,” they work without charging a fee. Some organizations provide pro bono services, but then prominently mention the gift in their marketing communications. Technically, this is gratis (free of charge) rather than pro bono, because it is part of the organization’s advertising, promotion, and branding strategy and not truly intended only for the public good.

probability density function – A statistics term for a function that represents the probability for a continuous random variable as the area under the curve; usually written as f(x); also called the density function and PDF.

Only continuous random variables have probability density functions. Discrete random variables have a probability mass function, which defines the probability for each discrete (integer) value (e.g., p(x) = P(X = x)).

The integral of the PDF over the entire range of a continuous random variable is one (i.e., $\int_{-\infty}^{\infty} f(x)\,dx = 1$).

The Cumulative Distribution Function (also called the CDF or simply the distribution function) is the lower tail (left tail) cumulative probability (i.e., the integral of the PDF) and is the probability that a random variable is less than or equal to the specified value. This can be expressed mathematically as $F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt$, where X is a continuous random variable, x is a specific value, and f(t) is the density function evaluated at t. The probability that a random variable is in the range [a, b] is $P(a \le X \le b) = \int_{a}^{b} f(x)\,dx = F(b) - F(a)$. The reliability function is simply one minus the CDF (i.e., $R(x) = 1 - F(x)$). The PDF is the derivative of the cumulative distribution function (i.e., $dF(x)/dx = f(x)$).
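The relationships among the PDF, the CDF, and the reliability function can be checked numerically. This is a minimal sketch, assuming an exponential distribution with a hypothetical rate parameter of 0.5. The function names are illustrative, and Simpson’s rule is used as a simple numerical integration method.

```python
import math

RATE = 0.5  # assumed rate parameter (lambda) of an exponential distribution


def pdf(x, rate=RATE):
    """Exponential probability density function f(x)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0


def cdf(x, rate=RATE):
    """Exponential cumulative distribution function F(x) = P(X <= x)."""
    return 1.0 - math.exp(-rate * x) if x >= 0 else 0.0


def reliability(x, rate=RATE):
    """Reliability function R(x) = 1 - F(x)."""
    return 1.0 - cdf(x, rate)


def integrate(f, a, b, n=10_000):
    """Composite Simpson's rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


# 1. The PDF integrates to one (f is zero below 0; the tail beyond 80 is negligible).
total_area = integrate(pdf, 0.0, 80.0)
# 2. P(a <= X <= b) is the area under f between a and b, which equals F(b) - F(a).
area_1_to_3 = integrate(pdf, 1.0, 3.0)
# 3. The PDF is the derivative of the CDF (central finite difference at x = 2).
step = 1e-5
slope_at_2 = (cdf(2.0 + step) - cdf(2.0 - step)) / (2 * step)

print(f"area under f: {total_area:.6f}")
print(f"P(1 <= X <= 3): {area_1_to_3:.6f} vs F(3) - F(1): {cdf(3.0) - cdf(1.0):.6f}")
print(f"dF/dx at 2: {slope_at_2:.6f} vs f(2): {pdf(2.0):.6f}")
print(f"R(2) = {reliability(2.0):.6f}")
```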

See beta distribution, chi-square distribution, Erlang distribution, exponential distribution, gamma distribution, lognormal distribution, normal distribution, partial expectation, probability distribution, probability mass function, random variable, reliability, sampling distribution, Student’s t distribution, triangular distribution, uniform distribution, Weibull distribution.

probability distribution – A mathematical or graphical description of how likely it is that a random variable will be less than or equal to a particular value.

Random variables are said to be either discrete (i.e., taking only a countable set of values, usually integers) or continuous (i.e., taking any real value within a range). Discrete random variables have a probability mass function that defines the probability for each discrete value. Continuous random variables have a Probability Density Function (PDF), where the probability is represented by the area under the curve. The Cumulative Distribution Function (CDF) evaluated at x is the probability that the random variable will attain a value less than or equal to x.

See Bernoulli distribution, beta distribution, bimodal distribution, binomial distribution, chi-square distribution, Erlang distribution, exponential distribution, gamma distribution, hypergeometric distribution, lognormal distribution, negative binomial distribution, normal distribution, Poisson distribution, probability density function, probability mass function, random variable, sampling distribution, Student’s t distribution, triangular distribution, uniform distribution, Weibull distribution.

probability mass function – A probability theory term for an equation that can be used to express the probability that a discrete random variable will be exactly equal to a given value; usually denoted as p(x).

A discrete random variable can take on only a countable set of values (usually integers). In contrast, a probability density function is used for continuous random variables.

The probability mass is p(x) = P(X = x). A probability mass function must sum to one (i.e., $\sum_{x} p(x) = 1$).
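The requirement that a probability mass function sums to one can be checked for a familiar discrete distribution. This is a minimal sketch, assuming a binomial distribution with hypothetical parameters n = 10 trials and success probability 0.3. `binomial_pmf` is an illustrative helper built on Python’s `math.comb`.

```python
from math import comb


def binomial_pmf(k, n, p):
    """p(k) = P(X = k) for a binomial random variable with n trials and success probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, p = 10, 0.3  # assumed parameters for illustration
pmf = {k: binomial_pmf(k, n, p) for k in range(n + 1)}

total = sum(pmf.values())                     # must equal one (up to rounding)
mean = sum(k * pk for k, pk in pmf.items())   # equals n * p for a binomial
print(f"sum of p(x): {total:.12f}")
print(f"P(X = 3): {pmf[3]:.4f}")
print(f"mean: {mean:.4f} (n * p = {n * p})")
```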

See Bernoulli distribution, bimodal distribution, binomial distribution, hypergeometric distribution, negative binomial distribution, Poisson distribution, probability density function, probability distribution, sampling distribution.

Probit Analysis – See logistic regression.

process – A set of steps designed to achieve a particular goal.

All processes have inputs and outputs. Ideally, processes will also have a feedback mechanism that evaluates the outputs and adjusts the inputs and the processes to better achieve the desired goal.

As mentioned in the preface to this book, this author uses the following framework to discuss process improvement:

• Better – How can we provide customers improved product quality, service quality, and value?

• Faster – How can we reduce cycle times to make our products and services more flexible and customizable?

• Cheaper – How can we reduce waste, lower cost, and better balance demand and capacity in a global supply chain?

• Stronger – How can we leverage our competitive strengths (core competences), mitigate risks by making processes more robust, and consider the triple bottom line (people, planet, and profits)?

Process improvement programs, such as lean sigma and lean, typically use tools, such as process mapping, error proofing, and setup time reduction methods, to reduce waste and add more value.

See error proofing, lean sigma, lean thinking, process map, robust, setup time reduction methods, systems thinking.

process capability and performance – A lean sigma methodology that measures the ability of a process to consistently meet quality specifications.

Process capability and performance can be measured in many ways. The simplest approach is to measure the Defects per Million Opportunities (DPMO), where a defect is anything that does not meet the customer (or specification) requirements. A DPMO value can be translated into a sigma level, where the lower the DPMO, the higher the sigma level. (See the sigma level entry.)
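The DPMO calculation and its translation into a sigma level can be sketched as follows. This sketch assumes the widely used convention that adds a 1.5-sigma shift to the standard normal quantile of the yield. The sigma level entry may present the conversion differently, so treat the shift as an adjustable assumption. The defect counts are hypothetical, and `dpmo` and `sigma_level` are illustrative helpers that use Python’s `statistics.NormalDist`.

```python
from statistics import NormalDist


def dpmo(defects, units, opportunities_per_unit):
    """Defects per million opportunities."""
    return defects / (units * opportunities_per_unit) * 1_000_000


def sigma_level(dpmo_value, shift=1.5):
    """Sigma level for a DPMO value, assuming the common 1.5-sigma shift convention.

    Computed as the standard normal quantile of the yield (1 - DPMO / 10^6) plus the shift.
    """
    if not 0 < dpmo_value < 1_000_000:
        raise ValueError("DPMO must be strictly between 0 and 1,000,000")
    yield_fraction = 1 - dpmo_value / 1_000_000
    return NormalDist().inv_cdf(yield_fraction) + shift


# Hypothetical example: 34 defects found in 2,000 units with 5 opportunities each.
d = dpmo(defects=34, units=2_000, opportunities_per_unit=5)
print(f"DPMO = {d:.0f}, sigma level = {sigma_level(d):.2f}")
print(f"3.4 DPMO -> {sigma_level(3.4):.2f} sigma")  # the classic six sigma benchmark
```

Lower DPMO values give higher sigma levels, matching the relationship described above.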

Another approach is to use the statistical measures Cp and Cpk for measuring process capability and Pp and Ppk for measuring process performance. Process capability compares the process output for an “in-control” process with the customer’s specification (tolerance limits) to determine if the common-cause variation is small enough to satisfy customer requirements.

The figure on the right shows a process with a process mean that is not centered between the lower tolerance limit (LTL) and the upper tolerance limit (UTL). Note that the mean μ is to the right of the center of the tolerance limits. Therefore, this process is not centered. This figure also shows that the process limits for common-cause variation (±3σ) are well within the specification (tolerance) limits. Therefore, this process is said to be capable.
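An off-center but capable process can be expressed with the standard Cp and Cpk ratios. This is a minimal sketch with hypothetical numbers (tolerance limits 9.0 and 11.0, mean 10.2, standard deviation 0.1). Cp compares the specification width with the 6σ process width. Cpk uses the distance from the mean to the nearer tolerance limit, so it falls below Cp when the process is not centered. Cp and Cpk are usually computed with the short-term (within-subgroup) σ of an in-control process. Pp and Ppk apply the same formulas with the overall (long-term) standard deviation.

```python
def cp(ltl, utl, sigma):
    """Process capability: specification width over the 6-sigma process width."""
    return (utl - ltl) / (6 * sigma)


def cpk(ltl, utl, mu, sigma):
    """Capability index adjusted for centering: distance from the mean to the
    nearer tolerance limit, divided by 3 sigma."""
    return min(utl - mu, mu - ltl) / (3 * sigma)


# Hypothetical process: tolerance limits 9.0 to 11.0, mean 10.2 (right of center), sigma 0.1.
LTL, UTL, MU, SIGMA = 9.0, 11.0, 10.2, 0.1
print(f"Cp  = {cp(LTL, UTL, SIGMA):.2f}")       # ignores where the mean sits
print(f"Cpk = {cpk(LTL, UTL, MU, SIGMA):.2f}")  # lower than Cp because the mean is off center
# The +/-3 sigma process limits fall inside the tolerance limits exactly when Cpk > 1.
print("+/-3 sigma limits inside tolerance:", LTL < MU - 3 * SIGMA and MU + 3 * SIGMA < UTL)
```

In the garage analogy that follows, Cp asks whether the car is narrower than the garage, and Cpk also checks whether the car is parked far enough from the nearer wall.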

One humorous way to communicate this concept is to compare the specification limits to the width of a garage. The common-cause variation (the process width) is the size of a car that must fit into the garage. The specification width is the size of the garage. If the garage is large (wide specification limits) and the car is small (tight process limits), the car will fit into the garage with no problem. However, if the garage is narrow and the car is large, the car will hit the sides of the garage (defects will be produced).

Several of the main process capability concepts are defined in more detail below.