F

fabrication – A manufacturing process that transforms materials to make parts to go into an assembly.

Fabrication includes manufacturing processes (such as molding, machining, forming, or joining) and not assembly. Small businesses that specialize in metal fabrication are called fab shops. A semiconductor fabrication facility is called a wafer fab and makes integrated circuits (silicon chips).

See assembly line, manufacturing processes, respond to order (RTO).

facility layout – The physical organization of processes in a facility.

The layout of a factory should minimize the total cost of moving materials between workcenters. Some processes must be located next to each other, while others cannot be located next to each other due to heat, sound, or vibration. Other issues include cycle time, waste, space efficiency, communications, safety, security, quality, maintenance, flexibility, customer waiting time, aesthetics for workers, and aesthetics for customers.

The layout problem is not unique to manufacturing. Service businesses, such as hospitals and banks, have the same issues. Retailers use planograms to help lay out retail stores. Similar layout problems are common in other design contexts, such as laying out an integrated circuit. Experience engineering issues are important when customer contact is high.

The three basic types of facility layouts include the process layout (functional layout), the product layout, and the fixed-position (project) layout. Each of these is discussed briefly below.

Process layout (functional layout) – A layout that groups similar activities together in departments or workcenters according to the process or function that they perform. For example, all drills might be located together in the drill workcenter. The process layout is generally used in operations that are required to serve a wide variety of customer needs, so the equipment must serve many purposes and the workforce needs to be highly skilled. Although process layouts offer high flexibility, they are relatively inefficient because of long queues, long cycle times, and high materials handling costs. The best example of a process layout is a job shop. The major concerns in a process layout are cycle times, utilization, order promising, routing, and scheduling.

Traditional industrial engineering approaches to improving a process layout include process analysis, graphical methods, computer simulation, and computer optimization. CRAFT (Computerized Relative Allocation of Facilities Technique), developed by Buffa, Armour, and Vollmann (1964), is a heuristic (non-optimal) approach to solving a quadratic assignment formulation of the facility layout problem.

Product layout – A product layout arranges activities in the sequence of steps required to manufacture or assemble a product. Product layouts are suitable for mass production or repetitive operations in which demand is relatively steady and volume is relatively high. Product layouts tend to be relatively efficient, but not very flexible. The best example of a product layout is an assembly line. The major concern in a product layout is balancing the line. In designing an assembly line, many tasks (elements) need to be assigned to workers, but these assignments are constrained by the target cycle time for the product and precedence relationships between the tasks (i.e., some tasks need to be done before others). The line balancing problem is to assign tasks to workstations to minimize the number of workstations required while satisfying the cycle time and precedence constraints. Many operations researchers have developed sophisticated mathematical computer models to solve this problem. The line balancing problem becomes less important when the organization can use cross-trained workers who can move between stations as needed to maximize flow. Cellular manufacturing is a powerful approach for converting some equipment in a process layout into a product layout for families of parts. Mixed model assembly allows some firms to justify having product layouts that are not dedicated to a single product. See the cellular manufacturing and mixed model assembly entries.
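The line balancing problem described above can be illustrated with a small greedy heuristic. This is only a sketch under stated assumptions, not one of the published optimization models: the task names, task times, and the "longest eligible task first" rule are hypothetical choices made for illustration, and the result is not guaranteed to use the minimum number of workstations.

```python
def balance_line(task_times, predecessors, cycle_time):
    """Greedy line balancing: fill one workstation at a time.

    task_times   -- dict of task name -> time (hypothetical data)
    predecessors -- dict of task name -> set of tasks that must be done first
    cycle_time   -- target cycle time; no station may exceed it
    """
    if any(t > cycle_time for t in task_times.values()):
        raise ValueError("a task is longer than the cycle time")

    unassigned = set(task_times)
    assigned = set()
    stations = []
    while unassigned:
        station, remaining = [], cycle_time
        while True:
            # Eligible tasks: all predecessors already assigned and the task fits.
            candidates = [
                t for t in unassigned
                if predecessors.get(t, set()) <= assigned
                and task_times[t] <= remaining
            ]
            if not candidates:
                break
            # Illustrative rule: longest task first, ties broken by name.
            task = min(candidates, key=lambda t: (-task_times[t], t))
            station.append(task)
            assigned.add(task)
            unassigned.remove(task)
            remaining -= task_times[task]
        if not station:
            raise ValueError("precedence relationships cannot be satisfied")
        stations.append(station)
    return stations


# Hypothetical five-task example with a cycle time of 6 time units.
times = {"A": 4, "B": 3, "C": 2, "D": 5, "E": 2}
preds = {"B": {"A"}, "C": {"A"}, "D": {"B", "C"}, "E": {"D"}}
stations = balance_line(times, preds, cycle_time=6)
print(stations)  # [['A', 'C'], ['B'], ['D'], ['E']]
```

Each inner list is one workstation; the tasks in it are listed in an order that respects the precedence relationships, and their total time never exceeds the cycle time.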

Fixed-position layout (project layout) – A fixed-position layout is used in projects where the workers, equipment, and materials go to the production site because the product is too large, fragile, or heavy to move. This type of layout is also called a project layout because the work is usually organized around projects. The equipment is often left on-site because it is too expensive to move frequently. Due to the nature of the work, the workers in a fixed-position layout are usually highly skilled. The most familiar example of a fixed-position layout is the construction of a building or house. However, a fixed-position layout is also used in shipbuilding and machine repair. The major concerns with a fixed-position layout are meeting the project requirements within budget and on schedule. See the project management entry.

The Theory of Constraints literature suggests that the focus for all layouts should be on the bottleneck process. See the Theory of Constraints (TOC) entry.

See 5S, assembly line, cellular manufacturing, continuous flow, CRAFT, cross-training, experience engineering, flowshop, focused factory, job order costing, job shop, lean thinking, line balancing, mixed model assembly, planogram, plant-within-a-plant, process design, process map, product-process matrix, project management, spaghetti chart, Theory of Constraints (TOC), workcenter.

facility location – The physical site for a building.

The facility location problem is to find the best locations for the organization’s facilities (warehouses, stores, factories, offices). The facility location problem is often defined in terms of minimizing the sum of the incoming and outgoing transportation costs. In a retail context, the problem is often defined in terms of maximizing revenue. In the service context, the problem is defined in terms of meeting some service criterion, such as customer travel time or response time for customer needs.

Facility location theory suggests that the problem can be broken into finite and infinite set location models. The finite set location models evaluate a limited number of locations and determine which one is best. The infinite set location models find the best x-y coordinates (or latitudes and longitudes) for a site (or sites) that minimize some mathematical objective function. The center-of-gravity and numeric-analytic location models are infinite set location models. The gravity model for competitive retail store location and the Kepner-Tregoe Model are finite set location models.

Some location models assume that vehicles can travel directly across any geography, while others assume that vehicles are constrained to existing transportation networks. Some models assume that cost is proportional to the distance or time traveled, whereas others include all relevant costs, including tariffs, duties, and tolls.

See center-of-gravity model for facility location, gravity model for competitive retail store location, great circle distance, greenfield, Kepner-Tregoe Model, numeric-analytic location model, process design, supply chain management, tariff, warehouse.

factor analysis – A multivariate statistical method used to reduce the number of variables in a dataset without losing much information about the correlation structure between the variables.

Factor analysis originated in psychometrics and is used in behavioral sciences, social sciences, marketing, operations management, and other applied sciences that deal with large datasets. Factor analysis describes the variability of a number of observed variables in terms of a smaller number of unobserved variables called factors, where the observed variables are linear combinations of the factors plus error terms. To use an extreme example, suppose a study measures people’s height in both inches and centimeters. These two variables have a correlation of 100%, so the analysis can be simplified by combining the variables into one “factor” without losing any information.

Factor analysis is related to Principal Component Analysis (PCA). Because PCA performs a variance-maximizing rotation of the variable space, it takes into account all variability in the variables. In contrast, factor analysis estimates how much of the variability is due to common factors. The two methods become essentially equivalent if the error terms in the factor analysis model can be assumed to all have the same variance.

Cluster analysis and factor analysis are both data reduction methods. Given a dataset with rows that are cases (e.g., respondents to a survey) and columns that are variables (e.g., questions on a survey), cluster analysis groups cases into clusters and factor analysis groups variables into factors. Using the survey example, cluster analysis groups similar respondents and factor analysis groups similar variables.

See cluster analysis, Principal Component Analysis (PCA).

factorial – A mathematical function denoted as n! and defined as the product of all positive integers less than or equal to n; in other words, n! = n · (n − 1) · (n − 2) ⋯ 2 · 1.

The factorial function is important in many fields of mathematics and statistics. For example, a sequence of items is called a permutation and a set of n items can be ordered in n! different ways (permutations). The exclamation symbol (!) is called the “factorial” operator. For example, 5! = 5·4·3·2·1 = 120. Note that 0! = 1 is a special case. The factorial function is not defined for negative integers. The definition of the factorial function can be extended to non-integer arguments using the gamma function, where n! = Γ(n + 1). The gamma function entry covers this subject in more detail.

Most computers cannot accurately compute factorials larger than 170! because 171! exceeds the largest double-precision floating-point value. In Excel, n! = FACT(n). Excel reports an overflow error for FACT(n) when n ≥ 171. For large n, n! can be computed (approximately) in Excel with EXP(GAMMALN(n + 1)) and the ratio of two large factorials can be computed approximately as m!/n! = EXP(GAMMALN(m + 1) – GAMMALN(n + 1)).
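The same log-gamma approach can be sketched outside Excel. The example below uses Python's standard `math.lgamma` in the role of GAMMALN; the function names `factorial_approx` and `factorial_ratio` are hypothetical names chosen for this illustration.

```python
import math


def factorial_approx(n: int) -> float:
    """Approximate n! as exp(lgamma(n + 1)), like EXP(GAMMALN(n + 1))."""
    return math.exp(math.lgamma(n + 1))


def factorial_ratio(m: int, n: int) -> float:
    """Approximate m!/n! without ever forming either factorial."""
    return math.exp(math.lgamma(m + 1) - math.lgamma(n + 1))


print(factorial_approx(5))        # approximately 120
print(factorial_ratio(200, 198))  # approximately 200 * 199 = 39800

# 200! and 198! each overflow a double, but their ratio does not.
try:
    factorial_approx(200)
except OverflowError:
    print("200! is too large for a floating-point number")
```

Working with the difference of the logarithms keeps the intermediate values small, which is why the ratio can be evaluated even when neither factorial fits in a floating-point number. Python integers are arbitrary precision, so `math.factorial(200)` also returns the exact value when an exact integer is needed.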

See combinations, gamma function.

Fagan Defect-Free Process – A formal process for reviewing products that encourages continuous improvement.

Fagan (2001) created a method of reviews and inspections for “early detection” of problems in software development while working at IBM. Fagan argues that the inspection process has two goals: (1) find and fix all defects in the product and (2) find and fix all defects in the process that created those defects. The process defines specific roles for a team of four people: Moderator, Reader, Author, and Tester.

See agile software development, early detection, New Product Development (NPD), scrum.

fail-safe – See error proofing.

Failure Mode and Effects Analysis (FMEA) – A process that identifies the possible causes of failures (failure modes), scores them to create a risk priority number, and then mitigates risk starting with the most important failure mode.

Failure Mode and Effects Analysis (FMEA) was invented by NASA early in the U.S. Apollo space program. NASA created the tool to alleviate the stress between two conflicting mottos: “Failure is not an option” and “Perfect is the enemy of good.” The first meant successfully completing the mission and returning the crew. The second meant that failure of at least some components was unavoidable.

FMEA is a simple process that identifies the possible causes of failures (failure modes), scores them on three dimensions (severity, occurrence, and detection) to create a risk priority number, and then mitigates risk starting with the most important failure mode. The first step in FMEA is to identify all potential failure modes where a failure might occur. Once these failure modes have been identified, FMEA then requires that each one be scored on three dimensions: severity, occurrence, and detection. All three dimensions are scored on a 1 to 10 scale, where 1 is low and 10 is high. These three scores are then multiplied to produce a Risk Priority Number (RPN). The failure modes can then be prioritized based on the RPNs and risk mitigation efforts can then be designed for the more important failure modes.

Many organizations have found FMEA to be a powerful tool for helping them prioritize risk mitigation efforts. At 3M and other firms, FMEA is a required tool for all lean sigma projects.

Whereas Root Cause Analysis (RCA) identifies contributors to an adverse event after the fact, FMEA is intended to be a proactive (before the fact) tool. Ideally, FMEA anticipates all adverse events before they occur.

The scoring part of an FMEA requires the subjective evaluation of three dimensions for each failure mode, where each dimension is scored on a 1 to 10 scale:

Severity – Impact of the failure. If failure occurred, what is the significance of the harm of this failure in terms of cost, time, quality, customer satisfaction, etc.?

Occurrence – Frequency of occurrence. What is the probability that this failure will occur? (This is sometimes called the probability of occurrence.)

Detection – Ability to detect the problem and avoid the impact. Can the failure be detected early enough that it does not have a severe impact? (Important note: A 10 on detection means that it is hard to detect.)

Risk Priority Number (RPN) = (Severity) × (Occurrence) × (Detection)
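The scoring and prioritization steps can be sketched in a few lines of code. The failure modes and scores below are hypothetical and exist only to show the arithmetic; the `FailureMode` class and `prioritize` function are illustrative names, not part of any standard FMEA tool.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # 1 (low impact) to 10 (high impact)
    occurrence: int  # 1 (rare) to 10 (frequent)
    detection: int   # 1 (easy to detect) to 10 (hard to detect)

    def rpn(self) -> int:
        """Risk Priority Number = severity x occurrence x detection."""
        for score in (self.severity, self.occurrence, self.detection):
            if not 1 <= score <= 10:
                raise ValueError("scores must be on a 1 to 10 scale")
        return self.severity * self.occurrence * self.detection


def prioritize(modes):
    """Return failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn(), reverse=True)


# Hypothetical failure modes for illustration only.
modes = [
    FailureMode("Label misprinted", severity=3, occurrence=6, detection=2),
    FailureMode("Seal leaks", severity=8, occurrence=3, detection=7),
    FailureMode("Wrong part picked", severity=5, occurrence=4, detection=4),
]
for m in prioritize(modes):
    print(m.name, m.rpn())
# Seal leaks 168
# Wrong part picked 80
# Label misprinted 36
```

Because the three scores are multiplied, a failure mode that is severe and hard to detect can outrank one that occurs more often.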

It is easy to create an Excel workbook for FMEA. The following is a typical format. After creating the workbook, the user can sort the rows by the RPN to prioritize the “actions to reduce risk.” These actions usually target the likelihood of occurrence, but should also seek to make detection easier and reduce severity.

Failure Mode and Effects Analysis (FMEA) example

See the Design Failure Mode and Effects Analysis (DFMEA) entry for information about how FMEA can be applied to design.

See Business Continuity Management (BCM), causal map, critical path, Design Failure Mode and Effects Analysis (DFMEA), error proofing, fault tree analysis, Hazard Analysis & Critical Point Control (HACCP), impact wheel, lean sigma, operations performance metrics, Pareto Chart, Pareto’s Law, prevention, risk, risk assessment, risk mitigation, robust, Root Cause Analysis (RCA), work simplification.

family – See product family.

FAS – See Final Assembly Schedule (FAS).

Fast Moving Consumer Goods (FMCG) – Any product sold in high volumes to end customers.

FMCG companies are firms that manufacture, distribute, or sell packaged consumer goods, food, hygiene products, grocery items, cleaning supplies, paper products, toiletries, soft drinks, diapers, toys, pharmaceuticals, and consumer electronics. These products are generally sold at low prices. FMCG seems to be synonymous with consumer packaged goods. The opposite of FMCG (and consumer packaged goods) is durable goods. Books and CDs are not FMCGs because consumers usually only buy them once.

See category captain, category management, consumer packaged goods, durable goods, Efficient Consumer Response (ECR).

fast tracking – See critical path.

fault tree analysis – A graphical management tool for describing the cause and effect relationships that result in major failures; a causal map usually used to identify and solve the causes for a specific actual historical problem.

Fault tree analysis is a causal map drawn from the top down. The actual historical fault or major failure being analyzed is identified as the “top event.” All possible causes of the top event are identified in a tree. The main distinguishing feature of a fault tree compared to other types of causal maps is the use of “OR” nodes for independent causes and “AND” nodes for multiple causes that must exist concurrently for a failure to occur.
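The role of the “OR” and “AND” nodes can be sketched as a small recursive evaluation. The tree representation (nested tuples) and the pump example below are assumptions made for illustration; they do not come from any particular fault tree tool.

```python
def occurs(node, basic_events):
    """Return True if the event at this node occurs.

    A node is either a string naming a basic cause, or a tuple
    (gate, children) where gate is "OR" or "AND".
    basic_events maps each basic cause to True (present) or False.
    """
    if isinstance(node, str):
        return basic_events.get(node, False)
    gate, children = node
    results = [occurs(child, basic_events) for child in children]
    if gate == "OR":    # any single independent cause is enough
        return any(results)
    if gate == "AND":   # all causes must exist concurrently
        return all(results)
    raise ValueError(f"unknown gate: {gate}")


# Hypothetical top event: the pump fails if power is lost, OR if the
# primary seal and the backup seal both fail at the same time.
top_event = ("OR", ["power lost", ("AND", ["primary seal fails", "backup seal fails"])])

print(occurs(top_event, {"primary seal fails": True}))                             # False
print(occurs(top_event, {"primary seal fails": True, "backup seal fails": True}))  # True
print(occurs(top_event, {"power lost": True}))                                     # True
```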

See causal map, error proofing, Failure Mode and Effects Analysis (FMEA), risk assessment, risk mitigation, Root Cause Analysis (RCA), root cause tree.

faxban – A pull signal for a kanban system that is sent via fax.

Faxban uses fax communication rather than physical kanban cards to send a pull signal. Faxban can reduce the delay between the time that pull is needed and the time that the pull signal is sent. An e-kanban is similar, except that the signal is sent via e-mail, EDI, or through the Web.

See kanban.

FED-up model – See service quality.

field service – Repair and preventive maintenance activities performed by service technicians at the customer site.

Field service technicians (techs) often travel from their homes to customer sites to perform repairs. Techs usually carry inventory in their vehicles and often replace this inventory from a central source of supply on a use-one-order-one basis. Tech performance is often measured by average response time and customer satisfaction. Service calls can be under a warranty agreement or can be for time and materials. Hill (1992) presented a number of models for planning field service territories and tech truck stock inventory.

See response time, reverse logistics, Service Level Agreement (SLA), service parts.

FIFO – See First-In-First-Out (FIFO).

fill rate – See service level.

Final Assembly Schedule (FAS) – A schedule for the respond to order (RTO) customer interface of a manufacturing process.

For assemble to order (ATO), make to order (MTO), and RTO products, the Master Production Schedule (MPS) is a materials plan for longer-leadtime materials, subassemblies, and components that are kept in inventory until customer orders arrive. The MPS, therefore, is a statement of a longer-term plan for longer-leadtime inventoried materials based on a demand forecast. MRP uses this MPS to create a detailed materials plan that schedules the manufacturing and purchasing activities needed to support the materials plan for these master-scheduled items.

In contrast, the Final Assembly Schedule (FAS) is a short-term (e.g., 1-2 week) materials plan based on actual customer orders. The final assembly process might include assembly or other finishing operations, such as adding accessories, labeling, and packing. The push-pull boundary separates items that are in the MPS and the FAS. The Master Production Schedule (MPS) entry provides more information on this topic.

See assemble to order (ATO), bill of material (BOM), make to order (MTO), Master Production Schedule (MPS), push-pull boundary.

financial performance metrics – Economic measures of success.

All managers need to have a good understanding of financial performance metrics to make good decisions regarding capital investments, such as new plants and equipment, and also for process improvement projects. In virtually all public organizations and in most privately owned organizations, the financial performance metrics are the main goal. However, the operations performance metrics are often the “drivers” of the financial metrics. The cross-references below include most of the financial metrics that managers need to know.

See asset turnover, balance sheet, Balanced Scorecard, break-even analysis, Compounded Annual Growth Rate (CAGR), cost of goods sold, DuPont Analysis, EBITDA, Economic Value Added (EVA), goodwill, income statement, Internal Rate of Return (IRR), Net Present Value (NPV), operations performance metrics, payback period, performance management system, Return on Assets (ROA), Return on Capital Employed (ROCE), Return on Investment (ROI), Return on Net Assets (RONA), sunk cost, total cost of ownership, Y-tree.

finished goods inventory – The inventory units (or dollars) that are “finished” (completed) and ready for shipment or sale to a customer; sometimes abbreviated FG or FGI.

Other types of inventory include raw materials, Work-in-Process (WIP), Maintenance-Repair-Operations (MRO), and pipeline (in-transit) inventory.

See Maintenance-Repair-Operations (MRO), Work-in-Process (WIP) inventory.

finite loading – See finite scheduling.

finite population queuing model – See queuing theory.

finite scheduling – Creating a sequence of activities with associated times so that no resource (person, machine, tool, etc.) is assigned more work time than the time available.

The opposite of finite scheduling is infinite loading, which ignores capacity constraints when creating a schedule. Hill and Sum (1993) developed the following terms for finite scheduling: A due-date-feasible schedule satisfies all due date requirements for all tasks (orders or operations). A start-date-feasible schedule does not have any tasks (orders or operations) scheduled before the current time. A capacity-feasible schedule does not require any resource to work more time than is available in any period.

Most finite scheduling systems begin with the current date and schedule forward, and therefore will always create start-date feasible schedules. However, if the capacity is insufficient, these systems will not be able to create due-date feasible schedules.

In contrast, MRP systems plan backward from the due date and therefore always create due-date feasible schedules. However, MRP systems are infinite loading systems and ignore capacity when creating a schedule, which means they create schedules that are often not capacity feasible. MRP systems will “schedule” some orders in the “past-due” time bucket, which means that the orders should have been started before the current time. When MRP systems create orders in the “past-due” bucket, the schedule is not start-date feasible, but is still “due-date” feasible.
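The three feasibility definitions can be expressed as simple checks on a schedule. The sketch below assumes a bucketed schedule in which each task is placed in one time bucket on one resource; the `ScheduledTask` fields, the function names, and the example data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ScheduledTask:
    name: str
    resource: str
    period: int      # time bucket in which the task is scheduled
    hours: float     # work time required in that bucket
    due_period: int  # time bucket in which the task is due


def is_due_date_feasible(tasks):
    """Every task is scheduled no later than its due date."""
    return all(t.period <= t.due_period for t in tasks)


def is_start_date_feasible(tasks, current_period):
    """No task is scheduled before the current time."""
    return all(t.period >= current_period for t in tasks)


def is_capacity_feasible(tasks, hours_available):
    """No resource is loaded beyond its available hours in any period."""
    load = defaultdict(float)
    for t in tasks:
        load[(t.resource, t.period)] += t.hours
    return all(h <= hours_available[res] for (res, _), h in load.items())


# Hypothetical MRP-style schedule; the current period is 1, so period 0
# plays the role of the "past-due" bucket.
schedule = [
    ScheduledTask("order 1", "drill", period=0, hours=6, due_period=2),
    ScheduledTask("order 2", "drill", period=1, hours=5, due_period=1),
    ScheduledTask("order 3", "drill", period=1, hours=5, due_period=3),
]
print(is_due_date_feasible(schedule))                       # True
print(is_start_date_feasible(schedule, current_period=1))   # False
print(is_capacity_feasible(schedule, {"drill": 8}))         # False
```

In this example the schedule meets every due date but places one order in the past and loads the drill with 10 hours in a period where only 8 are available, which matches the behavior of infinite loading described above.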

Although many ERP systems and project management tools have finite scheduling capabilities, few firms use these tools. Most firms use infinite loading for both ERP and project management and then resolve resource contention issues after the plan (schedule) has been created. Finite scheduling was one of the main needs that motivated the development of Advanced Planning and Scheduling (APS) systems.

See Advanced Planning and Scheduling (APS), backward loading, closed-loop MRP, forward scheduling, infinite loading, load, load leveling, Materials Requirements Planning (MRP), project management, time bucket.

firm order – In the manufacturing context, a customer order that has no uncertainty with respect to product specifications, quantity, or due date; also called a customer order or firm customer order.

In contrast, a “soft order” is a forecast or a planned order sometimes provided to suppliers via EDI.

See Electronic Data Interchange (EDI), Master Production Schedule (MPS), Materials Requirements Planning (MRP).

firm planned order – A manufacturing order that is frozen in quantity and time and is not affected by the MRP planning system.

Firm planned orders are created manually by planners and only planners can change the date or quantity for a firm planned order. When an MRP system regenerates materials plans for all items, firm planned orders are not changed. Firm planned orders give planners the ability to adjust schedules to handle material and capacity problems. The order quantities in a master production schedule are often called firm planned orders. A firm planned order is similar to, but not identical to, a firm customer order.

See buyer/planner, manufacturing order, Master Production Schedule (MPS), time fence.

first article inspection – The evaluation of the initial item in an order to confirm that it meets all specifications.

Approval to run additional parts is contingent on this first item meeting all specifications.

See inspection.

first mover advantage – The benefit sometimes gained by the first significant company to enter a new market.

Amazon is a good example of a firm that gained competitive advantage by being the first significant firm to enter the online book market. Although other firms sold books on the Internet before Amazon, it was the first firm to do so with appropriate systems and capitalization. Now that Amazon has established itself as the largest Internet book retailer, it has the economies of scale to offer low transaction costs (through good information systems and order fulfillment operations) and the economies of scope (and the network effect) to offer superior value to publishers and authors. The same arguments can be made for eBay in the online auction market.

Of course, the first firm in a market is not always able to establish a long-term competitive advantage. Dell Computer currently has the largest market share for personal computers, but many other firms, such as IBM, Apple, and Compaq, entered this market long before Dell.

See market share, operations strategy.

first pass yield – See yield.

first pick ratio – Percentage of items successfully retrieved from the initial warehouse storage location recommended on the pick list.

This is a performance measure for inventory accuracy and warehouse efficiency.

See operations performance metrics, picking.

First-In-First-Out (FIFO) – Using the arrival date as the priority for processing or as an accounting rule.

First-In-First-Out (FIFO) has several similar meanings:

Service priority – The customer who arrived first is serviced first. This is a common practice in walk-in clinics and restaurants.

Production scheduling – The customer order that was received first is processed first. Most lean systems use the FIFO rule. Although FIFO is the “fairest” rule, other dispatching rules often have better shop performance in terms of the average time in system.

Stock rotation – The goods that have been in inventory for the longest time are picked first.

Stock valuation – The method of valuing stocks that assumes that the oldest stock is consumed first and thus issues are valued at the oldest price.
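The stock valuation meaning can be illustrated with a short calculation. This is a minimal sketch that assumes inventory is tracked as a queue of receipt layers, each with a quantity and a unit cost; the function name `fifo_issue` and the numbers are hypothetical.

```python
from collections import deque


def fifo_issue(layers, quantity):
    """Issue `quantity` units from the oldest layers first.

    layers -- deque of (quantity, unit_cost) tuples, oldest receipt first.
    Returns the total cost of the units issued and updates `layers`.
    """
    if quantity > sum(qty for qty, _ in layers):
        raise ValueError("insufficient stock")
    cost = 0.0
    remaining = quantity
    while remaining > 0:
        layer_qty, unit_cost = layers[0]
        take = min(layer_qty, remaining)
        cost += take * unit_cost
        remaining -= take
        if take == layer_qty:
            layers.popleft()                            # layer fully consumed
        else:
            layers[0] = (layer_qty - take, unit_cost)   # partial layer remains
    return cost


# Hypothetical receipts: 10 units at 2.00, then 10 units at 3.00.
stock = deque([(10, 2.0), (10, 3.0)])
print(fifo_issue(stock, 15))  # 35.0  (10 x 2.00 + 5 x 3.00)
print(list(stock))            # [(5, 3.0)]
```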

See dispatching rules, inventory valuation, Last-In-First-Out (LIFO), queuing theory, warehouse.

fishbone diagram – See causal map.

five forces analysis – An industry analysis tool used to better understand a competitive environment.

Michael Porter’s five forces analysis (Porter 1988) can be used by any strategic business unit to evaluate its competitive environment. This analysis looks at five key areas: threat of entrants to the market, the power of buyers, the power of suppliers, the threat of substitutes, and competitive rivalry. The model is shown below.

See competitive analysis, industry analysis, operations strategy, SWOT analysis.

Porter’s Five Forces

five S – See 5S.

fixed order quantity – The policy of using a constant (fixed) lotsize in a production or inventory planning system.

The Economic Order Quantity (EOQ) is a special case of a fixed order quantity. SAP and most other ERP/MRP systems will order in multiples of the fixed order quantity if the net requirements require more than the fixed order quantity.
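The "multiples" behavior can be sketched as a rounding rule. This example assumes that the system rounds up to the smallest multiple of the fixed order quantity that covers the net requirement; the function name is hypothetical, and actual ERP/MRP systems may apply additional lot-sizing modifiers.

```python
def planned_order_quantity(net_requirement: int, fixed_order_quantity: int) -> int:
    """Smallest multiple of the fixed order quantity covering the net requirement."""
    if fixed_order_quantity <= 0:
        raise ValueError("fixed order quantity must be positive")
    if net_requirement <= 0:
        return 0  # nothing is needed, so nothing is ordered
    # Ceiling division using integer arithmetic.
    multiples = -(-net_requirement // fixed_order_quantity)
    return multiples * fixed_order_quantity


print(planned_order_quantity(80, 100))   # 100
print(planned_order_quantity(250, 100))  # 300
```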

See Economic Order Quantity (EOQ), lotsize, lotsizing methods.

fixed price contract – A contract to complete a job at a predefined and set cost; also called a fixed-cost contract.

The contractor is obligated to finish the job, no matter how much time or cost is actually incurred. In other words, the contractor takes all the risk.

See buy-back contract, purchasing, risk sharing contract.

fixed storage location – The practice of storing items in a storage area that is labeled with the item ID.

With fixed storage locations, each item has a home location with the item’s identification (part number, item number, SKU) on the shelf. Fixed storage locations are generally inefficient and hard to maintain because the space requirements for products in storage usually change over time as the demand patterns change. These changes require that the organization frequently reallocate the fixed location assignments or have excessive amounts of extra space allocated to each product.

On the positive side, fixed storage locations make it easy for people to find products. Most firms find that a mixture of fixed and random storage locations systems makes sense. The fixed storage locations are used for high-volume products where people are frequently picking items. These locations are replenished often from random storage locations that hold larger bulk quantities of items.

See locator system, random storage location, supermarket, Warehouse Management System (WMS), zone storage location.

fixture – A device used to hold a work piece securely in the correct position relative to the tool while work is being done on the work piece.

Unlike a fixture, a jig can guide the tool.

See jig, manufacturing processes, tooling.

flexibility – The ability to change (adapt) quickly and efficiently in response to a change in the internal or external environment.

Although the term “flexibility” is used very commonly in business, it is often used inconsistently and has several very different definitions. A major contributing factor to this ambiguity is that organizations face a wide variety of uncertainties and therefore need to have many types of flexibility. However, when managers and researchers discuss flexibility, they often fail to specify which type of flexibility they have in mind. Flexibility can be viewed both strategically and tactically. From a strategic point of view, flexibility can be defined as:

Volume flexibility – The ability to quickly and efficiently increase or decrease the production rate. This is sometimes called scalability and is about having economies of scale or at least avoiding diseconomies of scale.

Mix flexibility – The ability to efficiently handle a wide variety of products in one facility. Other authors define mix flexibility slightly differently. Schroeder, Meyer Goldstein, and Rungtusanatham (2011) defined it as the time to change the mix of products and services; Sethi and Sethi (1990) defined it as the ability of the manufacturing system to produce a set of part types without major setups; and Dixon (1992) defined it as the ability to manufacture a variety of products within a short period of time and without major modifications of existing facilities. Mix flexibility is closely related to product range.

Customization flexibility – The ability to quickly and efficiently provide a wide range of “respond to order” products. This is sometimes called mass customization and is fundamentally about having economies of scope or at least avoiding diseconomies of scope.

New product development flexibility – The ability to quickly and efficiently bring new products to market.

All four of the above types of flexibility require that the change be made efficiently. Unless the organization can “flex” efficiently, it is not truly flexible. For example, it might be possible for a firm to reduce its volume from 100 units per day to 50 units per day, but it is not considered to have volume flexibility if the cost per unit doubles.

Sethi and Sethi (1990) provide a research review article on this subject.

See diseconomy of scale, mass customization, New Product Development (NPD), production planning, resilience, respond to order (RTO), scalability.

Flexible Manufacturing System (FMS) – An integrated set of machines that have automated materials handling between them and are controlled by an integrated information system.

See automation, cellular manufacturing, manufacturing processes, product-process matrix.

float time – See slack time.

floater – A direct labor employee used to fill in on a production line when the regular worker is absent.

floor planning – An arrangement used by a retailer to finance inventory where a finance company buys the inventory, which is then held in trust for the user.

floor stock – Inventory stored close to a production process so workers can use it without an inventory transaction.

The labor cost of handling inventory transactions for lower cost items, such as fasteners, can sometimes be more than the cost of the items themselves. This is also true for bulk items, such as liquids in drums or wire on rolls. This is often solved by moving materials to floor stock as either bulk issued or backflushed items.

With bulk issues, the inventory balance in the warehouse inventory is reduced by the quantity issued and the floor stock account is increased by the same amount. The floor stock inventory is considered out of the system and the system may immediately call for a new reorder. In contrast, with backflushing, the MRP system considers the floor stock as available inventory and the floor stock inventory is reduced when the product is shipped. The backflush quantity is based on the “quantity per” in the bill of material (BOM).
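The difference between the two methods can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the function names and the quantities are hypothetical, and a real MRP system records these movements as inventory transactions rather than as function calls.

```python
def bulk_issue(warehouse_qty, floor_qty, issue_qty):
    """Bulk issue: move stock from the warehouse to floor stock in one transaction."""
    if issue_qty > warehouse_qty:
        raise ValueError("cannot issue more than the warehouse balance")
    return warehouse_qty - issue_qty, floor_qty + issue_qty


def backflush(floor_qty, units_shipped, qty_per):
    """Backflush: reduce floor stock by units shipped times the BOM quantity per."""
    return floor_qty - units_shipped * qty_per


def mrp_available(warehouse_qty, floor_qty, method):
    """Inventory that the planning system counts as available under each method."""
    if method == "bulk_issue":
        return warehouse_qty              # floor stock is out of the system
    if method == "backflush":
        return warehouse_qty + floor_qty  # floor stock still counts as available
    raise ValueError("method must be 'bulk_issue' or 'backflush'")


# Hypothetical fastener: 1,000 in the warehouse, 200 moved to the floor.
warehouse, floor = bulk_issue(1000, 0, 200)
print(warehouse, floor)                               # 800 200
print(mrp_available(warehouse, floor, "bulk_issue"))  # 800
print(mrp_available(warehouse, floor, "backflush"))   # 1000

# Ship 30 finished units that each use 4 fasteners (the "quantity per").
print(backflush(floor, units_shipped=30, qty_per=4))  # 80
```

With the bulk issue method, the 200 units on the floor no longer count toward available inventory, which is why the system may call for a reorder sooner.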

See backflushing.

flow – The movement of products and customers through a process with minimum time wasted in waiting, processing, and non-value-adding activities, such as rework or scrap.

In the lean philosophy, one of the main goals is to improve flow by reducing lotsizes, queues, and rework. Improving flow reduces cycle time, which increases visibility and exposes waste.

See lean, time-based competition.

flow rack – Warehouse shelving that is tilted with rollers so cases roll forward for picking.

With a flow rack, only one case needs to be on the pick face, which means that many items can be available in a small area. Flow racks allow for high item density, which decreases travel time and increases picks per hour.

flow time – See cycle time.

flowchart – A diagram showing the movement of information and objects over time; also called a flow chart and a process flowchart.

The term “flowchart” has historically been used primarily for information flow. Most process improvement leaders now use the term “process map” when creating a diagram to show the steps in a process. See the process map entry for much more detail.

See process map, seven tools of quality.

flowshop – An academic research term used to describe a process that involves a sequence of machines where jobs move directly from one machine to the next.

Dudek, Panwalkar, and Smith (1992) recognized that “there is no precise definition of a flowshop,” but they pointed out that “the following general assumptions are common in the literature. Jobs are to be processed in m stages sequentially. There is one machine at each stage. Machines are available continuously. A job is processed on one machine at a time without preemption and a machine processes no more than one job at a time.”
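Under these assumptions, the completion time of a set of jobs (the makespan) can be computed with a simple recursion. The sketch below adds two assumptions that are not part of the quotation above: every machine processes the jobs in the same order (a permutation flowshop) and the buffers between machines are unlimited. The function name and the processing times are hypothetical.

```python
def flowshop_makespan(proc_times, sequence):
    """Makespan for a flowshop with one machine per stage.

    proc_times[j][k] is the processing time of job j on machine k.
    sequence is the order in which the jobs enter the first machine.
    """
    num_machines = len(proc_times[0])
    machine_free = [0] * num_machines  # time each machine finishes its latest job
    for job in sequence:
        ready = 0  # time this job is released by the previous stage
        for k in range(num_machines):
            start = max(ready, machine_free[k])  # wait for both job and machine
            ready = start + proc_times[job][k]
            machine_free[k] = ready
    return machine_free[-1]


# Three jobs on two machines (hypothetical processing times).
times = [[3, 2], [1, 4], [2, 1]]
print(flowshop_makespan(times, [0, 1, 2]))  # 10
print(flowshop_makespan(times, [1, 0, 2]))  # 8
```

The two sequences give different makespans, which is why sequencing is the central question in flowshop research.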

See facility layout, job shop, job shop scheduling.

FMCG – See Fast Moving Consumer Goods (FMCG).

FMEA – See Failure Mode and Effects Analysis (FMEA).

FMS – See Flexible Manufacturing System.

FOB – A common freight/shipping acronym meaning “free on board.”

When a buyer purchases something and pays for it with terms “FOB origin,” the responsibility of the seller stops when the goods are delivered to the transporting company in suitable shipping condition. It is then the buyer’s responsibility to pay for transportation. In addition, if something gets lost or is damaged during transport, it is settled between the buyer and the transportation company. FOB is an official Incoterm.

See Cash on Delivery (COD), Incoterms, terms, waybill.

focus group – A qualitative research technique that collects ideas from a small group of people.

Focus groups are often used as a marketing research tool to assess customer reactions to product ideas, but they can also be used for other purposes such as collecting employee opinions and gathering process improvement ideas. It is generally a good idea to have an experienced facilitator lead the focus group. Focus groups often use structured brainstorming methods such as the Nominal Group Technique (NGT).

See brainstorming, Nominal Group Technique (NGT).

focused factory – A process that is “aligned with its market” and therefore requires a limited range of operations objectives.

The concept of a focused factory was originally developed by Harvard Business School Professor Wickham Skinner (1974) in his seminal article entitled “The Focused Factory.” Skinner stated “The focused factory will out-produce, undersell, and quickly gain competitive edge over the complex factory.” Skinner’s article argues that a factory can excel at no more than one or two operations tasks, such as quality, delivery reliability, response time, low cost, customization, or short life cycle products.

“You can’t be everything to everyone” is an old phrase that suggests that people (and firms) cannot do everything well, at least not in one process. A focused factory is a means of implementing a strategic direction for an operation. A firm can have several “focused factories” in any one factory building or location.

Schroeder and Pesch (1994) define a focused factory as one with “a limited and consistent set of demands that originate from its products, processes, and customers, enabling the factory to effectively support the business strategy.” They state that “many manufacturing executives define focus simply as having a limited number of products ... but this definition is too narrow ... the key is to limit the demands placed on manufacturing by limiting the number of processes and customers as well as the number of products.” Schroeder (2008) notes that the types of focus could be based on products, processes, technologies, sales volume, customer interface (make to stock versus make to order), or product maturity.

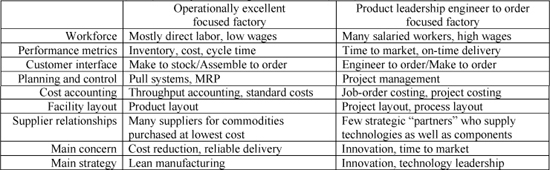

A focused factory, therefore, is not necessarily a factory that produces only one product, but a factory that reduces the variability or range of process requirements so the factory can excel at its key operations tasks. Focused factories align their processes to their markets and to the operations tasks required for those markets. This approach has implications for many process design issues, such as workforce (skill levels, salaried versus direct, customer-facing skills, etc.), performance metrics, customer interface, planning and control systems, cost accounting systems, facility layout, and supplier relationships. For example, the table below compares an operationally excellent make to stock “focused factory” making high volumes of standard products to an engineer to order “focused factory” developing innovative new products in response to customer orders.

Make to stock focused (MTS) factory versus engineer to order (ETO) focused factory

When a factory focuses on just a few key manufacturing tasks, it will be smaller, simpler, and more successful than a factory attempting to be all things to all customers. A focused factory can often deliver superior customer satisfaction to a vertical market, which allows it to dominate that market segment.

Some factories are unfocused originally because designers fail to recognize the limits and constraints of technologies and systems. Other factories start out highly focused, but lose focus over time due to product proliferation. In a sense, losing focus is “scope creep” for a factory.

See cellular manufacturing, core competence, facility layout, functional silo, handoff, operations strategy, plant-within-a-plant, product proliferation, scope creep, standard products, throughput accounting.

fool proofing – See error proofing.

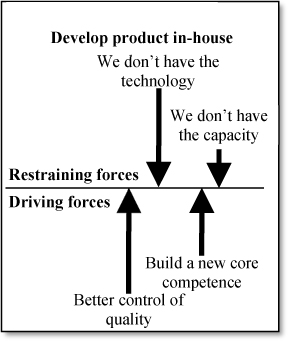

force field analysis – A brainstorming and diagramming technique useful for gaining a shared understanding of the “tug of war” between the forces (factors) that drive (motivate) change toward a goal and the forces that restrain (block) change and support the status quo; force field analysis is supported by the force field diagram.

Force field analysis was developed by Kurt Lewin (1943), who saw organizations as systems where some forces were trying to change the status quo and some forces were trying to maintain it. A factor can be individual people, groups, attitudes, assumptions, traditions, culture, values, needs, desires, resources, regulations, etc. Force field analysis can be used by individuals, teams, and organizations to identify the relevant forces and focus attention on ways of reducing the hindering forces and encouraging the helping forces. The tool helps build a shared understanding of the relevant issues and then helps build an action plan to address the issues.

Force field example

The figure on the right is an example of a force field “tug of war” on the issue of keeping new product development inside a firm. The equilibrium line separates the driving and restraining forces. (Note that the equilibrium line can be either vertical or horizontal.) The lengths of the arrows represent the strength of the forces. After the diagram is drawn, the team should focus on finding ways to strengthen or add driving forces and reduce or remove restraining forces.

See Analytic Hierarchy Process (AHP), brainstorming, decision tree, Kepner-Tregoe Model, Lewin/Schein Theory of Change, Pugh Matrix, stakeholder analysis.

force field diagram – See force field analysis.

force majeure – Events (or forces) beyond the control of the parties of a contract that prevent them from complying with the provisions of the contract; from French meaning superior force.

Typical forces that might be included in such a contract include governmental actions, restraints by court, wars or national emergencies, acts of sabotage, acts of terrorism, protests, riots, civil commotions, fire, arson, explosions, epidemics, lockouts, strikes or other labor stoppages, earthquakes, hurricanes, floods, lightning, embargos, blockades, archeological site discoveries, electrical outages, and interruptions of supply. The term “force majeure” is often used as the title of a standard clause in contracts exempting the parties for non-fulfillment of their obligations as a result of conditions beyond their control. In some cases, an industry shortage is considered a justifiable reason for a supplier to declare a force majeure and put customers on allocation.

Here is an example of a force majeure paragraph from naturalproductsinsider.com/ibg/terms.asp (November 27, 2005): “Neither party shall be deemed in default of this Agreement to the extent that performance of their obligations or attempts to cure any breach are delayed or prevented by reason of any act of God, fire, natural disaster, accident, act of government, shortages of materials or supplies, or any other causes beyond the control of such party, provided that such party gives the other written notice thereof properly and, in any event, within fifteen days of discovery thereof and uses its best efforts to cure the delay (force majeure). In the event of such force majeure, the time of performance or cure shall be extended for a period equal to the duration of the Force.”

See leadtime syndrome.

forecast accuracy – See forecast error metrics.

forecast bias – The average forecast error over time, defined mathematically as $\bar{E} = \frac{1}{T}\sum_{t=1}^{T} E_t$, where $E_t$ is the forecast error in period t and T is the number of observations available.

The ideal forecasting system has a zero forecast bias, which means that it has an average forecast error of zero. When the forecast bias is zero, the positive and negative forecast errors “balance each other out.” Bias is not the same as forecast accuracy. It is possible that a forecasting system with low accuracy (high mean absolute error) has zero forecast bias, and conversely, a forecasting system with a high accuracy (low mean absolute error) has high forecast bias. Forecast bias is a measure of the average performance of the forecasting system, whereas forecast accuracy is a measure of the reliability of the forecast.

Good forecasting systems have built-in exception reporting systems that trigger a “tracking signal” report when the forecast bias is large. See the tracking signal entry.

In practice, forecast bias can be tracked with three approaches: the moving average, the running sum of the errors, or the exponentially smoothed average error. The moving average approach defines the forecast bias as the average over the last T periods. The running sum of the errors approach uses the simple equation Rt = Rt-1 + Et, where Rt is the running sum of the forecast error at the end of period t. With this approach, a small consistent bias will become large over many periods. The exponentially smoothed average uses the equation SEt = (1 − α)SEt-1 + αEt, where SEt is the smoothed average error at the end of period t and α is the smoothing constant (0 < α < 1). This is probably the best approach because it puts more weight on the most recent data.
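The three tracking approaches can be sketched as follows. The function names, the starting value of zero for the smoothed error, and the error series are assumptions made for illustration. The smoothed error uses the standard exponential smoothing update SEt = (1 − α)SEt-1 + αEt.

```python
def moving_average_bias(errors, T):
    """Average forecast error over the last T periods."""
    window = errors[-T:]
    return sum(window) / len(window)


def running_sum_of_errors(errors):
    """R_t = R_(t-1) + E_t, returned for every period."""
    total, sums = 0, []
    for e in errors:
        total += e
        sums.append(total)
    return sums


def smoothed_error(errors, alpha, initial=0.0):
    """SE_t = (1 - alpha) * SE_(t-1) + alpha * E_t, returned for the last period."""
    se = initial  # assumption: start the smoothed error at zero
    for e in errors:
        se = (1 - alpha) * se + alpha * e
    return se


errors = [2, -1, 3, 2, 4]  # hypothetical forecast errors, oldest first
print(moving_average_bias(errors, T=4))   # 2.0
print(running_sum_of_errors(errors))      # [2, 1, 4, 6, 10]
print(smoothed_error(errors, alpha=0.5))  # 2.875
```

In this example the running sum keeps growing under a small positive bias, while the smoothed error stays on the scale of a single period's error.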

See alignment, bias, demand filter, forecast error metrics, Mean Absolute Deviation (MAD), Mean Absolute Percent Error (MAPE), mean squared error (MSE), tracking signal.

forecast consumption – A method for reducing forecasted demand as the actual demand is realized.

See forecasting, Materials Requirements Planning (MRP).

forecast error metrics – Mathematical measures used to evaluate forecast bias and accuracy.

Forecast error is defined as the actual demand minus the forecast in a period. Using standard mathematical notation, Et = Dt − Ft, where Et is the forecast error in period t, Dt is the demand in period t, and Ft is the forecast made for period t. Given that the demand is rarely known, most organizations use actual sales as an estimate of demand. The following table is a summary of many forecast error metrics collected by this author. The symbol (![]() ) indicates metrics recommended by the author.

Forecast bias metrics for a single item

• Average error, ![]() ![]()

• Smoothed error, SEt

• Mean Percent Error, MPE

• Mean Error Scaled by the Mean Demand,

• Running Sum of the Forecast Errors, RSEt

• Tracking Signal, TS1t, TS2t ![]()

Forecast accuracy metrics for a single item

• Mean Absolute Deviation (Mean Absolute Error), MAD

• Smoothed Mean Absolute Deviation, SMADt

• Mean Absolute Deviation as percent of average demand, MADP

• Mean Absolute Percent Error (Winsorized at 1), MAPE

• Smoothed Mean Absolute Percent Error, SMAPEt

• Relative Absolute Error – Random Walk, RAErw

• Relative Absolute Error – Exponential Smoothing, RAEes

• Mean Absolute Scaled Error, MASE ![]()

• Theil’s U, U1, U2, U3

• Mean Squared Error, MSE

• Smoothed Mean Squared Error, SMSEt

• Root Mean Squared Error, RMSE

• Forecast Attainment, FA

• Demand Filter, DFt ![]()

Forecast bias metrics for a group of items

• Count or percentage of items with positive forecast error, PPFE

• Weighted Average Forecast Error, WAFE

• Forecast Attainment, FA ![]()

Forecast accuracy metrics for a group of items

• Weighted Mean Absolute Percent Error (Winsorized at 1), WMAPE

• Weighted Mean Absolute Scaled Error, WMASE ![]()

• Median MAPE, MdMAPE

• Geometric Mean of the MAPE, GMMAPE

• Weighted Relative Absolute Error, WRAE

• Median RAE, MdRAE

• Geometric Mean RAE, GMRAE

• Forecast Attainment, FA

• Weighted Absolute Percent Error, WAPE

• Percent Better, PB

Other forecast error metrics

• Sample variance, standard deviation, ![](), ![]()

• Sample correlation, coefficient of determination, r, r²

• Regression, a, b, r²

• Sample autocorrelation at lag k, ![]()
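A few of the single-item metrics listed above can be computed directly from the definition Et = Dt − Ft. The sketch below is illustrative only: the function name and the data are hypothetical, demand is assumed to be positive in every period, and "Winsorized at 1" is taken to mean that each period's absolute percent error is capped at 100%.

```python
from math import sqrt


def forecast_error_metrics(demand, forecast):
    """Mean error (bias), MAD, MSE, RMSE, and MAPE for one item."""
    errors = [d - f for d, f in zip(demand, forecast)]  # E_t = D_t - F_t
    n = len(errors)
    mse = sum(e * e for e in errors) / n
    return {
        "mean_error": sum(errors) / n,
        "MAD": sum(abs(e) for e in errors) / n,
        "MSE": mse,
        "RMSE": sqrt(mse),
        # Assumption: cap each absolute percent error at 1 (100%).
        "MAPE": sum(min(abs(e) / d, 1.0) for e, d in zip(errors, demand)) / n,
    }


demand = [100, 120, 80, 110]    # hypothetical actual demand
forecast = [90, 125, 100, 100]  # hypothetical forecasts
for name, value in forecast_error_metrics(demand, forecast).items():
    print(f"{name}: {value:.4f}")
```

For this data the mean error is -1.25 (a small tendency to over-forecast), while the MAD is 11.25, which shows how an item can have low bias and still have poor accuracy.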

See bias, Box-Jenkins forecasting, correlation, demand filter, exponential smoothing, forecast bias, forecast horizon, forecast interval, forecasting, geometric mean, linear regression, Mean Absolute Deviation (MAD), Mean Absolute Percent Error (MAPE), Mean Absolute Scaled Error (MASE), mean squared error (MSE), Median, Median Absolute Percent Error (MdAPE), operations performance metrics, Relative Absolute Error (RAE), standard deviation, Theil’s U, tracking signal, weighted average, Winsorizing.

forecast horizon – The number of time periods into the future that are forecasted.

For example, if a firm regularly makes forecasts that cover the next six months, it has a six-month forecast horizon. If a firm has a six-month manufacturing leadtime, it should clearly forecast at least six months into the future. Forecast error increases rapidly with the forecast horizon. It is often more practical and more economical to spend money to reduce the manufacturing leadtime (and the corresponding forecast horizon) than it is to find a better forecasting method to improve the forecast error for a given forecast horizon.

See all-time demand, forecast error metrics, forecast interval, forecasting, forward visibility.

forecast interval – The highest and lowest reasonable values for a forecast.

This is usually set as the forecast (which is usually an expected value) plus or minus z standard deviations of the forecast error. A reasonable value is z = 3. A forecast interval is very similar to a confidence interval, but it is not exactly the same. It is important to understand that the forecast interval is strongly influenced by the forecast horizon, where the forecast interval increases with the forecast horizon.
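As a minimal sketch, the interval is the forecast plus or minus z standard deviations of the forecast error. The function name and the numbers are hypothetical. The sketch also assumes that the standard deviation has already been estimated for the relevant forecast horizon, for example from past forecast errors.

```python
from statistics import stdev


def forecast_interval(forecast, error_std, z=3.0):
    """Return (low, high) as the forecast minus and plus z standard deviations."""
    half_width = z * error_std
    return forecast - half_width, forecast + half_width


print(forecast_interval(100, 10))  # (70.0, 130.0)

# The standard deviation can be estimated from past forecast errors.
past_errors = [10, -5, -20, 10]  # hypothetical errors at this horizon
low, high = forecast_interval(100, stdev(past_errors))
print(round(low, 1), round(high, 1))
```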

See forecast error metrics, forecast horizon, forecasting, forward visibility, geometric mean.

forecasting – Predicting the future values of a variable.

Almost all organizations need to forecast sales or demand on a regular basis. Organizations also need to forecast the cost of materials, the availability of labor, the performance of a technology, etc. The two main types of forecasting methods are quantitative and qualitative methods. Quantitative methods can be further broken into time series methods and causal methods.

Time series methods (also called intrinsic forecasting methods) seek to find historical patterns in the data and then extrapolate those into the future. The simplest time series models are an average, moving average, and weighted moving average. Exponential smoothing forecasting models create forecasts with a weighted moving average, where the weights decline geometrically with the time lag. The Winters’ and Holt-Winters’ models for exponential smoothing (Winters 1960) add trend and seasonality. The Box-Jenkins method is a much more sophisticated model for time series forecasting (Box, Jenkins, & Reinsel 1994).
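The simplest of these models fit in a few lines. The sketch below is illustrative: the function names and the demand history are hypothetical, and starting the exponential smoothing forecast at the first observation is one common choice among several.

```python
def moving_average(history, n):
    """Average of the last n observations."""
    return sum(history[-n:]) / n


def weighted_moving_average(history, weights):
    """Weights are listed oldest to newest and should sum to 1."""
    recent = history[-len(weights):]
    return sum(w * x for w, x in zip(weights, recent))


def exponential_smoothing(history, alpha):
    """Simple exponential smoothing; the weight on an observation that is
    k periods old is alpha * (1 - alpha) ** k, so weights decline geometrically."""
    forecast = history[0]  # assumption: start at the first observation
    for x in history[1:]:
        forecast = alpha * x + (1 - alpha) * forecast
    return forecast


history = [10, 12, 14, 16]  # hypothetical demand, oldest first
print(moving_average(history, 2))                         # 15.0
print(weighted_moving_average(history, [0.2, 0.3, 0.5]))  # approximately 14.6
print(exponential_smoothing(history, 0.5))                # 14.25
```

All three forecasts lag the upward trend in this series, which is the motivation for models that add trend and seasonality terms.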

Causal methods (also called extrinsic or econometric forecasting methods) are nearly always multiple regression methods, where the model predicts one variable (the dependent variable) from one or more other independent lagged variables. See the linear regression and econometric forecasting entries.

Qualitative methods are subjective methods used to collect estimates from people. See the Delphi and technological forecasting entries for more information on qualitative models.

All time series can be decomposed into a seasonal pattern (tied to the calendar or a clock), a trend, cyclical (irregular patterns), and what is left over (random noise). See the seasonality, trend, and time series entries.

Forecast error is defined as the actual value minus the forecasted value. Most forecasting models assume that the random error is normally distributed. See the forecast error metrics entry for more detail.

Demand forecasts are better when:

• Expressed as a point estimate (a single number) and a forecast interval rather than just a point estimate.

• Aggregated across product families, regions, and periods.

• Made for a short horizon.

• Based on many periods of historical data.

• Supplemented by human intelligence.

• Clearly differentiated from a plan.

• Carefully aligned with reward systems.

• Created collaboratively by the supply chain.

• Used by everyone without modification.

Fundamental forecasting principles:

• Forecasting is difficult (especially if it is about the future).

• The only thing we know for sure about a forecast is that it is wrong.

• Separate forecasting and planning – forecast ≠ plan.

• It is easier to fit a model to historical data than it is to create accurate forecasts.

• Use lean to reduce cycle times and forecast horizons.

• Use information systems to replace inventory and improve service.

• Share demand information to reduce forecast error and coordinate the supply chain.

• Use leading indicators to reduce forecast error.

• Use demand management to balance supply and demand.

• Use yield management to maximize revenue.

• Use demand filters and tracking signals to control forecasts.

• Use the Bass Model for product life cycle forecasting, particularly at the beginning of the product life cycle.

• Use the geometric time series model for end-of-life forecasting.

Two misunderstandings of forecasting are common. Each of these is discussed below.

Confusing forecasting and planning – Many firms use the term “forecast” for their production plan. As a result, they lose important stockout (opportunity cost) information and create confusion and muddled thinking throughout their organizations. In a typical business context, the firm needs a forecast of the demand for its products without consideration of the firm’s capacity or supply. In response to this “unfettered” (unconstrained) demand forecast, the firm should make its production and inventory plans. In some periods, the firm might plan to have inventory greater than demand; in other periods, the firm might plan to have inventory short of demand.

Confusing sales and demand history – Many people use the terms “sales” and “demand” interchangeably. However, they are not the same. Technically, demand is sales plus lost sales. Most firms keep a sales history, which they sometimes call the “demand history.” (SAP uses the term “consumption” history.) This is a “censored” time series because sales will be less than demand when sales are lost due to lack of inventory. This distinction is important when using historical sales (not demand) to forecast future demand. Some retailers try to use information on the “in-stock position” to inflate the sales history to estimate the demand history.

The website www.forecastingprinciples.com provides a dictionary, bibliography, and other useful information on forecasting. The Principles of Forecasting is a free Web-based book by J. Scott Armstrong that can be found at www.forecastingprinciples.com/content/view/127/10 (April 18, 2011).

See all-time demand, anchoring, Bass Model, Box-Jenkins forecasting, censored data, coefficient of variation, Collaborative Planning Forecasting and Replenishment (CPFR), Croston’s Method, Delphi forecasting, demand, demand filter, demand management, econometric forecasting, elasticity, exponential smoothing, forecast consumption, forecast error metrics, forecast horizon, forecast interval, forward visibility, inventory management, leading indicator, linear regression, lumpy demand, Mean Absolute Deviation (MAD), Mean Absolute Percent Error (MAPE), moving average, Sales & Operations Planning (S&OP), seasonality, supply chain management, technological forecasting, Theta Model, time bucket, time series forecasting, tracking signal, trend.

forecasting lifetime demand – See all-time demand.

forging – A manufacturing process that shapes metal by heating and hammering.

Forging usually involves heating metal (below the melting point) and then using hammering or pressure to shape the metal. Forged parts usually require additional machining. Forging can be cold, warm, or hot.

for-hire carrier – A common carrier or contract carrier trucking firm that transports goods for monetary compensation.

See carrier, common carrier, logistics.

forklift truck – A vehicle used in warehouses, factories, and distribution centers to lift, move, stack, and rack loads (usually on pallets); also called a lift truck, fork lift, and hi-low.

A forklift may have a special attachment on the front for handling certain specialized products.

See cross-docking, logistics, materials handling, pallet, warehouse.

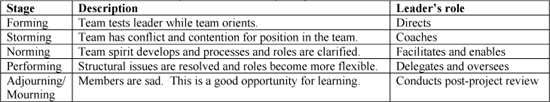

forming-storming-norming-performing model – A model that explains the progression of team development.

This model of group development was first proposed by Tuckman (1965), who maintained that all four phases are necessary for a team to be successful. Tuckman and Jensen (1977) later added a fifth phase, “adjourning,” which some call “mourning.”

• Forming – In this first stage, the team has high dependence on the leader for guidance. The team has little agreement on goals other than what the leader has defined. Individual roles and responsibilities are unclear. Processes are often ignored and team members often test the boundaries of the leader. The leader directs.

• Storming – Team members contend to establish their position relative to those of other team members and the leader and decision making is difficult. Informal alliances form and power struggles are common. The team needs to be focused on goals and compromises may be required to enable progress. The leader coaches.

• Norming – The team begins to develop consensus and clarify roles and responsibilities. Smaller decisions may be delegated to individuals or smaller teams. The team may engage in fun and social activities. The team begins to develop processes. The leader facilitates and enables.

• Performing – The team has a shared understanding of its vision and is less reliant on the leader. Disagreements are resolved positively and process changes are made easily. Team members might ask for assistance from the leader with personal and interpersonal development. The leader delegates and oversees.

• Adjourning (Mourning) – The team’s work is complete when the task is successfully completed and the team members can feel good about their work. The leader should conduct a post-project review to ensure that individuals learn and that organizational learning is captured and shared.

See brainstorming, mission statement, post-project review, project management.

formulation – A list of the quantities of each ingredient needed to make a product, typically used for chemicals, liquids, and other products that require mixing; also called a recipe.

forward buy – The practice of purchasing materials and components in excess of the short-term anticipated demand; the use of forward buys is called forward buying.

Forward buying is often motivated by trade promotions (temporary price reductions) or anticipation of a potential price increase. Although forward buying might reduce the acquisition cost for the customer, it can increase inventory carrying cost for the customer and increase the variability of the demand for the supplier. Everyday low pricing (EDLP) is a way to encourage customers to reduce forward buying and stabilize demand.

See acquisition, bullwhip effect, Everyday Low Pricing (EDLP), futures contract, loss leader, promotion, purchasing.

forward integration – See vertical integration.

forward loading – See forward scheduling.

forward pass – See forward scheduling.

forward pick area – A space within a warehouse used for storing and picking higher-demand items; this space is resupplied from a larger reserve storage area; sometimes called a golden zone.

This area usually has fixed storage locations so that pickers can remember where the items are located. A forward pick area can reduce unproductive travel time for order pickers, but it must be replenished from a bulk storage (reserve storage) area somewhere else in the warehouse. A forward pick area usually has small quantities of high-volume parts stored in carton flow racks positioned near a conveyor, shipping area, or the loading dock. Forward pick areas are common in distribution centers in North America, especially those supporting retail sales. Bartholdi (2011) provided more information in his free on-line book and Bartholdi and Hackman (2008) developed a mathematical model for optimizing the space allocated to a forward pick area.

See picking, reserve storage area, slotting, warehouse, Warehouse Management System (WMS).

forward scheduling – A finite scheduling method that begins with the start date (which could be the current time) and plans forward in time, never violating the capacity constraints; also called forward loading or forward pass.

The start date and task times are the primary inputs to a forward scheduling algorithm. The planned completion date is an output of the process. Forward scheduling is quite different from back scheduling, which starts with the due date (planned completion date) and plans backward to determine the planned start date. The critical path method uses forward scheduling to determine the early start and early finish for each activity in the project network. See the finite scheduling entry for more detail.
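The forward pass used in the critical path method can be sketched as follows. This illustration covers only the precedence logic and ignores capacity constraints, so it is not a full finite scheduling algorithm. The function name, the activity names, and the durations are hypothetical, and the code requires Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter


def forward_pass(durations, predecessors, start=0):
    """Return {activity: (early_start, early_finish)} for a project network."""
    graph = {a: predecessors.get(a, []) for a in durations}
    early = {}
    # static_order() yields each activity after all of its predecessors.
    for activity in TopologicalSorter(graph).static_order():
        # Early start is the latest early finish among the predecessors.
        es = max((early[p][1] for p in graph[activity]), default=start)
        early[activity] = (es, es + durations[activity])
    return early


durations = {"A": 3, "B": 2, "C": 4, "D": 1}
predecessors = {"C": ["A", "B"], "D": ["C"]}
schedule = forward_pass(durations, predecessors)
print(schedule["C"])                              # (3, 7)
print(max(ef for _, ef in schedule.values()))     # 8, the planned completion time
```

The start time and the task times go in, and the planned completion time comes out, which mirrors the inputs and outputs described above.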

See back scheduling, Critical Path Method (CPM), finite scheduling.

forward visibility – Giving information on future demand and production plans to internal and external suppliers.

Customers can give their suppliers forward visibility by sharing their forecasts and production plans. This allows suppliers to plan their production to better meet their customers’ requirements.

See Electronic Data Interchange (EDI), forecast horizon, forecast interval, forecasting, Materials Requirements Planning (MRP).

foundry – A facility that pours hot metal into molds to create metal castings.

A casting is any product formed by a mold (British, mould), which is a hollow cavity with the desired shape. The casting can be either ejected or broken out of the mold. Castings do not always require heat or a foundry. For example, plaster may be cast. Sand casting uses sand as the mold material.

A foundry creates castings by heating metal in a furnace until it is in liquid form, pouring the metal into a mold, allowing the metal to cool and solidify, and finally removing the casting from the mold. Castings often require additional operations before they become products sold to customers. Castings are commonly made from aluminum, iron, and brass. Castings are used in many products, such as engines, automobiles, and machine tools.

See manufacturing processes, mold.

Fourth Party Logistics (4PL) provider – See Third Party Logistics (3PL) provider.

fractile – A selected portion of a probability distribution.

For example, the lower quartile is the lower 25% of the cumulative probability distribution and the top decile is the top 10% of the cumulative probability distribution.
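The value that bounds a fractile can be found from the inverse of the cumulative distribution function. The sketch below assumes normally distributed demand with a hypothetical mean of 100 and standard deviation of 20. The normal distribution and the function name are assumptions made for illustration.

```python
from statistics import NormalDist


def fractile_value(p, mean, std_dev):
    """Value x such that the cumulative probability P(X <= x) equals p."""
    return NormalDist(mu=mean, sigma=std_dev).inv_cdf(p)


# Upper bound of the lower quartile (the lower 25% of the distribution).
print(round(fractile_value(0.25, 100, 20), 2))

# Lower bound of the top decile (the top 10% starts at the 0.90 fractile).
print(round(fractile_value(0.90, 100, 20), 2))
```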

See interquartile range, newsvendor problem.

Free-on-Board (FOB) – See FOB.

freight bill – Invoice for the transportation charges of goods shipped or received.

See logistics.

freight forwarder – An independent business that handles export shipments for compensation.

See logistics.

front office – See back office.

frozen schedule – See time fence.

FTE – See Full Time Equivalent.

fuel surcharge – An extra charge added to the cost of a shipment to cover the variable cost of fuel.

fulfillment – The process of shipping products to customers in response to customer orders; also called fulfillment operations.

The fulfillment process almost always involves order entry, picking items from a warehouse or distribution center, packaging, and shipping. In addition, fulfillment may also involve:

• Supplier-facing activities – Placing replenishment orders, managing in-bound logistics, providing information on current inventory status to suppliers, and expediting.

• Other customer-facing activities – Tracking orders, sending automated e-mails to customers to let them know their packages are in transit, satisfying customer requests for information, handling returns, and providing help desk support for products.

• Financial activities – Processing credit card transactions, invoicing customers, and paying suppliers.

The term “fulfillment” is most often associated with e-commerce and other operations that ship many small orders to end customers rather than operations that process shipments to other manufacturers, wholesalers, or resellers. Examples of fulfillment operations include fulfillment operations for mail-order catalogs, Internet stores, and service parts. A fulfillment house is a third party that performs outsourced storage, order picking, packaging, shipment, and other similar services for others.

See customer service, help desk, order entry, replenishment order, Third Party Logistics (3PL) provider, warehouse, Warehouse Management System (WMS).

Full Time Equivalent (FTE) – A labor staffing term used to equate the salary or work hours for a number of part-time people to the number of “equivalent” full-time people.

For example, three people working half-time is equal to 1.5 FTEs.
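The arithmetic can be sketched in a few lines of Python. The function name and the 40-hour full-time week are assumptions made for illustration; the entry itself does not specify a standard work week.

```python
def full_time_equivalents(weekly_hours, full_time_hours=40.0):
    """Return total FTEs for a list of weekly hours worked per person.

    full_time_hours is an assumed definition of a full-time week.
    """
    return sum(weekly_hours) / full_time_hours

# Three people, each working half-time (20 of an assumed 40 hours per week).
print(full_time_equivalents([20, 20, 20]))  # 1.5
```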

full truck load – See less than truck load (LTL).

functional build – A design and manufacturing methodology that de-emphasizes individual part quality and focuses on system quality.

Conventional design and manufacturing processes sequentially check each part being produced against design specifications utilizing Cp and Cpk metrics. This requires that all critical dimensions of a part be within specification limits. An example is an auto manufacturer checking 1,400 points on a door die. If any of these points are out of tolerance, they are reworked to achieve proper specifications. In contrast, the functional build process checks fewer points and fixes only the ones necessary to bring the door assembly (system) into tolerance. If a part is close to passing, it is used in the assembly, and the overall assembly is held to tighter tolerances. The result is a higher quality assembly, which is what the customer really cares about, at a substantially lower cost.

A study conducted by CAR found that Japanese automobile manufacturers had the lowest quality doors as measured by Cpk for individual parts, but had high customer scores for the door assembly, while American manufacturers had higher door component Cpk values, but lower customer scores (adapted from “The Quest for Imperfection,” Charles Murray, Design News, October 10, 2005, www.designnews.com).

See process capability and performance, Taguchi methods.

functional silo – A functional group or department in an organization, such as marketing, operations, accounting, and finance, that is overly focused on its own organization and point of view, which results in inefficient and ineffective processes.

A silo is a tall cylindrical tower used for storing grain, animal feed, or other material. The photo on the right shows a series of six silos on an American farm. The metaphor here is that organizations often have “silos” where people in functional groups are overly focused on their own functions, do not coordinate with other functions, do not share information with other functions, and do not have constructive interactions with other functions. The functional departments are usually pictured as vertical lines, whereas processes serving each market segment are drawn as horizontal lines that cross multiple silos. The result of the functional silo problem is inefficient processes, poor customer service, and lack of innovation.

For example, a customer order begins with a salesperson who hands it off to order entry, but neglects to include some information. The order entry person enters the sales order data into the information system, but accidentally enters the promise date incorrectly. The manufacturing organization makes the product to the customer’s requirements, but misses one important unusual customer need. The shipping people accidentally ship the product to the billing address rather than to the point of need. It is easy for information to get lost in this process because each “silo” (department) has different goals and information systems. It is hard for any one process to “own” this customer order because the process is too far from the voice of the customer.

The lean answer to this issue is to create organizations around value streams that are aligned with market segments. A focus on value streams instead of silos reduces waste, cycle time, cost, and defects. In the operations strategy literature, this is called a focused factory.

See Deming’s 14 points, focused factory, lean thinking, mass customization, order entry, value stream.

future reality tree – A theory of constraints term for a type of causal map used to show the relationships needed to create the future state desirable effects.

See causal map, current reality tree, Theory of Constraints (TOC).

futures contract – An agreement to purchase or sell a commodity for delivery in the future with (1) a price determined at initiation of the contract, (2) terms that obligate each party to fulfill the contract at the specified price, (3) the purpose of assuming or shifting price risk, and (4) the provision that it may be satisfied by delivery or offset; also called futures.

A futures contract is a standardized, transferable, exchange-traded contract that requires delivery of a commodity, bond, currency, or stock index at a specified price on a specified future date. Unlike options, futures convey an obligation to buy. The risk to the holder is unlimited, and because the payoff pattern is symmetrical, the risk to the seller is also unlimited. Money lost and gained by each party on a futures contract is equal and opposite. In other words, futures trading is a zero sum game. Futures contracts are forward contracts, meaning they represent pledges to make certain transactions at future dates. The exchange of assets occurs on the date specified in the contract. Futures are distinguished from generic forward contracts in that they contain standardized terms, trade on formal exchanges, are regulated by overseeing agencies, and are guaranteed by clearinghouses. To ensure that payment will occur, futures also have a margin requirement that must be settled daily. Finally, by making an offsetting trade, taking delivery of goods, or arranging for an exchange of goods, futures contracts can be closed. Hedgers often trade futures for the purpose of keeping price risk in check.
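The symmetric, zero sum payoff pattern can be illustrated with a short Python sketch. The function names, prices, and contract size are hypothetical, and the sketch ignores margin, fees, and daily settlement.

```python
def long_payoff(contract_price, settlement_price, quantity):
    """Gain (or loss) to the buyer, who is obligated to buy at contract_price."""
    return (settlement_price - contract_price) * quantity

def short_payoff(contract_price, settlement_price, quantity):
    """Gain (or loss) to the seller, who is obligated to sell at contract_price."""
    return (contract_price - settlement_price) * quantity

# Hypothetical contract: 5,000 units at a contract price of 5.00 per unit.
for settlement in (4.00, 5.00, 5.50):
    buyer = long_payoff(5.00, settlement, 5000)
    seller = short_payoff(5.00, settlement, 5000)
    # The two payoffs are equal and opposite, so they always sum to zero.
    print(settlement, buyer, seller, buyer + seller)
```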

See commodity, forward buy, purchasing, zero sum game.

fuzzy front end – The process for determining customer needs or market opportunities, generating ideas for new products, conducting necessary research on the needs, developing product concepts, and evaluating product concepts up to the point that a decision is made to proceed with development.

This process is called the fuzzy front end because it is the most unstructured part of product development. Preceding the more formal product development process, it generally consists of three tasks: strategic planning, concept generation, and pre-technical evaluation. These activities are often chaotic, unpredictable, and unstructured. In comparison, the subsequent new product development process is typically structured, predictable, and formal, with prescribed sets of activities, questions to be answered, and decisions.

Adapted from www.pdma.org (April 18, 2011).

See New Product Development (NPD).

G

Gage R&R – See Gauge R&R.

gainsharing – An incentive program that provides financial benefits to employees based on improvements in quality or productivity; also called pay for performance.

See Balanced Scorecard, human resources, job design, pay for skill, piece work.

game theory – A branch of mathematics that models the strategic interactions among competitors to determine the optimal course of action.

Business can be viewed as a “game” between the competitors in a market. A decision (move) by one player motivates a move by another player. Historically, game theory can be traced back to the Talmud and Sun Tzu’s writings. John von Neumann and Oskar Morgenstern are credited with the mathematical development of modern-day game theory in their book Theory of Games and Economic Behavior (Neumann & Morgenstern 1944). In the early 1950s, John Nash generalized these results and created the basis for the modern field of mathematical game theory. The most widely known example of game theory is the prisoners’ dilemma.

A major issue with game theory is the trade-off between realism and simplicity. The most common assumptions in game theory are (1) rationality (i.e., people take actions likely to make them happier, and they know what makes them happy) and (2) common knowledge (i.e., everyone else is trying to make themselves happy, potentially at our expense).
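The rationality assumption can be made concrete with a small two-player game in the style of the prisoners’ dilemma. The sketch below is illustrative only: the payoff numbers (negative years in prison) are hypothetical, and the search covers only pure strategies.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# Hypothetical payoffs (negative years in prison) as (row player, column player).
PAYOFFS = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-10, 0),
    ("defect", "cooperate"): (0, -10),
    ("defect", "defect"): (-5, -5),
}

def is_nash_equilibrium(row_action, col_action):
    """True if neither player can gain by unilaterally changing actions."""
    row_payoff, col_payoff = PAYOFFS[(row_action, col_action)]
    row_ok = all(row_payoff >= PAYOFFS[(alt, col_action)][0] for alt in ACTIONS)
    col_ok = all(col_payoff >= PAYOFFS[(row_action, alt)][1] for alt in ACTIONS)
    return row_ok and col_ok

equilibria = [pair for pair in product(ACTIONS, ACTIONS) if is_nash_equilibrium(*pair)]
print(equilibria)  # [('defect', 'defect')]
```

With these payoffs, each rational player defects regardless of what the other does, even though both would be better off if both cooperated.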

See co-opetition (co-competition), prisoners’ dilemma, zero sum game.

gamma distribution – A continuous probability distribution often used to model task times and other variables that have a left tail bounded by zero.

The gamma distribution has shape parameter α and scale parameter β. Important special cases of the gamma distribution include the exponential, k-Erlang, and chi-square distributions. The k-Erlang is a special case of the gamma with an integer shape parameter.

Gamma density and distribution functions: The gamma density function is $f(x) = \dfrac{x^{\alpha-1} e^{-x/\beta}}{\beta^{\alpha}\,\Gamma(\alpha)}$ for x > 0; f(x) = 0 otherwise, where $\Gamma(\alpha) = \int_0^\infty t^{\alpha-1} e^{-t}\,dt$ is the gamma function. The gamma function does not have a closed form when α is not an integer, which means that the gamma density and distribution functions must be approximated numerically. The gamma function entry presents the VBA code for the gamma function.
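As an illustration of the numerical approximation, the following Python sketch evaluates the gamma density $f(x) = x^{\alpha-1} e^{-x/\beta} / (\beta^{\alpha}\Gamma(\alpha))$ with the standard library `math.gamma` function and approximates the distribution function with a simple midpoint rule. The function names and the step count are assumptions, and the midpoint rule is a stand-in for the more careful numerical methods used in statistical software; it is only reasonable here for α ≥ 1.

```python
import math

def gamma_pdf(x, alpha, beta):
    """Gamma density with shape alpha and scale beta; zero for x <= 0."""
    if x <= 0:
        return 0.0
    return x ** (alpha - 1) * math.exp(-x / beta) / (beta ** alpha * math.gamma(alpha))

def gamma_cdf(x, alpha, beta, steps=10_000):
    """Approximate F(x) by midpoint-rule integration of the density over (0, x)."""
    if x <= 0:
        return 0.0
    h = x / steps
    return h * sum(gamma_pdf((i + 0.5) * h, alpha, beta) for i in range(steps))

# Exponential special case (alpha = 1): the density at x = 1 is exp(-1).
print(gamma_pdf(1.0, 1.0, 1.0))

# Erlang special case (alpha = 2, beta = 3): F(6) has the closed form 1 - 3*exp(-2).
print(gamma_cdf(6.0, 2.0, 3.0), 1 - 3 * math.exp(-2))
```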

Graph: The graph below shows a gamma density function with a range of α parameters and scale parameter β = 1. Note that if X is a gamma distributed random variable with shape α and scale 1, then βX is a gamma distributed random variable with shape α and scale β.

Statistics: Mean μ = αβ, variance σ² = αβ², mode β(α − 1) if α ≥ 1 and 0 otherwise, skewness $2/\sqrt{\alpha}$, and coefficient of variation $1/\sqrt{\alpha}$.

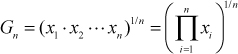

Parameter estimation: Many authors, such as Fisher and Raman (2010), use the method of moments to estimate $\hat{\alpha} = \bar{x}^2 / s^2$ and $\hat{\beta} = s^2 / \bar{x}$, where $\bar{x}$ and s are the sample mean and standard deviation. Law (2007) presented a maximum likelihood estimation procedure that requires numerical methods. Minka (2002, p. 2) claimed that the approach in Law “can be quite slow, requiring around 250 iterations if α = 10” and presented an MLE approach that converges in about four iterations. All MLE approaches are based on the fact that the MLE estimate for beta is $\hat{\beta} = \bar{x} / \hat{\alpha}$.
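A method of moments fit can be sketched in Python by matching the sample mean and variance to μ = αβ and σ² = αβ², which gives shape $\bar{x}^2/s^2$ and scale $s^2/\bar{x}$. The function name and the data are hypothetical, and this sketch does not implement the MLE procedures of Law or Minka.

```python
import statistics

def gamma_method_of_moments(data):
    """Return (alpha_hat, beta_hat) by matching the sample mean and variance."""
    mean = statistics.fmean(data)
    variance = statistics.variance(data)  # sample variance s^2 (n - 1 divisor)
    alpha_hat = mean ** 2 / variance
    beta_hat = variance / mean
    return alpha_hat, beta_hat

# Hypothetical task times (illustrative only).
alpha_hat, beta_hat = gamma_method_of_moments([2.0, 4.0, 6.0, 8.0])
print(alpha_hat, beta_hat)    # shape and scale estimates
print(alpha_hat * beta_hat)   # reproduces the sample mean
```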

Excel: In Excel, the natural log of the gamma function is GAMMALN(α), which means that the gamma function is EXP(GAMMALN(α)). The gamma density and distribution functions are GAMMADIST(x, α, β, FALSE) and GAMMADIST(x, α, β, TRUE). The inverse distribution function is GAMMAINV(p, α, β). In Excel 2010, the gamma distribution function is renamed GAMMA.DIST(x, α, β, TRUE), and the gamma inverse function is renamed GAMMA.INV(p, α, β).

Excel errors: The GAMMADIST and GAMMAINV functions in Excel 2003 and Excel 2007 will return #NUM for some combinations of input parameters. Knüsel (2005) stated that the GAMMADIST function “can have numerical problems just in the most important central part of the distribution.” Evidently, these problems have been fixed in Excel 2010.

Excel simulation: An Excel simulation can generate gamma distributed random variates with the inverse transform method using x = GAMMAINV(1-RAND(), α, β).

Partial expectation function: $H(x) = \mu F_{\text{Gamma}}(x \mid \alpha + 1, \beta)$.
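The partial expectation result can be derived in a few steps, assuming the partial expectation is defined as $H(x) = \int_0^x t\, f(t)\, dt$ and using the gamma function identity $\Gamma(\alpha + 1) = \alpha\,\Gamma(\alpha)$:

$$
\begin{aligned}
H(x) &= \int_0^x t \cdot \frac{t^{\alpha-1} e^{-t/\beta}}{\beta^{\alpha}\,\Gamma(\alpha)}\, dt
      = \int_0^x \frac{t^{\alpha} e^{-t/\beta}}{\beta^{\alpha}\,\Gamma(\alpha)}\, dt \\
     &= \frac{\beta\,\Gamma(\alpha+1)}{\Gamma(\alpha)} \int_0^x \frac{t^{(\alpha+1)-1} e^{-t/\beta}}{\beta^{\alpha+1}\,\Gamma(\alpha+1)}\, dt \\
     &= \alpha\beta \, F_{\text{Gamma}}(x \mid \alpha+1, \beta)
      = \mu \, F_{\text{Gamma}}(x \mid \alpha+1, \beta).
\end{aligned}
$$

The integrand in the second line is the gamma density with shape α + 1 and scale β, so the integral is that distribution's cumulative distribution function evaluated at x.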

Related distributions: If X ~ Gamma(1, β), then X ~ Exponential(β), where β is the mean. If X ~ Gamma(k,β), then X ~ Erlang(k, β), where k is an integer. If X ~ Gamma(k / 2, 2), then X ~ Chi-square with k degrees of freedom. See Law (2007) for more details. The gamma, Weibull, and lognormal distributions are special cases of the generalized gamma distribution (Wikipedia 2010). The gamma converges to the normal distribution as the shape parameter (α) approaches infinity.
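The exponential and Erlang relationships can be checked with a small simulation. The sketch below uses the inverse transform method for the exponential case, where the inverse distribution function has the closed form x = −β ln(1 − U), and sums k such variates to obtain an Erlang(k, β) variate. The function names, seed, and sample size are assumptions made for illustration.

```python
import math
import random

def exponential_variate(beta, rng):
    """Inverse transform: F(x) = 1 - exp(-x/beta) gives x = -beta * ln(1 - U)."""
    return -beta * math.log(1.0 - rng.random())

def erlang_variate(k, beta, rng):
    """Sum of k independent exponential variates, i.e., Gamma(k, beta) for integer k."""
    return sum(exponential_variate(beta, rng) for _ in range(k))

rng = random.Random(42)  # fixed seed so the run is repeatable
k, beta = 3, 2.0
sample = [erlang_variate(k, beta, rng) for _ in range(20_000)]

sample_mean = sum(sample) / len(sample)
sample_var = sum((x - sample_mean) ** 2 for x in sample) / (len(sample) - 1)

# Theory: mean = k * beta = 6 and variance = k * beta**2 = 12.
print(sample_mean, sample_var)
```

For a non-integer shape parameter, the inverse distribution function has no closed form, which is why the Excel approach relies on GAMMAINV.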

See beta distribution, chi-square distribution, Erlang distribution, exponential distribution, gamma function, inverse transform method, negative binomial distribution, partial expectation, probability density function, probability distribution.

gamma function – A mathematical extension of the factorial function to real and complex numbers.

The gamma function is $\Gamma(\alpha) = \int_0^\infty t^{\alpha-1} e^{-t}\,dt$ for α > 0. When α is a non-negative integer, α! = Γ(α + 1). Note that ![](). In Excel, the gamma function can be computed as EXP(GAMMALN(x)).

The gamma function is used in several probability distributions, including the beta, F, gamma, chi-square, and Weibull, and is also useful for evaluating ratios of factorials. The factorial of a positive integer n is defined as n! = n·(n - 1)·(n - 2) ... 1, where 0! ≡ 1. The gamma function generalizes this to all non-negative real numbers where α! = Γ(α + 1).
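The link between the gamma function and factorials, and the use of the log gamma function for ratios of factorials, can be sketched with Python's standard `math` module. The helper name `factorial_ratio` is hypothetical; working in log space mirrors the Excel expression EXP(GAMMALN(x)) and avoids overflow when the individual factorials are very large.

```python
import math

# For non-negative integers n, the gamma function satisfies n! = Γ(n + 1).
for n in range(6):
    print(n, math.factorial(n), math.gamma(n + 1))

def factorial_ratio(a, b):
    """Return a!/b! computed in log space as exp(lnΓ(a + 1) - lnΓ(b + 1))."""
    return math.exp(math.lgamma(a + 1) - math.lgamma(b + 1))

# 10!/8! = 10 * 9 = 90
print(factorial_ratio(10, 8))

# 200! is too large for a float, but the ratio 200!/198! = 200 * 199 is not.
print(factorial_ratio(200, 198))

# The gamma function also accepts non-integer arguments.
print(math.gamma(2.5))
```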