S

S&OP – See Sales & Operations Planning (S&OP).

SaaS – See Software as a Service (SaaS).

safety – Freedom from the occurrence or risk of injury, danger, or loss.

See DuPont STOP, error proofing, Occupational Safety and Health Administration (OSHA), risk management, risk mitigation.

safety capacity – Capacity that is available in case of an emergency; sometimes called a capacity cushion.

Safety capacity is planned “extra” capacity and can be measured as the difference between the planned capacity and planned demand. Examples include a medical doctor “on call,” a supervisor who can help in time of need, or capacity for overtime. Safety capacity is not just having too much capacity; it is capacity that is not actually working but can be called to work in case of emergency. Although safety capacity, safety stock, and safety leadtime can all be used to protect a firm from uncertain demand and supply, they are not identical concepts.

See capacity, safety leadtime, safety stock.

safety factor – See safety stock.

safety leadtime – The difference between the planned and average time required for a task or manufacturing order; called safety time by Wallace and Stahl (2003).

Safety leadtime is the “extra” planned leadtime used in production planning and purchasing to protect against fluctuations in leadtime. The same concept can be used in project scheduling. Safety leadtime should absorb the variability in the leadtimes. Whereas safety stock should be used to absorb variability in the demand and yield, safety leadtime should be used to protect against uncertainty in leadtimes or task times. Critical chain scheduling uses a buffer time to protect tasks assigned to constrained resources so they are almost never starved for work.

For example, if it takes a student an average of 30 minutes to get to school, and the student plans to leave 35 minutes before a class begins, the student will arrive 5 minutes early on average. This means that the student will have a safety leadtime of 5 minutes.

See critical chain, Master Production Schedule (MPS), purchasing leadtime, safety capacity, safety stock, sequence-dependent setup time, slack time.

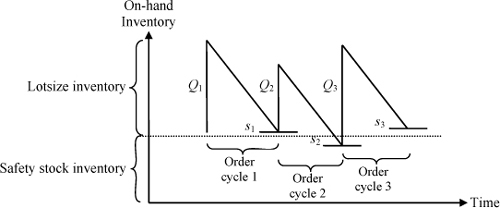

safety stock – The planned or actual amount of “extra” inventory used to protect against fluctuations in demand or supply; the planned or actual inventory position just before a replenishment order is received in inventory; sometimes called buffer stock, reserve stock, or inventory buffer.

The business case for safety stock: Safety stock is management’s primary control variable for balancing carrying cost and service levels. If the safety stock is set too high, the inventory carrying cost will be too high. If safety stock is set too low, the shortage cost will be too high. Safety stock is needed in nearly all systems to protect against uncertain demand, leadtime, and yield that affect demand, supply, or both demand and supply.

Definition of safety stock: Managers often confuse safety stock with related concepts, such as an order-up-to level (for determining the lotsize), a reorder point (a “minimum” inventory for triggering a new order), or the average inventory. Safety stock is the average inventory when a new order is received. It is not the minimum, maximum, or average inventory. The figure below shows the safety stock as the lowest point on each of the three order cycles. The actual safety stock over this time period is the average of these three values.

Cycle inventory and safety stock inventory

For stationary demand, the average inventory is the safety stock plus the average lotsize inventory. Given that the average lotsize inventory is half the average lotsize, the average inventory is $SS + \bar{Q}/2$ units, where $SS$ is the safety stock and $\bar{Q}$ is the average lotsize. Therefore, safety stock can be estimated as $SS = \bar{I} - \bar{Q}/2$, where $\bar{I}$ is the average inventory.
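The two ways of estimating actual safety stock described above (averaging the inventory just before each receipt, or subtracting half the average lotsize from the average inventory) can be sketched as follows. This is a minimal illustration; the function names and the sample numbers are hypothetical and not from the source.

```python
from statistics import mean

def safety_stock_from_cycle_lows(inventory_before_receipts):
    """Average of the inventory levels observed just before each receipt."""
    return mean(inventory_before_receipts)

def safety_stock_from_average_inventory(avg_inventory, avg_lotsize):
    """Average inventory minus the average lotsize inventory (half the lotsize)."""
    return avg_inventory - avg_lotsize / 2

# Hypothetical data: three order cycles with lows of 40, 55, and 55 units.
print(safety_stock_from_cycle_lows([40, 55, 55]))        # 50
# Hypothetical data: average inventory of 150 units, average lotsize of 200.
print(safety_stock_from_average_inventory(150, 200))     # 50.0
```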

Safety stock in different types of systems: The reorder point system, the order-up-to (target) inventory system, the Time Phased Order Point (TPOP) system, and MRP systems manage inventory with a planned (or forecasted) demand during the replenishment leadtime. Safety stock protects the organization from demand during the leadtime that is greater than planned. Therefore, safety stock should be based on the standard deviation of demand during leadtime and not just the average demand during leadtime.

Safety stock and days supply: It is a common practice to define safety stock in terms of a constant days supply. Although it is fine to communicate safety stocks in terms of days supply, it is a bad idea to use a constant days supply to set safety stock, unless all items have the same leadtime, average demand, standard deviation of demand, yield, and stockout cost.

The equation for safety stock: Define X as the demand during the replenishment leadtime. Safety stock is then $SS = z\sigma_X$, where z is the safety factor, which is usually between 1 and 4, and $\sigma_X$ is the estimated standard deviation of demand during replenishment leadtime. Assuming that demand is serially independent, the standard deviation of demand during the leadtime is $\sigma_X = \sigma_D\sqrt{L}$, where L is the fixed planned replenishment leadtime and $\sigma_D$ is the standard deviation of demand per period. Safety stock is then $SS = z\sigma_D\sqrt{L}$.
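As a small worked illustration of the basic model (safety factor times the standard deviation of demand per period times the square root of a fixed leadtime), the calculation might look like this. The function name and the example values are assumptions chosen for illustration only.

```python
import math

def safety_stock(z, sigma_d, leadtime):
    """Safety stock for a fixed leadtime: z * sigma_D * sqrt(L).

    sigma_d and leadtime must use the same time unit (e.g., weeks).
    """
    if sigma_d < 0 or leadtime < 0:
        raise ValueError("sigma_d and leadtime must be non-negative")
    return z * sigma_d * math.sqrt(leadtime)

# Hypothetical item: sigma_D = 20 units per week, L = 4 weeks, z = 2.
print(safety_stock(z=2.0, sigma_d=20.0, leadtime=4.0))  # 80.0
```

Note that doubling the leadtime does not double the safety stock; it grows with the square root of L under the serial independence assumption.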

Safety stock and the leadtime parameter L: The basic safety stock model assumes that leadtime (L) is constant. More complicated models treat leadtime as a random variable and use the average leadtime and the standard deviation of the leadtime. Therefore, leadtime can be handled in the safety stock model in three ways: (1) use a constant leadtime at the average, (2) use a constant leadtime at a value well above the average, or (3) use the safety stock based on the standard deviation of the leadtime, e.g., $SS = z\sqrt{\mu_L\sigma_D^2 + \mu_D^2\sigma_L^2}$, where $\mu_L$ and $\sigma_L$ are the mean and standard deviation of the leadtime and $\mu_D$ and $\sigma_D$ are the mean and standard deviation of demand per period. When the review period P is greater than zero, the equation is $SS = z\sqrt{(\mu_L + P)\sigma_D^2 + \mu_D^2\sigma_L^2}$ (Silver, Pyke, and Peterson 1998).
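The third option (treating leadtime as a random variable) can be sketched with the commonly used variance formula for demand during a random leadtime, assuming that demand per period and leadtime are independent. The symbols mu_d, sigma_d (mean and standard deviation of demand per period) and mu_l, sigma_l (mean and standard deviation of leadtime) and the example values are assumptions for this illustration.

```python
import math

def safety_stock_random_leadtime(z, mu_d, sigma_d, mu_l, sigma_l, review_period=0.0):
    """SS = z * sqrt((mu_L + P) * sigma_D^2 + mu_D^2 * sigma_L^2).

    Assumes demand per period and leadtime are independent and that all
    time-based inputs share one time unit. review_period is P (0 for
    continuous review).
    """
    variance = (mu_l + review_period) * sigma_d ** 2 + mu_d ** 2 * sigma_l ** 2
    return z * math.sqrt(variance)

# Hypothetical item: demand of 100 +/- 20 units per week, leadtime of 4 +/- 0.5 weeks.
print(round(safety_stock_random_leadtime(2.0, 100.0, 20.0, 4.0, 0.5), 1))
# With no leadtime variability, the result reduces to z * sigma_D * sqrt(L) = 80.
print(safety_stock_random_leadtime(2.0, 100.0, 20.0, 4.0, 0.0))
```

In this hypothetical case, leadtime variability contributes more to the variance than demand variability does, which is why approach (1) alone can understate the safety stock.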

Safety stock versus safety leadtime: As a general rule, safety stock should be used to absorb the variability in the demand (or yield), and safety leadtime should be used to protect against uncertainty in leadtimes. Whereas safety stock requires more inventory, safety leadtime requires that inventory arrive earlier.

The safety factor parameter z: The safety factor (z) determines the service level, where the service level increases as z increases. Many academic textbooks are imprecise on this subject. At least three approaches can be used to calculate the safety factor: (1) the order cycle service level approach, (2) the unit fill rate approach, and (3) the economic approach. Each of these approaches is described briefly below.

The order cycle service level approach is commonly taught in texts and implemented in major software systems (including SAP). This approach defines the service level (SL) as the probability of having no shortage event on one order cycle. An order cycle is defined as the time between placing orders, and the average number of order cycles per year is A/Q, where A is the annual demand in units, and Q is the average order quantity. The safety factor for this approach is based on the standard normal distribution, i.e., $z = F^{-1}(SL)$, where $F^{-1}$ is the inverse of the standard normal cumulative distribution function. This z value can be easily calculated in Excel using NORMSINV(SL). The order cycle service level approach does not consider how many order cycles are expected per year or how many units might be short in a stockout event and is therefore not recommended.
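Outside of Excel, the same inverse standard normal lookup can be done with Python's standard library. This is a minimal sketch that interprets SL as the probability of no shortage in an order cycle; the function name is hypothetical.

```python
from statistics import NormalDist

def safety_factor_cycle_service(service_level):
    """Safety factor z such that P(Z <= z) = service_level for standard normal Z."""
    if not 0.0 < service_level < 1.0:
        raise ValueError("service_level must be strictly between 0 and 1")
    return NormalDist().inv_cdf(service_level)

print(round(safety_factor_cycle_service(0.95), 3))  # 1.645
```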

The unit fill rate service level approach for setting the safety factor defines the service level as the expected percentage of units demanded that are immediately available from stock. This approach defines safety stock closer to the actual economics; however, this approach is much more complicated than the order cycle service level approach. No matter which service level approach is used, it is not clear how to set the best service level.
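One common textbook formulation of the unit fill rate approach, shown here only as a sketch, assumes normally distributed demand during leadtime and approximates the fill rate as 1 − σX·G(z)/Q, where G(z) is the unit normal loss function (the partial expectation). That formula, the function names, and the example values are assumptions for this illustration and are not given in this entry.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()

def unit_normal_loss(z):
    """G(z) = pdf(z) - z * (1 - cdf(z)): expected units short per unit of sigma."""
    return _STD_NORMAL.pdf(z) - z * (1.0 - _STD_NORMAL.cdf(z))

def safety_factor_fill_rate(fill_rate, order_qty, sigma_x, lo=-4.0, hi=6.0):
    """Find z with sigma_x * G(z) / order_qty = 1 - fill_rate by bisection."""
    target = (1.0 - fill_rate) * order_qty / sigma_x
    if not unit_normal_loss(hi) <= target <= unit_normal_loss(lo):
        raise ValueError("target fill rate is outside the searchable range")
    for _ in range(100):
        mid = (lo + hi) / 2.0
        # G(z) decreases as z increases, so move the lower bound up
        # while the expected shortage is still too large.
        if unit_normal_loss(mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

# Hypothetical item: 98% fill rate, Q = 200 units, sigma_X = 40 units.
z = safety_factor_fill_rate(0.98, 200.0, 40.0)
print(round(z, 2))
```

Unlike the order cycle approach, the resulting z depends on the order quantity: larger lotsizes mean fewer cycles and therefore a lower safety factor for the same fill rate.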

The economic approach for setting the safety factor z requires an estimate of the shortage or stockout cost per unit short (stocked out). If management is able to estimate this cost, the newsvendor model can be used to determine the safety factor that will minimize the expected total incremental cost (carrying cost plus shortage cost). This model balances the cost of having to carry a unit in safety stock inventory and the cost of having a unit shortage. Unfortunately, it is difficult to estimate the cost of a shortage (or a stockout) for one unit at the retail level. It is even more difficult to translate a shortage (or a stockout) into a cost at a distribution center, factory warehouse, or finished goods inventory.
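In its simplest single-period form, the newsvendor model sets the probability of not stocking out equal to the critical ratio, shortage cost / (shortage cost + carrying cost). The sketch below applies that ratio to a standard normal distribution; it assumes both costs are expressed per unit over the same period, and the function name and example costs are hypothetical.

```python
from statistics import NormalDist

def safety_factor_newsvendor(shortage_cost, carrying_cost):
    """Safety factor z from the newsvendor critical ratio.

    shortage_cost: cost per unit short (assumed estimate).
    carrying_cost: cost of carrying one unit over the same period (assumed).
    """
    if shortage_cost <= 0 or carrying_cost <= 0:
        raise ValueError("costs must be positive")
    critical_ratio = shortage_cost / (shortage_cost + carrying_cost)
    return NormalDist().inv_cdf(critical_ratio)

# Hypothetical costs: $9 per unit short versus $1 to carry a unit.
print(round(safety_factor_newsvendor(9.0, 1.0), 3))  # 1.282
```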

The periodic review system: For a periodic review system with a time between reviews of P time periods, L should be replaced with L + P in all equations. In other words, when ordering only every P time periods, the safety stock is $SS = z\sigma_D\sqrt{L + P}$. Note that P = 0 for a continuous review system.

The Time Phased Order Point (TPOP) system: If a firm places replenishment orders based on a TPOP system (based on forecasts), the safety stock should be defined in terms of the standard deviation of the forecast error rather than the standard deviation of demand. Unfortunately, many managers, systems designers, professors, students, and textbooks do not understand this important concept.

See aggregate inventory management, autocorrelation, cycle stock, demand during leadtime, Economic Order Quantity (EOQ), Everyday Low Pricing (EDLP), goodwill, inventory management, lotsizing methods, marginal cost, newsvendor model, order cycle, partial expectation, periodic review system, purchasing leadtime, reorder point, replenishment order, safety capacity, safety leadtime, service level, square root law for safety stock, stockout, Time Phased Order Point (TPOP), warehouse.

Sales & Operations Planning (S&OP) – A business process used to create the Sales & Operations Plan, which is a consensus plan involving Marketing/Sales, Operations/Logistics, and Finance that balances market demand and resource capability; also called Sales, Inventory & Operations Planning (SI&OP).

S&OP is an important process in virtually all firms, but it is particularly critical in manufacturing firms. Fundamentally, S&OP is about finding the right balance between demand and supply. If demand is greater than supply, customers will be disappointed, customer satisfaction will decline, and sales will be lost. If supply is greater than demand, cost will be high.

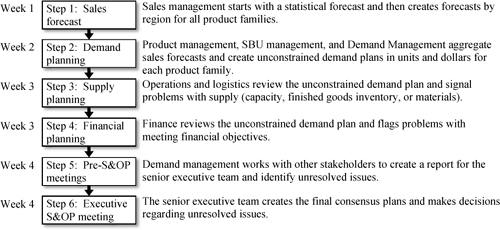

The diagram below presents an example of an S&OP process. This diagram is based on concepts found in Wallace (2004) and Ling and Goddard (1995). However, it should be noted that each firm will implement S&OP in a different way. Most experts recommend that the S&OP process be repeated each month. Step 1 usually begins with statistical forecasts at either a product or product family level. These forecasts are then modified with market intelligence from sales management, usually for each region. However, some experts argue that providing statistical forecasts to sales management gives them an easy way out of doing the hard work of creating the forecasts. Statistical forecasts are useful for the majority of products, and most experts consider it a waste of time to not use the statistical forecasts as a starting point.

In step 2, the product management and the management of the Strategic Business Unit (SBU) work with the demand management organization to convert the sales forecasts into an unconstrained demand plan, which factors in higher-level issues, such as industry trends, pricing, and promotion strategy. The demand plan is expressed in both units and dollars. In step 3, operations and logistics have the opportunity to check that the supply (either new production or inventory) is sufficient to meet the proposed demand plan. If major changes need to be made, they can be made in steps 4 or 5. In step 4 (parallel to step 3), finance reviews the demand plan to ensure that it meets the firm’s financial objectives (revenues, margins, profits) and creates a financial plan in dollars. In step 5, the demand management organization coordinates with the other organizations to put together a proposed Sales & Operations Plan that is a consensus plan from the demand plan, supply plan, and financial plan. Finally, in step 6, the executive team meets to finalize the S&OP plan. At every step in the process, assumptions and issues are identified, prioritized, and passed along to the next step. These assumptions and issues play important roles in steps 5 and 6.

An example S&OP process

Source: Professor Arthur V. Hill

See aggregate inventory management, Business Requirements Planning (BRP), Capacity Requirements Planning (CRP), chase strategy, closed-loop MRP, demand management, forecasting, level strategy, Master Production Schedule (MPS), product family, production planning, Resource Requirements Planning (RRP), Rough Cut Capacity Planning (RCCP), time fence.

Sales Inventory & Operations Planning (SI&OP) – See Sales & Operations Planning (S&OP).

salvage value – The value of an item when it is scrapped instead of sold; also known as scrap value.

Retailers and manufacturers can usually find salvage firms or discounters to buy obsolete inventory. Some companies use salvage firms to buy unsold electronic parts. The salvage firm pays a low price (e.g., $0.01 per pound) and keeps the parts in inventory for years. In the rare case of a demand, the salvage firm sells the parts back at the original book value. Large retailers often discount older products in their stores, but if that strategy does not work, they often turn to branded and unbranded Internet sales channels.

See scrap.

sample size calculation – A statistical method for estimating the number of observations that need to be collected to create a confidence interval that meets the user’s requirements.

Managers and analysts often need to estimate the value of a parameter, such as an average time or cost. However, with only a few observations, the estimates might not be very accurate. Therefore, it is necessary to know both the estimated mean and a measure of the accuracy of that estimate.

Confidence intervals can help with this problem. A confidence interval is a statement about the reliability of an estimate. For example, a confidence interval on the time required for a task might be expressed as “25 hours plus or minus 2 hours with a 95% confidence level,” or more concisely “25 ± 2 hours.” The first number (25) is called the “sample mean.” The second number (2) is called the “half-width” of the confidence interval. The “95% confidence” suggests that if we were to make this estimate many times, the true mean would be included (“covered”) in the confidence interval about 95% of the time. It is sometimes stated as, “We are 95% sure that the confidence interval contains the mean.”

Sometimes some observations have already been collected, and the goal is to develop a confidence interval from these observations. At other times, the required half-width is known, and it is necessary to find the number of observations needed to compute this half-width with a certain degree of confidence. It is also possible to express the half-width as a percentage. The five most common problems related to confidence intervals are:

• Problem 1: Create a confidence interval given that n observations are available.

• Problem 2: Find the sample size needed to create the desired confidence interval with a prespecified half-width.

• Problem 3: Find the sample size needed to create the desired confidence interval with a prespecified half-width expressed as a decimal percentage.

• Problem 4: Find the sample size needed to create the desired confidence interval on a proportion with a prespecified half-width percentage.

• Problem 5: Develop a confidence interval for a stratified random sample.

The entry in this encyclopedia on confidence intervals addresses Problem 1. For Problem 2, the goal is to find the smallest sample size n that is necessary to achieve a two-tailed 100(1 − α)% confidence interval with a prespecified half-width of h units.

Step 0. Define parameters – Specify the desired half-width h (in units), the estimated size of the population N, and the confidence level parameter α. If the size of N is large but unknown, use an extremely large number (e.g., $N = 10^{10}$). Compute $z_{\alpha/2}$ = NORMSINV(1 − α/2).

Step 1. Take a preliminary sample to estimate the sample mean and standard deviation – Take a preliminary sample of $n_0$ observations, where $n_0 \ge 9$ observations, and estimate the sample mean and standard deviation ($\bar{x}$ and s) from this sample.

Step 2. Estimate the required sample size – Compute $n^* = (z_{\alpha/2}\, s/h)^2$. Round up to be conservative. If the sample size is large relative to the total population N (i.e., $n^*/N > 0.05$), use $n^* = (z_{\alpha/2}\, s)^2 / \left(h^2 + (z_{\alpha/2}\, s)^2/N\right)$ instead. (This assumes that $n^* \ge 30$, so it is appropriate to use a z value; otherwise use the t value.)

Step 3. Take additional observations – If $n^* > n_0$, take $n^* - n_0$ additional observations.

Step 4. Recompute the sample mean and sample standard deviation – Recompute $\bar{x}$ and s from the entire n observations.

Step 5. Compute the half-width and create the confidence interval – Compute the half-width $h' = z_{\alpha/2}\, s/\sqrt{n}$. If the sample size n is large relative to the total population N (i.e., n/N > 0.05), use $h' = z_{\alpha/2}\,(s/\sqrt{n})\sqrt{(N - n)/N}$ instead. The confidence interval is then $\bar{x} \pm h'$.

Step 6. Check results – Make sure that $h \ge h'$; if not, repeat steps 2 to 6.

The larger the number of observations (n), the narrower the confidence interval. The goal is to find the lowest value of n that will create the desired confidence interval. If n observations are selected randomly many times from the population, the confidence interval ($\bar{x} \pm h'$) will contain the true mean about 100(1 − α)% of the time.
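The sample size and half-width calculations in Steps 2 and 5 can be sketched in Python as follows. The function names and example values are hypothetical, the finite population adjustment to the half-width uses the factor sqrt((N − n)/N), which is an assumption chosen to agree with the Step 2 formula, and a z value is used throughout (so the sketch assumes n is at least about 30).

```python
import math
from statistics import NormalDist

def required_sample_size(s, h, alpha=0.05, population=10**10):
    """Step 2: smallest n for a half-width of h, given a preliminary s."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    n_star = (z * s / h) ** 2
    if n_star / population > 0.05:
        # Sample is large relative to the population: apply the adjustment.
        n_star = (z * s) ** 2 / (h ** 2 + (z * s) ** 2 / population)
    return math.ceil(n_star)  # round up to be conservative

def half_width(s, n, alpha=0.05, population=10**10):
    """Step 5: achieved half-width for n observations."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    hw = z * s / math.sqrt(n)
    if n / population > 0.05:
        hw *= math.sqrt((population - n) / population)
    return hw

# Hypothetical task times: preliminary s = 10 hours, desired half-width 2 hours.
n = required_sample_size(s=10.0, h=2.0)
print(n)                                  # 97
print(half_width(s=10.0, n=n) <= 2.0)     # True (Step 6 check)
# Same requirement with a small population of N = 500.
print(required_sample_size(s=10.0, h=2.0, population=500))  # 81
```

In practice s is recomputed from the full sample in Step 4, so the achieved half-width can differ from the plan and Step 6 may require another pass.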

See central limit theorem, confidence interval, dollar unit sampling, sampling, standard deviation.

sampling – The selection of items from a population to help a decision maker make inferences about the population.

Sampling is frequently used when it is impossible, impractical, or too costly to evaluate every unit in the population. Sampling allows decision makers to make inferences (statements) about the population from which the sample is drawn. A sufficiently large random sample tends to have characteristics close to those of the population.

One major issue in developing a sampling plan is the determination of the number of observations in the sample (the sample size) needed to achieve a desired confidence level and maximum allowable error. See the sample size calculation entry for more details.

Probability sampling includes simple random sampling, systematic sampling, stratified sampling, probability proportional to size sampling, and cluster or multistage sampling. Stratified sampling (also known as stratification) defines groups or strata as independent subpopulations, conducts random samples in each of these strata, and then uses information about the population to make statistical inferences about the overall population. Stratified sampling has several advantages over simple random sampling. First, stratified sampling makes it possible for researchers to draw inferences about groups that are particularly important. Second, stratified sampling can significantly tighten the confidence interval on the mean and reduce the sample size to achieve a predefined confidence interval. Finally, different sampling approaches can be applied to each stratum.

See acceptance sampling, Analysis of Variance (ANOVA), central limit theorem, confidence interval, consumer’s risk, dollar unit sampling, hypergeometric distribution, Lot Tolerance Percent Defective (LTPD), normal distribution, operating characteristic curve, producer’s risk, sample size calculation, sampling distribution, standard deviation, t-test, work sampling.

sampling distribution – The probability distribution for a statistic, such as the sample mean, based on a set of randomly selected units from a larger population; also called the finite-sample distribution.

A sampling distribution can be thought of as a relative frequency distribution from a number of samples taken from a larger population. This relative frequency distribution approaches the sampling distribution as the number of samples approaches infinity. For discrete (integer) variables, the heights of the distribution are probabilities (also called the probability mass). For continuous variables, the intervals have a zero width, and the height of the distribution at any point is called the probability density.

The standard deviation of the sampling distribution of the statistic is called the standard error. According to the central limit theorem, the standard error for the sample mean is always $s/\sqrt{n}$, where s is the standard deviation for the sample. Other statistics, such as the sample median, sample maximum, sample minimum, and the sample standard deviation, have different sampling distributions.

For example, an analyst is trying to develop a confidence interval on the average waiting time for a call center where the waiting time follows an exponential distribution. The analyst collects n = 101 sample waiting times and finds the sample mean $\bar{x}$ and sample standard deviation s (both in minutes). According to the central limit theorem, the sampling distribution for the sample mean is approximately normal for a sample of this size, regardless of the underlying distribution. The sampling distribution for the sample mean, therefore, is approximately normal with mean $\bar{x}$ and standard deviation $s/\sqrt{n}$ minutes, with a 95% confidence interval of (5.26, 5.74) minutes.
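The idea of a sampling distribution can be illustrated with a small simulation: draw many samples of size n from an exponential distribution and examine how the sample means are distributed. The sketch below uses a hypothetical mean waiting time of 5 minutes (not the data from the example above), and the function name and seed are arbitrary choices.

```python
import math
import random
from statistics import mean, stdev

def simulate_sample_means(n, num_samples, mean_wait, seed=1):
    """Return the sample means of num_samples exponential samples of size n."""
    rng = random.Random(seed)
    rate = 1.0 / mean_wait  # expovariate takes the rate, i.e., 1 / mean
    return [
        mean(rng.expovariate(rate) for _ in range(n))
        for _ in range(num_samples)
    ]

means = simulate_sample_means(n=101, num_samples=2000, mean_wait=5.0)
# For an exponential distribution, the standard deviation equals the mean.
theoretical_se = 5.0 / math.sqrt(101)

print(f"mean of sample means:    {mean(means):.3f}")
print(f"std dev of sample means: {stdev(means):.3f}")
print(f"sigma / sqrt(n):         {theoretical_se:.3f}")
```

The standard deviation of the simulated sample means should be close to the standard error, and a histogram of the means would look roughly bell-shaped even though individual waiting times are strongly skewed.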

See central limit theorem, confidence interval, probability density function, probability distribution, probability mass function, sampling.

sand cone model – An operations strategy model that suggests a hierarchy for the operations capabilities of quality, reliability, speed, and cost, where the quality is the base of the sand cone, followed by reliability, speed, and cost.

In the short term, organizations often have to make trade-offs between cost, speed, reliability, and quality. For example, speed can sometimes be increased by using premium freight, which adds cost. However, Ferdows and De Meyer (1990) argue that in the longer run, firms can avoid trade-offs and build cumulative capabilities in all four areas. They argue that management attention and resources should first go toward enhancing quality, then dependability (reliability), then speed (flexibility), and finally cost (efficiency). They argue further that capabilities are built one on top of the other like a sand cone, where each lower layer of sand must be extended to support any increase for a higher layer. The drawing above depicts this relationship.

The sand cone model

Adapted from Ferdows, K. & A. De Meyer (1990).

Pine (1993) makes a similar argument in his book on mass customization but changes the order to cost → quality → flexibility. He argues that in the life cycle of a product, such as 3M’s Post-it Notes, the first priority was to figure out a way to make it profitable. The first small batch of Post-it Notes probably cost 3M about $50,000; but during the first year, 3M was able to find ways to automate production and bring the cost down substantially. The second priority was then to increase conformance quality to ensure the process was reliable. Some could argue that this was essentially a continuation of the effort to reduce cost. The third and last priority was to increase variety from canary yellow in three sizes to many colors and sizes.

See mass customization, operations strategy, premium freight.

SAP – A leading Enterprise Resources Planning (ERP) software vendor; the full name is SAP AG; SAP is the German acronym for Systeme, Anwendungen, Produkte in der Datenverarbeitung, which translated to English means Systems, Applications, Products in Data Processing.

SAP was founded in Germany in 1972 by five ex-IBM engineers. SAP is headquartered in Walldorf, Germany, and has subsidiaries in more than 50 countries. SAP America, which has responsibility for North America, South America, and Australia, is headquartered just outside Philadelphia.

See ABAP (Advanced Business Application Programming), Advanced Planning and Scheduling (APS), Enterprise Resources Planning (ERP).

satisfaction – See service quality.

satisficing – The effort needed to obtain an outcome that is good enough but is not exceptional.

In contrast to satisficing action, maximizing action seeks the biggest and optimizing action seeks the best. In recent decades, doubts have been expressed about the view that in all rational decision making the agent seeks the best result. Instead, some argue it is often rational to seek to satisfice (i.e., to get a good result that is good enough although not necessarily the best). The term was introduced by Simon (1957). (Adapted from The Penguin Dictionary of Philosophy, ed. Thomas Mautner, found at www.utilitarianism.com/satisfice.htm, April 1, 2011.)

See bounded rationality, learning curve, learning organization.

SBU – See Strategic Business Unit (SBU).

scalability – The ability to increase capacity without adding significant cost, or the ability to grow with the organization.

For example, software is said to be “scalable” if it can handle a significant increase in transaction volume.

See agile manufacturing, flexibility, resilience.

scale count – An item count based on the weight determined by a scale.

A scale count is often more economical than performing an actual physical count. This is particularly true for small inexpensive parts where slight inaccuracy is not important. Counting accuracy depends on the scale accuracy, the variance of the unit weight, and the variance of the container tare weight. Of course, the container tare weight should be subtracted from the total weight.
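The arithmetic behind a scale count can be sketched as follows: subtract the container tare weight from the gross weight and divide by the average unit weight. The function name and the example weights are hypothetical.

```python
def scale_count(gross_weight, tare_weight, unit_weight):
    """Estimate the number of items from weights given in the same unit."""
    if unit_weight <= 0:
        raise ValueError("unit_weight must be positive")
    net_weight = gross_weight - tare_weight  # remove the container weight
    if net_weight < 0:
        raise ValueError("tare_weight exceeds gross_weight")
    return round(net_weight / unit_weight)

# Hypothetical bin: 12.6 kg gross, 0.6 kg container, 24 g (0.024 kg) per part.
print(scale_count(12.6, 0.6, 0.024))  # 500
```

Rounding to the nearest whole item hides small errors, but the estimate degrades as the variance of the unit weight or the tare weight grows relative to the unit weight.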

See cycle counting, tare weight.

scales of measurement – The theory of scale types.

According to Wikipedia, psychologist Stanley Smith Stevens developed what he called levels of measurement that include:

• Nominal scale – Categorical, labels (however, some critics claim that this is not a scale).

• Ordinal scale – Rank order.

• Interval scale – Any quantitative scale, such as temperature, that has an arbitrary zero point; although differences between values are meaningful, ratios are not.

• Ratio scale – Any quantitative scale, such as a weight, that does not have an arbitrary zero point; ratios are meaningful.

See operations performance metrics.

scatter diagram – A graphical display of data showing the relationship between two variables; also called scatterdiagram and scatterplot.

The scatter diagram is usually drawn as a set of points on a graph. When the points appear to fall along a line (e.g., from bottom left to top right), the user might hypothesize a linear relationship.

See linear regression, Root Cause Analysis (RCA), run chart, seven tools of quality.

scheduled receipt – See open order.

scientific management – An approach to management and industrial organization developed by Frederick Winslow Taylor (1856-1915) in his monograph The Principles of Scientific Management (Taylor 1911).

Taylor believed that every process had “one best way” and developed important industrial engineering and operations management approaches, such as the time and motion studies, to find that best way. For example, in one of Taylor’s most famous studies, he noticed that workers used the same shovel for all materials. His research found that the most effective load was 21.5 pounds, which led him to design different shovels for each material for that weight.

Taylor made many important contributions to the field of operations management, emphasizing time and motion studies, division of labor, standardized work, planning, incentives, management of knowledge work, and selection and training. Taylor also influenced many important thought leaders, including Carl Barth, H. L. Gantt, Harrington Emerson, Morris Cooke, Hugo Münsterberg (who created industrial psychology), Frank and Lillian Gilbreth, Harlow S. Person, and James O. McKinsey, as well as many important organizations, such as Harvard University’s Business School, Dartmouth’s Amos Tuck School, University of Chicago, Purdue University, McKinsey (an international consulting firm), and the American Society of Mechanical Engineers. His work also influenced industrial development in many other nations, including France, Switzerland, and the Soviet Union.

One criticism of scientific management is that it separated managerial work (e.g., planning) and direct labor. This led to jobs where workers were not expected to think. In contrast, many successful Japanese firms stress the need to gather suggestions from workers and require managers to begin their careers on the shop floor.

See best practices, division of labor, human resources, job design, standardized work, time study, work measurement.

scope – See project management, scope creep.

scope creep – The tendency for project boundaries and requirements to expand over time, often resulting in large, unmanageable, and never-finished projects.

Scope creep is reflected in subtle changes in project requirements over time. For example, a software project might start out as a simple table that needs to be accessed by just a single type of user. However, as the user group becomes engaged in the project, the “scope” increases to include a larger and more complicated database with multiple tables and multiple types of users.

One of the main keys to successful project management is avoiding scope creep. If the users want to increase the scope of a project, they should be required to either go back and change the charter (and get the appropriate signed approvals) or defer the changes to a new project. If management does not manage scope creep, the project will likely not be completed on time or within budget.

In a consulting context, scope creep is additional work outside the project charter that the client wants for no additional charge. If the client is willing to change the charter and pay for the work, it is an “add-on sale” and is not considered scope creep.

See focused factory, project charter, project management, project management triangle, scoping.

scoping – The process of defining the limits (boundaries) for a project.

Defining the project scope is a critical determinant of the success of a project. When scoping a project, it is just as important to define what is not in scope as it is to define what is in scope.

See project charter, project management, scope creep.

SCOR Model – A process reference model that has been developed and endorsed by the Supply-Chain Council as the cross-industry, standard, diagnostic tool for supply-chain management, spanning from the supplier’s supplier to the customer’s customer; acronym for Supply-Chain Operations Reference.

The SCOR Model

The SCOR Model allows users to address, improve, and communicate supply chain management practices within and between all interested parties. The SCOR framework attempts to combine elements of business process design, best practices, and benchmarking. The basic SCOR model is shown above.

The SCOR Model was developed to describe the business activities associated with all phases of satisfying a customer’s demand. It can be used to describe and improve both simple and complex supply chains using a common set of definitions. An overview of the SCOR Model can be found on the Supply Chain Council webpage http://supply-chain.org. Some of the benefits claimed for the SCOR Model include (1) standardized terminology and process descriptions, (2) predefined performance measures, (3) best practices, and (4) basis for benchmarking a wide variety of supply chain practices.

See benchmarking, bullwhip effect, Supply Chain Council, supply chain management, value chain.

scrap – Material judged to be defective and of little economic value.

Scrap is any material that is obsolete or outside specifications and cannot be reworked into a sellable product. Scrap should be recycled or disposed of properly according to environmental laws. A scrap factor (or yield rate) can be used to inflate the “quantity per” to allow for yield loss during a manufacturing process. The scrap value (or salvage value) is credited to factory overhead or the job that produced the scrap.

See conformance quality, cost of quality, red tag, rework, salvage value, yield.

scree plot – See cluster analysis.

scrum – A method of implementing agile software development, where teams meet on a daily basis, and computer code is delivered in two to four week “sprints.”

Scrum is similar to lean thinking in many ways. The fundamental concept of scrum is that the organization produces computer code in small “chunks” (like small lotsizes) that can be quickly evaluated and used by others. This allows for early detection of defects, a key operations management concept. This is consistent with the lean concept of reducing lotsizes and “one-piece flow.” Scrum is also similar to lean in that it requires short stand-up meetings and clear accountabilities for work. Scrum defines three roles and three meetings:

The three roles:

1. Product owner – This person manages the product’s requirements and divides the work among team members and among sprints.

2. Scrum master – This person runs the daily scrum meeting.

3. Team members – These are the software developers responsible for delivering code that meets the requirements.

The three meetings:

1. Sprint Planning Meeting – The product owner and team members meet at the start of a sprint to plan this period’s work and identify any issues that may impact the program.

2. Daily Scrum Meeting – This 15-minute meeting is led by the scrum master, and each team member is expected to answer three questions: “What did you accomplish since yesterday’s scrum meeting?”, “What will you accomplish before tomorrow’s scrum meeting?”, and “What roadblocks may impede your progress?” The sprint burndown chart, a measure of the team’s progress, is updated during this meeting. Attendees at scrum meetings are expected to stand, rather than sit, during the 15 minutes. This is to keep the meeting concise and on time.

3. Sprint Review Meeting – The product owner holds this meeting at the conclusion of a sprint, to review the state of the deliverables, and cross-check them against the stated requirements for that sprint.

See agile software development, deliverables, early detection, Fagan Defect-Free Process, lean thinking, New Product Development (NPD), prototype, sprint burndown chart, waterfall scheduling.

search cost – The cost of finding a supplier that can provide a satisfactory product at an acceptable price.

See switching cost, total cost of ownership, transaction cost.

seasonal factor – See seasonality.

seasonality – A recurring pattern in a time series that is based on the calendar or a clock.

The demand for a product is said to have seasonality if it has a recurring pattern on an annual, monthly, weekly, daily, or hourly cycle. For example, retail demand for toys in North America is significantly higher during the Christmas season. The demand for access to major highways is much higher during the “rush hours” at the beginning and end of a workday. For some firms, sales tend to increase at the end of the quarter due to sales incentives. This is known as the “hockey stick effect.”

Most forecasting models apply a multiplicative seasonal factor to adjust the forecast for the seasonal pattern. The forecast, therefore, is equal to the underlying average times the seasonal factor. For example, a toy retailer might have a seasonal factor for the month of December (i.e., the Christmas season) of 4, whereas a low demand month such as January, might have a seasonal factor of 0.6. Demand data can be “deseasonalized” by dividing by the seasonal factor. Although it is not recommended, it is also possible to use an additive seasonal factor.
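
The multiplicative adjustment described above can be sketched in a few lines of Python. The December and January factors come from the toy retailer example; the underlying average of 1,000 units per month, the default factor of 1.0 for other months, and the function names are assumptions made only for illustration.

```python
# Seasonal factors from the toy retailer example. Months not listed are
# assumed to have a factor of 1.0 (an illustrative assumption).
SEASONAL_FACTORS = {"Dec": 4.0, "Jan": 0.6}


def seasonal_forecast(underlying_average, month):
    """Forecast = underlying average times the seasonal factor."""
    return underlying_average * SEASONAL_FACTORS.get(month, 1.0)


def deseasonalize(demand, month):
    """Remove the seasonal pattern by dividing by the seasonal factor."""
    return demand / SEASONAL_FACTORS.get(month, 1.0)


underlying_average = 1000.0  # hypothetical units per month
december_forecast = seasonal_forecast(underlying_average, "Dec")
january_forecast = seasonal_forecast(underlying_average, "Jan")
print(december_forecast, january_forecast)
print(deseasonalize(december_forecast, "Dec"))
```

Dividing the December forecast by the December factor recovers the underlying average, which is what “deseasonalizing” means here.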

See anticipation inventory, Box-Jenkins forecasting, chase strategy, exponential smoothing, forecasting, hockey stick effect, level strategy, newsvendor model, production planning, time series forecasting, trend.

self check – See inspection.

self-directed work team – Work groups that have significant decision rights and autonomy.

See High Performance Work Systems (HPWS), human resources, job design, organizational design.

sensei – A reverent Japanese term for a teacher or master.

In the lean manufacturing context, a sensei is a master of lean knowledge with many years of experience. In traditional lean environments, it is important for the sensei to be a respected and inspirational figure. Toyota uses a Japanese-trained sensei to provide technical assistance and management advice when it is trying something for the first time or to help facilitate transformational activities.

See lean thinking.

sensitivity analysis – The process of estimating how much the results of a model will change if one or more of the inputs to the model are changed slightly.

Although the concept of sensitivity analysis can be used with any model, it is a particularly powerful part of linear programming analysis. For example, in linear programming, the analyst can determine the additional profit for each unit of change in a constraint. The economic benefit of changing the constraint by one unit is called the “shadow price” of the constraint.
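
As a rough illustration of a shadow price, the sketch below solves a small two-variable linear program by brute force and then re-solves it after relaxing each constraint by one unit. The profit coefficients, the constraints, and the helper names are hypothetical. The vertex-enumeration solver is a stand-in for a real LP solver and assumes a bounded problem, and the one-unit difference matches the shadow price only while the same constraints remain binding.

```python
from itertools import combinations


def solve_lp_2d(objective, constraints):
    """Maximize c1*x + c2*y subject to a*x + b*y <= rhs, x >= 0, y >= 0.

    Brute-force sketch: checks every intersection of two constraint lines
    and returns the best feasible objective value.
    """
    c1, c2 = objective
    # Treat x >= 0 and y >= 0 as -x <= 0 and -y <= 0.
    rows = list(constraints) + [(-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
    best = None
    for (a1, b1, r1), (a2, b2, r2) in combinations(rows, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < 1e-12:
            continue  # parallel lines have no single intersection point
        x = (r1 * b2 - r2 * b1) / det
        y = (a1 * r2 - a2 * r1) / det
        if all(a * x + b * y <= r + 1e-9 for a, b, r in rows):
            value = c1 * x + c2 * y
            if best is None or value > best:
                best = value
    return best


# Hypothetical problem: maximize 3x + 5y with three resource constraints.
objective = (3.0, 5.0)
constraints = [(1.0, 0.0, 4.0), (0.0, 2.0, 12.0), (3.0, 2.0, 18.0)]
base_profit = solve_lp_2d(objective, constraints)


def shadow_price(index):
    """Extra profit from adding one unit to a constraint's right-hand side."""
    relaxed = list(constraints)
    a, b, rhs = relaxed[index]
    relaxed[index] = (a, b, rhs + 1.0)
    return solve_lp_2d(objective, relaxed) - base_profit


shadow_prices = [shadow_price(i) for i in range(len(constraints))]
print(base_profit, shadow_prices)
```

A constraint with slack (the first one in this example) has a shadow price of zero, because relaxing it further does not change the best solution.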

See linear programming (LP), operations research (OR).

sentinel event – A healthcare term used to describe any unintended and undesirable occurrence that results in death or serious injury not related to the natural course of a patient’s illness; sometimes called a “never event.”

A sentinel is a guard or a lookout. Serious adverse healthcare events are called sentinel events because they signal the need for a “sentinel” or “guard” to avoid them in the future. Examples of sentinel healthcare events include death resulting from a medication error, suicide of a patient in a setting with around-the-clock care, surgery on the wrong patient or body part, infection-related death or permanent disability, assault or rape, transfusion death, and infant abduction.

Following a sentinel event (or potential sentinel event), nearly all healthcare organizations conduct a root cause analysis to identify the causes of the event and then develop an action plan to mitigate the risk of the event reoccurring. The Joint Commission (formerly called JCAHO) tracks statistics on sentinel events.

See adverse event, causal map, error proofing, Joint Commission, prevention, Root Cause Analysis (RCA).

sequence-dependent setup cost – See sequence-dependent setup time.

sequence-dependent setup time – A changeover time that changes with the order in which jobs are started.

A sequence-dependent setup time (or cost) is a changeover time (or cost) that is dependent on the order in which jobs are run. For example, it might be easy to change a paint-making process from white to gray, but difficult to change it from black to white. Sometimes, setups are not sequence-dependent between items within a product family, but are sequence-dependent between families of products. In this case, setups between families are sometimes called major setups, and setups within a family are called minor setups.

When setup times (or costs) are sequence-dependent, it is necessary to have a “from-to” table of times (or costs), much like a “from-to” travel time table on the back of a map. Creating a schedule for sequence-dependent setup times is a particular type of combinatorial optimization problem that is nearly identical to the traveling salesperson problem.
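
A “from-to” table and a brute-force search over job sequences can be sketched as follows. The paint colors echo the example above, but the setup times in minutes and the function names are hypothetical. Trying every permutation is practical only for a handful of jobs, which is why larger problems are treated as combinatorial optimization problems.

```python
from itertools import permutations

# Hypothetical from-to setup times in minutes: SETUP[from_color][to_color].
SETUP = {
    "white": {"gray": 10, "black": 15},
    "gray": {"white": 30, "black": 10},
    "black": {"white": 60, "gray": 25},
}


def total_setup_time(sequence):
    """Sum the changeover times between consecutive jobs in the sequence."""
    return sum(SETUP[a][b] for a, b in zip(sequence, sequence[1:]))


def best_sequence(jobs):
    """Try every ordering and keep the one with the least total setup time."""
    return min(permutations(jobs), key=total_setup_time)


best = best_sequence(["black", "white", "gray"])
print(best, total_setup_time(best))
```

Unlike the traveling salesperson problem, this sketch does not add a return trip from the last job back to the first.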

See batch process, major setup cost, product family, safety leadtime, setup, setup cost, setup time, Traveling Salesperson Problem (TSP).

serial correlation – See autocorrelation.

serial number traceability – See traceability.

service blueprinting – A process map for a service that includes moments of truth, line of visibility, fail points, and additional information needed to create the right customer experience.

The main idea of service blueprinting is to get customers’ perspectives into the service design and improvement process. During the process design stage, business process managers, architects, interior designers, marketing managers, operations managers, and IT professionals use the service blueprint to guide the design process. After the design is completed and implemented, the blueprint defines the required features and quality of the service for the service managers. Some recommended steps for service blueprinting include:

1. Clearly identify the target customer segment.

2. Develop a process map from the customer’s point of view – This should include the choices the customers need to make when they buy and use the service. It should also include all activities, flows, materials, information, failure points, customer waiting points (queues), risk points, pain points, and handoffs.

3. Map employee actions both onstage and backstage – This involves drawing the lines of interaction and visibility and then identifying the interactions between the customer and employee and all visible and invisible employee actions.

4. Link customer and contact person activities to needed support functions – This involves drawing the line of internal interaction and linking the employee actions to the support processes.

5. Add evidence of service at each customer action step – This involves showing evidence of the service that the customer sees and receives at each point of the service experience.

The service blueprint should show all points of interaction between the customer and service providers (known as “moments of truth”), identify “fail points” and the “line of visibility,” and include fairly precise estimates of the times required for each step, including the queue times. The line of visibility separates a service operation into back office operations that take place without the customer’s presence and front office operations in direct contact with the customer. Some people argue that the only difference between a process map and a service blueprint is the identification of the fail points and the demarcation of the line of visibility.

A service blueprint is better than a verbal description because it is more formal, structured, and detailed and shows the interactions between processes. The blueprint provides a conceptual model that facilitates studying the service experience prior to implementing it and also makes the implementation easier.

See experience engineering, line of visibility, moment of truth, process design, process map, service failure, service guarantee, service management.

service failure – A situation when a service provider does not provide satisfactory service.

The best service organizations pay a great deal of attention to these situations and try to recover dissatisfied customers before they become “terrorists” and give a bad report to a large number of potential customers. (Note: The word “terrorists” has been used in this context by service quality experts for decades; however, in light of recent events, many experts are now shying away from using such a strong and emotionally charged word.)

For example, when this author was traveling through Logan Airport in Boston on the way to Europe, an airport restaurant served some bad clam chowder that caused him to get very sick (along with at least one other traveler on the same flight). As a form of service recovery, the restaurant offered a free coupon for more food. This form of service recovery was not adequate and he became a “terrorist” who reported this service failure to thousands of people. (Note: This restaurant is no longer in business in the Logan Airport.)

See service blueprinting, service guarantee, service management, service quality, service recovery.

service guarantee – A set of two promises offered to customers before they buy a service. The first promise is the level of service provided and the second promise is what the provider will do if the first promise is not kept.

Hays and Hill (2001) found empirically that a service guarantee often has more value for operations improvement than it does for advertising. A carefully defined service guarantee can have the following benefits:

• Defines the value proposition for both customers and employees.

• Supports marketing communications in attracting new customers, particularly those who are risk-averse.

• Helps the service firm retain “at-risk” customers.

• Lowers the probability that dissatisfied customers will share negative word-of-mouth reports with others.

• Motivates customers to provide useful process improvement ideas.

• Motivates service firm employees to learn from mistakes and improve the service process over time.

• Clearly predefines the service recovery process for both customers and employees.

• Ensures that the service recovery process does not surprise customers.

A service guarantee is usually applied to organizations serving external customers, but it can also be applied to internal customers. Service guarantees are not without risk (Hill 1995). Offering a service guarantee before the organization is ready can lead to serious problems. Announcing the withdrawal of a service guarantee is tantamount to announcing that the organization is no longer committed to quality.

A service guarantee is a promise related to the intangible attributes of the service (e.g., timeliness, results, satisfaction, etc.), whereas a product warranty is a promise related to the physical attributes of the product (durability, physical performance, etc.). Product warranties are similar to service guarantees from a legal perspective and have many of the same benefits and risks.

Hill (1995) developed the figure below to show the relationship between a service guarantee and customer satisfaction. The numbers in the figure are for illustrative purposes only. The three arrows show that a service guarantee can increase the percent of customers who complain (by rewarding them for complaining), the percent recovered (by having a predefined service recovery process and payout), and the percent satisfied (by motivating learning from service failures). Organizations never want to increase the percent of dissatisfied customers, but they should want to increase the percent of dissatisfied customers who complain so they hear all customer complaints. Service guarantees inflict “pain” on the organization, which motivates the organization to learn faster.

Service guarantees, service failures, and customer satisfaction

Source: Professor Arthur V. Hill (1995)

From 1973 to 1980, Domino’s Pizza offered a “30-minutes or it’s free” guarantee. Unfortunately, Domino’s settled major lawsuits for dangerous driving in 1992 and 1993, which led the firm to abandon its on-time delivery guarantee and replace it with an unconditional satisfaction guarantee (source: Wikipedia, March 28, 2011).

A Service Level Agreement (SLA) is essentially a service guarantee for commercial customers. See the Service Level Agreement (SLA) entry for a comparison of service guarantees, SLAs, and product warranties.

See brand, caveat emptor, durability, performance-based contracting, response time, risk sharing contract, service blueprinting, service failure, Service Level Agreement (SLA), service management, service quality, service recovery, SERVQUAL, value proposition, warranty.

service level – A measure of the degree to which a firm meets customer requirements.

Service level is often measured differently for make to stock (MTS) and respond to order (RTO) products. It is possible, however, to define a general service level metric for both MTS and RTO. The following three sections describe these three types of service level metrics.

Service level metrics for make to stock (MTS) products – Retailers, distributors, and manufacturers that make and/or sell products from inventory (from stock) need a service level measure that reflects the availability of inventory for customers. For make to stock (MTS) products, the service level is usually measured as a fill rate metric. The unit fill rate is the percentage of units filled immediately from stock; the line fill rate is the percentage of lines filled immediately from stock; and the order fill rate is the percentage of orders filled immediately from stock. The terms “fill rate” and “service level” are often used synonymously in make to stock firms.
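
The three fill rates can be compared on a small set of orders, as in the sketch below. The order data are hypothetical, and the sketch assumes that a line (or an order) counts as filled only when it is filled completely from stock.

```python
# Hypothetical orders: each order is a list of lines, and each line is
# (units_ordered, units_filled_immediately_from_stock).
orders = [
    [(10, 10), (5, 5)],
    [(20, 15)],
    [(8, 8), (2, 0), (10, 10)],
]

lines = [line for order in orders for line in order]

# Unit fill rate: units filled from stock / units ordered.
unit_fill_rate = sum(f for _, f in lines) / sum(o for o, _ in lines)

# Line fill rate: lines filled completely / total lines.
line_fill_rate = sum(f >= o for o, f in lines) / len(lines)

# Order fill rate: orders with every line filled completely / total orders.
order_fill_rate = sum(all(f >= o for o, f in order) for order in orders) / len(orders)

print(round(unit_fill_rate, 3), round(line_fill_rate, 3), round(order_fill_rate, 3))
```

With these assumed data the unit fill rate is the highest of the three and the order fill rate is the lowest, because a single short line makes the whole order count as unfilled.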

Many textbooks, such as Schroeder et al. (2011), and most major ERP systems (e.g., SAP) define the service level for MTS products as the order cycle service level, which is the probability of not having a stockout event during an order cycle. However, this is a poor service metric because it does not take into account the number of order cycles per year or the severity of a stockout event. See the safety stock entry for more detail.

Best Buy and other retail chains measure service level with the in-stock position, which is the percentage of stores in the chain (or the percentage of items in a store) that have the presentation quantity. The presentation quantity is the minimum number of units needed to create an attractive offering for customers. The calculation of in-stock for an item is (Number of stores that have the presentation minimum on-hand)/(Total number of stores stocking that item).
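
The in-stock calculation for a single item can be sketched as follows. The store names, on-hand quantities, and presentation minimum are hypothetical values chosen only to show the arithmetic.

```python
# Hypothetical on-hand units of one item at each store that stocks it.
on_hand_by_store = {"store_a": 6, "store_b": 2, "store_c": 0, "store_d": 4}
presentation_minimum = 4  # assumed minimum units for an attractive display


def in_stock_position(on_hand, minimum):
    """Share of stocking stores holding at least the presentation minimum."""
    stores_in_stock = sum(units >= minimum for units in on_hand.values())
    return stores_in_stock / len(on_hand)


in_stock = in_stock_position(on_hand_by_store, presentation_minimum)
print(in_stock)
```

Note that store_b has units on hand but still counts as out of stock under this metric, because it holds fewer units than the presentation minimum.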

Service level metrics for respond to order (RTO) products – Respond to order products are assembled, built, fabricated, cut, mixed, configured, packaged, picked, customized, printed, or engineered in response to a customer’s request (order). The service level for these products is usually measured as the percent of orders that are filled on time, otherwise known as on-time delivery (OTD), which is the percent of orders shipped (or received) complete within the promise date (or request date).

Ideally, firms should compute OTD based on the customer request date, because the promise date may or may not satisfy the customer’s requirements. However, most firms find it difficult to use the request date because customers can “game” the request date to get a higher priority. Measuring OTD is further complicated by the fact that the supplier can update the promise date as the situation changes so orders are rarely late. Firms should compute OTD from the customer’s perspective. Therefore, firms should measure OTD based on the customer receipt date rather than the manufacturer’s ship date. However, most firms do not have access to the customer receipt date, and therefore measure OTD against their shipping dates, and then hold their distribution/transportation partners responsible for their portion of the customer leadtime.

OTD can be improved by either (1) making safer promises (e.g., promise three weeks instead of two weeks) or (2) reducing the mean or variance of the manufacturing leadtime. The first alternative can have a negative impact on demand. The second alternative requires lean sigma thinking to reduce the mean and variability of the customer leadtime. Hill and Khosla (1992) and Hill, Hays, and Naveh (2000) develop models for the leadtime elasticity of demand and for finding the “optimal” customer leadtime to offer to the market.

The customer leadtime for an RTO product is the actual time between the order receipt and the delivery to the customer. Customer leadtime, therefore, is a random variable that has a mean, mode, standard deviation, etc. The planned leadtime (or planned customer leadtime) is usually a fixed quantity, which may be conditioned on some attribute of the order (quantity, complexity, routing, materials, size, etc.). For example, a firm might offer a two-week leadtime for standard products and a three-week leadtime for non-standard products.

Some academics define additional performance metrics for RTO products, such as the mean and standard deviation of lateness, earliness, and tardiness. Define A as the actual delivery date (or time) and D as the due date (or time) for a customer order. Lateness is then defined as A - D, earliness is defined as max(D - A, 0), and tardiness is defined as max(A - D, 0). Lateness can be either positive or negative. Negative lateness means that the delivery was early. Neither earliness nor tardiness can be negative. Earliness is zero when an order is on time or late and tardiness is zero when an order is on time or early. Average earliness is commonly calculated only for early orders and average tardiness is commonly calculated only for tardy orders. Using more sophisticated mathematical notation, earliness is (D - A)⁺ and tardiness is (A - D)⁺, where (x)⁺ = max(x, 0). Some systems prioritize orders based on lateness.
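
These three measures can be computed directly from delivery and due dates, as in the sketch below. It uses the sign convention that lateness is the actual date minus the due date, so that negative lateness means an early delivery. The order dates and function names are hypothetical.

```python
from datetime import date


def lateness_days(actual, due):
    """Lateness = A - D in days; negative values mean an early delivery."""
    return (actual - due).days


def earliness_days(actual, due):
    """Earliness = max(D - A, 0)."""
    return max(-lateness_days(actual, due), 0)


def tardiness_days(actual, due):
    """Tardiness = max(A - D, 0)."""
    return max(lateness_days(actual, due), 0)


# Hypothetical orders as (actual delivery date, due date).
orders = [
    (date(2024, 3, 4), date(2024, 3, 6)),  # two days early
    (date(2024, 3, 6), date(2024, 3, 6)),  # on time
    (date(2024, 3, 9), date(2024, 3, 6)),  # three days late
]

lateness = [lateness_days(a, d) for a, d in orders]
earliness = [earliness_days(a, d) for a, d in orders]
tardiness = [tardiness_days(a, d) for a, d in orders]

# On-time delivery: share of orders with zero tardiness.
on_time_delivery = sum(t == 0 for t in tardiness) / len(orders)
print(lateness, earliness, tardiness, round(on_time_delivery, 3))
```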

General service level metrics for both MTS and RTO products – It is possible to use a service level metric for both MTS and RTO products by defining the fill rate as the percent of units, lines, or orders shipped by the due date. Some firms call this on-time and complete. Some members of the Grocery Manufacturers Association in North America use a fill rate metric called the perfect order fill rate, which is the percent of orders shipped on time, to the correct customer, to the correct place, complete (right quantity), free of damage, in the right packaging, with the correct documentation, and with an accurate invoice. However, some firms have backed away from the perfect order fill rate because it may be more demanding than customers expect, which means that it is more expensive than customers need. This suggests that firms should customize their perfect order metric to the needs of their market.

See aggregate inventory management, carrying cost, commonality, customer service, delivery time, dispatching rules, goodwill, inventory management, job shop scheduling, make to stock (MTS), materials management, mixed model assembly, on-time delivery (OTD), operations performance metrics, order cycle, purchase order (PO), purchasing, reorder point, respond to order (RTO), safety stock, Service Level Agreement (SLA), service management, slow moving inventory, stockout.

Service Level Agreement (SLA) – An arrangement between a service provider and a customer that specifies the type and quality of services that will be provided.

A Service Level Agreement (SLA) is usually a legally binding contract, but it can also be an informal agreement between two parties. The SLA is an effective means for the customer and supplier to engage in a serious discussion at the beginning of a relationship to determine what is important to the customer and clearly specify expectations. The service provider is usually obliged to pay the customer a penalty if any condition in the SLA is not satisfied. SLA conditions often include a definition of services, performance measurement, problem management, customer duties, warranties, disaster recovery, and termination of agreement. SLAs are also used to monitor a supplier’s performance and force the supplier to take corrective action when the conditions of the agreement are not met.

An SLA is essentially a service guarantee for a commercial (B2B) market. Service guarantees are usually designed for consumers (B2C) and are very short (e.g., as short as one sentence). In contrast, SLAs are usually designed for commercial customers (B2B) and usually require several pages of legal terminology. An Operating Level Agreement (OLA) is essentially an SLA within a firm. OLAs are often the key tool for achieving SLAs. A warranty is essentially a legally binding SLA for product performance rather than service performance.

Examples of an SLA: (1) A number of capital equipment firms offer a range of options (a menu) of field service SLAs to their customers that allow customers to make trade-offs between the price and service quality as measured by equipment downtime, technician response time, etc. (2) The best-known SLAs are in the telecommunications markets where the service provider might provide SLAs that specify uptime requirements. (3) Many firms use SLAs in an outsourcing relationship to clarify the business requirements for both parties.

See business process outsourcing, downtime, field service, outsourcing, performance-based contracting, service guarantee, service level, service management, service quality, warranty.

service management – A product that is simultaneously produced and consumed.

Services are said to be intangible, which means that the service is not a physical “thing.” However, many (if not most) services have facilitating goods. For example, a dinner at a nice restaurant will have comfortable chairs, nice plates, and good food. However, the chairs, plates, and food are not the service; they are only the facilitating goods for the service. Services cannot be inventoried, which means that they cannot be stored. For example, a flight from London to Paris at noon on July 4 cannot be “stored” in inventory until July 5. Once the aircraft has taken off, that capacity is gone forever.

Although most services are labor intensive (e.g., haircuts, surgery, and classroom instruction), some are capital intensive (e.g., power generation). Many services require intensive customer contact throughout the production of the service (e.g., surgery), others require limited contact with the customer at the beginning and end of the process (e.g., car repair), and some require no customer involvement at all (e.g., police protection). Using McDonald’s as an example, Levitt (1972) argues that both labor intensive and capital intensive services should be managed more like factories with respect to standardization, technology, systems, and metrics.

See Application Service Provider (ASP), back office, business process outsourcing, call center, Customer Effort Score (CES), experience engineering, help desk, labor intensive, line of visibility, Net Promoter Score (NPS), operations performance metrics, production line, service blueprinting, service failure, service guarantee, service level, Service Level Agreement (SLA), Service Profit Chain, service quality, service recovery, SERVQUAL, Software as a Service (SaaS), transactional process improvement.

service marketing – See service management.

service operations – See service management.

service parts – Components, parts, or supplies used to maintain or repair machinery or equipment; spare parts.

Service parts are sometimes called spare parts, but the term “spare” implies that they are not needed, which is often not the case (Hill 1992). Service parts are usually considered to be Maintenance-Repair-Operations (MRO) items. The slow moving inventory entry presents an inventory model based on the Poisson distribution for managing service parts.

See aftermarket, bathtub curve, field service, Maintenance-Repair-Operations (MRO), slow moving inventory.

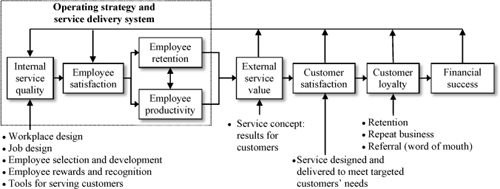

Service Profit Chain – A conceptual model for service management that relates employee satisfaction to customer satisfaction, revenue, and profit.

The Service Profit Chain, developed by Heskett, Jones, Loveman, Sasser, and Schlesinger (1994) and Heskett, Sasser, and Schlesinger (1997), begins with “internal service quality,” which involves workplace design and job design for employees. The central concept is that if employees are satisfied, they will have lower labor turnover (higher employee retention) and better productivity. Higher employee satisfaction, retention, and productivity then translate into better “external service value” and satisfaction for the customer. This higher customer satisfaction then translates into higher customer loyalty, revenue, and ultimately into sales growth and profitability for the firm. This model is promoted by several consulting firms, including Heskett’s firm, the Service Profit Chain Institute (www.serviceprofitchain.com). The figure below shows the Service Profit Chain.

The Service Profit Chain

Adapted from Heskett, Jones, Loveman, Sasser, & Schlesinger (1994)

See human resources, job design, operations strategy, service management, service quality.

service quality – A customer’s long-term overall evaluation of a service provider.

Hays and Hill (2001) defined service quality as a customer’s long-term overall evaluation of a service provider and customer satisfaction as the customer’s evaluation of a specific service episode (a service event). However, some authors reverse these definitions. Service quality is perceived differently based on three types of product attributes: search qualities, experience qualities, and credence qualities.

Search qualities – Product attributes that can be fully evaluated prior to purchase. For example, the color of a dress purchased in a store can easily be evaluated before purchase. Color, style, price, fit, and smell are generally considered to be search qualities.

Experience qualities – Product attributes that cannot be evaluated without the product being purchased and consumed (experienced) by the customer. For example, the flavor of a food product cannot be evaluated until it is consumed. Given that the customer cannot fully evaluate the quality of the product until it is purchased, customers often have to rely more on personal recommendations for products that have experience qualities.

Credence qualities – Product attributes that cannot easily be evaluated even after purchase and consumption. For example, the quality of the advice from lawyers, doctors, and consultants is often hard to evaluate even after the advice has been given, because it is often quite subjective.

Zeithaml, Parasuraman, and Berry (1988) and many others define the service quality “gap” as the difference between the expectations and the delivery for a particular service episode. However, this model suggests that service quality (or customer satisfaction) is high when customers expect and receive mediocre service. In response to this problem, this author created the simple FED-up model, which states that F equals E minus D, where F = Frustration, E = Expectation, and D = Delivery. When F = 0, the customer is not necessarily satisfied; the customer is just not frustrated. In other words, no gap between expectation and delivery is not satisfaction, but rather the absence of dissatisfaction.

The critical incidents method is a good approach for identifying potential service quality dimensions. (See the critical incidents method entry.) The gap model is a good structure for measuring these dimensions on a survey, because it measures both importance and performance for each dimension of service quality. The gap model defines the gap as importance minus performance. Thus, if a service quality dimension has high importance but low performance, it has a large gap and should be given high priority.
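
The gap calculation and the resulting priority ranking can be sketched as follows. The service quality dimensions, the 1-to-7 survey scale, and the scores are hypothetical and serve only to illustrate importance minus performance.

```python
# Hypothetical survey averages on a 1-7 scale: (importance, performance).
survey = {
    "check-in speed": (6.5, 4.0),
    "room cleanliness": (6.8, 6.5),
    "lobby decor": (3.0, 5.5),
}

# Gap = importance minus performance for each dimension.
gaps = {
    dimension: importance - performance
    for dimension, (importance, performance) in survey.items()
}

# Largest gap first: high importance combined with low performance.
priorities = sorted(gaps, key=gaps.get, reverse=True)
print(priorities)
```

A negative gap, as for lobby decor in this assumed data, suggests that performance already exceeds what customers consider important.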

Many hotels use three questions to measure customer satisfaction and service quality:

• Willingness to return – Do you intend to return to our hotel in the next year?

• Willingness to recommend – Would you recommend our hotel to your friends and family?

• Overall satisfaction – Overall, were you satisfied with your experience at our hotel?

More recently, many hotels have simplified the measurement process and now use the Net Promoter Score (NPS) developed by Reichheld (2003), which is a modification of the “willingness to recommend” question above. Dixon, Freeman, and Toman (2010) claim that the Customer Effort Score (CES) is a better predictor of customer loyalty in call centers than either the NPS or direct measures of customer satisfaction.

A common consultant’s exhortation to service leaders is to “delight our customers!” However, great care should be taken in applying this slogan. For example, when this author traveled to Europe several years ago, an airline agent allowed him the option of using a domestic upgrade coupon to upgrade from coach to business class. He was delighted to be able to sit in business class for the eight-hour flight. However, a couple of weeks later, he flew the same route and was denied the same upgrade and therefore was quite disappointed. The principle here is that today’s delight is tomorrow’s expectation. Service providers should not delight customers unless they can do so consistently, and when they do perform a “one-off” special service, they should manage the customer’s expectations for the future.

Pine and Gilmore’s (2007) book on “Authenticity” argues that in a world increasingly filled with deliberately staged experiences and manipulative business practices (e.g., frequent flyer miles), consumers choose to buy based on how real and how honest they perceive a service provider to be. This is related to the moment of truth concept.

It is sometimes possible to improve service quality by changing customer perception of waiting time using one of Maister’s (1985) eight factors that affect customer perceptions of wait time:

• Unoccupied waits seem longer than occupied waits – An unoccupied wait is one where the customer has nothing to do or to entertain them; in other words, the customer is bored.

• Pre-process waits seem longer than in-process waits – For example, a patient might feel better waiting in the exam room (in-process wait) than in the waiting room (pre-process wait).

• Anxiety makes waits seem longer.

• Uncertain waits seem longer than waits of a known duration – Therefore, manage customer expectations.

• Unexplained waits seem longer than explained waits.

• Unfair waits seem longer than equitable waits.

• The more valuable the service, the longer people will be willing to wait.

• Waiting alone seems longer than waiting with a group – This is an application of the first factor.

ASQ has a Service Quality Division that has developed The Service Quality Book of Knowledge. More information on this book can be found on ASQ’s website http://asq.org.

See critical incidents method, Customer Effort Score (CES), customer service, empathy, empowerment, experience engineering, human resources, Kano Analysis, line of visibility, moment of truth, Net Promoter Score (NPS), primacy effect, quality management, service failure, service guarantee, Service Level Agreement (SLA), service management, Service Profit Chain, SERVQUAL, single point of contact, triage.

service recovery – Restoring customers to a strong positive relationship with the firm after they have experienced a service failure.

The service recovery principle (slogan) is, “It is much easier to keep an existing customer than it is to find a new one.” Many consultants make unsubstantiated claims about this by stating that “It costs about ten times more to win a new customer than it does to keep an existing customer.” The customer acquisition cost is the cost of finding a new customer and the service recovery cost is the cost of keeping a customer. Customer acquisition cost includes costs related to sales calls, advertising, direct mail, and other marketing communications, all of which can be quite expensive. Some firms measure the annual customer acquisition cost as the advertising budget divided by the number of new customers during a year. This analysis regularly finds good support for the claim that customer acquisition cost is high. Service recovery cost is the cost of compensating customers for a service failure plus some administrative cost, both of which are usually modest compared to the lifetime value of the customer.
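The two cost measures described above can be compared with a short calculation. The sketch below is illustrative only: the function names and all dollar figures are hypothetical, and the resulting ratio is not evidence for any particular rule of thumb.

```python
def acquisition_cost_per_customer(advertising_budget, new_customers):
    """Annual advertising budget divided by the number of new customers that year."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return advertising_budget / new_customers


def recovery_cost_per_customer(compensation, admin_cost):
    """Compensation for the service failure plus the administrative cost."""
    return compensation + admin_cost


# Hypothetical figures chosen only to show the arithmetic.
cac = acquisition_cost_per_customer(advertising_budget=500_000, new_customers=2_000)
recovery = recovery_cost_per_customer(compensation=40, admin_cost=10)

print(f"Acquisition cost per new customer: ${cac:,.2f}")
print(f"Recovery cost per retained customer: ${recovery:,.2f}")
```

A firm would substitute its own budget and customer counts. Note that this simple measure counts only advertising and omits the other acquisition costs listed above, such as sales calls and direct mail.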

The six steps to service recovery are (1) listen, (2) apologize and show empathy, (3) ask the service recovery question “What can we do to completely satisfy you?” (4) fix the problem quickly (prioritize customers and escalate if needed), (5) offer symbolic atonement (something tangible the customer will appreciate), and (6) follow up to ensure that the relationship is fixed. Steps 3 and 5 of this process are particularly important, because they ensure the customer has been completely restored to a healthy relationship with the service provider.

This author developed the three fixes of service quality, which are (1) ensure the customer’s specific problem is fixed, (2) ensure the customer relationship is fixed so he or she will return, and (3) ensure the system is fixed so this problem never recurs. Fix (3) requires root cause analysis and error proofing.

See acquisition, error proofing, Root Cause Analysis (RCA), service failure, service guarantee, service management.

serviceability – The speed, courtesy, competence, and ease of repair.

Serviceability is often measured by mean response time and Mean Time to Repair (MTTR).

See Mean Time to Repair (MTTR), New Product Development (NPD).

SERVQUAL – A service quality instrument (survey) that measures the gap between customer expectations and perceptions after a service encounter.

The SERVQUAL instrument developed by Parasuraman, Zeithaml, and Berry (1988) has been used in numerous service industries. The instrument is organized around five dimensions of customer service:

• Tangibles – Physical facilities, equipment, and appearance of personnel

• Reliability – Ability to perform the promised service dependably and accurately

• Responsiveness – Willingness to help customers and provide prompt service

• Assurance – Competence, courtesy, credibility, and security

• Empathy – Access, communication, and understanding

The diagram below is the SERVQUAL model (Zeithaml, Parasuraman, and Berry 1988) with several adaptations made by this author. Customers get their expectations from their own past experiences with the service provider, experiences with other service providers, their own intrinsic needs, and communications from the service provider. The basic concept of the model is that Gap 5 (the service quality gap) exists when the perceived service does not meet the expected service. This gap is the result of one or more other gaps. Gap 1 (the product design gap) is a failure to understand the customer’s needs and expectations. Gap 2 (the process design gap) is a failure to design a process consistent with the product design. Gap 3 (the production gap) is a failure to actually deliver (produce) a service that meets the needs of a specific customer. Gap 4 (the perjury gap) is a failure to communicate in a way that sets and manages customer expectations. In summary, service providers should avoid the product design, process design, production, and perjury gaps in order to avoid the service quality gap and consistently deliver a satisfying customer experience.

Teas (1994) and others challenge SERVQUAL in a number of ways. One of the main criticisms is that it defines quality as having no gap between expectation and delivery. However, some argue that service quality is not a function of the gap, but rather a function of the delivered value, which has no connection with the gap. For example, if someone hates McDonald’s hamburgers and goes to a McDonald’s restaurant and buys a hamburger, the customer gets what he or she expects. However, the customer will not perceive this as good quality. See the discussion of the FED-up model in the service quality entry for a simple model that addresses this issue.

SERVQUAL Model (Adapted)

Adapted by Professor A.V. Hill from Zeithaml, Parasuraman, & Berry (1988).

See customer service, empathy, service guarantee, service management, service quality.

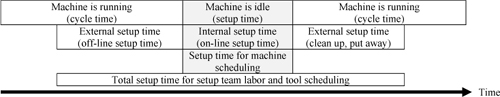

setup – The activity required to prepare a process (machine) to produce a product; also called a changeover.

Setup is a common term in a factory, where the tooling on a machine has to be changed to start a new order. However, setups are an important part of all human activity. For example, a surgical operation has a setup time and setup cost to prepare the operating room for a surgery. A racecar has a changeover when it goes in for a pit stop on a racetrack. Adding a new customer to a database is also a setup process.

See batch process, external setup, internal setup, sequence-dependent setup time, setup cost, setup time, setup time reduction methods.

setup cost – In a manufacturing context, the cost to prepare a process (e.g., a machine) to start a new product; in a purchasing context, the cost to place a purchase order; also known as the changeover cost or order cost.

The setup cost (or ordering cost) is an important parameter for managerial decision making for manufacturers, distributors, and retailers. This cost is particularly important when making order sizing (lotsizing, batchsize) decisions. If the ordering cost is close to zero, the firm can justify small lotsizes (order sizes) and approach the ideal of just-in-time (one-piece flow). In a purchasing context, the ordering cost is the cost of placing and receiving an order. In a manufacturing context, the setup cost is the cost of setting up (changing over) the process to begin a new batch. This cost is usually called the “setup” or “changeover” cost.

In both the purchasing and manufacturing contexts, the ordering cost should reflect only those costs that vary with the number of orders. For example, most overhead costs (such as the electricity for the building) are not relevant to lotsizing decisions and therefore should be ignored. The total annual ordering cost is dependent only on the number of orders placed during the year.

The typical standard costing approach used in many firms includes allocated overhead in the order cost. In a manufacturing context, the number of standard labor hours for the machine setup is multiplied by the “burden” (overhead) rate. For many firms, the burden rate is more than $200 per shop hour. Many accountants make the argument that “all costs are variable in the long run,” and therefore the overhead should be included in the ordering cost. Although this argument is probably true for product costing, it is not true for estimating the ordering cost for determining lotsizes. Inventory theorists argue that the ordering cost (setup cost) should be treated as a marginal cost, which means overhead costs should be ignored.
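The difference between the burdened and marginal views of the setup cost can be illustrated with a small calculation. The sketch below assumes a hypothetical two-hour setup, a hypothetical wage-plus-fringe rate of $35 per hour, and the $200 burden rate mentioned above. The function names are illustrative and do not come from any costing system.

```python
def burdened_setup_cost(setup_hours, burden_rate):
    """Standard-costing view: setup hours times the burden (overhead) rate."""
    return setup_hours * burden_rate


def marginal_setup_cost(setup_hours, wage_and_fringe_rate):
    """Marginal view: only the incremental labor cost of the setup."""
    return setup_hours * wage_and_fringe_rate


def annual_ordering_cost(orders_per_year, cost_per_order):
    """Total annual ordering cost depends only on the number of orders."""
    return orders_per_year * cost_per_order


setup_hours = 2.0  # hypothetical
burdened = burdened_setup_cost(setup_hours, burden_rate=200.0)
marginal = marginal_setup_cost(setup_hours, wage_and_fringe_rate=35.0)
annual_marginal = annual_ordering_cost(orders_per_year=12, cost_per_order=marginal)

print(f"Burdened setup cost: ${burdened:,.2f}")
print(f"Marginal setup cost: ${marginal:,.2f}")
print(f"Annual ordering cost at 12 orders (marginal): ${annual_marginal:,.2f}")
```

Under these assumptions the burdened figure is several times the marginal figure, so using it in a lotsizing calculation would push the firm toward larger lotsizes than the marginal cost justifies.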

In the purchasing context, the cost components include the following:

• Order preparation component – Computer processing, clerical processing.

• Order communication component – The marginal cost of mailing, faxing, or electronic communication of the order to the supplier.

• Supply order charge – Any order processing charge from the supplier.

• Shipping cost component – The fixed portion of the shipping cost (note that the per unit shipping cost should be considered part of the unit cost and not part of the ordering cost).

• Receiving cost – Cost of handling the receipt of an order. This cost includes the accounting costs, the per order (not per unit) inspection costs, and the cost of moving the order to storage. Again, costs that vary with the number of units should not be included here.

In the manufacturing context, the setup cost components include the following:

• Order preparation component – Computer processing and clerical processing.

• Order communication component – Sending the order paperwork or electronic information to the shop floor.

• Setup labor cost component – The incremental labor cost of setting up the machine. This cost should include the workers’ hourly wage and fringe, but should not be assigned any other factory overhead (burden).

• Opportunity cost of the machine time lost to setup – At a bottleneck machine, time lost to a setup has tremendous value. In fact, for every hour that the bottleneck is sitting idle, the entire plant is also idle. Therefore, the opportunity cost of the capacity at the bottleneck is the opportunity cost of lost capacity for the entire plant. For example, if a plant is generating $10,000 in gross margin per hour, one hour lost to a setup at the bottleneck has an opportunity cost of $10,000. The opportunity cost for time lost to a setup at a nonbottleneck machine is zero. Goldratt and Cox (1992) and Raturi and Hill (1988) expand on these ideas.

• Shop floor control cost component – The cost of handling data entry activities associated with the order. Again, this is the cost per order that is handled.
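The manufacturing components listed above can be combined into a single marginal setup cost. The following sketch is one way to do that. The field names and all dollar figures are hypothetical except the $10,000 gross margin per hour, which comes from the example above. It follows the rule stated above that time lost to a setup has an opportunity cost only at the bottleneck.

```python
from dataclasses import dataclass, replace


@dataclass
class SetupCostInputs:
    order_preparation: float            # computer and clerical processing, per order
    order_communication: float          # sending the order to the shop floor, per order
    setup_hours: float                  # machine time consumed by the setup
    wage_and_fringe_rate: float         # hourly wage plus fringe, no other overhead
    shop_floor_control: float           # data entry cost, per order
    is_bottleneck: bool                 # True if the machine is the plant bottleneck
    plant_gross_margin_per_hour: float  # gross margin the whole plant earns per hour


def setup_cost(x):
    """Marginal setup cost per order, summing the components listed in the text."""
    labor = x.setup_hours * x.wage_and_fringe_rate
    # Lost capacity is valued at the plant's gross margin only at the bottleneck.
    opportunity = x.setup_hours * x.plant_gross_margin_per_hour if x.is_bottleneck else 0.0
    return (x.order_preparation + x.order_communication + labor
            + opportunity + x.shop_floor_control)


bottleneck = SetupCostInputs(
    order_preparation=5.0, order_communication=2.0, setup_hours=1.0,
    wage_and_fringe_rate=40.0, shop_floor_control=3.0,
    is_bottleneck=True, plant_gross_margin_per_hour=10_000.0,
)
nonbottleneck = replace(bottleneck, is_bottleneck=False)

print(f"Bottleneck setup cost: ${setup_cost(bottleneck):,.2f}")
print(f"Nonbottleneck setup cost: ${setup_cost(nonbottleneck):,.2f}")
```

With these assumed inputs, the same one-hour setup costs far more at the bottleneck because the opportunity cost dominates every other component.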

Many plants have large setups between families of parts and small setups between parts within a family. The setups between families are sometimes called major setups and the setups between parts within a family are called minor setups.

A sequence-dependent setup time (or cost) is a changeover time (or cost) that is dependent on the order in which jobs are run. For example, it might be easy to change a paint-making process from white to gray, but difficult to change from black to white. Sometimes, setups are not sequence-dependent between items within a product family, but are sequence-dependent between families of products. When setup times (or costs) are sequence-dependent, it is necessary to have a “from-to” table of times (or costs), much like a “from-to” travel time table on the back of a map. Creating a schedule for sequence-dependent setup times is a particular type of combinatorial optimization problem that is nearly identical to the traveling salesperson problem.
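A “from-to” table and the resulting sequencing problem can be sketched as follows. The changeover times are hypothetical, chosen only to be consistent with the paint example above (light to dark is easy, dark to light is hard). The search simply tries every job order, which is practical only for a handful of jobs because the number of sequences grows factorially. Larger problems call for the heuristics or optimization methods used for the traveling salesperson problem.

```python
from itertools import permutations

# Hypothetical from-to table of changeover times in minutes:
# setup_minutes[a][b] is the time to change the process from color a to color b.
setup_minutes = {
    "white": {"gray": 10, "black": 15},
    "gray": {"white": 30, "black": 10},
    "black": {"white": 60, "gray": 40},
}


def total_setup(sequence, table):
    """Sum the changeover times between consecutive jobs in the sequence."""
    return sum(table[a][b] for a, b in zip(sequence, sequence[1:]))


def best_sequence(jobs, table):
    """Exhaustively search all job orders for the smallest total setup time."""
    return min(permutations(jobs), key=lambda seq: total_setup(seq, table))


best = best_sequence(["white", "gray", "black"], setup_minutes)
print(" -> ".join(best), f"({total_setup(best, setup_minutes)} minutes of setup)")
```

This sketch treats the schedule as an open path, with no changeover back to the first color, which is one way it differs from the classic traveling salesperson tour.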