M

MAD – See Mean Absolute Deviation (MAD).

maintenance – The work of keeping something in proper condition, upkeep, or repair.

Maintenance activities can be divided into three types: emergency maintenance, preventive maintenance, and predictive maintenance. Emergency maintenance is an unplanned maintenance problem that often results in lost productivity and schedule disruption.

Preventive maintenance is the practice of checking and repairing a machine on a scheduled basis before it fails; also called preventative maintenance. The maintenance schedule is usually based on historical information on the time between failures for the population of machines. In contrast, emergency maintenance is performed after the machine fails. In the practice of dentistry, preventive maintenance is the annual checkup and cleaning; emergency maintenance is the urgent trip to the dentist when the patient has a toothache. The old English saying “a stitch in time saves nine” suggests that timely preventive maintenance will save time later. In other words, sewing up a small hole in a piece of clothing will save more stitching later.

Predictive maintenance is the practice of monitoring a machine with a measuring device that can anticipate and predict when it is likely to fail. Whereas preventive maintenance is based on a schedule or a counting mechanism, predictive maintenance is based on the information from a measurement device that reports the status of the machine. Predictive maintenance is often based on vibration analysis. Predictive maintenance should be targeted at equipment with high failure costs and should only be used when the predictive tools are reliable (McKone & Weiss 2002).

See the Total Productive Maintenance (TPM) entry for more information on this topic.

See autonomous maintenance, availability, bathtub curve, Maintenance-Repair-Operations (MRO), Mean Time Between Failure (MTBF), Mean Time to Repair (MTTR), reliability, reliability engineering, Reliability-Centered Maintenance (RCM), robust, Total Productive Maintenance (TPM), work order.

Maintenance-Repair-Operations (MRO) – Purchased “non-production” items not used directly in the product; also called Maintenance, Repair, and Operating supplies, Maintenance, Repair, and Operations, and Maintenance, Repair, and Overhaul.

MRO is typically divided between manufacturing MRO (cutting oil, sandpaper, etc.) and non-manufacturing MRO (travel, office supplies, etc.). Manufacturing MRO includes electrical and mechanical, electronic, lab equipment and supplies, and industrial supplies. These items are generally not handled by the firm’s ERP system, but are often a significant expense for many firms. General and Administrative (G&A) expenses include computer-related capital equipment, travel and entertainment, and MRO. MRO is usually the most significant and most critical of these expenses.

Many consulting firms have had success helping large multi-division firms “leverage their MRO spend” across many divisions. They save money by getting all divisions to buy from the same MRO suppliers, which gives the buying firm more leverage and volume discounts. For example, they get all divisions to use the same airline and negotiate significantly lower prices. This may also reduce transaction costs.

See business process outsourcing, consolidation, consumable goods, finished goods inventory, leverage the spend, lumpy demand, maintenance, overhead, purchasing, service parts, spend analysis, supplier, Total Productive Maintenance (TPM).

major setup cost – The changeover cost from one family of products to another family of products; the “between-family” changeover cost.

A minor setup involves changing over a process from one product to another in the same product family. In contrast, a major setup involves changing over a process from a product in one product family to a product in another product family. In other words, a minor setup is within a family and a major setup is between families; a major setup therefore requires more time and cost. Both major and minor setup costs can be either sequence-dependent or sequence-independent.
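The within-family versus between-family distinction can be illustrated with a minimal sketch. It assumes sequence-independent costs; the product names, family labels, and cost figures are hypothetical and chosen only for illustration.

```python
def total_setup_cost(sequence, family_of, minor_cost, major_cost):
    """Sum changeover costs along a production sequence.

    Assumes sequence-independent costs: every within-family changeover
    costs minor_cost and every between-family changeover costs major_cost.
    """
    total = 0
    for prev, nxt in zip(sequence, sequence[1:]):
        if prev == nxt:
            continue  # same product, no changeover needed
        if family_of[prev] == family_of[nxt]:
            total += minor_cost  # minor setup (within family)
        else:
            total += major_cost  # major setup (between families)
    return total


# Hypothetical products A1, A2 (family A) and B1, B2 (family B).
family_of = {"A1": "A", "A2": "A", "B1": "B", "B2": "B"}
grouped = ["A1", "A2", "B1", "B2"]
alternating = ["A1", "B1", "A2", "B2"]

print(total_setup_cost(grouped, family_of, minor_cost=10, major_cost=100))      # 120
print(total_setup_cost(alternating, family_of, minor_cost=10, major_cost=100))  # 300
```

Grouping products by family incurs one major setup instead of three, which is the motivation for scheduling families together.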

See joint replenishment, sequence-dependent setup time, setup cost.

make to order (MTO) – A process that produces products in response to a customer order.

MTO processes typically produce products that (1) are built in response to a customer order, (2) are unique to a specific customer’s requirements, and (3) are not held in finished goods inventory. However, statements (2) and (3) are not always true:

MTO products are not always unique to a customer – It is possible (but not common) to use an MTO process for standard products. For example, the publisher for this book could have used an MTO process called Print on Demand that prints a copy of the book after the customer order is received. This is an example of a “standard product” produced in response to a customer order.

MTO products are sometimes held in finished goods inventory – Many descriptions of MTO state that MTO never has any finished goods inventory. However, an MTO process may have a small amount of temporary finished goods inventory waiting to be shipped. An MTO process may also have some finished goods inventory when the customer order size is smaller than the minimum order size. In this case, the firm might hold residual finished goods inventory, speculating that the customer will order more at a later date.

The respond to order (RTO) entry discusses these issues in much greater depth.

See build to order (BTO), engineer to order (ETO), Final Assembly Schedule (FAS), mass customization, pack to order, push-pull boundary, respond to order (RTO), standard products.

make to stock (MTS) – A process that produces standard products to be stored in inventory.

The main advantage of the make to stock (MTS) customer interface strategy over other customer interface strategies, such as assemble to order (ATO), is that products are usually available with nearly zero customer leadtime. The main challenge of an MTS strategy is to find the balance between inventory carrying cost and service, where the service level is measured with a fill rate metric, such as the unit fill rate or order cycle fill rate.

See assemble to order (ATO), push-pull boundary, respond to order (RTO), service level, standard products.

make versus buy decision – The decision to either manufacture an item internally or purchase it from an outside supplier.

Managers in manufacturing firms often have to decide between making a part (or product) internally or buying (outsourcing) it from a supplier. These decisions require careful use of accounting data and often have strategic implications. The guiding slogan is that “a firm should never outsource its core competence.”

One of the most difficult aspects of this decision is how to handle overhead. If overhead is completely ignored and the focus is on only direct labor and materials, the decisions will generally opt for “in-sourcing.” If overhead is fully allocated (including overhead that will remain even after outsourcing is complete), decisions will generally opt for outsourcing and can lead the firm into the death spiral where everything is outsourced. (See the figure below.) In the death spiral, the firm outsources and then finds itself with the same overhead but fewer units, which means that overhead per unit goes up, which leads the firm to pursue more outsourcing.

The outsourcing death spiral
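The arithmetic behind the death spiral can be sketched as follows. The overhead, direct cost, and volume figures are hypothetical; the sketch assumes that overhead stays fixed as volume is outsourced.

```python
def full_cost_per_unit(direct_cost, fixed_overhead, units_in_house):
    """Fully allocated unit cost: direct cost plus an equal share of fixed overhead."""
    return direct_cost + fixed_overhead / units_in_house


# Hypothetical figures: overhead does not shrink as units are outsourced.
fixed_overhead = 1_000_000
direct_cost = 50.0

for units in (100_000, 50_000, 25_000):
    cost = full_cost_per_unit(direct_cost, fixed_overhead, units)
    print(f"{units:>7} units in house -> fully allocated cost {cost:.2f} per unit")
```

Each round of outsourcing halves the in-house volume, so the allocated overhead per unit doubles (10, 20, then 40), which makes the remaining in-house items look even less competitive against supplier quotes.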

See burden rate, business process outsourcing, outsourcing, overhead, standard cost, supply chain management, vertical integration.

makespan – The time that the last job finishes for a given set of jobs.

The static job shop scheduling problem involves scheduling a set of jobs on one or more machines. One of the common objectives in the static job shop scheduling problem is to minimize makespan, which means to minimize the maximum completion time of jobs. In other words, the goal is to assign jobs to machines and sequence (or schedule) to minimize the completion time for the last job completed.
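As a minimal sketch, makespan can be computed for a given assignment of jobs to machines. This simplifies the general job shop: it assumes each job is a single operation, all jobs are available at time zero, and machines run their jobs back to back without idle time. The job names and processing times are hypothetical.

```python
def makespan(assignment, processing_time):
    """Return the completion time of the last job.

    assignment maps each machine to the ordered list of jobs it runs.
    Under the stated assumptions, a machine finishes at the sum of its
    jobs' processing times, and makespan is the latest machine finish.
    """
    return max(
        (sum(processing_time[job] for job in jobs) for jobs in assignment.values()),
        default=0,
    )


processing_time = {"J1": 4, "J2": 3, "J3": 2, "J4": 5}

balanced = {"M1": ["J1", "J2"], "M2": ["J3", "J4"]}
unbalanced = {"M1": ["J1", "J2", "J3"], "M2": ["J4"]}

print(makespan(balanced, processing_time))    # 7
print(makespan(unbalanced, processing_time))  # 9
```

Minimizing makespan amounts to searching over such assignments (and, in a true job shop, over operation sequences) for the one with the smallest value.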

See job shop, job shop scheduling.

Malcolm Baldrige National Quality Award (MBNQA) – An annual award established in 1987 to recognize Total Quality Management in American industry.

The MBNQA was named after Malcolm Baldrige, the U.S. Secretary of Commerce from 1981 to 1987. It represents the U.S. government’s endorsement of quality as an essential part of a successful business strategy. The MBNQA is based on the premise that competitiveness in the U.S. economy is improved by (1) helping stimulate American companies to improve quality and productivity, (2) establishing guidelines and criteria in evaluating quality improvement efforts, (3) recognizing quality improvement achievements of companies, and (4) making information available on how winning companies improved quality. The MBNQA scoring system is based on seven categories illustrated below.

Organizations can score themselves or be scored by external examiners using the following weights for each area: leadership (120), strategic planning (85), customer focus (85), measurement, analysis, and knowledge management (90), workforce focus (85), operations focus (85), and results (450). The total adds to 1000 points.
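The point weights listed above can be applied in a short sketch. The weights come from this entry; the self-assessment fractions are hypothetical, and treating each category score as a fraction of its available points is an assumption made for illustration.

```python
# Category weights as listed in this entry (points available per category).
WEIGHTS = {
    "leadership": 120,
    "strategic planning": 85,
    "customer focus": 85,
    "measurement, analysis, and knowledge management": 90,
    "workforce focus": 85,
    "operations focus": 85,
    "results": 450,
}


def total_score(fraction_earned):
    """Weighted total, where fraction_earned[c] is between 0.0 and 1.0."""
    return sum(WEIGHTS[c] * fraction_earned[c] for c in WEIGHTS)


print(sum(WEIGHTS.values()))  # 1000

# Hypothetical self-assessment: half of the available points in every category.
self_assessment = {category: 0.5 for category in WEIGHTS}
print(total_score(self_assessment))  # 500.0
```

Because results carries 450 of the 1000 points, a weak results score cannot easily be offset by strong scores in the other categories.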

Baldrige criteria for performance excellence framework

See http://www.nist.gov/baldrige for more information.

See American Society for Quality (ASQ), benchmarking, ISO 9001:2008, quality management, Shingo Prize, Total Quality Management (TQM).

Management by Objectives (MBO) – A systematic method for aligning organizational and individual goals and improving performance.

Management by Objectives (MBO), developed by Drucker (1954), is a goal setting and performance management system. With MBO, senior executives set goals that cascade down the organization so every individual has clearly defined objectives, with individuals having significant input in setting their own goals. Performance evaluation and feedback are then based on these objectives. Drucker warned of the activity trap, where people were so involved in their day-to-day activities that they forgot their primary objectives.

In the 1990s, Drucker put MBO into perspective by stating, “It’s just another tool. It is not the great cure for management inefficiency ... MBO works if you know the objectives [but] 90% of the time you don’t” (Mackay 2007, p. 53). Deming (2000) teaches that the likely result of MBO is suboptimization because of the tendency of reward systems to focus on numbers rather than on the systems and processes that produce the numbers. He argues that employees will find ways to give management the numbers, often by taking actions that are not in the best interests of the organization.

See balanced scorecard, Deming’s 14 points, hoshin planning, performance management system, suboptimization, Y-tree.

management by walking around – A management concept that encourages management to walk, observe, and communicate with workers; sometimes abbreviated MBWA.

David Packard and William Hewlett, founders of Hewlett-Packard (HP), coined the term “management by walking around” to describe an active management style used at HP. MBWA was popularized by Peters and Austin (1985). The bigger idea is that management needs to be engaged with the workers and know and understand the “real” work that is being done. Japanese managers employ a similar concept called the 3Gs (Gemba, Genbutsu, and Genjitsu), which mean the “actual place,” “actual thing,” and “actual situation.”

See 3Gs, gemba, one-minute manager, waste walk.

Manhattan square distance – A distance metric on an x-y plane that limits travel to the x and y axes.

The Manhattan square distance is a good estimate for many intracity travel distances where vehicles can only travel in certain directions (e.g., north/south or east/west) due to the layout of the roads. The equation for the Manhattan square distance between points i and j is $d_{ij} = |x_i - x_j| + |y_i - y_j|$. This metric is named after the densely populated borough of Manhattan in New York City that is known for its rectangular street layout. The Minkowski distance metric is a general form of the Manhattan square distance.
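The distance equation translates directly into code. The sketch below also includes the standard Minkowski form with exponent r, which reduces to the Manhattan square distance at r = 1 and to the straight-line (Euclidean) distance at r = 2. The function names and sample points are illustrative.

```python
def manhattan_distance(p, q):
    """Manhattan square distance between points p = (x, y) and q = (x, y)."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def minkowski_distance(p, q, r):
    """Minkowski distance with exponent r; r = 1 is Manhattan, r = 2 is Euclidean."""
    return sum(abs(a - b) ** r for a, b in zip(p, q)) ** (1 / r)


origin, site = (0, 0), (3, 4)
print(manhattan_distance(origin, site))     # 7
print(minkowski_distance(origin, site, 1))  # 7.0
print(minkowski_distance(origin, site, 2))  # 5.0
```

The Manhattan estimate (7) exceeds the straight-line distance (5) because travel is restricted to the two axis directions.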

See cluster analysis, great circle distance, Minkowski distance metric.

manifest – A customs document listing the contents loaded on a means of transport, such as a boat or aircraft.

See Advanced Shipping Notification (ASN), bill of lading, packing slip.

MANOVA (Multivariate Analysis of Variance) – See Analysis of Variance (ANOVA).

Manufacturing and Service Operations Management Society (MSOM) – A professional society that is a division of the Institute for Operations Research and the Management Sciences (INFORMS), which promotes the enhancement and dissemination of knowledge, and the efficiency of industrial practice, related to the operations function in manufacturing and service enterprises.

According to the MSOM website, “The methods which MSOM members apply in order to help the operations function add value to products and services are derived from a wide range of scientific fields, including operations research and management science, mathematics, economics, statistics, information systems and artificial intelligence. The members of MSOM include researchers, educators, consultants, practitioners and students, with backgrounds in these and other applied sciences.”

MSOM publishes the M&SOM Journal as an INFORMS publication. The website for MSOM is http://msom.society.informs.org.

See Institute for Operations Research and the Management Sciences (INFORMS), operations management (OM), operations research (OR).

manufacturing cell – See cellular manufacturing.

Manufacturing Cycle Effectiveness (MCE) – See value added ratio.

Manufacturing Execution System (MES) – An information system that collects and presents real-time information on manufacturing operations and prioritizes manufacturing operations from the time a manufacturing order is started until it is completed; often called a shop floor control system.

Shop floor control has been an important topic in the production planning and control literature for decades, but the term “MES” has only been used since 1990. Whereas MRP systems plan and control orders for an item with order sizes, launch dates, and due dates, MESs plan and control the operations in the routing for an item. Most MESs are designed to integrate with an ERP system to provide shop floor control level details.

MES provides for production and labor reporting, shop floor scheduling, and integration with computerized manufacturing systems, such as Automated Data Collection (ADC) and computerized machinery. Specifically, MES functions include resource allocation and status, dispatching production orders, data collection/acquisition, quality management, maintenance management, performance analysis, operations/detail scheduling, labor management, process management, and product tracking and genealogy. Some MESs will also include document control systems that provide work instructions, videos, and drawings to operators on the shop floor.

The benefits claimed for an MES include (1) reduced manufacturing cycle time, (2) reduced data entry time, (3) reduced Work-in-Process (and increased inventory turns), (4) reduced paper between shifts, (5) reduced leadtimes, (6) improved product quality (reduced defects), (7) reduced lost paperwork and blueprints, (8) improved on-time delivery and customer service, (9) reduced training and changeover time, and, as a result, (10) improved gross margin and cash flow. See the MESA International website at www.mesa.org for more information.

See Automated Data Collection (ADC), Materials Requirements Planning (MRP), real-time, routing, shop floor control, shop packet, Total Productive Maintenance (TPM).

manufacturing leadtime – See leadtime.

manufacturing order – A request for a manufacturing organization to produce a specified number of units of an item on or before a specified date; also called shop order, production order, and production release.

All orders should include the order number, item (material) number, quantity, start date, due date, materials required, and resources used. The order number is used in reporting material and labor transactions.

See firm planned order, interplant order, leadtime, lotsizing methods, Materials Requirements Planning (MRP), planned order, purchase order (PO), work order.

manufacturing processes – Technologies used in manufacturing to transform inputs into products.

The following list presents a taxonomy of manufacturing processes developed by the author. This taxonomy omits many types of processes, particularly chemical processes.

• Processes that remove materials and/or prepare surfaces – Cutting (laser, plasma, water jet), drilling, grinding, filing, machining (milling, planing, threading, rabbeting, routing), punching, sawing, shearing, stamping, and turning (lathe, drilling, boring, reaming, threading, spinning).

• Forming processes – Bending (hammering, press brakes), casting (metal, plaster, etc.), extrusion, forging, hydroforming (hydramolding), molding, and stamping.

• Temperature related processes – Cooling (cryogenics) and heating (ovens).

• Separating processes – Comminution & froth flotation, distillation, and filtration.

• Joining processes – Adhesives, brazing, fasteners, riveting, soldering, taping, and welding.

• Coating processes – Painting, plating, powder coating, printing, thermal spraying, and many others.

• Assembly processes – Assembly line, mixed model assembly, and manufacturing cells.

Computer Numerically Controlled (CNC) machines and Flexible Manufacturing Systems (FMS) can do a variety of these activities. Manufacturing cells can be used for many activities other than just assembly. Machines used in manufacturing are often supported by tooling (e.g., fixtures, jigs, molds) and gauges.

See assembly, assembly line, Computer Numerical Control (CNC), die cutting, extrusion, fabrication, fixture, Flexible Manufacturing System (FMS), forging, foundry, gauge, jig, mixed model assembly, mold, production line, stamping, tooling.

Manufacturing Resources Planning (MRP) – See Materials Requirements Planning (MRP).

manufacturing strategy – See operations strategy.

Manugistics – A software vendor of Advanced Planning and Scheduling (APS) systems.

See Advanced Planning and Scheduling (APS).

MAPD (Mean Absolute Percent Deviation) – See Mean Absolute Percent Error (MAPE).

MAPE (Mean Absolute Percent Error) – See Mean Absolute Percent Error (MAPE).

maquiladora – A Mexican corporation that operates under a maquila program approved by the Mexican Secretariat of Commerce and Industrial Development (SECOFI).

A maquila program entitles the maquiladora company to foreign investment and management without needing additional authorization. It also gives the company special customs treatment, allowing duty-free temporary import of machinery, equipment, parts, materials, and administrative equipment, such as computers and communications devices, subject only to posting a bond guaranteeing that such goods will not remain in Mexico permanently.

Ordinarily, a maquiladora’s products are exported, either directly or indirectly, through sale to another maquiladora or exporter. The type of production may be the simple assembly of temporarily imported parts, the manufacture from start to finish of a product using materials from various countries, or any combination of manufacturing and non-manufacturing operations, such as data-processing, packaging, and sorting coupons.

The legislation now governing the industry’s operation is the “Decree for Development and Operation of the Maquiladora Industry,” published by the Mexican federal Diario Oficial on December 22, 1989. This decree described application procedures and requirements for obtaining a maquila program and the special provisions that apply only to maquiladoras (source: www.udel.edu/leipzig/texts2/vox128.htm, March 28, 2011).

See outsourcing, supply chain management.

marginal cost – An economics term for the increase (or decrease) in cost resulting from an increase (or decrease) of one unit of output or activity; also called incremental cost.

Operations managers can make sound economic decisions based on the marginal cost or marginal profit by ignoring overhead costs that are fixed and irrelevant to a decision. This is true for many production and inventory models.

For example, the marginal cost of placing one more purchase order (along with the associated receiving cost) may be very close to zero. At the same time the firm might have large buying and receiving departments that have significant overhead (e.g., building space, receiving space), which means that the average cost per order is high. When making decisions about the optimal number of purchase orders per year, the firm should ignore the average costs and make decisions based on the marginal (incremental) cost. All short-term decisions should be based on the marginal cost, and long-term decisions should be based on the average (or full) cost. As many accountants like to say, “All costs are variable in the long run.”
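The gap between average and marginal cost in the purchase order example can be shown with a small sketch. The overhead and per-order figures are hypothetical, and the linear cost function is an assumption made for illustration.

```python
def total_ordering_cost(orders, fixed_overhead=200_000.0, cost_per_order=2.0):
    """Hypothetical annual cost of the buying and receiving departments.

    fixed_overhead does not change with the number of orders;
    cost_per_order is the incremental cost of one more order.
    """
    return fixed_overhead + cost_per_order * orders


n = 10_000
average_cost = total_ordering_cost(n) / n
marginal_cost = total_ordering_cost(n + 1) - total_ordering_cost(n)

print(average_cost)   # 22.0
print(marginal_cost)  # 2.0
```

Using the average cost (22) rather than the marginal cost (2) in a short-term decision about the number of orders per year would overstate the cost of each additional order by a factor of eleven.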

Marginal revenue is the additional revenue from selling one more unit. Economic theory says that the maximum total profit will be at the point where the marginal revenue equals marginal cost.
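The rule that profit peaks where marginal revenue meets marginal cost can be checked numerically. The demand curve (price falls by one for each additional unit sold) and the constant unit cost of 20 are hypothetical.

```python
def revenue(q):
    """Hypothetical linear demand: the price needed to sell q units is 100 - q."""
    return q * (100 - q)


def cost(q):
    """Hypothetical constant marginal cost of 20 per unit, no fixed cost."""
    return 20 * q


def profit(q):
    return revenue(q) - cost(q)


# Search all whole-unit quantities for the maximum profit.
best_q = max(range(0, 101), key=profit)

mr_of_last_unit = revenue(best_q) - revenue(best_q - 1)
mr_of_next_unit = revenue(best_q + 1) - revenue(best_q)
marginal_cost = cost(best_q + 1) - cost(best_q)

print(best_q, profit(best_q))                           # 40 1600
print(mr_of_last_unit, marginal_cost, mr_of_next_unit)  # 21 20 19
```

With whole units, marginal revenue steps from 21 (above marginal cost) to 19 (below it) around the best quantity; in the continuous case the two are exactly equal at the optimum.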

See carrying charge, carrying cost, Economic Order Quantity (EOQ), economy of scale, numeric-analytic location model, order cost, safety stock, sunk cost.

market pull – See technology push.

market share – The percent of the overall sales (dollars or units) of a market (local, regional, national, or global) that is controlled by one company.

One insightful question to ask a senior executive is, “What is your market share?” When he or she answers, then ask, “Of what?” Many managers are caught by this trick question and report their market share for their regional or national market instead of the global market. This question exposes a lack of global thinking.

See cannibalization, core competence, disruptive technology, first mover advantage, product proliferation, target market, time to market.

mass customization – A business model that uses a routine approach to efficiently create a high variety of products or services in response to customer-defined requirements.

Some people mistakenly assume that mass customization is only about increasing variety at the same cost. However, some of the best examples of mass customization focus on reducing cost while maintaining or even reducing variety. For example, AbleNet manufactures a wide variety of products for disabled people, such as the electrical switch shown on the right. The product comes in a number of different colors and with a wide variety of features (e.g., push once, push twice, etc.). When AbleNet created a modular design, it was able to provide customers with the same variety as before, but dramatically reduced the number of products it produced and stored. It was able to mass customize by postponing the customization by using decals and cover plates that could be attached to the top of the button. It also moved much of the feature customization to the software, allowing the hardware to become more standard. As a result, AbleNet significantly improved service, inventory, and cost while keeping the same variety for its customers.

Pine (1993) argues that mass customization strategies should be considered in markets that already have many competitors and significant variety (i.e., markets that clearly value variety). According to Kotha (1995), the competitive challenge in this type of market is to provide the needed variety at a relatively low cost.

Products and services can be mass customized for a channel partner (e.g., a distributor), a customer segment (e.g., high-end customers), or an individual customer. Customization for an individual is called personalization.

One of the primary approaches for mass customization is postponement, where customization is delayed until after the customer order is received. For example, IBM in Rochester, Minnesota, builds the AS400 using “vanilla boxes,” which are not differentiated until after the customer order has been received. IBM customizes the vanilla boxes by inserting hard drives, modems, and other modular devices into slots on the front of the box.

Eight strategies can be used for mass customization:

1. Design products for mass customization – Make the products customizable.

2. Use robust components – Commonality is a great way to improve customization.

3. Develop workers for mass customization – Mass customization and flexibility are fundamentally a function of the flexibility and creativity of the workers.

4. Apply lean/quality concepts – Lean thinking (and short cycle times) and high quality are essential prerequisites for mass customization.

5. Reduce setup times – Long setup times (and large lotsizes) are the enemy of mass customization.

6. Use appropriate automation – Many people equate mass customization with automation; however, many of the best mass customization concepts have little to do with automation.

7. Break down functional silos – Functional silos contribute to long cycle times, poor coordination, and high costs, all of which present obstacles to mass customization.

8. Manage the value chain for mass customization – Some of the best examples of mass customization use virtual organizations and supply chain management tools to increase variety and reduce cost.

Pine and Gilmore (1999) have extended mass customization concepts to “experiences,” where the goal is to create tailored memorable experiences for customers.

See agile manufacturing, assemble to order (ATO), commonality, configurator, economy of scope, engineer to order (ETO), experience engineering, flexibility, functional silo, make to order (MTO), modular design (modularity), operations strategy, pack to order, postponement, print on demand, product mix, product proliferation, product-process matrix, push-pull boundary, respond to order (RTO), robust, sand cone model, virtual organization.

Master Production Schedule (MPS) – A high-level plan for a few key items used to determine the materials plans for all end items; also known as the master schedule.

As shown in the figure on the right, the manufacturing planning process begins with the strategic plan, which informs the business plan (finance) and the demand plan (marketing and sales), which in turn, inform the production plan (manufacturing). The Sales & Operations Planning Process (S&OP) then seeks to reconcile these three plans. Resource Requirements Planning (RRP) supports this reconciliation process by evaluating the production plan to make sure that sufficient resources (labor and machines) are available. RRP is a high-level evaluation process that only considers aggregate volume by product families (often in sales dollars or an aggregate measure of capacity, such as shop hours) and does not consider specific products, items, or resources.

Once the production plan is complete, the master scheduling process combines the production plan, firm customer orders, and managerial insight to create the MPS, which is a schedule for end items. Rough Cut Capacity Planning (RCCP) then evaluates the master schedule to make sure that sufficient capacity is available. RCCP is more detailed than RRP, but only considers the small set of end items in the master production schedule. Many firms consider major assemblies to be make to stock end items. The Final Assembly Schedule (FAS) is then used to schedule specific customer orders that pull from this inventory. Although the MPS is based on forecast (demand plan) information, it is a plan rather than a forecast, because it considers capacity limitations. Even though the MPS has the word “schedule” in it, it should not be confused with a detailed schedule.

Once the MPS is complete, the Materials Requirements Planning (MRP) process converts the MPS into a materials plan, which defines quantities and dates for every production and purchase order for every item. MRP uses a gross-to-net process to subtract on-hand and on-order quantities and a back scheduling process to account for planned leadtimes. Capacity Requirements Planning (CRP) then evaluates the materials plan to make sure that sufficient capacity is available. CRP is more detailed than RCCP and considers the capacity requirements for every operation in the routing for every production order in the materials plan. Although this capacity check is more detailed than the others, it is still fairly rough, because it uses daily time buckets and uses planned leadtimes based on average queue times. Advanced Planning and Scheduling (APS) systems can be used to conduct even more detailed scheduling.
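The gross-to-net and back scheduling steps can be sketched for a single requirement. This is a simplified illustration rather than a full MRP implementation: it handles one item and one period, and it counts the planned leadtime in calendar days, which is an assumption. The quantities and dates are hypothetical.

```python
from datetime import date, timedelta


def net_requirement(gross, on_hand, on_order):
    """Gross-to-net: subtract on-hand and on-order quantities, never below zero."""
    return max(0, gross - on_hand - on_order)


def release_date(due, planned_leadtime_days):
    """Back scheduling: offset the due date by the planned leadtime."""
    return due - timedelta(days=planned_leadtime_days)


# Hypothetical requirement: 100 units due March 15 with a 10-day planned leadtime.
print(net_requirement(gross=100, on_hand=30, on_order=20))  # 50
print(release_date(date(2024, 3, 15), 10))                  # 2024-03-05
```

A full MRP run repeats these two steps for every item and time bucket, exploding each planned order through the bill of material to create gross requirements for its components.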

Once the materials plan is complete, planners and buyers (or buyer/planners) review the materials plan and determine which manufacturing orders to release (send) to the factory and which purchase orders to release to suppliers. The planners and buyers may also reschedule open orders (orders already in the factory or already with suppliers) to change the due dates (pull in or push out) or the quantities (increase or decrease).

The planning above the MPS determines the overall volume and is often done in dollars or aggregate units for product families. In contrast, the planning at the MPS level and below is done in date-quantity detail for specific items and therefore determines the mix (Wallace & Stahl 2003).

Many firms do not have the information systems resources to conduct RRP, RCCP, and CRP.

All of the above plans (the production plan, master production schedule, and materials plan) have a companion inventory plan. If the planned production exceeds the planned demand, planned inventory will increase. Conversely, if planned production is less than the planned demand, planned inventory will decrease.
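The companion inventory plan follows from a simple running balance: each period's planned inventory is the prior inventory plus planned production minus planned demand. The sketch below uses hypothetical monthly quantities.

```python
def planned_inventory(starting_inventory, production, demand):
    """Return the planned ending inventory for each period."""
    levels = []
    inventory = starting_inventory
    for produced, demanded in zip(production, demand):
        inventory += produced - demanded  # rises when production exceeds demand
        levels.append(inventory)
    return levels


# Hypothetical three-month plan with level production.
print(planned_inventory(100, production=[500, 500, 500], demand=[450, 550, 600]))
# [150, 100, 0]
```

In the first month production exceeds demand and planned inventory rises; in the next two months demand exceeds production and planned inventory falls.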

For example, the high-level production plan (aggregate plan, sales and operations plan) for a furniture company specifies the total number of chairs it expects to need for each month over the next year. The MPS then identifies the number of chairs of each type (by SKU) that are needed each week. MRP then builds a detailed materials plan by day for all components and determines the raw materials needed to make the chairs specified by the MPS.

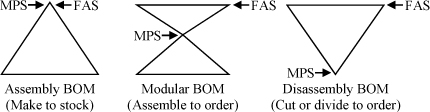

The figure below shows three types of bill of materials. The assembly BOM starts with many components (at the bottom of the BOM) and converts them into a few end products (at the top). The final product is a standard product and typically has a make to stock customer interface. The modular BOM starts with many components assembled into a few modules (subassemblies) that are mixed and matched to create many end items. This is typically an assemble to order customer interface. The disassembly BOM starts with a raw material, such as oil, and converts it into many end items, such as motor oil, gasoline, and plastic. This is typically a make to order customer interface, but could also be make to stock.

The Theory of Constraints (TOC) literature labels the processes for making these three BOMs as the A-plant, T-plant, and V-plant. See the VAT analysis entry for more detail.

The MPS is said to be “top management’s handle on the business,” because the MPS includes a very limited number of items. Therefore, the MPS process should focus on the narrow part of the BOM, which suggests that master scheduling should be done for end items for an assembly BOM, for major components for the modular BOM, and for raw materials for a disassembly BOM. Safety stock should be positioned at the same level as the MPS so that it is balanced between materials. In other words, a safety stock of zero units of item A and ten units of item B is of little value to protect against demand uncertainty if the end item BOM requires one unit of each. The Final Assembly Schedule (FAS) is a short-term schedule created in response to customer orders for the modular BOM. The push-pull boundary is between the items in the master schedule and in the FAS.
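The point about balanced safety stock can be made concrete with a minimal sketch. It assumes a single-level BOM and counts how many additional end items the component safety stocks could support; the item names and quantities follow the example in the text, with a balanced alternative added for comparison.

```python
def end_items_covered(safety_stock, bom_qty_per):
    """Number of extra end items the component safety stocks can support.

    bom_qty_per maps each component to the quantity required per end item.
    The scarcest component limits the coverage.
    """
    return min(safety_stock[item] // qty for item, qty in bom_qty_per.items())


bom_qty_per = {"A": 1, "B": 1}  # the end item requires one unit of each

print(end_items_covered({"A": 0, "B": 10}, bom_qty_per))  # 0
print(end_items_covered({"A": 5, "B": 5}, bom_qty_per))   # 5
```

The same ten units of safety stock protect zero end items when unbalanced and five end items when balanced across the two components.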

See assemble to order (ATO), Available-to-Promise (ATP), back scheduling, bill of material (BOM), Bill of Resources, Business Requirements Planning (BRP), Capacity Requirements Planning (CRP), chase strategy, Final Assembly Schedule (FAS), firm order, firm planned order, level strategy, Materials Requirements Planning (MRP), on-hand inventory, on-order inventory, production planning, push-pull boundary, Resource Requirements Planning (RRP), Rough Cut Capacity Planning (RCCP), safety leadtime, Sales & Operations Planning (S&OP), time fence, VAT analysis.

master schedule – The result of the master production scheduling process.

See Master Production Schedule (MPS).

master scheduler – The person responsible for creating the master production schedule.

See Master Production Schedule (MPS).

material delivery routes – See water spider.

Material Review Board (MRB) – A standing committee that determines the disposition of items of questionable quality.

materials handling – The receiving, unloading, moving, storing, and loading of goods, typically in a factory, warehouse, distribution center, or outside work or storage area.

Materials handling systems use four main categories of mechanical equipment: storage and handling equipment, engineered systems (e.g., conveyors, handling robots, AS/RS, AGV), industrial trucks (e.g., forklifts, stock chasers), and bulk material handling (e.g., conveyor belts, stackers, elevators, hoppers, diverters).

See forklift truck, inventory management, logistics, materials management, receiving, supply chain management.

materials management – The organizational unit and set of business practices that plans and controls the acquisition, creation, positioning, and movement of inventory through a system; sometimes called materials planning; nearly synonymous with logistics.

Materials management must balance the conflicting objectives of marketing and sales (e.g., have lots of inventory, never lose a sale, maintain a high service level) and finance (e.g., keep inventories low, minimize working capital, minimize carrying cost). Materials management often includes purchasing/procurement, manufacturing planning and control, distribution, transportation, inventory management, and quality.

If a firm has both logistics and materials management functions, the logistics function will focus primarily on transportation issues and the materials in warehouses and distribution centers and the materials management function will focus on procurement and the materials inside plants.

See carrying cost, inventory management, logistics, materials handling, purchasing, service level, supply chain management, Transportation Management System (TMS), warehouse, Warehouse Management System (WMS).

materials plan – See Materials Requirements Planning (MRP).

Materials Requirements Planning (MRP) – A comprehensive computer-based planning system for both factory and purchase orders; a major module within Enterprise Resources Planning Systems; also called Manufacturing Resources Planning.

MRP is an important module within Enterprise Resources Planning (ERP) systems for most manufacturers. MRP was originally called Materials Requirements Planning and focused primarily on planning purchase orders for outside suppliers. MRP was then expanded to handle manufacturing orders sent to the shop floor, and some software vendors changed the name to Manufacturing Resources Planning.

MRP plans level by level down the bill of material (BOM). MRP begins by netting (subtracting) any on-hand and on-order inventory from the gross requirements. It then schedules backward from the due date using fixed planned leadtimes to determine order start dates. Lotsizing methods are then applied to determine order quantities. Lotsizes are often defined in terms of the number of days of net requirements. With MRP regeneration, a batch computer job updates all records in the database. With MRP net change generation, the system updates only the incremental changes.
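The netting, lotsizing, and back-scheduling steps described above can be sketched for a single item as follows. This is only a minimal illustration under stated assumptions, not how any particular MRP system is implemented: the function name `plan_item`, the integer time buckets, and the lot-for-lot lotsizing rule are hypothetical choices made for the example.

```python
def plan_item(gross_reqs, on_hand, scheduled_receipts, leadtime):
    """Single-item MRP sketch: net, lotsize lot-for-lot, and back-schedule.

    gross_reqs and scheduled_receipts map period -> quantity (hypothetical
    inputs). Returns planned orders as (start_period, due_period, quantity).
    """
    planned_orders = []
    available = on_hand
    for period in sorted(set(gross_reqs) | set(scheduled_receipts)):
        available += scheduled_receipts.get(period, 0)  # on-order inventory arrives
        available -= gross_reqs.get(period, 0)          # net against gross requirements
        if available < 0:
            net_requirement = -available
            # Lot-for-lot (an assumption): order exactly the net requirement.
            # Back-schedule: start date = due date - fixed planned leadtime.
            planned_orders.append((period - leadtime, period, net_requirement))
            available = 0
    return planned_orders


orders = plan_item(
    gross_reqs={3: 40, 5: 60, 6: 30},
    on_hand=50,
    scheduled_receipts={4: 20},
    leadtime=2,
)
print(orders)  # [(3, 5, 30), (4, 6, 30)]
```

Note that the sketch never checks capacity, which mirrors the infinite loading behavior of MRP: the start dates depend only on the due dates and the fixed planned leadtime.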

MRP creates planned orders for both manufactured and purchased materials. The set of planned orders (that can be changed by MRP) and firm orders (that cannot be changed by MRP) is called the materials plan. Each order is defined by an order number, a part number, an order quantity, a start date, and a due date. MRP systems use the planned order start date to determine priorities for both shop orders and purchase orders. MRP is called a priority planning system rather than a scheduling system, because it backschedules from due dates using planned leadtimes that are calculated from the average queue times.

Nearly all MRP systems create detailed materials plans for an item using a Time Phased Order Point (TPOP) and fixed planned leadtimes. Contrary to what some textbooks claim, MRP systems rarely consider available capacity when creating a materials plan. Therefore, MRP systems are called infinite loading systems rather than finite loading systems. However, MRP systems can use Rough Cut Capacity Planning (RCCP) to check the Master Production Schedule (MPS) and Capacity Requirements Planning (CRP) to create load reports to check materials plans. These capacity checks can help managers identify situations when the plant load (planned hours) exceeds the capacity available. Advanced Planning and Scheduling (APS) systems are capable of creating detailed materials plans that take into account available capacity; unfortunately, these systems are hard to implement because of the need for accurate capacity, setup, and run time data.

See Advanced Planning and Scheduling (APS), allocated inventory, Available-to-Promise (ATP), bill of material (BOM), Business Requirements Planning (BRP), Capacity Requirements Planning (CRP), closed-loop MRP, dependent demand, Distribution Requirements Planning (DRP), effectivity date, Engineering Change Order (ECO), Enterprise Resources Planning (ERP), finite scheduling, firm order, forecast consumption, forward visibility, gross requirements, infinite loading, inventory management, kitting, low level code, Manufacturing Execution System (MES), manufacturing order, Master Production Schedule (MPS), net requirements, on-hand inventory, on-order inventory, pegging, phantom bill of material, planned order, production planning, purchase order (PO), purchasing, routing, time bucket, time fence, Time Phased Order Point (TPOP), where-used report.

matrix organization – An organizational structure where people from different units of an organization are assigned to work together under someone who is not their boss.

In a matrix organization, people work for one or more leaders who are not their bosses and who do not have primary input to their performance reviews. These people are “on loan” from their home departments. A matrix organization is usually (but not always) a temporary structure that exists for a short period of time. An example of a matrix organization is an architectural firm where people from each discipline (e.g., landscape architecture, heating, and cooling) temporarily report to a project manager for a design project.

See performance management system, project management.

maximum inventory – See periodic review system.

maximum stocking level – An SAP term for the target inventory.

MBNQA – See Malcolm Baldrige National Quality Award.

MCE (Manufacturing Cycle Effectiveness) – See value added ratio.

mean – The average value; also known as the arithmetic average.

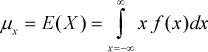

The mean is the arithmetic average of a set of values and is a measure of the central tendency. For a sample of n values $\{x_1, x_2, \ldots, x_n\}$, the sample mean is defined as $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$. For the entire population of N values, the population mean is the expected value and is defined as $\mu = \frac{1}{N}\sum_{i=1}^{N} x_i$. Greek letter μ is pronounced “mu.”

For a continuous distribution with density function f(x), the mean is the expected value $\mu = E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$, which is also known as the complete expectation and the first moment. The partial expectation, an important inventory theory concept, is defined as $\int_{-\infty}^{z} x f(x)\,dx$ when evaluated at a point z.

The median is considered to be a better measure of central tendency than the mean when the data is likely to have outliers. The median is said to be an “error resistant” statistic.

See box plot, geometric mean, interpolated median, kurtosis, median, mode, partial expectation, skewness, trim, trimmed mean.

Mean Absolute Deviation (MAD) – A measure of the dispersion (variability) of a random variable; defined as the average absolute deviation from the mean.

The mathematical definition of the MAD for a random variable $x_i$ with mean $\mu$ is $\text{MAD} = \frac{1}{n}\sum_{i=1}^{n} |x_i - \mu|$. The standard deviation for a normally distributed random variable is theoretically exactly equal to $\sqrt{\pi/2}\,\text{MAD}$ (approximately 1.25MAD). This is true asymptotically, but will rarely be true for any sample.

Brown (1967) implemented this approach widely at IBM, because early computers could not take square roots. However, by 1970, Brown argued that “MAD is no longer appropriate to the real world of computers. It never was the correct measure of dispersion” (Brown 1970, p. 148).

Other experts, such as Jacobs and Wagner (1989), argue that MAD is still a good approach, because absolute errors are less sensitive to outliers than the squared errors used in the variance and standard deviation. The MAD approach continues to be used in many major inventory management systems, including SAP.

In a forecasting context, the average error is often assumed to be zero (i.e., unbiased forecasts). In this context, $\text{MAD} = \frac{1}{T}\sum_{t=1}^{T} |E_t|$, where $E_t$ is the forecast error in period t and T is the number of observed values. The MAD can be smoothed at the end of each period with the updating equation $\text{SMAD}_t = \alpha|E_t| + (1 - \alpha)\text{SMAD}_{t-1}$. The smoothed MAD is sometimes called the smoothed absolute error or SAE.
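The following sketch illustrates the MAD, the normal-theory conversion from MAD to standard deviation, and the exponentially smoothed MAD. The function names `mad` and `smoothed_mad`, the starting value `initial`, and the sample data are assumptions made for the example and do not come from any specific inventory system.

```python
import math


def mad(values):
    """Average absolute deviation of the values from their own mean."""
    mu = sum(values) / len(values)
    return sum(abs(x - mu) for x in values) / len(values)


def smoothed_mad(errors, alpha, initial):
    """Apply SMAD_t = alpha*|E_t| + (1 - alpha)*SMAD_(t-1) over all errors.

    `initial` is a hypothetical starting value for SMAD_0.
    """
    smad = initial
    for error in errors:
        smad = alpha * abs(error) + (1 - alpha) * smad
    return smad


sample_mad = mad([2, 4, 4, 6])                      # 1.0
sigma_estimate = math.sqrt(math.pi / 2) * sample_mad  # about 1.25 * MAD
latest_smad = smoothed_mad([4, -2], alpha=0.5, initial=2.0)  # 2.5
print(sample_mad, round(sigma_estimate, 4), latest_smad)
```

The conversion factor only holds for normally distributed data, so the resulting standard deviation estimate should be treated as an approximation for any finite sample.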

See forecast bias, forecast error metrics, forecasting, Mean Absolute Percent Error (MAPE), mean squared error (MSE), Median Absolute Percent Error (MdAPE), outlier, Relative Absolute Error (RAE), robust, standard deviation, tracking signal, variance.

Mean Absolute Percent Deviation (MAPD) – See Mean Absolute Percent Error (MAPE).

Mean Absolute Percent Error (MAPE) – A commonly used (but flawed) measure of forecast accuracy that is the average of the absolute percent errors for each period; also called the Mean Absolute Percent Deviation (MAPD).

MAPE is defined mathematically as $\text{MAPE} = \frac{1}{T}\sum_{t=1}^{T} \frac{|E_t|}{D_t}$, where $E_t$ is the forecast error in period t, $D_t$ is the actual demand (or sales) in period t, and T is the number of observed values. Many firms multiply by 100 to rescale the MAPE as a percentage. Note that the MAPE is not the MAD divided by the average demand.

The MAPE has three significant problems. First, when the demand is small, the absolute percent error in a period (APEt = |Et|/Dt) can be quite large. For example, when the demand is 10 and the forecast is 100, the APEt for that period is 90/10 = 9 (or 900%). These very large values can have an undue influence on the MAPE. Second, when the demand is zero in any period, the MAPE is undefined. Third, it is conceptually flawed. The MAPE is the average ratio, when it should be the ratio of the averages. In the opinion of many experts, the Mean Absolute Scaled Error (MASE) is a better metric than the MAPE because it avoids the above problems.

One way to try to fix the MAPE is to Winsorize (bound) the ratio in each period at 100%, which means that the MAPE is defined in the range [0%, 100%]. See the Winsorizing entry for details. However, in this author’s view, this “fix” only treats the symptoms of the problem.

The average MAPE can be misleading as an aggregate measure for a group of items with both low and high demand. For example, imagine a firm with two items. One item is very important with high demand, high unit cost, and low MAPE (e.g., 10%), and the other is a very unimportant item with low demand, low unit cost, and high MAPE (e.g., 90%). When these two MAPE values are averaged, the overall MAPE is 50%. However, this gives too much weight to the low-demand item and not enough to the important item. The weighted MAPE avoids this problem. The weighted average MAPE is defined as $\sum_{i=1}^{N} w_i \text{MAPE}_i \big/ \sum_{i=1}^{N} w_i$, where $w_i$ is the importance weight for item i of N items. The annual cost of goods sold is the best weight to use in this equation.

Like many time series forecasting statistics, the MAPE can be smoothed with the updating equation $\text{SMAPE}_t = \alpha|E_t|/D_t + (1 - \alpha)\text{SMAPE}_{t-1}$. Be sure to Winsorize the $\text{APE}_t$ when implementing this equation.
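The MAPE, the weighted MAPE, and the Winsorized smoothed MAPE can be sketched as shown below. The function names, the `cap` parameter, and the cost of goods sold weights (900 and 100) are hypothetical values chosen for illustration; the forecast error is taken as demand minus forecast.

```python
def ape(demand, forecast, cap=None):
    """Absolute percent error for one period, optionally Winsorized at `cap`."""
    if demand == 0:
        raise ValueError("APE is undefined when demand is zero")
    value = abs(demand - forecast) / demand
    return value if cap is None else min(value, cap)


def mape(demands, forecasts, cap=None):
    """Average of the per-period absolute percent errors."""
    apes = [ape(d, f, cap) for d, f in zip(demands, forecasts)]
    return sum(apes) / len(apes)


def weighted_mape(mapes, weights):
    """Importance-weighted average of item-level MAPE values."""
    return sum(w * m for w, m in zip(weights, mapes)) / sum(weights)


def smoothed_mape(demands, forecasts, alpha, initial, cap=1.0):
    """SMAPE_t = alpha*APE_t + (1 - alpha)*SMAPE_(t-1), with Winsorized APE_t."""
    smape = initial
    for d, f in zip(demands, forecasts):
        smape = alpha * ape(d, f, cap) + (1 - alpha) * smape
    return smape


raw = mape([10, 100], [100, 90])              # (9.0 + 0.1) / 2 = 4.55
bounded = mape([10, 100], [100, 90], cap=1.0)  # (1.0 + 0.1) / 2 = 0.55
overall = weighted_mape([0.10, 0.90], weights=[900, 100])  # 0.18, not 0.50
print(raw, bounded, overall)
```

In this example the single low-demand period dominates the unbounded MAPE, and weighting by importance pulls the two-item aggregate from 50% down to 18%.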

See demand filter, exponential smoothing, forecast bias, forecast error metrics, forecasting, Mean Absolute Deviation (MAD), Mean Absolute Scaled Error (MASE), mean squared error (MSE), Median Absolute Percent Error (MdAPE), Relative Absolute Error (RAE), Theil’s U, tracking signal, Winsorizing.

Mean Absolute Scaled Error (MASE) – A forecast performance metric that is the ratio of the mean absolute deviation for a forecast scaled by (divided by) the mean absolute deviation for a random walk forecast.

Hyndman and Koehler (2006) proposed the MASE as a way to avoid many of the problems with the Mean Absolute Percent Error (MAPE) forecast performance metric. The MASE is the ratio of the mean absolute error for the forecast and the mean absolute error for the random walk forecast. Whereas the MAPE is the average of many ratios, the MASE is the ratio of two averages.

In the forecasting literature, the mean absolute error for the forecast error is called the Mean Absolute Deviation (MAD) and is defined as $\text{MAD} = \frac{1}{T}\sum_{t=1}^{T} |D_t - F_t|$, where $D_t$ is the actual demand and $F_t$ is the forecast for period t. The random walk forecast for period t is the actual demand in the previous period (i.e., $F_t = D_{t-1}$); therefore, the Mean Absolute Deviation for the random walk is $\text{MAD}_{RW} = \frac{1}{T-1}\sum_{t=2}^{T} |D_t - D_{t-1}|$. This assumes a one-period-ahead forecast, but it can easily be modified for a k-period-ahead forecast. The MASE, therefore, is defined as $\text{MASE} = \text{MAD}/\text{MAD}_{RW}$.

The MASE will only have a divide-by-zero problem when the demand does not change over the entire horizon, but that is an unlikely situation. When MASE < 1, the forecasts are better than the random walk forecast; when MASE > 1, the forecasts are worse than the random walk forecast. A forecasting model with a MASE of 20% has a forecast error of 20% of the forecast error of the simplistic random walk forecast. A MASE of 95% is only slightly better than a simplistic random walk forecast.

The Mean Absolute Scaled Accuracy (MASA) is the companion accuracy measure for the MASE and is defined as 1 – MASE. MASA can be interpreted as the percent accuracy of the forecast relative to the accuracy of the random walk forecast. When MASA = 0, the forecasts are no better than the random walk forecast; when MASA < 0, the forecasts are worse than the random walk forecast; and when MASA = 60%, the average absolute forecast error is 40% of the average absolute forecast error for the random walk forecast. MASE and MASA are better measures of forecast accuracy than MAPE because they measure accuracy against an objective standard, which is the random walk forecast.
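A small sketch of the MASE and MASA calculations follows. It assumes a one-period-ahead forecast and averages the random walk errors over the T − 1 periods for which a previous demand exists; the function name `mase` and the demand data are hypothetical.

```python
def mase(demands, forecasts):
    """MAD of the forecast divided by the MAD of the random walk forecast."""
    periods = len(demands)
    mad_forecast = sum(abs(d - f) for d, f in zip(demands, forecasts)) / periods
    # Random walk forecast for period t is the demand in period t - 1.
    mad_random_walk = sum(
        abs(demands[t] - demands[t - 1]) for t in range(1, periods)
    ) / (periods - 1)
    if mad_random_walk == 0:
        raise ValueError("MASE is undefined when demand never changes")
    return mad_forecast / mad_random_walk


demands = [100, 110, 90, 120]
forecasts = [105, 105, 100, 110]
mase_value = mase(demands, forecasts)  # 7.5 / 20.0 = 0.375
masa_value = 1 - mase_value            # 0.625
print(mase_value, masa_value)
```

Here the forecast error is 37.5% of the random walk error, so the forecast is 62.5% more accurate than the random walk benchmark by the MASA measure.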

Hyndman and Koehler (2006, p. 13) assert that measures based on scaled measures (such as the MASE) “should become the standard approach in comparing forecast accuracy across series on different scales.” However, the MASE has two organizational challenges. First, it is not always easy to explain to managers. Second, when changing from MAPE to MASE, those responsible for forecasting will have to explain why the reported forecast accuracy decreases dramatically.

Dan Strike at 3M has suggested a very similar metric that uses a 12-month moving average as the scaling factor. This metric is even simpler than MASE to explain, but may be slightly harder to implement.

Theil’s U3 metric is the mean squared error scaled by the mean squared error for the random walk forecast. Therefore, it can be argued that the “new” MASE scaling concept is just an adaptation of Theil’s metric that uses the MAD rather than the mean squared error (MSE).

MASE can be implemented with simple moving averages or with exponential smoothing for both the numerator (the smoothed MAD) and the denominator (the smoothed MAD for a random walk forecast).

See forecast error metrics, Mean Absolute Percent Error (MAPE), Relative Absolute Error (RAE).

mean squared error (MSE) – The expected value of the square of the difference between an estimator and the parameter.

The MSE measures how far off an estimator is from what it is trying to estimate. In forecasting, the MSE is a measure of the forecast error that is the average of the squared forecast errors and is defined mathematically as $\text{MSE} = \frac{1}{T}\sum_{t=1}^{T} E_t^2$, where $E_t$ is the forecast error in period t and T is the number of observed values. The MSE is an estimate of the variance of the forecast error and is approximately equal to the variance when the forecast bias is close to zero. Like most time series statistics, the MSE can be smoothed with the updating equation $\text{SMSE}_t = \alpha E_t^2 + (1 - \alpha)\text{SMSE}_{t-1}$. The root mean squared error (RMSE) is the square root of the MSE and is an estimate of the standard deviation of the forecast error. In fact, the RMSE will be equal to the standard deviation of the forecast error if the forecast is unbiased. Variances are additive, but standard deviations are not; therefore, RMSE is not normally smoothed.
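The MSE, the RMSE, and a smoothed MSE can be sketched as follows. The smoothing function assumes the same exponential updating form used for the other smoothed error statistics in this book; the function names, the starting value `initial`, and the error values are hypothetical.

```python
import math


def mse(errors):
    """Average of the squared forecast errors."""
    return sum(e * e for e in errors) / len(errors)


def rmse(errors):
    """Square root of the MSE; estimates the standard deviation of the error."""
    return math.sqrt(mse(errors))


def smoothed_mse(errors, alpha, initial):
    """Apply SMSE_t = alpha*E_t^2 + (1 - alpha)*SMSE_(t-1) over all errors."""
    smse = initial
    for error in errors:
        smse = alpha * error * error + (1 - alpha) * smse
    return smse


errors = [3, -4]
print(mse(errors))                                   # 12.5
print(round(rmse(errors), 4))                        # 3.5355
print(smoothed_mse(errors, alpha=0.5, initial=10.0))  # 12.75
```

Because the RMSE itself is not normally smoothed, a current estimate of the standard deviation can be obtained by taking the square root of the smoothed MSE.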

See forecast bias, forecast error metrics, Mean Absolute Deviation (MAD), Mean Absolute Percent Error (MAPE), standard deviation, tracking signal, variance.

Mean Time Between Failure (MTBF) – A maintenance and reliability term for the average time that a component is expected to work without failing.

The MTBF is a good measure of product reliability. The MTBF is often modeled with the bathtub curve that has higher failure rates at the beginning and end of the product life cycle. The Mean Time To Failure (MTTF) is the average time to the first failure and is sometimes used for non-repairable products.

See availability, bathtub curve, maintenance, Mean Time to Repair (MTTR), New Product Development (NPD), reliability, Total Productive Maintenance (TPM).

Mean Time to Repair (MTTR) – A maintenance and reliability term for the average time required to fix something, such as a machine.

The MTTR is a measure of the complexity and cost of a repair job and a measure of the maintainability of a product (Schroeder 2007). The MTTR should not be used as the only performance measure for service techs because the best service techs are often assigned the most difficult repair jobs, which means they will have the highest MTTR. The same is true for doctors and other skilled professionals.

See availability, maintenance, Mean Time Between Failure (MTBF), New Product Development (NPD), serviceability, Total Productive Maintenance (TPM).

Measurement System Analysis (MSA) – An approach for verifying the accuracy and precision of a data measurement system using statistical analysis tools, such as Gauge R&R, attribute Gauge R&R, and the P/T ratio.

See Gauge, Gauge R&R, metrology.

MECE – The concept that an analysis should define issues and alternatives that are mutually exclusive and collectively exhaustive; pronounced “me-see.”

MECE thinking is widely used by strategy consulting firms, such as McKinsey, Bain, and BCG, to create both issue trees and decision trees (Rasiel 1998). In fact, the case interview method these firms use to screen applicants is designed to test MECE thinking.

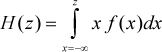

Mutually exclusive means that the ideas or alternatives are distinct and separate and do not overlap. Collectively exhaustive means that the ideas or alternatives cover all possibilities. The Venn diagram below shows two sets (A and B) that overlap and therefore are not mutually exclusive. The union of sets A, B, and C is collectively exhaustive because it covers all possibilities.

In most analyses, it is far more important that issues be separable than MECE. For example, an outgoing shipment could be late because of mechanical problems with a truck or because the products were not ready to ship. These two events are not mutually exclusive, because they both could be true, but they can and should be studied separately.

See causal map, decision tree, issue tree, Minto Pyramid Principle, story board, Y-tree.

median – The middle value of a set of sorted values.

The median, like the mean, is a measure of the central tendency. The calculation of the median begins by sorting the values in a list. If the number of values is odd, the median is the middle value in the sorted list. If the number of values is even, the median is the average of the two middle values in the sorted list. For example, the median of {1, 2, 3, 9, 100} is 3, and the median of {1, 2, 3, 9, 100, 200} is (3 + 9)/2 = 6.

The median is often a better measure of central tendency than the mean when the data is highly skewed. For example, consider the following selling prices for houses (in thousands): $175, $180, $200, $240, $241, $260, $800, and $2400. The mean is $562, but the median is only $240.5. In this case, the mean is “pulled up” by the two high prices.

Excel provides the function MEDIAN(range) for computing the median of a range of values.
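Outside of Excel, the same calculations can be checked with the `statistics` module in the Python standard library. The short sketch below uses an odd-length list {1, 2, 3, 9, 100}, an even-length list {1, 2, 3, 9, 100, 200}, and the house prices from the example above; the variable names are only illustrative.

```python
import statistics

odd_median = statistics.median([1, 2, 3, 9, 100])        # middle value
even_median = statistics.median([1, 2, 3, 9, 100, 200])  # average of 3 and 9

# House selling prices in thousands of dollars.
prices = [175, 180, 200, 240, 241, 260, 800, 2400]
mean_price = statistics.mean(prices)      # pulled up by the two high prices
median_price = statistics.median(prices)  # average of 240 and 241

print(odd_median, even_median, mean_price, median_price)
```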

Some people consider the interpolated median to be better than the median when many values are at the median and the data has a very limited number of possible values. For example, the interpolated median is often used for Likert survey questions on the 1-5 or 1-7 scale and also for grades in the U.S. that are translated from A, A-, B+, etc. to a 4.0 scale.

See box plot, forecast error metrics, interpolated median, mean, Median Absolute Percent Error (MdAPE), mode, skewness, trimmed mean.

Median Absolute Percent Error (MdAPE) – The middle value of all the percentage errors for a data set when the absolute values of the errors (negative signs are ignored) are ordered by size.
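As a rough illustration, the MdAPE can be computed by taking the median of the absolute percent errors. The sketch below assumes the percent error in each period is the absolute forecast error divided by the actual demand; the function name `mdape` and the data are hypothetical.

```python
import statistics


def mdape(demands, forecasts):
    """Median of the absolute percent errors |D_t - F_t| / D_t."""
    apes = [abs(d - f) / d for d, f in zip(demands, forecasts)]
    return statistics.median(apes)


demands = [10, 100, 50]
forecasts = [100, 90, 55]
# The absolute percent errors are 9.0, 0.1, and 0.1.
print(mdape(demands, forecasts))  # 0.1
```

In this example one low-demand period produces a 900% error, which would pull the mean of the three percent errors above 300%, but it has no effect on the median.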

See forecast error metrics, Mean Absolute Deviation (MAD), Mean Absolute Percent Error (MAPE), median.

mergers and acquisitions (M&A) – The activity of one firm evaluating, buying, selling, or combining with another firm.

Whereas an acquisition is the purchase of one company by another, a merger is the combination of two companies to form a new company. In an acquisition, one firm will buy another to gain market share, create greater efficiency through economies of scale, or acquire new technologies or resources.

The goal for both mergers and acquisitions is to create shareholder value. The success of a merger or acquisition depends on whether this value is achieved through (1) growing revenues through synergies between products, markets, or product technologies or (2) economies of scale through headcount reduction, purchasing leverage, IT systems, HR, and other functional synergies. Unfortunately, planned synergies are not always realized, and in some cases revenues decline, morale sags, costs increase, and share prices drop.

See acquisition, antitrust laws, economy of scale.

Metcalfe’s Law – See network effect.

Methods Time Measurement (MTM) – See work measurement.

metrology – The science of measurement; closely related to Measurement System Analysis (MSA).

Metrology attempts to validate data obtained from test equipment and considers precision, accuracy, traceability, and reliability. Metrology, therefore, requires an analysis of the uncertainty of individual measurements to validate instrument accuracy. The dissemination of traceability to consumers (both internal and external) is often performed by a dedicated calibration laboratory with a recognized quality system.

Metrology has been an important topic in commerce since people started measuring length, time, and weight. For example, according to New Unger’s Bible Dictionary, the cubit was an important measure of length among the Hebrews (Exodus 25:10) and other ancient peoples. It was commonly measured as the length of the arm from the point of the elbow to the end of the middle finger, which is roughly 18 inches (45.72 cm).

The scientific revolution required a rational system of units and made it possible to apply science to measurement. Thus, metrology became a driver of the Industrial Revolution and was a critical precursor to systems of mass production. Modern metrology has roots in the French Revolution and is based on the concept of establishing units of measurement based on constants of nature, thus making measurement units widely available. For example, the meter was originally based on the dimensions of the Earth, and the kilogram was originally based on the mass of a cubic decimeter (one liter) of water. The Système International d’Unités (International System of Units or SI) has gained worldwide acceptance as the standard for modern measurement. SI is maintained under the auspices of the Metre Convention and its institutions, the General Conference on Weights and Measures (CGPM), its executive branch, the International Committee for Weights and Measures (CIPM), and its technical institution, the International Bureau of Weights and Measures (BIPM). The U.S. agencies with this responsibility are the National Institute of Standards and Technology (NIST) and the American National Standards Institute (ANSI).

See gauge, Gauge R&R, lean sigma, Measurement System Analysis (MSA), reliability.

milestone – An important event in the timeline for a project, person, or organization.

A milestone event marks the completion of a major deliverable or the start of a new phase for a project. Therefore, milestones are good times to monitor progress with meetings or more formal “stage-gate” reviews that require key stakeholders to decide if the project should be allowed to continue to the next stage. In project scheduling, milestones are activities with zero duration.

See deliverables, project management, stage-gate process, stakeholder.

milk run – A vehicle route to pick up materials from multiple suppliers or to deliver supplies to multiple customers.

The traditional purchasing approach is for customers to send large orders to suppliers on an infrequent basis and for suppliers to ship orders to the customer via a common carrier. With a milk run, the customer sends its own truck to pick up small quantities from many local suppliers on a regular and frequent basis (e.g., once per week). Milk runs speed delivery and reduce inventory for the customer and level the load for the supplier, but at the expense of additional transactions for both parties. Milk runs are common in the automotive industry and are a commonly used lean practice. Hill and Vollmann (1986) developed an optimization model for milk runs.

See lean thinking, logistics, Vehicle Scheduling Problem (VSP).

min/max inventory system – See min-max inventory system.

mindmap – A diagram used to show the relationships between concepts, ideas, and words that are connected to a central concept or idea at one or more levels.

A mindmap is a graphical tool that can be used by an individual or a group to capture, refine, and share information about the relationships between concepts, ideas, words, tasks, or objects that are connected to a central concept or idea at one or more levels in a hierarchy. Mindmaps can be used to:

• Generate ideas

• Capture ideas

• Take course notes

• Provide structure to ideas

• Review and study ideas

• Visualize and clarify relationships

• Help plan meetings and projects

• Organize ideas for papers

• Create the storyboard for a presentation

• Stimulate creativity

• Create a shared understanding

• Create the agenda for a meeting

• Communicate ideas with others

• Teach concepts to others

• Document ideas

• Help make decisions

• Create a work breakdown structure

• Create a task list

• Prioritize activities

• Solve problems

A mindmap represents how one or more people think about a subject. The spatial organization on the paper (or screen) communicates the relationship between the nodes (ideas, concepts, objects, etc.) in the creator’s mind. Creating a mindmap helps the creators translate their thinking about a subject into more concrete ideas, which helps them clarify their own thinking and develop a shared understanding of the concepts. Once created, a mindmap is an excellent way to communicate concepts and relationships to others.

Methods – The concepts on a mindmap are drawn around the central idea. Subordinate concepts are then drawn as branches from those concepts. Buzan and Buzan (1996) recommend that mindmaps be drawn by hand using multiple colors, drawings, and photos. This makes the mindmap easier to remember, more personal, and more fun. They argue further that the mindmap should fit on one piece of paper, but allow it to be a large piece of paper. Many powerful software packages are now available to create mindmaps, including Mind Manager (www.mindjet.com), Inspiration (www.inspiration.com), and many others.

Relationship to other mapping tools – A mindmap is similar to a causal map, except the links in a mindmap usually imply similarity rather than causality. A strategy map is a special type of causal map. A project network shows the time relationships between the nodes and therefore is not a mindmap. A bill of material (BOM) drawing and a work breakdown structure are similar to mindmaps in that they show relatedness and subordinated concepts.

Mindmap example – The example below was created by the author with Mindjet Mind Manager software to brainstorm both the work breakdown structure and the issue tree for a productivity improvement project. The symbol (+) indicates that additional nodes are currently hidden from view.

In conclusion, mindmaps are a powerful tool for visualizing, structuring, and communicating concepts related to a central idea. This author predicts that mindmapping software will become as popular as Microsoft’s process mapping tool (Microsoft Visio) and project management tool (Microsoft Project).

Source: Professor Arthur V. Hill

See causal map, issue tree, process map, project network, strategy map, Work Breakdown Structure (WBS).

Minkowski distance metric – A generalized distance metric that can be used in logistics/transportation analysis, cluster analysis, and other graphical analysis tools.

If location i has coordinates $(x_i, y_i)$, the Euclidean (straight-line) distance between points i and j is $d_{ij} = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2}$, which is based on the Pythagorean Theorem. The Manhattan square distance only considers travel along the x-axis and y-axis and is given by $d_{ij} = |x_i - x_j| + |y_i - y_j|$. The Minkowski distance generalizes these metrics and defines the distance as $d_{ij} = (|x_i - x_j|^r + |y_i - y_j|^r)^{1/r}$. The Minkowski distance metric is equal to the Euclidean distance when r = 2 and the Manhattan square distance when r = 1. Minkowski distance can be defined in multi-dimensional space. Consider item i with K attributes (dimensions) (i.e., $x_{i1}, x_{i2}, \ldots, x_{iK}$). The Minkowski distance between items i and j is then defined as $d_{ij} = \left( \sum_{k=1}^{K} |x_{ik} - x_{jk}|^r \right)^{1/r}$.

The Chebyshev distance (also called the maximum metric and the L∞ metric) sets the distance to the longest dimension (i.e., $d_{ij} = \max(|x_i - x_j|, |y_i - y_j|)$) and is the limit of the Minkowski distance as r → ∞.
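The distance definitions in this entry can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `minkowski_distance` and the sample points are hypothetical, and the check that r is at least 1 is an added assumption (for smaller r the formula no longer satisfies the triangle inequality).

```python
import math


def minkowski_distance(a, b, r):
    """Minkowski distance between two equal-length coordinate sequences.

    r = 1 gives the Manhattan square distance, r = 2 the Euclidean
    distance, and r = math.inf the Chebyshev (maximum) distance.
    """
    if len(a) != len(b):
        raise ValueError("points must have the same number of dimensions")
    if r < 1:
        # Assumption added for this sketch: restrict to r >= 1.
        raise ValueError("r must be at least 1")
    gaps = [abs(p - q) for p, q in zip(a, b)]
    if math.isinf(r):
        # Limiting case: the longest single-dimension gap.
        return max(gaps)
    return sum(g ** r for g in gaps) ** (1.0 / r)


# Hypothetical sample points for illustration.
origin, point = (0, 0), (3, 4)
manhattan = minkowski_distance(origin, point, 1)
euclidean = minkowski_distance(origin, point, 2)
chebyshev = minkowski_distance(origin, point, math.inf)
```

Because the function iterates over all coordinates, the same call works for items with K attributes, such as `minkowski_distance((1, 2, 3), (4, 6, 3), 2)`.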

See cluster analysis, Manhattan square distance, Pythagorean Theorem.

min-max inventory system – An inventory control system that signals the need for a replenishment order to bring the inventory position up to the maximum inventory level (target inventory level) when the inventory position falls below the reorder point (the “min” or minimum level); known as the (s,S) system in the academic literature, labeled (R,T) in this book.

As with all reorder point systems, the reorder point can be determined using statistical analysis of the demand during leadtime distribution or with less mathematical methods. As with all order-up-to systems, the order quantity is the order-up-to level minus the current inventory position, which is defined as (on-hand + on-order – allocated – backorders).

A special case of a min-max system is the (S − 1, S) system, where orders are placed on a “one-for-one” basis. Every time one unit is consumed, another unit is ordered. This policy is most practical for low-demand items (e.g., service parts), high-value items (e.g., medical devices), or long leadtime items (e.g., aircraft engines).
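The ordering rule described above can be sketched as follows. The function and parameter names are hypothetical. The sketch also assumes that an order is triggered when the inventory position is at or below the reorder point, which is the convention under which an (S − 1, S) policy orders one unit each time one unit is consumed; some texts use a strict "below" test instead.

```python
def inventory_position(on_hand, on_order, allocated, backorders):
    """Inventory position as defined in this entry."""
    return on_hand + on_order - allocated - backorders


def min_max_order_quantity(position, reorder_point, max_level):
    """Order up to max_level once the position reaches the reorder point.

    Assumption: the trigger is position <= reorder_point.
    Returns 0 when no order is needed.
    """
    if position <= reorder_point:
        return max_level - position
    return 0


# Hypothetical numbers: min = 30, max = 100.
position = inventory_position(on_hand=20, on_order=10, allocated=5, backorders=0)
order = min_max_order_quantity(position, reorder_point=30, max_level=100)

# (S - 1, S) special case with S = 10: after one unit is consumed the
# position is 9, so one replacement unit is ordered.
one_for_one = min_max_order_quantity(9, reorder_point=9, max_level=10)
```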

See inventory management, order-up-to level, periodic review system, reorder point.

minor setup cost – See major setup cost, setup cost.

Minto Pyramid Principle – A structured approach to building a persuasive argument and presentation developed by Barbara Minto (1996), a former McKinsey consultant.

The Minto Pyramid Principle can improve almost any presentation or persuasive speech. Minto’s main idea is that arguments should be presented in a pyramid structure, starting with the fundamental question (or hypothesis) at the top and cascading down the pyramid, with arguments at one level supported by arguments at the next level down. At each level, the author asks, “How can I support this argument?” and “How do I know that this is true?” The presentation covers all the arguments at one level, and then moves down to the next level to further develop the argument.

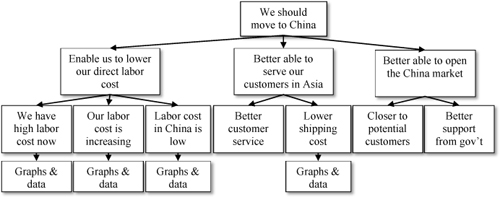

For example, a firm is considering moving manufacturing operations to China. (See the figure below.) At the top of the pyramid is the hypothesis, “We should move part of our manufacturing to China.”

Example of the Minto Pyramid Principle

Source: Professor Arthur V. Hill

From this, the team doing the analysis and making the presentation asks, “Why is it a good idea to move to China?” The answer comes at the next level with three answers: (1) We will have lower labor cost in China, (2) we can better serve our customers in Asia, and (3) locating in China will eventually open new markets for the company’s products in China. The team then asks “Why?” for each of these three items and breaks these out in more detail at the next level. The “enable us to lower our direct labor cost” argument is fully developed before the “better able to serve our customers in Asia” is started.

Minto recommends that the presentation start with an opening statement of the thesis, which consists of a factual summary of the current situation, a complicating factor or uncertainty that the audience cares about, and the explicit or implied question that this factor or uncertainty raises in the audience’s mind and that the presenter’s thesis answers. The closing consists of a restatement of the main thesis, the key supporting arguments (usually the second row of the pyramid), and finally an action plan.

See hypothesis, inductive reasoning, issue tree, MECE, story board.

mission statement – A short statement of an organization’s purpose and aspirations, intended to provide direction and motivation.

Most organizations have a vision statement or mission statement that is intended to define their purpose and raison d’être. However, for many organizations, creating a vision or mission statement is a waste of time. Vision and mission statements are published in the annual report and displayed prominently on the walls, but they are understood by few, remembered by none, and have almost no impact on anyone’s thinking or behavior. Yet this does not have to be the case. Vision and mission statements can be powerful tools for aligning and energizing an entire organization.

Although scholars do not universally agree on the difference between vision and mission statements, most view the vision statement as the more strategic longer-term view and argue that the mission should be derived from the vision. A vision statement should be a short, succinct, and inspiring statement of what the organization intends to become at some point in the future. It is the mental image that describes the organization’s aspirations for the future without specifying the means to achieve those aspirations. The table below presents examples of vision statements that have worked and others that probably would not have worked.

Of course, having a vision, mission, goals, and objectives is not enough. Organizations need to further define competitive strategies and projects to achieve them. A competitive strategy is a plan of action to achieve a competitive advantage. Projects are a means of implementing strategies. Projects require goals and objectives, but also require a project charter, a team, and a schedule. Strategies and projects should be driven by the organization’s vision and mission. The hoshin planning, Y-tree, and strategy mapping entries present important concepts on how to translate a strategy into projects and accountability.

The mission statement translates the vision into more concrete and detailed terms. Many organizations also have values statements dealing with integrity, concern for people, concern for the environment, etc., where the values statement defines constraints and guides all other activities. Of course, the leadership must model the values. Enron’s motto was “Respect, Integrity, Communication and Excellence” and its vision and value statement declared “We treat others as we would like to be treated ourselves ... We do not tolerate abusive or disrespectful treatment. Ruthlessness, callousness and arrogance don’t belong here” (source: http://wiki.answers.com, May 8, 2011). However, Enron’s leadership obviously did not live up to these values.

Goals and objectives are a means of implementing a vision and mission. Although many use the two terms interchangeably, most people define goals as longer term and less tangible than objectives. The table below on the left shows the Kaplan and Norton model (2004), which starts with the mission and then follows with values and vision. This hierarchy puts mission ahead of values and vision. The table below on the right is a comprehensive hierarchy developed by this author that synthesizes many models.

In conclusion, vision and mission statements can be powerful tools to define the organization’s desired end state and to energize the organization to make the vision and mission a reality. To be successful, these statements need to be clear, succinct, passionate, shared, and lived out by the organization’s leaders. They should be supported by a strong set of values that are also lived out by the leadership. Lastly, the vision and mission need to be supported by focused strategies, which are implemented through people and projects aligned with the strategies and mission.

See Balanced Scorecard, forming-storming-norming-performing model, hoshin planning, SMART goals, strategy map, true north, Y-tree.

mistake proofing – See error proofing.

mix flexibility – See flexibility.

mixed integer programming (MIP) – A type of linear programming where some decision variables are restricted to integer values and some are continuous; also called mixed integer linear programming.
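One common way to write such a model is shown below. The symbols are illustrative assumptions rather than notation from this book: x holds the integer decision variables, y holds the continuous ones, and c, d, A, B, and b are given data.

```latex
\begin{aligned}
\min_{x,\,y} \quad & c^{\top} x + d^{\top} y \\
\text{subject to} \quad & A x + B y \le b, \\
& x \in \mathbb{Z}_{\ge 0}^{n}, \quad y \in \mathbb{R}_{\ge 0}^{m}
\end{aligned}
```

If the integer restriction on x is dropped, the model reduces to an ordinary linear program; if there are no continuous variables y, it is a pure integer program.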

See integer programming (IP), linear programming (LP), operations research (OR).

mixed model assembly – The practice of assembling multiple products in small batches in a single process.

For example, a firm assembled two products (A and B) on one assembly line and used large batches to reduce changeover time with sequence AAAAAAAAAAAAAAABBBBBBBBBBBBBB. However, the firm was able to reduce changeover time and cost, which enabled it to economically implement mixed model assembly with sequence ABABABABABABABABABABABABABABA.

The advantages of mixed model assembly over large batch assembly are that it (1) reduces inventory, (2) improves service levels, (3) smooths the production rate, and (4) enables early detection of defects. Its primary disadvantage is that it requires frequent changeovers, which can add complexity and cost.
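A mixed model sequence such as the ABAB pattern in the example above can be generated from the required quantities with a simple greedy leveling rule. The sketch below is one possible rule chosen for illustration, not a method prescribed by this entry, and the function name `level_sequence` is hypothetical. At each position it builds the product that is furthest behind its ideal cumulative share of the run.

```python
def level_sequence(demand):
    """Return a build sequence that spreads each product evenly.

    demand maps a product name to the number of units required.
    Ties go to the product listed first in the mapping.
    """
    total = sum(demand.values())
    built = {product: 0 for product in demand}
    sequence = []
    for position in range(1, total + 1):
        def shortfall(product):
            # Ideal cumulative units minus units built so far, scaled
            # by total so the comparison stays in integer arithmetic.
            return position * demand[product] - built[product] * total

        candidates = [p for p in demand if built[p] < demand[p]]
        chosen = max(candidates, key=shortfall)
        built[chosen] += 1
        sequence.append(chosen)
    return sequence


# Quantities from the example in this entry: 15 units of A, 14 of B.
mixed = "".join(level_sequence({"A": 15, "B": 14}))
```

With these quantities the rule alternates A and B, beginning and ending with A.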

See assembly line, early detection, facility layout, heijunka, manufacturing processes, service level, setup time reduction methods.

mizusumashi – See water spider.

mode – (1) In a statistics context: The most common value in a set of values. (2) In a transportation context: The method of transportation for cargo or people (e.g., rail, road, water, or air).

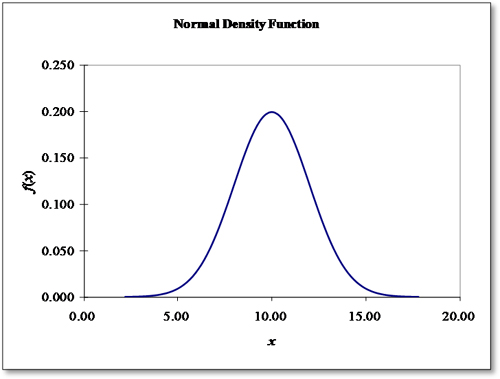

In the statistics context, the mode is a measure of central tendency. For a discrete distribution, the mode is the value where the probability mass function is at its maximum value. In other words, the mode is the value that has the highest probability. For a continuous probability distribution, the mode is the value where the density function is at its maximum value. However, the modal value may not be unique. For symmetrical distributions, such as the normal distribution, the mean, median, and mode are identical.
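The statistical points above can be checked with Python's standard `statistics` module. The data sets are made up for illustration. The `multimode` function returns every value tied for the highest count, which makes a non-unique mode visible.

```python
from statistics import mean, median, multimode

# Hypothetical symmetric sample: mean, median, and mode coincide.
symmetric = [1, 2, 2, 3, 3, 3, 4, 4, 5]
symmetric_mean = mean(symmetric)
symmetric_median = median(symmetric)
symmetric_modes = multimode(symmetric)

# Hypothetical sample with two values tied for most common,
# so the mode is not unique.
bimodal = [1, 1, 2, 3, 3]
bimodal_modes = multimode(bimodal)
```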

In the transportation context, the mode is a type of carrier (e.g., rail, road, water, air). Water transport can be further broken into barge, boat, ship, ferry, or sailboat and can be on a sea, ocean, lake, canal, or river. Intermodal shipments use two or more modes to move from origin to destination.

See intermodal shipments, logistics, mean, median, multi-modal shipments, skewness.

modular design (modularity) – Organizing a complex system as a set of distinct components that can be developed independently and then “plugged” together.

The effectiveness of the modular design depends on the manner in which systems are divided into components and the mechanisms used to plug components together. Modularity is a general systems concept and is a continuum describing the degree to which a system’s components can be separated and recombined. It refers to the tightness of coupling between components and the degree to which the “rules” of the system architecture enable (or prohibit) the mixing and matching of components. Because all systems are characterized by some degree of coupling between components and very few systems have components that are completely inseparable and cannot be recombined, almost all systems are modular to some degree (Schilling 2000).

See agile manufacturing, commonality, interoperability, mass customization.

modularity – See modular design.

mold – A hollow cavity used to make products in a desired shape.

See foundry, manufacturing processes, tooling.

moment of truth – A critical or decisive time on which much depends; in the service quality context, an event that exposes a firm’s authenticity to its customers or employees.

A moment of truth is an opportunity for the firm’s customers (or employees) to find out the truth about the firm’s character. In other words, it is a time for customers or employees to find out “who we really are.” This is a chance for employees (or bosses) to show the customers (or employees) that they really do care about them and to ask customers (or employees) for feedback on how products and services might be improved. These are special moments and should be managed carefully.

When creating a process map, it is important to highlight the process steps that “touch” the customer. A careful analysis of a typical service process often uncovers many more moments of truth than management truly appreciates. Such moments might include a customer phone call regarding a billing problem, a billing statement, and an impression from an advertisement.

People tend to remember their first experience (primacy) and last experience (recency) with a service provider. It is important, therefore, to manage these important moments of truth with great care.