C

C&E diagram – A causal map using the Ishikawa (fishbone) diagram.

See C&E matrix, causal map, lean sigma, Root Cause Analysis (RCA), Root Cause Tree, seven tools of quality.

C&E Matrix – An analysis tool used to collect subjective data to make quantitative estimates of the impact of the Key Process Input Variables (KPIVs) on Key Process Output Variables (KPOVs) to identify the most important KPIVs for a process improvement program; also known as a cause and effect matrix.

In any process improvement program, it is important to determine which Key Process Input Variables (KPIVs) have the most impact on the Key Process Output Variables (KPOVs). The C&E Matrix is a practical way to collect subjective estimates of the importance of the KPIVs on the KPOVs.

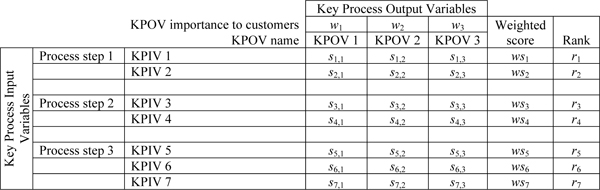

Building the C&E Matrix begins by defining the KPOVs along the columns on the top of the matrix (table) and the KPIVs along the rows on the left side. (See the example below.) Experts then estimate the importance of each KPOV to the customer. This is typically done on a 1-10 scale, where 1 is unimportant and 10 is critically important. Each pair of input and output variables is then scored on a 0-10 scale, where the score is the degree to which the input variable impacts (causes) the output variable. The sum of the weighted scores is then used to rank the input variables to determine which input variables deserve the most attention and analysis.

The example below illustrates the matrix for three KPOVs and seven KPIVs. KPOV 1 to KPOV 3 are the names of the output variables with customer importance weights ($w_1$, $w_2$, $w_3$). The $s_{i,j}$ values are the impact scores for input (KPIV) variable $i$ on output (KPOV) variable $j$. The weighted score for each KPIV is then calculated as the sum of the products of the weights and the impact scores. Stated mathematically, the weighted score for the $i$-th KPIV is defined as $S_i = \sum_{j=1}^{J} w_j s_{i,j}$, where $J$ is the number of KPOVs. These scores are then ranked in the far right column.
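The weighted-score calculation and ranking can be sketched in a few lines of Python. This is a minimal illustration, assuming the weights and impact scores have already been collected from the experts; the function name `ce_matrix_scores` and the sample KPIV names and scores are hypothetical.

```python
def ce_matrix_scores(weights, impact):
    """Weighted score S_i = sum_j w_j * s_ij and rank for each KPIV.

    weights: customer-importance weights w_j for the J KPOVs (1-10 scale)
    impact:  dict mapping each KPIV name to its J impact scores s_ij (0-10 scale)
    Returns a dict mapping KPIV name -> (weighted score, rank); rank 1 is most important.
    """
    scores = {}
    for kpiv, row in impact.items():
        if len(row) != len(weights):
            raise ValueError(f"{kpiv}: expected {len(weights)} impact scores")
        scores[kpiv] = sum(w * s for w, s in zip(weights, row))
    # Sort from highest to lowest weighted score (ties keep their input order)
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {kpiv: (scores[kpiv], rank) for rank, kpiv in enumerate(ordered, start=1)}


# Hypothetical example: three KPOVs and three KPIVs
weights = [10, 7, 3]
impact = {
    "KPIV 1": [9, 3, 0],
    "KPIV 2": [1, 9, 3],
    "KPIV 3": [3, 1, 9],
}
for kpiv, (score, rank) in ce_matrix_scores(weights, impact).items():
    print(kpiv, score, rank)  # KPIV 1 111 1, then KPIV 2 82 2, then KPIV 3 64 3
```

The ranking, not the absolute scores, is what guides where to focus analysis, so any consistent weighting scale gives the same priorities.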

C&E Matrix example

The C&E Matrix is closely related to the C&E Diagram and the causal map. The C&E Matrix is a special type of causal map represented in matrix form that has only one set of input variables (the KPIVs) and one set of output variables (the KPOVs). This can be generalized by including all variables on both rows and columns. This generalized matrix, called the adjacency matrix in the academic literature, allows for input variables to cause other input variables. This matrix can also be represented as a causal map. The “reachability” matrix is an extension of the adjacency matrix and represents how many steps it takes to get from one node to another in the network. Scavarda, Bouzdine-Chameeva, Goldstein, Hays, and Hill (2006) discussed many of these issues.
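Following the description above, the number of steps between nodes can be computed from a 0/1 adjacency matrix with a breadth-first search. This Python sketch assumes each arc counts as one step and that a node reaches itself in zero steps; the name `steps_matrix` is hypothetical, and some of the literature uses a 0/1 form of the reachability matrix that records only whether a path exists.

```python
from collections import deque


def steps_matrix(adj):
    """Minimum number of steps from node i to node j (None if j is unreachable).

    adj: square 0/1 adjacency matrix; adj[i][j] == 1 means variable i directly causes j.
    """
    n = len(adj)
    result = [[None] * n for _ in range(n)]
    for start in range(n):
        result[start][start] = 0
        queue = deque([start])
        while queue:  # breadth-first search finds the fewest steps first
            node = queue.popleft()
            for nxt in range(n):
                if adj[node][nxt] and result[start][nxt] is None:
                    result[start][nxt] = result[start][node] + 1
                    queue.append(nxt)
    return result


# Hypothetical example: input A causes input B, and input B causes output C
adj = [
    [0, 1, 0],  # A -> B
    [0, 0, 1],  # B -> C
    [0, 0, 0],  # C causes nothing
]
print(steps_matrix(adj))  # [[0, 1, 2], [None, 0, 1], [None, None, 0]]
```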

Some firms and consultants confuse a C&E Matrix with the Kepner-Tregoe Model (KT). KT is a simple scoring system for alternative courses of action, where each alternative is scored on a number of different dimensions, and each dimension has an assigned weight. The idea is that the alternative with the highest weighted sum is likely to be the best one. In contrast, the C&E Matrix scores input variables that cause output variables. In the KT Model, the dimensions do not cause the alternatives; they simply evaluate them.

See C&E Diagram, causal map, Kepner-Tregoe Model, Key Process Output Variable (KPOV), lean sigma.

CAD – See Computer Aided Design.

CAD/CAM – See Computer Aided Design/Computer Aided Manufacturing.

CAGR – See Compounded Annual Growth Rate (CAGR).

CAI – See Computer Aided Inspection.

call center – An organization that provides remote customer contact via telephone and may conduct outgoing marketing and telemarketing activities.

A call center can provide customer service such as (1) a help desk operation that provides technical support for software or hardware, (2) a reservations center for a hotel, airline, or other service, (3) a dispatch operation that sends technicians or other servers on service calls, (4) an order entry center that accepts orders for products from customers, and (5) a customer service center that provides other types of customer help.

A well-managed call center can have a major impact on customer relationships and on firm profitability. Call center management software monitors system status (number in queue, average talk time, etc.) and measures customer representative productivity. Advanced systems also provide forecasting and scheduling assistance. Well-managed call centers receive customer requests for help through phone calls, faxes, e-mails, regular mail, and information coming in through the Internet. Representatives respond via interpersonal conversations on the phone, phone messages sent automatically, fax-on-demand, interactive voice responses, and e-mails. Web-based information can provide help by dealing with a large percentage of common problems that customers might have and can provide downloadable files for customers. By taking advantage of integrated voice, video, and data, information can be delivered in a variety of compelling ways that enhance the user experience, encourage customer self-service, and dramatically reduce the cost of providing customer support.

The Erlang C formula is commonly used to determine staffing levels in any time period. (See the queuing theory entry for more information.)
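A minimal Python sketch of Erlang C staffing for one time period follows. It assumes the standard Erlang C conditions (Poisson arrivals, exponentially distributed handle times, and callers who never hang up); the function names and the service-level target in the example (80% of calls answered within 20 seconds) are illustrative assumptions, not part of this entry.

```python
import math


def erlang_c(agents, load):
    """Probability that a caller has to wait (Erlang C).

    agents: number of agents N
    load:   offered load A in Erlangs (arrival rate x average handle time)
    """
    if agents <= load:
        return 1.0  # utilization >= 100%: the queue grows without bound
    # Direct formula; adequate for the moderate staff sizes typical of one period
    top = load ** agents / math.factorial(agents) * agents / (agents - load)
    bottom = sum(load ** k / math.factorial(k) for k in range(agents)) + top
    return top / bottom


def service_level(agents, load, aht, target_wait):
    """Fraction of callers answered within target_wait (same time unit as aht)."""
    p_wait = erlang_c(agents, load)
    return 1.0 - p_wait * math.exp(-(agents - load) * target_wait / aht)


def agents_needed(calls_per_hour, aht_seconds, target_wait_seconds, target_sl):
    """Smallest number of agents that meets the service-level target in one period."""
    load = calls_per_hour * aht_seconds / 3600.0
    agents = math.floor(load) + 1  # need N > A for a stable queue
    while service_level(agents, load, aht_seconds, target_wait_seconds) < target_sl:
        agents += 1
    return agents


# Hypothetical period: 300 calls per hour, 4-minute (240-second) handle time,
# target of 80% of calls answered within 20 seconds
print(agents_needed(300, 240, 20, 0.80))
```

The result is the number of agents needed on the phones; scheduled headcount would then be grossed up for shrinkage.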

See Automatic Call Distributor (ACD), cross-selling, customer service, help desk, knowledge management, order entry, pooling, queuing theory, service management, shrinkage.

CAM – See Computer Aided Manufacturing.

cannibalization – The sales that a new product takes from the firm’s other products.

For example, a new computer model might take sales from an existing model and therefore add nothing to the firm’s overall market share or bottom line.

See market share.

cap and trade – A system of financial incentives put in place by the government to encourage corporations to reduce pollution.

The government issues emission permits that set limits (caps) for the pollution that can be emitted. Companies that produce fewer emissions can sell their excess pollution credits to those that produce more.

See carbon footprint, green manufacturing, sustainability.

capability – See Design for Six Sigma (DFSS), process capability and performance.

Capability Maturity Model (CMM) – A five-level methodology for measuring and improving processes.

CMM began as an approach for evaluating the “maturity” of software development organizations, but has since been extended to other organizations. Many capability maturity models have been developed for software acquisition, project management, new product development, and supply chain management. This discussion focuses on the Capability Maturity Model for Software (SW-CMM), which is one of the best-known products of the Carnegie Mellon University Software Engineering Institute (SEI). CMM is based heavily on the book Managing the Software Process (Humphrey 1989). The actual development of the SW-CMM was done by the SEI at Carnegie Mellon University and the Mitre Corporation in response to a request to provide the federal government with a method for assessing the capability of its software contractors.

The SW-CMM model is used to score an organization on five maturity levels. Each maturity level includes a set of process goals. The five CMM levels follow:

Level 1: Initial – The software process is characterized as ad hoc and occasionally even chaotic. Few processes are defined and success depends on individual effort and heroics. Maturity level 1 success depends on having quality people. In spite of this ad hoc, chaotic environment, maturity level 1 organizations often produce products and services that work; however, they frequently exceed project budgets and schedules. Maturity level 1 organizations are characterized by a tendency to over-commit, abandon processes in times of crisis, repeat past failures, and fail to repeat past successes.

Level 2: Repeatable – Basic project management processes are established to track cost and schedule activities. The minimum process discipline is in place to repeat earlier successes on projects with similar applications and scope. There is still a significant risk of exceeding cost and time estimates. Process discipline helps ensure that existing practices are retained during times of stress. When these practices are in place, projects are performed and managed according to their documented plans. Project status and the delivery of services are visible to management at defined points (for example, at major milestones and at the completion of major tasks).

Level 3: Defined – The software process for both management and engineering activities is documented, standardized, and integrated into a standard software process for the organization. All projects use an approved, tailored version of the organization’s standard software process for developing and maintaining software. A critical distinction between maturity levels 2 and 3 is the scope of standards, process descriptions, and procedures. At level 2, the standards, process descriptions, and procedures may be quite different in each specific instance of the process (for example, on a particular project). At maturity level 3, the standards, process descriptions, and procedures for a project are tailored from the organization’s set of standard processes to suit a particular project or organizational unit.

Level 4: Managed – Detailed measures of the software process and product quality are collected. Both the software process and products are quantitatively understood and controlled. Using precise measurements, management can effectively control the software development effort. In particular, management can identify ways to adjust and adapt the process to particular projects without measurable losses of quality or deviations from specifications. At this level, the organization sets a quantitative quality goal for both the software process and software maintenance. A critical distinction between maturity level 3 and maturity level 4 is the predictability of process performance. At maturity level 4, the process performance is controlled using statistical and other quantitative techniques and is quantitatively predictable.

Level 5: Optimizing – Maturity level 5 focuses on continually improving process performance through both incremental and innovative technological improvements. Quantitative process-improvement objectives for the organization are established, continually revised to reflect changing business objectives, and used as criteria in managing process improvement. The effects of deployed process improvements are measured and evaluated against the quantitative process-improvement objectives. Both the defined processes and the organization’s set of standard processes are targets of measurable improvement activities. Process improvements to address common causes of process variation and measurably improve the organization’s processes are identified, evaluated, and deployed. The organization’s ability to rapidly respond to changes and opportunities is enhanced by finding ways to accelerate and share learning. A critical distinction between maturity level 4 and maturity level 5 is the type of process variation addressed. At level 4, processes are concerned with addressing special causes of process variation and providing statistical predictability of the results. At level 5, processes are concerned with addressing common causes of process variation and changing the process to improve process performance (while maintaining statistical predictability) and achieve the established quantitative process-improvement objectives.

Although these models have proven useful to many organizations, the use of multiple models has been problematic. Further, applying multiple models that are not integrated within and across an organization is costly in terms of training, appraisals, and improvement activities. The CMM Integration project (CMMI) was formed to sort out the problem of using multiple CMMs. The CMMI Product Team’s mission was to combine the following:

• The Capability Maturity Model for Software (SW-CMM) v2.0 draft C

• The Systems Engineering Capability Model (SECM)

• The Integrated Product Development Capability Maturity Model (IPD-CMM) v0.98

• Supplier sourcing

CMMI is the designated successor of the three source models. The SEI has released a policy to sunset the Software CMM and previous versions of the CMMI.

Many of the above concepts are from http://en.wikipedia.org/wiki/Capability_Maturity_Model#Level_1_-_Initial (November 4, 2006).

Interestingly, maturity level 5 is similar to the ideals defined in the lean sigma and lean philosophies.

See common cause variation, lean sigma, lean thinking, operations performance metrics.

capacity – (a) Process context: The maximum rate of output for a process, measured in units of output per unit of time; (b) Space/time/weight context: The maximum space or time available or the maximum weight that can be tolerated.

This entry focuses on definition (a). The unit of time may be of any length (a day, a shift, a minute, etc.). Note that it is redundant (and ignorant) to use the phrase “maximum capacity” because a capacity is a maximum.

Some sources make a distinction between several types of capacities:

Rated capacity, also known as effective capacity, nominal capacity, or calculated capacity, is the expected output rate for a process based on planned hours of operation, efficiency, and utilization. Rated capacity is the product of three variables: hours available, efficiency, and utilization.

Demonstrated capacity, also known as proven capacity, is the output rate that the process has actually been able to sustain over a period of time. However, demonstrated capacity is affected by starving (the process is stopped due to no input), blocking (the process is stopped because it has no room for output), and lack of market demand (we do not use capacity without demand).

Theoretical capacity is the maximum production rate based on mathematical or engineering calculations, which sometimes do not consider all relevant variables; therefore, the actual capacity can be greater than or less than the theoretical value. It is fairly common for factories to work at 110% of their theoretical capacity.
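The rated capacity calculation is simple arithmetic. The Python sketch below assumes hours available are measured per week and that efficiency and utilization are expressed as fractions; the conversion to units per week uses a hypothetical standard time per unit.

```python
def rated_capacity(hours_available, efficiency, utilization):
    """Rated capacity in standard hours = hours available x efficiency x utilization."""
    return hours_available * efficiency * utilization


# Hypothetical workcenter: two 40-hour shifts per week, 95% efficiency, 90% utilization
standard_hours = rated_capacity(80, 0.95, 0.90)  # about 68.4 standard hours per week
units_per_week = standard_hours / 0.5            # assuming 0.5 standard hours per unit
print(round(standard_hours, 1), round(units_per_week))
```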

Capacity should not be confused with load. If an elevator has three people on it, what is its capacity? This is a trick question. The capacity might be 2, 3, 20, or 99. If it has three people on it, the elevator has a current load of three and probably has a capacity of at least three. For many years, this author wrote APICS certification exam questions related to this concept. It was amazing how many people answered this question incorrectly.

The best capacity will minimize the total relevant cost, which is the sum of the capacity and waiting costs. All systems have a trade-off between capacity utilization and waiting time. These two variables have a nonlinear relationship. As utilization goes to 100%, the waiting time tends to go to infinity. Maximizing utilization is not the goal of the organization. The goal of capacity management is to minimize the sum of two relevant costs: the cost of the capacity and the cost of waiting.
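The nonlinear trade-off can be illustrated under a simple queuing assumption. The Python sketch below assumes a single-server M/M/1 queue (Poisson arrivals at rate `lam`, exponential service at rate `mu`), a capacity cost proportional to `mu`, and a waiting cost proportional to the average number of jobs in the system; all names and cost figures are hypothetical. Setting the derivative of the total cost to zero gives the closed form used in `optimal_capacity`.

```python
import math


def total_cost(mu, lam, capacity_cost, waiting_cost):
    """Hourly capacity cost plus waiting cost for an assumed M/M/1 queue.

    mu:  capacity (service rate, jobs per hour); lam: demand (arrival rate, jobs per hour)
    capacity_cost: $ per hour for each job-per-hour of capacity
    waiting_cost:  $ per hour for each job waiting or in service
    """
    if mu <= lam:
        return math.inf  # utilization >= 100%: waiting time goes to infinity
    jobs_in_system = lam / (mu - lam)  # L = rho / (1 - rho) with rho = lam / mu
    return capacity_cost * mu + waiting_cost * jobs_in_system


def optimal_capacity(lam, capacity_cost, waiting_cost):
    """Capacity that minimizes total_cost (from setting its derivative to zero)."""
    return lam + math.sqrt(waiting_cost * lam / capacity_cost)


# Hypothetical copy machine: 8 jobs/hour, $2 per unit of capacity, $50 per job-hour of waiting
lam, c_cap, c_wait = 8.0, 2.0, 50.0
best = optimal_capacity(lam, c_cap, c_wait)
for mu in (8.5, 10.0, best, 40.0):
    cost = total_cost(mu, lam, c_cap, c_wait)
    print(f"capacity={mu:5.1f}  utilization={lam / mu:4.0%}  cost={cost:7.2f}")
```

With these hypothetical numbers, the minimum-cost capacity is about 22.1 jobs per hour, a utilization of roughly 36%, because waiting is expensive relative to capacity.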

For example, the optimal utilization for a fire engine is not 100%, but much closer to 1%. Utilization for an office copy machine should be relatively low because the cost of people waiting is usually higher than the cost of the machine waiting. One humorous question to ask students is: “Should you go make copies to keep the machine utilized?” The answer, of course, is “no” because utilization is not the goal. The goal, therefore, is to find the optimal balance between the cost of the machine and the cost of people waiting.

In contrast, some expensive machines, such as a bottling system, will run three shifts per day 365 days per year. For these systems, the cost of downtime is the lost profit, which can be quite large.

In a manufacturing context, capacity management is executed at four levels: Resource Requirements Planning (RRP), Rough Cut Capacity Planning (RCCP), Capacity Requirements Planning (CRP), and input/output control. See those entries in this encyclopedia to learn more.

The newsvendor model can be used to find the optimal capacity. The model requires that the analyst define a time horizon, estimate the distribution of demand, and estimate the cost of having one unit of capacity too much and the cost of having one unit of capacity too little.
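A minimal newsvendor sketch in Python follows, assuming demand over the time horizon is normally distributed; the cost figures are hypothetical. The optimal capacity is the demand quantile at the critical ratio `cost_too_little / (cost_too_little + cost_too_much)`.

```python
from statistics import NormalDist


def newsvendor_capacity(mean_demand, sd_demand, cost_too_little, cost_too_much):
    """Capacity that minimizes expected shortage plus excess cost (normal demand assumed)."""
    critical_ratio = cost_too_little / (cost_too_little + cost_too_much)
    return NormalDist(mean_demand, sd_demand).inv_cdf(critical_ratio)


# Hypothetical horizon: demand averages 1,000 units/week with a standard deviation of 200;
# one unit of capacity too little costs $30 in lost margin, one unit too much costs $10
print(round(newsvendor_capacity(1000, 200, 30, 10)))  # about 1135 units/week
```

When a shortage costs more than idle capacity, the critical ratio exceeds 50% and the optimal capacity is set above mean demand.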

In some markets, customers can buy capacity rather than products. For example, a customer might buy the capacity of a supplier’s factory for one day per week. This can often help the customer reduce the procurement leadtime. Of course, if the customer does not use the capacity, the supplier will still be paid.

See absorptive capacity, bill of resources, bottleneck, capacity management, Capacity Requirements Planning (CRP), closed-loop MRP, downtime, input/output control, Little’s Law, load, newsvendor model, Overall Equipment Effectiveness (OEE), process design, queuing theory, Resource Requirements Planning (RRP), Rough Cut Capacity Planning (RCCP), safety capacity, utilization, yield management.

capacity cushion – See safety capacity.

capacity management – Planning, building, measuring, and controlling the output rate for a process.

See capacity.

Capacity Requirements Planning (CRP) – The planning process used in conjunction with Materials Requirements Planning (MRP) to convert open and planned shop orders into a load report in planned shop hours for each workcenter.

The CRP process is executed after the MRP planning process has produced the materials plan, which includes the set of all planned and open orders. CRP uses the order start date, order quantity, routing, standard setup times, and standard run times to estimate the number of shop hours required for each workcenter. It is possible for CRP to indicate that a capacity problem exists during specific time periods even when Resource Requirements Planning (RRP) and Rough Cut Capacity Planning (RCCP) have indicated that sufficient capacity is available. This is because RRP and RCCP are not as detailed as CRP with respect to timing the load. The output of the CRP process is the capacity plan, which is a schedule showing the planned load (capacity required) and planned capacity (capacity available) for each workcenter over several days or weeks. This is also called a load profile.
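The core CRP arithmetic (setup time plus order quantity times run time, summed by workcenter and time period) can be sketched in Python. This is a simplification, assuming each routing step is offset from the order start date by a whole number of periods and that each workcenter has the same capacity in every period; real CRP systems schedule operations in more detail. The routing data and function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical routings: item -> [(workcenter, setup hours, run hours per unit, period offset)]
ROUTINGS = {
    "A": [("Saw", 1.0, 0.10, 0), ("Weld", 2.0, 0.25, 1)],
    "B": [("Saw", 0.5, 0.05, 0)],
}


def load_profile(orders, routings):
    """Planned load in shop hours for each (workcenter, period).

    orders: open and planned orders as (item, quantity, start period)
    """
    load = defaultdict(float)
    for item, qty, start in orders:
        for workcenter, setup, run, offset in routings[item]:
            load[(workcenter, start + offset)] += setup + qty * run
    return dict(load)


def overloads(load, capacity):
    """(workcenter, period) cells where planned load exceeds planned capacity."""
    return {cell: hours for cell, hours in load.items() if hours > capacity[cell[0]]}


orders = [("A", 40, 1), ("B", 100, 1), ("A", 20, 2)]
load = load_profile(orders, ROUTINGS)
print(overloads(load, {"Saw": 8.0, "Weld": 10.0}))  # Saw in period 1 and Weld in period 2
```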

See Business Requirements Planning (BRP), capacity, closed-loop MRP, input/output control, Master Production Schedule (MPS), Materials Requirements Planning (MRP), planned order, Resource Requirements Planning (RRP), Rough Cut Capacity Planning (RCCP), routing, Sales & Operations Planning (S&OP).

capacity utilization – See utilization.

CAPEX – An abbreviation for the CAPital EXpenditure used as the initial investment in new machines, equipment, and facilities.

See capital.

capital – Money available for investing in assets that produce output.

See CAPEX, capital intensive.

capital intensive – Requiring a large expenditure of capital in comparison to labor.

A capital intensive industry requires large investments to produce a particular good. Good examples include power generation and oil refining.

See capital, labor intensive.

carbon footprint – A measure of the carbon dioxide (CO2) and other greenhouse gas emissions released into the environment by a person, plant, organization, or state; often expressed as tons of carbon dioxide per year.

The carbon footprint takes into account energy use (heat, cooling, light, power, and refrigeration), transportation, and other means of emitting carbon.

See cap and trade, energy audit, green manufacturing, triple bottom line.

cargo – Goods transported by a vehicle; also called freight.

See logistics, shipping container.

carousel – A rotating materials handling device used to store and retrieve smaller parts for use in a factory or warehouse.

Carousels are often automated and used for picking small parts in a high-volume business. The carousel brings the part location to the picker so the picker does not have to travel to the bin location to store or retrieve a part. Both horizontal and vertical carousels are used in practice. The photo on the right is a vertical carousel.

See Automated Storage & Retrieval System (AS/RS), batch picking, picking, warehouse.

carrier – (1) In a logistics context: An organization that transports goods or people in its own vehicles. (2) In a telecommunications context: An organization that offers communication services. (3) In an insurance context: An organization that provides risk management services.

Carriers may specialize in small packages, less than truck load (LTL), full truck loads (TL), air, rail, or sea. In the U.S., a carrier involved in interstate moves must be licensed by the U.S. Department of Transportation. The shipper is the party that initiates the shipment.

See common carrier, for-hire carrier, less than truck load (LTL), logistics.

carrying charge – The cost of holding inventory per time period, expressed as a percentage of the unit cost.

This parameter is used to help inventory managers make economic trade-offs between inventory levels, order sizes, and other inventory control variables. The carrying charge is usually expressed as the cost of carrying one monetary unit (e.g., dollar) of inventory for one year and therefore has a unit of measure of $/$/year. Reasonable values are in the range of 15-40%.

The carrying charge is the sum of four factors: (1) the marginal cost of capital or the weighted average cost of capital (WACC), (2) a risk premium for obsolete inventory, (3) the storage and administration cost, and (4) a policy adjustment factor. This rate should only reflect costs that vary with the size of the inventory and should not include costs that vary with the number of inventory transactions (orders, receipts, etc.). A good approach for determining if a particular cost driver should be included in the carrying charge is to ask the question, “How will this cost be affected if the inventory is doubled (or halved)?” If the answer is “not at all,” that cost driver is probably not relevant (at least not in the short term). It is difficult to make a precise estimate for the carrying charge. Many firms erroneously set it to the WACC and therefore underestimate the cost of carrying inventory.
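The “doubling” test can be made explicit when summing the components. In the Python sketch below, each candidate cost driver is tagged with whether it would change if the inventory doubled, and only those drivers are summed; the component names and annual rates are hypothetical.

```python
def carrying_charge(components):
    """Annual carrying charge ($/$/year): sum of rates that vary with inventory level.

    components: dict mapping name -> (annual rate as a fraction of unit cost,
                                       True if the cost changes when inventory doubles)
    """
    return sum(rate for rate, varies in components.values() if varies)


# Hypothetical rates for illustration only
components = {
    "cost of capital (WACC)": (0.10, True),
    "obsolescence risk": (0.05, True),
    "storage and administration": (0.06, True),
    "policy adjustment": (0.04, True),
    "purchase order processing": (0.03, False),  # varies with transactions, not inventory
}
rate = carrying_charge(components)
wacc = components["cost of capital (WACC)"][0]
print(f"carrying charge = {rate:.0%}, versus {wacc:.0%} if set to the WACC alone")
```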

See carrying cost, Economic Order Quantity (EOQ), hockey stick effect, inventory turnover, marginal cost, obsolete inventory, production planning, setup cost, shrinkage, weighted average.

carrying cost – The marginal cost of holding one unit of inventory for one period (typically a year).

The carrying cost is usually calculated as the average inventory investment times the carrying charge. For example, if the annual carrying charge is 25% and the average inventory is $100,000, the carrying cost is $25,000 per year. Many firms incorrectly use the end-of-year inventory in this calculation, which is fine if the end-of-year inventory is close to the average inventory during the year. However, it is quite common for firms to have a “hockey stick” sales and shipment pattern where the end-of-year inventory is significantly less than the average inventory during the year. Technically, this type of average is called a “time-integrated average” and can be estimated fairly accurately by averaging the inventory at a number of points during the year.
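The difference between a time-integrated average and a year-end snapshot can be shown with a short Python sketch. It assumes inventory investment is sampled at equally spaced points (here, the end of each month) and uses hypothetical values with a hockey-stick drop at year-end.

```python
def carrying_cost(inventory_samples, carrying_charge):
    """Annual carrying cost = time-integrated average inventory x carrying charge.

    inventory_samples: inventory investment ($) at equally spaced points in the year
    carrying_charge:   annual carrying charge as a fraction ($/$/year)
    """
    average_inventory = sum(inventory_samples) / len(inventory_samples)
    return average_inventory * carrying_charge


# Hypothetical month-end inventory: $120,000 for 11 months, then $60,000 at year-end
monthly = [120_000] * 11 + [60_000]
print(carrying_cost(monthly, 0.25))        # 28750.0, based on the $115,000 average
print(carrying_cost([monthly[-1]], 0.25))  # 15000.0 if only year-end inventory is used
```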

Manufacturing and inventory managers must carefully apply managerial accounting principles in decision making. A critical element in many decisions is the estimation of the inventory carrying cost, sometimes called the “holding cost.” Typical decisions include the following:

• Service level trade-off decisions – Many make-to-stock manufacturers, distributors, and retailers sell standard products from inventory to customers who arrive randomly. In these situations, the firm’s service level improves with a larger finished goods inventory. Therefore, trade-offs have to be made between inventory carrying cost and service.

• Lot size decisions – A small order size requires a firm to place many orders, which results in a small “cycle” inventory. Assuming instantaneous delivery with order quantity Q, the average cycle inventory is approximately Q/2. Even though small manufacturing order sizes provide low average cycle inventory, they require more setups, which, in turn, may require significant capacity leading to a large queue inventory and a large overall carrying cost.

• Hedging and quantity discount decisions – Inventory carrying cost is also an important issue when evaluating opportunities to buy in large quantities or buy early to get a lower price. These decisions require a total cost model to evaluate the economic trade-offs between the carrying cost and the purchase price.

• In-sourcing versus outsourcing decisions – When trying to decide if a component or a product should be manufactured in-house or purchased from a supplier, the inventory carrying cost is often a significant factor. Due to longer leadtimes, firms generally increase inventory levels to support an outsourcing decision. It is important that the proper carrying cost be used to support this analysis.
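For the lot size decision, the classic Economic Order Quantity (EOQ) model shows how the carrying charge enters the trade-off. The Python sketch below assumes constant annual demand, a fixed cost per order or setup, and a carrying cost per unit per year equal to the carrying charge times the unit cost; the numbers are hypothetical. It ignores the capacity and queue-inventory effects of frequent setups described in the lot size bullet.

```python
import math


def annual_cost(q, demand, order_cost, carrying_charge, unit_cost):
    """Ordering cost (D/Q x S) plus cycle-inventory carrying cost (Q/2 x i x c)."""
    return demand / q * order_cost + q / 2 * carrying_charge * unit_cost


def eoq(demand, order_cost, carrying_charge, unit_cost):
    """Order quantity that minimizes annual_cost: sqrt(2DS / (ic))."""
    return math.sqrt(2 * demand * order_cost / (carrying_charge * unit_cost))


# Hypothetical item: 1,200 units/year, $50 per order, 25% carrying charge, $20 unit cost
q_star = eoq(1200, 50, 0.25, 20)  # about 155 units
for q in (50, q_star, 400):
    print(f"Q={q:6.1f}  annual cost={annual_cost(q, 1200, 50, 0.25, 20):8.2f}")
```

Because the carrying charge sits in the denominator, underestimating it (for example, by using the WACC alone) inflates the recommended lot size.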

The above decisions require a “managerial economics” approach to decision making, which means that the only costs that should be considered are those costs that vary directly with the amount of inventory. All other costs are irrelevant to the decision.

The carrying charge is a function of the following four variables:

• Cost of capital – Firms have alternative uses for money. The cost of capital reflects the opportunity cost of the money tied up in the inventory.

• Storage cost – The firm should consider only those storage costs that vary with the inventory level. These costs include warehousing, handling, insurance and taxes, depreciation, and shrinkage.

• Obsolescence risk – The risk of obsolescence tends to increase with inventory, particularly for firms that deal with high technology products, such as computers, or perishable products, such as food.

• Policy adjustment – This component of the carrying charge reflects management’s desire to pursue a particular inventory policy and is based on management intuition rather than hard data.

When estimating the unit cost for the carrying cost calculation, most authors argue for ignoring allocated overhead. However, given that it is difficult for most organizations to know their unit cost without overhead, it is reasonable for them to use the fully burdened cost and use the appropriate carrying charge that does not double count handling, storage, or other overhead costs.

See carrying charge, Economic Order Quantity (EOQ), hockey stick effect, Inventory Dollar Days (IDD), inventory management, inventory turnover, marginal cost, materials management, opportunity cost, outsourcing, overhead, production planning, quantity discount, service level, setup cost, shrinkage, weighted average.

cash cow – A firm or business unit that holds a strong position in a mature industry and is being “milked” to provide cash for other business units; because its industry is mature, the cash cow is often not a good place for significant new investment.

The BCG Growth-Share Matrix6

Adapted by Professor Arthur V. Hill

The Boston Consulting Group (BCG) Growth-Share Matrix can help managers set investment priorities for the product portfolio in a business unit or for business units in a multidivisional firm. Stars are high growth/high market share products or business units. Cash cows have low growth but high market share and can be used to supply cash to the firm. Dogs have low growth and low market share and should be either fixed or liquidated. Question marks have high growth, but low market share; a few of these should be targeted for investment while the others should be divested. Stern and Deimler (2006) and Porter (1985) provide more detail.
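The four quadrants can be expressed as a simple classification rule. The Python sketch below is illustrative only; the cutoffs (10% market growth and a relative market share of 1.0, meaning the unit is as large as its largest competitor) are assumptions often used with the matrix, not values given in this entry, and the example units are hypothetical.

```python
def bcg_quadrant(market_growth, relative_share, growth_cutoff=0.10, share_cutoff=1.0):
    """Classify a product or business unit into a BCG Growth-Share quadrant.

    market_growth:  annual growth rate of the market (0.15 means 15%)
    relative_share: the unit's market share divided by that of its largest competitor
    The default cutoffs are assumptions and can be changed.
    """
    high_growth = market_growth >= growth_cutoff
    high_share = relative_share >= share_cutoff
    if high_growth and high_share:
        return "star"
    if high_share:
        return "cash cow"
    if high_growth:
        return "question mark"
    return "dog"


# Hypothetical business units: (market growth, relative market share)
units = {"Unit W": (0.18, 1.5), "Unit X": (0.03, 2.0), "Unit Y": (0.20, 0.4), "Unit Z": (0.02, 0.3)}
for name, (growth, share) in units.items():
    print(name, bcg_quadrant(growth, share))
```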

See operations strategy.

Cash on Delivery (COD) – Contract terms that require payment for goods and transportation at time of delivery.

casting – See foundry.

catchball – A lean term used to describe an iterative top-down/bottom-up process in which plans are “thrown” back and forth between two levels in an organization until the participants at the different levels come to a shared understanding.

The term “catchball” is a metaphor used in the hoshin planning process for participative give-and-take discussions between two levels of an organization when creating a strategic plan. Catchball can help an organization achieve buy-in, find agreement, build a shared understanding, and create detailed execution plans for both the development and execution of a strategy. Catchball is “played” between multiple levels of the organization to ensure that the strategic plan is embraced and executable at every level.

The strategic planning process in many organizations has two common issues: (1) the plan is difficult to implement because the higher-level management is out of touch with the details, and (2) the lower-level managers do not understand the rationale behind the plan and therefore either passively resist or actively oppose the new strategy. With catchball, higher-level management develops a high-level plan and then communicates with the next layer of management to gain understanding and buy-in. Compared to other types of strategic planning, catchball usually results in strategic plans that are embraced by a wider group of managers and are more realistic (i.e., easier to implement).

Catchball essentially has two steps: (1) conduct an interactive session early in the planning process to give the next level of management the opportunity to ask questions, provide feedback, and challenge specific items, and (2) have the next level managers develop an execution plan for the higher-level initiatives and toss it back to the higher-level managers for feedback and challenges.

Catchball is comparable to agile software development where software ideas are tested early and often, which leads to better software. The same is true for strategy development with catchball where strategic plans are tested early and often. Both concepts are similar to the idea of small lot sizes and early detection of defects.

See agile software development, early detection, hoshin planning, lean thinking, operations strategy, prototype.

category captain – A role given by a retailer to a representative from a supplier to help the retailer manage a category of products.

It is a common practice for retailers in North America to select one supplier in a category as the category captain. A retailer usually (but not always) selects the category captain as the supplier with the highest sales in the category. The supplier then selects an individual among its employees to serve in the category captain’s role. Traditionally, the category captain is a branded supplier; however, the role is sometimes also given to private label suppliers. Category captains often have their offices at the customer’s sites.

The category captain is expected to work closely with the retailer to provide three types of services:

Analyze data:

• Analyze category and channel data to develop consumer and business insights across the entire category.

• Perform competitive analyses by category, brand, and package to identify trends.

• Analyze consumer purchasing behavior by demographic profile to understand consumer trends by channel.

• Audit stores.

Develop and present plans:

• Develop business plans and strategies for achieving category volume, margin, share, space, and profit targets.

• Help the retailer build the planogram for the category.

• Prepare and present category performance reviews for the retailer.

• Participate in presentations as a credible expert in the category.

• Respond quickly to the retailer’s requests for information.

• Provide the retailer with information on shelf allocation in a rapidly changing environment.

• Educate the retailer’s sales teams.

• Advise the retailer on shelving standards and associated software tools.

Craig Johnson, a Kimberly-Clark category captain at Target, adds, “Most category captains also have responsibilities within their own company as well and have to balance the needs of their retailer with internal company needs. It can get hairy sometimes.”7

The category captain must focus on growing the category, even if it requires promoting competing products and brands. This practice will lead to the growth of the entire category and therefore be in the best interests of the supplier. In return for this special relationship, the supplier will have an influential voice with the retailer. However, the supplier must be careful never to abuse this relationship or violate any antitrust laws.

The category captain is usually given access to the retailer’s proprietary information, such as point-of-sale (POS) data. This information includes sales data for all suppliers that compete in the category. The retailer and category captain will usually have an explicit agreement that requires the captain to use this data only for category management and not share it with anyone in the supplier’s company.

Retailers will sometimes assign a second competing supplier as a category adviser called the “category validator.” In theory, the validator role was created as a way for the retailer to compare and evaluate the category captain’s recommendations. In practice, however, the adviser is usually used by the retailer as another category management resource. The adviser conducts ad hoc category analyses at the request of the retailer, but does not usually duplicate the services provided by the captain.

See antitrust laws, assortment, category killer, category management, consumable goods, consumer packaged goods, Fast Moving Consumer Goods (FMCG), planogram, Point-of-Sale (POS), private label.

category killer – A term used in marketing and strategic management to describe a dominant product or service that tends to have a natural monopoly in a market.

One example of a category killer is eBay, an on-line auction website that attracts large numbers of buyers and sellers simply because it is the largest on-line auction. Other examples include “big box” retailers, such as Home Depot, that tend to drive smaller “mom and pop” retailers out of business.

See big box store, category captain, category management.

category management – The retail practice of segmenting items (SKUs) into groups called categories to make it easier to manage assortments, inventories, shelf-space allocation, promotions, and purchases.

Benefits claimed for a good category management system include increased sales due to better space allocation and better stocking levels, lower cost due to lower inventories, and increased customer retention due to better matching of supply and demand. Category management in retailing is analogous to the commodity management function in purchasing.

See assortment, brand, category captain, category killer, commodity, consumable goods, consumer packaged goods, Fast Moving Consumer Goods (FMCG), private label.

category validator – See category captain.

causal forecasting – See econometric forecasting, forecasting.

causal map – A graphical tool often used for identifying the root causes of a problem; also known as a cause and effect diagram (C&E Diagram), Ishikawa Diagram, fishbone diagram, cause map, impact wheel, root cause tree, fault tree analysis, and current reality trees.

A causal map is a diagram that shows the cause and effect relationships in a system. Causal maps can add value to organizations in many ways:

• Process improvement and problem solving – Causal maps are a powerful tool for gaining a deep understanding of any problem. As the old adage goes, “A problem well-defined is a problem half-solved.” The causal map is a great way to help organizations understand the system of causes that result in blocked goals and then find solutions to the real problems rather than just the symptoms of the problems. People think in “visual” ways. A good causal map is worth 1000 words and can significantly reduce meeting time and the time to achieve the benefit from a process improvement project.

• Supporting risk mitigation efforts – Causal maps are a powerful tool for helping firms identify possible causes of problems and develop risk mitigation strategies for these possible causes.

• Gaining consensus – The brainstorming process of creating a causal map is also a powerful tool for “gaining a shared understanding” of how a system works. The discussion, debate, and deliberation process in building a causal map is often more important than the map itself.

• Training and teaching – A good causal map can dramatically reduce the time required to communicate complex relationships for training, teaching, and documentation purposes.

• Identifying the critical metrics – Many organizations have too many metrics, which causes managers to lose sight of the critical variables in the system. A good causal map can help managers identify the critical variables that drive performance and require high-level attention. Focusing on these few critical metrics leads to strategic alignment, which in turn leads to organizational success.

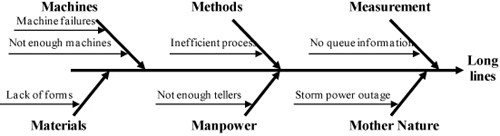

Many types of causal maps are used in practice, including Ishikawa Diagrams (also known as fishbone diagrams, cause and effect diagrams, and C&E Diagrams), impact wheels (from one cause to many effects), root cause trees (from one effect to many causes), and strategy maps. The Ishikawa Diagram was developed by Dr. Kaoru Ishikawa (1915-1989) and is by far the most popular form of a causal map. The Ishikawa Diagram is a special type of a causal map that shows the relationships between the problem (at the “head” of the fishbone on the right) and the potential causes of a problem. The figure below is a simple example of an Ishikawa Diagram for analyzing long waiting lines for tellers in a bank.

The Ishikawa Diagram is usually developed in a brainstorming context. The process begins by placing the name of a basic problem of interest at the far right of the diagram at the “head” of the main “backbone” of the fish. The main causes of the problem are drawn as bones off the main backbone. Many firms prescribe six causes: machines (equipment), methods, measurement, materials, manpower (labor), and Mother Nature (environment). However, many argue that this list is overly confining and a bit sexist. Brainstorming is typically done to add possible causes to the main bones and more specific causes to the bones on the main bones. This subdivision into ever-increasing specificity continues as long as the problem areas can be further subdivided. The practical maximum depth of this tree is usually about four or five levels.

Ishikawa Diagram example

Source: Arthur V. Hill

The Ishikawa Diagram is limited in many ways:

• It can only analyze one output variable at a time.

• Some people have trouble working backward from the problem on the far right side of the page.

• It is hard to read and even harder to draw, especially when the problem requires many levels of causation.

• The diagram is also hard to create on a computer.

• The alliteration of Ms is sexist with the terms “manpower” and “Mother Nature.”

• The six Ms rarely include all possible causes of a problem.

Causal maps do not have any of these limitations.

The Ishikawa Diagram starts with the result on the right. With root cause trees, current reality trees (Goldratt 1994), and strategy maps (Kaplan & Norton 2004), the result is usually written at the top of the page. With FMEA and issue trees, the result is written on the left and then broken into its root causes on the right.

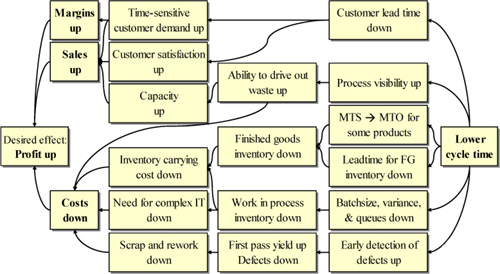

The causal map diagram below summarizes the 304-page book Competing Against Time (Stalk & Hout 2003) in less than a half-page. This is a strategy map using a causal map format rather than the Kaplan and Norton (1992) four-perspective strategy map format. This time-based competition strategy reduces cycle times, cost, and customer leadtimes. Reducing customer leadtimes segments the market, leaving the price-sensitive customers to the competition. This strategy map could be taken a step further to show how the firm could achieve lower cycle time through setup time reduction, vendor relationships, 5S, and plant re-layout.

Source: Professor Arthur V. Hill

Regardless of the format used, these diagrams are usually created through a brainstorming process, often with the help of 3M Post-it Notes. The team brainstorms to identify the root causes of each node. The process continues until all causes (nodes) and relationships (arcs) have been identified. It is possible that “loops” will occur in the diagram. A loop can occur for a vicious cycle or virtuous cycle. For example, a vicious cycle occurs when an alcoholic person drinks and is criticized by family members, which may, in turn, cause him or her to drink even more, be criticized further, etc. A virtuous cycle is similar, except that the result is positive instead of negative.

Scavarda, Bouzdine-Chameeva, Goldstein, Hays, and Hill (2006) developed a method for building collective causal maps with a group of experts. This method assigns weights to each causal relationship and results in a meaningful graphical causal map, with the more important causal relationships shown with darker arrows.

Many software tools are available to help brainstorm and document the cause and effect diagrams. However, Galley (2008) insists that Excel is the best tool.

The C&E Matrix provides a means for experts to assign weights to certain causal input variables. The same can be done with a causal map. All that needs to be done is to score each input variable on several dimensions that are important to customers and then create a weighted score for each input variable. Priority is then given to those variables that are believed to have the most impact on customers. Alternatively, experts can “vote” (using multi-voting) for the causes that they believe should be the focus for further analysis.
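The scoring step described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method. It assumes that each input variable (cause) is scored 0-10 on each customer-relevant dimension and that each dimension carries a 1-10 importance weight, following the same convention as the C&E Matrix. The cause names, weights, and scores are hypothetical and are loosely based on the bank teller waiting-line example.

```python
# Hypothetical data: importance weights (1-10) for three customer-relevant
# dimensions, and 0-10 impact scores for each cause on each dimension.
EXAMPLE_WEIGHTS = [10, 6, 3]
EXAMPLE_SCORES = {
    "too few tellers": [9, 3, 1],
    "slow computer system": [6, 6, 0],
    "complex transactions": [3, 1, 9],
}


def weighted_scores(weights, scores):
    """Weighted score for each cause: sum over dimensions of weight x score."""
    return {
        cause: sum(w * s for w, s in zip(weights, row))
        for cause, row in scores.items()
    }


def prioritize(weights, scores):
    """Causes ranked from highest to lowest weighted score."""
    totals = weighted_scores(weights, scores)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    ranked = prioritize(EXAMPLE_WEIGHTS, EXAMPLE_SCORES)
    for rank, (cause, total) in enumerate(ranked, start=1):
        print(f"{rank}. {cause}: {total}")
```

With these made-up numbers, "too few tellers" ranks first (111), followed by "slow computer system" (96) and "complex transactions" (63). With multi-voting, the vote counts would take the place of the weighted scores.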

Causal maps should not be confused with concept maps, knowledge maps, and mindmaps that nearly always show similarities (but not causality) between objects. For example, monkeys and apes are very similar, but monkeys do not cause apes. Therefore, a knowledge map would show a strong connection between monkeys and apes, but a causal map would not.

Hill (2011b, 2011c) provides more detail on this subject.

See 5 Whys, affinity diagram, Analytic Hierarchy Process (AHP), balanced scorecard, brainstorming, C&E Diagram, C&E Matrix, current reality tree, decision tree, Failure Mode and Effects Analysis (FMEA), fault tree analysis, future reality tree, hoshin planning, ideation, impact wheel, issue tree, lean sigma, MECE, mindmap, Nominal Group Technique (NGT), Pareto Chart, Pareto’s Law, parking lot, process map, quality management, Root Cause Analysis (RCA), root cause tree, sentinel event, seven tools of quality, strategy map, Total Quality Management (TQM), value stream map, Y-tree.

cause and effect diagram – See causal map.

cause map – A trademarked term for a causal map coined by Mark Galley of ThinkReliability.

Cause mapping is a registered trademark of Novem, Inc., doing business as ThinkReliability (www.thinkreliability.com).

See causal map.

caveat emptor – A Latin phrase that means “buyer beware” or “let the buyer beware.”

This phrase means that the buyer (not the seller) is at risk with a purchase decision. The phrase “caveat venditor” is Latin for “let the seller beware.”

See service guarantee, warranty.

CBT – See Computer Based Training (CBT).

c-chart – A quality control chart used to display and monitor the number of defects per sample (or per batch, per day, etc.) in a production process.

Whereas a p-chart controls the percentage of units that are defective, a c-chart controls the number of defects per unit. Note that one unit can have multiple defects. The Poisson distribution is typically used for c-charts, which suggests that defects are rare.
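The entry does not give the control limits. The Python sketch below therefore uses the common textbook convention, which is stated here as an assumption. The center line is the average count (c-bar). Because the Poisson variance equals its mean, the limits are c-bar ± 3√c-bar, with the lower limit truncated at zero. The baseline counts are hypothetical.

```python
import math


def c_chart_limits(defect_counts, z=3.0):
    """Return (LCL, center line, UCL) for a c-chart.

    Assumes defects per sample are Poisson, so the standard deviation is
    the square root of the mean count.
    """
    c_bar = sum(defect_counts) / len(defect_counts)
    sigma = math.sqrt(c_bar)
    lcl = max(0.0, c_bar - z * sigma)  # a defect count cannot be negative
    ucl = c_bar + z * sigma
    return lcl, c_bar, ucl


def out_of_control(new_counts, limits):
    """Indices of new samples whose defect counts fall outside the limits."""
    lcl, _, ucl = limits
    return [i for i, c in enumerate(new_counts) if c < lcl or c > ucl]


if __name__ == "__main__":
    baseline = [3, 5, 4, 2, 6, 4, 3, 5, 4, 4]  # hypothetical defects per sample
    limits = c_chart_limits(baseline)
    print("LCL, CL, UCL:", limits)  # (0.0, 4.0, 10.0)
    print("Signals:", out_of_control([7, 12, 0], limits))  # [1]
```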

See attribute, control chart, Poisson distribution, Statistical Process Control (SPC), Statistical Quality Control (SQC), u-chart.

CDF – See probability density function.

cell – See cellular manufacturing.

cellular manufacturing – The use of a group of machines dedicated to processing parts, part families, or product families that require a similar sequence of operations.

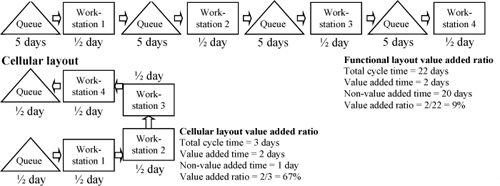

Concept – In a traditional functional (process) layout, machines and workers are arranged in workcenters by function (e.g., drills or lathes), large batches of parts are moved between workcenters, and workers receive limited cross-training on the one type of machine in their workcenter. With cellular manufacturing, machines and workers are dedicated to making a particular type of product or part family. The machines in the cell, therefore, are laid out in the sequence required to make that product. With cells, materials are moved in small batches and workers are cross-trained on multiple machines and process steps. Cells are often organized in a U-shaped layout so workers inside the “U” can communicate and help each other as needed. The figure below contrasts functional and cellular layouts showing that cells can have a much higher value added ratio.

Functional (process) layout

Source: Arthur V. Hill

Advantages of a cell over a functional layout – The advantages of a cell over a functional layout include reduced cycle time, travel time, setup time, queue time, work-in-process inventory, space, and materials handling cost. Reduced cycle time allows for quicker detection of defects and simpler scheduling. When firms create cells, they often cross-train workers in a cell, which leads to better engagement, morale, and labor productivity. Some firms also implement self-managed work teams to develop workers, reduce overhead, and accelerate process improvement.

Disadvantages of a cell over a functional layout – The main disadvantage of a cell is that the machines dedicated to a cell may not have sufficient utilization to justify the capital expense. Consequently, cellular manufacturing is often difficult to implement in a facility that uses large expensive machines. When a firm has one very large expensive machine, it might still use cells for all other steps.

Professors Nancy Hyer and Urban Wemmerlov wrote a book and an article on using cells for administrative work (Hyer & Wemmerlov 2002a, 2002b).

See automation, Chaku-Chaku, cross-training, facility layout, Flexible Manufacturing System (FMS), focused factory, group technology, handoff, job design, lean thinking, product family, utilization, value added ratio, workcenter, Work-in-Process (WIP) inventory.

CEMS (Contract Electronics Manufacturing Services) – See contract manufacturer.

censored data – Data that is incomplete because it does not include a subpopulation of the data.

A good example of censored data is demand data that does not include data for lost sales. A retailer reports that the sales were 100 for a particular date. However, the firm ran out of stock during the day and does not have information on how many units were demanded but not sold due to lack of inventory. The demand data for this firm is said to be “censored.”

See demand, forecasting.

centered moving average – See exponential smoothing, moving average.

center-of-gravity model for facility location – A method for locating a single facility on an x-y coordinate system to attempt to minimize the weighted travel distances; also called the center of mass or centroid model.

This is called the “infinite set” facility location problem because the “depot” can be located at any point on the x-y coordinate axis. The model treats the x and y dimensions independently and finds the first moment in each dimension. The one depot serves N markets with locations at coordinates (xi, yi) and demands Di units.

The center-of-gravity location for the depot is then $x^* = \sum_{i=1}^{N} D_i x_i \big/ \sum_{i=1}^{N} D_i$ and $y^* = \sum_{i=1}^{N} D_i y_i \big/ \sum_{i=1}^{N} D_i$.

This model does not guarantee optimality and can only locate a single depot. Center-of-gravity locations can be far from optimal. In contrast, the numeric-analytic location model guarantees optimality for a single depot location, can be extended (heuristically) to multiple depots, and can also be extended (heuristically) to multiple depots with latitude and longitude data.
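The center-of-gravity formulas can be sketched in Python. This sketch also shows how far the result can be from optimal. It is an illustration only: it assumes that travel cost is demand times straight-line (Euclidean) distance, uses hypothetical market data, and does not implement the numeric-analytic location model mentioned above.

```python
import math


def center_of_gravity(markets):
    """Demand-weighted mean location of markets given as (x, y, demand)."""
    total_demand = sum(d for _, _, d in markets)
    x_star = sum(x * d for x, _, d in markets) / total_demand
    y_star = sum(y * d for _, y, d in markets) / total_demand
    return x_star, y_star


def weighted_distance(depot, markets):
    """Total demand-weighted straight-line (Euclidean) distance from a depot."""
    dx, dy = depot
    return sum(d * math.hypot(x - dx, y - dy) for x, y, d in markets)


if __name__ == "__main__":
    # Hypothetical data: two units of demand at (0, 0), one unit at (10, 0).
    markets = [(0.0, 0.0, 2.0), (10.0, 0.0, 1.0)]
    cog = center_of_gravity(markets)
    print("center of gravity:", cog)
    print("cost at center of gravity:", weighted_distance(cog, markets))
    print("cost at (0, 0):", weighted_distance((0.0, 0.0), markets))
```

The center of gravity lands at x = 10/3, with a weighted distance of about 13.3. Simply placing the depot at the larger market, (0, 0), gives a weighted distance of 10.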

See facility location, gravity model for competitive retail store location, numeric-analytic location model.

central limit theorem – An important probability theory concept that can be stated informally as “The sum or average of many independent random variables will be approximately normally distributed.”

For example, the first panel in the figure below shows a probability distribution (density function) that is clearly non-normal. (This is the triangular density function.) The second figure shows the distribution of a random variable that is the average of two independent random variates drawn from the first distribution.

The third and fourth figures show the probability distributions when the number of random variates in the average increases to four and eight. In each successive figure, the distribution for the average of the random variates is closer to normal. This example shows that as the number of random variates in the average increases, the distribution of the average (and the sum) converges to the normal distribution.

Central limit theorem example

Source: Professor Arthur V. Hill
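The convergence in the figure can be reproduced with a small simulation. The Python sketch below is an illustration under assumptions not stated in the entry. It uses a triangular density on [0, 1] with its mode at 0, which has variance 1/18 and skewness of about 0.57. The number of trials and the seed are arbitrary. As n grows, the variance of the average should track 1/(18n) and the skewness should shrink toward 0, the value for a normal distribution.

```python
import random
import statistics


def sample_averages(n, trials, seed=1):
    """Averages of n independent triangular(0, 1, mode=0) draws, repeated `trials` times."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.triangular(0.0, 1.0, 0.0) for _ in range(n))
        for _ in range(trials)
    ]


def skewness(values):
    """Sample skewness; 0 for a symmetric distribution such as the normal."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values, m)
    return statistics.fmean(((v - m) / s) ** 3 for v in values)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        averages = sample_averages(n, trials=20000)
        print(f"n={n}: variance={statistics.pvariance(averages):.5f} "
              f"(theory {1 / (18 * n):.5f}), skewness={skewness(averages):.3f}")
```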

See confidence interval, Law of Large Numbers, normal distribution, sample size calculation, sampling, sampling distribution.

certification – See supplier qualification and certification.

CGS (Cost of Goods Sold) – See cost of goods sold.

chain of custody – See traceability.

Chaku-Chaku – The Japanese phrase “Load, Load” used to describe the practice of facilitating one-piece flow in a manufacturing cell, where equipment automatically unloads parts so the operator can move parts between machines with minimal wasted motion.

With Chaku-Chaku, the operator is responsible for moving parts from machine to machine around an oval or circular-shaped cell and also for monitoring machine performance. When arriving at a machine in the cell, the operator will find a completed part already removed from the machine and the machine ready for a new part. The operator then starts a new part (from the previous machine), picks up the completed part from the machine, and then carries the part to the next machine in the cell to repeat the process.

See cellular manufacturing, multiple-machine handling.

champion – A senior manager who sponsors a program or project; also called an executive sponsor or sponsor.

The champion’s role is to define the strategic direction, ensure that resources are available, provide accountability, and deal with political resistance. This term is often used in the context of a process improvement program at both the program level (the program champion) and the project level (the project sponsor). Although the role can be either formal or informal, in many contexts, making it formal has significant benefits.

See deployment leader, lean sigma, lean thinking, program management office, project charter, sponsor.

change management – A structured approach for helping individuals, teams, and organizations transition from a current state to a desired future state.

Change management is an important discipline in a wide variety of project management contexts, including information systems, process improvement programs (e.g., lean sigma), new product development, quality management, and systems engineering. Organizational change requires (1) helping stakeholders overcome resistance to change, (2) developing new consensus, values, attitudes, norms, and behaviors to support the future state, and finally (3) reinforcing the new behaviors through new organizational structures, reward systems, performance management systems, and standard operating procedures. The benefits of good change management include better engagement of workers, reduced risk of project failure, reduced time and cost to effect the change, and longer lasting results.

The ADKAR Model for Change and the Lewin/Schein Theory of Change entries in this encyclopedia present specific change management methodologies.

See ADKAR Model for Change, co-opt, Lewin/Schein Theory of Change, project charter, project management, RACI Matrix, stakeholder analysis.

changeover – See setup.

changeover cost – See setup cost.

changeover time – See setup time.

channel – See distribution channel.

channel conflict – Competition between players trying to sell to the same customers.

For example, a personal computer company might try to compete with its own distributors (such as Sears) for customers by selling directly to customers. This is often an issue when a retail channel is in competition with a Web-based channel set up by the company. Channel conflict is not a new phenomenon with the Internet, but has become more obvious with the disruptions caused by the Internet.

See disintermediation, distribution channel, distributor, supply chain management.

channel integration – The practice of extending strategic alliances to the suppliers (and their suppliers) and to customers (and to their customers).

See distribution channel, supply chain management.

channel partner – A firm that works with another firm to provide products and services to customers.

Channel partners for a manufacturing firm generally include distributors, sales representatives, logistics firms, transportation firms, and retailers. Note that the term “partner” is imprecise because relationships with distributors and other “channel partners” are rarely legal partnerships.

See distribution channel, supply chain management.

chargeback – See incoming inspection.

chase strategy – A production planning approach that changes the workforce level to match seasonal demand to keep finished goods inventory relatively low.

With the chase strategy, the workforce level is changed to meet (or chase) demand. In contrast, the level strategy maintains a constant workforce level and meets demand with inventory (built in the off-season), overtime production, or both.

Many firms are able to economically implement a chase strategy for each product and an overall level employment strategy by offering counter-seasonal products. For example, a company that makes snow skis might also make water skis to maintain a constant workforce without building large inventories in the off-season. Other examples of counter-seasonal products include snow blowers and lawn mowers (Toro Company) and snowmobiles and all terrain vehicles (Polaris).

See heijunka, level strategy, Master Production Schedule (MPS), production planning, Sales & Operations Planning (S&OP), seasonality.

Chebyshev distance – See Minkowski distance.

Chebyshev’s inequality – A probability theory concept stating that no more than $1/k^2$ of a distribution can be more than k standard deviations away from the mean.

This theorem is named for the Russian mathematician Pafnuty Lvovich Chebyshev. The theorem can be stated mathematically as $P(|X - \mu| \ge k\sigma) \le 1/k^2$ and can be applied to all probability distributions with a finite mean μ and standard deviation σ. The one-sided Chebyshev inequality is $P(X - \mu \ge k\sigma) \le 1/(1 + k^2)$ for k > 0.
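Because the inequality holds for any distribution with a finite variance, it is easy to check empirically. The Python sketch below is an illustration only. It draws from an exponential distribution (an arbitrary non-normal choice; the seed and sample size are also arbitrary) and compares the observed tail fractions with the two-sided bound 1/k² and the one-sided bound 1/(1 + k²). For the exponential, the bounds are quite loose. That looseness is the price of making no assumption about the shape of the distribution.

```python
import random
import statistics


def two_sided_bound(k):
    """Chebyshev bound on P(|X - mu| >= k*sigma)."""
    return 1.0 / k ** 2


def one_sided_bound(k):
    """One-sided bound on P(X - mu >= k*sigma), for k > 0."""
    return 1.0 / (1.0 + k ** 2)


def tail_fractions(values, k):
    """Observed two-sided and upper-tail fractions at k standard deviations."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values, m)
    two = sum(abs(v - m) >= k * s for v in values) / len(values)
    upper = sum(v - m >= k * s for v in values) / len(values)
    return two, upper


if __name__ == "__main__":
    rng = random.Random(7)
    data = [rng.expovariate(1.0) for _ in range(50000)]  # skewed, non-normal
    for k in (1.5, 2.0, 3.0):
        two, upper = tail_fractions(data, k)
        print(f"k={k}: two-sided {two:.4f} <= {two_sided_bound(k):.4f}, "
              f"upper {upper:.4f} <= {one_sided_bound(k):.4f}")
```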

See confidence interval.

check digit – A single number (a digit) between 0 and 9 that is usually placed at the end of an identifying number (such as a part number, bank account, credit card number, or employee ID) and is used to perform a quick test to see if the identifying number is clearly invalid.

The check digit is usually the last digit in the identifying number and is computed from the base number, which is the identifying number without the check digit. By comparing the check digit computed from the base number with the check digit that is part of the identifying number, it is possible to quickly check if an identifying number is clearly invalid without accessing a database of valid identifying numbers. This is particularly powerful for remote data entry of credit card numbers and part numbers. These applications typically have large databases that make number validation relatively expensive. However, it is important to understand that identifying numbers with valid check digits are not necessarily valid identifying numbers; the check digit only determines if the identifying number is clearly invalid.

A simple approach for checking the validity of a number is to use the following method: Multiply the last digit (the check digit) by one, the second-to-last digit by two, the third-to-last digit by one, the fourth-to-last digit by two, etc. Then sum all digits in these products (including the check digit), divide by ten, and find the remainder. The number is proven invalid if the remainder is not zero.

For example, the account number 5249 has the check digit 9. The products are 1 × 9 = 9, 2 × 4 = 8, 1 × 2 = 2, and 2 × 5 = 10. The sum of the digits is 9 + 8 + 2 + 1 + 0 = 20, which is divisible by 10 and therefore is a valid check digit. Note that the procedure adds the digits, which means that 10 is treated as 1 + 0 = 1 rather than a 10. The above procedure works with most credit card and bank account numbers.
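The procedure above is the Luhn (mod 10) algorithm. A minimal Python sketch follows. The function names are hypothetical, and the input is assumed to be a string of digits with any spaces or dashes already removed.

```python
def digit_sum_total(digits: str) -> int:
    """Weight digits 1, 2, 1, 2, ... from the right and add the digits of each product."""
    total = 0
    for position, ch in enumerate(reversed(digits)):
        product = int(ch) * (2 if position % 2 == 1 else 1)
        total += product // 10 + product % 10  # e.g., 10 counts as 1 + 0 = 1
    return total


def is_clearly_invalid(number: str) -> bool:
    """True if the check digit proves the number invalid (remainder is not 0)."""
    if not (number.isascii() and number.isdigit()):
        raise ValueError("expected a string of digits")
    return digit_sum_total(number) % 10 != 0


def check_digit(base: str) -> int:
    """The digit that, appended to the base number, passes the test."""
    return next(d for d in range(10) if not is_clearly_invalid(base + str(d)))


if __name__ == "__main__":
    print(is_clearly_invalid("5249"))  # False: 9 + 8 + 2 + 1 + 0 = 20
    print(is_clearly_invalid("5248"))  # True: the sum is 19
    print(check_digit("524"))          # 9
```

As the entry notes, passing this test only means the number is not clearly invalid. It does not prove that the number exists in any database.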

The check digit for all books registered with an International Standard Book Number is the last digit of the ISBN. The 10-digit ISBN method weights the nine digits of the base number from 10 down to 2, sums the products, and chooses the check digit so that this sum plus the check digit is evenly divisible by 11. In other words, the check digit is (11 − (sum mod 11)) mod 11. An upper case X is used in lieu of 10.

For example, Operations Management for Competitive Advantage, Eleventh Edition by Chase, Jacobs, and Aquilano (2006) has ISBN 0-07-312151-7, which is 0073121517 without the dashes. The ending “7” is the check digit, so the base number is 007312151. Multiplying 10 × 0 = 0, 9 × 0 = 0, 8 × 7 = 56, 7 × 3 = 21, 6 × 1 = 6, 5 × 2 = 10, 4 × 1 = 4, 3 × 5 = 15, and 2 × 1 = 2 and adding the products gives 0 + 0 + 56 + 21 + 6 + 10 + 4 + 15 + 2 = 114. Dividing 114 by 11 leaves a remainder of 4, and 11 − 4 = 7, which is the correct check digit (equivalently, 114 + 7 = 121 = 11 × 11). The new 13-digit ISBN uses a slightly different algorithm.
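The ISBN-10 computation can be sketched in Python as follows. This is an illustration, not an official ISBN library. The function names are hypothetical, and the code handles only the 10-digit format, not the 13-digit one.

```python
def isbn10_check_digit(base: str) -> str:
    """Check digit for a 9-digit ISBN-10 base number ("X" stands for 10)."""
    if len(base) != 9 or not (base.isascii() and base.isdigit()):
        raise ValueError("expected a 9-digit base number")
    # Weights 10, 9, ..., 2 applied left to right.
    total = sum(w * int(d) for w, d in zip(range(10, 1, -1), base))
    check = (11 - total % 11) % 11  # makes total + check divisible by 11
    return "X" if check == 10 else str(check)


def is_valid_isbn10(isbn: str) -> bool:
    """True if a 10-character ISBN (dashes allowed) has a correct check digit."""
    chars = isbn.replace("-", "")
    if len(chars) != 10 or not (chars[:9].isascii() and chars[:9].isdigit()):
        return False
    return isbn10_check_digit(chars[:9]) == chars[9].upper()


if __name__ == "__main__":
    print(isbn10_check_digit("007312151"))   # 7 (114 mod 11 = 4; 11 - 4 = 7)
    print(is_valid_isbn10("0-07-312151-7"))  # True
```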

See algorithm, part number.

checklist – A record of tasks that need to be done for error proofing a process.

A checklist is a tool that can be used to ensure that all important steps in a process are done. For example, pilots often use maintenance checklists for items that need to be done before takeoff. The 5S discipline for a crash cart in a hospital uses a checklist that needs to be checked each morning to ensure that the required items are on the cart. Checklists are often confused with checksheets, which are tally sheets for collecting data.

See checksheet, error proofing, multiplication principle.

checksheet – A simple approach for collecting defect data; also called a tally sheet.

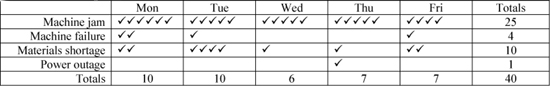

A checksheet is a simple form that can be used to collect and count defects or other data for a Pareto analysis. It is considered one of the seven tools of quality. The user makes a check mark (✓) every time a defect of a particular type occurs in that time period. The table below provides a simple example of a checksheet that records the causes for machine downtime.

A different format for a checksheet shows a drawing (schematic) of a product (such as a shirt) and counts the problems with a checkmark on the drawing in the appropriate location. For example, if a defect is found on the collar, a checkmark is put on a drawing of a shirt by the shirt collar.

Checksheets should be used in the gemba (the place where work is done), so workers and supervisors can see them and update them on a regular basis. Checksheets are often custom-designed by users for their particular needs. Checksheets are often confused with checklists, which are used for error proofing.
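When checksheet data are later entered into a computer, the tally and the Pareto ordering take only a few lines of Python. The sketch below is illustrative only; the downtime causes and observations are hypothetical.

```python
from collections import Counter


def pareto_table(observations):
    """Tally categories, sort most to least frequent, add cumulative percent."""
    counts = Counter(observations)
    total = sum(counts.values())
    rows, cumulative = [], 0
    for cause, n in counts.most_common():
        cumulative += n
        rows.append((cause, n, 100.0 * cumulative / total))
    return rows


if __name__ == "__main__":
    # Hypothetical machine downtime causes, one entry per check mark.
    log = ["jam", "setup", "jam", "power", "jam", "setup", "jam"]
    for cause, n, cum_pct in pareto_table(log):
        print(f"{cause:<6} {'|' * n:<5} {n:>2}  {cum_pct:5.1f}%")
```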

See checklist, downtime, gemba, Pareto Chart, seven tools of quality.

child item – See bill of material (BOM).

chi-square distribution – A continuous probability distribution often used for goodness of fit testing; also known as the chi-squared and χ2 distribution; named after the Greek letter “chi” (χ).

The chi-square distribution is the distribution of the sum of squares of k independent standard normal random variables. If X1, X2, ..., Xk are k independent standard normal random variables, the sum of their squares, X1² + X2² + ... + Xk², has the chi-square distribution with k degrees of freedom. The best-known use of the chi-square distribution is for goodness of fit tests.

Parameters: Degrees of freedom, k.

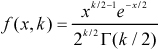

Density function and distribution functions: $f(x) = \dfrac{x^{k/2-1} e^{-x/2}}{2^{k/2}\,\Gamma(k/2)}$ for $x \ge 0$, where k is a positive integer and Γ(k/2) is the gamma function, which has closed-form values for half-integers (i.e., $\Gamma(k/2) = \sqrt{\pi}\,(k-2)!!/2^{(k-1)/2}$ for odd k, where !! is the double factorial function). $F(x) = \gamma(k/2, x/2)/\Gamma(k/2)$, where Γ(k/2) is the gamma function and γ(k/2, x/2) is the lower incomplete gamma function $\gamma(s, x) = \int_0^x t^{s-1} e^{-t}\,dt$, which generally does not have a closed form.

Statistics: Mean k, median ≈ $k(1 - 2/(9k))^3$, mode max(k − 2, 0), variance 2k.

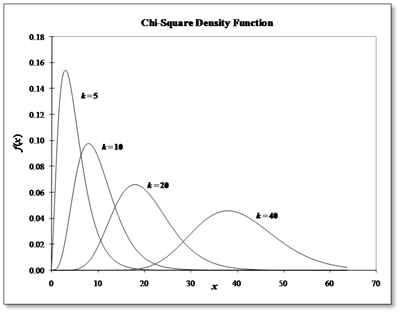

Graph: The graph below shows the chi-square density function for a range of k values.

Excel: Excel 2003/2007 uses CHIDIST(x, k) to return the one-tailed (right tail) probability of the chi-square distribution with k degrees of freedom, CHIINV(p, k) returns the inverse of the one-tailed (right tail) probability of the chi-square distribution, and CHITEST(actual_range, expected_range) can be used for the chi-square test. The chi-square density is not available in Excel 2003/2007, but the equivalent gamma density GAMMADIST(x, k/2, 2, FALSE) can be used. Excel 2010 has the CHISQ.DIST(x, degrees_of_freedom, cumulative) and several other related functions.

Relationships to other distributions: The chi-square is a special case of the gamma distribution where χ²(k) = Γ(k/2, 2). The chi-square is the sum of k independent random variables (the squared standard normals); therefore, by the central limit theorem, it converges to the normal as k approaches infinity. The chi-square will be close to normal for k > 50, and the standardized variable $(\chi^2 - k)/\sqrt{2k}$ will approach the standard normal.
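The definition and the summary statistics above can be checked with a short Python simulation. This sketch makes several assumptions. It uses only the standard library, and it evaluates the density formula given under "Density function" for x > 0 only. The sample size and seed are arbitrary.

```python
import math
import random
import statistics


def chi_square_pdf(x, k):
    """Chi-square density with k degrees of freedom, for x > 0."""
    if x <= 0:
        raise ValueError("this sketch evaluates the density only for x > 0")
    return x ** (k / 2 - 1) * math.exp(-x / 2) / (2 ** (k / 2) * math.gamma(k / 2))


def simulate_chi_square(k, trials, seed=1):
    """Sums of k squared independent standard normal draws."""
    rng = random.Random(seed)
    return [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k)) for _ in range(trials)]


if __name__ == "__main__":
    k = 4
    draws = simulate_chi_square(k, trials=20000)
    print("mean", statistics.fmean(draws), "vs", k)
    print("variance", statistics.pvariance(draws), "vs", 2 * k)
    print("density at x = 2 with k = 2:", chi_square_pdf(2.0, 2))  # e^-1 / 2
```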

See chi-square goodness of fit test, gamma distribution, gamma function, probability density function, probability distribution.

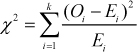

chi-square goodness of fit test – A statistical test used to determine if a set of data fits a hypothesized discrete probability distribution.

The chi-square test statistic is $\chi^2 = \sum_{i=1}^{k} (O_i - E_i)^2 / E_i$, where $O_i$ is the observed frequency in the i-th bin, $E_i$ is the expected frequency, and k is the number of bins. The expected frequency is $E_i = n\,(F(x_i^t) - F(x_i^b))$, where $n = \sum_{i=1}^{k} O_i$ is the total number of observations, F(x) is the distribution function for the hypothesized distribution, and $(x_i^b, x_i^t)$ are the limits for bin i. It is important that every bin has an expected frequency of at least five observations.

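The hypothesis that the data follow the hypothesized distribution is rejected if the calculated χ² test statistic is greater than $\chi^2_{1-\alpha,\,k-1}$, the value of the chi-square distribution with k − 1 degrees of freedom that has a right-tail probability equal to the significance level α. If m parameters of the hypothesized distribution are estimated from the data, the degrees of freedom are usually reduced to k − 1 − m. Because CHIINV takes a right-tail probability, the formula for this critical value in Excel is CHIINV(α, k − 1); in Excel 2010, CHISQ.INV(1 − α, k − 1) returns the same value. The Excel function CHITEST(actual_range, expected_range) can also be used; it returns the p-value, and the hypothesis is rejected when the p-value is less than α.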
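The decision rule can also be sketched without Excel. The Python code below uses only the standard library: it finds the critical value (the point with right-tail probability α, the quantity CHIINV(α, df) returns) by bisection on a right-tail probability computed from a simple series for the regularized incomplete gamma function, then compares it with a test statistic. All function names are hypothetical, and the statistic of 4.2 for six bins is an illustrative value rather than data from the text.

```python
import math


def lower_regularized_gamma(a: float, x: float, tol: float = 1e-15, max_terms: int = 10_000) -> float:
    """P(a, x) via the series sum of x^n / (a (a+1) ... (a+n))."""
    if x <= 0:
        return 0.0
    term = 1.0 / a
    total = term
    for n in range(1, max_terms):
        term *= x / (a + n)
        total += term
        if term < tol * total:
            break
    return total * math.exp(a * math.log(x) - x - math.lgamma(a))


def chi2_right_tail(x: float, df: int) -> float:
    """P(X > x) for a chi-square variable with df degrees of freedom."""
    return 1.0 - lower_regularized_gamma(df / 2, x / 2)


def chi2_critical_value(alpha: float, df: int, tol: float = 1e-10) -> float:
    """Value c with P(X > c) = alpha, found by bisection (right tail decreases in c)."""
    lo, hi = 0.0, float(df) + 1.0
    while chi2_right_tail(hi, df) > alpha:  # widen the bracket until it contains c
        hi *= 2
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if chi2_right_tail(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


alpha, bins = 0.05, 6
statistic = 4.2  # illustrative value for six bins, no estimated parameters
critical = chi2_critical_value(alpha, bins - 1)
p_value = chi2_right_tail(statistic, bins - 1)
print(f"critical value = {critical:.3f}, p-value = {p_value:.3f}")
print("reject" if statistic > critical else "fail to reject")
```

Comparing the statistic with the critical value and comparing the p-value with α always lead to the same decision; the second form is what CHITEST supports.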

Failure to reject the null hypothesis of no difference should not be interpreted as “accepting the null hypothesis.” For smaller sample sizes, goodness-of-fit tests are not very powerful and will only detect major differences. On the other hand, for a larger sample size, these tests will almost always reject the null hypothesis because it is almost never exactly true. As Law and Kelton (2002) stated, “This is an unfortunate property of these tests, since it is usually sufficient to have a distribution that is ‘nearly’ correct.”

The Kolmogorov-Smirnov (KS) test is generally believed to be a better test for continuous distributions.

See Box-Jenkins forecasting, chi-square distribution, Kolmogorov-Smirnov test (KS test).

CIM – See Computer Integrated Manufacturing.

clean room – A work area where air quality, flow, flow direction, temperature, and humidity are carefully regulated to protect sensitive equipment and materials.

Clean rooms are frequently found in electronics, pharmaceutical, biopharmaceutical, medical device, and other manufacturing environments. Clean rooms are important features in the production of integrated circuits, hard drives, medical devices, and other high-tech and sterile products. The air in a clean room is repeatedly filtered to remove dust particles and other impurities.

The air in a typical office building contains from 500,000 to 1,000,000 particles (0.5 micron or larger) per cubic foot of air. A human hair is about 75-100 microns in diameter, but a particle 200 times smaller than a human hair (0.5 micron) can cause a major disaster in a clean room. Contamination can lead to expensive downtime and increased production cost. The billion-dollar NASA Hubble Space Telescope was damaged because of a particle smaller than 0.5 micron.

People are a major source of contamination. A motionless person produces about 100,000 particles of 0.3 micron and larger per minute. A person walking produces about 10 million particles per minute.

The measure of the air quality in a clean room is defined in Federal Standard 209E (since superseded by ISO 14644-1). A Class 10,000 clean room can have no more than 10,000 particles of 0.5 micron or larger in any given cubic foot of air. A Class 1000 clean room can have no more than 1000 such particles, and a Class 100 clean room can have no more than 100. Hard disk drive manufacturing, for example, requires a Class 100 clean room.

People who work in clean rooms must wear special protective clothing called bunny suits, which do not shed lint and which keep particles from human skin and hair out of the room’s atmosphere.

click-and-mortar – A hybrid between a dot-com and a “brick-and-mortar” operation.

See dot-com.

clockspeed – The rate of new product introduction in an industry or firm.

High clockspeed industries, such as consumer electronics, often have product lifecycles of less than a year. In contrast, low clockspeed industries, such as industrial chemicals, may have product life cycles measured in decades. High clockspeed industries can be used to understand the dynamics of change that will, in the long run, affect all industries. The term was popularized in the book Clockspeed by Professor Charles Fine from the Sloan School at MIT (Fine 1995).

See New Product Development (NPD), time to market.

closed-loop MRP – An imprecise concept of a capacity feedback loop in a Materials Requirements Planning (MRP) system; sometimes called closed-loop planning.

Some consultants and MRP/ERP software vendors used to claim that their systems were “closed-loop” planning systems. Although they were never very precise in what this meant, they implied that their systems provided rapid feedback to managers on capacity/load imbalance problems. They also implied that their closed-loop systems could somehow automatically fix the problems when the load exceeded the capacity. The reality is that almost no MRP systems automatically fix capacity/load imbalance problems. Advanced Planning and Scheduling (APS) Systems are designed to create schedules that do not violate capacity, but unfortunately, they are hard to implement and maintain.

See Advanced Planning and Scheduling (APS), Business Requirements Planning (BRP), capacity, Capacity Requirements Planning (CRP), finite scheduling, Materials Requirements Planning (MRP), Sales & Operations Planning (S&OP).

cloud computing – Internet-based computing that provides shared resources, such as servers, software, and data to users.

Cloud computing offers many advantages compared to a traditional approach where users have their own hardware and software. These advantages include (1) reduced cost, due to less investment in hardware and software and shared expense for maintaining hardware, software, and databases, (2) greater scalability, (3) ease of implementation, and (4) ease of maintenance.

Potential drawbacks of cloud computing include (1) greater security risk and (2) less ability to customize the application for specific business needs. Cloud computing includes three components: Cloud Infrastructure, Cloud Platforms, and Cloud Applications. Cloud computing is usually a subscription or pay-per-use service. Examples include Gmail for Business and salesforce.com.

See Application Service Provider (ASP), Software as a Service (SaaS).

cluster analysis – A method for creating groups of similar items.

Cluster analysis is an exploratory data analysis tool that sorts items (objects, cases) into groups (sets, clusters) so the similarity between the objects in a group is high and the similarity between groups is low. Each item is described by a set of measures (also called attributes, variables, or dimensions). The dissimilarity between two items is a function of these measures. Cluster analysis, therefore, can be used to discover structures in data without explaining why they exist.

For example, biologists have organized different species of living beings into clusters. In this taxonomy, man belongs to the primates, the mammals, the amniotes, the vertebrates, and the animals. The higher the level of aggregation, the less similar are the members in the respective class. For example, man has more in common with all other primates than with more “distant” members of the mammal class (e.g., dogs).

Unlike most exploratory data analysis tools, cluster analysis is not a statistical technique, but rather a collection of algorithms that put objects into clusters according to well-defined rules. Therefore, statistical testing is not possible with cluster analysis. The final number of clusters can be a user-input to the algorithm or can be based on a stopping rule. The final result is a set of clusters (groups) of relatively homogeneous items.

Cluster analysis has been applied to a wide variety of research problems and is a powerful tool whenever a large amount of information is available and the researcher has little prior knowledge of how to make sense out of it. Examples of cluster analysis include:

• Marketing research: Cluster consumers into market segments to better understand the relationships between different groups of consumers/potential customers. It is also widely used to group similar products to define product position.

• Location analysis: Cluster customers based on their locations.

• Quality management: Cluster problem causes based on their attributes.

• Cellular manufacturing: Cluster parts based on their routings.

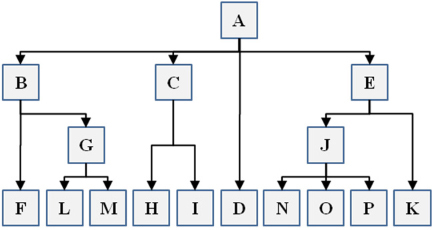

A dendrogram is a graphical representation of the step-by-step clustering process. In the dendrogram on the right, the first step divides the entire group of items (set A) into four sets (B, C, D, E). The second step divides B into sets F and G, divides C into sets H and I, and divides E into sets J and K. In the last step, set G is divided into sets L and M, and set J is divided into sets N, O, and P. Therefore, the final clusters are on the bottom row (sets F, L, M, H, I, D, N, O, P, and K). Note that each of these final sets may include only one item or many items.

Dendrogram example

Source: Professor Arthur V. Hill
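One way to make the dendrogram example concrete is to store it as a mapping from each set to the sets it is divided into. The Python sketch below records the splits described above (the dictionary representation and function name are illustrative choices, not part of the original example) and collects the final clusters by walking the tree depth first, left to right.

```python
from typing import Dict, List

# Each set maps to the sets it is divided into; sets that are never split are final clusters.
splits: Dict[str, List[str]] = {
    "A": ["B", "C", "D", "E"],  # first step
    "B": ["F", "G"],            # second step
    "C": ["H", "I"],
    "E": ["J", "K"],
    "G": ["L", "M"],            # last step
    "J": ["N", "O", "P"],
}


def final_clusters(node: str, tree: Dict[str, List[str]]) -> List[str]:
    """Return the leaves (final clusters) under node, in left-to-right order."""
    children = tree.get(node)
    if not children:
        return [node]
    leaves: List[str] = []
    for child in children:
        leaves.extend(final_clusters(child, tree))
    return leaves


print(final_clusters("A", splits))
```

The output lists the same ten final clusters as the bottom row of the dendrogram: F, L, M, H, I, D, N, O, P, and K.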

The distance between any two objects is a measure of the dissimilarity between them. Distance measures can be computed from the variables (attributes, dimensions) that describe each item. The simplest way to measure the distance between two items is with the Pythagorean distance. When we have just two variables $(x_i, y_i)$ to describe each item i, the Pythagorean distance between points i and j is $d_{ij} = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2}$. With three variables $(x_i, y_i, z_i)$ to describe each item, the Pythagorean distance is $d_{ij} = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2 + (z_i - z_j)^2}$. With K variables, $x_{ik}$ is defined as the measurement on the k-th variable for item i, and the Pythagorean distance between items i and j is defined as $d_{ij} = \sqrt{\sum_{k=1}^{K} (x_{ik} - x_{jk})^2}$.
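The K-variable Pythagorean distance translates directly into code. The Python sketch below is a minimal illustration (the function name is hypothetical); the two calls correspond to the two-variable and three-variable cases.

```python
import math
from typing import Sequence


def pythagorean_distance(item_i: Sequence[float], item_j: Sequence[float]) -> float:
    """d_ij = sqrt(sum over k of (x_ik - x_jk)^2) for items described by the same K variables."""
    if len(item_i) != len(item_j):
        raise ValueError("both items must be described by the same number of variables")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(item_i, item_j)))


print(pythagorean_distance((0, 0), (3, 4)))          # two variables: 5.0
print(pythagorean_distance((1, 2, 3), (4, 6, 15)))   # three variables: 13.0
```

Python 3.8 and later also provide `math.dist(p, q)`, which computes the same quantity.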

The Minkowski metric is a more general distance metric. If item i has K attributes $(x_{i1}, x_{i2}, \ldots, x_{iK})$, the distance between item i and item j is given by $d_{ij} = \left( \sum_{k=1}^{K} |x_{ik} - x_{jk}|^r \right)^{1/r}$. The Minkowski metric is equal to the Euclidean (Pythagorean) distance when r = 2 and the Manhattan square distance when r = 1.
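A short Python sketch of the Minkowski metric follows; the function name and sample points are illustrative. Setting r = 1 gives the Manhattan distance (the sum of absolute differences), and r = 2 gives the Pythagorean distance.

```python
from typing import Sequence


def minkowski_distance(item_i: Sequence[float], item_j: Sequence[float], r: float) -> float:
    """(sum over k of |x_ik - x_jk|^r)^(1/r); r = 1 is Manhattan, r = 2 is Euclidean."""
    if r < 1:
        raise ValueError("r must be at least 1 for the Minkowski formula to be a metric")
    return sum(abs(a - b) ** r for a, b in zip(item_i, item_j)) ** (1 / r)


p, q = (1.0, 2.0, 3.0), (4.0, 6.0, 15.0)
for r in (1, 2, 3):
    print(r, round(minkowski_distance(p, q, r), 3))
```

As r grows, the largest single difference dominates the sum, so the distance shrinks toward the largest absolute difference (12 for these two points).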

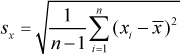

When two or more variables are used to define distance, the one with the larger magnitude tends to dominate. Therefore, it is common to first standardize all variables, i.e., $z_{ik} = (x_{ik} - \bar{x}_k)/s_k$, where $\bar{x}_k$ is the sample mean for the k-th variable and $s_k$ is the sample standard deviation. However, even with standardization, not all variables should have the same weight in the summation. Unfortunately, it is usually not clear how much weight should be given to each variable.
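The standardization step can be sketched in Python as follows. The items (income in dollars and age in years) are hypothetical and chosen to show how a large-magnitude variable would otherwise dominate the distance; the function name is illustrative. Each column is centered by its sample mean and divided by its sample standard deviation.

```python
import statistics
from typing import List


def standardize_columns(data: List[List[float]]) -> List[List[float]]:
    """Replace each x_ik with (x_ik - mean_k) / s_k, one column (variable) at a time.
    A column with zero standard deviation would cause a division by zero."""
    columns = list(zip(*data))
    means = [statistics.mean(col) for col in columns]
    sds = [statistics.stdev(col) for col in columns]  # sample standard deviation
    return [[(x - m) / s for x, m, s in zip(row, means, sds)] for row in data]


# Hypothetical items described by income (dollars) and age (years).
items = [[52_000, 25], [61_000, 47], [58_000, 33], [49_000, 52]]
for row in standardize_columns(items):
    print([round(z, 2) for z in row])
```

After standardization, both columns have mean 0 and standard deviation 1, so a difference of one unit means the same thing for income as for age.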

Many clustering algorithms are available. They differ in the linkage rule (objective function) used to measure the distance between two sets of items. Common rules include complete linkage (or farthest neighbor), which uses the largest distance between an item in one set and an item in the other; single linkage (or nearest neighbor), which uses the smallest such distance; group average, which uses the average of these distances; and Ward’s method. Ward’s method is one of the more commonly used methods. At each step, it merges the two sets $(S_i, S_j)$ whose union gives the smallest increase in the total within-cluster sum of squared distances from each item to its cluster mean, i.e., $\Delta(S_i, S_j) = \frac{n_i n_j}{n_i + n_j} \lVert \bar{\mathbf{x}}_i - \bar{\mathbf{x}}_j \rVert^2$, where $n_i$ is the number of items in $S_i$ and $\bar{\mathbf{x}}_i$ is the mean vector of its items. Divisive methods start with all items in one cluster and then split (partition) the cases into smaller and smaller clusters. Agglomerative methods begin with each item treated as a separate cluster and then combine them into larger and larger clusters until all observations belong to one final cluster.
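The agglomerative approach and the linkage rules can be illustrated with a small Python sketch. It is a naive implementation meant for a handful of items, not the algorithm used by any particular software package. The "ward" option assumes the usual form of Ward’s criterion, the increase in within-cluster sum of squares $\frac{n_i n_j}{n_i + n_j}\lVert \bar{\mathbf{x}}_i - \bar{\mathbf{x}}_j \rVert^2$. The function names and points are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def centroid(points: Sequence[Point]) -> Point:
    """Mean vector of a set of points."""
    return tuple(sum(coord) / len(points) for coord in zip(*points))


def set_distance(a: Sequence[Point], b: Sequence[Point], method: str) -> float:
    """Distance between two sets of points under the chosen linkage rule."""
    pair_distances = [math.dist(p, q) for p in a for q in b]
    if method == "single":    # nearest neighbor
        return min(pair_distances)
    if method == "complete":  # farthest neighbor
        return max(pair_distances)
    if method == "average":   # group average
        return sum(pair_distances) / len(pair_distances)
    if method == "ward":      # increase in within-cluster sum of squares if a and b merge
        na, nb = len(a), len(b)
        return na * nb / (na + nb) * math.dist(centroid(a), centroid(b)) ** 2
    raise ValueError(f"unknown linkage method: {method}")


def agglomerative(data: Sequence[Point], n_clusters: int, method: str = "single") -> List[List[int]]:
    """Start with one cluster per item and repeatedly merge the closest pair of clusters."""
    clusters: List[List[int]] = [[i] for i in range(len(data))]
    while len(clusters) > n_clusters:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: set_distance(
                [data[m] for m in clusters[ij[0]]], [data[m] for m in clusters[ij[1]]], method
            ),
        )
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)] + [merged]
    return sorted(sorted(c) for c in clusters)  # item indices, in a canonical order


points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
for method in ("single", "complete", "average", "ward"):
    print(method, agglomerative(points, n_clusters=3, method=method))
```

On this small, well-separated example, all four rules produce the same three clusters; on real data they can give quite different results.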

A scree plot is a graph used in cluster analysis (and also factor analysis) that plots the objective function value against the number of clusters to help determine the best number of clusters. The scree test involves finding the place on the graph where the objective function value appears to level off as the number of clusters (factors) increases. To the right of this point is only “scree.” Scree is a geological term referring to the loose rock and debris that collects on the lower part of a rocky slope.
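The values behind a scree plot can be generated from any clustering procedure that yields a solution for each number of clusters. The Python sketch below assumes Ward-style agglomerative merging on one-dimensional data (a simplification for brevity) and records the total within-cluster sum of squares, used here as the objective function, after each merge. The data values and function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List


def sse(cluster: List[float]) -> float:
    """Sum of squared deviations from the cluster mean."""
    m = sum(cluster) / len(cluster)
    return sum((x - m) ** 2 for x in cluster)


def scree_values(data: List[float]) -> Dict[int, float]:
    """Total within-cluster sum of squares for each number of clusters along a Ward hierarchy."""
    clusters = [[x] for x in data]
    values = {len(clusters): 0.0}
    while len(clusters) > 1:
        # Ward-style rule: merge the pair whose union increases the total sum of squares least.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: sse(clusters[ij[0]] + clusters[ij[1]])
            - sse(clusters[ij[0]])
            - sse(clusters[ij[1]]),
        )
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)] + [merged]
        values[len(clusters)] = sum(sse(c) for c in clusters)
    return values


data = [0.8, 1.0, 1.2, 4.9, 5.0, 5.3, 8.8, 9.0, 9.2]  # hypothetical: three tight groups
for k, value in sorted(scree_values(data).items()):
    print(k, round(value, 3))
```

Plotting these values against the number of clusters shows a steep drop up to three clusters and an almost flat tail afterward; that flat tail is the “scree.”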

SPSS offers three general approaches to cluster analysis:

• Hierarchical clustering – Users select the distance measure, select the linking method for forming clusters, and then determine how many clusters best suit the data.

• K-means clustering – Users specify the number of clusters in advance and the algorithm assigns items to the K clusters. K-means clustering is much less computer-intensive than hierarchical clustering and is therefore preferred when datasets are large (e.g., N > 1000).

• Two-step clustering – The algorithm creates pre-clusters, and then clusters the pre-clusters.
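The K-means idea can be sketched with Lloyd’s algorithm, which alternates between assigning each item to the nearest cluster center and moving each center to the mean of its items. The Python code below is a minimal illustration, not SPSS’s implementation. The default of using the first K items as starting centers is an assumption made for simplicity; practical implementations use better starting rules (such as k-means++) and several restarts. The function name and points are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, ...]


def k_means(points: Sequence[Point], k: int, init: Optional[Sequence[Point]] = None,
            max_iter: int = 100) -> List[int]:
    """Lloyd's algorithm: return a cluster label (0..k-1) for each point."""
    centers = [tuple(c) for c in (init if init is not None else points[:k])]
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest center.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the centers will not move either
        labels = new_labels
        # Update step: move each center to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old center if a cluster becomes empty
                centers[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(k_means(points, k=2))
```

Each iteration only compares items with K centers rather than with every other item, which is one reason K-means scales better than hierarchical clustering on large datasets.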

Exploratory data analysis often starts with a data matrix, where each row is an item (case, object) and each column is a variable that describes that item. Cluster analysis is a means of grouping the rows (items) that are similar. In contrast, factor analysis and principal component analysis are statistical techniques for grouping similar (highly correlated) variables to reduce the number of variables. In other words, cluster analysis groups items, whereas factor analysis and principal component analysis group variables.

See affinity diagram, algorithm, data mining, data warehouse, factor analysis, logistic regression, Manhattan square distance, Minkowski distance metric, Principal Component Analysis (PCA), Pythagorean Theorem.

CMM – See Capability Maturity Model.

CNC – See Computer Numerical Control.

co-competition – See co-opetition.

COD – See Cash on Delivery (COD).

coefficient of determination – See correlation.

coefficient of variation – A measure of the variability relative to the mean, measured as the standard deviation divided by the mean.

The coefficient of variation is used as a measure of the variability relative to the mean. For a sample of data with sample standard deviation s and sample mean $\bar{x}$, the coefficient of variation is $c_v = s/\bar{x}$. The coefficient of variation has no unit of measure (i.e., it is a “unitless” quantity).
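A minimal Python sketch of the calculation follows; the function name and measurements are hypothetical. Because the standard deviation and the mean are expressed in the same units, rescaling the data (here from kilograms to grams) leaves the ratio unchanged.

```python
import statistics
from typing import Sequence


def coefficient_of_variation(sample: Sequence[float]) -> float:
    """Sample standard deviation divided by the sample mean."""
    mean = statistics.mean(sample)
    if mean == 0:
        raise ValueError("the coefficient of variation is undefined when the mean is zero")
    return statistics.stdev(sample) / mean


weights_kg = [2, 4, 4, 4, 5, 5, 7, 9]        # hypothetical measurements
weights_g = [1000 * w for w in weights_kg]   # the same data in different units
print(round(coefficient_of_variation(weights_kg), 4))
print(round(coefficient_of_variation(weights_g), 4))  # same value: the ratio is unitless
```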