T

Taguchi methods – An approach to design of experiments developed by Genichi Taguchi that uses a quadratic loss function; also called robust design.

Dr. Genichi Taguchi developed a practical approach for designing quality into products and processes. His methodology recognized that quality should not be defined as simply within or not within specifications, so he created a simple quadratic loss function to measure quality. The two figures below contrast the typical 0-1 loss function used in quality with the Taguchi quadratic loss function.
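The contrast between the two loss functions can also be sketched in a few lines of Python. This is only an illustration: the function names, the target of 10.0, the tolerance of 0.5, and the cost of 20 at the specification limit are assumptions made for the example, and the constant k is scaled so that both losses agree at the specification limit, which is one common convention rather than something stated in this entry.

```python
def goalpost_loss(y, target, tolerance, cost_at_limit):
    """0-1 style loss: no loss inside the specification limits, full loss outside."""
    return 0.0 if abs(y - target) <= tolerance else cost_at_limit


def quadratic_loss(y, target, tolerance, cost_at_limit):
    """Quadratic loss k * (y - target)**2.

    Assumption: k is chosen so the loss equals cost_at_limit
    exactly at the specification limit (target +/- tolerance).
    """
    k = cost_at_limit / tolerance ** 2
    return k * (y - target) ** 2


if __name__ == "__main__":
    # Hypothetical measurements around a target of 10.0 with limits of +/- 0.5.
    for y in (10.0, 10.25, 10.5, 10.75):
        print(y, goalpost_loss(y, 10.0, 0.5, 20.0), quadratic_loss(y, 10.0, 0.5, 20.0))
```

Under the 0-1 view, a part measuring 10.25 is as good as a part on target. Under the quadratic view, it already carries a loss, and that loss grows with the square of the deviation.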

Technicians apply Taguchi methods on the manufacturing floor to improve products and processes. The goal is to reduce the sensitivity of engineering designs to uncontrollable factors or noise by maximizing the signal to noise ratio. This moves design targets toward the middle of the design space so external variation affects the behavior of the design as little as possible. This approach permits large reductions in both part and assembly tolerances, which are major drivers of manufacturing cost.

See Analysis of Variance (ANOVA), Design of Experiments (DOE), functional build, lean sigma, robust, tolerance.

takt time – The customer demand rate expressed as a time and used to pace production.

According to a German-English dictionary (http://dict.leo.org), “takt” is the German word for “beat” or “musical time.” Several sources define takt as the baton that an orchestra conductor uses to regulate the beat for the orchestra. However, this is not correct. The German word for baton is “taktstock.”

The Japanese picked up the German word and use it to mean the beat time or heart beat of a factory. Lean production uses takt time to set the production rate to match the market demand rate. Takt time, therefore, is the desired time between completions of a product, synchronized to the customer demand rate and can be calculated as (available production time)/(forecasted demand rate).

Takt time, therefore, is set by the customer demand rate, and should be adjusted when the forecasted market demand rate changes. If takt time and the customer demand rate do not match, the firm (and the supply chain) will have either too little or too much inventory.

For example, a factory has a forecasted market demand of 100 units per day, and the factory operates for 10 hours per day. The target production rate should be the same as the market demand rate (100 units per day or 10 units per hour). The takt time for this factory should be (10 hours/day)/(100 units/day) = 0.1 hours per unit, or 6 minutes per unit. The factory should complete 1 unit about every 6 minutes, on average.

Many lean manufacturing consultants do not seem to understand the difference between a rate and a time and use the term “takt time” to mean the target production rate. However, a rate is measured in units per hour and a time is measured in hours (or minutes) per unit. For example, a production rate of 10 units per hour translates into a takt time of 6 minutes per unit.
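The factory example above, and the distinction between a rate and a time, can be sketched as follows. The function names are hypothetical and the units (hours per day, units per day, minutes per unit) are chosen to match the example.

```python
def takt_time_minutes(available_hours_per_day, demand_units_per_day):
    """Takt time = available production time / demand, in minutes per unit."""
    if demand_units_per_day <= 0:
        raise ValueError("demand must be positive")
    return available_hours_per_day * 60.0 / demand_units_per_day


def production_rate_per_hour(takt_minutes):
    """Convert a takt time (minutes per unit) into a rate (units per hour)."""
    if takt_minutes <= 0:
        raise ValueError("takt time must be positive")
    return 60.0 / takt_minutes


if __name__ == "__main__":
    takt = takt_time_minutes(10, 100)      # 10 hours/day, 100 units/day
    print(takt)                            # minutes per unit
    print(production_rate_per_hour(takt))  # units per hour
```

The two functions are reciprocals of each other (apart from the unit conversion), which is the point of the rate versus time distinction.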

Takt time is nearly identical to the traditional industrial engineering definition of cycle time, which is the target time between completions. The only difference between takt time and this type of cycle time is that takt time is defined by the market demand rate, whereas cycle time is not necessarily defined by the market demand.

Some people confuse takt time and throughput time. It is possible to have a throughput time of 6 weeks and have a takt time of 6 seconds. Takt time is the time between completions and can be thought of as time between units “falling off the end of the line.” The cycle time entry compares cycle time and throughput time.

See cycle time, heijunka, leadtime, lean thinking, pacemaker, pitch.

tally sheet – See checksheet.

tampering – The practice of adjusting a stable process and therefore increasing process variation.

Tampering is over-reacting to common cause variation and therefore always increasing variation.

See common cause variation, control chart, quality management, special cause variation, Statistical Process Control (SPC), Statistical Quality Control (SQC).

tardiness – See service level.

tare weight – (1) In a shipping/logistics context: The weight of an empty vehicle before the products are loaded; also called unladen weight. (2) In a packaging context: The weight of an empty shipping container or package.

The weight of the goods carried (the net weight) can be determined by subtracting the tare weight from the total weight, which is called the gross weight or laden weight. The tare weight can be useful for estimating the cost of goods carried for taxation and tariff purposes. This is a common practice for tolls related to barge, rail, and road traffic, where the toll varies with the value of the goods. Tare weight is often printed on the sides of railway cars and transport vehicles.

See gross weight, logistics, net weight, scale count, tariff.

target cost – The desired final cost for a new product development effort.

Many firms design a product, estimate the actual cost, and then add the margin to set the price. In contrast to this practice, with a target costing strategy, the firm determines the price based on the market strategy and then determines the target cost by subtracting the desired margin. The resulting “target cost” becomes the requirement for the product design team. The four major steps of target costing are:

1. Determine the price – The amount customers are willing to pay for a product or service with specified features and functions.

2. Set the target cost per unit and in total – The target cost per unit is the market price less the required margin. The total target cost is the per unit target cost multiplied by the expected number of units sold over its life.

3. Compare the total target cost to the currently feasible total cost to create the cost reduction target – The currently feasible total cost is the cost to make the product, given current design and process capabilities. The difference between the total target cost and currently feasible cost is the cost reduction target.

4. Design (or redesign) products and processes to achieve the cost reduction target – This can be an iterative process until both the product or service and its cost meet marketing and financial objectives.
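The arithmetic behind steps 1 to 3 can be sketched as follows. The function name and all of the numbers are hypothetical, and the margin is treated as a currency amount per unit, which is an assumption (a percentage margin would need a different calculation).

```python
def target_costing(price, margin_per_unit, lifetime_units, feasible_cost_per_unit):
    """Return the target cost figures for a new product.

    price                  -- price the market is expected to accept (step 1)
    margin_per_unit        -- required margin per unit, as a currency amount
    lifetime_units         -- expected units sold over the product's life
    feasible_cost_per_unit -- cost per unit with current design and processes
    """
    target_cost_per_unit = price - margin_per_unit                   # step 2
    total_target_cost = target_cost_per_unit * lifetime_units        # step 2
    feasible_total_cost = feasible_cost_per_unit * lifetime_units    # step 3
    cost_reduction_target = feasible_total_cost - total_target_cost  # step 3
    return {
        "target_cost_per_unit": target_cost_per_unit,
        "total_target_cost": total_target_cost,
        "cost_reduction_target": cost_reduction_target,
    }


if __name__ == "__main__":
    print(target_costing(price=100, margin_per_unit=30,
                         lifetime_units=10_000, feasible_cost_per_unit=80))
```

A positive cost reduction target is the amount the design team must remove in step 4. A zero or negative value means the current design already meets the target.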

See job order costing, target price.

target market – The group of customers that a business intends to serve.

See market share, strategy map.

target inventory – See order-up-to level.

target price – The practice of setting a sales price based on what the market will bear rather than on a standard cost.

The target price is the price at which the firm believes a buyer will buy a product based on market research. The easiest approach for determining a target price is to study similar products sold by competitors. The target price may be used to calculate the target cost. In an investment context, the target price is the price at which a stockholder is willing to sell his or her stock.

See Customer Relationship Management (CRM), target cost.

tariff – A tax on import or export trade.

An ad valorem tariff is set as a percentage of the value. A specific tariff is not based on the value.
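The difference between the two types can be sketched with a small calculation. The entry only says that a specific tariff is not based on value. Treating it as a fixed charge per unit of quantity is the usual meaning, but it is stated here as an assumption, and the function names and numbers are hypothetical.

```python
def ad_valorem_tariff(shipment_value, rate):
    """Tariff computed as a fraction of the declared value of the goods."""
    return shipment_value * rate


def specific_tariff(quantity, charge_per_unit):
    """Tariff computed from quantity only (assumed: a fixed charge per unit)."""
    return quantity * charge_per_unit


if __name__ == "__main__":
    # A shipment of 40 units with a declared value of 1000.
    print(ad_valorem_tariff(1000.0, 0.25))  # depends on value
    print(specific_tariff(40, 2.5))         # depends on quantity
```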

See facility location, General Agreement on Tariffs and Trade (GATT), tare weight, trade barrier.

task interleaving – A warehouse management term for combining tasks, such as picking, put away, and cycle counting, on a single trip to reduce deadheading (driving empty) for materials handling equipment.

The main idea of task interleaving is to reduce deadheading for materials handling equipment, such as forklift trucks in a warehouse or distribution center. Task interleaving is often used with put away, picking, and cycle counting tasks. For example, a stock picker might put away a pallet and then pick another pallet and move it to the loading dock. Although task interleaving is used primarily in pallet operations, it can be used with any type of materials handling equipment. Benefits of task interleaving include reduced travel time, increased productivity, less wear on lift trucks, reduced energy usage, and better on-time delivery.

Gilmore (2005) suggests that a Warehouse Management System (WMS) task interleaving system must consider permission, proximity, priority, and the age of the task (time). He also recommends that firms initially implement a WMS system without interleaving so personnel can learn the basics before trying interleaving.

See picking, slotting, warehouse, Warehouse Management System (WMS).

technological forecasting – The process of predicting the future characteristics and timing of technology.

The prediction usually estimates the future capabilities of a technology. The two major methods for technological forecasting include time series and judgmental methods. Time series forecasting methods for technological forecasting fit a mathematical model to historical data to extrapolate some variable of interest into the future. For example, the number of millions of instructions per second (MIPS) for a computer is fairly predictable using time series methods. (However, the underlying technology to achieve that performance will change at discrete points in time.) Judgmental forecasting may also be based on projections of the past, but information sources in such models rely on the subjective judgments of experts.

The growth pattern of a technological capability is similar to the growth of biological life. Technologies go through an invention phase, an introduction and innovation phase, a diffusion and growth phase, and a maturity phase. This is similar to the S-shaped growth of biological life. Technological forecasting helps estimate the timing of these phases. This growth curve forecasting method is particularly useful in determining the upper limit of performance for a specific technology.

See Delphi forecasting, forecasting, technology road map.

technology push – A business strategy that develops a high-tech/innovative product with the hope that the market will embrace it; in contrast, a market pull strategy develops a product in response to a market need.

See operations strategy.

technology road map – A technique used by many businesses and research organizations to plan the future of a particular process or product technology.

The goal of a technology roadmap is to anticipate externally driven technological innovations by mapping them on a timeline. The technology roadmap can then be linked with research, product development, marketing, and sourcing. Some of the benefits of technology roadmapping include:

• Support the organization’s strategic planning processes with respect to new technologies.

• Plan for the integration of new technologies into current products.

• Identify business opportunities for leveraging new technologies in new products.

• Identify needs for technical knowledge.

• Inform sourcing decisions, resource allocation, and risk management decisions.

One approach for structuring the technology roadmapping process is to use a large matrix on a wall to capture the ideas. The top row should be labeled Past → Now → Plans → Future → Vision. The left column has the rows labeled markets, products, technologies, and resources.

• The markets row is used to explore markets, customers, competitors, environment, industry, business trends, threats, objectives, milestones, and strategies.

• The products row is used to explore products, services, applications, performance capabilities, features, components, families, processes, systems, platforms, opportunities, requirements, and risks.

• The technologies row is used to map new technologies, competencies, and knowledge.

• The resources row is used for skills, partnerships, suppliers, facilities, infrastructure, science, and R&D projects.

Post-it Notes are then used to “map” and link each of these dimensions over time.

The Centre for Technology Management at the University of Cambridge has a number of publications on this topic at www.ifm.eng.cam.ac.uk/ctm/publications/tplan. Their standard “T-Plan” process includes four major steps that focus on (1) the market (performance dimensions, business drivers, SWOT, gaps), (2) the product (features, strategy, gaps), (3) technology (solutions, gaps), and (4) roadmapping (linking technology resources to future market requirements). Technology roadmapping software is offered by www.roadmappingtechnology.com. The University of Minnesota Technological Leadership Institute (TLI) (http://tli.umn.edu) makes technology roadmapping a key topic in many of its programs.

See disruptive technology, New Product Development (NPD), product life cycle management, technological forecasting.

technology transfer – The process of sharing skills, expertise, knowledge, processes, technologies, scientific research, and intellectual property across different organizations, such as research laboratories, governments, universities, joint ventures, or subsidiaries.

Technology transfer can occur in three ways: (1) giving it away through technical journals, conferences, or free training and technical assistance, (2) commercial transactions, such as licensing patent rights, marketing agreements, co-development activities, exchange of personnel, and joint ventures, and (3) theft through industrial espionage or reverse engineering.

See intellectual property (IP), knowledge management, reverse engineering.

telematics – The science of sending, receiving, and storing information via telecommunication devices.

According to Wikipedia, the word “telematics” is now widely associated with the use of Global Positioning System (GPS) technology integrated with computers and mobile communications technology in automotive and trucking navigation systems. This technology is growing in importance in the transportation industry. Some important applications of telematics include the following:

• In-car infotainment

• Navigation and location

• Intelligent vehicle safety

• Fleet management

• Asset monitoring

• Risk management

See Global Positioning System (GPS), logistics.

termination date – A calendar date by which a product will no longer be sold or supported.

Many manufacturing and distribution companies use a policy of having a “termination date” for products and components. A product (and its associated unique components) is no longer sold or supported after the termination date. The advantages of having a clearly defined termination date policy include:

• Provides a clear plan for every functional area in the organization that deals with products (manufacturing, purchasing, inventory, service, engineering, and marketing). This facilitates an orderly, coordinated phaseout of the item.

• Communicates to the salesforce and the market that the product will no longer be supported (or at least no longer be sold) after the termination date. This often provides incentive for customers to upgrade.

• Allows manufacturing and inventory planners to bring down the inventories for all unique components needed for the product in a coordinated way.

Best practices for a termination date policy include the following: (1) plan ahead many years to warn all stakeholders (this includes marketing, sales, product management, purchasing, and manufacturing), (2) ensure that all functions (and divisions) have “buy-in” to the termination date, and (3) do not surprise customers by terminating a product without proper notice.

See all-time demand, obsolete inventory, product life cycle management.

terms – A statement of a seller’s payment requirements.

Payment terms generally include discounts for prompt payment, if any, and the maximum time allowed for payment. The shipping terms determine who is responsible for the freight throughout the shipment. Therefore, a shipper will only be concerned about tracking the container to the point where another party takes ownership. This causes problems with container tracking because information may not be shared throughout all links in the supply chain.

See Accounts Payable (A/P), Cash on Delivery (COD), demurrage, FOB, Incoterms, invoice, waybill.

theoretical capacity – See capacity.

Theory of Constraints (TOC) – A management philosophy developed by Dr. Eliyahu M. Goldratt that focuses on the bottleneck resources to improve overall system performance.

The Theory of Constraints (TOC) recognizes that an organization usually has just one resource that defines its capacity. Goldratt (1992) argues that all systems are constrained by one and only one resource. As Goldratt states, “A chain is only as strong as its weakest link.” This is an application of Pareto’s Law to process management and process improvement. TOC concepts are consistent with managerial economics that teach that the setup cost for a bottleneck resource is the opportunity cost of the lost gross margin and that the opportunity cost for a non-bottleneck resource is nearly zero.

The “constraint” is the bottleneck, which is any resource that has capacity less than the market demand. Alternatively, the constraint can be defined as the process that has the lowest average processing rate for producing end products. The constraint (the bottleneck) is normally defined in terms of a resource, such as a machine, process, or person. However, the TOC definition of a constraint can also include tools, people, facilities, policies, culture, beliefs, and strategies. For example, this author observed that the binding constraint in a business school in Moscow in 1989 was the mindset of the dean (rector) who could not think beyond the limits of Soviet Communism, even for small issues, such as making photocopies.

The Goal (Goldratt 1992) and the movie of the same name include a character named Herbie who slowed down a troop of Boy Scouts as they hiked through the woods. Herbie is the “bottleneck” whose pace slowed down the troop. The teaching points of the story for the Boy Scouts are (1) they needed to understand that Herbie paced the operation (i.e., the troop could walk no faster than Herbie) and (2) they needed to help Herbie with his load (i.e., the other Scouts took some of Herbie’s bedding and food so Herbie could walk faster). In the end, the troop finished the hike on time because it had better managed Herbie, the bottleneck.

According to TOC, the overall performance of a system can be improved when an organization identifies its constraint (the bottleneck) and manages the bottleneck effectively. TOC promotes the following five-step methodology:

1. Identify the system constraint – No improvement is possible unless the constraint or weakest link is found. The constraint can often be discovered by finding the largest queue.

2. Exploit the system constraints – Protect the constraint (the bottleneck) so no capacity is wasted. Capacity can be wasted by (1) starving (running out of work to process), (2) blocking (running out of an authorized place to put completed work), (3) performing setups, or (4) working on defective or low-priority parts. Therefore, it is important to allow the bottleneck to pace the production process, not allow the bottleneck to be starved or blocked, focus setup reduction efforts on the bottleneck, increase lotsizes for the bottleneck, and inspect products before the constraint so no bottleneck time is wasted on defective parts.

3. Subordinate everything else to the system constraint – Ensure that all other resources (the unconstrained resources) support the system constraint, even if this reduces the efficiency of these resources. For example, the other processes can produce smaller lotsizes so the constrained resource is never starved. The unconstrained resources should never be allowed to overproduce.

4. Elevate the system constraints – If this resource is still a constraint, find more capacity. More capacity can be found by working additional hours, using alternate routings, purchasing capital equipment, or subcontracting.

5. Go back to Step 1 – After this constraint problem is solved, go back to the beginning and start over. This is a continuous process of improvement.
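Step 1 can be sketched in code using the alternative definition given earlier in this entry, where the constraint is the resource with the lowest average processing rate. The function names, resource names, and rates below are hypothetical, and a real analysis would also look at queues, product mix, and non-physical constraints such as policies.

```python
def find_constraint(rates_per_hour):
    """Return (name, rate) of the resource with the lowest average processing rate."""
    if not rates_per_hour:
        raise ValueError("at least one resource is required")
    name = min(rates_per_hour, key=rates_per_hour.get)
    return name, rates_per_hour[name]


def is_bottleneck(rate, market_demand_rate):
    """A resource is a bottleneck when its capacity is less than market demand."""
    return rate < market_demand_rate


if __name__ == "__main__":
    # Hypothetical three-step line, rates in units per hour.
    rates = {"cutting": 12, "welding": 8, "painting": 10}
    name, rate = find_constraint(rates)
    print(name, rate, is_bottleneck(rate, market_demand_rate=9))
```

In this made-up line, the whole system can ship no more than 8 units per hour, so extra capacity at cutting or painting adds nothing until welding is exploited or elevated.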

Underlying Goldratt’s work is the notion of synchronous manufacturing, which refers to the entire production process working in harmony to achieve the goals of the firm. When manufacturing is synchronized, its emphasis is on total system performance, not on localized measures, such as labor or machine utilization.

The three primary TOC metrics are throughput (T), inventory (I), and operating expenses (OE), often called T, I, and OE. Throughput is defined as sales revenue minus direct materials per time period. Inventory is defined as direct materials at materials cost. Operating expenses include both labor and overhead. Bottleneck management will result in increased throughput, reduced inventory, and the same or better operating expense.

See absorption costing, bill of resources, blocking, bottleneck, buffer management, CONWIP, critical chain, current reality tree, Drum-Buffer-Rope (DBR), facility layout, future reality tree, gold parts, Herbie, Inventory Dollar Days (IDD), lean thinking, opportunity cost, overhead, pacemaker, Pareto’s Law, process improvement program, routing, setup cost, setup time reduction methods, starving, synchronous manufacturing, throughput accounting, Throughput Dollar Days (TDD), transfer batch, utilization, variable costing, VAT analysis.

Theta Model – A forecasting model developed by Assimakopoulos and Nikolopoulos (2000) that combines a long-term and short-term forecast to create a new forecast; sometimes called the Theta Method.

The M3 Competition runs a “race” every few years to compare time series forecasting methods on hundreds of actual time series (Ord, Hibon, & Makridakis 2000). The winner of the 2000 competition was a relatively new forecasting method called the “Theta Model” developed by Assimakopoulos and Nikolopoulos (2000). This model was difficult to understand until Hyndman and Billah (2001) simplified the mathematics. More recently, Assimakopoulos and Nikolopoulos (2005) wrote their own simplified version of the model. Although the two simplified versions are very similar in intent, they are not mathematically equivalent.

Theta Model forecasts are the average (or some other combination) of a longer-term and a shorter-term forecast. The longer-term forecast can be a linear regression fit to the historical demand, and the shorter-term forecast can be a forecast using simple exponential smoothing. The Theta Model assumes that all seasonality has already been removed from the data using methods such as the centered moving average. The apparent reason for the success of this simple time series forecasting method is that the exponential smoothing component captures the “random walk” part of the time series, and the least squares regression trend line captures the longer-term trend.
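The combination described above can be sketched in plain Python. This is a simplified illustration of the idea in this entry (an equal-weight average of a least squares trend line and a simple exponential smoothing forecast), not a reproduction of either published formulation. The function names, the smoothing constant of 0.5, the initialization of the smoothed level at the first observation, and the sample data are all assumptions, and the series is assumed to be already deseasonalized.

```python
def linear_trend_forecast(y, horizon):
    """Fit a least squares line to y over t = 0..n-1 and extrapolate it."""
    n = len(y)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return [intercept + slope * (n - 1 + h) for h in range(1, horizon + 1)]


def ses_forecast(y, alpha, horizon):
    """Simple exponential smoothing; the forecast is flat at the last level."""
    level = y[0]  # assumption: initialize the level at the first observation
    for v in y[1:]:
        level = alpha * v + (1 - alpha) * level
    return [level] * horizon


def theta_style_forecast(y, alpha=0.5, horizon=2):
    """Equal-weight average of the longer-term and shorter-term forecasts."""
    long_term = linear_trend_forecast(y, horizon)
    short_term = ses_forecast(y, alpha, horizon)
    return [(a + b) / 2 for a, b in zip(long_term, short_term)]


if __name__ == "__main__":
    demand = [10, 12, 14, 16, 18]  # hypothetical deseasonalized demand
    print(theta_style_forecast(demand))
```

Because the smoothing forecast is flat and the trend line keeps rising, the averaged forecast grows at half the slope of the regression line in this sketch.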

See exponential smoothing, forecasting, linear regression.

Thiel’s U – An early Relative Absolute Error (RAE) measure of forecast errors developed by Henri Thiel (1966).

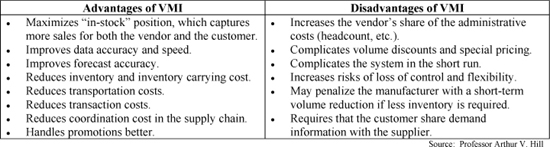

Thiel’s U statistic (or Thiel’s inequality coefficient) is a metric that compares forecasts to an upper bound on the naïve forecast from a random walk, which uses the actual value from the previous period as the forecast for this period (Thiel 1966). Thiel proposed two measures for forecast error that Armstrong calls U1 and U2. SAP uses still another variant of Thiel’s coefficient, labeled U3 below.

According to Armstrong and Collopy (1992), the U2 metric has better statistical properties than U1 or U3. Although Thiel’s U metrics are included in many forecasting tools and texts, Armstrong and Collopy do not recommend them because other RAE metrics are easier to understand and have better statistical properties.

See forecast error metrics, Mean Absolute Percent Error (MAPE), Relative Absolute Error (RAE).

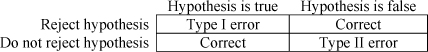

Third Party Logistics (3PL) provider – A firm that provides outsourced services, such as transportation, logistics, warehousing, distribution, and consolidation to customers but does not take ownership of the product.

Third Party Logistics providers (3PLs) are becoming more popular as companies seek to improve their customer service capabilities without making significant investments in logistics assets, networks, and warehouses. The 3PL may conduct these functions in the client’s facility using the client’s equipment or may use its own facilities and equipment. The parties in a supply chain relationship include the following:

• First party – The supplier

• Second party – The customer

• Third party (3PL) – A company that offers multiple logistics services to customers, such as transportation, distribution, inbound freight, outbound freight, freight forwarding, warehousing, storage, receiving, cross-docking, customs, order fulfillment, inventory management, and packaging. In the U.S., the legal definition of a 3PL in HR4040 is “a person who solely receives, holds, or otherwise transports a consumer product in the ordinary course of business but who does not take title to the product” (source: www.scdigest.com, 2009).

• Fourth party (4PL) – An organization that manages the resources, capabilities, and technologies of multiple service providers (such as 3PLs) to deliver a comprehensive supply chain solution to its clients.

With a 4PL, the supplier firm outsources its logistics to two or more specialist firms (3PLs) and then hires another firm (the 4PL) to coordinate the activities of the 3PLs. 4PLs differ from 3PLs in the following ways (source: www.scdigest.com, January 1, 2009):

• The 4PL organization is often a separate entity established as a joint venture or long-term contract between a primary client and one or more partners.

• The 4PL organization acts as a single interface between the client and multiple logistics service providers.

• All aspects of the client’s supply chain are managed by the 4PL organization.

• It is possible for a 3PL to form a 4PL organization within its existing structure.

See the International Warehouse Logistics Association (www.iwla.com) site for more information.

See bullwhip effect, consolidation, customer service, fulfillment, joint venture, logistics, receiving, warehouse.

throughput accounting – Accounting principles based on the Theory of Constraints developed by Goldratt.

Throughput is the rate at which an organization generates money through sales. Goldratt defines throughput as the difference between sales revenue and unit-level variable costs, such as materials and power. Cost is the most important driver for many operations decisions, yet costs are unreliable due to the arbitrary allocation of overhead, even with Activity Based Costing (ABC). Because the goal of the firm is to make money, operations can contribute to this goal by managing three variables:

• Throughput (T) = Revenue less materials cost less out-of-pocket selling costs (Note that this is a rate and is not the same as the throughput time.)

• Inventory (I) = Direct materials cost and other truly variable costs with no overhead

• Operating expenses (OE) = Overhead and labor cost (the things that turn the “I” into “T”)

Throughput accounting is a form of contribution accounting, where all labor and overhead costs are ignored. The only cost that is considered is the direct materials cost. Throughput accounting is applied to the bottleneck (the constraint) using the following key performance measurements: output, setup time (average setup time by product and total setup time per period), downtime (planned and emergency), and yield rate. The bottleneck has the greatest impact on the throughput accounting measures (T, I, and OE), which in turn affect the goal of making money for the firm. Noreen, Smith, and Mackey (1995) is a good reference on this subject.
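The throughput definition in the list above can be sketched as a small calculation. The function names and the figures are hypothetical. The net profit relation (throughput minus operating expenses) is common in the TOC literature but is not stated in this entry, so it is included here as an assumption.

```python
def throughput(revenue, materials_cost, selling_costs):
    """T = revenue less materials cost less out-of-pocket selling costs, per period."""
    return revenue - materials_cost - selling_costs


def net_profit(throughput_value, operating_expenses):
    """Assumed relation from general TOC usage: net profit = T - OE."""
    return throughput_value - operating_expenses


if __name__ == "__main__":
    # Hypothetical figures for one period.
    t = throughput(revenue=1000, materials_cost=400, selling_costs=50)
    print(t, net_profit(t, operating_expenses=300))
```

Labor and overhead appear only in operating expenses, so they never enter the per-unit throughput figure.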

See absorption costing, Activity Based Costing (ABC), focused factory, Inventory Dollar Days (IDD), overhead, Theory of Constraints (TOC), Throughput Dollar Days (TDD), variable costing, Work-in-Process (WIP) inventory.

Throughput Dollar Days (TDD) – A Theory of Constraints (TOC) measure of the reliability of a supply chain defined in terms of the dollar days of late orders.

The entry Inventory Dollar Days (IDD) has much more detail on this measure.

See Inventory Dollar Days (IDD), operations performance metrics, Theory of Constraints (TOC), throughput accounting.

throughput ratio – See value added ratio.

throughput time – See cycle time.

tier 1 supplier – A sourcing term for an immediate supplier; a tier 2 supplier is a supplier to a supplier.

See purchasing, supply chain management.

time bucket – A time period used in planning and forecasting systems.

The time bucket (period) for most MRP systems is a day. These systems are often called bucketless because they use date-quantity detail rather than weekly or monthly time buckets. In contrast, many forecasting systems forecast in monthly or weekly time buckets.

See Croston’s Method, exponential smoothing, finite scheduling, forecasting, Materials Requirements Planning (MRP), production planning.

time burglar – A personal time management term that refers to anything (including a person) that steals time from someone else; someone who wastes the time of another person.

Time burglars are people who often stop by and ask, “Got a minute?” and then proceed to launch into 20 minutes of low-value discussion. Some situations, such as a friend stopping by to say “hello,” are just minor misdemeanors, but disruptions that arrive during a critical work situation could be considered a crime.

The key time management principle for managers is to explain the situation and then offer to schedule another time for a visit. A reasonable script is, “I can see this is going to take some more time to discuss. Let’s schedule some time to talk about this further. When is a good time for you?”

The term “time burglar” can also be applied to junk e-mail, unnecessary meetings, too much TV, surfing the Internet, and other time-wasting activities. Time burglars are everywhere, so stop them before they strike.

See personal operations management.

time fence – A manufacturing planning term used for a time period during which the Master Production Schedule (MPS) cannot be changed; sometimes called a planning time fence.

The time fence is usually defined by a planning period in days (e.g., 21 days) during which the Master Production Schedule (MPS) cannot be altered and is said to be frozen. The time fence separates the MPS into a firm order period followed by a tentative time period. The purpose of a time fence policy is to reduce short-term schedule disruptions for both manufacturing and suppliers and improve on-time delivery for customers by stabilizing the MPS and reducing “system nervousness.”
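A time fence check can be sketched as a simple date comparison. The function name is hypothetical, and the boundary convention (today counts as frozen, and the day exactly at the end of the fence does not) is an assumption, since real planning systems define the boundaries in their own ways.

```python
from datetime import date, timedelta


def is_frozen(order_date, today, fence_days=21):
    """Return True when order_date falls inside the time fence.

    Assumption: the frozen period runs from today (inclusive)
    up to today + fence_days (exclusive).
    """
    return today <= order_date < today + timedelta(days=fence_days)


if __name__ == "__main__":
    today = date(2024, 1, 1)
    print(is_frozen(date(2024, 1, 10), today))  # inside the 21-day fence
    print(is_frozen(date(2024, 1, 22), today))  # first day of the tentative period
```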

Oracle makes a distinction between planning, demand, and release time fences. More on this topic can be found at http://download.oracle.com/docs/cd/A60725_05/html/comnls/us/mrp/tfover.htm (January 11, 2011).

See cumulative leadtime, firm planned order, Master Production Schedule (MPS), Materials Requirements Planning (MRP), planned order, planning horizon, premium freight, Sales & Operations Planning (S&OP).

time in system – The total start to finish time for a customer or customer order; also called customer leadtime.

The time in system is the turnaround time for a customer or customer order. In the queuing context, time in system is the sum of the wait time (the time in queue before service begins) and the service time.

Do not confuse the actual time in system for a single customer, the average time in system across a number of customers, and the planned time in system parameter, which is used for planning purposes.

See customer leadtime, cycle time, leadtime, Little’s Law, queuing theory, turnaround time, wait time.

time management – See personal operations management.

time study – A work measurement practice of collecting data on work time by observation, typically using a stop watch or some other timing device.

The average actual time for a worker in the time study is adjusted by his or her performance rating to determine the normal time for a task. The standard time is then the normal time with an allowance for breaks.
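The arithmetic above can be sketched as follows. This is a minimal illustration with hypothetical function names and made-up observations; it assumes the allowance is applied as a fraction of normal time (some firms instead divide normal time by one minus the allowance).

```python
def normal_time(observed_times, performance_rating):
    """Average observed time adjusted by the worker's performance rating."""
    average = sum(observed_times) / len(observed_times)
    return average * performance_rating


def standard_time(normal, allowance):
    """Normal time plus an allowance for breaks.

    Assumption: the allowance is a fraction of normal time.
    """
    return normal * (1 + allowance)


# Hypothetical data: three stopwatch readings (minutes), a worker rated at
# 110% of normal pace, and a 15% allowance.
nt = normal_time([2.0, 2.2, 1.8], performance_rating=1.10)
st = standard_time(nt, allowance=0.15)
print(round(nt, 2), round(st, 2))
```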

See normal time, performance rating, scientific management, standard time, work measurement, work sampling.

time to market – The time it takes to develop a new product from an initial idea (the product concept) to initial market sales; sometimes called speed to market.

In many industries, a short time to market can provide a competitive advantage, because the firm “first to market” with a new product can command a higher margin, capture a larger market share, and establish its brand as the strongest brand in the market. Precise definitions of the starting and ending points vary from one firm to another, and may even vary between products within a single firm. The time to market includes both product design and commercialization. Time to volume is a closely related concept.

See clockspeed, market share, New Product Development (NPD), product life cycle management, time to volume, time-based competition.

time to volume – The time from the start of production to the start of large-scale production.

See New Product Development (NPD), time to market.

time-based competition – A business strategy to (a) shorten time to market for new product development, (b) shorten manufacturing cycle times to improve quality and reduce cost, and (c) shorten customer leadtimes to stimulate demand.

Stalk (1988) and Stalk and Hout (1990) make strong claims about the profitability of a time-based strategy. The strategy map entry presents a causal map that summarizes and extends this competition strategy. The benefits of a time-based competition strategy include: (1) segmenting the demand to target the time-sensitive and price-insensitive customers and increase margins, (2) reducing work-in-process and finished goods inventory, (3) driving out non-value-added activities (e.g., through JIT and lean manufacturing concepts), and (4) bringing products to market faster. These concepts are consistent with the Theory of Constraints and lean thinking.

See agile manufacturing, flow, operations strategy, Quick Response Manufacturing, resilience, time to market, value added ratio.

Time Phased Order Point (TPOP) – An extension of the reorder point system that uses the planned future demand to estimate the date when the inventory position will hit the safety stock level; it then backschedules using the planned leadtime from that date to determine a start date for a planned order.

The Time Phased Order Point (TPOP) system is used to determine the order timing in all Materials Requirements Planning (MRP) systems. TPOP uses the gross requirements (the planned demand) to determine the date when the planned inventory position will hit the safety stock level. It then uses the planned leadtime to plan backward in time (backschedule) to determine the start date for the next planned order. The lotsize (order quantity) can be determined with any lotsizing rule, such as lot-for-lot, fixed order quantity, EOQ, day’s supply, week’s supply, etc. TPOP can be used over the planning horizon to create many planned orders.
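The backscheduling logic described above might be sketched as follows. This is a simplified illustration, not the logic of any particular MRP package: the function name is hypothetical, scheduled receipts are ignored, periods are zero-indexed time buckets, and an order is triggered when the projected inventory falls below the safety stock.

```python
def tpop_next_planned_order(on_hand, gross_requirements, safety_stock, lead_time):
    """Return (need_period, start_period) for the next planned order, or None.

    Assumptions (not from the source): no scheduled receipts, zero-indexed
    periods, and a trigger when projected inventory drops below safety stock.
    A negative start_period means the order is already past due.
    """
    projected = on_hand
    for period, demand in enumerate(gross_requirements):
        projected -= demand                      # planned inventory after demand
        if projected < safety_stock:             # safety stock would be violated
            return period, period - lead_time    # backschedule by the leadtime
    return None                                  # no order needed in the horizon


# Hypothetical data: 100 units on hand, 30 units of planned demand per period,
# safety stock of 20 units, and a planned leadtime of 2 periods.
result = tpop_next_planned_order(100, [30, 30, 30, 30], safety_stock=20, lead_time=2)
print(result)  # (2, 0): needed in period 2, so start in period 0
```

The lotsize for the planned order is deliberately left out, because any lotsizing rule can supply it.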

See lotsizing methods, Materials Requirements Planning (MRP), reorder point, safety stock.

time series forecasting – A forecasting method that identifies patterns in historical data to make forecasts for the future; also called intrinsic forecasting.

A time series is a set of historical values listed in time order (such as a sales history). A time series can be broken (decomposed) into a level (or mean), trend, and seasonal patterns. If the level, trend, and seasonal patterns are removed from a time series, all that remains is what appears to be random error (white noise). Box-Jenkins methods attempt to identify and model the autocorrelation (serial correlation) structure in this error.

A moving average is the simplest time series forecast method, but it is not very accurate because it does not include either trend or seasonal patterns. The Box-Jenkins method is the most sophisticated, but is more complicated than most managers can handle. The exponential smoothing model with trend and seasonal factors is a good compromise for most firms.

Univariate time series methods simply extrapolate a single time series into the future. Multivariate time series methods consider historical data for several related variables to make forecasts.

See autocorrelation, Box-Jenkins forecasting, Croston’s Method, Durbin-Watson statistic, exponential smoothing, forecasting, linear regression, moving average, seasonality, trend.

time-varying demand lotsizing problem – The problem of finding the set of lotsizes that will “cover” the demand over the time horizon and will minimize the sum of the ordering and carrying costs.

Common approaches for solving this problem include the Wagner-Whitin lotsizing algorithm, the Period Order Quantity (POQ), the Least Total Cost method, the Least Unit Cost method, and the Economic Order Quantity. Only the Wagner-Whitin algorithm is guaranteed to find the optimal solution. All other lotsizing methods are heuristics; however, the cost penalty in using these heuristics is generally small.
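As an illustration of the optimal approach, the following is a minimal dynamic programming sketch in the spirit of the Wagner-Whitin algorithm. The function name and data are hypothetical. It assumes a fixed ordering cost per order, a carrying cost charged per unit for each period a unit is carried, no capacity limits, and positive demand in the first period.

```python
def wagner_whitin(demand, order_cost, carry_cost):
    """Return (minimum total cost, lotsize per period) for a demand schedule."""
    n = len(demand)
    # best[t] = minimum cost of covering the demand in periods 1..t
    best = [0.0] + [float("inf")] * n
    last_order = [0] * (n + 1)
    for t in range(1, n + 1):
        for j in range(1, t + 1):        # the last order is placed in period j
            holding = sum(carry_cost * (k - j) * demand[k - 1]
                          for k in range(j, t + 1))
            cost = best[j - 1] + order_cost + holding
            if cost < best[t]:
                best[t], last_order[t] = cost, j
    # Trace back through the order periods to recover the lotsizes.
    lots = [0] * n
    t = n
    while t > 0:
        j = last_order[t]
        lots[j - 1] = sum(demand[j - 1:t])
        t = j - 1
    return best[n], lots


# Hypothetical data: four periods of demand, $100 per order, $1 per unit-period.
cost, lots = wagner_whitin([50, 60, 90, 70], order_cost=100, carry_cost=1)
print(cost, lots)  # 330.0 [110, 0, 160, 0]
```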

See Economic Order Quantity (EOQ), lotsizing methods, Period Order Quantity (POQ), Wagner-Whitin lotsizing algorithm.

TOC – See Theory of Constraints.

tolerance – An allowable variation from a predefined standard; also called specification limits.

All processes have some randomness, which means that no manufacturing process will ever produce parts that exactly achieve the “nominal” (target) value. Therefore, design engineers define tolerance (or specification) limits that account for this “common cause variation.” A variation from the standard is not considered significant unless it exceeds the upper or lower tolerance (specification) limit. Taguchi takes a different approach to this issue and creates a loss function around the nominal value rather than setting limits.

See common cause variation, Lot Tolerance Percent Defective (LTPD), process capability and performance, Taguchi methods.

ton-mile – A measure of freight traffic equal to moving one ton of freight one mile. See logistics.

tooling – The support devices required to operate a machine.

Tooling usually includes jigs, fixtures, cutting tools, molds, and gauges. In some manufacturing contexts, the requirements for specialized tools are specified in the bill of materials. Tooling is often stored in a tool crib.

See fixture, Gauge R&R, jig, manufacturing processes, mold.

total cost of ownership – The total cost that a customer incurs from before the purchase until the final and complete disposal of the product; also known as life cycle cost.

Some of these costs include search costs, purchase (acquisition) cost, purchasing administration, shipping (delivery), expediting, premium freight, transaction cost, inspection, rework, scrap, switching cost, installation cost, training cost, government license fees, royalty fees, Maintenance Repair Operations (service contracts, parts, labor, consumables, repair), information systems costs (support products, upgrades), inventory carrying cost, inventory redistribution/redeployment cost (moving inventory to a new location), insurance, end of life disposal cost, and opportunity costs (downtime, lost productive time, lost sales, lost profits, brand damage).

Life cycle cost is very similar to the total cost of ownership. The only difference is that life cycle cost identifies cost drivers based on the stage in the product life cycle (introduction, growth, maturity, and decline). Both total cost of ownership and life cycle cost can have an even broader scope that includes research and development, design, marketing, production, and logistics costs.

See financial performance metrics, purchasing, search cost, switching cost, transaction cost.

Total Productive Maintenance (TPM) – A systematic approach to ensure uninterrupted and efficient use of equipment; also called Total Productive Manufacturing.

Total Productive Maintenance (TPM) is a manufacturing-led collaboration between operations and maintenance that combines preventive maintenance concepts with the kaizen philosophy of continuous improvement. With TPM, maintenance takes on its proper meaning to “maintain” rather than just repair. TPM, therefore, focuses on preventive and predictive maintenance rather than only on emergency maintenance. Some leading practices related to TPM include:

• Implement a 5S program with a standardized work philosophy.

• Apply predictive maintenance tools where appropriate.

• Use an information system to create work orders for regularly scheduled preventive maintenance.

• Use an information system to maintain a repair history for each piece of equipment.

• Apply autonomous maintenance, which is the concept of using operators to inspect and clean equipment without heavy reliance on mechanics, engineers, or maintenance people. (This is in contrast to the old thinking which required operators to wait for mechanics to maintain and fix their machines.)

• Clearly define cross-functional duties.

• Train operators to handle equipment related issues.

• Measure performance with Overall Equipment Effectiveness (OEE).

Some indications that a TPM program might be needed include frequent emergency maintenance events, long downtimes, high repair costs, reduced machine speeds, high defects and rework, long changeovers, high startup losses, high Mean Time to Repair (MTTR), and low Mean Time Between Failure (MTBF). Some of the benefits for a well-run TPM program include reduced cycle time, improved operational efficiency, improved OEE, improved quality, and reduced maintenance cost.

See 5S, autonomous maintenance, bathtub curve, downtime, maintenance, Maintenance-Repair-Operations (MRO), Manufacturing Execution System (MES), Mean Time Between Failure (MTBF), Mean Time to Repair (MTTR), Overall Equipment Effectiveness (OEE), reliability, reliability engineering, Reliability-Centered Maintenance (RCM), standardized work, Weibull distribution, work order.

Total Productive Manufacturing (TPM) – See Total Productive Maintenance (TPM).

Total Quality Management (TQM) – An approach for improving quality that involves all areas of the organization, including sales, engineering, manufacturing, and purchasing, with a focus on employee participation and customer satisfaction.

Total Quality Management (TQM) can involve a wide variety of quality control and improvement tools. TQM pioneers, such as Juran (1986), Deming (1986, 2000), and Crosby (1979) emphasized a combination of managerial principles and statistical tools. This term has been largely supplanted by lean sigma and lean programs and few practitioners or academics use this term today. The quality management, lean sigma, and lean entries provide much more information on this subject.

See causal map, defect, Deming’s 14 points, inspection, lean sigma, Malcolm Baldrige National Quality Award (MBNQA), PDCA (Plan-Do-Check-Act), quality management, quality trilogy, stakeholder analysis, Statistical Process Control (SPC), Statistical Quality Control (SQC), zero defects.

touch time – The direct value-added processing time.

See cycle time, run time, value added ratio.

Toyota Production System (TPS) – An approach to manufacturing developed by Eiji Toyoda and Taiichi Ohno at Toyota Motor Company in Japan; some people use TPS synonymously with lean thinking.

See autonomation, jidoka, Just-in-Time (JIT), lean thinking, muda.

T-plant – See VAT analysis.

TPM – See Total Productive Maintenance (TPM).

TPOP – See Time Phased Order Point (TPOP).

TPS – See Toyota Production System (TPS).

TQM – See Total Quality Management (TQM).

traceability – The capability to track items (or batches of items) through a supply chain; also known as lot traceability, serial number traceability, lot tracking, and chain of custody.

Lot traceability is the ability to track lots (batches of items) forward from raw materials through manufacturing and ultimately to end customers and also backward from end consumers back to the raw materials. Lot traceability is particularly important for food safety in food supply chains.

Serial number traceability is the ability to track individual “serialized” items and is important in medical device supply chains. Petroff and Hill (1991) provide suggestions for designing lot and serial number traceability systems.

See Electronic Product Code (EPC), part number, supply chain management.

tracking signal – An exception report given when the forecast error is consistently positive or negative over time (i.e., the forecast error is biased).

The exception report signals the manager or analyst to intervene in the forecasting process. The intervention might involve manually changing the forecast, the trend, underlying average, and seasonal factors, or changing the parameters for the forecasting model. The intervention also might require canceling orders and managing both customer and supplier expectations.

Tracking signal measurement – The tracking signal is measured as the forecast bias divided by a measure of the average size of the forecast error.

Measures of forecast bias – The simplest measure of the forecast bias is to accumulate the forecast error over time (the cumulative sum) with the recursive equation $R_t = R_{t-1} + E_t$, where $R_t$ is the running sum of the errors and $E_t$ is the forecast error in period t. The running sum of the errors is a measure of the bias and an exception report is generated when $R_t$ gets “large.” Another variant is to use the smoothed average error instead of the running sum of the error. The smoothed error is defined as $SE_t = (1 - \alpha)SE_{t-1} + \alpha E_t$.

Measures of the average size of the forecast error – One measure of the size of the average forecast error is the Mean Absolute Deviation (MAD). The MAD is defined as $MAD = \frac{1}{T}\sum_{t=1}^{T}|E_t|$, where T is the number of periods of history. A more computationally efficient approach to measure the MAD is with the smoothed mean absolute error, which is defined as $SMAD_t = (1 - \alpha)SMAD_{t-1} + \alpha|E_t|$, where alpha (α) is the smoothing constant (0 < α < 1). Still another approach is to replace the smoothed mean absolute deviation ($SMAD_t$) with the square root of the smoothed mean squared error, where the smoothed mean squared error is defined as $SMSE_t = (1 - \alpha)SMSE_{t-1} + \alpha E_t^2$. In other words, the average size of the forecast error can be measured as $\sqrt{SMSE_t}$. The smoothed MAD is the most practical approach for most firms.

In summary, a tracking signal is a measure of the forecast bias relative to the average size of the forecast error and is defined by TS = bias/size. Forecast bias can be measured as the running sum of the error ($R_t$) or the smoothed error ($SE_t$); the size of the forecast error can be measured with the MAD, the smoothed mean absolute error ($SMAD_t$), or the square root of the smoothed mean squared error ($\sqrt{SMSE_t}$). It is not clear which method is best.
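One of these combinations, the smoothed error divided by the smoothed MAD, can be sketched as follows. The function name, the zero starting values, and the default smoothing constant are illustrative assumptions rather than recommendations from the source.

```python
def tracking_signals(errors, alpha=0.1):
    """Return the tracking signal (smoothed error / smoothed MAD) per period.

    Assumptions: both smoothed values start at zero and share one smoothing
    constant; the signal is reported as 0 until some error has been observed.
    """
    smoothed_error = 0.0
    smoothed_mad = 0.0
    signals = []
    for error in errors:
        smoothed_error = (1 - alpha) * smoothed_error + alpha * error
        smoothed_mad = (1 - alpha) * smoothed_mad + alpha * abs(error)
        signals.append(smoothed_error / smoothed_mad if smoothed_mad > 0 else 0.0)
    return signals


# Hypothetical forecast errors: a consistently positive (biased) series and an
# alternating (unbiased) series.
biased = tracking_signals([5, 4, 6, 5])
unbiased = tracking_signals([5, -5, 5, -5])
print(biased[-1], round(unbiased[-1], 3))
```

With this definition the signal stays between −1 and +1, so an exception report could be triggered when its absolute value exceeds a chosen threshold; a suitable threshold is a judgment call that the source does not specify.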

See cumulative sum control chart, demand filter, exponential smoothing, forecast bias, forecast error metrics, forecasting, Mean Absolute Deviation (MAD), Mean Absolute Percent Error (MAPE), mean squared error (MSE).

trade barrier – Any governmental regulation or policy, such as a tariff or quota, that restricts imports or exports.

See tariff.

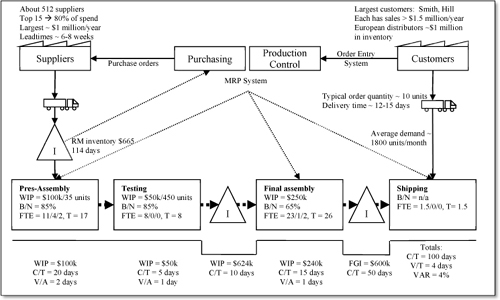

trade promotion allowance – A discount given to retailers and distributors by a manufacturer to promote products; retailers and distributors sponsor advertising and other promotional activities or pass the discount along to consumers to encourage sales; also known as trade allowance, cooperative advertising allowance, advertising allowance, and ad allowance.

Trade promotions include slotting allowances, performance allowances, case allowances, and account specific promotions. Promotions can include newspaper advertisements, television and radio programs, in-store sampling programs, and slotting fees. Trade promotions are common in the consumer packaged goods (CPG) industry.

See consumer packaged goods, slotting.

traffic management – See Transportation Management System (TMS).

trailer – A vehicle pulled by another vehicle (typically a truck or tractor) used to transport goods on roads and highways; also called semitrailer, tractor trailer, rig, reefer, flatbed; in England, called articulated lorry.

Trailers are usually enclosed vehicles with a standard length of 45, 48, or 53 feet, internal width of 98 to 99 inches, and internal height of 105 to 110 inches. Refrigerated trailers are known as reefers and have an internal width of 90 to 96 inches and height of 96 to 100 inches. Semi-trailers usually have three axles, with the front axle having two wheels and the back two axles each having two pairs of wheels for a total of 10 wheels.

See Advanced Shipping Notification (ASN), cube utilization, dock, intermodal shipments, less than truck load (LTL), logistics, shipping container, Transportation Management System (TMS).

transaction cost – The cost of processing one transaction, such as a purchase order.

In a supply chain management context, this is the cost of processing one purchase order.

See search cost, switching cost, total cost of ownership.

transactional process improvement – Improving repetitive non-manufacturing activities.

The term “transactional process improvement” is used by many lean sigma consultants to describe efforts to improve non-manufacturing processes in manufacturing firms and also improve processes in service organizations. Examples include back-office operations (e.g., accounting, human resources) and front-office operations (order-entry, customer registration, teller services).

The entities that flow through these processes may be information on customers, patients, lab specimens, etc. and may be stored on paper or in electronic form. The information often has to travel across several departments. Lean sigma programs can often improve transactional processes by reducing non-value-added steps, queue time, cycle time, travel time, defects, and cost while improving customer satisfaction.

See lean sigma, lean thinking, service management, waterfall scheduling.

transfer batch – A set of parts that is moved in quantities less than the production batch size.

When a batch of parts is started on a machine, smaller batches can be moved (transferred) to the following machines while the large batch is still being produced. The smaller batch sizes are called transfer batches, whereas the larger batch produced on the first machine is called a production batch. The practice of allowing some units to move to the next operation before all units have completed the previous operation is called operation overlapping. The Theory of Constraints literature promotes this concept to reduce total throughput time and total work in process inventory. When possible, transfer batches should be used at the bottleneck to allow for large production batch sizes, without requiring large batch sizes after the bottleneck. It is important to have large batch sizes at the bottleneck to avoid wasting valuable bottleneck capacity on setups.

See bottleneck, lotsizing methods, pacemaker, Theory of Constraints (TOC).

transfer price – The monetary value assigned to goods, services, or rights traded between units of an organization.

One unit of an organization charges a transfer price to another unit when it provides goods or services. The transfer price is usually based on a standard cost and is not considered a sale (with receivables) or a purchase (with payables). Some international firms use transfer prices (and related product costs) to shift profits from high-tax countries to low-tax countries to minimize taxes.

See cost of goods sold, purchasing.

transportation – See logistics.

Transportation Management System (TMS) – An information system that supports transportation and logistics management; also called fleet management, transportation, and traffic management systems.

Transportation Management Systems (TMSs) are information systems that manage transportation operations of all types, including shippers, ocean, air, bus, rail, taxi, moving companies, transportation rental agencies and all types of activities, including shipment scheduling through inbound, outbound, intermodal, and intra-company shipments. TMSs can track and manage every aspect of a transportation system, including fleet management, vehicle maintenance, fuel costing, routing and mapping, warehousing, communications, EDI, traveler and cargo handling, carrier selection and management, accounting, audit and payment claims, appointment scheduling, and yard management. Most TMSs provide information on rates, bills of lading, load planning, carrier selection, posting and tendering, freight bill auditing and payment, loss and damage claims processing, labor planning and assignment, and documentation management.

Many TMSs also provide GPS navigation and terrestrial communications technologies to enable government authorities and fleet operators to better track, manage, and dispatch vehicles. With these technologies, dispatchers can locate vehicles and respond to emergencies, send a repair crew, and notify passengers of delays.

The main benefits of a TMS include lower freight costs (through better mode selection, route planning, and route consolidation) and better customer service (better shipment tracking, increased management visibility, and better on-time delivery). A TMS can provide improved visibility of containers and products, aid in continuous movements of products, and reduce empty miles.

See Advanced Shipping Notification (ASN), cross-docking, Electronic Data Interchange (EDI), intermodal shipments, logistics, materials management, on-time delivery (OTD), Over/Short/Damaged Report, trailer, Warehouse Management System (WMS), waybill.

transportation problem – A mathematical programming problem of finding the optimal number of units to send from location i to location j to minimize the total transportation cost.

The transportation problem is usually shown as a table or a matrix. The problem is to determine how many units should be shipped from each “factory” (row) to each “market” (column). Each factory has limited capacity and each market has limited demand.

The transshipment problem is an extension of the transportation problem that allows for intermediate nodes between the supply and demand nodes. Transportation and transshipment problems can be extended to handle multiple periods where the product is “shipped” from one period to the next with an associated carrying cost.

The size of these problems can become quite large, but network algorithms can handle large networks efficiently. However, network algorithms only allow for a single commodity (product) to be shipped. More general linear programming and integer programming approaches can be used when the firm has multiple products. Unfortunately, the solution algorithms for these approaches are far less efficient. The transportation and transshipment problems can be solved with special-purpose algorithms, network optimization algorithms, or with general purpose linear programming algorithms. Even though they are both integer programming problems, they can be solved with any general linear programming package and can still be guaranteed to produce integer solutions because the problems are unimodular.

The mathematical statement for the transportation problem with N factories (sources) and M markets (demands) is as follows:

Transportation problem: Minimize $\sum_{i=1}^{N}\sum_{j=1}^{M} c_{ij}x_{ij}$

Subject to $\sum_{i=1}^{N} x_{ij} \ge D_j$, for j = 1, 2, ... , M and $\sum_{j=1}^{M} x_{ij} \le C_i$, for i = 1, 2, ... , N

where cij is the cost per unit of shipping from factory i to market j, xij is the number of units shipped from factory i to market j, Dj is the demand in units for market j, and Ci is the capacity (in units) for factory i. The goal is to minimize total transportation cost. The first constraint ensures that all demand is met. The second constraint ensures that production does not exceed capacity.

The transportation model is often formulated with equality constraints. This often requires either a “dummy” plant to handle market demand in excess of capacity or a “dummy” market to handle capacity in excess of market demand. The cost per unit for shipping from the dummy plant is the cost of a lost sale; the cost of shipping to the dummy market is the cost of excess capacity.
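The balancing step described above can be sketched as follows. The function name and data are hypothetical, and the sketch only builds the balanced (equality-constrained) problem; it does not solve it.

```python
def balance_transportation_problem(costs, capacity, demand,
                                   lost_sale_cost, excess_capacity_cost):
    """Add a dummy plant (row) or dummy market (column) so supply equals demand.

    costs[i][j] is the unit shipping cost from factory i to market j.
    Returns new (costs, capacity, demand) lists; the inputs are not modified.
    """
    costs = [row[:] for row in costs]
    capacity, demand = capacity[:], demand[:]
    total_capacity, total_demand = sum(capacity), sum(demand)
    if total_demand > total_capacity:
        # Dummy plant absorbs unmet demand at the cost of a lost sale.
        costs.append([lost_sale_cost] * len(demand))
        capacity.append(total_demand - total_capacity)
    elif total_capacity > total_demand:
        # Dummy market absorbs unused capacity at the cost of excess capacity.
        for row in costs:
            row.append(excess_capacity_cost)
        demand.append(total_capacity - total_demand)
    return costs, capacity, demand


# Hypothetical data: two factories with 110 units of capacity, two markets
# with 90 units of demand, so a dummy market of 20 units is added.
new_costs, new_capacity, new_demand = balance_transportation_problem(
    [[4, 6], [5, 3]], [50, 60], [40, 50],
    lost_sale_cost=20, excess_capacity_cost=0)
print(new_costs, new_capacity, new_demand)
```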

See algorithm, assignment problem, linear programming (LP), logistics, network optimization, operations research (OR), transshipment problem, Traveling Salesperson Problem (TSP).

transshipment problem – A mathematical programming term for a generalization of the transportation problem that allows for intermediate points between supply and demand nodes.

The transportation problem finds the optimal quantities to be shipped from a set of supply nodes to a set of demand nodes given the quantities available at each supply node, the quantities demanded at each demand node, and the cost per unit to ship along each arc between the supply and demand nodes. In contrast, the transshipment problem allows transshipment nodes to be between the supply and demand nodes. Any transshipment problem can be converted into an equivalent transportation problem and solved using an algorithm for the transportation problem. Transshipment problems can also be solved by any network optimization model. Both transportation and transshipment problems can handle only one commodity (type of product). Linear and mixed integer linear programs are more general and can handle multiple commodity network optimization problems.

See network optimization, operations research (OR), transportation problem.

traveler – See shop packet.

Traveling Salesperson Problem (TSP) – The problem of finding the minimum cost (distance or travel time) sequence for a single vehicle to visit a set of cities (nodes, locations), visiting each city exactly once, and returning to the starting city; also spelled travelling salesperson problem; formerly known as the Traveling Salesman Problem.

The Traveling Salesperson Problem (TSP) is one of the most studied problems in operations research and many methods are available for solving the problem. The methods can be divided into optimal (“exact”) methods and heuristics. Optimal methods are guaranteed to find the best (lowest cost or lowest travel time) solution, but the computing time can be extremely long and increases exponentially as the number of cities (N) increases. On the other hand, many heuristic methods are computationally fast, but may find solutions that are far from optimal.
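The contrast between the two families of methods can be illustrated with a small sketch. Complete enumeration stands in for an exact method and the nearest neighbor rule stands in for a heuristic; both were chosen here only for brevity, and the distance matrix is made up.

```python
from itertools import permutations


def tour_cost(tour, dist):
    """Total cost of visiting the nodes in order and returning to the start."""
    return sum(dist[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))


def exact_tsp(dist):
    """Enumerate every tour that starts at node 0 (only practical for tiny N)."""
    n = len(dist)
    best = min(((0,) + p for p in permutations(range(1, n))),
               key=lambda tour: tour_cost(tour, dist))
    return list(best), tour_cost(best, dist)


def nearest_neighbor(dist, start=0):
    """Heuristic: always travel to the closest unvisited node."""
    tour = [start]
    unvisited = set(range(len(dist))) - {start}
    while unvisited:
        nxt = min(unvisited, key=lambda j: dist[tour[-1]][j])
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour, tour_cost(tour, dist)


# Hypothetical symmetric distance matrix for four nodes (node 0 is the depot).
dist = [[0, 1, 2, 2],
        [1, 0, 1, 3],
        [2, 1, 0, 10],
        [2, 3, 10, 0]]
print(exact_tsp(dist))         # ([0, 2, 1, 3], 8)
print(nearest_neighbor(dist))  # ([0, 1, 2, 3], 14)
```

In this instance the heuristic is forced to finish with the expensive arc between nodes 2 and 3, so its tour costs 14 compared with the optimal cost of 8.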

Extensions of the problem include the multiple-vehicle TSP and the Vehicle Scheduling Problem (VSP). The VSP can involve multiple vehicles, time window constraints on visiting each node, capacity constraints on each vehicle, total distance and time constraints for each vehicle, and demand requirements for each node. The Chinese Postman Problem is finding the optimal (minimum cost) circuit that covers all the arcs.

Both the TSP and the VSP are important problems in logistics and transportation. Similar combinatorial problems are found in many problem contexts. For example, the problem of finding the optimal sequence of jobs for a machine with sequence-dependent setups can be formulated as a TSP. Some printed circuit board design problems can also be formulated as a TSP.

The mathematical programming formulation for the TSP can be stated as follows:

The traveling salesperson problem (TSP): Minimize $\sum_{i=1}^{N}\sum_{j=1}^{N} c_{ij}x_{ij}$

Subject to $\sum_{i=1}^{N} x_{ij} = 1$, for j = 1, 2, ... , N and $\sum_{j=1}^{N} x_{ij} = 1$, for i = 1, 2, ... , N

$y_i - y_j + (n - 1)x_{ij} \le n - 2$ for all (i, j), $j \ne$ depot; $x_{ij} \in \{0,1\}$ for all (i, j)

The xij variables are binary (0,1) variables such that xij = 1 if the route travels directly from node i to node j (i.e., node j immediately follows node i) and xij = 0 otherwise. The cij parameters are the costs, times, or distances to travel from node i to j. The objective is to minimize the total cost, distance, or time. The first two constraints require all nodes to have one incoming and one outgoing arc. The third constraint, which uses auxiliary variables yi and n = N nodes, prohibits subtours, which are circuits that do not connect to the depot. Alternative formulations for this constraint can be found in the literature. The last constraint requires the xij decision variables to be binary (zero-one) variables.

See algorithm, assignment problem, heuristic, linear programming (LP), logistics, operations research (OR), sequence-dependent setup time, setup cost, transportation problem, Vehicle Scheduling Problem (VSP).

trend – The average rate of increase for a variable over time.

In the forecasting context, the trend is the slope of the demand over time. One simple way to estimate this rate is with a simple linear regression using time as the x variable. In exponential smoothing, the trend can be smoothed with its own smoothing constant. The Excel function TREND(range) is a useful tool for projecting trends into the future. The linear regression entry presents the equations for the least squares trend line.
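The regression approach can be sketched as follows. This is a minimal illustration with a hypothetical function name and made-up demand; it uses the period index 0, 1, 2, ... as the x variable and returns the least squares slope.

```python
def trend_slope(demand):
    """Least squares slope of demand regressed on the period index 0, 1, 2, ..."""
    n = len(demand)
    t_mean = (n - 1) / 2
    d_mean = sum(demand) / n
    numerator = sum((t - t_mean) * (d - d_mean) for t, d in enumerate(demand))
    denominator = sum((t - t_mean) ** 2 for t in range(n))
    return numerator / denominator


# Hypothetical demand history that grows by two units per period.
slope = trend_slope([10, 12, 14, 16])
print(slope)  # 2.0
```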

See exponential smoothing, forecasting, linear regression, seasonality, time series forecasting.

trend line – See linear regression.

triage – The process of directing (or sorting) customers into different streams based on their needs.

Triage is used to allocate a scarce resource, such as a medical doctor’s time, to those most deserving of it. The word comes from trier, which is Old French and means to sort.

In a healthcare context, a triage step can be used to sort injured people into groups based on their need for or likely benefit from immediate medical treatment. In a battlefield context, triage means to select a route or treatment path for the wounded. In a service quality context, adding a triage step means to place a resource (a person, computer, or phone system) at the beginning of the process. This resource “triages” incoming customers and directs them to the right resource and process.

A good triage system protects valuable resources from being wasted on unimportant tasks and assigns customers to the most appropriate service for their needs. For example, a clinic should usually not have a highly paid Ear-Nose-Throat specialist seeing a patient with a minor sore throat. The clinic should have a triage nurse directing patients to the right provider. Patients with minor problems should see RNs or physician assistants; patients with major non-urgent problems should be scheduled to see doctors; patients with major urgent problems should see doctors right away. With a good triage system, a patient will be quickly directed to the proper level for the proper medical help and the system will be able to deliver the maximum benefit to society.

See service management, service quality.

triangular distribution – A continuous distribution that is useful when little or no historical data is available.

Parameters: Minimum (a), mode (b), and maximum (c).

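Density and distribution functions: The density function is $f(x) = \frac{2(x-a)}{(b-a)(c-a)}$ for $a \le x \le b$ and $f(x) = \frac{2(c-x)}{(c-b)(c-a)}$ for $b < x \le c$ (and zero elsewhere). The distribution function is $F(x) = \frac{(x-a)^2}{(b-a)(c-a)}$ for $a \le x \le b$ and $F(x) = 1 - \frac{(c-x)^2}{(c-b)(c-a)}$ for $b < x \le c$.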

Statistics: Range [a, c], mean (a + b + c)/3, mode b, and variance (a² + b² + c² − ab − ac − bc)/18.

Inverse: The following is the inverse of the triangular distribution function with probability of p: $F^{-1}(p) = a + \sqrt{p(b-a)(c-a)}$ for $0 \le p \le (b-a)/(c-a)$ and $F^{-1}(p) = c - \sqrt{(1-p)(c-b)(c-a)}$ otherwise.

In other words, when p = F(x), then x = F⁻¹(p). Using the inverse of the triangular distribution is often a practical approach for implementing the newsvendor model when little is known about the demand distribution. When the probability p is set to the critical ratio, the inverse function returns the optimal order quantity.
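The newsvendor use of the inverse can be sketched in Python as follows. This is a minimal sketch that uses the standard closed-form triangular CDF and its inverse. The function names, the underage and overage costs, and the definition of the critical ratio as underage cost divided by the sum of underage and overage costs are assumptions made for illustration.

```python
import math


def triangular_cdf(x, a, b, c):
    """Distribution function F(x) for minimum a, mode b, and maximum c."""
    if x <= a:
        return 0.0
    if x >= c:
        return 1.0
    if x <= b:
        return (x - a) ** 2 / ((b - a) * (c - a))
    return 1.0 - (c - x) ** 2 / ((c - b) * (c - a))


def triangular_inverse(p, a, b, c):
    """Inverse distribution function: the x for which F(x) = p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if not (a <= b <= c) or a == c:
        raise ValueError("parameters must satisfy a <= b <= c with a < c")
    if p <= (b - a) / (c - a):
        return a + math.sqrt(p * (b - a) * (c - a))
    return c - math.sqrt((1.0 - p) * (c - b) * (c - a))


# Hypothetical newsvendor example with expert estimates (a, b, c) = (1, 4, 11).
underage_cost = 3.0   # assumed cost per unit of unmet demand
overage_cost = 1.0    # assumed cost per unit of leftover stock
critical_ratio = underage_cost / (underage_cost + overage_cost)
order_quantity = triangular_inverse(critical_ratio, 1, 4, 11)
```

With these assumed costs, the critical ratio is 0.75 and the order quantity is roughly 6.8 units. Passing the result back through triangular_cdf returns the critical ratio, which is a quick check that the two functions agree.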

Graph: The graph below is the triangular density function with parameters (1, 4, 11).

Parameter estimation: An expert (or team) estimates three parameters: minimum (a), mode (b), and maximum (c). When collecting subjective probability estimates, it is a good idea to ask respondents for the maximum and minimum values first so they do not “anchor” (bias) their subjective estimates with their own estimate of the mode. It is imprecise to talk about the “maximum” and the “minimum” for distributions that are not bounded. For example, with a little imagination, the “maximum” demand could be extremely large. In this situation, it would be more precise to ask the expert for the values at the 5th percentile and 95th percentile of the distribution. However, this imprecision does not seem to bother most practitioners, who seem to be comfortable using this distribution in a wide variety of situations. The paper entitled “The Triangular Distribution” (Hill 2011c) shows how points (a’, b’) at the p and 1 − p points of the CDF can be translated into endpoints (a, b).

Excel: Excel does not have formulas for the triangular distribution, but they are fairly easy to create in Excel with the above equations.

Excel simulation: The inverse transform method can be used to generate random deviates from the inverse CDF above using $x = a + \sqrt{r(b-a)(c-a)}$ when r is in the interval $0 < r \le (b-a)/(c-a)$ and $x = c - \sqrt{(1-r)(c-b)(c-a)}$ otherwise. (Note: r is a uniformly distributed random variable in the range (0,1].) This method will generate x values that follow the triangular distribution with parameters (a, b, c).
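The same inverse transform idea can be sketched outside of Excel. The Python sketch below draws deviates with the standard closed-form inverse of the triangular distribution function and compares the sample mean with the theoretical mean (a + b + c)/3. The function name, the seed, and the sample size are arbitrary choices for illustration.

```python
import random


def triangular_deviate(a, b, c, rng=random):
    """Generate one triangular(a, b, c) deviate by the inverse transform method."""
    r = 1.0 - rng.random()   # rng.random() is in [0, 1), so r is in (0, 1]
    if r <= (b - a) / (c - a):
        return a + (r * (b - a) * (c - a)) ** 0.5
    return c - ((1.0 - r) * (c - b) * (c - a)) ** 0.5


rng = random.Random(42)      # fixed seed so the run is repeatable
sample = [triangular_deviate(1, 4, 11, rng) for _ in range(100_000)]
sample_mean = sum(sample) / len(sample)
theoretical_mean = (1 + 4 + 11) / 3
```

For a sample this large, the sample mean should be close to the theoretical mean of about 5.33, and every generated value falls between the minimum and the maximum.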

Relationships to other distributions: The sum of two independent, identically distributed uniform random variables is triangular.

See newsvendor model, probability density function, probability distribution.

tribal knowledge – Any unwritten information that is not commonly known by others within an organization.

Tribal knowledge is undocumented, informal information closely held by a few individuals. This information is often critical to the organization’s products and processes but is lost when these individuals leave the organization. The term is often used in the context of arguments for knowledge management systems.

See knowledge management.

trim – A statistical procedure that eliminates (removes) exceptional values (outliers) from a sample; also known as trimming; in Visual Basic for Applications (VBA), the Trim(S) function removes leading and trailing blanks from a text string.

See mean, outlier, trimmed mean, Winsorizing.

trimmed mean – A measure of central tendency that eliminates (removes) outliers (exceptional values) from a sample used to compute the mean (average) value.

When data is highly skewed, the trimmed mean may be a better measure of central tendency than the average. The trimmed mean is computed by removing α percent of the values from both the bottom and the top of a data set that is sorted in rank order. A trimmed mean with α = 0 is the simple mean, and a trimmed mean with α = 50% is the median (assuming that only the middle value remains after the trimming process). The trimmed mean, therefore, can be considered a measure of the central tendency somewhere between the simple average and the median. Whereas trimming removes values in the tails, bounding rules, such as Winsorizing, replace values in the tails with a minimum or maximum value.
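A minimal Python sketch of the trimmed mean follows. It assumes that α is expressed as a fraction (0.10 for 10%) removed from each tail and that the number of values removed from each tail is rounded down. The function name and the data are hypothetical.

```python
def trimmed_mean(values, alpha):
    """Mean of the values left after removing the fraction alpha of the
    observations from each tail of the rank-ordered data."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0.0 <= alpha < 0.5:
        raise ValueError("alpha must satisfy 0 <= alpha < 0.5")
    ordered = sorted(values)
    k = int(alpha * len(ordered))          # count dropped from each tail
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


data = [2, 3, 4, 5, 6, 7, 8, 9, 10, 95]    # hypothetical sample with one outlier
simple_mean = trimmed_mean(data, 0.0)      # no trimming: the ordinary mean
trimmed = trimmed_mean(data, 0.10)         # drops the values 2 and 95
```

In this hypothetical sample, the single outlier pulls the simple mean up to 14.9, whereas the 10% trimmed mean is 6.5.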

See interpolated median, mean, median, outlier, skewness, trim, Winsorizing.

triple bottom line – An organizational performance evaluation that includes social and environmental performance indicators as well as the typical financial performance indicators.

The term “triple bottom line” was coined by Elkington (1994), who argued that an organization’s responsibility is to its entire group of stakeholders rather than just to its shareholders. The stakeholders include everyone who is affected directly or indirectly by the actions of the organization.

The triple bottom line is also referred to as the “Three Ps,” which are people (human capital), planet (natural capital), and profits (economic benefit). Wikipedia makes an interesting distinction between the profit for the triple bottom line and the profit that typically shows up on a firm’s income statement. The triple bottom line profit is the economic benefit enjoyed by all stakeholders rather than just the shareholders.

See carbon footprint, green manufacturing, income statement, public-private partnership, sustainability.

triple exponential smoothing – See exponential smoothing.

TRIZ – A methodology for generating creative ideas.

TRIZ is the Russian acronym for the phrase “Theory of Inventive Problem Solving,” which is a methodology developed by Genrich Altshuller and his colleagues in the former USSR. After reviewing more than 400,000 patents, Altshuller devised 40 inventive principles that distinguished breakthrough products. TRIZ is a methodology that uses these inventive principles for innovative problem solving and design. Furthermore, these principles can be codified and taught, leading to a more predictable process of invention. Although primarily associated with technical innovation, these principles can be applied in a variety of areas, including service operations, business applications, education, and architecture.

The TRIZ list of 40 inventive principles (www.triz-journal.com/archives/1997/07/b/index.html, May 10, 2011) follows:

Principle 1. Segmentation

Principle 2. Taking out

Principle 3. Local quality

Principle 4. Asymmetry

Principle 5. Merging

Principle 6. Universality

Principle 7. “Nested doll”

Principle 8. Anti-weight

Principle 9. Preliminary anti-action

Principle 10. Preliminary action

Principle 11. Beforehand cushioning

Principle 12. Equipotentiality

Principle 13. “The other way round”

Principle 14. Spheroidality - Curvature

Principle 15. Dynamics

Principle 16. Partial or excessive actions

Principle 17. Another dimension

Principle 18. Mechanical vibration

Principle 19. Periodic action

Principle 20. Continuity of useful action

Principle 21. Skipping

Principle 22. Blessing in disguise

Principle 23. Feedback

Principle 24. “Intermediary”

Principle 25. Self-service

Principle 26. Copying

Principle 27. Cheap short-living objects

Principle 28. Mechanics substitution

Principle 29. Pneumatics and hydraulics

Principle 30. Flexible shells and thin films

Principle 31. Porous materials

Principle 32. Color changes

Principle 33. Homogeneity

Principle 34. Discarding and recovering

Principle 35. Parameter changes

Principle 36. Phase transitions

Principle 37. Thermal expansion

Principle 38. Strong oxidants

Principle 39. Inert atmosphere

Principle 40. Composite materials

Source: The TRIZ Journal (www.triz-journal.com).

See Analytic Hierarchy Process (AHP), ideation, Kepner-Tregoe Model, New Product Development (NPD), Pugh Matrix.

truck load – Designation for motor carrier shipments exceeding 10,000 pounds.

A motor carrier may haul more than one truck load (TL) shipment in a single vehicle.

See less than container load (LCL), less than truck load (LTL), logistics.

true north – A lean term that describes a long-term vision of the ideal.

True north is often identified as the customer’s ideal. However, it can (and should) also consider all stakeholders, including the owners, customers, workers, suppliers, and community.

See lean thinking, mission statement.

TS 16949 quality standard – A quality standard developed by the American automotive industry.

Beginning in 1994 with the successful launch of QS 9000 by DaimlerChrysler, Ford, and GM, the automotive OEMs recognized the increased value that could be derived from an independent quality system registration scheme and the efficiencies that could be realized in the supply chain by “communizing” system requirements. In 1996, the success of these efforts led to a move toward the development of a globally accepted and harmonized quality management system requirements document. Out of this process, the International Automotive Task Force (IATF) was formed to lead the development effort. The result of the IATF’s effort was the ISO/TS 16949 specification, which forms the requirements for automotive production and relevant service part organizations. ISO/TS 16949 used the ISO 9001 standard as the basis for development and included its requirements along with specific “adders” for the automotive supply chain. The 2002 revision of TS 16949 builds on the ISO 9001:2000 document. Adapted from www.ul.com/services/ts16949.html.

See ISO 9001:2008, quality management.

TSP – See Traveling Salesperson Problem (TSP).

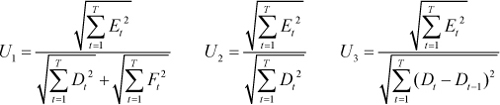

t-test – A statistical technique that uses the Student’s t-test statistic to test if the means of two variables (populations) are significantly different from each other based on a sample of data on each variable.

The null hypothesis is that the true means of two variables (two populations) are equal. The alternative hypothesis is either that the means are different (e.g., μ1 ≠ μ2), which is a two-tailed test, or that one mean is greater than the other (e.g., μ1 < μ2 or μ1 > μ2), which is a one-tailed test. With n1 and n2 observations on variables 1 and 2, and sample means and standard deviations $(\bar{x}_1, s_1)$ and $(\bar{x}_2, s_2)$, the t-statistic is $t = (\bar{x}_1 - \bar{x}_2) / (s_p \sqrt{1/n_1 + 1/n_2})$, where $s_p = \sqrt{((n_1 - 1)s_1^2 + (n_2 - 1)s_2^2)/(n_1 + n_2 - 2)}$. The term $s_p \sqrt{1/n_1 + 1/n_2}$ simplifies to $\sqrt{(s_1^2 + s_2^2)/n}$ when n = n1 = n2.

If each member in population 1 is related to a member in the other population (e.g., a person measured before and after a treatment), the observations will be positively correlated and the more powerful paired t-test (or matched pairs test) can be used. The paired t-test computes the difference variable di = x1i − x2i, then computes the sample mean ($\bar{d}$) and standard deviation ($s_d$) of the differences, and finally the t-statistic $t = \bar{d} / (s_d / \sqrt{n})$.

For a two-tailed test, the t-test rejects the null hypothesis of equal means in favor of the alternative hypothesis of unequal means when the absolute value of this t-statistic is greater than the critical level $t_{\alpha/2,\, n_1+n_2-2}$, which is the Student’s t value associated with probability α/2 and n1 + n2 − 2 degrees of freedom. For a one-tailed test, α/2 should be replaced by α. For a paired t-test, use n − 1 degrees of freedom, where n = n1 = n2 is the number of pairs. In Excel, use TINV(α, n1+n2−2) for a two-tailed test and TINV(2α, n1+n2−2) for a one-tailed test. The counter-intuitive probability arguments (α and 2α) are used because TINV assumes a two-tailed test.

The t-test assumes that the variables are normally distributed and have equal variances. If the variances of the two populations are not equal, then Welch’s t-test should be used.
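The two-sample and paired statistics can be sketched in Python with only the standard library. This sketch assumes the usual textbook forms: a pooled standard deviation for the equal-variance two-sample test and the mean difference divided by its standard error for the paired test. The function names and the before and after measurements are hypothetical, and critical values are not computed here.

```python
import math
from statistics import mean, stdev


def pooled_t_statistic(x1, x2):
    """Two-sample t-statistic assuming equal variances.
    Returns (t, degrees of freedom)."""
    n1, n2 = len(x1), len(x2)
    s1, s2 = stdev(x1), stdev(x2)          # sample standard deviations
    sp = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    t = (mean(x1) - mean(x2)) / (sp * math.sqrt(1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def paired_t_statistic(x1, x2):
    """Paired t-statistic computed on the differences d_i = x1_i - x2_i.
    Returns (t, degrees of freedom)."""
    if len(x1) != len(x2):
        raise ValueError("paired samples must have the same length")
    d = [u - v for u, v in zip(x1, x2)]
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))
    return t, n - 1


before = [12, 15, 11, 14, 13]              # hypothetical measurements
after = [10, 13, 10, 12, 11]               # same subjects after a treatment
t_pooled, df_pooled = pooled_t_statistic(before, after)
t_paired, df_paired = paired_t_statistic(before, after)
```

For this hypothetical data, the paired statistic (about 9.0 with 4 degrees of freedom) is much larger than the pooled statistic (about 1.96 with 8 degrees of freedom), which illustrates why the paired test is more powerful when the observations are positively correlated.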

The t-test can be done in one Excel function. The TTEST(A1, A2, TAILS, TYPE) function returns the probability (the p-value) that two samples are from populations that have equal means. A1 and A2 contain the ranges for sample data from the two variables. TAILS specifies the number of tails for the test (one or two). Two tails should be used if the alternative hypothesis is that the two means are not equal. The TYPE parameter defines the type of t-test to use in the Excel TTEST function, where TYPE = 1 for a paired t-test, TYPE = 2 for a two-sample test with equal variances, and TYPE = 3 for a two-sample test with unequal variances. The table below summarizes the parameters for the Excel functions assuming equal variances.

See Analysis of Variance (ANOVA), confidence interval, sampling, Student’s t distribution.

Turing test – A face validity test proposed by (and named after) Alan Turing (1950) in which an expert or expert panel compares the results of two processes, typically a computer program and an expert, and tries to determine which process is the computer process.

If the experts cannot tell the difference, the computer process is judged to have a high degree of expertise. For example, an expert system is presented with a series of medical cases and makes a diagnosis for each one. A medical expert is given the same series of cases and also asked to make a diagnosis for each one. A second expert is then asked to review the diagnoses from the two sources and discern which one is the computer. If the second medical expert cannot tell the difference, the computer system is judged to have face validity.

See expert system, simulation.

turnaround time – The actual time required to get results to a customer.