11.5 Generation of Contextual Information and Profiling

The first part of this section presents standardized descriptions for context in a generic multimedia scenario. This is followed by a description of how to represent contextual information based on profiles. As will be explained, the intention of using profiles is to promote interoperability and facilitate the use of context in real-world applications. The section then discusses how gathering contextual information can affect a user's privacy. Finally, it provides details regarding the generation and aggregation of contextual information from diverse sources.

11.5.1 Types and Representations of Contextual Information

(Portion reprinted, with permission, from V. Barbosa, A. Carreras, H. Kodikara Arachchi, S. Dogan, M.T. Andrade, J. Delgado, A.M. Kondoz, “A scalable platform for context-aware and DRM-enabled adaptation of multimedia content”, in ICT-Mobile Summit 2008 Conference Proceedings, Paul Cunningham & Miriam Cunningham (Eds), IIMC International Information Management Corporation, 2008. ©2008 IIMC Ltd and ICT Mobile Summit.)

Contextual information has a very broad definition. As seen in Section 11.2.1.1, the term context can be applied to many different aspects and characteristics of the complete delivery and consumption environment. The discussion on the context-aware content adaptation platform in this chapter is based on the assumption that a standardized representation of the contextual information is available. This is considered instrumental in enabling interoperability among systems and applications, and across services. As introduced in Section 11.4.4, MPEG-21 DIA seems to be the most complete standard, and as such the ideal choice for any system that expects wider visibility. MPEG-21 DIA defines UED, which is a full set of contextual information that can be applied to any type of multimedia system, as it assures device-independence. UED includes a description of terminal capabilities and network characteristics as well as User and natural environment characteristics. All of these elements can be seen in Table 11.1.

User and natural environment characteristics are possibly the most relevant and innovative of the UED subsets. As we have seen in Sections 11.4.1 and 11.4.4, the majority of standards that have been developed to describe contextual information for content adaptation concentrate their efforts on terminal and network capabilities. To date, the specifications drawn up for UMA have had several limitations, as they focus too much on network and terminal restrictions while ignoring the improvement of the User experience [55,115]. Researchers are now starting to concentrate on filling the gap between the content and the User (MPEG-21 DIA is a clear example); thus the ultimate driver of the adaptation operations is no longer only the terminal or the network, but also the User, together with the surrounding environment. Accordingly, these two new drivers are discussed in more detail here.

| UED description group |
| --- |
| User Characteristics |
| Terminal Capabilities |
| Network Characteristics |
| Natural Environment Characteristics |

We can divide the DIA User characteristics into five main blocks, as follows:

- User Info: General information about the User. As can be seen in Table 11.1, this information is specified by means of MPEG-7 agent DSs.

- Usage Preference and History: Usage preference includes descriptions about a User's preferences, and usage history describes the history of actions on DIs by a User. Both import the corresponding MPEG-7 DSs.

- Presentation Preferences: This block includes new and important descriptors about how DIs (and their associated resources) are presented to the User. It is especially interesting as the Focus of Attention descriptor allows the expression of preferences that direct the focus of a User's attention with respect to AV and textual media.

- Accessibility Characteristics: Includes detailed information about auditory or visual impairments of the User, which can lead to a need for specific adaptation of the content.

- Location Characteristics: By means of mobility characteristics and destination, this block is especially useful for adaptive location-aware services.

Natural environment characteristics focus on the physical environment surrounding the User. They can be used as a complement to adaptive location-aware services, as they contain Location and Time descriptors (based on MPEG-7), which are referenced by both the mobility characteristics and destination tools seen in the User characteristics. They also include descriptors of the AV environment (such as noise level or illumination characteristics). These characteristics may also affect the adaptation decisions, thus contributing to a finer level of detail in the adaptation operations and consequently improving the User's experience.

Although the utilization of these two groups of description tools will potentially enable the delivery of innovative and more interesting results, clearly the characterization of the user terminal as well as of the network connections can be considered as indispensable for performing useful adaptation operations. Likewise, a description of the transformation capabilities offered by the available AEs is also instrumental for the effective implementation of the desired adaptation operation. Such a description can be expressed as subsets of the UED descriptions, notably those belonging to the terminal capabilities group.

The MPEG-21 DIA standard specifies appropriate XML schemas to represent this contextual information. They can be exchanged among the components of a content mediation platform as independent XML files or, when applicable, referenced inside DIDs or even directly included in the DID. As will be discussed in Section 11.6.7, a part of this contextual information can also be included in a license that governs the use and consumption of a protected DI.
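As a rough illustration of exchanging contextual information as an independent XML document, the following Python sketch serializes a small terminal and network description and reads one value back. It is a minimal sketch only: the element names (`UsageEnvironment`, `DisplayWidth`, `MaxCapacityKbps`, and so on) and both helper functions are hypothetical simplifications chosen for readability, and they do not reproduce the normative MPEG-21 DIA schema.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def build_context_description(terminal: dict, network: dict) -> str:
    """Serialize a simplified usage-environment description to an XML string.

    All element names are illustrative assumptions, not the DIA schema.
    """
    root = ET.Element("UsageEnvironment")
    groups = (
        ("TerminalCapabilities", terminal),
        ("NetworkCharacteristics", network),
    )
    for group_name, descriptors in groups:
        group = ET.SubElement(root, group_name)
        for name, value in descriptors.items():
            # Each descriptor becomes a child element holding its value as text.
            ET.SubElement(group, name).text = str(value)
    return ET.tostring(root, encoding="unicode")


def read_value(xml_text: str, group: str, name: str) -> Optional[str]:
    """Return the text of one descriptor, or None if it is absent."""
    node = ET.fromstring(xml_text).find(f"{group}/{name}")
    return None if node is None else node.text


xml_text = build_context_description(
    {"DisplayWidth": 320, "DisplayHeight": 240},
    {"MaxCapacityKbps": 384},
)
print(xml_text)
print(read_value(xml_text, "TerminalCapabilities", "DisplayWidth"))
```

A component receiving such a document only needs to agree on the shared representation, which is the interoperability argument made above. A real deployment would validate against the schemas defined by the standard instead of relying on ad hoc names.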

11.5.2 Context Providers and Profiling

(Portion reprinted, with permission, from M.T. Andrade, H. Kodikara Arachchi, S. Nasir, S. Dogan, H. Uzuner, A.M. Kondoz, J. Delgado, E. Rodriguez, A. Carreras, T. Masterton and R. Craddock, “Using context to assist the adaptation of protected multimedia content in virtual collaboration applications”, Proc. 3rd IEEE International Conference on Collaborative Computing: Networking, Applications and Worksharing (CollaborateCom'2007), New York, NY, USA, 12–15 November 2007. ©2007 IEEE; and from V. Barbosa, A. Carreras, H. Kodikara Arachchi, S. Dogan, M.T. Andrade, J. Delgado, A.M. Kondoz, “A scalable platform for context-aware and DRM-enabled adaptation of multimedia content”, in ICT-Mobile Summit 2008 Conference Proceedings, Paul Cunningham & Miriam Cunningham (Eds), IIMC International Information Management Corporation, 2008. ©2008 IIMC Ltd and ICT Mobile Summit.)

This section describes the definition of profiles based on the contextual information, in order to facilitate the adaptation of multimedia content in generic scenarios. Contextual profiles have the potential to simplify the generation and use of context, by creating restricted groups of contextual descriptions from the full set of DIA descriptors. Each group or profile contains only the descriptions that are essential to each application scenario. This approach also promotes interoperability, as different CxPs are able to generate/provide the same type of contextual information as their counterparts, using the same standard representation.

The profiles presented in this section are based on contextual information that can support different kinds of adaptation operation within multimedia content systems, whether for delivering different services or for supporting different applications. As discussed in the previous section, the UED of MPEG-21 DIA is an optimal set of descriptors that can be used to describe virtually any context of usage. As shown in Table 11.1, the set of descriptors is divided into four main blocks, where each block is associated with an entity or concept within the multimedia content chain: User, Terminal, Network, and Natural Environment. This division is a good starting point for defining profiles. Although the combined use of descriptors from different classes can offer increased functionality, the identification of profiles inside each class can potentially simplify the use of these standardized descriptions by the different entities involved in the provision of context-aware multimedia services, and thus increase their rate of acceptance and penetration.

One of the resulting advantages would be realized at the level of interoperability. The provision of networked multimedia services usually requires that different entities, operating in distinct domains, interact with one another. The sum of their contributions allows the building of the complete end-to-end service. In order to provide this service in an adaptable manner that reacts seamlessly to the characteristics of different usage environments, or to varying conditions within a given environment, all of the participating entities should collect useful contextual information and make it available. The entities that make contextual information available can be designated as context providers. If they all use the same open format to represent those descriptions, any one entity can use descriptions provided by any other entity.

Moreover, considering that CxPs have a one-to-one correspondence to service providers, license servers/authorities, network providers, content providers, or electronic equipment manufacturers, each CxP will offer contextual information concerning its own sphere of action only. For example, a network provider and a manufacturer will make available contextual information related to the network dynamics and the terminal capabilities, respectively. In this way, each one needs to know about one specific profile only.
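The idea that each context provider handles only its own profile, while a consumer combines the profiles it receives, can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: the dictionary layout, the group names, and the functions `extract_profile` and `merge_profiles` are hypothetical and are not defined by MPEG-21 DIA or by the platform described in this chapter.

```python
# Hypothetical mapping from profile name to the UED group it draws from.
PROFILE_GROUPS = {
    "User": "UserCharacteristics",
    "Terminal": "TerminalCapabilities",
    "Network": "NetworkCharacteristics",
    "NaturalEnvironment": "NaturalEnvironmentCharacteristics",
}


def extract_profile(full_context: dict, profile: str) -> dict:
    """Return only the descriptor group that belongs to one profile."""
    group = PROFILE_GROUPS[profile]
    # Copy the group so the provider's own data is not shared by reference.
    return {group: dict(full_context.get(group, {}))}


def merge_profiles(*profiles: dict) -> dict:
    """Combine profiles received from several context providers."""
    merged: dict = {}
    for profile in profiles:
        for group, descriptors in profile.items():
            merged.setdefault(group, {}).update(descriptors)
    return merged


full_context = {
    "UserCharacteristics": {"Language": "en"},
    "TerminalCapabilities": {"DisplayWidth": 320},
    "NetworkCharacteristics": {"MaxCapacityKbps": 384},
}

# A network provider and a manufacturer each publish a single profile.
network_profile = extract_profile(full_context, "Network")
terminal_profile = extract_profile(full_context, "Terminal")
print(merge_profiles(network_profile, terminal_profile))
```

Because every provider uses the same group names, the consumer can merge the profiles without knowing which entity produced them.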

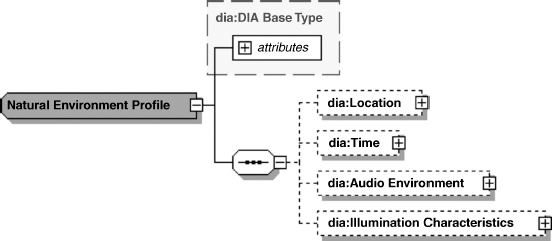

Accordingly, profiles can be defined based on the four existing classes: User profile, Network profile, Terminal profile, and Natural Environment profile. Each of these profiles is composed of the corresponding elements of MPEG-21 DIA to assure a full compliance with the standard. Figures 11.22 – 11.25 show the XML representations of the four profiles.

Figure 11.22 User profile based on MPEG-21 DIA

Figure 11.23 Terminal profile based on MPEG-21 DIA

Figure 11.24 Network profile based on MPEG-21 DIA

Figure 11.25 Natural Environment profile based on MPEG-21 DIA

11.5.3 User Privacy

The generation and use of contextual information concerning the usage environment may affect the privacy of the users and, if not properly handled, could even be intrusive and violate the users' rights. This section analyzes the possible ways in which this might happen, laying down the foundations for the eventual need for authorization when generating or gathering contextual information.

Users might be able to choose some degree of privacy. We should consider not only personal information related to the User profile, but also the possibility of protecting the information related to the Terminal, the Natural Environment, and even the Network. We can therefore think about defining new profiles based on the level of privacy, which could be associated with the previously-defined ones. This situation must be carefully considered when developing context-aware systems, which may need to exchange sensitive personal information among different subsystems. It is therefore of utmost importance to devise ways of protecting this information and thus ensuring the privacy and rights of users. As discussed in Section 11.2, this specific aspect of security has not yet been sufficiently addressed. As a result, addressing user privacy issues has become one of the key areas of research.
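One possible reading of privacy-based profiles is to attach a privacy level to each descriptor and to release only those descriptors that a requesting subsystem is cleared to see. The Python sketch below illustrates this under explicit assumptions: the three levels, the `PrivacyLevel` enumeration, the `filter_by_privacy` function, and the rule that unlabelled descriptors default to the most restrictive level are all hypothetical choices made for illustration, not mechanisms defined by MPEG-21 DIA or by this chapter.

```python
from enum import IntEnum


class PrivacyLevel(IntEnum):
    """Hypothetical privacy levels, ordered from least to most sensitive."""
    PUBLIC = 0
    RESTRICTED = 1
    PRIVATE = 2


def filter_by_privacy(descriptors: dict, levels: dict,
                      clearance: PrivacyLevel) -> dict:
    """Keep descriptors whose privacy level does not exceed the clearance.

    Descriptors without an explicit level are treated as PRIVATE
    (an assumed, conservative default).
    """
    return {
        name: value
        for name, value in descriptors.items()
        if levels.get(name, PrivacyLevel.PRIVATE) <= clearance
    }


user_profile = {
    "Language": "en",
    "Location": "office",
    "VisualImpairment": "low vision",
}
levels = {
    "Language": PrivacyLevel.PUBLIC,
    "Location": PrivacyLevel.RESTRICTED,
}

print(filter_by_privacy(user_profile, levels, PrivacyLevel.PUBLIC))
print(filter_by_privacy(user_profile, levels, PrivacyLevel.RESTRICTED))
```

The same filter could be applied to Terminal, Network, or Natural Environment descriptors, since the text notes that these may also need protection.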

11.5.4 Generation of Contextual Information

(Portion reprinted, with permission, from M.T. Andrade, H. Kodikara Arachchi, S. Nasir, S. Dogan, H. Uzuner, A.M. Kondoz, J. Delgado, E. Rodriguez, A. Carreras, T. Masterton and R. Craddock, “Using context to assist the adaptation of protected multimedia content in virtual collaboration applications”, Proc. 3rd IEEE International Conference on Collaborative Computing: Networking, Applications and Worksharing (CollaborateCom'2007), New York, NY, USA, 12–15 November 2007. ©2007 IEEE.)

As described in Section 11.2.1.1, the process of gathering contextual information involves three steps. The first and second, namely “sensing the context” and “sensing the context changes”, relate to the generation and representation of basic or low-level contextual information. As noted in Section 11.5.1, contextual information is represented as MPEG-21 DIA descriptions organized into four distinct groups. Depending on the specific application scenario, a subset of descriptions from these four groups can be used. Nevertheless, when the same type of information is used in different applications, the means of generating that information may be shared across applications to address more generic application scenarios. It is therefore important to identify the standard representation of the contextual information and to indicate the process and/or mechanisms by which it will be generated and represented. The third step involved in the acquisition of context, i.e. “inferring high-level context”, is discussed in the next section.

Although contextual information belonging to the Resources Context category (as defined in Section 11.2.1.1), such as that concerning the terminal capabilities and the network characteristics, is likely to be generated automatically by software modules, information addressing the User's characteristics and the surrounding natural environment (User Context and Physical Context categories) will require dedicated hardware or possibly the manual intervention of the User. Dedicated hardware may consist of visual and audio sensors, such as video cameras and microphones. User preferences can also be created implicitly, based on usage history.
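The implicit creation of preferences from usage history can be illustrated with a simple frequency count. The sketch below is a minimal example under assumptions: the history record layout (`action` and `genre` keys), the choice to count only `play` actions, and the function `infer_genre_preferences` are hypothetical, and a real system could weight actions or recency quite differently.

```python
from collections import Counter
from typing import Dict, List


def infer_genre_preferences(usage_history: List[Dict[str, str]],
                            top_n: int = 2) -> List[str]:
    """Rank genres by how often the User chose to play them.

    Only "play" actions are counted; other actions (for example "skip")
    are ignored in this simplified sketch.
    """
    counts = Counter(
        entry["genre"]
        for entry in usage_history
        if entry.get("action") == "play"
    )
    return [genre for genre, _ in counts.most_common(top_n)]


history = [
    {"action": "play", "genre": "news"},
    {"action": "play", "genre": "sport"},
    {"action": "play", "genre": "news"},
    {"action": "skip", "genre": "sport"},
    {"action": "play", "genre": "sport"},
    {"action": "play", "genre": "news"},
    {"action": "play", "genre": "film"},
]

print(infer_genre_preferences(history))
```

The resulting ranking could then be written into the usage preference part of the User profile without asking the User to enter it manually.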

The four categories of basic contextual information can be mapped on to the different profiles introduced in Section 11.5.2, which in turn match the different groups of descriptions identified in the MPEG-21 DIA specification. The User Context corresponds to the User profile, the Resources Context is split into the Terminal and Network profiles, and the Physical Context is mapped on to the Natural Environment profile. Contextual information belonging to the fourth category, the Time Context, may be represented as an attribute within each of these context profiles, or it may be addressed as part of the rules for reasoning about the low-level contextual information.
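The mapping between context categories and profiles can be written down as a small lookup table, with the Time Context carried as an attribute of each profile record. This Python sketch is illustrative only: the table `CATEGORY_TO_PROFILES`, the functions `profiles_for` and `stamp`, and the record layout are hypothetical, and the User Context entry reflects the natural reading of the text that User Context information populates the User profile.

```python
from typing import Dict, List

# Hypothetical lookup table from context category to profile(s).
CATEGORY_TO_PROFILES: Dict[str, List[str]] = {
    "User Context": ["User"],
    "Resources Context": ["Terminal", "Network"],
    "Physical Context": ["Natural Environment"],
}


def profiles_for(category: str) -> List[str]:
    """Return the profiles fed by a context category.

    The Time Context has no profile of its own in this sketch, so it
    yields an empty list; it is attached to records by stamp() instead.
    """
    return list(CATEGORY_TO_PROFILES.get(category, []))


def stamp(profile: str, descriptors: dict, timestamp: str) -> dict:
    """Attach the Time Context to a profile record as an attribute."""
    return {"profile": profile, "time": timestamp,
            "descriptors": dict(descriptors)}


print(profiles_for("Resources Context"))
print(stamp("Network", {"MaxCapacityKbps": 384}, "2008-06-10T09:30:00"))
```

Treating time as an attribute keeps the four profiles unchanged; the alternative mentioned in the text, handling time inside the reasoning rules, would leave the records unstamped and move the logic to the inference step.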