Chapter 8

Simple Linear Regression

Regression modeling represents a powerful and elegant method for estimating the value of a continuous target variable. In this chapter, we introduce regression modeling through simple linear regression, where a straight line is used to approximate the relationship between a single continuous predictor variable and a single continuous response variable. Later, in Chapter 9, we turn to multiple regression, where several predictor variables are used to estimate a single response.

8.1 An Example of Simple Linear Regression

To develop the simple linear regression model, consider the Cereals data set,1 an excerpt of which is presented in Table 8.1. The Cereals data set contains nutritional information for 77 breakfast cereals, and includes the following variables:

- Cereal name

- Cereal manufacturer

- Type (hot or cold)

- Calories per serving

- Grams of protein

- Grams of fat

- Milligrams of sodium

- Grams of fiber

- Grams of carbohydrates

- Grams of sugar

- Milligrams of potassium

- Percentage of recommended daily allowance of vitamins (0%, 25%, or 100%)

- Weight of one serving

- Number of cups per serving

- Shelf location (1 = bottom, 2 = middle, 3 = top)

- Nutritional rating, as calculated by Consumer Reports.

Table 8.1 Excerpt from Cereals data set: eight fields, first 16 cereals

| Cereal Name | Manufacture | Sugars | Calories | Protein | Fat | Sodium | Rating |

| 100% Bran | N | 6 | 70 | 4 | 1 | 130 | 68.4030 |

| 100% Natural Bran | Q | 8 | 120 | 3 | 5 | 15 | 33.9837 |

| All-Bran | K | 5 | 70 | 4 | 1 | 260 | 59.4255 |

| All-Bran Extra Fiber | K | 0 | 50 | 4 | 0 | 140 | 93.7049 |

| Almond Delight | R | 8 | 110 | 2 | 2 | 200 | 34.3848 |

| Apple Cinnamon Cheerios | G | 10 | 110 | 2 | 2 | 180 | 29.5095 |

| Apple Jacks | K | 14 | 110 | 2 | 0 | 125 | 33.1741 |

We are interested in estimating the nutritional rating of a cereal, given its sugar content. However, before we begin, it is important to note that this data set contains some missing data. The following four field values are missing:

- Potassium content of Almond Delight

- Potassium content of Cream of Wheat

- Carbohydrates and sugars content of Quaker Oatmeal.

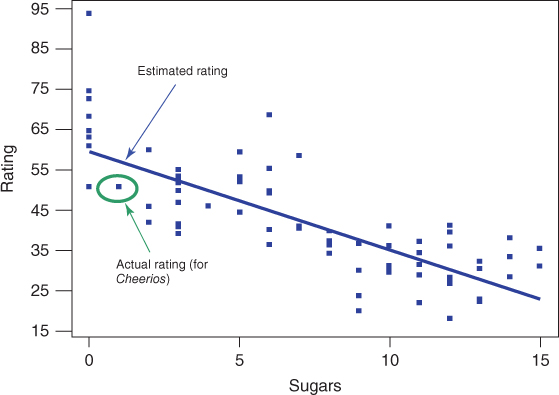

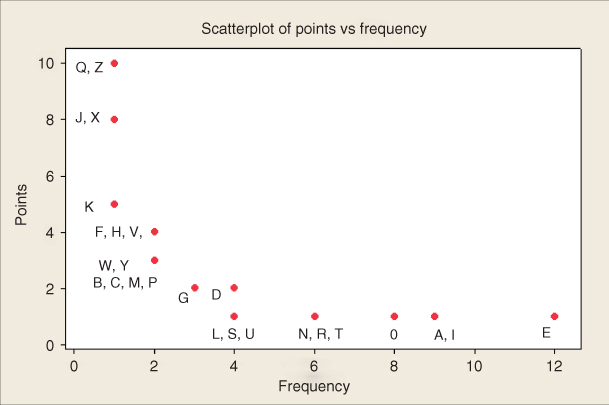

We shall therefore not be able to use the sugar content of Quaker Oatmeal to help estimate nutrition rating using sugar content, and only 76 cereals are available for this purpose. Figure 8.1 presents a scatter plot of the nutritional rating versus the sugar content for the 76 cereals, along with the least-squares regression line.

Figure 8.1 Scatter plot of nutritional rating versus sugar content for 77 cereals.

The regression line is written in the form: ![]() , called the regression equation, where:

, called the regression equation, where:

is the estimated value of the response variable;

is the estimated value of the response variable; is the y-intercept of the regression line;

is the y-intercept of the regression line; is the slope of the regression line;

is the slope of the regression line; and

and  , together, are called the regression coefficients.

, together, are called the regression coefficients.

The regression equation for the relationship between sugars (x) and nutritional rating (y) for this sample of cereals is ![]() . Below we demonstrate how this equation is calculated. This estimated regression equation can be interpreted as “the estimated cereal rating equals 59.953 minus 2.4614 times the sugar content in grams.” The regression line and the regression equation are used as a linear approximation of the relationship between the x (predictor) and y (response) variables, that is, between sugar content and nutritional rating. We can then use the regression equation to make estimates or predictions.

. Below we demonstrate how this equation is calculated. This estimated regression equation can be interpreted as “the estimated cereal rating equals 59.953 minus 2.4614 times the sugar content in grams.” The regression line and the regression equation are used as a linear approximation of the relationship between the x (predictor) and y (response) variables, that is, between sugar content and nutritional rating. We can then use the regression equation to make estimates or predictions.

For example, suppose that we are interested in estimating the nutritional rating for a new cereal (not in the original data) that contains x = 1 gram of sugar. Using the regression equation, we find the estimated nutritional rating for a cereal with 1 gram of sugar to be ![]() . Note that this estimated value for the nutritional rating lies directly on the regression line, at the location (x = 1,

. Note that this estimated value for the nutritional rating lies directly on the regression line, at the location (x = 1, ![]() ), as shown in Figure 8.1. In fact, for any given value of x (sugar content), the estimated value for y (nutritional rating) lies precisely on the regression line.

), as shown in Figure 8.1. In fact, for any given value of x (sugar content), the estimated value for y (nutritional rating) lies precisely on the regression line.

Now, there is one cereal in our data set that does have a sugar content of 1 gram, Cheerios. Its nutrition rating, however, is 50.765, not 57.3916 as we estimated above for the new cereal with 1 gram of sugar. Cheerios' point in the scatter plot is located at (x = 1, y = 50.765), within the oval in Figure 8.1. Now, the upper arrow in Figure 8.1 is pointing to a location on the regression line directly above the Cheerios point. This is where the regression equation predicted the nutrition rating to be for a cereal with a sugar content of 1 gram. The prediction was too high by 57.3916 − 50.765 = 6.6266 rating points, which represents the vertical distance from the Cheerios data point to the regression line. This vertical distance of 6.6266 rating points, in general ![]() , is known variously as the prediction error, estimation error, or residual.

, is known variously as the prediction error, estimation error, or residual.

We of course seek to minimize the overall size of our prediction errors. Least squares regression works by choosing the unique regression line that minimizes the sum of squared residuals over all the data points. There are alternative methods of choosing the line that best approximates the linear relationship between the variables, such as median regression, although least squares remains the most common method. Note that we say we are performing a “regression of rating on sugars,” where the y variable precedes the x variable in the statement.

8.1.1 The Least-Squares Estimates

Now, suppose our data set contained a sample of 76 cereals different from the sample in our Cereals data set. Would we expect that the relationship between nutritional rating and sugar content to be exactly the same as that found above: Rating = 59.853 − 2.4614 Sugars? Probably not. Here, ![]() and

and ![]() are statistics, whose values differ from sample to sample. Like other statistics,

are statistics, whose values differ from sample to sample. Like other statistics, ![]() and

and ![]() are used to estimate population parameters, in this case,

are used to estimate population parameters, in this case, ![]() and

and ![]() , the y-intercept and slope of the true regression line. That is, the equation

, the y-intercept and slope of the true regression line. That is, the equation

represents the true linear relationship between nutritional rating and sugar content for all cereals, not just those in our sample. The error term ![]() is needed to account for the indeterminacy in the model, because two cereals may have the same sugar content but different nutritional ratings. The residuals

is needed to account for the indeterminacy in the model, because two cereals may have the same sugar content but different nutritional ratings. The residuals ![]() are estimates of the error terms,

are estimates of the error terms, ![]() . Equation (8.1) is called the regression equation or the true population regression equation; it is associated with the true or population regression line.

. Equation (8.1) is called the regression equation or the true population regression equation; it is associated with the true or population regression line.

Earlier, we found the estimated regression equation for estimating the nutritional rating from sugar content to be ![]() . Where did these values for

. Where did these values for ![]() and

and ![]() come from? Let us now derive the formulas for estimating the y-intercept and slope of the estimated regression line, given the data.2

come from? Let us now derive the formulas for estimating the y-intercept and slope of the estimated regression line, given the data.2

Suppose we have n observations from the model in equation (8.1); that is, we have

The least-squares line is that line that minimizes the population sum of squared errors, ![]() First, we re-express the population SSEs as

First, we re-express the population SSEs as

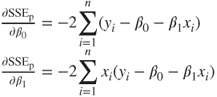

Then, recalling our differential calculus, we may find the values of ![]() and

and ![]() that minimize

that minimize ![]() by differentiating equation (8.2) with respect to

by differentiating equation (8.2) with respect to ![]() and

and ![]() , and setting the results equal to zero. The partial derivatives of equation (8.2) with respect to

, and setting the results equal to zero. The partial derivatives of equation (8.2) with respect to ![]() and

and ![]() are, respectively:

are, respectively:

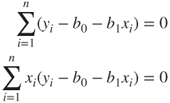

We are interested in the values for the estimates ![]() and

and ![]() , so setting the equations in (8.3) equal to zero, we have

, so setting the equations in (8.3) equal to zero, we have

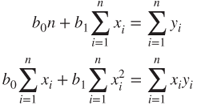

Distributing the summation gives us

which is re-expressed as

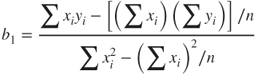

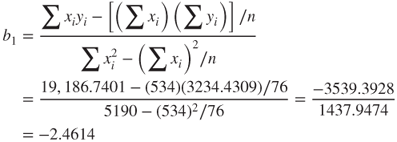

Solving equation (8.4) for ![]() and

and ![]() , we have

, we have

where n is the total number of observations, ![]() is the mean value for the predictor variable and

is the mean value for the predictor variable and ![]() is the mean value for the response variable, and the summations are i = 1 to n. The equations in (8.5) and (8.6) are therefore the least squares estimates for

is the mean value for the response variable, and the summations are i = 1 to n. The equations in (8.5) and (8.6) are therefore the least squares estimates for ![]() and

and ![]() , the values that minimize the SSEs.

, the values that minimize the SSEs.

We now illustrate how we may find the values ![]() and

and ![]() , using equations (8.5), (8.6), and the summary statistics from Table 8.2, which shows the values for

, using equations (8.5), (8.6), and the summary statistics from Table 8.2, which shows the values for ![]() , and

, and ![]() , for the Cereals in the data set (note that only 16 of the 77 cereals are shown). It turns out that, for this data set,

, for the Cereals in the data set (note that only 16 of the 77 cereals are shown). It turns out that, for this data set, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() .

.

Table 8.2 Summary statistics for finding b0 and b1

| Cereal Name | X = Sugars | Y = Rating | X*Y | X2 |

| 100% Bran | 6 | 68.4030 | 410.418 | 36 |

| 100% Natural Bran | 8 | 33.9837 | 271.870 | 64 |

| All-Bran | 5 | 59.4255 | 297.128 | 25 |

| All-Bran Extra Fiber | 0 | 93.7049 | 0.000 | 0 |

| Almond Delight | 8 | 34.3848 | 275.078 | 64 |

| Apple Cinnamon Cheerios | 10 | 29.5095 | 295.095 | 100 |

| Apple Jacks | 14 | 33.1741 | 464.437 | 196 |

| Basic 4 | 8 | 37.0386 | 296.309 | 64 |

| Bran Chex | 6 | 49.1203 | 294.722 | 36 |

| Bran Flakes | 5 | 53.3138 | 266.569 | 25 |

| Cap'n Crunch | 12 | 18.0429 | 216.515 | 144 |

| Cheerios | 1 | 50.7650 | 50.765 | 1 |

| Cinnamon Toast Crunch | 9 | 19.8236 | 178.412 | 81 |

| Clusters | 7 | 40.4002 | 282.801 | 49 |

| Cocoa Puffs | 13 | 22.7364 | 295.573 | 169 |

| Wheaties Honey Gold | 8 | 36.1876 | 289.501 | 64 |

|

|

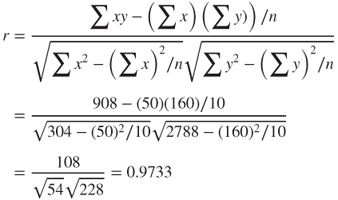

Plugging into formulas (8.5) and (8.6), we find:

and

These values for the slope and y-intercept provide us with the estimated regression line indicated in Figure 8.1.

The y-intercept ![]() is the location on the y-axis where the regression line intercepts the y-axis; that is, the estimated value for the response variable when the predictor variable equals zero. The interpretation of the value of the y-intercept

is the location on the y-axis where the regression line intercepts the y-axis; that is, the estimated value for the response variable when the predictor variable equals zero. The interpretation of the value of the y-intercept ![]() is as the estimated value of y, given x = 0. For example, for the Cereals data set, the y-intercept

is as the estimated value of y, given x = 0. For example, for the Cereals data set, the y-intercept ![]() represents the estimated nutritional rating for cereals with zero sugar content. Now, in many regression situations, a value of zero for the predictor variable would not make sense. For example, suppose we were trying to predict elementary school students' weight (y) based on the students' height (x). The meaning of height = 0 is unclear, so that the denotative meaning of the y-intercept would not make interpretive sense in this case. However, for our data set, a value of zero for the sugar content does make sense, as several cereals contain 0 grams of sugar.

represents the estimated nutritional rating for cereals with zero sugar content. Now, in many regression situations, a value of zero for the predictor variable would not make sense. For example, suppose we were trying to predict elementary school students' weight (y) based on the students' height (x). The meaning of height = 0 is unclear, so that the denotative meaning of the y-intercept would not make interpretive sense in this case. However, for our data set, a value of zero for the sugar content does make sense, as several cereals contain 0 grams of sugar.

The slope of the regression line indicates the estimated change in y per unit increase in x. We interpret ![]() to mean the following: “For each increase of 1 gram in sugar content, the estimated nutritional rating decreases by 2.4614 rating points.” For example, Cereal A with five more grams of sugar than Cereal B would have an estimated nutritional rating 5(2.4614) = 12.307 ratings points lower than Cereal B.

to mean the following: “For each increase of 1 gram in sugar content, the estimated nutritional rating decreases by 2.4614 rating points.” For example, Cereal A with five more grams of sugar than Cereal B would have an estimated nutritional rating 5(2.4614) = 12.307 ratings points lower than Cereal B.

8.2 Dangers of Extrapolation

Suppose that a new cereal (say, the Chocolate Frosted Sugar Bombs loved by Calvin, the comic strip character written by Bill Watterson) arrives on the market with a very high sugar content of 30 grams per serving. Let us use our estimated regression equation to estimate the nutritional rating for Chocolate Frosted Sugar Bombs:

In other words, Calvin's cereal has so much sugar that its nutritional rating is actually a negative number, unlike any of the other cereals in the data set (minimum = 18) and analogous to a student receiving a negative grade on an exam. What is going on here? The negative estimated nutritional rating for Chocolate Frosted Sugar Bombs is an example of the dangers of extrapolation.

Analysts should confine the estimates and predictions made using the regression equation to values of the predictor variable contained within the range of the values of x in the data set. For example, in the Cereals data set, the lowest sugar content is 0 grams and the highest is 15 grams, so that predictions of nutritional rating for any value of x (sugar content) between 0 and 15 grams would be appropriate. However, extrapolation, making predictions for x-values lying outside this range, can be dangerous, because we do not know the nature of the relationship between the response and predictor variables outside this range.

Extrapolation should be avoided if possible. If predictions outside the given range of x must be performed, the end-user of the prediction needs to be informed that no x-data is available to support such a prediction. The danger lies in the possibility that the relationship between x and y, which may be linear within the range of x in the data set, may no longer be linear outside these bounds.

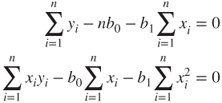

Consider Figure 8.2. Suppose that our data set consisted only of the data points in black but that the true relationship between x and y consisted of both the black (observed) and the gray (unobserved) points. Then, a regression line based solely on the available (black dot) data would look approximately similar to the regression line indicated. Suppose that we were interested in predicting the value of y for an x-value located at the triangle. The prediction based on the available data would then be represented by the dot on the regression line indicated by the upper arrow. Clearly, this prediction has failed spectacularly, as shown by the vertical line indicating the huge prediction error. Of course, as the analyst would be completely unaware of the hidden data, he or she would hence be oblivious to the massive scope of the error in prediction. Policy recommendations based on such erroneous predictions could certainly have costly results.

Figure 8.2 Dangers of extrapolation.

8.3 How Useful is the Regression? The Coefficient of Determination, 2

Of course, a least-squares regression line could be found to approximate the relationship between any two continuous variables, regardless of the quality of the relationship between them, but this does not guarantee that the regression will therefore be useful. The question therefore arises as to how we may determine whether a particular estimated regression equation is useful for making predictions.

We shall work toward developing a statistic, ![]() , for measuring the goodness of fit of the regression. That is,

, for measuring the goodness of fit of the regression. That is, ![]() , known as the coefficient of determination, measures how well the linear approximation produced by the least-squares regression line actually fits the observed data.

, known as the coefficient of determination, measures how well the linear approximation produced by the least-squares regression line actually fits the observed data.

Recall that ![]() represents the estimated value of the response variable, and that

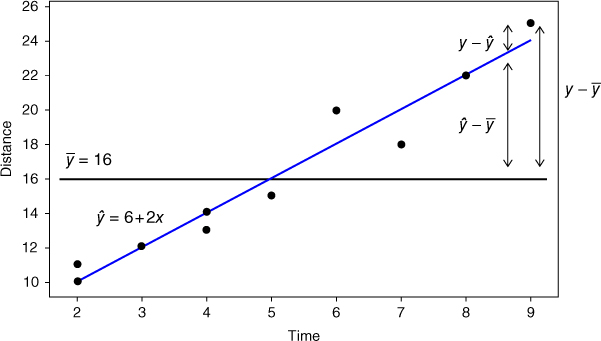

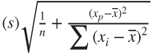

represents the estimated value of the response variable, and that ![]() represents the prediction error or residual. Consider the data set in Table 8.3, which shows the distance in kilometers traveled by a sample of 10 orienteering competitors, along with the elapsed time in hours. For example, the first competitor traveled 10 kilometers in 2 hours. On the basis of these 10 competitors, the estimated regression takes the form

represents the prediction error or residual. Consider the data set in Table 8.3, which shows the distance in kilometers traveled by a sample of 10 orienteering competitors, along with the elapsed time in hours. For example, the first competitor traveled 10 kilometers in 2 hours. On the basis of these 10 competitors, the estimated regression takes the form ![]() , so that the estimated distance traveled equals 6 kilometers plus twice the number of hours. You should verify that you can calculate this estimated regression equation, either using software, or using the equations in (8.7) and (8.8).

, so that the estimated distance traveled equals 6 kilometers plus twice the number of hours. You should verify that you can calculate this estimated regression equation, either using software, or using the equations in (8.7) and (8.8).

Table 8.3 Calculation of the SSE for the orienteering example

| Predicted Score | Error in Prediction | (Error in Prediction)2 | |||

| Subject | X = Time | Y = Distance | |||

| 1 | 2 | 10 | 10 | 0 | 0 |

| 2 | 2 | 11 | 10 | 1 | 1 |

| 3 | 3 | 12 | 12 | 0 | 0 |

| 4 | 4 | 13 | 14 | −1 | 1 |

| 5 | 4 | 14 | 14 | 0 | 0 |

| 6 | 5 | 15 | 16 | −1 | 1 |

| 7 | 6 | 20 | 18 | 2 | 4 |

| 8 | 7 | 18 | 20 | −2 | 4 |

| 9 | 8 | 22 | 22 | 0 | 0 |

| 10 | 9 | 25 | 24 | 1 | 1 |

This estimated regression equation can be used to make predictions about the distance traveled for a given number of hours. These estimated values of y are given in the Predicted Score column in Table 8.3. The prediction error and squared prediction error may then be calculated. The sum of the squared prediction errors, or the sum of squares error, SSE = ![]() , represents an overall measure of the error in prediction resulting from the use of the estimated regression equation. Here we have SSE = 12. Is this value large? We are unable to state whether this value, SSE = 12, is large, because at this point we have no other measure to compare it to.

, represents an overall measure of the error in prediction resulting from the use of the estimated regression equation. Here we have SSE = 12. Is this value large? We are unable to state whether this value, SSE = 12, is large, because at this point we have no other measure to compare it to.

Now, imagine for a moment that we were interested in estimating the distance traveled without knowledge of the number of hours. That is, suppose that we did not have access to the x-variable information for use in estimating the y-variable. Clearly, our estimates of the distance traveled would be degraded, on the whole, because less information usually results in less accurate estimates.

Because we lack access to the predictor information, our best estimate for y is simply ![]() , the sample mean of the number of hours traveled. We would be forced to use

, the sample mean of the number of hours traveled. We would be forced to use ![]() to estimate the number of kilometers traveled for every competitor, regardless of the number of hours that person had traveled.

to estimate the number of kilometers traveled for every competitor, regardless of the number of hours that person had traveled.

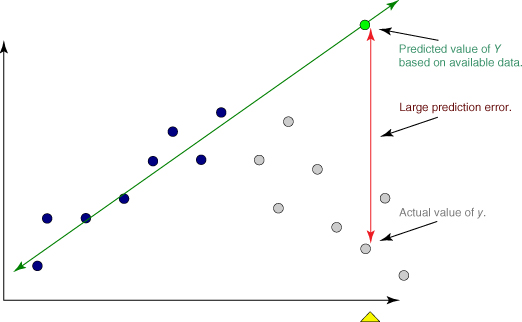

Consider Figure 8.3. The estimates for distance traveled when ignoring the time information is shown by the horizontal line ![]() Disregarding the time information entails predicting

Disregarding the time information entails predicting ![]() kilometers for the distance traveled, for orienteering competitors who have been hiking only 2 or 3 hours, as well as for those who have been out all day (8 or 9 hours). This is clearly not optimal.

kilometers for the distance traveled, for orienteering competitors who have been hiking only 2 or 3 hours, as well as for those who have been out all day (8 or 9 hours). This is clearly not optimal.

Figure 8.3 Overall, the regression line has smaller prediction error than the sample mean.

The data points in Figure 8.3 seem to “cluster” tighter around the estimated regression line than around the line ![]() , which suggests that, overall, the prediction errors are smaller when we use the x-information than otherwise. For example, consider competitor #10, who hiked y = 25 kilometers in x = 9 hours. If we ignore the x-information, then the estimation error would be

, which suggests that, overall, the prediction errors are smaller when we use the x-information than otherwise. For example, consider competitor #10, who hiked y = 25 kilometers in x = 9 hours. If we ignore the x-information, then the estimation error would be ![]() kilometers. This prediction error is indicated as the vertical line between the data point for this competitor and the horizontal line; that is, the vertical distance between the observed y and the predicted

kilometers. This prediction error is indicated as the vertical line between the data point for this competitor and the horizontal line; that is, the vertical distance between the observed y and the predicted ![]() .

.

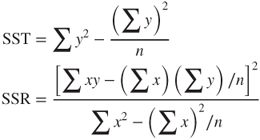

Suppose that we proceeded to find ![]() for every record in the data set, and then found the sum of squares of these measures, just as we did for

for every record in the data set, and then found the sum of squares of these measures, just as we did for ![]() when we calculated the SSE. This would lead us to SST, the sum of squares total:

when we calculated the SSE. This would lead us to SST, the sum of squares total:

SST, also known as the total sum of squares, is a measure of the total variability in the values of the response variable alone, without reference to the predictor. Note that SST is a function of the sample variance of y, where the variance is the square of the standard deviation of y:

Thus, all three of these measures—SST, variance, and standard deviation—are univariate measures of the variability in y alone (although of course we could find the variance and standard deviation of the predictor as well).

Would we expect SST to be larger or smaller than SSE? Using the calculations shown in Table 8.4, we have SST = 228, which is much larger than SSE = 12. We now have something to compare SSE against. As SSE is so much smaller than SST, this indicates that using the predictor information in the regression results in much tighter estimates overall than ignoring the predictor information. These sums of squares measure errors in prediction, so that smaller is better. In other words, using the regression improves our estimates of the distance traveled.

Table 8.4 Finding SST for the orienteering example

| Student | X = Time | Y = Distance | |||

| 1 | 2 | 10 | 16 | −6 | 36 |

| 2 | 2 | 11 | 16 | −5 | 25 |

| 3 | 3 | 12 | 16 | −4 | 16 |

| 4 | 4 | 13 | 16 | −3 | 9 |

| 5 | 4 | 14 | 16 | −2 | 4 |

| 6 | 5 | 15 | 16 | −1 | 1 |

| 7 | 6 | 20 | 16 | 4 | 16 |

| 8 | 7 | 18 | 16 | 2 | 4 |

| 9 | 8 | 22 | 16 | 6 | 36 |

| 10 | 9 | 25 | 16 | 9 | 81 |

Next, what we would like is a measure of how much the estimated regression equation improves the estimates. Once again examine Figure 8.3. For hiker #10, the estimation error when using the regression is ![]() , while the estimation error when ignoring the time information is

, while the estimation error when ignoring the time information is ![]() . Therefore, the amount of improvement (reduction in estimation error) is

. Therefore, the amount of improvement (reduction in estimation error) is ![]() .

.

Once again, we may proceed to construct a sum of squares statistic based on ![]() . Such a statistic is known as SSR, the sum of squares regression, a measure of the overall improvement in prediction accuracy when using the regression as opposed to ignoring the predictor information.

. Such a statistic is known as SSR, the sum of squares regression, a measure of the overall improvement in prediction accuracy when using the regression as opposed to ignoring the predictor information.

Observe from Figure 8.2 that the vertical distance ![]() may be partitioned into two “pieces,”

may be partitioned into two “pieces,” ![]() and

and ![]() . This follows from the following identity:

. This follows from the following identity:

Now, suppose we square each side, and take the summation. We then obtain3

:

We recognize from equation (8.8) the three sums of squares we have been developing, and can therefore express the relationship among them as follows:

We have seen that SST measures the total variability in the response variable. We may then think of SSR as the amount of variability in the response variable that is “explained” by the regression. In other words, SSR measures that portion of the variability in the response variable that is accounted for by the linear relationship between the response and the predictor.

However, as not all the data points lie precisely on the regression line, this means that there remains some variability in the y-variable that is not accounted for by the regression. SSE can be thought of as measuring all the variability in y from all sources, including random error, after the linear relationship between x and y has been accounted for by the regression.

Earlier, we found SST = 228 and SSE = 12. Then, using equation (8.11), we can find SSR to be SSR = SST − SSE = 228 − 12 = 216. Of course, these sums of squares must always be nonnegative. We are now ready to introduce the coefficient of determination, ![]() , which measures the goodness of fit of the regression as an approximation of the linear relationship between the predictor and response variables.

, which measures the goodness of fit of the regression as an approximation of the linear relationship between the predictor and response variables.

As ![]() takes the form of a ratio of SSR to SST, we may interpret

takes the form of a ratio of SSR to SST, we may interpret ![]() to represent the proportion of the variability in the y-variable that is explained by the regression; that is, by the linear relationship between the predictor and response variables.

to represent the proportion of the variability in the y-variable that is explained by the regression; that is, by the linear relationship between the predictor and response variables.

What is the maximum value that ![]() can take? The maximum value for

can take? The maximum value for ![]() would occur when the regression is a perfect fit to the data set, which takes place when each of the data points lies precisely on the estimated regression line. In this optimal situation, there would be no estimation errors from using the regression, meaning that each of the residuals would equal zero, which in turn would mean that SSE would equal zero. From equation (8.11), we have that SST = SSR + SSE. If SSE = 0, then SST = SSR, so that

would occur when the regression is a perfect fit to the data set, which takes place when each of the data points lies precisely on the estimated regression line. In this optimal situation, there would be no estimation errors from using the regression, meaning that each of the residuals would equal zero, which in turn would mean that SSE would equal zero. From equation (8.11), we have that SST = SSR + SSE. If SSE = 0, then SST = SSR, so that ![]() would equal SSR/SST = 1. Thus, the maximum value for

would equal SSR/SST = 1. Thus, the maximum value for ![]() is 1, which occurs when the regression is a perfect fit.

is 1, which occurs when the regression is a perfect fit.

What is the minimum value that ![]() can take? Suppose that the regression showed no improvement at all, that is, suppose that the regression explained none of the variability in y. This would result in SSR equaling zero, and consequently,

can take? Suppose that the regression showed no improvement at all, that is, suppose that the regression explained none of the variability in y. This would result in SSR equaling zero, and consequently, ![]() would equal zero as well. Thus,

would equal zero as well. Thus, ![]() is bounded between 0 and 1, inclusive.

is bounded between 0 and 1, inclusive.

How are we to interpret the value that ![]() takes? Essentially, the higher the value of

takes? Essentially, the higher the value of ![]() , the better the fit of the regression to the data set. Values of

, the better the fit of the regression to the data set. Values of ![]() near one denote an extremely good fit of the regression to the data, while values near zero denote an extremely poor fit. In the physical sciences, one encounters relationships that elicit very high values of

near one denote an extremely good fit of the regression to the data, while values near zero denote an extremely poor fit. In the physical sciences, one encounters relationships that elicit very high values of ![]() , while in the social sciences, one may need to be content with lower values of

, while in the social sciences, one may need to be content with lower values of ![]() , because of person-to-person variability. As usual, the analyst's judgment should be tempered with the domain expert's experience.

, because of person-to-person variability. As usual, the analyst's judgment should be tempered with the domain expert's experience.

8.4 Standard Error of the Estimate,

We have seen how the ![]() statistic measures the goodness of fit of the regression to the data set. Next, the s statistic, known as the standard error of the estimate, is a measure of the accuracy of the estimates produced by the regression. Clearly, s is one of the most important statistics to consider when performing a regression analysis. To find the value of s, we first find the mean square error (MSE):

statistic measures the goodness of fit of the regression to the data set. Next, the s statistic, known as the standard error of the estimate, is a measure of the accuracy of the estimates produced by the regression. Clearly, s is one of the most important statistics to consider when performing a regression analysis. To find the value of s, we first find the mean square error (MSE):

where m indicates the number of predictor variables, which is 1 for the simple linear regression case, and greater than 1 for the multiple regression case (Chapter 9). Like SSE, MSE represents a measure of the variability in the response variable left unexplained by the regression.

Then, the standard error of the estimate is given by

The value of s provides an estimate of the size of the “typical” residual, much as the value of the standard deviation in univariate analysis provides an estimate of the size of the typical deviation. In other words, s is a measure of the typical error in estimation, the typical difference between the predicted response value and the actual response value. In this way, the standard error of the estimate s represents the precision of the predictions generated by the estimated regression equation. Smaller values of s are better, and s has the benefit of being expressed in the units of the response variable y.

For the orienteering example, we have

Thus, the typical estimation error when using the regression model to predict distance is 1.2 kilometers. That is, if we are told how long a hiker has been traveling, then our estimate of the distance covered will typically differ from the actual distance by about 1.2 kilometers. Note from Table 8.3 that all of the residuals lie between 0 and 2 in absolute value, so that 1.2 may be considered a reasonable estimate of the typical residual. (Other measures, such as the mean absolute deviation of the residuals, may also be considered, but are not widely reported in commercial software packages.)

We may compare s = 1.2 kilometers against the typical estimation error obtained from ignoring the predictor data, obtained from the standard deviation of the response,

The typical prediction error when ignoring the time data is 5 kilometers. Using the regression has reduced the typical prediction error from 5 to 1.2 kilometers.

In the absence of software, one may use the following computational formulas for calculating the values of SST and SSR. The formula for SSR is exactly the same as for the slope ![]() , except that the numerator is squared.

, except that the numerator is squared.

Let us use these formulas for finding the values of SST and SSR for the orienteering example. You should verify that we have ![]() ,

, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() .

.

Then,![]() .

.

And,  .

.

Of course, these are the same values found earlier using the more onerous tabular method. Finally, we calculate the value of the coefficient of determination ![]() to be

to be

In other words, the linear relationship between time and distance accounts for 94.74% of the variability in the distances traveled. The regression model fits the data very nicely.

8.5 Correlation Coefficient

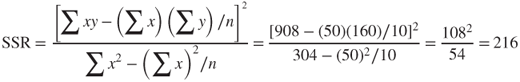

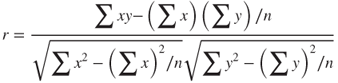

A common measure used to quantify the linear relationship between two quantitative variables is the correlation coefficient. The correlation coefficient r (also known as the Pearson product moment correlation coefficient) is an indication of the strength of the linear relationship between two quantitative variables, and is defined as follows:

where ![]() and

and ![]() represent the sample standard deviations of the x and y data values, respectively.

represent the sample standard deviations of the x and y data values, respectively.

The correlation coefficient r always takes on values between 1 and −1, inclusive. Values of r close to 1 indicate that x and y are positively correlated, while values of r close to −1 indicate that x and y are negatively correlated. However, because of the large sample sizes associated with data mining, even values of r relatively small in absolute value may be considered statistically significant. For example, for a relatively modest-sized data set of about 1000 records, a correlation coefficient of r = 0.07 would be considered statistically significant. Later in this chapter, we learn how to construct a confidence interval for determining the statistical significance of the correlation coefficient r.

The definition formula for the correlation coefficient above may be tedious, because the numerator would require the calculation of the deviations for both the x-data and the y-data. We therefore have recourse, in the absence of software, to the following computational formula for r:

For the orienteering example, we have

We would say that the time spent traveling and the distance traveled are strongly positively correlated. As the time spent hiking increases, the distance traveled tends to increase.

However, it is more convenient to express the correlation coefficient r as ![]() . When the slope

. When the slope ![]() of the estimated regression line is positive, then the correlation coefficient is also positive,

of the estimated regression line is positive, then the correlation coefficient is also positive, ![]() ; when the slope is negative, then the correlation coefficient is also negative,

; when the slope is negative, then the correlation coefficient is also negative, ![]() In the orienteering example, we have

In the orienteering example, we have ![]() . This is positive, which means that the correlation coefficient will also be positive,

. This is positive, which means that the correlation coefficient will also be positive, ![]() .

.

It should be stressed here that the correlation coefficient r measures only the linear correlation between x and y. The predictor and target may be related in a curvilinear manner, for example, and r may not uncover the relationship.

8.6 Anova Table for Simple Linear Regression

Regression statistics may be succinctly presented in an analysis of variance (ANOVA) table, the general form of which is shown here in Table 8.5. Here, m represents the number of predictor variables, so that, for simple linear regression, m = 1.

Table 8.5 The ANOVA table for simple linear regression

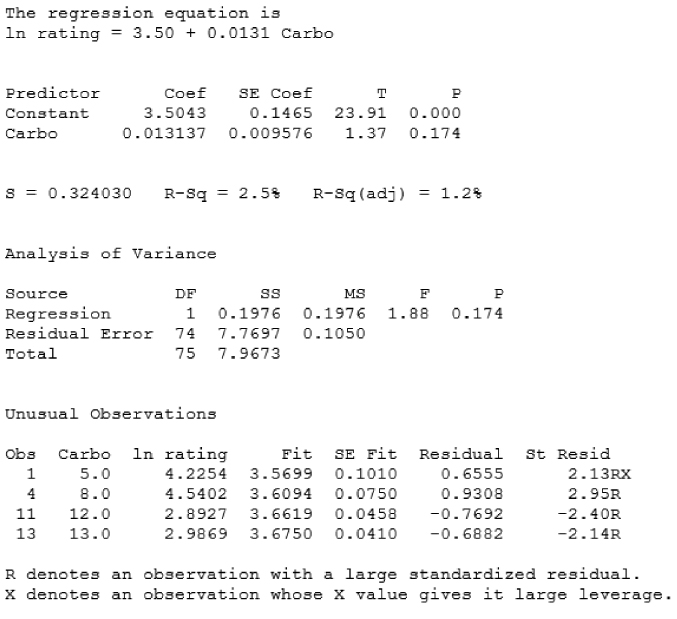

| Source of Variation | Sum of Squares | Degrees of Freedom | Mean Square | F |

| Regression | SSR | m | ||

| Error (or residual) | SSE | |||

| Total |

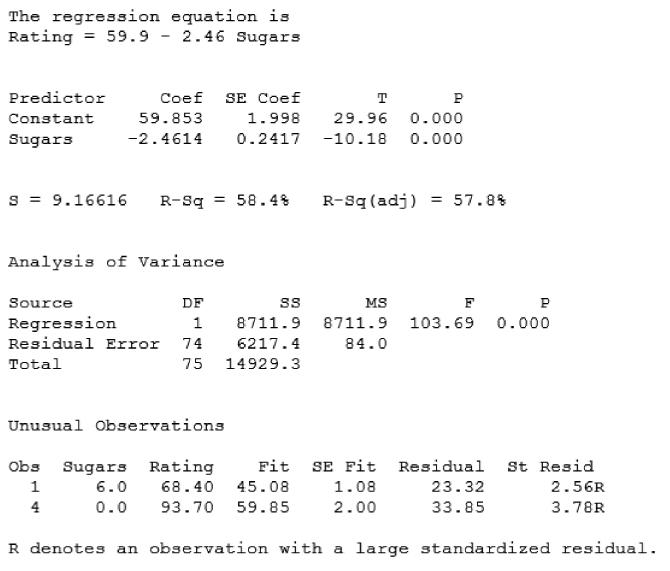

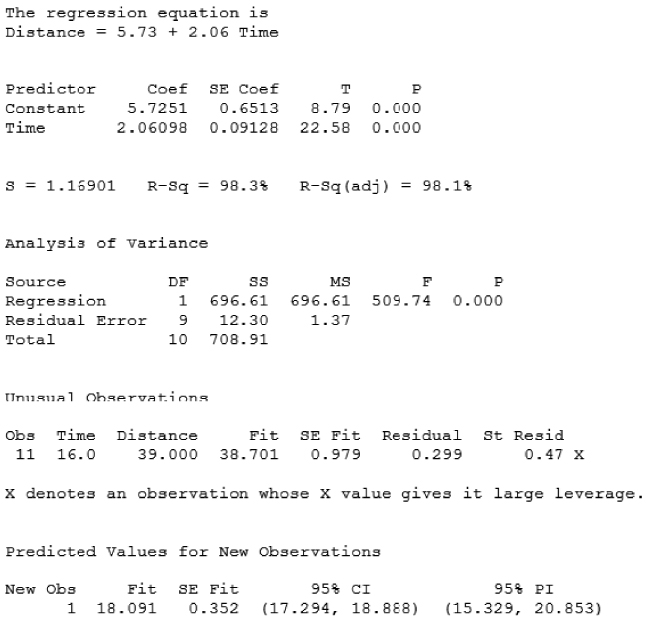

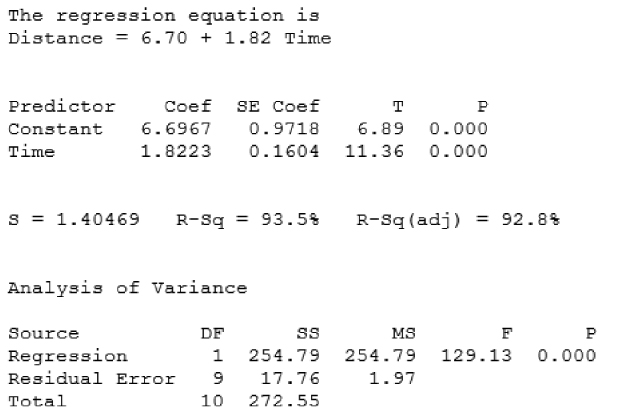

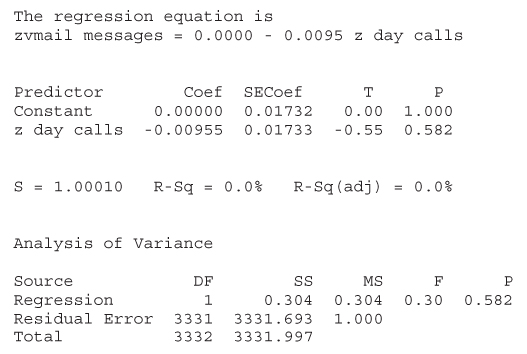

The ANOVA table conveniently displays the relationships among several statistics, showing, for example, that the sums of squares add up to SST. The mean squares are presented as the ratios of the items to their left, and, for inference, the test statistic F is represented as the ratio of the mean squares. Tables 8.6 and 8.7 show the Minitab regression results, including the ANOVA tables, for the orienteering example and the cereal example, respectively.

Table 8.6 Results for regression of distance versus time for the orienteering example

|

Table 8.7 Results for regression of nutritional rating versus sugar content

|

8.7 Outliers, High Leverage Points, and Influential Observations

Next, we discuss the role of three types of observations that may or may not exert undue influence on the regression results. These are as follows:

- Outliers

- High leverage points

- Influential observations.

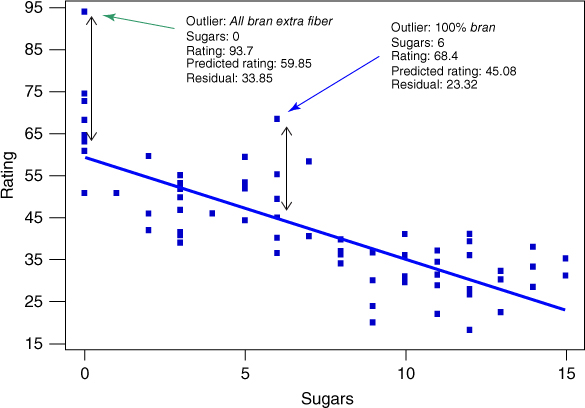

An outlier is an observation that has a very large standardized residual in absolute value. Consider the scatter plot of nutritional rating against sugars in Figure 8.4. The two observations with the largest absolute residuals are identified as All Bran Extra Fiber and 100% Bran. Note that the vertical distance away from the regression line (indicated by the vertical arrows) is greater for these two observations than for any other cereals, indicating the largest residuals.

Figure 8.4 Identifying the outliers in regression of nutritional rating versus sugars.

For example, the nutritional rating for All Bran Extra Fiber (93.7) is much higher than predicted (59.85), based on its sugar content alone (0 grams). Similarly, the nutritional rating for 100% Bran (68.4) is much higher than would have been estimated (45.08) based on its sugar content alone (6 grams).

Residuals may have different variances, so that it is preferable to use the standardized residuals in order to identify outliers. Standardized residuals are residuals divided by their standard error, so that they are all on the same scale. Let ![]() denote the standard error of the ith residual. Then

denote the standard error of the ith residual. Then

where ![]() refers to the leverage of the ith observation (see below).

refers to the leverage of the ith observation (see below).

And the standardized residual equals:

A rough rule of thumb is to flag observations whose standardized residuals exceed 2 in absolute value as being outliers. For example, note from Table 8.7 that Minitab identifies observations 1 and 4 as outliers based on their large standardized residuals; these are All Bran Extra Fiber and 100% Bran.

In general, if the residual is positive, we may say that the observed y-value is higher than the regression estimated, given the x-value. If the residual is negative, we may say that the observed y-value is lower than the regression estimated, given the x-value. For example, for All Bran Extra Fiber (which has a positive residual), we would say that the observed nutritional rating is higher than the regression estimated, given its sugars value. (This may presumably be because of all that extra fiber.)

A high leverage point is an observation that is extreme in the predictor space. In other words, a high leverage point takes on extreme values for the x-variable(s), without reference to the y-variable. That is, leverage takes into account only the x-variables, and ignores the y-variable. The term leverage is derived from the physics concept of the lever, which Archimedes asserted could move the Earth itself if only it were long enough.

The leverage ![]() for the ith observation may be denoted as follows:

for the ith observation may be denoted as follows:

For a given data set, the quantities ![]() and

and ![]() may be considered to be constants, so that the leverage for the ith observation depends solely on

may be considered to be constants, so that the leverage for the ith observation depends solely on ![]() , the squared distance between the value of the predictor and the mean value of the predictor. The farther the observation differs from the mean of the observations, in the x-space, the greater the leverage. The lower bound on leverage values is

, the squared distance between the value of the predictor and the mean value of the predictor. The farther the observation differs from the mean of the observations, in the x-space, the greater the leverage. The lower bound on leverage values is ![]() , and the upper bound is 1.0. An observation with leverage greater than about

, and the upper bound is 1.0. An observation with leverage greater than about ![]() or

or ![]() may be considered to have high leverage (where m indicates the number of predictors).

may be considered to have high leverage (where m indicates the number of predictors).

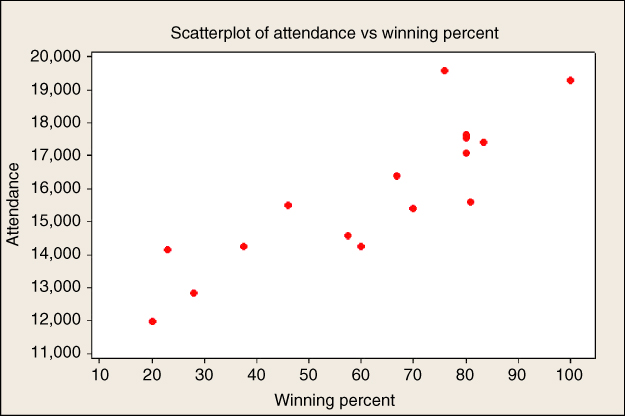

For example, in the orienteering example, suppose that there was a new observation, a real hard-core orienteering competitor, who hiked for 16 hours and traveled 39 kilometers. Figure 8.5 shows the scatter plot, updated with this 11th hiker.

Figure 8.5 Scatter plot of distance versus time, with new competitor who hiked for 16 hours.

The Hard-Core Orienteer hiked 39 kilometers in 16 hours. Does he represent an outlier or a high-leverage point?

Note from Figure 8.5 that the time traveled by the new hiker (16 hours) is extreme in the x-space, as indicated by the horizontal arrows. This is sufficient to identify this observation as a high leverage point, without reference to how many kilometers he or she actually traveled. Examine Table 8.8, which shows the updated regression results for the 11 hikers. Note that Minitab correctly points out that the extreme orienteer does indeed represent an unusual observation, because its x-value gives it large leverage. That is, Minitab has identified the hard-core orienteer as a high leverage point, because he hiked for 16 hours. It correctly did not consider the distance (y-value) when considering leverage.

Table 8.8 Updated regression results, including the hard-core hiker

|

However, the hard-core orienteer is not an outlier. Note from Figure 8.5 that the data point for the hard-core orienteer lies quite close to the regression line, meaning that his distance of 39 kilometers is close to what the regression equation would have predicted, given the 16 hours of hiking. Table 8.8 tells us that the standardized residual is only ![]() , which is less than 2, and therefore not an outlier.

, which is less than 2, and therefore not an outlier.

Next, we consider what it means to be an influential observation. In the context of history, what does it mean to be an influential person? A person is influential if their presence or absence significantly changes the history of the world. In the context of Bedford Falls (from the Christmas movie It's a Wonderful Life), George Bailey (played by James Stewart) discovers that he really was influential when an angel shows him how different (and poorer) the world would have been had he never been born. Similarly, in regression, an observation is influential if the regression parameters alter significantly based on the presence or absence of the observation in the data set.

An outlier may or may not be influential. Similarly, a high leverage point may or may not be influential. Usually, influential observations combine both the characteristics of large residual and high leverage. It is possible for an observation to be not-quite flagged as an outlier, and not-quite flagged as a high leverage point, but still be influential through the combination of the two characteristics.

First let us consider an example of an observation that is an outlier but is not influential. Suppose that we replace our 11th observation (no more hard-core guy) with someone who hiked 20 kilometers in 5 hours. Examine Table 8.9, which presents the regression results for these 11 hikers. Note from Table 8.9 that the new observation is flagged as an outlier (unusual observation with large standardized residual). This is because the distance traveled (20 kilometers) is higher than the regression predicted (16.364 kilometers), given the time (5 hours).

Table 8.9 Regression results including person who hiked 20 kilometers in 5 hours

|

Now, would we consider this observation to be influential? Overall, probably not. Compare Table 8.9 (the regression output for the new hiker with 5 hours/20 kilometers) and Table 8.6 (the regression output for the original data set) to assess the effect the presence of this new observation has on the regression coefficients. The y-intercept changes from ![]() to

to ![]() , but the slope does not change at all, remaining at

, but the slope does not change at all, remaining at ![]() , regardless of the presence of the new hiker.

, regardless of the presence of the new hiker.

Figure 8.6 shows the relatively mild effect this outlier has on the estimated regression line, shifting it vertically a small amount, without affecting the slope at all. Although it is an outlier, this observation is not influential because it has very low leverage, being situated exactly on the mean of the x-values, so that it has the minimum possible leverage for a data set of size n = 11.

Figure 8.6 The mild outlier shifts the regression line only slightly.

We can calculate the leverage for this observation (x = 5, y = 20) as follows. As ![]() , we have

, we have

Then the leverage for the new observation is

Now that we have the leverage for this observation, we may also find the standardized residual, as follows. First, we have the standard error of the residual:

So that the standardized residual equals:

as shown in Table 8.9. Note that the value of the standardized residual, 2.22, is only slightly larger than 2.0, so by our rule of thumb this observation may considered only a mild outlier.

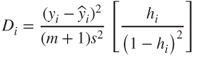

Cook's distance is the most common measure of the influence of an observation. It works by taking into account both the size of the residual and the amount of leverage for that observation. Cook's distance takes the following form, for the ith observation:

where ![]() represents the ith residual, s represents the standard error of the estimate,

represents the ith residual, s represents the standard error of the estimate, ![]() represents the leverage of the ith observation, and m represents the number of predictors.

represents the leverage of the ith observation, and m represents the number of predictors.

The left-hand ratio in the formula for Cook's distance contains an element representing the residual, while the right-hand ratio contains functions of the leverage. Thus Cook's distance combines the two concepts of outlier and leverage into a single measure of influence. The value of the Cook's distance measure for the hiker who traveled 20 kilometers in 5 hours is as follows:

A rough rule of thumb for determining whether an observation is influential is if its Cook's distance exceeds 1.0. More accurately, one may also compare the Cook's distance against the percentiles of the F-distribution with (m, n − m − 1) degrees of freedom. If the observed value lies within the first quartile of this distribution (lower than the 25th percentile), then the observation has little influence on the regression; however, if the Cook's distance is greater than the median of this distribution, then the observation is influential. For this observation, the Cook's distance of 0.2465 lies within the 22nd percentile of the ![]() distribution, indicating that while the influence of the observation is small.

distribution, indicating that while the influence of the observation is small.

What about the hard-core hiker we encountered earlier? Was that observation influential? Recall that this hiker traveled 39 kilometers in 16 hours, providing the 11th observation in the results reported in Table 8.8. First, let us find the leverage.

We have n = 11 and m = 1, so that observations having ![]() or

or ![]() may be considered to have high leverage. This observation has

may be considered to have high leverage. This observation has ![]() , which indicates that this durable hiker does indeed have high leverage, as mentioned earlier with reference to Figure 8.5. Figure 8.5 seems to indicate that this hiker (x = 16, y = 39) is not however an outlier, because the observation lies near the regression line. The standardized residual supports this, having a value of 0.46801. The reader will be asked to verify these values for leverage and standardized residual in the exercises. Finally, the Cook's distance for this observation is 0.2564, which is about the same as our previous example, indicating that the observation is not influential. Figure 8.7 shows the slight change in the regression with (solid line) and without (dotted line) this observation.

, which indicates that this durable hiker does indeed have high leverage, as mentioned earlier with reference to Figure 8.5. Figure 8.5 seems to indicate that this hiker (x = 16, y = 39) is not however an outlier, because the observation lies near the regression line. The standardized residual supports this, having a value of 0.46801. The reader will be asked to verify these values for leverage and standardized residual in the exercises. Finally, the Cook's distance for this observation is 0.2564, which is about the same as our previous example, indicating that the observation is not influential. Figure 8.7 shows the slight change in the regression with (solid line) and without (dotted line) this observation.

Figure 8.7 Slight change in regression line when hard-core hiker added.

So we have seen that an observation that is an outlier with low influence, or an observation that is a high leverage point with a small residual may not be particularly influential. We next illustrate how a data point that has a moderately high residual and moderately high leverage may indeed be influential. Suppose that our 11th hiker had instead hiked for 10 hours, and traveled 23 kilometers. The regression analysis for these 11 hikers is then given in Table 8.10.

Table 8.10 Regression results for new observation with time = 10, distance = 23

|

Note that Minitab does not identify the new observation as either an outlier or a high leverage point. This is because, as the reader is asked to verify in the exercises, the leverage of this new hiker is ![]() , and the standardized residual equals −1.70831.

, and the standardized residual equals −1.70831.

However, despite lacking either a particularly large leverage or large residual, this observation is nevertheless influential, as measured by its Cook's distance of ![]() , which is in line with the 62nd percentile of the

, which is in line with the 62nd percentile of the ![]() distribution.

distribution.

The influence of this observation stems from the combination of its moderately large residual with its moderately large leverage. Figure 8.8 shows the influence this single hiker has on the regression line, pulling down on the right side to decrease the slope (from 2.00 to 1.82), and thereby increase the y-intercept (from 6.00 to 6.70).

Figure 8.8 Moderate residual plus moderate leverage = influential observation.

8.8 Population Regression Equation

Least squares regression is a powerful and elegant methodology. However, if the assumptions of the regression model are not validated, then the resulting inference and model building are undermined. Deploying a model whose results are based on unverified assumptions may lead to expensive failures later on. The simple linear regression model is given as follows. We have a set of n bivariate observations, with response value ![]() related to predictor value

related to predictor value ![]() through the following linear relationship.

through the following linear relationship.

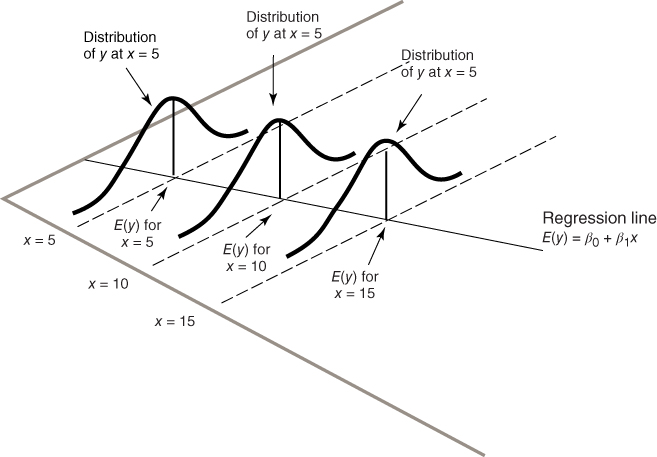

On the basis of these four assumptions, we can derive four implications for the behavior of the response variable, y, as follows.

Figure 8.9 For each value of x, the  are normally distributed, with mean on the true regression line, and constant variance.

are normally distributed, with mean on the true regression line, and constant variance.

If one is interested in using regression analysis in a strictly descriptive manner, with no inference and no model building, then one need not worry quite so much about assumption validation. This is because the assumptions are about the error term. If the error term is not involved, then the assumptions are not needed. However, if one wishes to do inference or model building, then the assumptions must be verified.

8.9 Verifying The Regression Assumptions

So, how does one go about verifying the regression assumptions? The two main graphical methods used to verify regression assumptions are as follows:

- A normal probability plot of the residuals.

- A plot of the standardized residuals against the fitted (predicted) values.

A normal probability plot is a quantile–quantile plot of the quantiles of a particular distribution against the quantiles of the standard normal distribution, for the purposes of determining whether the specified distribution deviates from normality. (Similar to a percentile, a quantile of a distribution is a value ![]() such that

such that ![]() of the distribution values are less than or equal to

of the distribution values are less than or equal to ![]() .) In a normality plot, the observed values of the distribution of interest are compared against the same number of values that would be expected from the normal distribution. If the distribution is normal, then the bulk of the points in the plot should fall on a straight line; systematic deviations from linearity in this plot indicate non-normality.

.) In a normality plot, the observed values of the distribution of interest are compared against the same number of values that would be expected from the normal distribution. If the distribution is normal, then the bulk of the points in the plot should fall on a straight line; systematic deviations from linearity in this plot indicate non-normality.

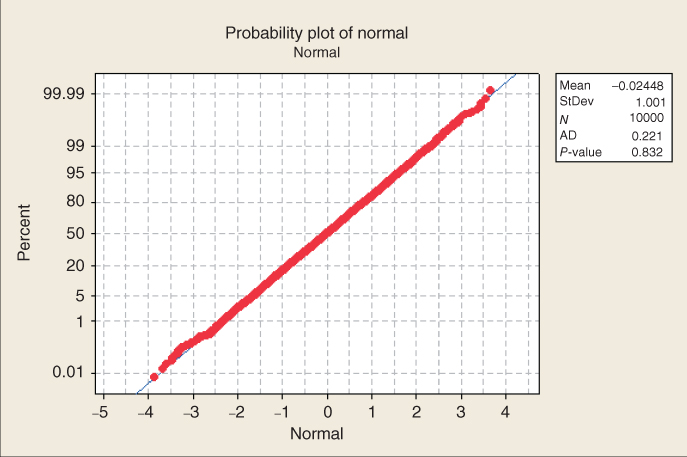

To illustrate the behavior of the normal probability plot for different kinds of data distributions, we provide three examples. Figures 8.10–8.12 contain the normal probability plots for 10,000 values drawn from a uniform (0, 1) distribution, a chi-square (5) distribution, and a normal (0, 1) distribution, respectively.

Figure 8.10 Normal probability plot for a uniform distribution: heavy tails.

Figure 8.11 Probability plot for a chi-square distribution: right-skewed.

Figure 8.12 Probability plot for a normal distribution: Do not expect real-world data to behave this nicely.

Note in Figure 8.10 that the bulk of the data do not line up on the straight line, and that a clear pattern (reverse S curve) emerges, indicating systematic deviation from normality. The uniform distribution is a rectangular-shaped distribution, whose tails are much heavier than the normal distribution. Thus, Figure 8.10 is an example of a probability plot for a distribution with heavier tails than the normal distribution.

Figure 8.11 also contains a clear curved pattern, indicating systematic deviation from normality. The chi-square (5) distribution is right-skewed, so that the curve pattern apparent in Figure 8.11 may be considered typical of the pattern made by right-skewed distributions in a normal probability plot.

Finally, in Figure 8.12, the points line up nicely on a straight line, indicating normality, which is not surprising because the data are drawn from a normal (0, 1) distribution. It should be remarked that we should not expect real-world data to behave this nicely. The presence of sampling error and other sources of noise will usually render our decisions about normality less clear-cut than this.

Note the Anderson–Darling (AD) statistic and p-value reported by Minitab in each of Figures 8.10–8.12. This refers to the AD test for normality. Smaller values of the AD statistic indicate that the normal distribution is a better fit for the data. The null hypothesis is that the normal distribution fits, so that small p-values will indicate lack of fit. Note that for the uniform and chi-square examples, the p-value for the AD test is less than 0.005, indicating strong evidence for lack of fit with the normal distribution. However, the p-value for the normal example is 0.832, indicating no evidence against the null hypothesis that the distribution is normal.

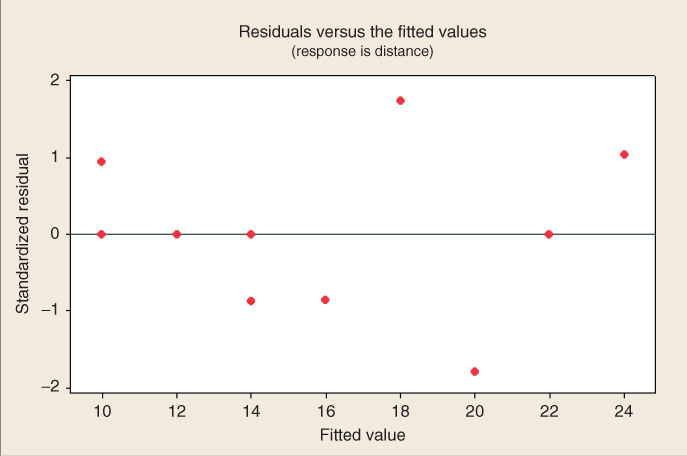

The second graphical method used to assess the validity of the regression assumptions is a plot of the standardized residuals against the fits (predicted values). An example of this type of graph is given in Figure 8.13, for the regression of distance versus time for the original 10 observations in the orienteering example.

Figure 8.13 Plot of standardized residuals versus predicted values for orienteering example.

Note the close relationship between this graph and the original scatter plot in Figure 8.3. The regression line from Figure 8.3 is now the horizontal zero line in Figure 8.13. Points that were either above/below/on the regression line in Figure 8.3 now lie either above/below/on the horizontal zero line in Figure 8.13.

We evaluate the validity of the regression assumptions by observing whether certain patterns exist in the plot of the residuals versus fits, in which case one of the assumptions has been violated, or whether no such discernible patterns exists, in which case the assumptions remain intact. The 10 data points in Figure 8.13 are really too few to try to determine whether any patterns exist. In data mining applications, of course, paucity of data is rarely the issue. Let us see what types of patterns we should watch out for. Figure 8.14 shows four pattern “archetypes” that may be observed in residual-fit plots. Plot (a) shows a “healthy” plot, where no noticeable patterns are observed, and the points display an essentially rectangular shape from left to right. Plot (b) exhibits curvature, which violates the independence assumption. Plot (c) displays a “funnel” pattern, which violates the constant variance assumption. Finally, plot (d) exhibits a pattern that increases from left to right, which violates the zero-mean assumption.

Figure 8.14 Four possible patterns in the plot of residuals versus fits.

Why does plot (b) violate the independence assumption? Because the errors are assumed to be independent, the residuals (which estimate the errors) should exhibit independent behavior as well. However, if the residuals form a curved pattern, then, for a given residual, we may predict where its neighbors to the left and right will fall, within a certain margin of error. If the residuals were truly independent, then such a prediction would not be possible.

Why does plot (c) violate the constant variance assumption? Note from plot (a) that the variability in the residuals, as shown by the vertical distance, is fairly constant, regardless of the value of x. However, in plot (c), the variability of the residuals is smaller for smaller values of x, and larger for larger values of x. Therefore, the variability is non-constant, which violates the constant variance assumption.

Why does plot (d) violate the zero-mean assumption? The zero-mean assumption states that the mean of the error term is zero, regardless of the value of x. However, plot (d) shows that, for small values of x, the mean of the residuals is less than 0, while, for large values of x, the mean of the residuals is greater than 0. This is a violation of the zero-mean assumption, as well as a violation of the independence assumption.

When examining plots for patterns, beware of the “Rorschach effect” of seeing patterns in randomness. The null hypothesis when examining these plots is that the assumptions are intact; only systematic and clearly identifiable patterns in the residuals plots offer evidence to the contrary.

Apart from these graphical methods, there are also several diagnostic hypothesis tests that may be carried out to assess the validity of the regression assumptions. As mentioned above, the AD test may be used to indicate fit of the residuals to a normal distribution. For assessing whether the constant variance assumption has been violated, either Bartlett's test or Levene's test may be used. For determining whether the independence assumption has been violated, either the Durban–Watson test or the runs test may be applied. Information about all of these diagnostic tests may be found in Draper and Smith (1998).4

Note that these assumptions represent the structure needed to perform inference in regression. Descriptive methods in regression, such as point estimates, and simple reporting of such statistics, as the slope, correlation, standard error of the estimate, and ![]() , may still be undertaken even if these assumptions are not met, if the results are cross-validated. What is not allowed by violated assumptions is statistical inference. But we as data miners and big data scientists understand that inference is not our primary modus operandi. Rather, data mining seeks confirmation through cross-validation of the results across data partitions. For example, if we are examining the relationship between outdoor event ticket sales and rainfall amounts, and if the training data set and test data set both report correlation coefficients of about −0.7, and there is graphical evidence to back this up, then we may feel confident in reporting to our client in a descriptive manner that the variables are negatively correlated, even if both variables are not normally distributed (which is the assumption for the correlation test). We just cannot say that the correlation coefficient has a statistically significant negative value, because the phrase “statistically significant” belongs to the realm of inference. So, for data miners, the keys are to (i) cross-validate the results across partitions, and (ii) restrict the interpretation of the results to descriptive language, and avoid inferential terminology.

, may still be undertaken even if these assumptions are not met, if the results are cross-validated. What is not allowed by violated assumptions is statistical inference. But we as data miners and big data scientists understand that inference is not our primary modus operandi. Rather, data mining seeks confirmation through cross-validation of the results across data partitions. For example, if we are examining the relationship between outdoor event ticket sales and rainfall amounts, and if the training data set and test data set both report correlation coefficients of about −0.7, and there is graphical evidence to back this up, then we may feel confident in reporting to our client in a descriptive manner that the variables are negatively correlated, even if both variables are not normally distributed (which is the assumption for the correlation test). We just cannot say that the correlation coefficient has a statistically significant negative value, because the phrase “statistically significant” belongs to the realm of inference. So, for data miners, the keys are to (i) cross-validate the results across partitions, and (ii) restrict the interpretation of the results to descriptive language, and avoid inferential terminology.

8.10 Inference in Regression

Inference in regression offers a systematic framework for assessing the significance of linear association between two variables. Of course, analysts need to keep in mind the usual caveats regarding the use of inference in general for big data problems. For very large sample sizes, even tiny effect sizes may be found to be statistically significant, even when their practical significance may not be clear.

We shall examine five inferential methods in this chapter, which are as follows:

- The t-test for the relationship between the response variable and the predictor variable.

- The correlation coefficient test.

- The confidence interval for the slope,

.

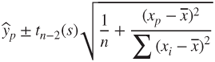

. - The confidence interval for the mean of the response variable, given a particular value of the predictor.

- The prediction interval for a random value of the response variable, given a particular value of the predictor.

In Chapter 9, we also investigate the F-test for the significance of the regression as a whole. However, for simple linear regression, the t-test and the F-test are equivalent.

How do we go about performing inference in regression? Take a moment to consider the form of the true (population) regression equation.

This equation asserts that there is a linear relationship between y on the one hand, and some function of x on the other. Now, ![]() is a model parameter, so that it is a constant whose value is unknown. Is there some value that

is a model parameter, so that it is a constant whose value is unknown. Is there some value that ![]() could take such that, if

could take such that, if ![]() took that value, there would no longer exist a linear relationship between x and y? Consider what would happen if

took that value, there would no longer exist a linear relationship between x and y? Consider what would happen if ![]() was zero. Then the true regression equation would be as follows:

was zero. Then the true regression equation would be as follows:

In other words, when ![]() , the true regression equation becomes:

, the true regression equation becomes:

That is, a linear relationship between x and y no longer exists. However, if ![]() takes on any conceivable value other than zero, then a linear relationship of some kind exists between the response and the predictor. Much of our regression inference in this chapter is based on this key idea, that the linear relationship between x and y depends on the value of

takes on any conceivable value other than zero, then a linear relationship of some kind exists between the response and the predictor. Much of our regression inference in this chapter is based on this key idea, that the linear relationship between x and y depends on the value of ![]() .

.

8.11 t-Test for the Relationship Between x and y

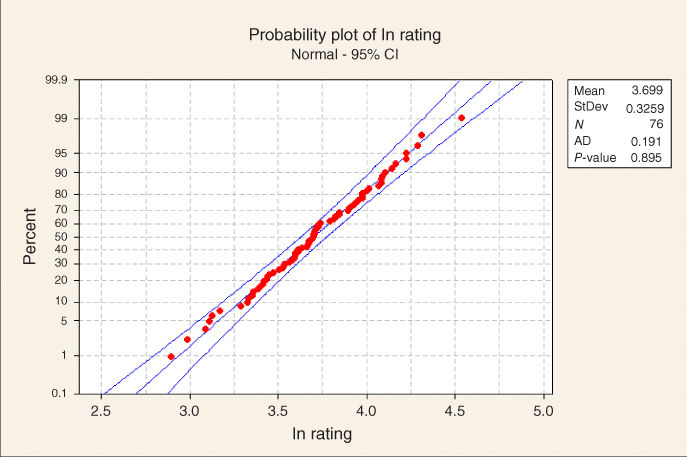

Much of the inference we perform in this section refers to the regression of rating on sugars. The assumption is that the residuals (or standardized residuals) from the regression are approximately normally distributed. Figure 8.15 shows that this assumption is validated. There are some strays at either end, but the bulk of the data lie within the confidence bounds.

Figure 8.15 Normal probability plot of the residuals for the regression of rating on sugars.

The least squares estimate of the slope, ![]() , is a statistic, because its value varies from sample to sample. Like all statistics, it has a sampling distribution with a particular mean and standard error. The sampling distribution of

, is a statistic, because its value varies from sample to sample. Like all statistics, it has a sampling distribution with a particular mean and standard error. The sampling distribution of ![]() has as its mean the (unknown) value of the true slope

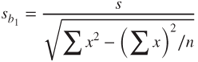

has as its mean the (unknown) value of the true slope ![]() , and has as its standard error, the following:

, and has as its standard error, the following:

Just as one-sample inference about the mean ![]() is based on the sampling distribution of

is based on the sampling distribution of ![]() , so regression inference about the slope

, so regression inference about the slope ![]() is based on this sampling distribution of

is based on this sampling distribution of ![]() . The point estimate of

. The point estimate of ![]() is

is ![]() , given by

, given by

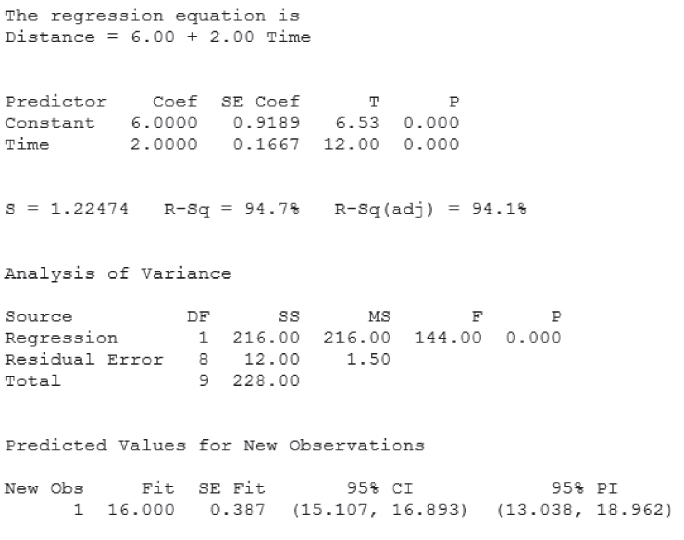

where s is the standard error of the estimate, reported in the regression results. The ![]() statistic is to be interpreted as a measure of the variability of the slope. Large values of

statistic is to be interpreted as a measure of the variability of the slope. Large values of ![]() indicate that the estimate of the slope

indicate that the estimate of the slope ![]() is unstable, while small values of

is unstable, while small values of ![]() indicate that the estimate of the slope

indicate that the estimate of the slope ![]() is precise. The t-test is based on the distribution of

is precise. The t-test is based on the distribution of ![]() , which follows a t-distribution with n − 2 degrees of freedom. When the null hypothesis is true, the test statistic

, which follows a t-distribution with n − 2 degrees of freedom. When the null hypothesis is true, the test statistic ![]() follows a t-distribution with n − 2 degrees of freedom. The t-test requires that the residuals be normally distributed.

follows a t-distribution with n − 2 degrees of freedom. The t-test requires that the residuals be normally distributed.

To illustrate, we shall carry out the t-test using the results from Table 8.7, the regression of nutritional rating on sugar content. For convenience, Table 8.7 is reproduced here as Table 8.11. Consider the row in Table 8.11, labeled “Sugars.”

- Under “Coef” is found the value of

, −2.4614.

, −2.4614. - Under “SE Coef” is found the value of

, the standard error of the slope. Here,

, the standard error of the slope. Here,  .

. - Under “T” is found the value of the t-statistic; that is, the test statistic for the t-test,

.

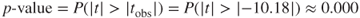

. - Under “P” is found the p-value of the t-statistic. As this is a two-tailed test, this p-value takes the following form:

, where

, where  represent the observed value of the t-statistic from the regression results. Here,

represent the observed value of the t-statistic from the regression results. Here,  , although, of course, no continuous p-value ever precisely equals zero.

, although, of course, no continuous p-value ever precisely equals zero.

Table 8.11 Results for regression of nutritional rating versus sugar content

|

The hypotheses for this hypothesis test are as follows. The null hypothesis asserts that no linear relationship exists between the variables, while the alternative hypothesis states that such a relationship does indeed exist.

- H0:

(There is no linear relationship between sugar content and nutritional rating.)

(There is no linear relationship between sugar content and nutritional rating.) - Ha:

(Yes, there is a linear relationship between sugar content and nutritional rating.)

(Yes, there is a linear relationship between sugar content and nutritional rating.)

We shall carry out the hypothesis test using the p-value method, where the null hypothesis is rejected when the p-value of the test statistic is small. What determines how small is small depends on the field of study, the analyst, and domain experts, although many analysts routinely use 0.05 as a threshold. Here, we have ![]() , which is surely smaller than any reasonable threshold of significance. We therefore reject the null hypothesis, and conclude that a linear relationship exists between sugar content and nutritional rating.

, which is surely smaller than any reasonable threshold of significance. We therefore reject the null hypothesis, and conclude that a linear relationship exists between sugar content and nutritional rating.

8.12 Confidence Interval for the Slope of the Regression Line

Researchers may consider that hypothesis tests are too black-and-white in their conclusions, and prefer to estimate the slope of the regression line ![]() , using a confidence interval. The interval used is a t-interval, and is based on the above sampling distribution for

, using a confidence interval. The interval used is a t-interval, and is based on the above sampling distribution for ![]() . The form of the confidence interval is as follows.5

. The form of the confidence interval is as follows.5

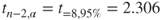

For example, let us construct a 95% confidence interval for the true slope of the regression line, ![]() . We have the point estimate given as

. We have the point estimate given as ![]() . The t-critical value for 95% confidence and n − 2 = 75 degrees of freedom is

. The t-critical value for 95% confidence and n − 2 = 75 degrees of freedom is ![]() . From Figure 8.16, we have

. From Figure 8.16, we have ![]() . Thus, our confidence interval is as follows:

. Thus, our confidence interval is as follows:

We are 95% confident that the true slope of the regression line lies between −2.9448 and −1.9780. That is, for every additional gram of sugar, the nutritional rating will decrease by between 1.9780 and 2.9448 points. As the point ![]() is not contained within this interval, we can be sure of the significance of the relationship between the variables, with 95% confidence.

is not contained within this interval, we can be sure of the significance of the relationship between the variables, with 95% confidence.

Figure 8.16 Probability plot of ln rating shows approximate normality.

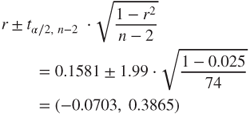

8.13 Confidence Interval for the Correlation Coefficient ρ

Let ![]() (“rho”) represent the population correlation coefficient between the x and y variables for the entire population. Then the confidence interval for

(“rho”) represent the population correlation coefficient between the x and y variables for the entire population. Then the confidence interval for ![]() is as follows.

is as follows.

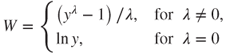

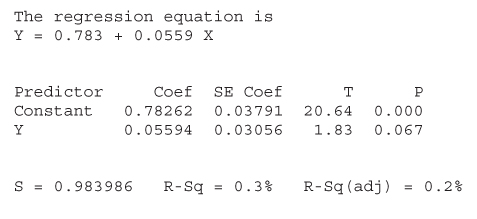

This confidence interval requires that both the x and y variables be normally distributed. Now, rating is not normally distributed, but the transformed variable ln rating is normally distributed, as shown in Figure 8.16. However, neither sugars nor any transformation of sugars (see the ladder of re-expressions later in this chapter) is normally distributed. Carbohydrates, however, shows normality that is just barely acceptable, with an AD p-value of 0.081, as shown in Figure 8.17. Thus, the assumptions are met for calculating the confidence interval for the population correlation coefficient between ln rating and carbohydrates, but not between ln rating and sugars. Thus, let us proceed to construct a 95% confidence interval for ![]() , the population correlation coefficient between ln rating and carbohydrates.

, the population correlation coefficient between ln rating and carbohydrates.

Figure 8.17 Probability plot of carbohydrates shows barely acceptable normality.

From Table 8.12, the regression output for the regression of ln rating on carbohydrates, we have ![]() , and the slope

, and the slope ![]() is positive, so that the sample correlation coefficient is

is positive, so that the sample correlation coefficient is ![]() . The sample size is n = 76, so that n – 2 = 74. Finally,

. The sample size is n = 76, so that n – 2 = 74. Finally, ![]() refers to the t-critical value with area 0.025 in the tail of the curve with 74 degrees of freedom. This value equals 1.99.6 Thus, our 95% confidence interval for