You've learned how to create simple applications and looked at how to create classes. Now it's time not only to start tying these elements together, but also to learn how to dispose of some of the classes that you have created. The architects of .NET realized that all procedural languages require certain base functionality. For example, many languages ship with their own runtime that provides features such as memory management, but what if, instead of each language shipping with its own runtime implementation, all languages used a common runtime? This would provide languages with a standard environment and access to all of the same features. This is exactly what the common language runtime (CLR) provides.

The CLR manages the execution of code on the .NET platform. .NET provided Visual Basic developers with better support for many advanced features, including operator overloading, implementation inheritance, threading, and the ability to marshal objects. Building such features into a language is not trivial. The CLR enabled Microsoft to concentrate on building this plumbing one time and then reuse it across different programming languages. Because the CLR supports these features and because Visual Basic is built on top of the CLR, Visual Basic can use these features. As a result, going forward, Visual Basic is the equal of every other .NET language, with the CLR eliminating many of the shortcomings of the previous versions of Visual Basic.

Visual Basic developers can view the CLR as a better Visual Basic runtime. However, this runtime, unlike the old standalone Visual Basic runtime, is common across all of .NET regardless of the underlying operating system. Thus, the functionality exposed by the CLR is available to all .NET languages; more important, all of the features available to other .NET languages via the CLR are available to Visual Basic developers. Additionally, as long as you develop using managed code — code that runs in the CLR — you'll find that it doesn't matter whether your application is installed on a Windows XP client or a Vista client; your application will run. The CLR provides an abstraction layer separate from the details of the operating system.

This chapter gets down into the belly of the application runtime environment, not to examine how .NET enables this abstraction from the operating system, but instead to look at some specific features related to how you build applications that run against the CLR. This includes an introduction to several basic elements of working with applications that run in the CLR: the elements of a .NET application, versioning and deployment, cross-language integration, metadata and attributes, the Reflection API, the IL Disassembler, and memory management.

A .NET application is composed of four primary entities: classes, modules, assemblies, and types.

Classes, covered in the preceding two chapters, are defined in the source files for your application or class library. Upon compilation of your source files, you produce a module. The code that makes up an assembly's modules may exist in a single executable (.exe) file or as a dynamic link library (.dll). A module is in fact a Microsoft Intermediate Language file, which is then used by the CLR when your application is run. However, compiling a .NET application doesn't produce only an MSIL file; it also produces a collection of files that make up a deployable application or assembly. Within an assembly are several different types of files, including not only the actual executable files, but also configuration files, signature keys, and, most important of all, the actual code modules.

A module contains Microsoft Intermediate Language (MSIL, often abbreviated to IL) code, associated metadata, and the assembly's manifest. By default, the Visual Basic compiler creates an assembly that is composed of a single module containing both the assembly code and the manifest.

IL is a platform-independent way of representing managed code within a module. Before IL can be executed, the CLR must compile it into the native machine code. The default method is for the CLR to use the JIT (just-in-time) compiler to compile the IL on a method-by-method basis. At runtime, as each method is called by an application for the first time, it is passed through the JIT compiler for compilation to machine code. Similarly, for an ASP.NET application, each page is passed through the JIT compiler the first time it is requested, to create an in-memory representation of the machine code that represents that page.

Additional information about the types declared in the IL is provided by the associated metadata. The metadata contained within the module is used extensively by the CLR. For example, if a client and an object reside within two different processes, then the CLR uses the type's metadata to marshal data between the client and the object. MSIL is important because every .NET language compiles down to IL.

The CLR doesn't care or need to know what the implementation language was; it knows only what the IL contains. Thus, any differences in .NET languages exist at the level where the IL is generated; but once generated, all .NET languages have the same runtime characteristics. Similarly, because the CLR doesn't care in which language a given module was originally written, it can leverage modules implemented in entirely different .NET languages.

A question that always arises when discussing the JIT compiler and the use of a runtime environment is "Wouldn't it be faster to compile the IL down to native code before the user asks to run it?" Although the answer is not always yes, Microsoft has provided a utility to handle this compilation: Ngen.exe. Ngen (short for native image generator) enables you to essentially run the JIT compiler on a specific assembly, whose native image is then installed into the machine's native image cache. The obvious advantage is that now when the user asks to execute something in that assembly, the JIT compiler is not invoked, saving a small amount of time. However, unlike the JIT compiler, which only compiles those portions of an assembly that are actually referenced, Ngen.exe needs to compile the entire codebase, so the time required for compilation is not the same as what a user actually experiences.

Ngen.exe is executed from the command line. The utility was updated as part of .NET 2.0 and now automatically detects and includes most of the dependent assemblies as part of the image-generation process. To use Ngen.exe, you reference the utility followed by an action and then your assembly reference; for example, ngen install MyApp.exe, where MyApp.exe stands in for your own assembly. Several options are available as part of the generation process, but that subject is beyond the scope of this chapter, given that Ngen.exe itself is a topic that generates heated debate regarding its use and value.

Where does the debate begin about when to use Ngen.exe? Keep in mind that in a server application, where the same assembly will be referenced by multiple users between machine restarts, the one-time JIT cost of the first request is spread across every later request, so precompiling gains very little. This means that compilation to native code is more valuable to client-side applications. Unfortunately, using Ngen.exe requires running it on each client machine, which can become cost prohibitive in certain installation scenarios, particularly if you use any form of self-updating application logic.

Another issue relates to using reflection, which enables you to reference other assemblies at runtime. Of course, if you don't know what assemblies you will reference until runtime, then the native image generator has a problem, as it won't know what to reference either. You may have occasion to use Ngen.exe for an application you've created, but you should fully investigate this utility and its advantages and disadvantages beforehand, keeping in mind that even native images execute within the CLR. Native image generation only changes the compilation model, not the runtime environment.

An assembly is the primary unit of deployment for .NET applications. It is either a dynamic link library (.dll) or an executable (.exe). An assembly is composed of a manifest, one or more modules, and (optionally) other files, such as .config, .ASPX, .ASMX, images, and so on.

The manifest of an assembly contains the following:

Information about the identity of the assembly, including its textual name and version number

If the assembly is public, then the manifest will contain the assembly's public key. The public key is used to help ensure that types exposed by the assembly reside within a unique namespace. It may also be used to uniquely identify the source of an assembly.

A declarative security request that describes the assembly's security requirements (the assembly is responsible for declaring the security it requires). Requests for permissions fall into three categories: required, optional, and denied. The identity information may be used as evidence by the CLR in determining whether or not to approve security requests.

A list of other assemblies on which the assembly depends. The CLR uses this information to locate an appropriate version of the required assemblies at runtime. The list of dependencies also includes the exact version number of each assembly at the time the assembly was created.

A list of all types and resources exposed by the assembly. If any of the resources exposed by the assembly are localized, the manifest will also contain the default culture (language, currency, date/time format, and so on) that the application will target. The CLR uses this information to locate specific resources and types within the assembly.

The manifest can be stored in a separate file or in one of the modules. By default, for most applications, it is part of the .dll or .exe file, which is compiled by Visual Studio. For Web applications, you will find that although there is a collection of ASPX pages, the actual assembly information is located in a DLL referenced by those ASPX pages.

The type system provides a template that is used to describe the encapsulation of data and an associated set of behaviors. It is this common template for describing data that provides the basis for the metadata that .NET uses when applications interoperate. There are two kinds of types: reference and value. The differences between these two types are discussed in Chapter 1.
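The practical difference between the two kinds of types shows up on assignment. The following sketch is illustrative only; the names PointValue, PointRef, and TypeKindsDemo are hypothetical. Assigning a Structure (value type) copies its data, while assigning a Class (reference type) copies only the reference, so both variables then point at the same object.

```vb
Imports System

Public Module TypeKindsDemo
    ' Value type: assignment copies the data itself.
    Public Structure PointValue
        Public X As Integer
    End Structure

    ' Reference type: assignment copies the reference, not the object.
    Public Class PointRef
        Public X As Integer
    End Class

    ' Modifies a copy and returns what the original variable now holds.
    Public Function ValueCopyResult() As Integer
        Dim a As New PointValue()
        a.X = 1
        Dim b As PointValue = a   ' b is an independent copy of a
        b.X = 2
        Return a.X                ' a is unaffected, so this is 1
    End Function

    Public Function ReferenceCopyResult() As Integer
        Dim a As New PointRef()
        a.X = 1
        Dim b As PointRef = a     ' b refers to the same object as a
        b.X = 2
        Return a.X                ' the shared object changed, so this is 2
    End Function

    Public Sub Main()
        Console.WriteLine("Value type: " & ValueCopyResult().ToString())          ' Value type: 1
        Console.WriteLine("Reference type: " & ReferenceCopyResult().ToString())  ' Reference type: 2
    End Sub
End Module
```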

Unlike COM, which is scoped at the machine level, types are scoped at either the global or the assembly level. All types are based on a common system that is used across all .NET languages. Just as the CLR compiles MSIL code for the current runtime environment, it uses a common metadata system to recognize the details of each type. The result is that all .NET languages are built around a common type system, unlike the different implementations of COM, which require special notation to allow translation of different data types between different .exe and .dll files.

A type has fields, properties, and methods:

Fields: Variables that are scoped to the type. For example, a Pet class could declare a field called Name that holds the pet's name. In a well-engineered class, fields are often kept private and exposed only as properties or methods.

Properties: These look like fields to clients of the type, but can have code behind them (which usually performs some sort of data validation). For example, a Dog data type could expose a property to set its gender. Code could then be placed behind the property so that it could be set only to "male" or "female," and then this property could be saved internally to one of the fields in the Dog class.

Methods: These define behaviors exhibited by the type. For example, the Dog data type could expose a method called Sleep, which would suspend the activity of the Dog.
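The three kinds of members can be sketched in a single Dog class. This is a minimal illustration, not a prescribed design: the private field names, the IsAsleep property, and the DogDemo module are hypothetical additions, and throwing ArgumentException is just one reasonable way to reject an invalid value.

```vb
Imports System

Public Class Dog
    ' Fields: private storage, exposed to clients only through members below.
    Private _gender As String = "male"
    Private _isAsleep As Boolean = False

    ' Property: looks like a field to callers, but validates the value.
    Public Property Gender() As String
        Get
            Return _gender
        End Get
        Set(ByVal value As String)
            If value <> "male" AndAlso value <> "female" Then
                Throw New ArgumentException("Gender must be ""male"" or ""female"".")
            End If
            _gender = value
        End Set
    End Property

    ' Hypothetical read-only property so callers can observe Sleep's effect.
    Public ReadOnly Property IsAsleep() As Boolean
        Get
            Return _isAsleep
        End Get
    End Property

    ' Method: a behavior of the type.
    Public Sub Sleep()
        _isAsleep = True
    End Sub
End Class

Public Module DogDemo
    Public Sub Main()
        Dim d As New Dog()
        d.Gender = "female"
        d.Sleep()
        Console.WriteLine(d.Gender & ", asleep: " & d.IsAsleep.ToString())  ' female, asleep: True
        Try
            d.Gender = "unknown"   ' rejected by the property's validation code
        Catch ex As ArgumentException
            Console.WriteLine("Rejected: " & ex.Message)
        End Try
    End Sub
End Module
```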

The preceding elements make up each application. Note that some types are defined at the application level and others globally. Under COM, all components are registered globally, and certainly if you want to expose a .NET component to COM, you must register it globally. However, with .NET it is not only possible but often encouraged that the classes and types defined in your modules be visible only at the application level. The advantage of this is that you can run several different versions of an application side by side. Of course, once you have an application that can be versioned, the next challenge is knowing which version of that application you have.

Components and their clients are often installed at different times by different vendors. For example, a Visual Basic application might rely on a third-party grid control to display data. Runtime support for versioning is crucial for ensuring that an incompatible version of the grid control does not cause problems for the Visual Basic application.

In addition to this issue of compatibility, deploying applications written in previous versions of Visual Basic was problematic. Fortunately, .NET provides major improvements over the versioning and deployment offered by COM and the previous versions of Visual Basic.

Managing component versions was challenging in previous versions of Visual Basic. The version number of the component could be set, but this version number was not used by the runtime. COM components are often referenced by their ProgID, but Visual Basic does not provide any support for appending the version number on the end of the ProgID.

For those of you who are unfamiliar with the term ProgID, it's enough to know that ProgIDs are developer-friendly strings used to identify a component. For example, Word.Application describes Microsoft Word. ProgIDs can be fully qualified with the targeted version of the component (for example, Word.Application.10), but this is a limited capability that works only if the developer of the calling application chooses to use this optional suffix. As you'll see in Chapter 7, a namespace is built on the basic elements of a ProgID, but provides a more robust naming system.

For many applications, .NET has removed the need to identify the version of each assembly in a central registry on a machine. However, some assemblies are installed once and used by multiple applications. .NET provides a global assembly cache (GAC), which is used to store assemblies that are intended for use by multiple applications. The CLR provides versioning support for all components loaded in the GAC.

The CLR provides two features for assemblies installed within the GAC:

Side-by-side versioning: Multiple versions of the same component can be simultaneously stored in the GAC.

Automatic Quick Fix Engineering (QFE): Also known as hotfix support. If a new version of a component that is still compatible with the old version is available in the GAC, the CLR loads the updated component. The version number, which is maintained by the developer who created the referenced assembly, drives this behavior.

The assembly's manifest contains the version numbers of referenced assemblies. The CLR uses the assembly's manifest at runtime to locate a compatible version of each referenced assembly. The version number of an assembly takes the following form:

Major.Minor.Build.Revision

Changes to the major and minor version numbers of the assembly indicate that the assembly is no longer compatible with the previous versions. The CLR will not use versions of the assembly that have a different major or minor number unless it is explicitly told to do so. For example, if an assembly was originally compiled against a referenced assembly with a version number of 3.4.1.9, then the CLR will not load an assembly stored in the GAC unless it has a major and minor number of 3 and 4.

Incrementing the revision and build numbers indicates that the new version is still compatible with the previous version. If a new assembly that has an incremented revision or build number is loaded into the GAC, then the CLR can still load this assembly for applications that were compiled referencing a previous version. Versioning is discussed in greater detail in Chapter 23.
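The four-part version number maps directly onto the System.Version class. The sketch below parses the 3.4.1.9 example from this section and applies the compatibility rule as stated here (major and minor must match). IsCompatible and VersionDemo are hypothetical names, and the helper only mirrors the rule described in the text; it is not the CLR's actual binding logic, which can also be influenced by configuration.

```vb
Imports System

Public Module VersionDemo
    ' Hypothetical helper encoding the rule described above: a candidate is
    ' treated as compatible only when Major and Minor match the referenced version.
    Public Function IsCompatible(ByVal referenced As Version, ByVal candidate As Version) As Boolean
        Return referenced.Major = candidate.Major AndAlso referenced.Minor = candidate.Minor
    End Function

    Public Sub Main()
        ' Major.Minor.Build.Revision
        Dim referenced As New Version("3.4.1.9")
        Console.WriteLine(String.Format("{0}.{1} build {2} revision {3}", _
            referenced.Major, referenced.Minor, referenced.Build, referenced.Revision))  ' 3.4 build 1 revision 9

        Console.WriteLine(IsCompatible(referenced, New Version("3.4.2.0")))  ' True: only build/revision differ
        Console.WriteLine(IsCompatible(referenced, New Version("3.5.0.0")))  ' False: minor number changed
    End Sub
End Module
```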

Applications written using previous versions of Visual Basic and COM were often complicated to deploy. Components referenced by the application needed to be installed and registered; and for Visual Basic components, the correct version of the Visual Basic runtime needed to be available. The Component Deployment tool helped in the creation of complex installation packages, but applications could be easily broken if the dependent components were inadvertently replaced by incompatible versions on the client's computer during the installation of an unrelated product.

In .NET, most components do not need to be registered. When an external assembly is referenced, the application decides between using a global copy (which must be in the GAC on the developer's system) or copying a component locally. For most references, the external assemblies are referenced locally, which means they are carried in the application's local directory structure. Using local copies of external assemblies enables the CLR to support the side-by-side execution of different versions of the same component. As noted earlier, to reference a globally registered assembly, that assembly must be located in the GAC. The GAC provides a versioning system that is robust enough to allow different versions of the same external assembly to exist side by side. For example, an application could use a newer version of ADO.NET without adversely affecting another application that relies on a previous version.

As long as the client has the .NET runtime installed (which only has to be done once), a .NET application can be distributed using a simple command like this:

```
xcopy \\server\appDirectory "C:\Program Files\appDirectory" /E /O /I
```

The preceding command would copy all of the files and subdirectories from \\server\appDirectory to C:\Program Files\appDirectory and would transfer the files' ownership and access control lists (ACLs).

Besides the capability to XCopy applications, Visual Studio provides a built-in tool for constructing simple .msi installations. The deployment settings can be customized for your project solution, enabling you to integrate the deployment project with your application output. Additionally, Visual Studio 2005 introduced the capability to create a ClickOnce deployment.

ClickOnce deployment provides an entirely new method of deployment, referred to as smart-client deployment. In the smart-client model, your application is placed on a central server from which the clients access the application files. Smart-client deployment builds on the XML Web services architecture. It combines the advantage of central application maintenance with the richer client interface and fewer server communication requirements of a Windows Forms application. ClickOnce deployment is discussed in greater detail in Chapter 24.

Prior to .NET, interoperating with code written in other languages was challenging. There were pretty much two options for reusing functionality developed in other languages: COM interfaces or DLLs with exported C functions. As for exposing functionality written in Visual Basic, the only option was to create COM interfaces.

Because Visual Basic is now built on top of the CLR, it's able to interoperate with the code written in other .NET languages. It's even able to derive from a class written in another language. To support this type of functionality, the CLR relies on a common way of representing types, as well as rich metadata that can describe these types.

Each programming language seems to bring its own island of data types with it. For example, previous versions of Visual Basic represented strings using the BSTR structure, C++ offers char and wchar_t data types, and MFC offers the CString class. Moreover, the fact that the C++ int data type is a 32-bit value, whereas the Visual Basic 6 Integer data type is a 16-bit value, makes it difficult to pass parameters between applications written using different languages.

To help resolve this problem, C has become the lowest common denominator for interfacing between programs written in multiple languages. An exported function written in C that exposes simple C data types can be consumed by Visual Basic, Java, Delphi, and a variety of other programming languages. In fact, the Windows API is exposed as a set of C functions.

Unfortunately, to access a C interface, you must explicitly map C data types to a language's native data types. For example, a Visual Basic 6 developer would use the following statement to map the GetUserNameA Win32 function (GetUserNameA is the ANSI version of the GetUserName function):

```vb
' Map GetUserName to the GetUserNameA exported function
' exported by advapi32.dll.
'   BOOL GetUserName(
'     LPTSTR  lpBuffer,  // name buffer
'     LPDWORD nSize      // size of name buffer
'   );
Public Declare Function GetUserName Lib "advapi32.dll" _
    Alias "GetUserNameA" (ByVal strBuffer As String, nSize As Long) As Long
```

This code explicitly maps the lpBuffer C character array data type to the Visual Basic 6 String parameter strBuffer. This is not only cumbersome, but also error prone. Accidentally mapping a variable declared as Long to lpBuffer wouldn't generate any compilation errors, but calling the function would more than likely result in a difficult-to-diagnose, intermittent access violation at runtime.

COM provides a more refined method of interoperation between languages. Visual Basic 6 introduced a common type system (CTS) for all applications that supported COM — that is, variant-compatible data types. However, variant data types are as cumbersome to work with for non-Visual Basic 6 developers as the underlying C data structures that make up the variant data types (such as BSTR and SAFEARRAY) were for Visual Basic developers. The result is that interfacing between unmanaged languages is still more complicated than it needs to be.

The CTS provides a set of common data types for use across all programming languages. The CTS provides every language running on top of the .NET platform with a base set of types, as well as mechanisms for extending those types. These types may be implemented as classes or as structs, but in either case they are derived from a common System.Object class definition.

Because every type supported by the CTS is derived from System.Object, every type supports a common set of methods: the public Equals, GetHashCode, GetType, and ToString methods, as well as the protected Finalize and MemberwiseClone methods.
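To see that a value type and a reference type share the same inherited methods, consider the following sketch. The Pet class and ObjectMethodsDemo module are hypothetical. Pet overrides ToString but keeps the default Equals, which for a class compares references, so two distinct Pet objects with the same name are not equal.

```vb
Imports System

Public Class Pet
    Public Name As String

    Public Sub New(ByVal name As String)
        Me.Name = name
    End Sub

    ' Overrides one of the methods every type inherits from System.Object.
    Public Overrides Function ToString() As String
        Return "Pet: " & Name
    End Function
End Class

Public Module ObjectMethodsDemo
    Public Sub Main()
        Dim n As Integer = 42        ' a value type
        Dim p As New Pet("Rex")      ' a reference type

        ' Both expose the same System.Object methods.
        Console.WriteLine(n.ToString())              ' 42
        Console.WriteLine(n.GetType().FullName)      ' System.Int32
        Console.WriteLine(n.Equals(42))              ' True
        Console.WriteLine(p.ToString())              ' Pet: Rex
        Console.WriteLine(p.GetType().Name)          ' Pet
        Console.WriteLine(p.Equals(New Pet("Rex")))  ' False: default Equals compares references
        Console.WriteLine(p.GetHashCode())           ' value varies from run to run
    End Sub
End Module
```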

Metadata is the information that enables components to be self-describing. Metadata is used to describe many aspects of a .NET component, including classes, methods, and fields, and the assembly itself. Metadata is used by the CLR to facilitate all sorts of behavior, such as validating an assembly before it is executed or performing garbage collection while managed code is being executed. Visual Basic developers have used metadata for years when developing and using components within their applications.

Visual Basic developers use metadata to instruct the Visual Basic runtime how to behave. For example, you can set the Unattended Execution property to determine whether unhandled exceptions are shown on the screen in a message box or are written to the Event Log.

COM components referenced within Visual Basic applications have accompanying type libraries that contain metadata about the components, their methods, and their properties. You can use the Object Browser to view this information. (The information contained within the type library is what is used to drive IntelliSense.)

Additional metadata can be associated with a component by installing it within COM+. Metadata stored in COM+ is used to declare the support a component needs at runtime, including transactional support, serialization support, and object pooling.

Metadata associated with a Visual Basic 6 component was scattered in multiple locations and stored using multiple formats:

Metadata instructing the Visual Basic runtime how to behave (such as the Unattended Execution property) is compiled into the Visual Basic-generated executable.

Basic COM attributes (such as the required threading model) are stored in the registry.

COM+ attributes (such as the transactional support required) are stored in the COM+ catalog.

.NET refines the use of metadata within applications in three significant ways:

.NET consolidates the metadata associated with a component.

Because a .NET component does not have to be registered, installing and upgrading the component is easier and less problematic.

.NET makes a much clearer distinction between attributes that should only be set at compile time and those that can be modified at runtime.

All attributes associated with Visual Basic components are represented in a common format and consolidated within the files that make up the assembly.

Because much of a COM/COM+ component's metadata is stored separately from the executable, installing and upgrading components can be problematic. COM/COM+ components must be registered to update the registry/COM+ catalog before they can be used, and the COM/COM+ component executable can be upgraded without upgrading its associated metadata.

The process of installing and upgrading a .NET component is greatly simplified. Because all metadata associated with a .NET component must reside within the file that contains the component, no registration is required. After a new component is copied into an application's directory, it can be used immediately. Because the component and its associated metadata cannot become out of sync, upgrading the component becomes much less of a problem.

Another problem with COM+ is that attributes that should only be set at compile time may be reconfigured at runtime. For example, COM+ can provide serialization support for neutral components. A component that does not require serialization must be designed to accommodate multiple requests from multiple clients simultaneously. You should know at compile time whether or not a component requires support for serialization from the runtime. However, under COM+, the attribute describing whether or not client requests should be serialized can be altered at runtime.

.NET makes a much better distinction between attributes that should be set at compile time and those that should be set at runtime. For example, whether a .NET component is serializable is determined at compile time. This setting cannot be overridden at runtime.

Attributes are used to decorate entities such as assemblies, classes, methods, and properties with additional information. Attributes can be used for a variety of purposes. They can provide information, request a certain behavior at runtime, or even invoke a particular behavior from another application. An example of this can be shown by using the Demo class defined in the following code block:

```vb
Module Module1

    <Serializable()> Public Class Demo

        <Obsolete("Use Method2 instead.")> Public Sub Method1()
            ' Old implementation ...
        End Sub

        Public Sub Method2()
            ' New implementation ...
        End Sub

    End Class

    Public Sub Main()
        Dim d As Demo = New Demo()
        d.Method1()   ' flagged with an Obsolete warning
    End Sub

End Module
```

Create a new console application for Visual Basic and then add a new class into the sample file. A best practice is to place each class in its own source file, but in order to simplify this demonstration, the class Demo has been defined within the main module.

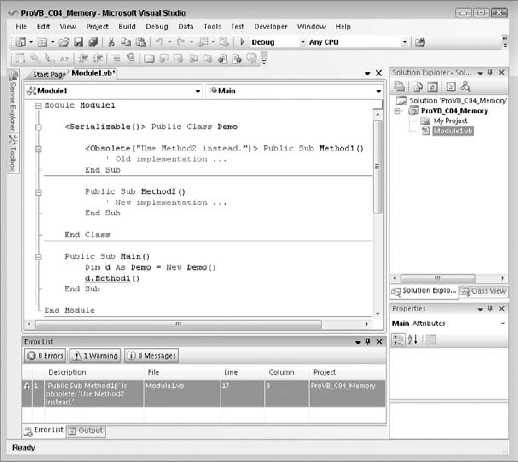

The first attribute on the Demo class marks the class with the Serializable attribute. The base class library will provide serialization support for instances of the Demo type. For example, the ResourceWriter type can be used to stream an instance of the Demo type to disk. The second attribute is associated with Method1. Method1 has been marked as obsolete, but it is still available. When a method is marked as obsolete, there are two options: Visual Studio can flag each use of it with a warning, or it can prevent the application from compiling. A better strategy for large applications is to first mark a method or class as obsolete and then prevent its use in the next release. The preceding code causes Visual Studio to display an IntelliSense warning if Method1 is referenced within the application, as shown in Figure 4-1. Not only does the line with Method1 have a visual hint of the issue, but a task has also been automatically added to the task window.

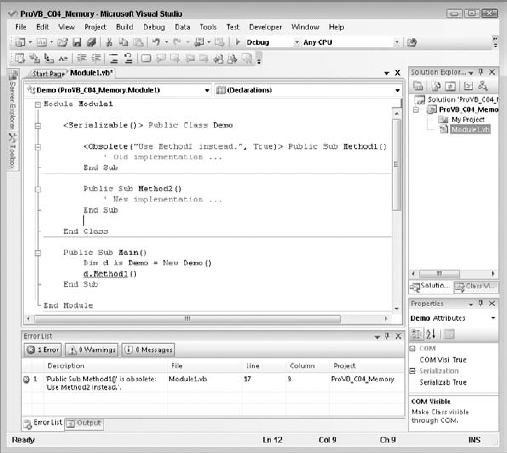

If the developer leaves this code unchanged and then compiles it, the application will compile correctly. As shown in Figure 4-2, the compilation is complete, but the developer receives a warning with a meaningful message that the code should be changed to use the correct method.

Sometimes you might need to associate multiple attributes with an entity. The following code shows an example of using both of the attributes from the previous code at the class level. Note that in this case the Obsolete attribute has been modified to cause a compilation error by setting its second parameter to True:

```vb
<Serializable(), Obsolete("No longer used.", True)> Public Class Demo
    ' Implementation ...
End Class
```

Attributes play an important role in the development of .NET applications, particularly XML Web services. As you'll see in Chapter 28, declaring a class as a Web service and particular methods as Web methods is handled entirely through attributes.
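Because attributes are stored as metadata, they can also be read back at runtime. The following sketch reuses the Demo class from the first attribute example and inspects it with Type.IsSerializable and Attribute.GetCustomAttribute. The ObsoleteMessage helper and AttributeDemo module are hypothetical names introduced only for this illustration.

```vb
Imports System
Imports System.Reflection

<Serializable()> Public Class Demo
    <Obsolete("Use Method2 instead.")> Public Sub Method1()
    End Sub

    Public Sub Method2()
    End Sub
End Class

Public Module AttributeDemo
    ' Hypothetical helper: returns the Obsolete message on the named method,
    ' or Nothing if the method carries no Obsolete attribute.
    Public Function ObsoleteMessage(ByVal t As Type, ByVal methodName As String) As String
        Dim method As MethodInfo = t.GetMethod(methodName)
        Dim attr As ObsoleteAttribute = _
            CType(Attribute.GetCustomAttribute(method, GetType(ObsoleteAttribute)), ObsoleteAttribute)
        If attr Is Nothing Then Return Nothing
        Return attr.Message
    End Function

    Public Sub Main()
        ' The Serializable attribute surfaces through Type.IsSerializable.
        Console.WriteLine(GetType(Demo).IsSerializable)                          ' True
        Console.WriteLine(ObsoleteMessage(GetType(Demo), "Method1"))             ' Use Method2 instead.
        Console.WriteLine(ObsoleteMessage(GetType(Demo), "Method2") Is Nothing)  ' True
    End Sub
End Module
```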

The .NET Framework provides the Reflection API for accessing metadata associated with managed code. You can use the Reflection API to examine the metadata associated with an assembly and its types, and even to examine the currently executing assembly.

The Assembly class in the System.Reflection namespace can be used to access the metadata in an assembly. The LoadFrom method can be used to load an assembly, and the GetExecutingAssembly method can be used to access the currently executing assembly. The GetTypes method can then be used to obtain the collection of types defined in the assembly.
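A minimal sketch of that workflow for the currently executing assembly follows. Sample, TypeNames, and AssemblyDemo are hypothetical names. Assembly.LoadFrom(path) could be used in place of GetExecutingAssembly to inspect an assembly on disk. The exact list printed depends on how the project is compiled (for example, its root namespace and any compiler-generated types), so no output is shown.

```vb
Imports System
Imports System.Reflection

Public Class Sample
End Class

Public Module AssemblyDemo
    ' Returns the full names of all types defined in the executing assembly.
    Public Function TypeNames() As String()
        Dim asm As Assembly = Assembly.GetExecutingAssembly()
        Dim types As Type() = asm.GetTypes()
        Dim names(types.Length - 1) As String
        For i As Integer = 0 To types.Length - 1
            names(i) = types(i).FullName
        Next
        Return names
    End Function

    Public Sub Main()
        Console.WriteLine("Assembly: " & Assembly.GetExecutingAssembly().GetName().Name)
        For Each name As String In TypeNames()
            Console.WriteLine("  " & name)
        Next
    End Sub
End Module
```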

It's also possible to access the metadata of a type directly from an instance of that type. Because every object derives from System.Object, every object supports the GetType method, which returns a Type object that can be used to access the metadata associated with the type.

The Type object exposes many methods and properties for obtaining the metadata associated with a type. For example, you can obtain a collection of properties, methods, fields, and events exposed by the type by calling the GetMembers method. The Type object for the type's base type can also be obtained by reading the BaseType property. (The DeclaringType property, by contrast, returns the enclosing type of a nested type.)
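Starting from an instance, GetType leads to the Type object, and from there to its members and base type. In this sketch, Animal, Dog, BaseTypeName, and TypeDemo are hypothetical. The BindingFlags combination limits GetMembers to public instance members declared directly on Dog, which includes its constructor as well as Sleep; the order of the returned members is not guaranteed.

```vb
Imports System
Imports System.Reflection

Public Class Animal
    Public Name As String
End Class

Public Class Dog
    Inherits Animal
    Public Sub Sleep()
    End Sub
End Class

Public Module TypeDemo
    ' Returns the name of the base type of any object's runtime type.
    Public Function BaseTypeName(ByVal obj As Object) As String
        Return obj.GetType().BaseType.Name
    End Function

    Public Sub Main()
        Dim d As New Dog()
        Dim t As Type = d.GetType()                 ' metadata obtained from an instance
        Console.WriteLine(t.Name)                   ' Dog
        Console.WriteLine(BaseTypeName(d))          ' Animal

        ' Public instance members declared on Dog itself (inherited ones excluded).
        Dim flags As BindingFlags = BindingFlags.Public Or BindingFlags.Instance Or BindingFlags.DeclaredOnly
        For Each m As MemberInfo In t.GetMembers(flags)
            Console.WriteLine(m.MemberType.ToString() & ": " & m.Name)
        Next
    End Sub
End Module
```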

A good tool that demonstrates the power of reflection is Lutz Roeder's Reflector for .NET (see www.aisto.com/roeder/dotnet). In addition to the core tool, you can find several add-ins related to the tool at www.codeplex.com/reflectoraddins.

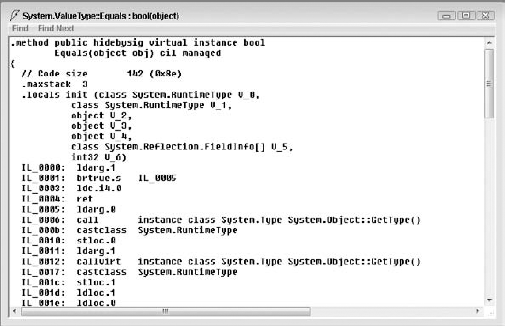

One of the many handy tools that ships with Visual Studio is the IL Disassembler (ildasm.exe). It can be used to navigate the metadata within a module, including the types the module exposes, as well as their properties and methods. The IL Disassembler can also be used to display the IL contained within a module.

You can find the IL Disassembler under your installation directory for Visual Studio 2008; the default path is C:\Program Files\Microsoft SDKs\Windows\v6.0A\Bin\ILDasm.exe. Once the IL Disassembler has been started, select File > Open and choose the module you want to examine.

Figure 4-3 shows the IL for the Equals method. Notice how the Reflection API is used to navigate through the instance of the value type's fields in order to determine whether the values of the two objects being compared are equal.

The IL Disassembler is a useful tool for learning how a particular module is implemented, but it could jeopardize your company's proprietary logic. After all, what's to prevent someone from using it to reverse engineer your code? Fortunately, Visual Studio 2008, like previous versions of Visual Studio, ships with a third-party tool called an obfuscator. The role of the obfuscator is to make it much harder for the IL Disassembler to build a meaningful representation of your application logic.

A complete discussion of the obfuscator that ships with Visual Studio 2008 is beyond the scope of this chapter, but to access this tool, select the Tools menu and choose Dotfuscator Community Edition. The obfuscator runs against your compiled application, taking your IL file and stripping out many of the items that are embedded by default during the compilation process.

This section looks at one of the larger underlying elements of managed code. One of the reasons why .NET applications are referred to as "managed" is that memory deallocation is handled automatically by the system. The CLR's memory management fixes the shortcomings of COM's memory management. Developers are accustomed to worrying about memory management only in an abstract sense. The basic rule has been that every object created and every section of memory allocated needs to be released (destroyed). The CLR introduces a garbage collector (GC), which simplifies this paradigm. Gone are the days when a misbehaving component, for example one that failed to properly dispose of its object references or that allocated memory and never released it, could crash a web server.

However, the use of a GC introduces new questions about when and if objects need to be explicitly cleaned up. There are two elements in manually writing code to allocate and deallocate memory and system resources. The first is the release of any shared resources such as file handles and database connections. This type of activity needs to be managed explicitly and is discussed shortly. The second element of manual memory management involves letting the system know when memory is no longer in use by your application. Visual Basic COM developers, in particular, are accustomed to explicitly disposing of object references by setting variables to Nothing. While you can explicitly show your intent to destroy the object by setting it to Nothing manually, this doesn't actually free resources under .NET.

.NET uses a GC to automatically manage the cleanup of allocated memory, which means that you don't need to carry out memory management as an explicit action. Because the system is automatic, it's not up to you when resources are actually cleaned up; thus, a resource you previously used might sit in memory beyond the end of the method where you used it. Perhaps more important is the fact that the GC will at times reclaim objects in the middle of executing the code in a method. Fortunately, the system ensures that such a collection happens only if your code doesn't reference the object later in the method.

For example, you could actually end up extending the amount of time an object is kept in memory just by setting that object to Nothing. Thus, setting a variable to Nothing at the end of the method prevents the garbage collection mechanism from proactively reclaiming objects, and therefore is generally discouraged. After all, if the goal is simply to document a developer's intention, then a comment is more appropriate.

Given this change in paradigms, the next few sections look at the challenges of traditional memory management and peek under the covers to reveal how the garbage collector works, the basics of some of the challenges with COM-based memory management, and then a quick look at how the GC eliminates these challenges from your list of concerns. In particular, you should understand how you can interact with the garbage collector and why the Using command, for example, is recommended over a finalization method in .NET.

The unmanaged (COM/Visual Basic 6) runtime environment provides limited memory management by automatically releasing objects when they are no longer referenced by any application. Once all the references to an object are released, the runtime automatically releases the object from memory. For example, consider the following Visual Basic 6 code, which uses the Scripting.FileSystemObject class to write an entry to a log file:

```vb
' Requires a reference to Microsoft Scripting Runtime (scrrun.dll)
Sub WriteToLog(strLogEntry As String)
    Dim objFSO As Scripting.FileSystemObject
    Dim objTS As Scripting.TextStream

    ' Object variables must be assigned with Set; create the FSO first.
    Set objFSO = New Scripting.FileSystemObject
    ' True = create the log file if it does not exist yet.
    Set objTS = objFSO.OpenTextFile("C:\temp\AppLog.log", ForAppending, True)

    Call objTS.WriteLine(Date & vbTab & strLogEntry)
End Sub
```

WriteToLog creates two objects, a FileSystemObject and a TextStream, which are used to create an entry in the log file. Because these are COM objects, they may live either within the current application process or in their own process. Once the routine exits, the Visual Basic runtime recognizes that they are no longer referenced by an active application and dereferences the objects. This results in both objects being deactivated. However, in some situations objects that are no longer referenced by an application are not properly cleaned up by the Visual Basic 6 runtime. One cause of this is the circular reference.

One of the most common situations in which the unmanaged runtime is unable to ensure that objects are no longer referenced by the application is when these objects contain a circular reference. An example of a circular reference is when object A holds a reference to object B and object B holds a reference to object A.

Circular references are problematic because the unmanaged environment relies on the reference counting mechanism of COM to determine whether an object can be deactivated. Each COM object is responsible for maintaining its own reference count and for destroying itself once the reference count reaches zero. Clients of the object are responsible for updating the reference count appropriately, by calling the AddRef and Release methods on the object's IUnknown interface. However, in this scenario, object A continues to hold a reference to object B, and vice versa, so the internal cleanup logic of these components is not triggered.

In addition, problems can occur if the clients do not properly maintain the COM object's reference count. For example, an object will never be deactivated if a client forgets to call Release when the object is no longer referenced. To avoid this, the unmanaged environment may attempt to take care of updating the reference count for you, but the object's reference count can be an invalid indicator of whether or not the object is still being used by the application. For example, consider the references that objects A and B hold.

The application can invalidate its references to A and B by setting the associated variables equal to Nothing. However, even though objects A and B are no longer referenced by the application, the Visual Basic runtime cannot ensure that the objects are deactivated because A and B still reference each other. Consider the following (Visual Basic 6) code:

```vb
' Class: CCircularRef

' Reference to another object.
Dim m_objRef As Object

Public Sub Initialize(objRef As Object)
    Set m_objRef = objRef
End Sub

Private Sub Class_Terminate()
    Call MsgBox("Terminating.")
    Set m_objRef = Nothing
End Sub
```

The CCircularRef class implements an Initialize method that accepts a reference to another object and saves it as a member variable. Notice that the class does not release any existing reference in the m_objRef variable before assigning a new value. The following code demonstrates how to use this CCircularRef class to create a circular reference:

```vb
Dim objA As New CCircularRef
Dim objB As New CCircularRef

Call objA.Initialize(objB)
Call objB.Initialize(objA)

Set objA = Nothing
Set objB = Nothing
```

After creating two instances (objA and objB) of CCircularRef, both of which have a reference count of one, the code calls the Initialize method on each object, passing it a reference to the other. Now each object's reference count is equal to two: one held by the application and one held by the other object. Next, explicitly setting objA and objB to Nothing decrements each object's reference count by one. However, because the reference count for both instances of CCircularRef is still greater than zero, the objects are not released from memory until the application is terminated. The CLR garbage collector solves the problem of circular references because it looks for a chain of references from the application's roots (such as its threads) to every object; all objects that have no such chain are marked for deletion, regardless of any other references they might still maintain.

The .NET garbage collection mechanism is complex, and the details of its inner workings are beyond the scope of this book, but it is important to understand the principles behind its operation. The GC is responsible for collecting objects that are no longer referenced. It takes a completely different approach from that of the Visual Basic runtime to accomplish this. At certain times, and based on internal rules, a task will run through all the objects looking for those that no longer have any references from the root application thread or one of the worker threads. Those objects may then be terminated; thus, the garbage is collected.

As long as all references to an object are either implicitly or explicitly released by the application, the GC will take care of freeing the memory allocated to it. Unlike COM objects, managed objects in .NET are not responsible for maintaining their reference count, and they are not responsible for destroying themselves. Instead, the GC is responsible for cleaning up objects that are no longer referenced by the application. The GC periodically determines which objects need to be cleaned up by leveraging the information the CLR maintains about the running application. The GC obtains a list of objects that are directly referenced by the application. Then, the GC discovers all the objects that are referenced (both directly and indirectly) by the "root" objects of the application. Once the GC has identified all the referenced objects, it is free to clean up any remaining objects.
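The mark phase described above can be modeled as a simple graph traversal. The following sketch is a deliberately simplified, hypothetical model: HeapNode, FindReachable, and FindGarbage are illustrative names, not CLR APIs, and the real collector walks actual heap memory with its own internal bookkeeping. It shows why a cycle of objects that no root can reach still ends up as garbage.

```vb
Imports System
Imports System.Collections.Generic

' Hypothetical stand-in for an object on the heap and the references it holds.
Public Class HeapNode
    Public ReadOnly Name As String
    Public ReadOnly References As New List(Of HeapNode)()

    Public Sub New(ByVal name As String)
        Me.Name = name
    End Sub
End Class

Public Module MarkDemo

    ' Starting from the roots, follow every reference and mark each node reached.
    Public Function FindReachable(ByVal roots As IEnumerable(Of HeapNode)) As HashSet(Of HeapNode)
        Dim marked As New HashSet(Of HeapNode)()
        Dim pending As New Stack(Of HeapNode)(roots)
        Do While pending.Count > 0
            Dim node As HeapNode = pending.Pop()
            If marked.Add(node) Then            ' False if the node was already marked
                For Each child As HeapNode In node.References
                    pending.Push(child)
                Next
            End If
        Loop
        Return marked
    End Function

    ' Every node on the "heap" that was not marked is garbage.
    Public Function FindGarbage(ByVal heap As IEnumerable(Of HeapNode), _
                                ByVal roots As IEnumerable(Of HeapNode)) As List(Of String)
        Dim reachable As HashSet(Of HeapNode) = FindReachable(roots)
        Dim garbage As New List(Of String)()
        For Each node As HeapNode In heap
            If Not reachable.Contains(node) Then garbage.Add(node.Name)
        Next
        Return garbage
    End Function

    Sub Main()
        Dim a As New HeapNode("A")
        Dim b As New HeapNode("B")
        Dim c As New HeapNode("C")
        a.References.Add(b)   ' A -> B
        b.References.Add(a)   ' B -> A: a circular reference
        Dim heap As HeapNode() = {a, b, c}
        Dim roots As HeapNode() = {c}   ' only C is referenced by the application
        Console.WriteLine(String.Join(", ", FindGarbage(heap, roots).ToArray()))   ' prints: A, B
    End Sub

End Module
```

Reference counts would never reach zero for A and B here, but because neither is reachable from a root, the traversal never marks them.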

The GC relies on references from an application to objects; thus, when it locates an object that is unreachable from any of the root objects, it can clean up that object. Any other references to that object will be from other objects that are also unreachable. Thus, the GC automatically cleans up objects that contain circular references.
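You can observe this behavior with real objects. In the sketch below, the Node class and the helper names are hypothetical. Two objects reference each other, and the program keeps only WeakReference objects to them, which do not prevent collection. The cycle is built in a separate, non-inlined method so that no local variable in the caller keeps it reachable. Because collection timing is up to the runtime, treat the result as typical rather than guaranteed; in a normal run, the cycle is collected and IsAlive returns False.

```vb
Imports System
Imports System.Runtime.CompilerServices

Module CircularDemo

    ' Hypothetical class: each instance can hold a reference to another instance.
    Public Class Node
        Public Other As Node
    End Class

    ' Builds A <-> B and returns only weak references, so once this method
    ' returns, nothing reachable from the application's roots points at A or B.
    <MethodImpl(MethodImplOptions.NoInlining)> _
    Private Function CreateCycle() As WeakReference()
        Dim a As New Node()
        Dim b As New Node()
        a.Other = b
        b.Other = a
        Return New WeakReference() {New WeakReference(a), New WeakReference(b)}
    End Function

    Public Function CycleSurvivesCollection() As Boolean
        Dim refs As WeakReference() = CreateCycle()
        GC.Collect()
        GC.WaitForPendingFinalizers()
        GC.Collect()
        Return refs(0).IsAlive OrElse refs(1).IsAlive
    End Function

    Sub Main()
        Console.WriteLine("Cycle still alive after collection: " & _
                          CycleSurvivesCollection().ToString())
    End Sub

End Module
```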

In some environments, such as COM, objects are destroyed in a deterministic fashion. Once the reference count reaches zero, the object destroys itself, which means that you can tell exactly when the object will be terminated. However, with garbage collection, you can't tell exactly when an object will be destroyed. Just because you eliminate all references to an object doesn't mean that it will be terminated immediately. It just remains in memory until the garbage collection process gets around to locating and destroying it, a process called nondeterministic finalization.

This nondeterministic nature of CLR garbage collection provides a performance benefit. Rather than expend the effort to destroy objects as they are dereferenced, the destruction process can occur when the application is otherwise idle, often decreasing the impact on the user. Of course, if garbage collection must occur when the application is active, then the system may see a slight performance fluctuation as the collection is accomplished.

It is possible to explicitly invoke the GC by calling the System.GC.Collect method, but this process takes time, so it is not the sort of behavior to invoke in a typical application. For example, you could call this method each time you set an object variable to Nothing, so that the object would be destroyed almost immediately, but this forces the GC to scan all the objects in your application — a very expensive operation in terms of performance.

It's far better to design applications such that it is acceptable for unused objects to sit in the memory for some time before they are terminated. That way, the garbage collector can also run based on its optimal rules, collecting many dereferenced objects at the same time. This means you need to design objects that don't maintain expensive resources in instance variables. For example, database connections, open files on disk, and large chunks of memory (such as an image) are all examples of expensive resources. If you rely on the destruction of the object to release this type of resource, then the system might be keeping the resource tied up for a lot longer than you expect; in fact, on a lightly utilized web server, it could literally be days.

The first principle is working with object patterns that incorporate cleaning up such pending references before the object is released. Examples of this include calling the Close method on an open database connection or file handle. In most cases, it's possible for applications to create classes that do not risk keeping these handles open. However, certain requirements, even with the best object design, can create a risk that a key resource will not be cleaned up correctly. In such an event, there are two occasions when the object could attempt to perform this cleanup: when the final reference to the object is released and immediately before the GC destroys the object.

One option is to implement the IDisposable interface, which gives clients a standard Dispose method to call so that persistent resources are released as soon as they are no longer needed. This is the preferred method for releasing resources. The second option is to add a method to your class that the system runs immediately before an object is destroyed. This option is not recommended for several reasons, including the fact that many developers forget that the garbage collector is nondeterministic, meaning that you can't, for example, reference a SqlConnection object from your custom object's finalizer.

Finally, as part of .NET 2.0, Visual Basic introduced the Using command. The Using command is designed to change the way that you think about object cleanup. Instead of encapsulating your cleanup logic within your object, the Using command creates a window around the code that is referencing an instance of your object. When your application's execution reaches the end of this window, the system automatically calls the Dispose method of your object's IDisposable implementation to ensure that it is cleaned up correctly.

Conceptually, the GC calls an object's Finalize method immediately before it collects an object that is no longer referenced by the application. Classes can override the Finalize method to perform any necessary cleanup. The basic concept is to create a method that fills the same need as what other object-oriented languages call a destructor. Note, however, that the Class_Terminate event available in previous versions of Visual Basic has no functional equivalent in .NET. Instead, you can create a Finalize method that is recognized by the GC and that prevents an object from being cleaned up until after the finalization method has completed, as shown in the following example:

```vb
Protected Overrides Sub Finalize()
    ' clean up code goes here
    MyBase.Finalize()
End Sub
```

This code uses both Protected scope and the Overrides keyword. Notice that not only does custom cleanup code go here (as indicated by the comment), but this method also calls MyBase.Finalize, which causes any finalization logic in the base class to be executed as well. Any class implementing a custom Finalize method should always call the base class's Finalize method.

Be careful, however, not to treat the Finalize method as if it were a destructor. A destructor is based on a deterministic system, whereby the method is called when the object's last reference is removed. In the GC system, there are key differences in how a finalizer works:

- Because the GC is optimized to clean up memory only when necessary, there is a delay between the time when the object is no longer referenced by the application and when the GC collects it. Therefore, the same expensive resources that are released in the Finalize method may stay open longer than they need to be.
- The GC doesn't actually run Finalize methods. When the GC finds a Finalize method, it queues the object for the finalizer thread, which executes the object's method. This means that the object is not cleaned up during the current GC pass. Because of how the GC is optimized, this can result in the object remaining in memory for a much longer period.
- The GC is usually triggered when available memory is running low. As a result, execution of the object's Finalize method is likely to incur performance penalties. Therefore, the code in the Finalize method should be as short and quick as possible.
- There's no guarantee that a service you require is still available. For example, if the system is closing and you have a file open, then .NET may have already unloaded the object required to close the file. Thus, a Finalize method can't safely reference an instance of any other .NET object.
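The following sketch illustrates the second point: a finalizer does not run when the last reference disappears, but only after a collection has queued the object and the finalizer thread has processed it. The Finalizable class, its shared counter, and the helper names are hypothetical and exist only for this demonstration. The finalizer itself is kept short and calls MyBase.Finalize, as recommended above. Exact timing is up to the runtime, so the "before" count is typical rather than guaranteed.

```vb
Imports System
Imports System.Runtime.CompilerServices
Imports System.Threading

Module FinalizerDemo

    ' Hypothetical class whose finalizer only counts how many times it has run.
    Public Class Finalizable
        Public Shared FinalizedCount As Integer = 0

        Protected Overrides Sub Finalize()
            Try
                Interlocked.Increment(FinalizedCount)   ' keep finalizer work minimal
            Finally
                MyBase.Finalize()
            End Try
        End Sub
    End Class

    ' Creates an object whose only reference goes away when this method returns.
    <MethodImpl(MethodImplOptions.NoInlining)> _
    Private Sub CreateAndDrop()
        Dim temp As New Finalizable()
    End Sub

    ' Returns {count before collection, count after collection}.
    Public Function RunDemo() As Integer()
        CreateAndDrop()
        Dim before As Integer = Finalizable.FinalizedCount   ' typically no finalizer has run yet
        GC.Collect()                    ' object found unreachable; its finalizer is queued
        GC.WaitForPendingFinalizers()   ' wait until the finalizer thread drains the queue
        Dim after As Integer = Finalizable.FinalizedCount
        Return New Integer() {before, after}
    End Function

    Sub Main()
        Dim counts As Integer() = RunDemo()
        Console.WriteLine("Finalizers run before GC.Collect: " & counts(0).ToString())
        Console.WriteLine("Finalizers run after GC.Collect: " & counts(1).ToString())
    End Sub

End Module
```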

All cleanup activities should be placed in the Finalize method, but objects that require timely cleanup should implement a Dispose method that can then be called by the client application just before setting the reference to Nothing:

```vb
Class DemoDispose
    Private m_disposed As Boolean = False

    Public Sub Dispose()
        If (Not m_disposed) Then
            ' Call cleanup code in Finalize.
            Finalize()
            ' Record that object has been disposed.
            m_disposed = True
            ' Finalize does not need to be called.
            GC.SuppressFinalize(Me)
        End If
    End Sub

    Protected Overrides Sub Finalize()
        ' Perform cleanup here...
        MyBase.Finalize()
    End Sub
End Class
```

The DemoDispose class overrides the Finalize method and implements the code to perform any necessary cleanup. This class places the actual cleanup code within the Finalize method. To ensure that the Dispose method only calls Finalize once, the value of the private m_disposed field is checked. Once Finalize has been run, this value is set to True. The class then calls GC.SuppressFinalize to ensure that the GC does not call the Finalize method on this object when the object is collected. If you need to implement a Finalize method, this is the preferred implementation pattern.

This example implements all of the object's cleanup code in the Finalize method to ensure that the object is cleaned up properly before the GC collects it. The Finalize method still serves as a safety net in case the Dispose or Close methods were not called before the GC collects the object.

In some cases, the Finalize behavior is not acceptable. For an object that is using an expensive or limited resource, such as a database connection, a file handle, or a system lock, it is best to ensure that the resource is freed as soon as the object is no longer needed.

One way to accomplish this is to implement a method to be called by the client code to force the object to clean up and release its resources. This is not a perfect solution, but it is workable. This cleanup method must be called directly by the code using the object or via the Using statement. The Using statement enables you to encapsulate an object's life span within a limited range and automates the call to the object's IDisposable.Dispose method.

The .NET Framework provides the IDisposable interface to formalize the declaration of cleanup logic. Be aware that, in the pattern described here, implementing the IDisposable interface goes hand in hand with overriding the Finalize method. Because there is no guarantee that the Dispose method will be called, it is critical that Finalize triggers your cleanup code if it was not already executed.

Having a custom finalizer ensures that, once released, the garbage collection mechanism will eventually find and terminate the object by running its Finalize method. However, when handled correctly, the IDisposable interface ensures that any cleanup is executed immediately, so resources are not consumed beyond the time they are needed.

Note that any class that derives from System.ComponentModel.Component automatically inherits the IDisposable interface. This includes all of the forms and controls used in a Windows Forms UI, as well as various other classes within the .NET Framework. With that in mind, let's review a custom implementation of the IDisposable interface based on the Person class defined in the preceding chapters. The first step is to add an Implements statement to the top of the class:

```vb
Public Class Person
    Implements IDisposable
```

IDisposable defines a single method, Dispose. When you add the Implements statement, Visual Studio automatically inserts a Dispose implementation into your code, along with an override of the Finalize method inherited from System.Object:

```vb
Private disposed As Boolean = False

' IDisposable
Private Overloads Sub Dispose(ByVal disposing As Boolean)
    If Not Me.disposed Then
        If disposing Then
            ' TODO: put code to dispose managed resources
        End If
        ' TODO: put code to free unmanaged resources here
    End If
    Me.disposed = True
End Sub

#Region " IDisposable Support "
' This code added by Visual Basic to correctly implement the disposable pattern.
Public Overloads Sub Dispose() Implements IDisposable.Dispose
    ' Do not change this code.
    ' Put cleanup code in Dispose(ByVal disposing As Boolean) above.
    Dispose(True)
    GC.SuppressFinalize(Me)
End Sub

Protected Overrides Sub Finalize()
    ' Do not change this code.
    ' Put cleanup code in Dispose(ByVal disposing As Boolean) above.
    Dispose(False)
    MyBase.Finalize()
End Sub
#End Region
```

Notice the use of the Overloads and Overrides keywords. The automatically inserted code follows a best-practice design pattern for implementing the IDisposable interface and the Finalize method. The idea is to centralize all cleanup code into a single method that is called by either the Dispose method or the Finalize method as appropriate.

Accordingly, you can add the cleanup code as noted by the TODO: comments in the inserted code. As mentioned in Chapter 13, the TODO: keyword is recognized by Visual Studio's text parser, which triggers an entry in the task list to remind you to complete this code before the project is complete. Because this code frees a managed object (the Hashtable), it appears as shown here:

```vb
Private Overloads Sub Dispose(ByVal disposing As Boolean)
    If Not Me.disposed Then
        If disposing Then
            ' TODO: put code to dispose managed resources
            mPhones = Nothing
        End If
        ' TODO: put code to free unmanaged resources here
    End If
    Me.disposed = True
End Sub
```

In this case, we're using this method to release a reference to the object to which the mPhones variable points. While not strictly necessary, this illustrates how code can release other objects when the Dispose method is called. Generally, it is up to your client code to call this method at the appropriate time to ensure that cleanup occurs. Typically, this should be done as soon as the code is done using the object.

This is not always as easy as it might sound. In particular, an object may be referenced by more than one variable, and just because code in one class is dereferencing the object from one variable doesn't mean that it has been dereferenced by all the other variables. If the Dispose method is called while other references remain, then the object may become unusable and cause errors when invoked via those other references. There is no easy solution to this problem, so careful design is required if you choose to use the IDisposable interface.

One way to work with the IDisposable interface is to manually insert the calls to the interface implementation everywhere you reference the class. For example, in an application's Form1 code, you can override the form's OnLoad method and use that override to create an instance of the Person object. Then you create a handler for the form's Closed event and make sure to clean up by disposing of the Person object. To do this, add the following code to the form:

```vb
Private Sub Form1_Closed(ByVal sender As Object, _
        ByVal e As System.EventArgs) Handles MyBase.Closed
    CType(mPerson, IDisposable).Dispose()
End Sub
```

The Form1_Closed handler runs when the form is closed, so it is an appropriate place to do cleanup work. Note that because the Dispose method is part of a secondary interface, the CType function is used to access that specific interface in order to call the method.

This solution works fine for patterns where the object implementing IDisposable is used within a form, but it is less useful for other patterns, such as when the object is used as part of a Web service. In fact, even for forms, this pattern is somewhat limited in that it requires the form to define the object when the form is created, as opposed to either having the object created prior to the creation of the form or some other scenario that occurs only on other events within the form.

For these situations, .NET 2.0 introduced a new command keyword: Using. The Using keyword is a way to quickly encapsulate the life cycle of an object that implements IDisposable, and ensure that the Dispose method is called correctly:

```vb
Dim mPerson As New Person()
Using mPerson
    ' insert custom method calls
End Using
```

The preceding statements create a new Person instance and assign it to mPerson. The Using command then instructs the compiler to call this object's Dispose method automatically when execution leaves the Using block, whether it reaches End Using normally or exits because of an exception. The result is a much cleaner way to ensure that the IDisposable interface is called.
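A Using block behaves roughly like a Try...Finally block whose Finally clause calls Dispose. The sketch below is built around a hypothetical TrackedResource class that merely records whether Dispose ran. It shows both forms and demonstrates that Dispose is still called when an exception leaves the Using block.

```vb
Imports System

Module UsingDemo

    ' Hypothetical resource class that only records whether Dispose has run.
    Public Class TrackedResource
        Implements IDisposable

        Private m_disposed As Boolean = False

        Public ReadOnly Property IsDisposed() As Boolean
            Get
                Return m_disposed
            End Get
        End Property

        Public Sub Dispose() Implements IDisposable.Dispose
            m_disposed = True
        End Sub
    End Class

    ' Using form: Dispose runs when execution leaves the block, even on an exception.
    Public Function DisposedWithUsing() As Boolean
        Dim res As New TrackedResource()
        Try
            Using res
                Throw New InvalidOperationException("simulated failure")
            End Using
        Catch ex As InvalidOperationException
            ' The exception propagated out of the Using block after Dispose ran.
        End Try
        Return res.IsDisposed
    End Function

    ' Roughly the shape the compiler generates for a Using block.
    Public Function DisposedWithTryFinally() As Boolean
        Dim res As New TrackedResource()
        Try
            ' ... work with res ...
        Finally
            If res IsNot Nothing Then
                CType(res, IDisposable).Dispose()
            End If
        End Try
        Return res.IsDisposed
    End Function

    Sub Main()
        Console.WriteLine(DisposedWithUsing())        ' True
        Console.WriteLine(DisposedWithTryFinally())   ' True
    End Sub

End Module
```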

The CLR introduces the concept of a managed heap. Objects are allocated on the managed heap, and the CLR is responsible for controlling access to these objects in a type-safe manner. One of the advantages of the managed heap is that memory allocations on it are very efficient. When unmanaged code (such as Visual Basic 6 or C++) allocates memory on the unmanaged heap, it typically scans through some sort of data structure in search of a free chunk of memory that is large enough to accommodate the allocation. The managed heap maintains a reference to the end of the most recent heap allocation. When a new object needs to be created on the heap, the CLR allocates memory on top of memory that has previously been allocated and then increments the reference to the end of heap allocations accordingly. Figure 4-4 is a simplification of what takes place in the managed heap for .NET.

State 1 — A compressed memory heap with a reference to the endpoint on the heap

State 2 — Object B, although no longer referenced, remains in its current memory location. The memory has not been freed and does not alter the allocation of memory or of other objects on the heap.

State 3 — Even though there is now a gap between the memory allocated for object A and object C, the memory allocation for D still occurs on the top of the heap. The unused fragment of memory on the managed heap is ignored at allocation time.

State 4 — After one or more allocations, before there is an allocation failure, the garbage collector runs. It reclaims the memory that was allocated to B and repositions the remaining valid objects. This compresses the active objects to the bottom of the heap, creating more space for additional object allocations (refer to Figure 4-4).

This is where the power of the GC really shines. Before the CLR is unable to allocate memory on the managed heap, the GC is invoked. The GC not only collects objects that are no longer referenced by the application, but also has a second task: compacting the heap. This is important because if all the GC did was clean up objects, then the heap would become progressively more fragmented. When heap memory becomes fragmented, you can wind up with the common problem of having a memory allocation fail, not because there isn't enough free memory, but because there isn't enough free memory in a contiguous section of memory. Thus, not only does the GC reclaim the memory associated with objects that are no longer referenced, it also compacts the remaining objects. The GC effectively squeezes out all of the spaces between the remaining objects, freeing up a large section of managed heap for new object allocations.
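The allocation and compaction states described above can be mimicked with a toy model. In this hypothetical sketch, ToyHeap and ToyObject track only offsets and sizes in arbitrary units. This is not how the CLR is implemented, but the model mirrors the four states: bump-pointer allocation, an unreferenced object left in place, a new allocation on top of the heap, and a collection that compacts the survivors.

```vb
Imports System
Imports System.Collections.Generic

' Hypothetical object record: where it sits on the toy heap and whether it is still referenced.
Public Class ToyObject
    Public Name As String
    Public Offset As Integer
    Public Size As Integer
    Public Reachable As Boolean = True
End Class

Public Class ToyHeap
    Private ReadOnly m_objects As New List(Of ToyObject)()   ' kept in address order
    Private m_nextOffset As Integer = 0                       ' end of the most recent allocation

    Public ReadOnly Property NextOffset() As Integer
        Get
            Return m_nextOffset
        End Get
    End Property

    ' Allocation places the object at the end pointer and then bumps the pointer.
    Public Function Allocate(ByVal name As String, ByVal size As Integer) As ToyObject
        Dim obj As New ToyObject()
        obj.Name = name
        obj.Offset = m_nextOffset
        obj.Size = size
        m_objects.Add(obj)
        m_nextOffset += size
        Return obj
    End Function

    ' Collection drops unreachable objects and slides the survivors down (compaction).
    Public Sub Collect()
        m_objects.RemoveAll(Function(o) Not o.Reachable)
        m_nextOffset = 0
        For Each obj As ToyObject In m_objects
            obj.Offset = m_nextOffset
            m_nextOffset += obj.Size
        Next
    End Sub
End Class

Module HeapDemo
    Sub Main()
        Dim heap As New ToyHeap()
        Dim a As ToyObject = heap.Allocate("A", 10)   ' State 1: contiguous allocations
        Dim b As ToyObject = heap.Allocate("B", 20)
        Dim c As ToyObject = heap.Allocate("C", 10)

        b.Reachable = False                           ' State 2: B unreferenced, memory untouched
        Dim d As ToyObject = heap.Allocate("D", 5)    ' State 3: D goes on top; B's gap is ignored
        Console.WriteLine("D allocated at " & d.Offset.ToString())

        heap.Collect()                                ' State 4: reclaim B and compact
        Console.WriteLine("A=" & a.Offset.ToString() & ", C=" & c.Offset.ToString() & _
                          ", D=" & d.Offset.ToString() & ", next=" & heap.NextOffset.ToString())
    End Sub
End Module
```

With the sizes used here, D is first placed at offset 40 even though B's 20-unit gap is free; after Collect, A, C, and D occupy offsets 0, 10, and 20, and the next allocation would start at 25.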

The GC uses a concept known as generations, the primary purpose of which is to improve its performance. The theory behind generations is that objects that have been recently created tend to have a higher probability of being garbage-collected than objects that have existed on the system for a longer time.

To understand generations, consider the analogy of a mall parking lot where cars represent objects created by the CLR. People have different shopping patterns when they visit the mall. Some people spend a good portion of their day in the mall, and others stop only long enough to pick up an item or two. Applying the theory of generations to trying to find an empty parking space for a car yields a scenario in which the highest probability of finding a parking space is a place where other cars have recently parked. In other words, a space that was occupied recently is more likely to be held by someone who just needed to quickly pick up an item or two. The longer a car has been parked, the higher the probability that its owner is an all-day shopper and the lower the probability that the parking space will be freed up anytime soon.

Generations provide a means for the GC to identify recently created objects versus long-lived objects. An object's generation is basically a counter that indicates how many times it has successfully avoided garbage collection. An object's generation counter starts at zero and can have a maximum value of two, after which the object's generation remains at this value regardless of how many times it is checked for collection.

You can put this to the test with a simple Visual Basic application. From the File menu, select either File

```vb
Module Module1
    Sub Main()
        Dim myObject As Object = New Object()
        Dim i As Integer

        For i = 0 To 3
            Console.WriteLine(String.Format("Generation = {0}", _
                GC.GetGeneration(myObject)))
            GC.Collect()
            GC.WaitForPendingFinalizers()
        Next i

        Console.Read()
    End Sub
End Module
```

Regardless of the project you use, this code sends its output to the .NET console. For a Windows application, this console defaults to the Visual Studio Output window. When you run this code, it creates an instance of an object and then iterates through a loop four times. For each loop, it displays the current generation count of myObject and then calls the GC. The GC.WaitForPendingFinalizers method then blocks execution until the finalizers of any objects queued by that collection have run.

As shown in Figure 4-5, each time the GC was run, the generation counter was incremented for myObject, up to a maximum of 2.

Each time the GC is run, the managed heap is compacted, and the reference to the end of the most recent memory allocation is updated. After compaction, objects of the same generation are grouped together. Generation-2 objects are grouped at the bottom of the managed heap, and generation-1 objects are grouped next. New generation-0 objects are placed on top of the existing allocations, so they are grouped together as well.

This is significant because recently allocated objects have a higher probability of having shorter lives. Because objects on the managed heap are ordered according to generations, the GC can opt to collect newer objects. Running the GC over a limited portion of the heap is quicker than running it over the entire managed heap.

It's also possible to invoke the GC with an overloaded version of the Collect method that accepts a generation number. The GC will then collect all objects no longer referenced by the application that belong to the specified (or younger) generation. The version of the Collect method that accepts no parameters collects objects that belong to all generations.
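As a sketch of the generation-specific overload, the following code records the generation of a single live object after collecting generation 0, then generations 0 and 1, then all generations. TrackGenerations is a hypothetical helper; GC.Collect(Integer), GC.GetGeneration, and GC.MaxGeneration are standard members of System.GC. The exact numbers depend on the runtime, but a surviving object is normally promoted one generation per collection, up to GC.MaxGeneration.

```vb
Imports System
Imports System.Collections.Generic

Module CollectByGeneration

    ' Records the generation of one live object after successively broader collections.
    Public Function TrackGenerations() As List(Of Integer)
        Dim survivor As New Object()
        Dim generations As New List(Of Integer)()

        generations.Add(GC.GetGeneration(survivor))   ' newly allocated object
        GC.Collect(0)                                 ' collect generation 0 only
        generations.Add(GC.GetGeneration(survivor))
        GC.Collect(1)                                 ' collect generations 0 and 1
        generations.Add(GC.GetGeneration(survivor))
        GC.Collect()                                  ' collect all generations
        generations.Add(GC.GetGeneration(survivor))

        Return generations
    End Function

    Sub Main()
        Console.WriteLine("GC.MaxGeneration = " & GC.MaxGeneration.ToString())
        For Each g As Integer In TrackGenerations()
            Console.WriteLine("Generation = " & g.ToString())
        Next
    End Sub

End Module
```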

Another hidden GC optimization results from the fact that a reference to an object may implicitly go out of scope, allowing the GC to collect the object. This optimization is difficult to illustrate, and it occurs only if there are no additional references to the object and the object does not have a finalizer. However, if an object is declared and used at the top of a method and not referenced again later in that method, then in a release build the JIT compiler can determine that the variable is not used in the later portion of the code. Once the last reference to the object has been made, its logical scope ends; if the garbage collector runs, the memory for that object can be reclaimed before the variable has gone out of its physical scope.

This chapter introduced the CLR. You looked at the memory management features of the CLR, including how the CLR eliminates the circular reference problem that has plagued COM developers. Next, the chapter examined the Finalize method and explained why it should not be treated like the Class_Terminate method. Chapter highlights include the following:

- Whenever possible, do not implement the Finalize method in a class.
- If the Finalize method is implemented, then also implement the IDisposable interface, whose Dispose method can be called by the client when the object is no longer needed.
- Code for the Finalize method should be as short and quick as possible.
- There is no way to accurately predict when the GC will collect an object that is no longer referenced by the application (unless the GC is invoked explicitly).
- The order in which the GC collects objects on the managed heap is nondeterministic. This means that the Finalize method cannot call methods on other objects referenced by the object being collected.
- Leverage the Using keyword to automatically call the Dispose method of an object's IDisposable implementation.

This chapter also examined the value of a common runtime and type system that can be targeted by multiple languages. You saw how the CLR offers better support for metadata. Metadata is used to make types self-describing and is used for language elements such as attributes. Included were examples of how metadata is used by the CLR and the .NET class library, and you saw how to extend metadata by creating your own attributes. Finally, the chapter presented a brief overview of the Reflection API and the IL Disassembler utility (ildasm.exe), which can display the IL contained within a module.