One result of the move from 16-bit to 32-bit computing was the ability to write code that uses threads. Visual C++ developers have been able to use threads for some time, but until now Visual Basic developers have not had a truly reliable way to do so. Previous techniques involved calling into the threading functionality available to Visual C++ developers. Although this worked, developing multithreaded code without adequate debugger support in the Visual Basic environment was nothing short of a nightmare.

For most developers, the primary motivation for multithreading is the ability to perform long-running tasks in the background while still providing the user with an interactive interface. Another common scenario is when building server-side code that can perform multiple long-running tasks at the same time. In that case, each task can be run on a separate thread, enabling all the tasks to run in parallel.

This chapter introduces you to the various objects in the .NET Framework that enable any .NET language to be used to develop multithreaded applications.

The term thread really refers to thread of execution. When your program is running, the CPU is actually running a sequence of processor instructions, one after another. You can think of these sequential instructions as forming a thread that is being executed by the CPU. A thread is, in effect, a pointer to the currently executing instruction in the sequence of instructions that make up the application. This pointer starts at the top of the program and moves through each line, branching and looping when it comes across decisions and loops. When the program ends, the pointer steps outside the program code and the program stops.

Most applications have only one thread, so they execute only one sequence of instructions. Some applications have more than one thread, so they can execute more than one sequence of instructions simultaneously. It is important to realize that each CPU in your computer can execute only one thread at a time. The exception is a hyperthreaded processor, which presents each physical core to Windows as two logical processors, each able to run its own thread. If you have only one CPU, then your computer can execute only one thread at a time; even when an application has several threads, only one of them runs at any given moment. If your computer has two or more CPUs, then each CPU can run a different thread at the same time, so more than one thread in your application may run at once, each on a different CPU.

Of course, when you have a computer with only one CPU, on which several programs can be actively running at the same time, the statements in the previous paragraph fly in the face of visual evidence. Yet it is true that only one thread can execute at a time on a single-CPU machine. What you perceive to be simultaneously running applications is really an illusion created by the Windows operating system through a technique called preemptive multitasking, which is discussed later in the chapter.

All applications have at least one thread; otherwise, they could not do any work, as there would be no pointer to the thread of execution. Additional threads enable your program to perform multiple actions, potentially at the same time, because each sequence of instructions is executed independently of the others.

The classic example of multithreaded functionality is Microsoft Word's spell checker. When the program starts, the execution pointer begins at the top of the program and eventually reaches a point where you can start typing. However, at some point Word starts another thread and creates another execution pointer. As you type, this new thread examines the text and flags any spelling errors as you go, marking them with a wavy red underline (see Figure 26-1).

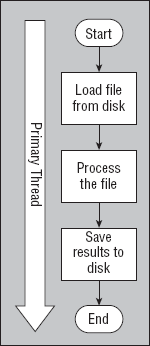

Every application has one primary thread, which serves as the main process thread through the application. Imagine you have an application that starts up, loads a file from disk, performs some processing on the data in the file, writes a new file, and then quits. Functionally, it might look like Figure 26-2.

This simple application needs only a single thread. When the program is told to run, Windows creates a new process and creates the primary thread. To understand more about exactly what it is that a thread does, you need to understand how Windows and the computer's processor deal with different processes.

Windows is capable of keeping many programs in memory at once and enabling the user to switch between them. Windows can also run programs in the background, possibly under different user identities. The capability to run many programs at once is called multitasking.

Each of the programs that your computer keeps in memory runs in a single process. A process is an isolated region of memory that contains a program's code and data. All programs run within a process, and code running in one process cannot access the memory within any other process. This prevents one program from interfering with any other program.

The process is started when the program starts, and exists for as long as the program is running. When a process is started, Windows sets up an isolated memory area for the program and loads the program's code into that area of memory. It then starts the main thread for the process, pointing it at the first instruction in the program. From that point, the thread runs the sequence of instructions defined by the program.

Windows supports multithreading, so the main thread might execute instructions that create more threads within the same process. These other threads run within the same memory space as the main thread — all sharing the same memory. Threads within a process are not isolated from each other. One thread in a process can tamper with data being used by other threads in that same process. However, a thread in one process cannot tamper with data being used by threads in any other processes on the computer.

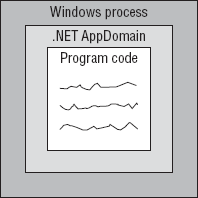

At this point, you should understand that Windows loads program code into a process and executes that code on one or more threads. The .NET Framework adds another concept to the mix: the AppDomain. An AppDomain is very much like a process in concept. Each AppDomain is an isolated region of memory, and code running in one AppDomain cannot access the memory of another AppDomain.

The .NET Framework introduced the AppDomain to make it possible to run multiple, isolated programs within the same Windows process. It turns out to be relatively expensive to create a Windows process in terms of time and memory. It is much cheaper to create a new AppDomain within an existing process.

Remember that Windows has no concept of an AppDomain; it only understands the concept of a process. The only way to get any code to run under Windows is to load it into a process. This means that each .NET AppDomain exists within a process. The result is that all .NET code runs within an AppDomain and within a Windows process (see Figure 26-3).

In most cases, a Windows process contains one AppDomain, which contains your program's code. The main thread of the process executes your program's instructions, so the existence of the AppDomain is largely invisible to your program.

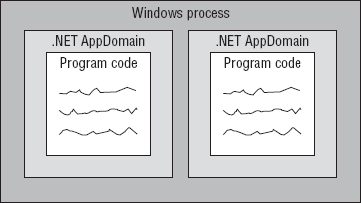
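To see that threads and AppDomains are separate ideas, the following console sketch starts a second thread and compares what it observes with the main thread. It assumes VB 2010 or later (for the multiline lambda), and `AppDomainDemo` and `ObserveFromWorker` are illustrative names, not part of the Framework. The two threads should report different thread IDs but the same AppDomain, because both run inside the single AppDomain of the process.

```vb
Imports System
Imports System.Threading

Module AppDomainDemo
    ' Returns the name of the AppDomain the calling code is running in.
    Public Function CurrentDomainName() As String
        Return AppDomain.CurrentDomain.FriendlyName
    End Function

    ' Starts a second thread, records the thread ID and AppDomain name it
    ' observes, and returns them so they can be compared with the caller's.
    Public Function ObserveFromWorker(ByRef workerDomain As String) As Integer
        Dim workerId As Integer = 0
        Dim domainSeen As String = Nothing

        Dim worker As New Thread(Sub()
                                     workerId = Thread.CurrentThread.ManagedThreadId
                                     domainSeen = AppDomain.CurrentDomain.FriendlyName
                                 End Sub)
        worker.Start()
        worker.Join() ' wait for the worker before reading what it recorded

        workerDomain = domainSeen
        Return workerId
    End Function

    Sub Main()
        Dim workerDomain As String = Nothing
        Dim workerId As Integer = ObserveFromWorker(workerDomain)
        Console.WriteLine("Main thread {0} in AppDomain '{1}'",
                          Thread.CurrentThread.ManagedThreadId, CurrentDomainName())
        Console.WriteLine("Worker thread {0} in AppDomain '{1}'",
                          workerId, workerDomain)
    End Sub
End Module
```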

In some cases, most notably ASP.NET, a Windows process will contain multiple AppDomains, each with a separate program loaded (see Figure 26-4).

ASP.NET uses this technique to isolate Web applications from each other without having to start an expensive new Windows process for each virtual root on the server.

Note that AppDomains do not change the relationship between a process and threads. Each process has a main thread and may have other threads. Therefore, even in the ASP.NET process, with multiple AppDomains, there is only one main thread. Of course, ASP.NET creates other threads, so multiple Web applications can execute simultaneously, but there is only a single main thread in the entire process.

It was noted earlier that visual evidence suggests that multiple programs, and thus multiple threads, execute simultaneously, even on a single-CPU computer. Again, this is an illusion created by the operating system, through the use of a concept called time slicing or time sharing.

In reality, each CPU runs only one thread at a time (a hyperthreaded processor presents each core as two logical processors, each running one thread). On a single-CPU machine, this means that only one thread is ever executing at any one time. To provide the illusion that many things are happening at the same time, the operating system never lets any one thread run for very long, giving other threads a chance to get a bit of work done as well. As a result, it appears that the computer is executing several threads at the same time.

The length of time each thread is allowed to run is called a quantum. Although a quantum can vary, it is typically around 20 milliseconds. After a thread has run for its quantum, the OS stops the thread and allows another thread to run. When that thread reaches its quantum, yet another thread is allowed to run, and so on. A thread can also give up the CPU before it reaches its quantum. This happens frequently, as most I/O operations and numerous other interactions with the Windows operating system cause a thread to give up the CPU.

Because the length of time each thread can run is so short, it isn't noticeable that threads are being started and stopped. This is the same concept animators use when creating cartoons or other animated media. As long as the changes happen faster than you can perceive them, you have the illusion of motion, or, in this case, simultaneous execution of code.

The technology used by Windows is called preemptive multitasking. It is preemptive because no thread is ever allowed to run beyond its quantum. The operating system always intervenes and allows other threads to run. This ensures that no single thread can consume all the processing power on the machine to the detriment of other threads.

It also means that you can never be sure when your thread will be interrupted and another thread allowed to run. This is the primary source of multithreading's complexity: it can cause race conditions when two threads access the same memory. If you attempt to solve a race condition with locks, you can cause a deadlock, in which two threads each hold a lock that the other needs, so both wait forever. You will learn more about these concepts later. For now, understand that writing multithreaded code can be exceedingly difficult.
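A minimal sketch of a race condition, assuming a console application (`RaceDemo` and its members are hypothetical names). Two threads each add 1 to a shared counter 100,000 times. Without a lock, the read-modify-write in `unsafeCount += 1` can be interrupted partway through, so some increments may be lost and the total may fall short of 200,000. Wrapping the update in `SyncLock` lets only one thread at a time perform it, so that total is 200,000 every time.

```vb
Imports System
Imports System.Threading

Module RaceDemo
    Private Const Iterations As Integer = 100000
    Private unsafeCount As Integer
    Private safeCount As Integer
    Private ReadOnly countLock As New Object()

    ' No locking: another thread can run between the read and the write.
    Private Sub IncrementUnsafe()
        For i As Integer = 1 To Iterations
            unsafeCount += 1
        Next
    End Sub

    ' SyncLock lets only one thread at a time perform the read-modify-write.
    Private Sub IncrementSafe()
        For i As Integer = 1 To Iterations
            SyncLock countLock
                safeCount += 1
            End SyncLock
        Next
    End Sub

    ' Runs the same method on two threads at once and waits for both.
    Private Sub RunOnTwoThreads(ByVal work As ThreadStart)
        Dim t1 As New Thread(work)
        Dim t2 As New Thread(work)
        t1.Start()
        t2.Start()
        t1.Join()
        t2.Join()
    End Sub

    Public Function CountWithoutLock() As Integer
        unsafeCount = 0
        RunOnTwoThreads(AddressOf IncrementUnsafe)
        Return unsafeCount
    End Function

    Public Function CountWithLock() As Integer
        safeCount = 0
        RunOnTwoThreads(AddressOf IncrementSafe)
        Return safeCount
    End Function

    Sub Main()
        ' May be less than 200000, and may differ from run to run.
        Console.WriteLine("Without lock: {0}", CountWithoutLock())
        ' Always 200000.
        Console.WriteLine("With lock:    {0}", CountWithLock())
    End Sub
End Module
```

The unsynchronized total is not guaranteed to be wrong on any particular run, which is exactly what makes race conditions hard to find.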

The entity that executes code in Windows is the thread. Therefore, the operating system is primarily focused on scheduling threads to keep the CPU or CPUs busy at all times. The operating system does not schedule either processes or AppDomains. Processes and AppDomains are merely regions of memory that contain your code — threads are what execute the code.

Threads have priorities, and Windows always allows higher priority threads to run before lower priority threads. In fact, if a higher priority thread is ready to run, Windows will cut short a lower priority thread's quantum to allow the higher priority thread to execute sooner. In short, Windows has a bias toward threads of higher priority.

Setting thread priorities can be useful in situations where you have a process that requires a lot of processor muscle but it doesn't matter how long the process takes to do its work. Setting a program's thread to a low priority allows that program to run continuously with little impact on other programs, so if you need to use Word or Outlook or another application, Windows gives more processor time to these applications and less time to the low-priority program. This enables the computer to work smoothly and efficiently for the user, letting the low-priority program only use otherwise wasted CPU power.

Threads may also voluntarily suspend themselves before their quantum is complete. This happens frequently — for example, when a thread attempts to read data from a file. It takes significant time for the I/O subsystem to locate the file and start retrieving the data. You cannot have the CPU sitting idle during that time, especially when other threads could be running. Instead, the thread enters a wait state to indicate that it is waiting for an external event. The Windows scheduler immediately locates and runs the next ready thread, keeping the CPU busy while the first thread waits for its data.

Windows also automatically suspends and resumes threads depending on perceived processing needs, the various priority settings, and so on. Suppose you are running one AppDomain containing two threads. If you can somehow mark the second thread as dormant (in other words, tell Windows that it has nothing to do), then there's no need for Windows to allocate time to it. Effectively, the first thread receives 100 percent of the processor horsepower available to that process. When a thread is marked as dormant, it is said to be in a wait state.

Windows is particularly good at managing processes and threads. It is a core part of Windows' functionality, so its developers have spent a lot of time ensuring that it is super-efficient and as bug-free as possible. This means that creating and spinning up threads is very easy to do and happens very quickly. In addition, threads only consume a small amount of system resources. However, there is a caveat you should be aware of.

The act of stopping one thread and starting another is called context switching. Each switch happens quickly, but the overhead adds up if you create too many threads. Remember that a switch can occur for each active thread at the end of each quantum (if not before), so roughly every 20 milliseconds. If you spin up too many threads, the operating system can spend most of its time switching between them, to the point where the code in a thread barely gets a chance to run before it is time for that thread to stop again.

Creating thousands of threads is not the right solution. What you need is a balance between the number of threads that your application requires and the number of threads that Windows can handle. There is no magic number or right answer to the question of how many threads you should create. Just be aware of context switching and experiment a little.

Consider the Microsoft Word spell check example. The thread that performs the spell check is around all the time. Imagine you have a blank document containing no text. At this point, the spell check thread is in a wait state. If you type a single word into the document and then pause, Word will pass that word over to the thread and signal it to start working. The thread uses its own slice of the processor power to examine the word. If it finds something wrong with it, then it tells the primary thread that a spelling problem was found and that the user needs to be alerted. At this point, the spell check thread drops back into a wait state until more text is entered into the document. Word does not spin up the thread whenever it needs to perform a check — rather, the thread runs all the time, but if it has nothing to do, it drops into this efficient wait state. (You will learn about how the thread starts again later.)

Again, this is an oversimplification. Word actually "wakes up" the thread at various times, but the basic principle is sound — the thread is given work to do, it reports the results, and then it starts waiting for the next chunk of work to do. So why is all this important? If you plan to author multithreaded applications, then you need to understand how the operating system will be scheduling your threads, as well as the threads of all other processes on the system. Most important, you need to recognize that your thread can be interrupted at any time so that another thread can run.
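The pattern described above (a long-lived thread that waits for work, reports results, and waits again) can be sketched as follows. This assumes .NET Framework 4 or later for `BlockingCollection(Of T)`; the tiny word list and the `CheckWords` function are stand-ins invented for illustration, not how Word actually works. While the queue is empty, the checker thread is blocked inside `GetConsumingEnumerable`, which puts it in a wait state so Windows gives it no CPU time.

```vb
Imports System
Imports System.Collections.Concurrent
Imports System.Collections.Generic
Imports System.Threading

Module SpellCheckDemo
    ' Hypothetical, tiny "dictionary" standing in for a real word list.
    Private ReadOnly KnownWords As New HashSet(Of String)(
        {"the", "quick", "brown", "fox"}, StringComparer.OrdinalIgnoreCase)

    ' Feeds words to a long-lived checker thread and returns the words
    ' it flagged as misspelled, in the order they were submitted.
    Public Function CheckWords(ByVal words As IEnumerable(Of String)) As List(Of String)
        Dim pending As New BlockingCollection(Of String)()
        Dim flagged As New List(Of String)()

        ' Created once; when the queue is empty, GetConsumingEnumerable
        ' blocks and the thread sits in a wait state.
        Dim checker As New Thread(Sub()
                                      For Each word As String In pending.GetConsumingEnumerable()
                                          If Not KnownWords.Contains(word) Then
                                              flagged.Add(word) ' only this thread touches flagged until Join
                                          End If
                                      Next
                                  End Sub)
        checker.IsBackground = True
        checker.Start()

        ' The primary thread hands over work as the user "types".
        For Each word As String In words
            pending.Add(word) ' wakes the checker if it was waiting
        Next

        pending.CompleteAdding() ' no more work: lets the checker's loop end
        checker.Join()
        Return flagged
    End Function

    Sub Main()
        For Each word As String In CheckWords({"The", "quikc", "brown", "fxo"})
            Console.WriteLine("Misspelled: " & word)
        Next
    End Sub
End Module
```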

Most of the .NET Framework base class library is not thread safe. Thread-safe code is code that can be called by multiple threads at the same time without negative side effects. If code is not thread safe, then calling that code from multiple threads at the same time can result in unpredictable and undesirable side effects, potentially even crashing your application. When dealing with objects that are not thread safe, you must ensure that multiple threads never simultaneously interact with the same object.

For example, suppose you have a ListBox control (or any other control) on a Windows Form and you start updating that control with data from multiple threads. You will find that your results are unreliable. Sometimes you will see all your data in order, but other times it will be out of order, and other times some data will be missing. This is because Windows Forms controls are not thread safe and don't behave properly when used by multiple threads at the same time.

To determine whether any specific method in the .NET Base Class Library is thread safe, refer to the online help. If the documentation says nothing about thread safety for a method, assume that the method is not thread safe.

The Windows Forms subset of the .NET Framework is not only not thread safe, it also has thread affinity. Thread affinity means that objects created by a thread can only be used by that thread; other threads should never interact with those objects. In the case of Windows Forms, this means that no thread other than the one that created a Windows Forms object (such as a form or control) may interact with it. This is important when you are creating interactive, multithreaded applications: only the thread that created a form may interact directly with that form.

As you will see, Windows Forms includes technology by which a background thread can safely make method calls on forms and controls by transferring the method call to the thread that owns the form.

If we regard computer programs as being either application software or service software, we find there are different motivators for each one. Application software uses threads primarily to deliver a better user experience. Common examples include printing a document in the background, sending e-mail in the background, and checking spelling as the user types.

In all of these cases, threads are used to do "something in the background." This provides a better user experience. For example, you can still edit a Word document while Word is spooling another document to the printer. Similarly, you can still read e-mails while Outlook is sending your new e-mail. As an application developer, you should use threads to enhance the user experience. At some point during the application startup, code running in the primary thread will have spun up another thread to be used for spell checking. As part of the "allow user to edit the document" process, you give the spell checker thread some words to check. This thread separation means that the user can continue to type, even though spell checking is still taking place.

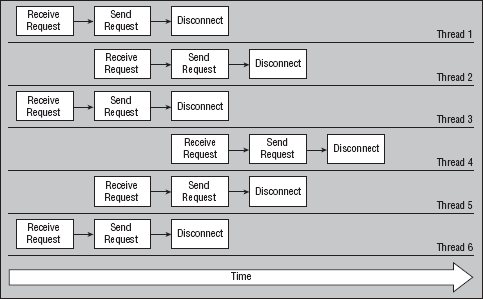

Service software uses threads both to deliver scalability and to improve the service offered. For example, imagine you have a web server that receives six incoming connections simultaneously. That server needs to service each of the requests in parallel; otherwise, the sixth request would have to wait for requests one through five to finish before it was even started. Figure 26-5 shows how IIS might handle incoming requests.

The primary motivation for multiple threads in a service like this is to keep the CPU busy servicing user requests even when other user requests are blocked waiting for data or other events. If you have six user requests, the odds are high that some or all of them will read from files or databases and thus will spend many milliseconds in wait states. While some of the user requests are in wait states, other user requests need CPU time and can be scheduled to run. The result is higher scalability because the CPU, I/O, and other subsystems of the computer are kept as busy as possible at all times.

The specific goals and requirements for background processing in an interactive application are quite different from a server application. By "interactive application" we are talking about Windows Forms or Console applications. While a Web application might be somewhat interactive, in fact, all your code runs on the server, and so Web applications are server applications when it comes to threading.

In the case of interactive applications (typically, Windows Forms applications), your design must center on having the background thread do useful work, but also interact appropriately (and safely) with the thread managing the UI. After all, you usually want to let the user know when the background process starts, stops, and does interesting things over its life. The following list summarizes the basic requirements for the background thread:

- Indicate that the background task has started
- Provide periodic status or progress information
- Indicate that the background task has completed
- Enable the user to request that the background task be canceled

While every application is different, these four requirements are typical for background threads in an interactive application.

As noted earlier, most of the .NET Framework is not thread safe, and Windows Forms is even more restrictive by having thread affinity. You want your background task to be able to notify the user when it starts, stops, and provides progress information. The fact that Windows Forms has thread affinity complicates this, because your background thread can never directly interact with Windows Forms objects. Fortunately, Windows Forms provides a formalized mechanism by which code in a background thread can send messages to the UI thread so that the UI thread can update the display for the user.

This is done using the BackgroundWorker control, which is found in the Components tab of the Toolbox. The purpose of the BackgroundWorker control is to start, monitor, and control the execution of background tasks. The control makes it easy for code on the application's primary thread to start a task on a background thread. It also makes it easy for the code running on the background thread to notify the primary thread of progress and completion. Finally, it provides a mechanism by which the primary thread can request that the background task be canceled, and for the background thread to notify the primary thread when it has completed the cancellation.

All this is done in a way that safely transfers control between the primary thread (which can update the UI) and the background thread (which cannot update the UI).

In the case of server programs, your design should focus on making the background thread as efficient as possible. Server resources are precious, so the quicker the task can complete, the fewer resources you will consume over time. Interactivity with a UI is not a concern, as your code is running on a server, detached from any UI. The key to success in server coding is to avoid or minimize locking, thus maximizing throughput because your code is never stopped by a lock.

For example, Microsoft went to great pains to design and refine ASP.NET to minimize the number of locks required from the time a user request hits the server to the time an ASPX page's code is running. After the page code is running, no locking occurs, so the page code can just run, top to bottom, as fast and efficiently as possible.

Avoiding locking means avoiding shared resources or data. This is the dominant design goal for server code — designing programs to avoid scenarios in which multiple threads need access to the same variables or other resources. Anytime multiple threads may access the same resource, you need to implement locking to prevent the threads from colliding with one another. You'll learn about locking later in the chapter, as sometimes it is simply unavoidable.

At this point, you should have a basic understanding of threads and how they relate to the process and AppDomain concepts. You should also realize that for interactive applications, multithreading is not a way to improve performance, but rather a way to improve the end user experience by providing the illusion that the computer is executing more code simultaneously. In the case of server-side code, multithreading enables higher scalability by enabling Windows to better utilize the CPU, along with other subsystems such as I/O.

When a background thread is created, it points to a method or procedure that will be executed by the thread. Remember that a thread is just a pointer to the current instruction in a sequence of instructions to be executed. In all cases, the first instruction in this sequence is the start of a method or procedure.

When using the BackgroundWorker control, this method is always the control's DoWork event handler. Keep in mind that this method can't be a function. There is no mechanism by which a method running on one thread can return a result directly to code running on another thread. This means that anytime you design a background task, you should start by creating a Sub in which you write the code to run on the background thread.

In addition, because the goals for interactive applications and server programs are different, your designs for implementing threading in these two environments are different. This means that the way you design and code the background task will vary.

To demonstrate this, let's work with a simple method that calculates prime numbers. This implementation is naive, and can take quite a lot of time when run against larger numbers, so it serves as a useful example of a long-running background task. Do the following:

1. Create a new Windows Forms Application project named Threading.
2. Add two Button controls named btnStart and btnCancel, a ListBox, and a ProgressBar control to Form1.
3. Add a BackgroundWorker control to Form1.
4. Set its WorkerReportsProgress and WorkerSupportsCancellation properties to True.
5. Add the following to the form's code:

```vb
Public Class Form1

#Region " Shared data "

    Private mMin As Integer
    Private mMax As Integer
    Private mResults As New List(Of Integer)

#End Region

#Region " Primary thread methods "

    Private Sub btnStart_Click(ByVal sender As System.Object, _
        ByVal e As System.EventArgs) Handles btnStart.Click

        ProgressBar1.Value = 0
        ListBox1.Items.Clear()
        mMin = 1
        mMax = 10000
        BackgroundWorker1.RunWorkerAsync()
    End Sub

    Private Sub btnCancel_Click(ByVal sender As System.Object, _
        ByVal e As System.EventArgs) Handles btnCancel.Click

        BackgroundWorker1.CancelAsync()
    End Sub

    Private Sub BackgroundWorker1_ProgressChanged( _
        ByVal sender As Object, ByVal e As _
        System.ComponentModel.ProgressChangedEventArgs) _
        Handles BackgroundWorker1.ProgressChanged

        ProgressBar1.Value = e.ProgressPercentage
    End Sub

    Private Sub BackgroundWorker1_RunWorkerCompleted( _
        ByVal sender As Object, ByVal e As _
        System.ComponentModel.RunWorkerCompletedEventArgs) _
        Handles BackgroundWorker1.RunWorkerCompleted

        ' Runs on the UI thread, so it is safe to update the ListBox here
        For Each item As Integer In mResults
            ListBox1.Items.Add(item)
        Next
    End Sub

#End Region

#Region " Background thread methods "

    Private Sub BackgroundWorker1_DoWork(ByVal sender As Object, _
        ByVal e As System.ComponentModel.DoWorkEventArgs) _
        Handles BackgroundWorker1.DoWork

        mResults.Clear()

        ' With mMin = 1 the loop below tests odd numbers only, so add 2 here
        If mMin <= 2 AndAlso mMax >= 2 Then mResults.Add(2)

        For count As Integer = mMin To mMax Step 2
            ' 1 is not prime; any other candidate is prime until proven otherwise
            Dim isPrime As Boolean = (count > 1)

            For x As Integer = 1 To CInt(count / 2)
                For y As Integer = 1 To x
                    If x * y = count Then
                        ' the number is not prime
                        isPrime = False
                        Exit For
                    End If
                Next
                ' short-circuit the check
                If Not isPrime Then Exit For
            Next

            If isPrime Then
                mResults.Add(count)
            End If

            Me.BackgroundWorker1.ReportProgress( _
                CInt((count - mMin) / (mMax - mMin) * 100))

            If Me.BackgroundWorker1.CancellationPending Then
                e.Cancel = True ' lets RunWorkerCompleted see e.Cancelled = True
                Exit Sub
            End If
        Next
    End Sub

#End Region

End Class
```

The BackgroundWorker1_DoWork method implements the code to find the prime numbers. This method is automatically run on a background thread by the BackgroundWorker1 control. Notice that the method is a Sub, so it returns no value. Instead, it stores its results in a variable — in this case, a List(Of Integer). The idea is that once the background task is complete, you can do something useful with the results.
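Storing results in a shared field, as `mResults` does here, is one option. The BackgroundWorker also offers a built-in channel: the `DoWork` handler (still a Sub) can assign its answer to `e.Result`, and the `RunWorkerCompleted` handler reads it back from its own `e.Result`. The sketch below shows that round trip in a console application, an assumption made so the example is self-contained; because a console app has no UI thread, it waits on a `ManualResetEvent` that a form would not need. `WorkerResultDemo` and `SumOnBackgroundThread` are hypothetical names.

```vb
Imports System
Imports System.ComponentModel
Imports System.Threading

Module WorkerResultDemo
    ' Sums 1..upTo on a BackgroundWorker and returns the value that
    ' travelled back through DoWorkEventArgs.Result.
    Public Function SumOnBackgroundThread(ByVal upTo As Integer) As Long
        Dim result As Long = 0
        Dim done As New ManualResetEvent(False)
        Dim worker As New BackgroundWorker()

        AddHandler worker.DoWork,
            Sub(sender As Object, e As DoWorkEventArgs)
                ' Runs on a thread-pool thread. The handler is a Sub, so it
                ' stores its answer in e.Result instead of returning it.
                Dim n As Integer = CInt(e.Argument)
                Dim total As Long = 0
                For i As Integer = 1 To n
                    total += i
                Next
                e.Result = total
            End Sub

        AddHandler worker.RunWorkerCompleted,
            Sub(sender As Object, e As RunWorkerCompletedEventArgs)
                ' In a form (with RunWorkerAsync called from the UI thread) this
                ' runs on the UI thread; in a console app it runs on a pool thread.
                result = CLng(e.Result)
                done.Set()
            End Sub

        worker.RunWorkerAsync(upTo) ' upTo arrives in DoWork as e.Argument
        done.WaitOne()              ' console only: block until completion
        Return result
    End Function

    Sub Main()
        Console.WriteLine(SumOnBackgroundThread(100)) ' 5050
    End Sub
End Module
```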

When btnStart is clicked, the BackgroundWorker control is told to start the background task. In order to initialize any data values before launching the background thread, the mMin and mMax variables are set before the task is started.

Of course, you want to display the results of the background task. Fortunately, the BackgroundWorker control raises an event when the task is complete. In this event handler you can safely copy the values from the List(Of Integer) into the ListBox for display to the user.

Similarly, the BackgroundWorker control raises an event to indicate progress as the task runs. Notice that the DoWork method periodically calls the ReportProgress method. When this method is called, the progress is transferred from the background thread to the primary thread via the ProgressChanged event.

Finally, you may need to cancel a long-running task. It is never wise to terminate a background task directly. Instead, send a request to the background task asking it to stop running. This enables the task to stop cleanly, so it can close any resources it might be using and shut down properly.

To send the cancel request, call the BackgroundWorker control's CancelAsync method. This sets the control's CancellationPending property to True. Notice how this value is periodically checked by the DoWork method; and if it is True, you exit the method, effectively canceling the task.

Running the code now demonstrates that the UI remains entirely responsive while the background task is running, and the results are displayed when available.
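The cancellation handshake can also be seen in isolation. In this console sketch (the module and function names are hypothetical), `DoWork` loops until `CancellationPending` becomes True, then sets `e.Cancel = True` before leaving. That flag is what makes `e.Cancelled` True in the `RunWorkerCompleted` handler, so the primary thread can tell a canceled task from one that finished normally.

```vb
Imports System
Imports System.ComponentModel
Imports System.Threading

Module CancelDemo
    ' Starts a task that runs until asked to stop, requests cancellation,
    ' and returns whether the completion event reported it as canceled.
    Public Function RunAndCancel() As Boolean
        Dim wasCancelled As Boolean = False
        Dim done As New ManualResetEvent(False)
        Dim worker As New BackgroundWorker()
        worker.WorkerSupportsCancellation = True

        AddHandler worker.DoWork,
            Sub(sender As Object, e As DoWorkEventArgs)
                Dim bw As BackgroundWorker = DirectCast(sender, BackgroundWorker)
                Do
                    If bw.CancellationPending Then
                        e.Cancel = True ' report that the task stopped early
                        Exit Do
                    End If
                    Thread.Sleep(10) ' stand-in for one unit of real work
                Loop
            End Sub

        AddHandler worker.RunWorkerCompleted,
            Sub(sender As Object, e As RunWorkerCompletedEventArgs)
                wasCancelled = e.Cancelled
                done.Set()
            End Sub

        worker.RunWorkerAsync()
        Thread.Sleep(50)     ' let the task run briefly
        worker.CancelAsync() ' only a request; DoWork decides when to stop
        done.WaitOne()
        Return wasCancelled
    End Function

    Sub Main()
        Console.WriteLine("Cancelled: {0}", RunAndCancel())
    End Sub
End Module
```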

Now that you have learned the basics of threading in an interactive application, let's look at the various threading options at your disposal. The .NET Framework offers two ways to implement multithreading. Regardless of which approach you use, you must specify the method or procedure that the thread will execute when it starts.

First, you can use the thread pool provided by the .NET Framework. The thread pool is a managed pool of threads that can be reused over the life of your application. Threads are created in the pool on an as-needed basis, and idle threads in the pool are reused, thus keeping the number of threads created by your application to a minimum. This is important because threads are an expensive operating system resource.

Note

The thread pool should be your first choice in most multithreading scenarios.

Many built-in .NET Framework features already use the thread pool. In fact, you have already used it, because the BackgroundWorker control runs its background tasks on a thread from the thread pool. In addition, anytime you do an asynchronous read from a file, URL, or TCP socket, the thread pool is used on your behalf; and anytime you implement a remoting listener, a website, or a Web service, the thread pool is used. Because the .NET Framework itself relies on the thread pool, it is an optimal choice for most multithreading requirements.

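Second, you can create your own thread object. This can be a good approach if you have a single, long-running background task in your application. It is also useful if you need fine-grained control over the background thread, such as setting its priority or suspending and resuming its execution. (Be aware, though, that the Thread.Suspend and Thread.Resume methods are marked obsolete as of .NET Framework 2.0; synchronization objects such as ManualResetEvent are the recommended way to pause and resume a thread's work.)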
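A minimal sketch of a dedicated thread, assuming a console application; `CrunchNumbers` is a hypothetical stand-in for a real long-running task. It names the thread, lowers its priority as discussed earlier in the chapter, and marks it as a background thread so it will not keep the process alive after the main thread exits. The `Join` call is there only so the demo can read the result.

```vb
Imports System
Imports System.Threading

Module DedicatedThreadDemo
    Private total As Long

    ' Hypothetical long-running work for the dedicated thread.
    Private Sub CrunchNumbers()
        Dim sum As Long = 0
        For i As Integer = 1 To 1000000
            sum += i Mod 7
        Next
        total = sum
    End Sub

    Public Function RunLowPriority() As Long
        Dim worker As New Thread(AddressOf CrunchNumbers)
        worker.Name = "Number cruncher"              ' visible in the debugger's Threads window
        worker.Priority = ThreadPriority.BelowNormal ' let interactive work go first
        worker.IsBackground = True                   ' don't keep the process alive on exit
        worker.Start()
        worker.Join()                                ' demo only: wait for the result
        Return total
    End Function

    Sub Main()
        Console.WriteLine(RunLowPriority()) ' 2999998
    End Sub
End Module
```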

The .NET Framework provides a thread pool in the System.Threading namespace. This thread pool is self-managing. It creates threads on demand and, if possible, reuses idle threads that already exist in the pool.

The thread pool will not create an unlimited number of threads. In early versions of the .NET Framework, it creates at most 25 worker threads per CPU in the system; later versions raise this default considerably, and ThreadPool.GetMaxThreads reports the limit in effect. If you assign more work requests to the pool than it can handle with these threads, your work requests are queued until a thread becomes available. This is typically a good feature, as it helps ensure that your application will not overload the operating system with too many threads.

There are five primary ways to use the thread pool: through the BackgroundWorker control, by calling BeginXYZ methods, via delegates, manually via the ThreadPool.QueueUserWorkItem method, or by using a System.Timers.Timer control. Of the five, the easiest is to use the BackgroundWorker control.

The previous quick tour of threading explored the BackgroundWorker control, which enables you to easily start a task on a background thread, monitor that task's progress, and be notified when it is complete. It also enables you to request that the background task cancel itself. All this is done in a safe manner, with control transferred from the primary thread to the background thread and back again without you having to worry about the details.

Many of the .NET Framework objects support both synchronous and asynchronous invocation. For instance, you can read from a TCP socket by using the Read method or the BeginRead method. The Read method is synchronous, so you're blocked until the data is read.

The BeginRead method is asynchronous, so you are not blocked. Instead, the read operation occurs on a background thread in the thread pool. You provide the address of a method that is called automatically when the read operation is complete. This callback method is invoked by the background thread, so your code also ends up running on the background thread in the thread pool.
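Here is a minimal sketch of the BeginXYZ pattern. This console module reads a small temporary file with FileStream.BeginRead, and the callback calls EndRead to collect the byte count. The module and method names are illustrative. The sketch assumes the text fits in the 1,024-byte buffer and comes back in a single read. A console program has no message loop, so the main thread simply waits on a ManualResetEvent. A form would carry on handling the user at that point instead.

```vb
Option Strict On

Imports System
Imports System.IO
Imports System.Text
Imports System.Threading

Module BeginReadSketch
    Private mBuffer(1023) As Byte
    Private mStream As FileStream
    Private ReadOnly mDone As New ManualResetEvent(False)
    Private mBytesRead As Integer

    ' Callback: invoked when the read completes, normally on a pool thread.
    Private Sub ReadComplete(ByVal ar As IAsyncResult)
        mBytesRead = mStream.EndRead(ar)    ' collect the result of BeginRead
        mDone.Set()
    End Sub

    Public Function ReadTextAsync(ByVal text As String) As String
        Dim tempFile As String = Path.GetTempFileName()
        File.WriteAllText(tempFile, text)   ' UTF-8 without a byte-order mark
        mDone.Reset()
        ' The final True (useAsync) requests asynchronous I/O.
        mStream = New FileStream(tempFile, FileMode.Open, FileAccess.Read, FileShare.Read, 4096, True)
        Try
            mStream.BeginRead(mBuffer, 0, mBuffer.Length, AddressOf ReadComplete, Nothing)
            ' BeginRead has already returned; this thread is free.
            ' The sketch has nothing else to do, so it waits.
            mDone.WaitOne()
        Finally
            mStream.Close()
            File.Delete(tempFile)
        End Try
        ' Assumes the small file was read in one call.
        Return Encoding.UTF8.GetString(mBuffer, 0, mBytesRead)
    End Function

    Sub Main()
        Console.WriteLine(ReadTextAsync("hello from the thread pool"))
    End Sub
End Module
```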

Behind the scenes, this behavior is all driven by delegates. Rather than explore TCP sockets or some other specific subset of the .NET Framework class library, let's move on and look at the underlying technology itself.

A delegate is a strongly typed pointer to a function or method. Delegates are the underlying technology used to implement events within Visual Basic, and they can be used directly to invoke a method, given just a pointer to that method.

Delegates can be used to launch a background task on a thread in the thread pool. They can also transfer a method call from a background thread to the UI thread. The BackgroundWorker control uses this technology behind the scenes on your behalf, but you can use delegates directly as well.

To use delegates, your worker code must be in a method, and you must define a delegate for that method. The delegate is a pointer to the method, so it must have the same method signature as the method itself:

Private Delegate Sub TaskDelegate(ByVal min As Integer, ByVal max As Integer)

Private Sub FindPrimesViaDelegate(ByVal min As Integer, ByVal max As Integer)

mResults.Clear()

For count As Integer = min To max Step 2

Dim isPrime As Boolean = True

For x As Integer = 1 To CInt(count / 2)

For y As Integer = 1 To x

If x * y = count Then

' the number is not prime

isPrime = False

Exit For

End If

Next

' short-circuit the check

If Not isPrime Then Exit For

Next

If isPrime Then

mResults.Add(count)

End If

Next

End Sub

Running background tasks via delegates enables you to pass strongly typed parameters to the background task, thus clarifying and simplifying your code. Now that you have a worker method and corresponding delegate, you can add a new button and write code in its click event handler to use it to run FindPrimes on a background thread:

Private Sub btnDelegate_Click(ByVal sender As System.Object, _

ByVal e As System.EventArgs) Handles btnDelegate.Click

' run the task

Dim worker As New TaskDelegate(AddressOf FindPrimesViaDelegate)

worker.BeginInvoke(1, 10000, AddressOf TaskComplete, Nothing)

End Sub

First, you create an instance of the delegate, setting it up to point to the FindPrimesViaDelegate method. Next, you call BeginInvoke on the delegate to invoke the method.

The BeginInvoke method is the key here. BeginInvoke is an example of the BeginXYZ methods discussed earlier; recall that they automatically run the method on a background thread in the thread pool. This is true for BeginInvoke as well, meaning that FindPrimes runs in the background and the UI thread is not blocked, so it can continue to interact with the user.

Notice all the parameters passed to BeginInvoke. The first two correspond to the parameters defined on the delegate — the min and max values that should be passed to FindPrimes. The next parameter is the address of a method that is automatically invoked when the background thread is complete. The final parameter (to which you have passed Nothing) is a mechanism by which you can pass a value from your UI thread to the method that is invoked when the background task is complete.

This means that you need to implement the TaskComplete method. This method is invoked when the background task is complete. It runs on the background thread, not on the UI thread, so remember that this method cannot interact with any Windows Forms objects. Instead, it will contain the code to invoke an UpdateDisplay method on the UI thread via the form's BeginInvoke method:

Private Sub TaskComplete(ByVal ar As IAsyncResult)

Dim update As New UpdateDisplayDelegate(AddressOf UpdateDisplay)

Me.BeginInvoke(update)

End Sub

Private Delegate Sub UpdateDisplayDelegate()

Private Sub UpdateDisplay()

For Each item As Integer In mResults

ListBox1.Items.Add(item)

Next

End Sub

Notice how a delegate is used to invoke the UpdateDisplay method as well, thus illustrating how delegates can be used with a Form object's BeginInvoke method to transfer control back to the primary thread. The same technique could be used to enable the background task to notify the primary thread of progress as the task runs.

Now when you run the application, you'll have a responsive UI, with the FindPrimesViaDelegate method running in the background within the thread pool.
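You can use the final BeginInvoke parameter to pass the delegate itself to the callback. The callback can then call EndInvoke, which returns the worker's result, rethrows any exception the worker raised, and releases the resources held for the call. The following console sketch shows the idea. It uses a hypothetical SumDelegate and SumRange in place of TaskDelegate and FindPrimesViaDelegate. Asynchronous delegate invocation (BeginInvoke on a delegate) works in the .NET Framework, but .NET Core and .NET 5 and later throw PlatformNotSupportedException for it.

```vb
Option Strict On

Imports System
Imports System.Threading

Module DelegateEndInvokeSketch
    Private Delegate Function SumDelegate(ByVal min As Integer, ByVal max As Integer) As Long

    Private ReadOnly mDone As New ManualResetEvent(False)
    Private mTotal As Long

    ' Worker method; runs on a thread-pool thread via BeginInvoke.
    Private Function SumRange(ByVal min As Integer, ByVal max As Integer) As Long
        Dim total As Long = 0
        For i As Integer = min To max
            total += i
        Next
        Return total
    End Function

    ' Completion callback; this also runs on the background thread.
    Private Sub TaskComplete(ByVal ar As IAsyncResult)
        ' The delegate was passed as BeginInvoke's final (state) argument.
        Dim worker As SumDelegate = DirectCast(ar.AsyncState, SumDelegate)
        ' EndInvoke returns the result and rethrows any worker exception.
        mTotal = worker.EndInvoke(ar)
        mDone.Set()
    End Sub

    Public Function SumInBackground(ByVal min As Integer, ByVal max As Integer) As Long
        mDone.Reset()
        Dim worker As New SumDelegate(AddressOf SumRange)
        worker.BeginInvoke(min, max, AddressOf TaskComplete, worker)
        mDone.WaitOne()     ' a form would return to its message loop instead
        Return mTotal
    End Function

    Sub Main()
        Console.WriteLine(SumInBackground(1, 100))   ' 5050
    End Sub
End Module
```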

The final option for using the thread pool is to manually queue items for the thread pool to process. This is done by calling ThreadPool.QueueUserWorkItem. This is a Shared method on the ThreadPool class that directly places a method into the thread pool to be executed on a background thread.

This technique does not allow you to pass arbitrary parameters to the worker method. Instead, it requires that the worker method accept a single parameter of type Object, through which you can pass an arbitrary value. You can use this to pass multiple values by declaring a class with all your parameter types. Add the following class inside the Form1 class:

Private Class params

Public min As Integer

Public max As Integer

Public Sub New(ByVal min As Integer, ByVal max As Integer)

Me.min = min

Me.max = max

End Sub

End Class

Then you can make FindPrimes accept this value as an Object:

Private Sub FindPrimesInPool(ByVal state As Object)

Dim params As params = DirectCast(state, params)

mResults.Clear()

For count As Integer = params.min To params.max Step 2

Dim isPrime As Boolean = True

For x As Integer = 1 To CInt(count / 2)

For y As Integer = 1 To x

If x * y = count Then

' the number is not prime

isPrime = False

Exit For

End If

Next

' short-circuit the check

If Not isPrime Then Exit For

Next

If isPrime Then

mResults.Add(count)

End If

Next

Dim update As New UpdateDisplayDelegate(AddressOf UpdateDisplay)

Me.BeginInvoke(update)

End Sub

This is basically the same method used with delegates, but it accepts an object parameter, rather than the strongly typed parameters. Notice that the method uses a delegate to invoke the UpdateDisplay method on the UI thread when the task is complete. When you manually put a task on the thread pool, there is no automatic callback to a method when the task is complete, so you must do the callback in the worker method itself.

Now you can manually queue the worker method to run in the thread pool within the Click event handler:

Private Sub btnPool_Click(ByVal sender As System.Object, _

ByVal e As System.EventArgs) Handles btnPool.Click

' run the task

System.Threading.ThreadPool.QueueUserWorkItem( _

AddressOf FindPrimesInPool, New params(1, 10000))

End Sub

The QueueUserWorkItem method accepts the address of the worker method, in this case FindPrimesInPool. This worker method must accept a single parameter of type Object or you will get a compile error here.

The second parameter to QueueUserWorkItem is the object to be passed to the worker method when it is invoked on the background thread. In this case, you're passing a new instance of the params class defined earlier. This enables you to pass your parameter values to FindPrimes.

When you run this code, you will again find that you have a responsive UI, with FindPrimes running on a background thread in the thread pool.
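The same pattern also works outside Windows Forms. In this console sketch, the range is packed into a parameter object and the worker is queued with ThreadPool.QueueUserWorkItem. There is no UI thread to call back to, so the worker signals a ManualResetEvent when it finishes. The names are illustrative, and the sketch uses a plain trial-division prime test rather than the nested loops above.

```vb
Option Strict On

Imports System
Imports System.Collections.Generic
Imports System.Threading

Module QueueUserWorkItemSketch
    ' Parameter object, in the spirit of the params class above.
    Private Class PrimeParams
        Public ReadOnly Min As Integer
        Public ReadOnly Max As Integer
        Public ReadOnly Results As List(Of Integer) = New List(Of Integer)
        Public ReadOnly Done As ManualResetEvent = New ManualResetEvent(False)

        Public Sub New(ByVal min As Integer, ByVal max As Integer)
            Me.Min = min
            Me.Max = max
        End Sub
    End Class

    ' Worker: must take a single Object parameter to match WaitCallback.
    Private Sub FindPrimesInPool(ByVal state As Object)
        Dim args As PrimeParams = DirectCast(state, PrimeParams)
        For candidate As Integer = args.Min To args.Max
            If IsPrime(candidate) Then args.Results.Add(candidate)
        Next
        args.Done.Set()     ' no automatic callback, so signal completion here
    End Sub

    Private Function IsPrime(ByVal n As Integer) As Boolean
        If n < 2 Then Return False
        Dim divisor As Integer = 2
        Do While divisor * divisor <= n
            If n Mod divisor = 0 Then Return False
            divisor += 1
        Loop
        Return True
    End Function

    Public Function FindPrimes(ByVal min As Integer, ByVal max As Integer) As List(Of Integer)
        Dim args As New PrimeParams(min, max)
        ThreadPool.QueueUserWorkItem(AddressOf FindPrimesInPool, args)
        args.Done.WaitOne()     ' a form would not block here
        Return args.Results
    End Function

    Sub Main()
        For Each prime As Integer In FindPrimes(1, 20)
            Console.Write("{0} ", prime)
        Next
        Console.WriteLine()
    End Sub
End Module
```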

Beyond BeginXYZ methods, delegates, and manually queuing work items, there are various other ways to get your code running in the thread pool. One of the most common is the System.Timers.Timer control, whose Elapsed event is raised on a background thread in the thread pool.

This is different from the System.Windows.Forms.Timer control, where the Tick event is raised on the UI thread. The difference is very important to understand, because you can't directly interact with Windows Forms objects from background threads. Code running in the Elapsed event of a System.Timers.Timer control must be treated like any other code running on a background thread.

The exception to this is if you set the SynchronizingObject property on the control to a Windows Forms object such as a Form or a Control. In this case, the Elapsed event is raised on the appropriate UI thread, rather than on a thread in the thread pool. The result is basically the same as using System.Windows.Forms.Timer instead.
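A quick way to confirm which thread raises Elapsed is to check Thread.CurrentThread.IsThreadPoolThread inside the handler. In this console sketch no SynchronizingObject is set, so the handler should report that it ran on a pool thread. The module and method names are illustrative.

```vb
Option Strict On

Imports System
Imports System.Threading

Module TimersTimerSketch
    Private ReadOnly mDone As New ManualResetEvent(False)
    Private mRanOnPoolThread As Boolean

    ' Elapsed handler; without a SynchronizingObject it runs on a pool thread.
    Private Sub OnElapsed(ByVal sender As Object, ByVal e As System.Timers.ElapsedEventArgs)
        mRanOnPoolThread = Thread.CurrentThread.IsThreadPoolThread
        mDone.Set()
    End Sub

    Public Function ElapsedRunsOnPoolThread() As Boolean
        mDone.Reset()
        ' Fully qualified to avoid confusion with System.Threading.Timer.
        Using t As New System.Timers.Timer(100)     ' interval in milliseconds
            t.AutoReset = False                      ' raise Elapsed only once
            AddHandler t.Elapsed, AddressOf OnElapsed
            t.Start()
            mDone.WaitOne()
        End Using
        Return mRanOnPoolThread
    End Function

    Sub Main()
        Console.WriteLine(ElapsedRunsOnPoolThread())   ' expected: True
    End Sub
End Module
```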

Thus far, we have been working with the .NET thread pool. You can also manually create and control background threads through code. To manually create a thread, you need to create and start a Thread object. This looks something like the following:

' run the task

Dim worker As New Thread(AddressOf FindPrimes)

worker.Start()

While this seems like the obvious way to do multithreading, the thread pool is typically the preferred approach because there is a cost to creating and destroying threads, and the thread pool helps avoid that cost by reusing threads when possible. When you manually create a thread as shown here, you must pay the cost of creating the thread each time or implement your own scheme to reuse the threads you create.

However, manual creation of threads can be useful. The thread pool is designed to be used for background tasks that run for a while and then complete, thus enabling the background thread to be reused for subsequent background tasks. If you need to run a background task for the entire duration of your application, the thread pool is not ideal because that thread would never become available for reuse. In such a case, you are better off creating the background thread manually.

An example of this is the aforementioned spell checker in Word, which runs as long as you're editing a document. Running such a task on the thread pool would make little sense, as the task will run as long as the application, so instead it should be run on a manually created thread, leaving the thread pool available for shorter-running tasks.

The other primary scenario for manually creating threads is when you want to be able to interact with the Thread object as it is running. You can use various methods on the Thread object to interact with and control the background thread. These are described in the following table:

Many other methods are available on the Thread object as well; consult the online help for more details. You can use these methods to control the behavior and lifetime of the background thread, which can be useful in advanced threading scenarios.
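The following console sketch shows a manually created thread running a loop until it is told to stop, in the spirit of the spell-checker example. It uses the Name, IsBackground, Priority, Start, IsAlive, and Join members of System.Threading.Thread. Rather than suspending or aborting the thread, it stops the loop cooperatively by signaling a ManualResetEvent. The names BackgroundLoop and StartAndStop are illustrative.

```vb
Option Strict On

Imports System
Imports System.Threading

Module ManualThreadSketch
    Private ReadOnly mStopRequested As New ManualResetEvent(False)
    Private mPasses As Integer

    ' Long-running work loop. WaitOne(50) waits up to 50 ms and returns
    ' True as soon as a stop has been requested.
    Private Sub BackgroundLoop()
        Do While Not mStopRequested.WaitOne(50)
            mPasses += 1            ' written only by this thread
        Loop
    End Sub

    Public Function StartAndStop() As Boolean
        mStopRequested.Reset()
        mPasses = 0

        Dim worker As New Thread(AddressOf BackgroundLoop)
        worker.Name = "Background loop"             ' visible in the debugger
        worker.IsBackground = True                  ' won't keep the process alive on exit
        worker.Priority = ThreadPriority.BelowNormal
        worker.Start()

        Dim wasAlive As Boolean = worker.IsAlive    ' the loop is still running
        Thread.Sleep(200)                           ' let the loop do some work
        mStopRequested.Set()                        ' ask the loop to finish
        worker.Join()                               ' block until it has finished

        Console.WriteLine("Loop passes: {0}", mPasses)
        Return wasAlive AndAlso Not worker.IsAlive
    End Function

    Sub Main()
        Console.WriteLine(StartAndStop())
    End Sub
End Module
```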

In most multithreading scenarios, you have data in your main thread that needs to be used by the background task on the background thread. Likewise, the background task typically generates data that is needed by the main thread. These are examples of shared data, or data that is used by multiple threads.

Remember that multithreading means you have multiple threads within the same process, and in .NET within the same AppDomain. Because memory within an AppDomain is common across all threads in that AppDomain, it is very easy for multiple threads to access the same objects or variables within your application.

For example, in our original prime example, the background task needed the min and max values from the main thread, and all the implementations have used a List(Of Integer) to transfer results back to the main thread when the task was complete. These are examples of shared data. Note that we did not do anything special to make the data shared — the variables were shared by default.

When you are writing multithreaded code, the trickiest issue is managing access to shared data within your AppDomain. You do not want, for example, two threads writing to the same piece of memory at the same time. Equally, you do not want a group of threads reading memory that another thread is in the process of changing. This management of memory access is called synchronization. It is properly managing synchronization that makes writing multithreaded code difficult.

When multiple threads want to simultaneously access a common bit of shared data, use synchronization to control things. This is typically done by blocking all but one thread, so only one thread can access the shared data. All other threads are put into a wait state by using a blocking operation of some sort. Once the nonblocked thread is done using the shared data, it releases the block, enabling another thread to resume processing and to use the shared data.

The process of releasing the block is often called an event. When we say "event," we are not talking about a Visual Basic event. Although the naming convention is unfortunate, the principle is the same — something happens and we react to it. In this case, the nonblocked thread causes an event, which releases some other thread so it can access the shared data.

Although blocking can be used to control the execution of threads, it is primarily used to control access to resources, including memory. This is the basic idea behind synchronization — if you need something, you block until you can access it.

Synchronization is expensive and can be complex. It is expensive because it stops one or more threads from running while another thread uses the shared data. The whole point of having multiple threads is to do more than one thing at a time, and if you are constantly blocking all but one thread, then you lose this benefit.

It can be complex because there are many ways to implement synchronization. Each technique is appropriate for a certain class of synchronization problem, and using the wrong one in the wrong place increases the cost of synchronization.

It is also quite possible to create deadlocks, whereby two or more threads end up permanently blocked. You have undoubtedly seen examples of this. Pretty much anytime a Windows application totally locks up and must be stopped by the Task Manager, you are seeing an example of poor multithreading implementation. The fact that this happens even in otherwise high-quality commercial applications (such as Microsoft Outlook) is confirmation that synchronization can be very hard to get right.

Because synchronization has so many downsides in terms of performance and complexity, the best thing you can do is avoid or minimize its use. If at all possible, design your multithreaded applications to avoid reliance on shared data, and to maintain tight control over the use of any shared data that is required.

Typically, some shared data is unavoidable, so the question becomes how to manage that shared data to avoid or minimize synchronization. Two primary schemes are used for this purpose.

The first approach is to avoid sharing of data by always passing copies of the data between threads. If you also ensure that no two threads ever use the same copy, then each thread has its own copy of the data, and no thread needs access to data being used by any other thread.

This is exactly what you did in the prime example where you started the background task via a delegate:

Dim worker As New TaskDelegate(AddressOf FindPrimesViaDelegate)

worker.BeginInvoke(1, 10000, AddressOf TaskComplete, Nothing)

The min and max values are passed as ByVal parameters, meaning they are copied and provided to the FindPrimesViaDelegate method. No synchronization is required here because the background thread never tries to access the values from the main thread. We passed copies of the values a different way when we manually started the task in the thread pool:

System.Threading.ThreadPool.QueueUserWorkItem( _

AddressOf FindPrimesInPool, New params(1, 10000))

In this case, we created a params object into which we put the min and max values. Again, those values were copied before they were used by the background thread. The FindPrimesInPool method never attempted to access any parameter data being used by the main thread.

What we have done so far works great for variables that are value types, such as Integer, and immutable objects, such as String. It will not work for mutable reference types, such as a regular object. Passing a reference type ByVal copies only the reference, so both threads still point at the same object.
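A short console sketch makes the point concrete. The worker below receives a List(Of Integer) through a ByVal Object parameter and adds to it. The caller sees the change because only the reference was copied, not the list. The names are illustrative.

```vb
Option Strict On

Imports System
Imports System.Collections.Generic
Imports System.Threading

Module ByValReferenceSketch
    ' ByVal gives this method its own copy of the reference,
    ' but that copy still points at the caller's List.
    Private Sub Worker(ByVal state As Object)
        Dim data As List(Of Integer) = DirectCast(state, List(Of Integer))
        data.Add(42)
    End Sub

    Public Function CallerSeesWorkerChange() As Boolean
        Dim items As New List(Of Integer)
        Dim t As New Thread(AddressOf Worker)
        t.Start(items)      ' the new thread receives a copy of the reference
        t.Join()
        Return items.Contains(42)
    End Function

    Sub Main()
        Console.WriteLine(CallerSeesWorkerChange())   ' True: one object, two threads
    End Sub
End Module
```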

To use reference types, we need to change our approach. Rather than return a copy of the data, we will return a reference to the object containing the data. Then we ensure that the background task stops using that object, and starts using a new object. As long as different threads are not simultaneously using the same objects, there's no conflict.

You can enhance the prime application to provide the prime numbers to the UI thread as it finds them, rather than in a batch at the end of the process. To see how this works, we will alter the original code based on the BackgroundWorker control. That is the easiest, and typically the best, way to start a background task, so we will use it as a base implementation.

The first thing to do is alter the DoWork method so it periodically returns results. Rather than use the shared mResults variable, we'll use a local List(Of Integer) variable to store the results. Each time we have enough results to report, we'll return that List(Of Integer) to the UI thread, and create a new List(Of Integer) for the next batch of values. This way, we are never sharing the same object between two threads. In the updated method, the old mResults lines are left commented out:

Private Sub BackgroundWorker1_DoWork(ByVal sender As Object, _

ByVal e As System.ComponentModel.DoWorkEventArgs) _

Handles BackgroundWorker1.DoWork

'mResults.Clear()

Dim results As New List(Of Integer)

For count As Integer = mMin To mMax Step 2

Dim isPrime As Boolean = True

For x As Integer = 1 To CInt(count / 2)

For y As Integer = 1 To x

If x * y = count Then

' the number is not prime

isPrime = False

Exit For

End If

Next

' short-circuit the check

If Not isPrime Then Exit For

Next

If isPrime Then

'mResults.Add(count)

results.Add(count)

If results.Count >= 10 Then

BackgroundWorker1.ReportProgress( _

CInt((count - mMin) / (mMax - mMin) * 100), results)

results = New List(Of Integer)

End If

End If

BackgroundWorker1.ReportProgress( _

CInt((count - mMin) / (mMax - mMin) * 100))

If BackgroundWorker1.CancellationPending Then

Exit Sub

End If

Next

BackgroundWorker1.ReportProgress(100, results)

End Sub

The results are now placed into a local List(Of Integer). Anytime the list has 10 values, we return it to the primary thread by calling the BackgroundWorker control's ReportProgress method, passing the List(Of Integer) as a parameter.

The important thing here is to then immediately create a new List(Of Integer) for use in the DoWork method. This ensures that the background thread is never trying to interact with the same List(Of Integer) object as the UI thread.

Now that the DoWork method is returning results, alter the code on the primary thread to use those results:

Private Sub BackgroundWorker1_ProgressChanged( _

ByVal sender As Object, _

ByVal e As System.ComponentModel.ProgressChangedEventArgs) _

Handles BackgroundWorker1.ProgressChanged

ProgressBar1.Value = e.ProgressPercentage

If e.UserState IsNot Nothing Then

For Each item As Integer In CType(e.UserState, List(Of Integer))

ListBox1.Items.Add(item)

Next

End If

End Sub

Anytime the ProgressChanged event is raised, the code checks to see whether the background task provided a state object. If it did, then you cast it to a List(Of Integer) and update the UI to display the values in the object.

At this point, you no longer need the RunWorkerCompleted method, so it can be removed or commented out. If you run the code at this point, not only is the UI continually responsive, but the results from the background task are displayed as they are discovered, rather than in a batch at the end of the process. As you run the application, resize and move the form while the prime numbers are being found. Although the displaying of the data may be slowed down as you interact with the form (because the UI thread can only do so much work), the generation of the data continues independently in the background and is not blocked by the UI thread's work.

When you rely on transferring data ownership, you ensure that only one thread can access the data at any given time by ensuring that the background task never uses an object once it returns it to the primary thread.

So far, you have seen ways to avoid the sharing of data, but sometimes you'll have a requirement for data sharing, in which case you'll be faced with the complex world of synchronization.

As discussed earlier, incorrect implementation of synchronization can cause performance issues, deadlocks, and application crashes. Success is dependent on serious attention to detail. Problems may not manifest in testing, but when they happen in production, they are often catastrophic. You cannot test to ensure proper implementation; you must prove it in the same way mathematicians prove mathematical truths — by careful logical analysis of all possibilities.

Some objects in the .NET Framework have built-in support for synchronization, so you don't need to write it yourself. In particular, most of the nongeneric collection classes in System.Collections, including Queue, Stack, Hashtable, and ArrayList, offer optional synchronization through a Shared Synchronized method. The generic collections, such as List(Of T), do not.

Rather than transfer ownership of List(Of Integer) objects from the background thread to the UI thread as shown in the last example, you can use the synchronization provided by the ArrayList object to help mediate between the two threads.

To use a synchronized ArrayList, you need to change from the List(Of Integer) to an ArrayList. Additionally, the ArrayList must be created in a special way:

Private Sub BackgroundWorker1_DoWork(ByVal sender As Object, _

ByVal e As System.ComponentModel.DoWorkEventArgs) _

Handles BackgroundWorker1.DoWork

'mResults.Clear()

'Dim results As New List(Of Integer)

Dim results As ArrayList = ArrayList.Synchronized(New ArrayList)

What you are doing here is creating a normal ArrayList, and then having the ArrayList class "wrap" it with a synchronized wrapper. The result is a thread-safe ArrayList object that automatically prevents multiple threads from interacting with the data in invalid ways.
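To see the wrapper in action outside the form, this console sketch has several threads call Add on one synchronized ArrayList at the same time. The wrapper protects each individual Add, so no additions are lost. Compound operations, such as checking Count and then adding, still need a SyncLock. The names are illustrative.

```vb
Option Strict On

Imports System
Imports System.Collections
Imports System.Threading

Module SynchronizedArrayListSketch
    ' A normal ArrayList wrapped in a thread-safe wrapper.
    Private ReadOnly mResults As ArrayList = ArrayList.Synchronized(New ArrayList())

    Private Sub AddNumbers(ByVal state As Object)
        Dim start As Integer = CInt(state)
        For value As Integer = start To start + 999
            mResults.Add(value)     ' each call is individually thread safe
        Next
    End Sub

    Public Function RunWriters(ByVal writerCount As Integer) As Integer
        mResults.Clear()
        Dim writers(writerCount - 1) As Thread
        For i As Integer = 0 To writerCount - 1
            writers(i) = New Thread(AddressOf AddNumbers)
            writers(i).Start(i * 1000)
        Next
        For Each t As Thread In writers
            t.Join()
        Next
        Return mResults.Count       ' every Add from every thread is present
    End Function

    Sub Main()
        Console.WriteLine("Synchronized: {0}", mResults.IsSynchronized)
        Console.WriteLine("Count: {0}", RunWriters(4))
    End Sub
End Module
```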

Now that the ArrayList is synchronized, you don't need to create a new one each time you return the values to the primary thread. Comment out the following line in the DoWork method:

If results.Count >= 10 Then

BackgroundWorker1.ReportProgress( _

CInt((count - mMin) / (mMax - mMin) * 100), results)

'results = New List(Of Integer)

End If

Finally, update the code on the primary thread to properly display the data from the ArrayList:

Private Sub BackgroundWorker1_ProgressChanged( _

ByVal sender As Object, _

ByVal e As System.ComponentModel.ProgressChangedEventArgs) _

Handles BackgroundWorker1.ProgressChanged

ProgressBar1.Value = e.ProgressPercentage

If e.UserState IsNot Nothing Then

Dim result As ArrayList = CType(e.UserState, ArrayList)

For index As Integer = ListBox1.Items.Count To result.Count - 1

ListBox1.Items.Add(result(index))

Next

End If

End Sub

Because the entire list is accessible at all times, you need only copy the new values to the ListBox, rather than loop through the entire list. This works out well anyway, because the For Each statement isn't thread safe even with a synchronized collection. To use the For Each statement, you would need to enclose the entire loop inside a SyncLock block:

Dim result As ArrayList = CType(e.UserState, ArrayList)

SyncLock result.SyncRoot

For Each item As Integer In result

ListBox1.Items.Add(item)

Next

End SyncLock

The SyncLock statement in Visual Basic is used to provide an exclusive lock on an object. Here it is being used to get an exclusive lock on the ArrayList object's SyncRoot. This means all the code within the SyncLock block can be sure that it is the only code interacting with the contents of the ArrayList. No other threads can access the data while your code is in this block.

While many collection objects optionally provide support for synchronization, most objects in the .NET Framework or in third-party libraries are not thread safe. To safely share these objects and classes in a multithreaded environment, you must manually implement synchronization.

To manually implement synchronization, you must rely on help from the Windows operating system. The .NET Framework includes classes that wrap the underlying Windows operating system concepts, so you don't need to call Windows directly. Instead, you use the .NET Framework synchronization objects.

Synchronization objects have their own special terminology. Most of these objects can be acquired and released. In other cases, you wait on an object until it is signaled.

For objects that can be acquired, the idea is that when you have the object, you have a lock. Any other threads trying to acquire the object are blocked until you release the object. These types of synchronization objects are sort of like a hot potato — only one thread has it at any given time and other threads are waiting for it. No thread should hold onto such an object any longer than necessary, as that slows down the whole system.

The other class comprises objects you wait on, which means your thread is blocked. Some other thread signals the object, which releases you (you become unblocked). Many threads can be waiting on the same object. When it is signaled, the waiting threads are released: all of them for a ManualResetEvent, one at a time for an AutoResetEvent. This is basically the exact opposite of an acquire/release type object. The following table lists the primary synchronization objects in the .NET Framework:

| Object | Model | Description |
|---|---|---|
| AutoResetEvent | Wait/Signal | Allows a thread to release other threads that are waiting on the object; releases one waiting thread, then automatically resets |
| Interlocked | N/A | Allows multiple threads to safely increment and decrement values that are stored in variables accessible to all the threads |
| ManualResetEvent | Wait/Signal | Allows a thread to release other threads that are waiting on the object; releases all waiting threads and stays signaled until reset |
| Monitor | Acquire/Release | Defines an exclusive application-level lock whereby only one thread can hold the lock at any given time |
| Mutex | Acquire/Release | Defines an exclusive systemwide lock whereby only one thread can hold the lock at any given time |
| ReaderWriterLock | Acquire/Release | Defines a lock whereby many threads can read data, but only a single writer is allowed |
| ReaderWriterLockSlim | Acquire/Release | Defines a lock whereby many threads can read data, but exclusive access is provided to one thread for writing data. This is a new object in the .NET Framework 3.5. |
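Two of the simpler objects in the table can be sketched together. In this console module, worker threads block on a ManualResetEvent until the main thread signals it. All of them then update a shared counter through Interlocked.Increment, which performs the read-modify-write as one atomic step. The names are illustrative.

```vb
Option Strict On

Imports System
Imports System.Threading

Module SignalAndInterlockedSketch
    ' Wait/Signal: once Set, a ManualResetEvent stays signaled, so every
    ' waiting thread is released (an AutoResetEvent would release just one).
    Private ReadOnly mStartSignal As New ManualResetEvent(False)

    ' Shared counter, updated only through Interlocked.
    Private mCounter As Integer

    Private Sub Worker()
        mStartSignal.WaitOne()                  ' blocked until signaled
        For i As Integer = 1 To 10000
            Interlocked.Increment(mCounter)     ' atomic; mCounter += 1 is not
        Next
    End Sub

    Public Function RunWorkers(ByVal workerCount As Integer) As Integer
        mStartSignal.Reset()
        mCounter = 0
        Dim workers(workerCount - 1) As Thread
        For i As Integer = 0 To workerCount - 1
            workers(i) = New Thread(AddressOf Worker)
            workers(i).Start()
        Next
        mStartSignal.Set()                      ' release all waiting workers
        For Each t As Thread In workers
            t.Join()
        Next
        Return mCounter
    End Function

    Sub Main()
        Console.WriteLine(RunWorkers(4))        ' 40000
    End Sub
End Module
```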

Perhaps the easiest type of synchronization to understand and implement is an exclusive lock. When one thread holds an exclusive lock, no other thread can obtain that lock. Any other thread attempting to obtain the lock is blocked until the lock becomes available.

There are two primary technologies for exclusive locking: the monitor and mutex objects. The monitor object allows a thread in a process to block other threads in the same process. The mutex object allows a thread in any process to block threads in the same process or in other processes. Because a mutex has systemwide scope, it is a more expensive object to use and should only be used when cross-process locking is required.
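Here is a minimal sketch of cross-process locking. The module opens a named Mutex, waits for it with a timeout, and releases it in a Finally block. The mutex name is a hypothetical example: any process that opens a Mutex with the same name shares the same lock. The timeout is a design choice that lets the caller give up instead of blocking forever.

```vb
Option Strict On

Imports System
Imports System.Threading

Module MutexSketch
    ' Hypothetical name: every process using this string shares one lock.
    Private Const MutexName As String = "PrimeFinderSample.SharedResource"

    Public Function DoExclusiveWork() As Boolean
        Dim acquired As Boolean
        Using m As New Mutex(False, MutexName)     ' False: don't take ownership yet
            ' Wait up to five seconds instead of blocking forever.
            acquired = m.WaitOne(TimeSpan.FromSeconds(5))
            If acquired Then
                Try
                    ' ... work with the resource shared across processes ...
                Finally
                    m.ReleaseMutex()                ' always release what you acquired
                End Try
            End If
        End Using
        Return acquired
    End Function

    Sub Main()
        Console.WriteLine(DoExclusiveWork())
    End Sub
End Module
```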

Visual Basic includes the SyncLock statement, which is a shortcut to access a monitor object. While it is possible to directly create and use a System.Threading.Monitor object, it is far simpler to just use the SyncLock statement (briefly mentioned in the ArrayList object discussion), so that is what we will do here.

Exclusive locks can be used to protect shared data so that only one thread at a time can access the data. They can also be used to ensure that only one thread at a time can run a specific bit of code. This exclusive bit of code is called a critical section. While critical sections are an important concept in computer science, it is far more common to use exclusive locks to protect shared data, and that is what this chapter focuses on.
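The following console sketch protects a shared List(Of Integer) with SyncLock on a private lock object. Inside the lock, the Add call and the Count check behave as one atomic step. As a result, the batch counter is incremented exactly once for every 100 items, even though several threads add at the same time. The names are illustrative.

```vb
Option Strict On

Imports System
Imports System.Collections.Generic
Imports System.Threading

Module SyncLockSketch
    ' Private lock object, so no code outside this module can lock on it.
    Private ReadOnly mLock As New Object()
    Private ReadOnly mResults As New List(Of Integer)
    Private mBatches As Integer

    Private Sub Producer(ByVal state As Object)
        Dim start As Integer = CInt(state)
        For value As Integer = start To start + 999
            SyncLock mLock
                ' No other thread can add between the Add and the Count check.
                mResults.Add(value)
                If mResults.Count Mod 100 = 0 Then mBatches += 1
            End SyncLock
        Next
    End Sub

    Public Function RunProducers(ByVal producerCount As Integer) As Integer
        SyncLock mLock
            mResults.Clear()
            mBatches = 0
        End SyncLock

        Dim producers(producerCount - 1) As Thread
        For i As Integer = 0 To producerCount - 1
            producers(i) = New Thread(AddressOf Producer)
            producers(i).Start(i * 1000)
        Next
        For Each t As Thread In producers
            t.Join()
        Next

        Dim batches As Integer
        SyncLock mLock
            batches = mBatches
        End SyncLock
        Return batches
    End Function

    Sub Main()
        Console.WriteLine(RunProducers(4))   ' 40: 4,000 items in batches of 100
    End Sub
End Module
```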

You can use an exclusive lock to lock virtually any shared data. For example, you can change your code to use the SyncLock statement instead of a synchronized ArrayList. To do so, change the declaration of the ArrayList in the DoWork method so it is global to the form and no longer synchronized:

Private results As New ArrayList

This means you are responsible for managing all synchronization yourself. First, in the DoWork method, protect all access to the results variable:

If isPrime Then

Dim numberOfResults As Integer

SyncLock results.SyncRoot

results.Add(count)

numberOfResults = results.Count

End SyncLock

If numberOfResults >= 10 Then

BackgroundWorker1.ReportProgress( _

CInt((count - mMin) / (mMax - mMin) * 100), results)

End If

End If

Notice how the code has changed so both the Add and Count method calls are contained within a SyncLock block. This ensures that no other thread can be interacting with the ArrayList while you make these calls. The SyncLock statement acts against an object, in this case results.SyncRoot.

The trick to making this work is to ensure that all code throughout the application wraps any access to results within a SyncLock block on the same object. If any code doesn't follow this protocol, then there will be conflicts between threads!

Because SyncLock acts against a specific object, you can have many active SyncLock statements, each working against a different object:

SyncLock obj1

' blocks against obj1

End SyncLock

SyncLock obj2

' blocks against obj2

End SyncLock

Note that neither obj1 nor obj2 is altered or affected by this at all. The only thing you are saying here is that while you're within a SyncLock obj1 code block, any other thread attempting to execute a SyncLock obj1 statement will be blocked until you've executed the End SyncLock statement.

Next, change the UI update code in the ProgressChanged method:

ProgressBar1.Value = e.ProgressPercentage

If e.UserState IsNot Nothing Then

Dim result As ArrayList = CType(e.UserState, ArrayList)

SyncLock result.SyncRoot ' lock the same object that DoWork locks

For index As Integer = ListBox1.Items.Count To result.Count - 1

ListBox1.Items.Add(result(index))

Next

End SyncLock

End If

Again, notice how the interaction with the ArrayList is contained within a SyncLock block. While this version of the code will operate just fine, it is very slow. In fact, you can pretty much stall out the whole processing by continually moving or resizing the window while it runs. This is because the UI thread is blocking the background thread via the SyncLock call, and if the UI thread is totally busy moving or resizing the window, then the background thread can be entirely blocked during that time as well.
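One way to keep the UI from starving the background thread is to hold the lock only long enough to copy the new items, and then do the slow work after releasing it. The following console sketch applies that idea with ArrayList.GetRange and ToArray inside SyncLock on SyncRoot. In the form, the loop over each copied batch would add the items to ListBox1. This is an alternative design, not the chapter's code, and the names are illustrative.

```vb
Option Strict On

Imports System
Imports System.Collections
Imports System.Threading

Module SnapshotSketch
    Private ReadOnly mResults As New ArrayList()

    ' Background producer: every access goes through SyncLock on SyncRoot.
    Private Sub Producer()
        For value As Integer = 1 To 5000
            SyncLock mResults.SyncRoot
                mResults.Add(value)
            End SyncLock
        Next
    End Sub

    ' Holds the lock only long enough to copy the items not yet shown.
    Public Function TakeNewItems(ByVal alreadyShown As Integer) As Object()
        Dim snapshot As Object()
        SyncLock mResults.SyncRoot
            snapshot = mResults.GetRange(alreadyShown, mResults.Count - alreadyShown).ToArray()
        End SyncLock
        Return snapshot
    End Function

    ' Stands in for ProgressChanged: copy under the lock, display outside it.
    Public Function ShowAll() As Integer
        SyncLock mResults.SyncRoot
            mResults.Clear()
        End SyncLock

        Dim producerThread As New Thread(AddressOf Producer)
        producerThread.Start()

        Dim shown As Integer = 0
        Dim finished As Boolean
        Do
            finished = producerThread.Join(0)     ' True once the producer has exited
            Dim batch As Object() = TakeNewItems(shown)
            ' Slow display work for each item in batch would go here.
            shown += batch.Length
            Thread.Sleep(1)
        Loop Until finished
        Return shown
    End Function

    Sub Main()
        Console.WriteLine(ShowAll())
    End Sub
End Module
```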

While exclusive locks are an easy way to protect shared data, they are not always the most efficient. Your application will often contain some code that is updating shared data, and other code that is only reading from shared data. Some applications do a great deal of data reading and only periodic data changes.

Because reading data does not change anything, there is nothing wrong with having multiple threads read data at the same time, as long as you can ensure that no threads are updating data while you are trying to read. In addition, you typically only want one thread updating at a time.

What you have then is a scenario in which you want to allow many concurrent readers, but if the data is to be changed, then one thread must temporarily gain exclusive access to the shared memory. This is the purpose behind the ReaderWriterLock and ReaderWriterLockSlim objects.

Using a ReaderWriterLock, you can request either a read lock or a write lock. If you obtain a read lock, you can safely read the data. Other threads can simultaneously obtain read locks and safely read the data.

Before you can update data, you must obtain a write lock. When you request a write lock, any other threads requesting either a read or write lock are blocked. If any outstanding read or write locks are in progress, then you will be blocked until they are released. When there are no outstanding locks (read or write), you will be granted the write lock. No other locks are granted until you release the write lock, so your write lock is an exclusive lock.
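These rules can be observed in a small console sketch (the module and member names are hypothetical). The main thread holds a reader lock while a second thread asks, with a 100-millisecond timeout, first for a reader lock and then for a writer lock. ReaderWriterLock reports an expired timeout by throwing an ApplicationException, which the probe treats as "not granted." The second reader is expected to be granted; the writer is expected to time out.

```vb
Imports System.Threading

Module ReaderWriterLockDemo

    Private rwLock As New ReaderWriterLock()
    Private probeWantsWriter As Boolean
    Private probeGranted As Boolean

    ' Runs on a second thread and records whether its request was granted
    Private Sub Probe()
        Try
            If probeWantsWriter Then
                rwLock.AcquireWriterLock(100)
                rwLock.ReleaseWriterLock()
            Else
                rwLock.AcquireReaderLock(100)
                rwLock.ReleaseReaderLock()
            End If
            probeGranted = True
        Catch ex As ApplicationException
            ' AcquireReaderLock/AcquireWriterLock throw this when the timeout expires
            probeGranted = False
        End Try
    End Sub

    ' Holds a reader lock on this thread while another thread makes a request
    Public Function GrantedWhileReading(ByVal wantWriter As Boolean) As Boolean
        rwLock.AcquireReaderLock(Timeout.Infinite)
        Try
            probeWantsWriter = wantWriter
            Dim t As New Thread(AddressOf Probe)
            t.Start()
            t.Join()
            Return probeGranted
        Finally
            rwLock.ReleaseReaderLock()
        End Try
    End Function

    Sub Main()
        Console.WriteLine("Second reader granted: " & GrantedWhileReading(False).ToString())
        Console.WriteLine("Writer granted: " & GrantedWhileReading(True).ToString())
    End Sub

End Module
```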

After you release the write lock, any pending requests for other locks are granted, allowing either another single writer to access the data or multiple readers to access it simultaneously. You can adapt the sample code to use a System.Threading.ReaderWriterLock object. Start with the code you just created based on the SyncLock statement, which uses an ArrayList as the shared data. First, create an instance of the ReaderWriterLock in a form-wide variable:

    ' lock object
    Private mRWLock As New System.Threading.ReaderWriterLock()

Because a ReaderWriterLock is just an object, you can have many lock objects in an application if needed. You could use each lock object to protect different bits of shared data. Then you can change the DoWork method to make use of this object instead of the SyncLock statement:

    If isPrime Then
        Dim numberOfResults As Integer

        ' Changing the ArrayList requires the exclusive writer lock
        mRWLock.AcquireWriterLock(100)
        Try
            results.Add(count)
        Finally
            mRWLock.ReleaseWriterLock()
        End Try

        ' Reading the ArrayList requires only a (shared) reader lock
        mRWLock.AcquireReaderLock(100)
        Try
            numberOfResults = results.Count
        Finally
            mRWLock.ReleaseReaderLock()
        End Try

        If numberOfResults >= 10 Then
            BackgroundWorker1.ReportProgress( _
                CInt((count - mMin) / (mMax - mMin) * 100), results)
        End If
    End If

Before you write or alter the data in the ArrayList, you need to acquire a writer lock. Before reading any data from the ArrayList, you need to acquire a reader lock.

If any thread holds a reader lock, then attempts to get a writer lock are blocked. When any thread requests a writer lock, any other requests for a reader lock are blocked until after that thread gets (and releases) its writer lock. In addition, if any thread has a writer lock, then other threads requesting a reader (or writer) lock are blocked until that writer lock is released.

The result is that there can be only one writer, and while the writer is active, there are no readers. However, if no writer is active, then there can be many concurrent reader threads running at the same time.

Note that all work done while a lock is held is contained within a Try..Finally block. This ensures that the lock is released regardless of any exceptions you might encounter.

Note

It is critical to always release locks you are holding. Failure to do so may cause your application to become unstable and crash or lock up unexpectedly.

Failure to release a lock will almost certainly block other threads, possibly forever, which effectively deadlocks the application. The alternative is that the other threads request a lock with a timeout, the timeout expires, and the resulting exception causes the application to fail. Either way, when you do not release your locks, you cause application failure.
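The following sketch shows why the release belongs in a Finally block. A hypothetical FailingUpdate method acquires the writer lock and then throws; because ReleaseWriterLock sits in the Finally block, the lock is no longer held by the time the exception reaches the caller. All names here are illustrative only.

```vb
Imports System.Threading

Module ReleaseInFinallyDemo

    Private mRWLock As New ReaderWriterLock()

    ' Simulates an update that fails partway through its work
    Private Sub FailingUpdate()
        mRWLock.AcquireWriterLock(100)
        Try
            Throw New InvalidOperationException("simulated failure")
        Finally
            ' Runs even though the Try block threw
            mRWLock.ReleaseWriterLock()
        End Try
    End Sub

    ' Returns True if this thread no longer holds the writer lock after the failure
    Public Function LockReleasedAfterFailure() As Boolean
        Try
            FailingUpdate()
        Catch ex As InvalidOperationException
            Console.WriteLine("Caught: " & ex.Message)
        End Try
        Return Not mRWLock.IsWriterLockHeld
    End Function

    Sub Main()
        Console.WriteLine("Writer lock released: " & LockReleasedAfterFailure().ToString())
    End Sub

End Module
```

Had the release been placed after the Throw inside the Try block instead, it would never run, and every later writer would wait until its timeout expired.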

Now update the code in the ProgressChanged method:

    ProgressBar1.Value = e.ProgressPercentage
    If e.UserState IsNot Nothing Then
        Dim result As ArrayList = CType(e.UserState, ArrayList)
        ' Reading only, so a shared reader lock is enough
        mRWLock.AcquireReaderLock(100)
        Try
            For index As Integer = ListBox1.Items.Count To result.Count - 1
                ListBox1.Items.Add(result(index))
            Next
        Finally
            mRWLock.ReleaseReaderLock()
        End Try
    End If

Again, before reading from results, you get a reader lock, releasing it in a Finally block once you're done. This code runs a bit more smoothly than the previous implementation, but the UI thread can still be kept busy resizing or moving the window. If it holds the reader lock during that time, the background thread cannot acquire its writer lock and is prevented from running; with the 100-millisecond timeout used here, AcquireWriterLock throws an ApplicationException rather than waiting indefinitely.

ReaderWriterLockSlim, a brand-new lock available in version 3.5 of the .NET Framework, was introduced to allow for upgradeable reads. The previous ReaderWriterLock has some issues, such as a poorly designed, non-atomic upgrade method, and it was also considered to have rather poor performance. In addition, ReaderWriterLockSlim gives precedence to requests for write mode over requests for read or upgradeable read mode. The reasoning is that write locks are assumed to occur less frequently, so this precedence structure allows for better overall performance.
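Writer precedence can be observed with a sketch like the one below (all names are hypothetical). The main thread holds a read lock; a writer thread then blocks in EnterWriteLock, and the main thread polls the WaitingWriteCount property until the writer is queued. A third thread then calls TryEnterReadLock with a 100-millisecond timeout. Because a writer is waiting, the late reader is expected to time out even though only a reader currently holds the lock.

```vb
Imports System.Threading

Module WriterPreferenceDemo

    Private rwl As New ReaderWriterLockSlim()
    Private lateReaderAdmitted As Boolean

    ' Writer: blocks in EnterWriteLock until the main thread's read lock is released
    Private Sub Writer()
        rwl.EnterWriteLock()
        rwl.ExitWriteLock()
    End Sub

    ' Late reader: tries to join the existing reader while a writer is waiting
    Private Sub LateReader()
        lateReaderAdmitted = rwl.TryEnterReadLock(100)
        If lateReaderAdmitted Then rwl.ExitReadLock()
    End Sub

    Public Function ReaderAdmittedWhileWriterWaits() As Boolean
        Dim writerThread As New Thread(AddressOf Writer)
        rwl.EnterReadLock()
        Try
            writerThread.Start()
            ' Poll until the writer is actually queued on the lock
            While rwl.WaitingWriteCount = 0
                Thread.Sleep(10)
            End While
            Dim readerThread As New Thread(AddressOf LateReader)
            readerThread.Start()
            readerThread.Join()
        Finally
            rwl.ExitReadLock()   ' lets the waiting writer in
        End Try
        writerThread.Join()
        Return lateReaderAdmitted
    End Function

    Sub Main()
        Console.WriteLine("Late reader admitted: " & _
            ReaderAdmittedWhileWriterWaits().ToString())
    End Sub

End Module
```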

Microsoft was unable to fix the ReaderWriterLock in the previous .NET Framework and thus introduced a brand-new lock. The new ReaderWriterLockSlim supports the methods shown in the following table:

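| Method | Description |
|---|---|
| Dispose | Releases all the resources held by the object |
| EnterReadLock | Acquires a read lock, blocking until it is available |
| EnterUpgradeableReadLock | Acquires a lock in upgradeable read mode, blocking until it is available |
| EnterWriteLock | Acquires a write lock, blocking until it is available |
| ExitReadLock | Exits the read lock |
| ExitUpgradeableReadLock | Exits the upgradeable read lock |
| ExitWriteLock | Exits the write lock |
| TryEnterReadLock | Tries to enter the lock in read mode within a specified timeout; returns True if it succeeds |
| TryEnterUpgradeableReadLock | Tries to enter the lock in upgradeable read mode within a specified timeout; returns True if it succeeds |
| TryEnterWriteLock | Tries to enter the lock in write mode within a specified timeout; returns True if it succeeds |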

As you can see from the list of methods, ReaderWriterLockSlim supports three modes: read, upgradeable read, and write. The upgradeable read mode enables your code to transition safely from read mode to write mode. This lock supports an atomic upgrade path and won't cause deadlocks like the older ReaderWriterLock. Note that only one thread at a time is allowed in upgradeable read mode, no matter how many threads are in read mode. This is what enables the atomic upgrade path.
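The mode rules can be checked with the following sketch (names are hypothetical). While the main thread is in upgradeable read mode, a second thread is expected to enter read mode successfully but to time out when it asks for upgradeable read mode. The main thread then upgrades to write mode with EnterWriteLock without ever leaving the lock.

```vb
Imports System.Threading

Module UpgradeableModeDemo

    Private rwl As New ReaderWriterLockSlim()
    Private readerAdmitted As Boolean
    Private upgraderAdmitted As Boolean

    ' Runs on a second thread while the main thread is in upgradeable read mode
    Private Sub Probe()
        readerAdmitted = rwl.TryEnterReadLock(100)
        If readerAdmitted Then rwl.ExitReadLock()
        upgraderAdmitted = rwl.TryEnterUpgradeableReadLock(100)
        If upgraderAdmitted Then rwl.ExitUpgradeableReadLock()
    End Sub

    ' Returns {second thread got read mode, second thread got upgradeable mode}
    Public Function ProbeWhileUpgradeable() As Boolean()
        rwl.EnterUpgradeableReadLock()
        Try
            Dim t As New Thread(AddressOf Probe)
            t.Start()
            t.Join()
            ' Upgrade in place: no other writer can slip in between
            rwl.EnterWriteLock()
            rwl.ExitWriteLock()
        Finally
            rwl.ExitUpgradeableReadLock()
        End Try
        Return New Boolean() {readerAdmitted, upgraderAdmitted}
    End Function

    Sub Main()
        Dim r() As Boolean = ProbeWhileUpgradeable()
        Console.WriteLine("Reader admitted: " & r(0).ToString())
        Console.WriteLine("Second upgradeable reader admitted: " & r(1).ToString())
    End Sub

End Module
```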

The following code shows an example of using the new ReaderWriterLockSlim object:

    Imports System.Threading

    Module Module1

        ' One lock shared by all reader and writer threads
        Private rwl As New ReaderWriterLockSlim()

        Sub Main()
            ' Two reader threads
            Dim th1 As New Thread(AddressOf Read)
            th1.Start("1")
            Dim th2 As New Thread(AddressOf Read)
            th2.Start("2")

            ' Three writer threads
            Dim th3 As New Thread(AddressOf Write)
            th3.Start("3")
            Dim th4 As New Thread(AddressOf Write)
            th4.Start("4")
            Dim th5 As New Thread(AddressOf Write)
            th5.Start("5")
        End Sub

        ' ParameterizedThreadStart passes its argument As Object
        Sub Read(ByVal threadID As Object)
            While True
                rwl.EnterReadLock()
                Try
                    Console.WriteLine("Thread " & CStr(threadID) & _
                        " has entered the ReadLock")
                    Thread.Sleep(100)
                Finally
                    rwl.ExitReadLock()
                End Try
                Console.WriteLine("Thread " & CStr(threadID) & _
                    " has exited the ReadLock")
            End While
        End Sub

        Sub Write(ByVal threadID As Object)
            While True
                rwl.EnterUpgradeableReadLock()
                Try
                    Console.WriteLine("Thread " & CStr(threadID) & _
                        " has entered the UpgradeableReadLock")
                    ' Upgrade atomically from upgradeable read mode to write mode
                    rwl.EnterWriteLock()
                    Try
                        Console.WriteLine("Thread " & CStr(threadID) & _
                            " has entered the WriteLock")
                        Console.WriteLine("Thread " & CStr(threadID) & _
                            " has the write lock.")
                    Finally
                        rwl.ExitWriteLock()
                    End Try
                    Console.WriteLine("Thread " & CStr(threadID) & _
                        " has exited the WriteLock")
                Finally
                    rwl.ExitUpgradeableReadLock()
                End Try
                Console.WriteLine("Thread " & CStr(threadID) & _
                    " has exited the UpgradeableReadLock")
                Thread.Sleep(1000)
            End While
        End Sub

    End Module

In this example, the reader threads obtain a read lock very easily. Each writer thread first calls EnterUpgradeableReadLock and blocks until it can enter upgradeable read mode (only one thread is allowed in this mode at any given time). From there, it can enter write mode; when it is done, it must exit not only write mode but also upgradeable read mode.

Both the Monitor (SyncLock) and ReaderWriterLock objects follow the acquire/release model, whereby threads are blocked until they can acquire control of the appropriate lock.

You can flip the paradigm by using the AutoResetEvent and ManualResetEvent objects. With these objects, threads voluntarily wait on the event object. While waiting, they are blocked and do no work. When another thread signals (raises) the event, waiting threads are released and do work: an AutoResetEvent releases a single waiting thread, while a ManualResetEvent releases all of them.

You can signal an event object by calling the object's Set method. To wait on an event object, a thread calls that object's WaitOne method. This method blocks the thread until the event object is signaled (the event is raised).

Event objects can be in one of two states: signaled or not signaled. When an event object is signaled, threads waiting on the object are released. If a thread calls WaitOne on an event object that is signaled, then the thread isn't blocked and continues running. However, if a thread calls WaitOne on an event object that is not signaled, then the thread is blocked until some other thread calls that object's Set method, thus signaling the event.

AutoResetEvent objects automatically reset themselves to the not signaled state as soon as a single waiting thread is released. In other words, if an AutoResetEvent is not signaled and a thread calls WaitOne, then that thread will be blocked. Another thread can then call the Set method, thus signaling the event. This both releases the waiting thread and immediately resets the AutoResetEvent object to its not signaled state.
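The automatic reset can be seen on a single thread by calling WaitOne with a timeout of 0, which tests the state without blocking. In this sketch (names are hypothetical), the first wait after Set succeeds and consumes the signal, so the second one fails; Main prints False, True, False.

```vb
Imports System.Threading

Module AutoResetDemo

    ' Returns the results of three non-blocking WaitOne(0) calls
    Public Function Observe() As Boolean()
        Using mWait As New AutoResetEvent(False)
            Dim beforeSet As Boolean = mWait.WaitOne(0)    ' not signaled yet
            mWait.Set()                                    ' signal the event
            Dim firstWait As Boolean = mWait.WaitOne(0)    ' released; event resets itself
            Dim secondWait As Boolean = mWait.WaitOne(0)   ' not signaled again
            Return New Boolean() {beforeSet, firstWait, secondWait}
        End Using
    End Function

    Sub Main()
        For Each state As Boolean In Observe()
            Console.WriteLine(state)
        Next
    End Sub

End Module
```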

You can use an AutoResetEvent object to coordinate the use of shared data between threads. Change the ReaderWriterLock declaration to declare an AutoResetEvent instead:

Dim mWait As New System.Threading.AutoResetEvent(False)

By passing False to the constructor, you are telling the event object to start out in its not signaled state. Were you to pass True, it would start out in the signaled state, and the first thread to call WaitOne would not be blocked, but would trigger the event object to automatically reset its state to not signaled.

Next, you can update DoWork to use the event object. In order to ensure that both the primary and background threads do not simultaneously access the ArrayList object, use the AutoResetEvent object to block the background thread until the UI thread is done with the ArrayList:

    If isPrime Then
        Dim numberOfResults As Integer
        results.Add(count)
        numberOfResults = results.Count
        If numberOfResults >= 10 Then
            BackgroundWorker1.ReportProgress( _
                CInt((count - mMin) / (mMax - mMin) * 100), results)
            ' Block until the UI thread is done with results and calls mWait.Set()
            mWait.WaitOne()
        End If
    End If

This code is much simpler than using the ReaderWriterLock. In this case, the background thread assumes it has exclusive access to the ArrayList until it calls the ReportProgress method to have the primary thread update the UI. At that point, the background thread calls the WaitOne method, so it is blocked until released by the primary thread.

In the UI update code, change the code to release the background thread:

    ProgressBar1.Value = e.ProgressPercentage
    If e.UserState IsNot Nothing Then
        Dim result As ArrayList = CType(e.UserState, ArrayList)
        For index As Integer = ListBox1.Items.Count To result.Count - 1
            ListBox1.Items.Add(result(index))
        Next
        ' Done with the ArrayList: release the background thread
        mWait.Set()
    End If

This is done by calling the Set method on the AutoResetEvent object, thus setting it to its signaled state. This releases the background thread so it can continue to work. Notice that the Set method isn't called until after the primary thread is completely done working with the ArrayList object.
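The same handshake can be reproduced outside Windows Forms. In this console sketch, an extra AutoResetEvent named batchReady stands in for ReportProgress (an assumption of the sketch, since there is no BackgroundWorker here), and the other names are likewise hypothetical. The worker adds a batch of 10 numbers, signals batchReady, and blocks in mWait.WaitOne(); the consumer copies the new items and then calls mWait.Set(). With 5 batches, the consumer ends up with 50 items, and the two threads never touch the ArrayList at the same time.

```vb
Imports System.Collections
Imports System.Threading

Module HandshakeDemo

    Private results As New ArrayList()
    ' Stand-in for ReportProgress: the worker signals that a batch is ready
    Private batchReady As New AutoResetEvent(False)
    ' As in the chapter: the worker waits here until the consumer is done
    Private mWait As New AutoResetEvent(False)
    Private Const BatchSize As Integer = 10
    Private Const Batches As Integer = 5

    Private Sub Worker()
        For n As Integer = 1 To BatchSize * Batches
            results.Add(n)             ' exclusive access: the consumer is waiting
            If n Mod BatchSize = 0 Then
                batchReady.Set()       ' hand the list to the consumer
                mWait.WaitOne()        ' block until the consumer calls Set
            End If
        Next
    End Sub

    ' Plays the role of the UI thread; returns the number of items copied
    Public Function Consume() As Integer
        results.Clear()
        Dim copied As New ArrayList()
        Dim t As New Thread(AddressOf Worker)
        t.Start()
        For b As Integer = 1 To Batches
            batchReady.WaitOne()
            For index As Integer = copied.Count To results.Count - 1
                copied.Add(results(index))
            Next
            mWait.Set()                ' release the worker
        Next
        t.Join()
        Return copied.Count
    End Function

    Sub Main()
        Console.WriteLine("Items copied: " & Consume().ToString())
    End Sub

End Module
```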

As with the previous examples, if you continually move or resize the form, the UI thread becomes so busy that it does not get around to calling Set, and the background thread remains blocked in WaitOne.

A ManualResetEvent object is very similar to the AutoResetEvent just used. The difference is that with a ManualResetEvent object, you are in total control over whether the event object is set to its signaled or not signaled state. The state of the event object is never altered automatically.

This means you must call the Reset method yourself rather than rely on it happening automatically. The result is that you have more control over the process and can potentially gain some efficiency.

To see how this works, change the declaration to create a ManualResetEvent:

    ' wait object
    Dim mWait As New System.Threading.ManualResetEvent(True)

Notice that you're constructing it with a True parameter. This means that the object will initially be in its signaled state. Until it is reset to a nonsignaled state, WaitOne calls won't block on this object.
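By contrast with the AutoResetEvent, a ManualResetEvent stays in whatever state you put it in. This sketch (names are hypothetical) constructs the event with True and probes it with non-blocking WaitOne(0) calls: it stays signaled across repeated waits until Reset is called, and becomes signaled again only after Set.

```vb
Imports System.Threading

Module ManualResetDemo

    ' Returns the results of four non-blocking WaitOne(0) calls
    Public Function Observe() As Boolean()
        Using mWait As New ManualResetEvent(True)
            Dim first As Boolean = mWait.WaitOne(0)        ' signaled
            Dim second As Boolean = mWait.WaitOne(0)       ' still signaled: no auto-reset
            mWait.Reset()
            Dim afterReset As Boolean = mWait.WaitOne(0)   ' not signaled
            mWait.Set()
            Dim afterSet As Boolean = mWait.WaitOne(0)     ' signaled again
            Return New Boolean() {first, second, afterReset, afterSet}
        End Using
    End Function

    Sub Main()
        For Each state As Boolean In Observe()
            Console.WriteLine(state)
        Next
    End Sub

End Module
```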

Change the DoWork method as follows:

    If isPrime Then
        mWait.WaitOne()
        Dim numberOfResults As Integer
        results.Add(count)
        numberOfResults = results.Count
        If numberOfResults >= 10 Then
            mWait.Reset()
            BackgroundWorker1.ReportProgress( _
                CInt((count - mMin) / (mMax - mMin) * 100), results)
        End If