Chapter 5 NetWare 6 eDirectory Management

This chapter covers the following testing objectives for Novell Course 3004: Novell Network Management and Novell Course 575: Novell eDirectory Design and Implementation:

Define eDirectory Replication and Synchronization (3004)

Understand eDirectory Partitioning and Replication (575)

Plan, Design, Implement a Partition and Replica Strategy (575)

Identify Basic eDirectory Administrative Procedures (3004)

Determine a WAN Traffic Manager Strategy for Your Tree (575)

Identify eDirectory Recovery Steps (3004)

Extend the eDirectory Schema (3004)

Redirect Resources in the Tree (3004)

Prepare for Upgrading to eDirectory 8.6 (3004)

Use the eDirectory Import/Export Wizard to Manage LDIF Files (3004)

Identify What iMonitor Is and How to Use It (3004)

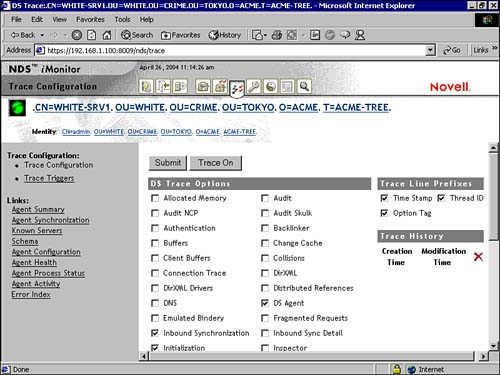

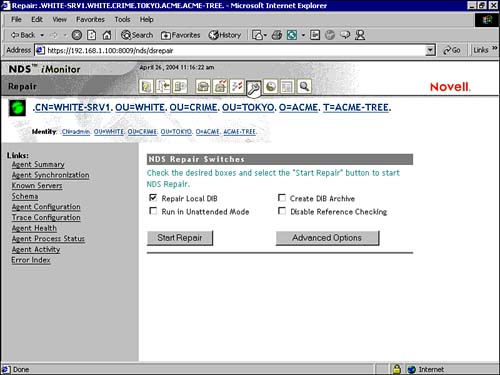

Use iMonitor to Diagnose and Repair eDirectory Problems (3004)

Repair eDirectory Using iMonitor (3004)

Maintain and Optimize eDirectory Using Cache Options (3004)

Design and Implement a Time Synchronization Strategy (3004)

Design and Implement a Time Synchronization Strategy (575)

Welcome back to eDirectory!

Novell’s CNA Study Guide for NetWare 6 introduced you to a beautiful, powerful eDirectory. Now you’re back for more. In this chapter, we’re going to build on your fundamental eDirectory skills and explore advanced eDirectory design and management.

We’ll begin this challenging tour with a review of eDirectory replication and synchronization rules. Then we’ll break the tree into small pieces (partitioning) and scatter them around the WAN (replica placement). Of course, design is only the beginning. After that, we’ll tackle a plethora of eDirectory management, implementation, and maintenance tasks. Finally, we’ll end the tour with a few words on time synchronization design.

In summary, this chapter is all about ACME’s partition boundaries, replica placement, synchronization management, and time design. Here’s what’s in store for us:

“Understanding eDirectory Replication and Synchronization”—The first step in building an eDirectory maintenance plan is understanding partitioning fundamentals. In addition, you must understand how to scatter partitions throughout the WAN using replication. eDirectory replication serves two purposes: fault tolerance and resource accessibility. Finally, you must learn how eDirectory replicas communicate, also known as eDirectory synchronization management.

“Designing eDirectory Partitions”—Next, we’ll tackle ACME design with a brief review of eDirectory partitioning rules. You’ll learn various partition design guidelines and building boundaries for the top and bottom layers of our ACME tree. In the “Designing eDirectory Partitions” section, we’ll discuss seven important guidelines that affect the bottom layers of our partition strategy.

“Placing eDirectory Replicas”—Then we’ll move on to replica design with a brief review of eDirectory’s four different replica types: master, read/write, read-only, and subordinate reference. Next, we’ll discover several reasons for placing ACME’s replicas intelligently, including fault tolerance, local distribution, bindery services, and improved name resolution.

“Managing eDirectory Partitions and Replicas”—With our partitioning design under control, we’ll shift our focus to eDirectory management, implementation, and maintenance. In the fourth lesson, we’ll explore partition and replica management with the following hands-on tasks: adding eDirectory replicas, changing replica types, managing replica synchronization with WAN Traffic Manager, preventative maintenance, troubleshooting, extending the Schema, and redirecting resources in the eDirectory tree.

“Implementing eDirectory 8.6”—Now that you know how to manage eDirectory, it’s time to learn how to implement eDirectory 8.6. In this lesson, I’ll arm you with two valuable action steps: step 1 is eDirectory integration (four tasks to prepare your network for eDirectory 8.6) and step 2 is the eDirectory Import/Export Wizard (create large groups of eDirectory objects from existing LDAP databases).

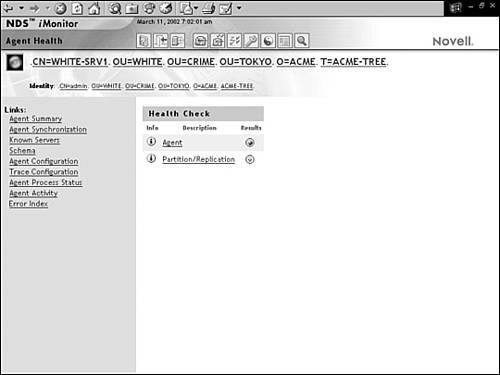

“Maintaining eDirectory with iMonitor”—In the eDirectory maintenance lesson, we’ll use iMonitor to tackle your eDirectory maintenance plan, including eDirectory diagnosis, repair, and optimization.

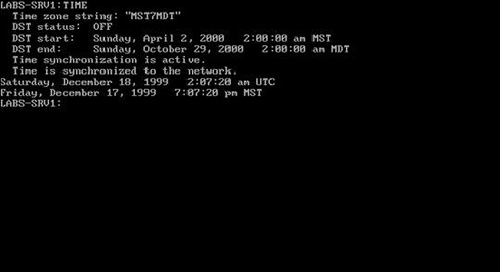

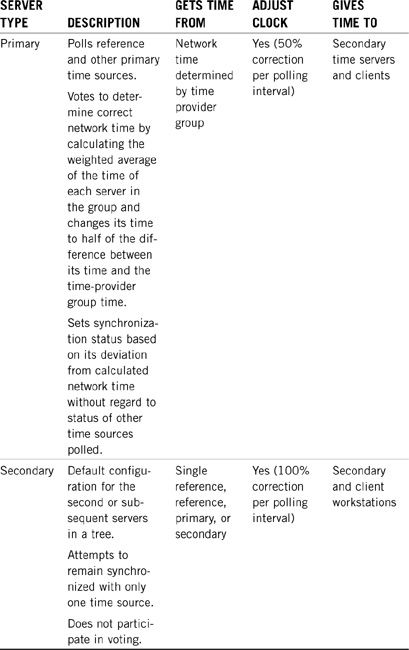

“Designing Time Synchronization”—Finally, we’ll explore the following two time design environments: IPX-only and IP/IPX time synchronization. IPX-only time synchronization relies on four different time server types and the TIMESYNC.NLM utility. On the other hand, IP/IPX mixed networks negotiate time using TCP/IP and the Network Time Protocol (NTP).

So, there you have it—your future as an advanced eDirectory engineer. This is a very busy chapter because advanced eDirectory management is such important business. Today you’re going to learn all about it.

Let’s start at the beginning with an introduction to eDirectory replication and synchronization.

Test Objectives Covered:

Define eDirectory Replication and Synchronization (3004)

Understand eDirectory Partitioning and Replication (575)

NetWare 6’s eDirectory is the world’s leading directory service. It provides a unifying, cross-platform infrastructure for managing, securing, accessing, and developing all major components of your network. In fact, eDirectory scales to the largest network environments, including the Internet. eDirectory is based on the X.500 standard, and it supports LDAP, HTTP, and the Java programming environment.

The following is a brief description of the key network benefits offered by NetWare 6’s eDirectory:

Network administration—eDirectory simplifies network administration by using objects to represent any network resource, including physical devices, such as routers, switches, printers, and fax machines; software, such as databases and applications; and volumes in the network file system. Furthermore, you can move individual objects, groups, or entire branches of the eDirectory tree to different locations by using a simple drag-and-drop method. Finally, eDirectory network administration supports both centralized and distributed network control.

Network performance—eDirectory integrates entire enterprise network systems, consolidating company data into a single database. In addition, eDirectory enables multiple operating systems to run as if they were designed to work together.

Network security—With eDirectory authorization, authentication, and access control services, you can manage and secure the relationships and interactions between objects. In addition, eDirectory supports RSA encryption.

Network availability—eDirectory has a reliable track record spanning more than 10 years and well over 100 million users. eDirectory is renowned for its capability to prevent downtime by allowing network information to be stored and updated on multiple systems, which meets even the 24/7 requirements of today’s large telecommunications companies and government agencies.

Scalability—In eDirectory, User objects have the same network view and login procedure whether they’re logging in from their local workstation or from a different country. In addition, the eDirectory schema is extensible, so you can add any resource you need for network management or user accessibility. Finally, eDirectory supports rapid network growth through server migration, tree merging, and container scalability.

The NetWare 6 eDirectory includes a segmentation strategy known as eDirectory partitioning. Partitioning breaks up an eDirectory tree into two or more logical divisions that can be separated and distributed, which makes dealing with eDirectory objects more manageable. Furthermore, copies of partitions can be distributed on multiple file servers in a strategy known as replication. Together, partitions and replicas increase network performance by keeping each database file smaller and placing it close to the users who need it. Replicas also increase fault tolerance because extra copies of the database are distributed throughout the network.

In this lesson, we’ll review the fundamentals of eDirectory partitioning, replication, and synchronization in preparation for the design, management, and maintenance tasks to come. Remember, a well-maintained eDirectory tree leads to a well-functioning network.

To fully understand partitioning and replication, you must be aware of the following Directory characteristics:

The database contains data on all objects in the Directory tree, including object names, object security rights, and object property values. All network information, except server file systems, is stored in the Directory.

eDirectory uses the Directory database for access control to other objects in the network. eDirectory checks the Directory to make sure that you can view, manipulate, create, or delete resource objects.

eDirectory uses the Directory database for authentication (an important part of logging in).

Except for Server and Volume objects, the Directory does not contain information about the file system.

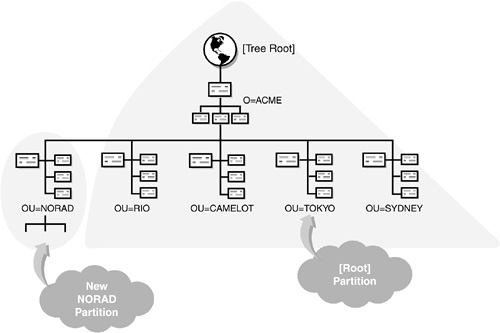

As you can see in Figure 5.1, eDirectory partitioning has been used to break up the ACME organization into three pieces:

Partition A—Known as the [Root] partition because it is the only one that contains the Tree Root object.

Partition B—Known as the LABS partition because OU=LABS is the highest container object in the partition. In addition, Partition B is termed a parent of Partition C because the LABS organizational unit contains the R&D organizational unit.

Partition C—Known as the R&D partition because OU=R&D is the highest container object in the partition. In addition, Partition C is termed a child of Partition B because the R&D organizational unit is located in the LABS container.

TIP

Keep in mind that size and number of partitions can significantly affect the synchronization and responsiveness of your network. Avoid creating partitions that are too large or that contain too many copies because host servers can take too long to synchronize, and managing replicas becomes more complex. On the other hand, avoid partitions that are too small. If a partition contains only a few objects, the access and fault-tolerance benefits might not be worth the time you invest in managing the partition.

Partitioning has many advantages because it enables you to separate the eDirectory tree into smaller segments. You can also increase network fault tolerance by placing copies of other partitions on local servers. This is known as replication, and network administrators can increase efficiency by placing a replica of the partition most frequently needed by users on a server that’s geographically close to the users.

NetWare 6 supports four types of eDirectory replicas:

Master—A master replica is the original read/write copy of a partition that is created by default when you define the partition. A master replica contains a complete copy of the object data for the partition. Each partition may have only one master replica. A master replica can fulfill original requests for partition and object changes. If you want to redefine a partition boundary or join it with another, you must have access to the server that holds the master replica.

Read/write—A read/write replica is a read/write copy of a partition. It contains a complete copy of the object data for the partition. Each partition can have multiple read/write replicas. When you modify objects in a read/write or master replica, those changes are propagated to all other replicas of the same partition. This process, known as replica synchronization, creates background traffic over network communication lines. Finally, a read/write replica can fulfill original requests for object changes, but it passes all partition change requests to the master replica. It cannot handle changes to partition boundaries; that requires a master replica.

Read-only—A read-only replica is a read-only copy of a partition that contains a complete copy of the object data for the partition. These replicas are used only for searching the eDirectory tree and viewing objects. They cannot handle original change requests, which means that they cannot be used for login authentication. Instead, they pass on all such requests to read/write and master replicas.

Subordinate references—A subordinate reference is a special type of replica that is created and maintained by eDirectory. It does not contain object data; instead, it points to replicas that do, which facilitates tree connectivity.
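The replica rules above boil down to a small capability table. The following Python sketch is a hypothetical model, not a NetWare API: the request labels "read", "object_change", and "partition_change" are names invented here to stand for searching and viewing, original object changes (including the updates a login makes), and partition boundary operations.

```python
from enum import Enum


class ReplicaType(Enum):
    """The four NetWare 6 replica types described above."""
    MASTER = "master"
    READ_WRITE = "read/write"
    READ_ONLY = "read-only"
    SUBORDINATE_REFERENCE = "subordinate reference"


def holds_object_data(rtype: ReplicaType) -> bool:
    # Every type except a subordinate reference holds a complete copy of the partition's objects.
    return rtype is not ReplicaType.SUBORDINATE_REFERENCE


def can_fulfill(rtype: ReplicaType, request: str) -> bool:
    """Return True if a replica of this type can fulfill the request itself.

    Requests it cannot fulfill are passed on: object changes to a read/write
    or master replica, partition changes to the master replica.
    """
    if request == "read":  # searching the tree and viewing objects
        return holds_object_data(rtype)
    if request == "object_change":  # original object changes, including login updates
        return rtype in (ReplicaType.MASTER, ReplicaType.READ_WRITE)
    if request == "partition_change":  # e.g. redefining or joining partition boundaries
        return rtype is ReplicaType.MASTER
    raise ValueError(f"unknown request type: {request!r}")


print(can_fulfill(ReplicaType.READ_WRITE, "partition_change"))  # False: passed to the master
```

Modeling the types as an enum keeps the four kinds closed, so a typo in a replica type fails loudly instead of silently granting or denying a capability.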

Read/write replicas are the most popular replicas. Master replicas are created automatically during partitioning, and subordinate references flourish throughout the tree as needed. Read-only replicas, however, can be effective if you have many servers and few containers.

Because eDirectory is a distributed, replicated database, NetWare 6 servers continually share information and synchronize changes with each other. In addition, the eDirectory database is loosely consistent. Therefore, it requires time for replication and synchronization when major changes occur. The time required for a change to be replicated and synchronized depends on the type of change, the size of the partition, and the number of servers the partition is replicated on. Therefore, you should not assume that delays in replication and synchronization or an occasional Unknown object necessarily indicate problems in the database.

Figure 5.1 illustrates a simple, saturated replication scheme. As you can see, each server has a copy of each partition. This provides exceptional fault tolerance and accessibility, but synchronization might be a problem. In large environments, this scheme would not be practical because of synchronization delays. Replica updates take place automatically at specific intervals.

Some updates, such as changing a user’s password, are immediate (within ten seconds). Other updates, such as login updates, are synchronized every five minutes. Changes made to the tree in Figure 5.1, for example, would generate 27 replica updates (3×3×3). This is manageable. But consider what background traffic would look like with 50 servers and 20 different partitions: by the same arithmetic (50 multiplied by itself 20 times), that is 9,536,743,164,062,500,000,000,000,000,000,000 updates every few minutes.
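The update counts above follow the chapter’s back-of-envelope model: the number of servers raised to the power of the number of partitions. This Python sketch simply reproduces that arithmetic (Python integers have arbitrary precision, so the large figure is exact). The function name is hypothetical, and the formula illustrates how quickly saturated replication grows rather than describing eDirectory’s actual synchronization algorithm.

```python
def illustrative_updates(servers: int, partitions: int) -> int:
    """The chapter's illustrative count: servers raised to the number of partitions."""
    return servers ** partitions


small = illustrative_updates(3, 3)    # Figure 5.1: three servers, three partitions
large = illustrative_updates(50, 20)  # 50 servers, 20 partitions
print(small)         # 27
print(f"{large:,}")  # 9,536,743,164,062,500,000,000,000,000,000,000
```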

eDirectory synchronization is accomplished within a group of servers known as a replica ring. A replica ring is an internal system group that includes all servers that contain replicas of a given partition. In Figure 5.1, the replica ring for Partition A includes the following:

Master: CN=ADMIN-SRV1

R/W: CN=LABS-SRV1

R/W: CN=R&D-SRV1
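A replica ring can be pictured as a mapping from each server to the type of replica it holds for one partition. The sketch below models the ring for Partition A in Figure 5.1; the class and method names are hypothetical. It enforces the one-master rule and lists the servers that must receive a change made on any one replica.

```python
from dataclasses import dataclass, field


@dataclass
class ReplicaRing:
    """Servers holding a replica of one partition, mapped to their replica type."""
    partition: str
    replicas: dict[str, str] = field(default_factory=dict)  # server name -> replica type

    def add(self, server: str, replica_type: str) -> None:
        # Each partition may have only one master replica.
        if replica_type == "Master" and "Master" in self.replicas.values():
            raise ValueError(f"{self.partition} already has a master replica")
        self.replicas[server] = replica_type

    def sync_targets(self, changed_on: str) -> list[str]:
        # A change made on one replica must reach every other server in the ring.
        return [server for server in self.replicas if server != changed_on]


# Replica ring for Partition A in Figure 5.1.
ring_a = ReplicaRing("Partition A")
ring_a.add("CN=ADMIN-SRV1", "Master")
ring_a.add("CN=LABS-SRV1", "R/W")
ring_a.add("CN=R&D-SRV1", "R/W")
print(ring_a.sync_targets("CN=LABS-SRV1"))  # ['CN=ADMIN-SRV1', 'CN=R&D-SRV1']
```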

eDirectory synchronization works differently for simple and complex changes. Simple changes, such as changing a user’s phone number, occur almost instantaneously because the replica information already exists on the affected servers, and only the modified information is sent to servers containing a replica that includes the User object.

Creating a partition is another example of a simple eDirectory change. When you create a partition, the system uses partition attributes to draw the new boundary of the partition. In this case, the replica information needed already exists on the affected servers.

Complex changes take more time. For example, joining two partitions on different servers will take time to synchronize throughout the network. During this process, eDirectory initiates a chain reaction of three synchronization events:

1. eDirectory determines where all the replicas of each partition (the replica ring) are stored.

2. eDirectory replicates the data of both partitions to all servers in the replica ring.

3. eDirectory completes the merge, at which point the affected servers have the composite information of both partitions.
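The three-event join can be sketched with plain Python sets. Here each partition is a hypothetical dictionary holding its object names and the set of servers in its replica ring, and the example rings are invented for illustration. The sketch shows only the sequence of events and ignores the timing and network traffic that make a real join slow.

```python
def join_partitions(parent: dict, child: dict) -> dict:
    """Merge child into parent following the three synchronization events above."""
    # Event 1: determine where all replicas of each partition (the replica rings) are stored.
    servers = parent["ring"] | child["ring"]

    # Event 2: replicate the data of both partitions to all servers in the combined ring.
    merged_objects = parent["objects"] | child["objects"]
    copies = {server: set(merged_objects) for server in servers}

    # Event 3: complete the merge; every affected server now holds the composite data.
    return {"objects": merged_objects, "ring": servers, "copies": copies}


labs = {"objects": {"OU=LABS"}, "ring": {"ADMIN-SRV1", "LABS-SRV1"}}  # hypothetical ring
rnd = {"objects": {"OU=R&D"}, "ring": {"R&D-SRV1"}}                   # hypothetical ring
merged = join_partitions(labs, rnd)
print(sorted(merged["ring"]))  # ['ADMIN-SRV1', 'LABS-SRV1', 'R&D-SRV1']
```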

eDirectory could have a problem synchronizing between IP-only and IPX-only servers because such servers cannot normally communicate directly. To resolve this, NetWare 6 includes transitive synchronization. Transitive synchronization eliminates the requirement that all servers in a replica ring communicate and synchronize directly. Instead, target servers receive eDirectory updates through an intermediary server that uses both IP and IPX. Also, if the source server’s replica is more recent than the target server’s replica, the source server does not need to receive synchronization updates from the target server. This reduces synchronization traffic.
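The routing idea behind transitive synchronization can be sketched as a path search over the replica ring. In this hypothetical model, each server is tagged with the protocols it runs. An update travels directly when source and target share a protocol, and is otherwise relayed through a dual-stack ring member. How eDirectory actually selects the intermediary is not described here, so the sketch simply takes the first suitable server; the server names are invented.

```python
def sync_path(source: str, target: str, protocols: dict[str, set[str]]) -> list[str]:
    """Return the servers an update passes through from source to target.

    protocols maps each server in the replica ring to the protocols it runs
    ("IP", "IPX", or both).
    """
    # Direct synchronization works when the two servers share a protocol.
    if protocols[source] & protocols[target]:
        return [source, target]
    # Otherwise relay through a ring member that can talk to both ends.
    for server, stack in protocols.items():
        if server not in (source, target) and protocols[source] & stack and stack & protocols[target]:
            return [source, server, target]
    raise LookupError(f"no intermediary can relay updates from {source} to {target}")


ring = {"IP-SRV": {"IP"}, "IPX-SRV": {"IPX"}, "DUAL-SRV": {"IP", "IPX"}}
print(sync_path("IP-SRV", "IPX-SRV", ring))  # ['IP-SRV', 'DUAL-SRV', 'IPX-SRV']
```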

As eDirectory synchronizes partition replicas, it creates network traffic. If this traffic crosses WAN links unmanaged, it can increase costs and it can overload slow WAN links during high-use periods. Fortunately, NetWare 6 includes the WAN Traffic Manager (WTM) to help you manage synchronization traffic across WAN links (we’ll discuss WTM in more depth in the management lesson later in the chapter). The following is a list of the tasks that WTM performs, as well as tasks that it does not perform:

WTM controls server-to-server traffic generated by eDirectory.

WTM can restrict traffic based on cost, time of day, and/or type of traffic.

WTM controls periodic events initiated by eDirectory, such as replica synchronization.

WTM does not control events initiated by network administrators or users.

WTM does not control non-eDirectory server-to-server traffic, such as time synchronization. Fortunately, we have Network Time Protocol to solve that problem.
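The scope rules above can be expressed as a decision function. This Python sketch is a hypothetical policy, not WTM’s own policy syntax. The off-peak window, the cost threshold, and the field names are assumptions chosen for illustration, and a return value of True means WTM would not hold the traffic back.

```python
from dataclasses import dataclass

# Hypothetical off-peak window: 22:00 through 05:59.
OFF_PEAK_HOURS = set(range(22, 24)) | set(range(0, 6))


@dataclass
class Traffic:
    initiated_by: str  # "eDirectory", "administrator", or "user"
    kind: str          # "eDirectory" or another kind, such as "time synchronization"
    hour: int          # local hour of day, 0-23
    link_cost: int     # relative cost of the WAN link (hypothetical scale)


def may_send_now(t: Traffic, max_cost: int = 10) -> bool:
    """Return True if this example WTM-style policy lets the traffic cross the WAN now."""
    # WTM does not control non-eDirectory traffic or events started by administrators or users.
    if t.kind != "eDirectory" or t.initiated_by != "eDirectory":
        return True
    # Periodic eDirectory traffic on an expensive link waits for the off-peak window.
    if t.link_cost > max_cost:
        return t.hour in OFF_PEAK_HOURS
    return True
```

Under this example policy, a replica synchronization event on a costly link at 14:00 is held back, while the same event started by an administrator goes through immediately.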

TIP

Transitive synchronization isn’t necessary if you configure your IP-only network with IPX compatibility mode. Refer to Chapter 2, “NetWare 6 Upgrade and Migration,” for more information about migrating to dual-protocol stack support.

That completes our eDirectory partitioning, replication, and synchronization overview. Now let’s learn how to design an effective eDirectory partitioning strategy.

Test Objectives Covered:

Plan, Design, Implement a Partition and Replica Strategy (575)

Identify Basic eDirectory Administrative Procedures (3004)

The beauty of eDirectory is its scalability and flexibility. eDirectory is scalable because it enables you to make the database as small or large as you want. eDirectory is flexible because it enables you to store the database (or pieces of it) anywhere you want. Of course, all of these benefits require design, implementation, and management.

After the eDirectory tree has been properly designed (see Chapter 19, “Novell eDirectory Tree Design”), you can divide the Directory into small pieces (partitioning) and intelligently distribute them over the network (replica placement). eDirectory partitioning and replica design are some of the most important aspects of eDirectory planning because they directly affect your network’s performance, accessibility, and fault tolerance.

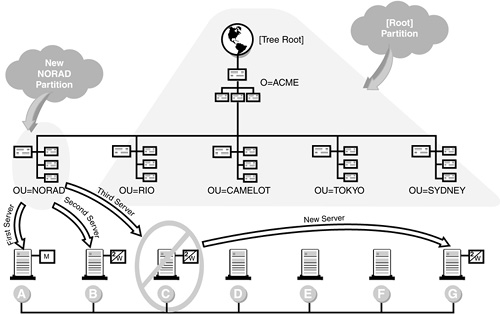

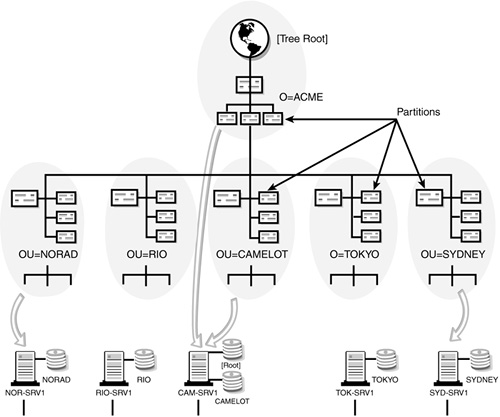

eDirectory partitions are logical divisions of the eDirectory tree (see Figure 5.2). Partitioning effectively splits the eDirectory database into sections that can be distributed to NetWare 6 servers throughout the network.

Furthermore, eDirectory partitioning enables you to selectively distribute eDirectory information near the users who need it.

The purpose of partitioning is to scale the eDirectory database across the NetWare 6 servers in your network. For example, in Figure 5.2, the NORAD partition and its object information are placed on the NOR-SRV1 server in NORAD. The same is true for all other location-based partitions. This enables you to keep local eDirectory information in each geographically separated location. In our example, Camelot is a special partition because it includes the tree root.

eDirectory partitioning follows these simple rules:

Partitioning is hierarchical, meaning that the root-most partition is a parent to its subordinate children. When all the partitions in a tree are taken together, they form a hierarchical map back to the Tree Root object. Figure 5.2 illustrates the hierarchical partition map formed by ACME’s distributed locations. It also shows the parent-child relationship between O=ACME and its subcontainers.

During installation of the initial NetWare 6 server in your Directory tree, the [Root] partition is created and a master copy (replica) of it is placed on the new server. In NetWare 6, the [Root] partition is the only partition created automatically by the installation program. No default partitioning occurs beyond this.

TIP

There is an important relationship between the Tree Root object and the [Root] partition. The Tree Root object is the highest point in the eDirectory tree. The [Root] partition is the first default partition created during server installation. It contains the Tree Root object. Of course, both of these are mostly unrelated to partition root objects. Clear, huh?

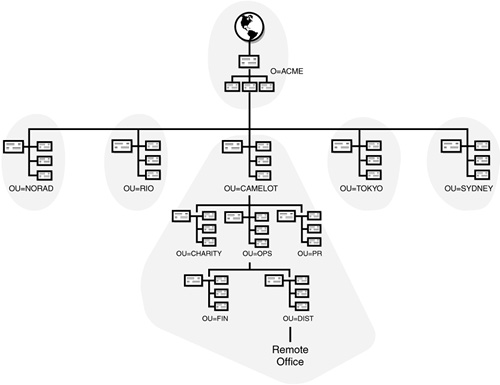

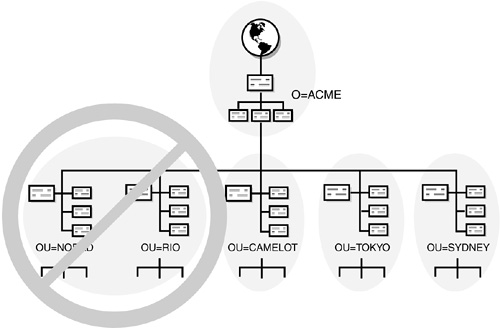

![]() Each partition must be named and requires a single container object as the top (or root) of the partition (not to be confused with the [Root] partition). The container object that’s used as the start of the partition is called the partition root object. Only one partition root object can exist for each partition and, by definition, it is the topmost container object. Examine Figure 5.3 for an example of what you should not do. Note: The reason that a partition could not be created containing only the NORAD and RIO containers is that the partition would not be a partition root because both of these containers exist at the same level in the eDirectory tree.

Each partition must be named and requires a single container object as the top (or root) of the partition (not to be confused with the [Root] partition). The container object that’s used as the start of the partition is called the partition root object. Only one partition root object can exist for each partition and, by definition, it is the topmost container object. Examine Figure 5.3 for an example of what you should not do. Note: The reason that a partition could not be created containing only the NORAD and RIO containers is that the partition would not be a partition root because both of these containers exist at the same level in the eDirectory tree.

Partitioning occurs along the boundaries of container objects. A partition can include more than one container, but it cannot overlap another partition. An eDirectory object can exist in only one partition, and all leaf objects in a container are in the same partition as the container. Also, only eDirectory information (not file system data) can be stored in partitions.
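The following is a minimal Python sketch that checks a proposed partition against the rules above. It illustrates the single-partition-root rule and the no-overlap rule. The container map and the names `PARENT`, `partition_root`, and `overlaps` are hypothetical illustrations, not part of any Novell tool. The sketch assumes that each container knows its parent container.

```python
from typing import Dict, Optional, Set

# Hypothetical slice of the ACME tree: container -> parent container (None = top of tree).
PARENT: Dict[str, Optional[str]] = {
    "O=ACME": None,
    "OU=NORAD": "O=ACME",
    "OU=RIO": "O=ACME",
    "OU=LABS": "OU=NORAD",
}


def partition_root(containers: Set[str], parent: Dict[str, Optional[str]]) -> str:
    """Return the single partition root object of a proposed partition.

    Raises ValueError if the containers do not hang from exactly one topmost container.
    """
    # A container is a candidate root if its parent lies outside the proposed partition.
    roots = [c for c in containers if parent.get(c) not in containers]
    if len(roots) != 1:
        raise ValueError(f"partition needs exactly one root container, found {sorted(roots)}")
    return roots[0]


def overlaps(partitions: Dict[str, Set[str]]) -> Set[str]:
    """Return containers that appear in more than one proposed partition."""
    seen: Set[str] = set()
    dupes: Set[str] = set()
    for members in partitions.values():
        dupes |= seen & members
        seen |= members
    return dupes
```

With this map, `{"OU=NORAD", "OU=LABS"}` yields `OU=NORAD` as its partition root. `{"OU=NORAD", "OU=RIO"}` raises an error, because both containers sit at the same level, as in Figure 5.3.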

TIP

Make sure that you understand the definition of a partition (that is, a logical division of eDirectory). Study the hierarchical nature of eDirectory partitions and remember that only one partition is created automatically (during initial server installation). Also, know that the partition root object is the top-level container of a partition. Finally, study the rules of partition construction; that is, partitions cannot overlap each other, and partitions store eDirectory information but not file system data.

The primary reason for partitioning and replicating eDirectory is to increase user resource access and improve fault tolerance. In most cases, you should partition containers along the physical layout of the network. This coincides with the approach used in designing the upper layers of the eDirectory tree (refer to Figure 5.2, shown earlier in this chapter).

By default, no new partitions are created automatically beyond the first ([Root]) partition. This single partition strategy is recommended if your network has no WAN links and a small number of servers holding replicas. See Figure 5.4 for an illustration of ACME’s default partition strategy.

In many cases, your network will grow beyond the recommended limits just discussed. As a result, you’ll need to create and implement a partitioning design. Creating a new partition under an existing parent is sometimes referred to as a partition split because the child simply splits away from underneath the parent’s control. In Figure 5.5, the NORAD child simply splits away from the [Root] partition. This operation is extremely fast because it doesn’t generate any traffic on your WAN. We’re simply dividing one database into two, with all the information staying on the same server that contained the original partition. This split operation will create a new child subordinate reference pointer on all the servers that had a copy of the parent partition.

The number one design consideration for partitioning is the physical layout of your network infrastructure—mostly the location of network servers. With this in mind, your main task is to partition the eDirectory database so that it localizes eDirectory information. The bottom line is keeping the NORAD information in NORAD, the RIO information in RIO, and so on. Figure 5.2 showed how the ACME tree has been partitioned along the lines of WAN communications. Note that in our example, the [Root] partition is small and includes only the Tree Root object and O=ACME. This recommendation will be discussed later when we address replication.

The primary reason for partitioning and replicating the eDirectory database is to increase efficiency for users and to create fault tolerance. In most cases, you should design partition boundaries around the physical layout of your network infrastructure. This coincides with the approach used in designing the upper and lower layers of the eDirectory tree. If your tree design is structured correctly, your partition strategy is generally easy to implement and maintain.

Follow these guidelines when partitioning the eDirectory tree:

Don’t span a WAN link or physical locations with a partition—This design guideline is important and should not be ignored. If you span a WAN link, it creates unnecessary eDirectory synchronization traffic between two or more locations. This is why you should partition the top layers according to location. See Figure 5.6 for an example of how not to partition the ACME tree.

Keep the [Root] partition small—Typically, the first partition should include only the Tree Root object and the O=Organization container. Do not include any other subordinate containers in the partition with the Tree Root object because doing so will create unnecessary subordinate references.

Use the pyramid design for partitioning—You should design a small number of partitions at the top layers of the tree and more partitions as you move toward the bottom. If you’ve designed the tree based on a pyramid shape, as recommended, your partition design will naturally follow the tree design.

Partition the top layers according to location—Partition locally whenever possible. The topmost parent partitions should follow the location-based organizational units of your tree design.

Partition the bottom layers according to organization—The bottom layers of the tree should be partitioned only if there is a special requirement, such as if the partition is too large, if there are too many replicas of the same partition, or if there is a need to break out an administrative container.

Do not create a partition unless there is a local server—Do not create a partition (even if it includes a WAN link) if there is no local server on which to store the replica. This type of situation is common, for instance, if you have small remote offices that do not have servers on site. Refer to Figure 5.7 for an example.

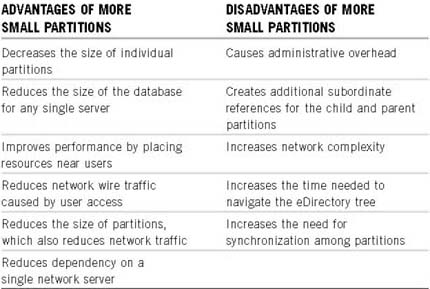

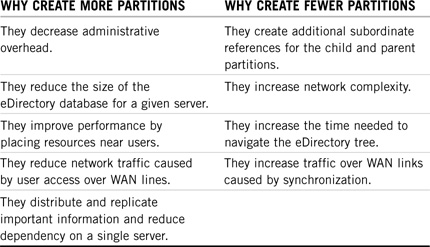

Smaller is better—A partition should typically have fewer than 3,500 objects. Maintain as few child partitions as possible (fewer than 35 child partitions per parent). Because most partition operations affect child partitions, minimize the number of children linked across unreliable WAN connections. Table 5.1 explains the advantages and disadvantages of creating smaller eDirectory partitions.
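The size guidelines above can be turned into a quick planning check. This is a hypothetical Python sketch: `Partition` and `sizing_warnings` are illustrative names, and the object counts in the usage example are invented. Only the 3,500-object and 35-child thresholds come from the text.

```python
from dataclasses import dataclass, field
from typing import List

# Rule-of-thumb limits quoted in the guideline above.
MAX_OBJECTS = 3500          # "fewer than 3,500 objects"
MAX_CHILD_PARTITIONS = 35   # "fewer than 35 child partitions per parent"


@dataclass
class Partition:
    name: str
    object_count: int
    children: List[str] = field(default_factory=list)


def sizing_warnings(p: Partition) -> List[str]:
    """Return human-readable warnings for a partition that breaks the size guidelines."""
    warnings = []
    if p.object_count >= MAX_OBJECTS:
        warnings.append(f"{p.name}: {p.object_count} objects (aim for fewer than {MAX_OBJECTS})")
    if len(p.children) >= MAX_CHILD_PARTITIONS:
        warnings.append(
            f"{p.name}: {len(p.children)} child partitions (aim for fewer than {MAX_CHILD_PARTITIONS})"
        )
    return warnings
```

For example, `sizing_warnings(Partition("OU=NORAD", 1200, ["OU=LABS"]))` returns an empty list, so that partition needs no further splitting.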

This completes our partition design for ACME. If you follow these partitioning guidelines at both the top and bottom layers, the eDirectory information always will remain close to the user and other leaf objects. Remember that location is the key when creating partition boundaries. Review Table 5.2 for a complete picture of eDirectory partition design.

TIP

These guidelines do not suggest that you partition every organizational unit in your tree. There is also such a thing as overpartitioning. Partition locally and further partition at remote sites only if necessary.

Very good. Now that we have ACME’s partition design in place, it’s time to spread those partitions across the network through replica placement. This is the fun part. We get to distribute all the little pieces to ACME servers everywhere.

Test Objectives Covered:

Plan, Design, Implement a Partition and Replica Strategy (575) (continued)

Identify Basic eDirectory Administrative Procedures (3004) (continued)

After you’ve designed an eDirectory partitioning strategy, the next step is to distribute replicas for fault tolerance, network performance, and name resolution:

Fault tolerance—Replication increases the availability of partitions by spreading multiple copies of various pieces of the eDirectory to distributed servers. This also increases reliability. For example, if a server holding a replica of a given partition goes down, you can simply use another copy (that is, a replica) for authentication and updates.

Network performance—Distributed replicas increase eDirectory and client performance by ensuring that users access eDirectory resource information locally. This level of eDirectory scalability is particularly important during authentication, eDirectory changes, directory searches, and eDirectory database access.

Name resolution—Replication enhances name resolution by ensuring that users can walk the tree from child to parent replicas. To facilitate this process, eDirectory automatically places a subordinate reference to a child partition on every server that holds a replica of the parent partition but not a replica of that child partition.

Now let’s start our replica placement lesson with a brief review of the four eDirectory replica types.

eDirectory supports the following four different types of replicas:

Master—Created automatically when you define a partition. Each partition can have only one master replica.

Read/write—Placed automatically on the second and third servers in a partition and manually on any subsequent servers that you specify.

Read-only—Rarely used. Read-only replicas must be created manually.

Subordinate references—Automatically placed on servers that hold a replica of a parent partition but not a replica of one of its child partitions. The subordinate reference points to that child partition.

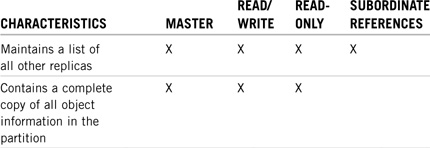

Table 5.3 contains a detailed overview of these four eDirectory replica types and their corresponding characteristics.

TIP

Carefully study the characteristics of the four eDirectory replica types in Table 5.3. Specifically, learn which replica types match the following characteristics: contains a complete copy of all eDirectory information for a partition (master, read/write, and read-only); is required for bindery services (master or read/write); and is not created automatically when servers are installed (read-only).
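One way to memorize the characteristics in the tip above is to encode them as data. This Python sketch covers only the traits named in this section. The field names, `REPLICA_TRAITS`, and `types_with` are illustrative labels; they do not reproduce Table 5.3 in full.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ReplicaTraits:
    full_copy: bool        # holds all eDirectory information for the partition
    accepts_changes: bool  # can originate updates (master and read/write)
    bindery_ok: bool       # satisfies bindery services
    auto_created: bool     # ever placed automatically by eDirectory
    unique: bool           # only one per partition


REPLICA_TRAITS = {
    "master": ReplicaTraits(True, True, True, True, True),
    "read/write": ReplicaTraits(True, True, True, True, False),
    "read-only": ReplicaTraits(True, False, False, False, False),
    "subordinate reference": ReplicaTraits(False, False, False, True, False),
}


def types_with(trait: str) -> List[str]:
    """List the replica types for which the named trait is True."""
    return [name for name, traits in REPLICA_TRAITS.items() if getattr(traits, trait)]
```

For example, `types_with("bindery_ok")` returns the two replica types that bindery services can use.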

As you learned earlier in the chapter, eDirectory replication relies on the following basic rules:

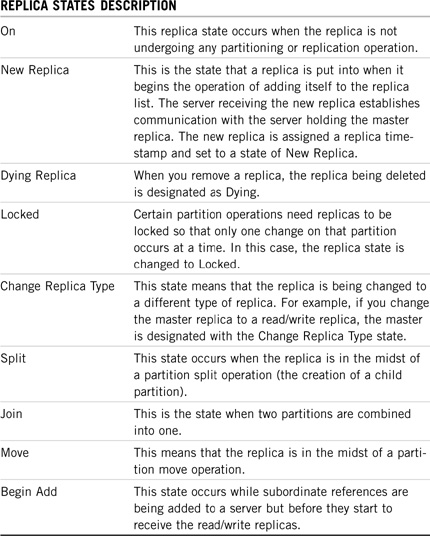

Replica list—When a partition is created, the partition root object receives a replica list. When changes are made to objects within a partition, those changes are sent to the other replicas on the list. The replica list (also called the replica ring) includes a list of all servers containing the replicas, the type of replica they hold, and each replica’s current state. All replicas, including subordinate references, contain a copy of the replica list. Furthermore, the replica list of a subordinate reference is used by the server to locate replicas of a child partition.

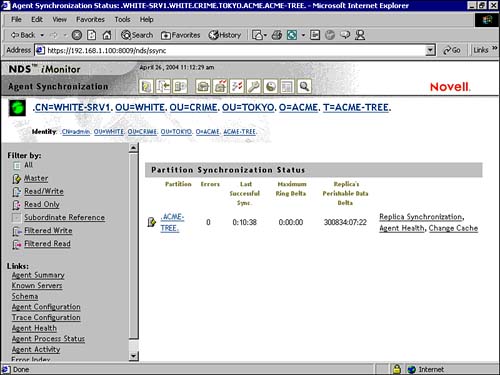

Replica synchronization—eDirectory is a loosely consistent database. As changes occur, the replicas of a partition do not always contain exactly the same information at every instant. For this reason, it’s imperative that each replica server synchronize with the other servers in the replica list every few minutes. Not every change waits for the next cycle, however. Some changes (such as changes to a user’s password) are immediately sent to all servers on the list. Other, less-critical changes (such as a user’s last login time) are collected locally for a short period of time before being sent to the other servers on the replica list. Each type of replica participates in the synchronization process differently: master and read/write replicas initiate and receive updates, whereas read-only replicas only receive updates.
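The difference between changes sent immediately and changes collected for a short period can be modeled as a small queue. This Python sketch rests on two assumptions: a hypothetical set of "critical" attributes and a 30-second batching delay. Neither reflects eDirectory’s actual internal rules or timers. `SyncQueue` and `can_initiate` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Assumptions for illustration only: which attributes are "critical" and how long
# other changes wait. Neither value is taken from eDirectory itself.
IMMEDIATE_ATTRIBUTES = {"password"}
BATCH_DELAY_SECONDS = 30.0


@dataclass
class SyncQueue:
    # Batched changes waiting to be sent: (time recorded, object, attribute).
    pending: List[Tuple[float, str, str]] = field(default_factory=list)

    def record(self, now: float, obj: str, attribute: str) -> List[Tuple[str, str]]:
        """Record a local change; return any change that must be sent right away."""
        if attribute in IMMEDIATE_ATTRIBUTES:
            return [(obj, attribute)]
        self.pending.append((now, obj, attribute))
        return []

    def due(self, now: float) -> List[Tuple[str, str]]:
        """Release batched changes that have waited at least BATCH_DELAY_SECONDS."""
        ready = [(o, a) for t, o, a in self.pending if now - t >= BATCH_DELAY_SECONDS]
        self.pending = [(t, o, a) for t, o, a in self.pending if now - t < BATCH_DELAY_SECONDS]
        return ready


def can_initiate(replica_type: str) -> bool:
    """Master and read/write replicas originate updates; read-only replicas only receive them."""
    return replica_type in {"master", "read/write"}
```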

Transitive synchronization—eDirectory includes an additional level of synchronization complexity by supporting simultaneous access to IP-only and IPX-only networks. The problem with this scenario is that IP-only servers can’t synchronize directly with IPX-only servers. Fortunately, eDirectory includes a feature called transitive synchronization, which eliminates the requirement that all servers in a replica list be capable of directly synchronizing with each other. Instead, they communicate indirectly by using intermediary servers or IPX compatibility mode gateways.
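Transitive synchronization can be pictured as reachability in a graph. Servers are nodes, and two servers are linked if they share a protocol. This Python sketch is a simplification of that idea, not eDirectory’s actual algorithm. The server names in `RING` are hypothetical.

```python
from collections import deque
from typing import Dict, Set

# Hypothetical replica ring: server -> protocols it speaks.
RING: Dict[str, Set[str]] = {
    "IP-SRV": {"IP"},
    "IPX-SRV": {"IPX"},
    "GATEWAY-SRV": {"IP", "IPX"},  # speaks both, so it can relay between the others
}


def can_sync_directly(a: Set[str], b: Set[str]) -> bool:
    """Two servers can synchronize directly only if they share a protocol."""
    return bool(a & b)


def reachable(ring: Dict[str, Set[str]], start: str) -> Set[str]:
    """Servers that `start` can reach directly or through intermediaries (breadth-first search)."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for other, protocols in ring.items():
            if other not in seen and can_sync_directly(ring[current], protocols):
                seen.add(other)
                queue.append(other)
    return seen
```

With `GATEWAY-SRV` present, `IP-SRV` reaches every server in the ring even though it cannot talk to `IPX-SRV` directly. Without the intermediary, it reaches only itself.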

Well, that completes our quick lesson in the basics of eDirectory replication. The rules you’ve learned in this section are important because they describe the way servers replicate and synchronize. You must understand how replicas behave before you start copying ACME’s partitions throughout the network.

Now it’s your turn. Ready, set, replicate!

The first step in building an eDirectory replica placement plan is to explore the following two design considerations and their related issues:

Installing NetWare servers—What happens to your replica plan when you install the first eDirectory server? More importantly, what happens when you install subsequent servers?

Merging eDirectory trees—How does an eDirectory merge affect the replica plan of your source and target trees?

These design considerations and their related issues are explored in the following sections.

The initial eDirectory partition in a tree is called the [Root] partition because it is the only one that includes the Tree Root object (see Figure 5.8). This special partition is created when the initial NetWare 6 server is installed in an eDirectory tree, and its master replica is placed on that server. After other servers have been added to the eDirectory tree, the master replica of the [Root] can be removed from this server or changed to a read/write replica. However, you must first upgrade an existing read/write replica on another server to master status, because every partition must always have a master replica.

The following eDirectory partitioning and replication rules apply to all subsequent servers installed in the same eDirectory tree (follow along in Figure 5.8):

When you install a new NetWare 6 server in an existing eDirectory tree, the server is automatically added to an existing partition. In other words, no new partition is created.

The second and third servers installed in an existing partition receive read/write replicas of the host partition. The fourth and subsequent servers do not receive replicas. As you can see in Figure 5.8, new servers B and C receive read/write replicas, but D, E, and F don’t.

When a new NetWare 6 server is added to an existing partition, eDirectory determines whether there are enough replicas for fault tolerance. If the partition doesn’t have at least one master and two read/write replicas, eDirectory places a read/write replica on the new server (see the example in Figure 5.8). For instance, suppose that eDirectory automatically placed the master replica of the NORAD partition on server A and read/write replicas on servers B and C, and that servers D through F hold no replicas. Next, suppose that you remove server C from the network. When you install server G, eDirectory discovers that there aren’t enough replicas for fault tolerance and automatically places a read/write replica on server G. Although server G isn’t the third server installed in the partition, it is the third server required for fault-tolerance purposes.

In all other cases, if you want a replica created on a server, you must add it manually using ConsoleOne.
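The automatic placement rules above can be simulated in a few lines. This Python sketch uses the hypothetical helper `install_server`. It assumes that the first server installed into a partition receives the master replica. It then tops the partition up to one master and two read/write replicas, and leaves later servers without a replica.

```python
from typing import Dict, Optional


def install_server(replicas: Dict[str, Optional[str]], server: str) -> Optional[str]:
    """Add `server` to a partition and return the replica type it receives automatically.

    `replicas` maps every server in the partition to its replica type (None = no replica).
    """
    held = list(replicas.values())
    if "master" not in held:
        replica = "master"       # first server installed into the partition
    elif held.count("read/write") < 2:
        replica = "read/write"   # top up to one master plus two read/write replicas
    else:
        replica = None           # anything further must be added manually (for example, in ConsoleOne)
    replicas[server] = replica
    return replica
```

Installing servers A through F reproduces Figure 5.8: A gets the master, B and C get read/write replicas, and D through F get nothing. After C is removed, installing G restores a read/write replica.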

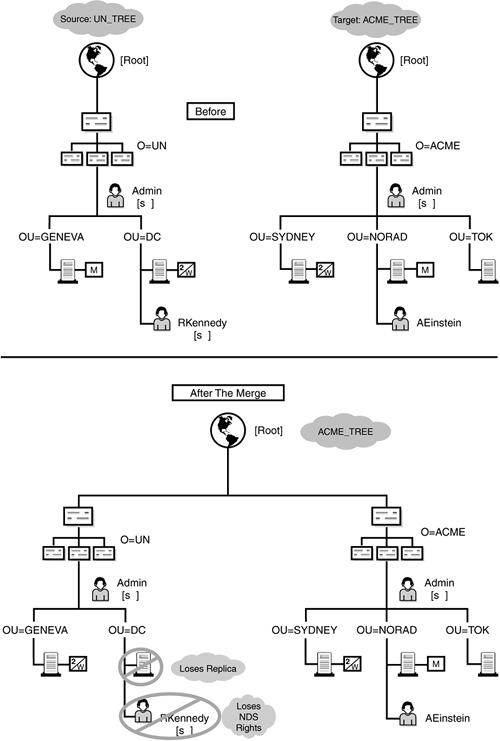

eDirectory merging has a dramatic effect on eDirectory partitioning and replication. During an eDirectory merge, you identify a source tree and a target tree, each represented by a server. It is imperative that the source server and the target server each contain the master replica of its own tree’s [Root] partition. In Chapter 21, “Novell eDirectory Implementation,” we’ll cover eDirectory merging in much greater depth. In summary, the following partitioning and replication changes occur during an eDirectory merge:

During an eDirectory merge, the master replica of the target [Root] partition becomes the master replica of the new, combined [Root] partition. Any servers in the target tree that held replicas of the old [Root] partition are given replicas of the new [Root] partition.

Only the source server that held a master replica of the source [Root] partition is given a read/write replica of the new [Root] partition. Any other servers in the source server’s original tree that held replicas of the old source [Root] lose their copies and do not receive copies of the new [Root] partition.

All first-level containers in the source tree [Root] partition become independent partition roots in the new, combined tree. All other servers in the source server’s original tree that held a replica of the source [Root] partition lose that [Root] replica, but they keep replicas of the new first-level partitions. The exception is the source server itself, which also receives a read/write replica of the new [Root] partition.

Any server containing a replica of the new [Root] partition receives subordinate references to child partitions of the new [Root] partition. The name of the new eDirectory tree is the name of the target server’s eDirectory tree.

The access control list (ACL) of the source tree root is discarded, and the ACL of the target tree root is kept. The only source tree root trustee assignments that survive are those that belong to the source User object that performed the merge (typically, Admin). This administrative user gains Supervisor rights to the new, combined tree root.
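The [Root] replica changes during a merge can be summarized as a small function. This Python sketch is a simplification. `merge_root_replicas` and the server names in the usage example are hypothetical. It assumes that target-tree servers keep the same replica type they held before. It covers only the [Root] replica list and the new partition roots, not ACLs or the tree name.

```python
from typing import Dict, List, Set, Tuple


def merge_root_replicas(
    target_root: Dict[str, str],
    source_root: Dict[str, str],
    source_first_level: List[str],
) -> Tuple[Dict[str, str], Set[str]]:
    """Return (replica list of the combined [Root], new partition roots from the source tree).

    Both input dicts map server name -> replica type held for that tree's [Root] partition.
    """
    # Target-tree servers keep their [Root] replicas (assumed to keep the same type),
    # so the target master becomes the master of the combined [Root].
    combined = dict(target_root)
    # Only the source server that held the source [Root] master joins the new [Root],
    # and it does so as a read/write replica. Other source servers get nothing here.
    for server, kind in source_root.items():
        if kind == "master":
            combined[server] = "read/write"
    # Each first-level container of the source [Root] partition becomes its own partition root.
    new_partition_roots = set(source_first_level)
    return combined, new_partition_roots
```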

Now that wasn’t so hard—was it?! Of course, we’ve only just begun. Next, we’re going to build on these design considerations and discover some valuable design strategies for placing eDirectory replicas.

Welcome to phase two of partition design—replica placement. The partitions we created in the previous section aren’t going to do us any good until we clone them. As a matter of fact, replica placement is one of your most important design responsibilities—for the many reasons outlined previously.

Your eDirectory replica placement plan should accomplish the following four goals:

Fault tolerance—Attempt to eliminate any single point of failure in your eDirectory tree.

Local distribution—Place replicas on local servers for efficiency and speed.

Bindery services—Bindery users and applications need access to a master or read/write replica of each container in a server’s bindery context.

Tree walking with subordinate references—Strategically distribute replicas to create bridges between separated containers for tree-walking purposes. Also, use a replica table to track automatic subordinate reference placement.

Let’s take a closer look at replica placement for ACME.

The primary goal of replication is to eliminate any single point of failure in the entire eDirectory tree. Distributing multiple replicas of a partition increases the availability of object information if one of the servers should become unavailable. In Figure 5.9, the NORAD and CAMELOT partitions have been replicated to multiple servers within their locations. This provides fault tolerance for each partition. If one of the servers holding a replica goes down, the information isn’t lost; it’s still available from the other servers.

For fault tolerance, the NetWare 6 server installation program automatically creates up to three eDirectory replicas for each partition. As you install additional servers into the eDirectory tree, eDirectory places a replica of each server’s home partition on the first three servers installed in that partition: a master and two read/write replicas. After that, you’re on your own.

For example, in Figure 5.10, the NORAD partition is automatically replicated as new servers are added. It starts with NOR-SRV1, and then NOR-CHR-SRV1, and finally LABS-SRV1. Notice that NOR-SRV1 gets a master replica. That’s because it was the first server installed into the NORAD partition. The others receive read/write replicas.

So, what happens when you decide to install a fourth server (R&D-SRV1) into the NORAD partition? Nothing! Remember, by default, eDirectory automatically replicates a partition only to the first three servers installed in it. If you want a NORAD replica on the new R&D-SRV1 server, such as a read/write replica, you must place it there yourself. The automatic strategy applies only to the new server’s home partition; it doesn’t have any effect on other partitions in the tree. It exists for one simple purpose: fault tolerance of the eDirectory database. If you’re comfortable with where the three automatic replicas are placed, you don’t need to place any of your own.

As a general guideline, you should have at least a master and two read/write replicas of every partition, but never more than ten replicas of any partition except the [Root]. If you don’t have three servers at the same site, replicate the partition elsewhere. Just make sure that the eDirectory information is available somewhere, and that there are never more than ten replicas of any partition except the [Root].

Always replicate a partition locally, near the users who need the resources defined in the partition. Don’t place replicas on servers across a WAN if a local server is available. If you follow these guidelines, the users will be able to retrieve their personal information from the nearest available server. The benefits of using this strategy are that it’s faster, more efficient, and more reliable than spanning partitions across a WAN link.

Figure 5.11 illustrates how a small remote office should be replicated. For this example only, assume that there’s a small remote site called OU=SLC connected to the NORAD hub. There’s only one server at the remote site, and it’s called SLC-SRV1. You should create a small partition and place its master replica on SLC-SRV1. You should also place a read/write replica of OU=SLC in the NORAD location, possibly on the master NOR-SRV1 server.

Ideally, you should place the replica that contains a user’s eDirectory information on the same server that stores the user’s Home directory. This might not always be possible, but doing so improves the user’s access to eDirectory objects and the speed of login authentication (a master or read/write replica is required).

You should also pay attention to WAN synchronization when placing replicas locally. As eDirectory synchronizes replica updates, it creates network traffic. If this traffic crosses WAN links unmanaged, it can increase costs and overload slow WAN links during high-use periods. Fortunately, NetWare 6 includes a synchronization management tool called WAN Traffic Manager (WTM), which we’ll discuss in the next lesson.

Finally, be sure to manage the number of replicas you create for any partition. The time cost of synchronization is greater when the servers in a replica ring are separated by relatively slow WAN links. Therefore, the limiting factor in creating multiple replicas is the amount of processing time and traffic required to synchronize them. As a general rule, you should never create more than 10 replicas for any partition or place more than 20 replicas on any server.
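The replica-count guidelines in this section can be checked mechanically. This hypothetical Python sketch, `check_plan`, checks four things in a plan: exactly one master, at least two read/write replicas, at most ten replicas per partition (with the [Root] exempt, as stated earlier), and at most 20 replicas per server. It assumes the plan lists only master, read/write, and read-only replicas, not subordinate references.

```python
from collections import Counter
from typing import Dict, List

MAX_REPLICAS_PER_PARTITION = 10  # guideline; the [Root] partition is exempt
MAX_REPLICAS_PER_SERVER = 20


def check_plan(plan: Dict[str, Dict[str, str]]) -> List[str]:
    """`plan` maps partition -> {server: replica type}; return guideline violations."""
    problems = []
    per_server: Counter = Counter()
    for partition, ring in plan.items():
        kinds = Counter(ring.values())
        if kinds["master"] != 1:
            problems.append(f"{partition}: needs exactly one master replica")
        if kinds["read/write"] < 2:
            problems.append(f"{partition}: fewer than two read/write replicas")
        if partition != "[Root]" and len(ring) > MAX_REPLICAS_PER_PARTITION:
            problems.append(f"{partition}: {len(ring)} replicas (limit {MAX_REPLICAS_PER_PARTITION})")
        per_server.update(ring.keys())  # count every replica each server holds
    for server, count in sorted(per_server.items()):
        if count > MAX_REPLICAS_PER_SERVER:
            problems.append(f"{server}: holds {count} replicas (limit {MAX_REPLICAS_PER_SERVER})")
    return problems
```

Applied to the SLC example above, a plan with only a master on SLC-SRV1 and one read/write replica on NOR-SRV1 is flagged as short of read/write replicas.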

Bindery services has a big effect on replica placement. Each bindery user or application requires a master or read/write replica of its server’s bindery context in order to access eDirectory resources. The following is an example of the console command that can be used to set a server’s bindery context:

SET BINDERY CONTEXT=OU=PR.OU=NORAD.O=ACME

In Figure 5.12, the bindery users attached to NOR-PR-SRV1 can see only the eDirectory objects in the OU=PR.OU=NORAD.O=ACME container. Actually, they can’t see all the eDirectory objects in that container, just the bindery-equivalent objects (such as servers, users, groups, printers, print queues, print servers, and profiles). In case you were wondering, the Profile eDirectory object was added to NetWare 6 as a bindery-equivalent object. Unfortunately, objects that exist only in eDirectory (such as Directory Maps, Organizational Roles, Computers, and Aliases) aren’t available to bindery users.
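
The replica requirement for bindery services can be verified per server. The Python sketch below checks that the server holds a master or read/write replica of the partition that contains each of its bindery contexts. It assumes typeful, dot-separated names with no escaped dots. It also assumes that you supply the set of partition roots and the server's own replicas. The function names are hypothetical.

```python
# Per the text, bindery clients need a master or read/write replica
# of the partition that holds the server's bindery context.
WRITABLE_TYPES = {"master", "read/write"}


def containing_partition(context, partition_roots):
    """Walk up a dotted, typeful name until it reaches a partition root."""
    name = context
    while name not in partition_roots:
        if "." not in name:
            return "[Root]"  # nothing below [Root] matched
        name = name.split(".", 1)[1]  # drop the leftmost naming component
    return name


def check_bindery_contexts(bindery_contexts, partition_roots, server_replicas):
    """Return (context, partition, replica type) for each context the server can't serve.

    server_replicas maps partition root -> replica type held by this server.
    """
    problems = []
    for context in bindery_contexts:
        partition = containing_partition(context, partition_roots)
        replica_type = server_replicas.get(partition)  # None if no replica here
        if replica_type not in WRITABLE_TYPES:
            problems.append((context, partition, replica_type))
    return problems


roots = {"[Root]", "OU=NORAD.O=ACME", "OU=PR.OU=NORAD.O=ACME"}
print(check_bindery_contexts(["OU=PR.OU=NORAD.O=ACME"], roots,
                             {"OU=PR.OU=NORAD.O=ACME": "read-only"}))
# [('OU=PR.OU=NORAD.O=ACME', 'OU=PR.OU=NORAD.O=ACME', 'read-only')]
```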

Bindery services is also required during a NetWare 3.12 to NetWare 6 server upgrade. For example, when you upgrade a NetWare 3.12 server, a read/write replica of its home partition is placed on the new NetWare 6 server. This happens regardless of whether there are already three replicas of the partition.

Tree walking (also referred to as name resolution) is the mechanism used by eDirectory to find object information that is not stored on local servers. If the eDirectory information you need isn’t stored locally, the server must walk the eDirectory tree to find a server containing an appropriate replica. Every replica maintains a list of the other servers holding replicas of the same partition (called the replica ring).
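
The idea can be modeled in a few lines. This Python sketch is deliberately simplified. It assumes one global table of replica rings, whereas a real eDirectory server discovers other servers hop by hop through the replica rings and subordinate references it holds. Names are typeful and dot-separated, and they are assumed to contain no escaped dots. `resolve` and the data layout are hypothetical.

```python
def resolve(name, local_server, partition_roots, replica_rings):
    """Return (partition, server) that can answer a lookup for `name`.

    partition_roots: set of partition root names, including "[Root]".
    replica_rings: partition root -> list of servers holding a replica of it.
    """
    # Walk from the object's name toward [Root] until we reach the root of
    # the partition that contains the object.
    partition = name
    while partition not in partition_roots:
        if "." not in partition:
            partition = "[Root]"
            break
        partition = partition.split(".", 1)[1]

    ring = replica_rings[partition]
    # Answer locally if possible; otherwise the request must leave this server.
    server = local_server if local_server in ring else ring[0]
    return partition, server


roots = {"[Root]", "OU=NORAD.O=ACME", "OU=SLC.OU=NORAD.O=ACME"}
rings = {
    "[Root]": ["NOR-SRV1"],
    "OU=NORAD.O=ACME": ["NOR-SRV1"],
    "OU=SLC.OU=NORAD.O=ACME": ["SLC-SRV1", "NOR-SRV1"],
}
print(resolve("CN=Bob.OU=NORAD.O=ACME", "SLC-SRV1", roots, rings))
# ('OU=NORAD.O=ACME', 'NOR-SRV1')
```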

The [Root] partition is probably the most troublesome replica for name resolution because it stores information about every resource. Initially, replicas of the [Root] partition include the containers at the top of the eDirectory tree. For this reason, you should replicate the [Root] partition to all major hub sites in your network. Also, keep the [Root] partition small to avoid creating unnecessary subordinate reference replicas.

As we discussed earlier, you should use a replica table to track the automatic placement of subordinate references throughout the network. eDirectory automatically places these pointers on servers that hold a replica of a parent partition but not replicas of all its child partitions. A replica table is a matrix with one column per partition and one row per server. To determine where subordinate references exist, compare the replica table with a diagram of the eDirectory tree. If a server’s row shows a parent replica but not all of that parent’s child replicas, eDirectory has automatically created subordinate references for the missing children on that server.
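
The comparison can be automated. This Python sketch assumes the replica table is a dict that maps each server name to the set of partitions that server holds as master, read/write, or read-only replicas. It also assumes that partition roots are typeful, dot-separated names with no escaped dots. The function names are hypothetical. The example reuses the OU=SLC scenario from earlier but deliberately leaves the read/write replica of OU=SLC off NOR-SRV1 to show where a subordinate reference appears.

```python
def parent_partition(partition, partitions):
    """Return the nearest ancestor partition root, or None for [Root]."""
    if partition == "[Root]":
        return None
    name = partition
    while "." in name:
        name = name.split(".", 1)[1]  # drop the leftmost naming component
        if name in partitions:
            return name
    return "[Root]"


def subordinate_references(replica_table):
    """replica_table maps server -> set of partitions it holds (master, R/W, or R/O).

    Returns server -> set of partitions for which it holds subordinate references.
    """
    partitions = set().union(*replica_table.values()) | {"[Root]"}
    children = {p: set() for p in partitions}
    for p in partitions:
        parent = parent_partition(p, partitions)
        if parent is not None:
            children[parent].add(p)

    refs = {}
    for server, held in replica_table.items():
        # A subordinate reference exists for every child of a held partition
        # that the server does not hold itself.
        refs[server] = set()
        for p in held:
            refs[server] |= children[p] - held
    return refs


table = {
    "NOR-SRV1": {"[Root]", "OU=NORAD.O=ACME"},
    "SLC-SRV1": {"OU=SLC.OU=NORAD.O=ACME"},
}
print(subordinate_references(table))
# {'NOR-SRV1': {'OU=SLC.OU=NORAD.O=ACME'}, 'SLC-SRV1': set()}
```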

This completes our discussion of eDirectory partition and replica design. In review, we can organize most network designs into two different classifications:

Quick design—Most networks have few special needs. As a result, they can use a conservative approach to replica design.

Advanced design—Some networks have special needs that require complex design strategies.

Now let’s learn how to manage eDirectory partitions and replicas using iManager and WTM.

Test Objectives Covered:

Identify Basic eDirectory Administrative Procedures (continued)

Determine a WAN Traffic Manager Strategy for Your Tree (575)

Identify eDirectory Recovery Steps (3004)

Extend the eDirectory Schema (3004)

Redirect Resources in the Tree (3004)

eDirectory partition and replica management involves the daily tasks required to scale eDirectory. After you’ve created an eDirectory partitioning and replication design, you must master the following seven partition management skills:

Managing eDirectory partitions—First, you must gain experience in managing basic eDirectory components, including creating, merging, and moving partitions. This is typically accomplished using iManager (which we’ll discuss in much greater depth in later lessons).

Managing eDirectory replicas—Next, you’ll need to enhance your management skills to include adding eDirectory replicas and changing the replica type.

Managing WAN traffic—WAN Traffic Manager (WTM) enables you to manage replica synchronization traffic across WAN links. This is a critical eDirectory CNE skill because it forms the basis of your network optimization strategy.

Preventive maintenance—eDirectory maintenance begins with prevention, including partition security, backing up the Directory, maintaining a standard eDirectory version, monitoring SYS: volume space, and preparing the server for downtime.

Troubleshooting eDirectory inconsistencies—Another important aspect of eDirectory maintenance is troubleshooting inconsistencies, which occur when replicas cannot be synchronized or the information they share no longer matches.

Extending the eDirectory schema—As a CNE, you must ensure that your network’s schema matches the needs of your organization. Sometimes that means you’ll have to extend the schema to accommodate additional classes and attributes.

Redirecting resources in the tree—Managing eDirectory objects involves creating, modifying, and manipulating them in the tree. One of the key responsibilities you’ll have as an eDirectory CNE is providing support for redirecting resources in the tree. This is accomplished by moving and aliasing eDirectory objects using ConsoleOne.

That’s all there is to it—no sweat. We have quite a large hands-on eDirectory lesson ahead of us. Let’s get started with some basic management tasks.

eDirectory partition management involves the following three tasks:

Creating partitions—Creating a new partition actually involves splitting a child partition from its parent. A split happens locally on the servers that already hold the parent partition, because the child’s objects already reside there. Therefore, a split generates little network traffic and completes quickly.

Merging partitions—Merging is the opposite of splitting. Merging typically takes longer than splitting and can generate a great deal of network traffic, depending on the physical location of all servers in both partitions’ replica rings. This is especially important if a WAN is involved. To merge partitions, each server holding a replica of the parent partition must have a copy of the child partition. Likewise, each server holding a copy of the child partition must have a copy of the parent partition. The merge operation attempts to copy either the parent or the child partition to the appropriate servers, as needed.
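
The replica requirements for a merge reduce to two set differences. This Python sketch uses hypothetical names. It lists which servers need a new copy of the child or parent partition. It also flags copies that are likely to cross a WAN, under the assumption that a copy is sourced from a server at the same site whenever one already holds the data.

```python
def merge_plan(parent_ring, child_ring, site):
    """Return (servers needing the child, servers needing the parent, likely WAN copies).

    parent_ring / child_ring: sets of servers holding the parent / child partition.
    site: server -> site name (hypothetical physical-location map).
    """
    needs_child = sorted(parent_ring - child_ring)
    needs_parent = sorted(child_ring - parent_ring)

    def crosses_wan(server, source_ring):
        # True if no server already holding the data sits at the same site.
        return all(site[src] != site[server] for src in source_ring)

    wan_copies = [s for s in needs_child if crosses_wan(s, child_ring)]
    wan_copies += [s for s in needs_parent if crosses_wan(s, parent_ring)]
    return needs_child, needs_parent, wan_copies


sites = {"NOR-SRV1": "NORAD", "SLC-SRV1": "SLC"}
print(merge_plan({"NOR-SRV1"}, {"SLC-SRV1"}, sites))
# (['NOR-SRV1'], ['SLC-SRV1'], ['NOR-SRV1', 'SLC-SRV1'])
```

In this hub-and-remote layout, both copies cross the WAN link. That is why merges in such layouts deserve scheduling.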

Moving partitions—eDirectory enables you to move entire subtrees from one location to another. This is accomplished by moving a container (which must be a partition root) along with its contents. After you’ve moved a container and its contents, each user in the old container must perform the following two tasks to adapt to his new home context: log in using his new distinguished name, and change the Name Context field in the Login window of his Novell Client workstation.
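
Because a move changes every distinguished name below the moved container, each affected name can be rewritten by swapping its old parent suffix for the new one. The Python sketch below assumes typeful, dot-separated names without escaped dots. The function and the user CN=JDoe are hypothetical, and the move shown (OU=PR from OU=NORAD.O=ACME to directly under O=ACME) is only an illustration.

```python
def moved_name(old_name, old_parent, new_parent):
    """Rewrite a distinguished name after its container moves from old_parent to new_parent."""
    suffix = "." + old_parent
    if not old_name.endswith(suffix):
        raise ValueError(f"{old_name} is not below {old_parent}")
    # Keep everything left of the old parent, then append the new parent.
    return old_name[: -len(suffix)] + "." + new_parent


# Hypothetical move: OU=PR moves from OU=NORAD.O=ACME to directly under O=ACME.
print(moved_name("CN=JDoe.OU=PR.OU=NORAD.O=ACME", "OU=NORAD.O=ACME", "O=ACME"))
# CN=JDoe.OU=PR.O=ACME  (the user's new distinguished name)
print(moved_name("OU=PR.OU=NORAD.O=ACME", "OU=NORAD.O=ACME", "O=ACME"))
# OU=PR.O=ACME  (the new value for the Name Context field)
```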

eDirectory replica management involves the following two operations (discussed later in this chapter):

Adding replicas—Adding replicas is the method used to distribute partition copies throughout the network. When you add replicas to distributed servers, all the eDirectory data for that partition is copied from one server to another over the network. This operation causes significant network traffic.

Changing replica type—eDirectory also enables you to change a replica’s type. This is particularly important when you want to manage eDirectory partitions or authenticate to a local master or read/write replica of your home container. eDirectory makes it possible for you to upgrade or downgrade master, read/write, and read-only replicas.
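
The replica-type rules can be sketched as a function over one partition's replica ring. The Python below assumes a ring is a dict that maps each server name to its replica type. The function is hypothetical. It encodes two eDirectory behaviors:

- A partition has exactly one master, so promoting a replica to master demotes the old master to read/write.
- Subordinate references are managed automatically rather than by hand.

The rule that a master cannot be downgraded directly (you promote another replica instead) is included as an assumption.

```python
VALID_TYPES = {"master", "read/write", "read-only"}


def change_replica_type(ring, server, new_type):
    """Return a new {server: replica type} mapping for one partition's replica ring."""
    if new_type not in VALID_TYPES:
        raise ValueError(f"cannot change a replica to {new_type!r}")
    current = ring[server]
    if current == "subordinate reference":
        raise ValueError("subordinate references are placed and removed automatically")
    if current == "master" and new_type != "master":
        # Assumption: to give up the master role, promote another replica instead.
        raise ValueError("promote a different replica to master instead")

    updated = dict(ring)
    if new_type == "master":
        # A partition has exactly one master; the old master becomes read/write.
        for other, replica_type in ring.items():
            if replica_type == "master":
                updated[other] = "read/write"
    updated[server] = new_type
    return updated


ring = {"NOR-SRV1": "master", "SLC-SRV1": "read/write"}
print(change_replica_type(ring, "SLC-SRV1", "master"))
# {'NOR-SRV1': 'read/write', 'SLC-SRV1': 'master'}
```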

When eDirectory synchronizes partition replicas, it creates network traffic. If this traffic crosses WAN links unmanaged, it can increase costs and overload slow WAN links during high-use periods. NetWare 6 includes the WAN Traffic Manager to help you manage synchronization traffic across WAN links in the following ways:

WTM controls server-to-server traffic generated by eDirectory.

WTM controls periodic events initiated by eDirectory (such as replica synchronization).

WTM can restrict traffic based on cost, time of day, type of traffic, or a combination of these criteria.

However, WAN Traffic Manager does not perform the following tasks:

WTM does not control events initiated by network administrators or users.

WTM does not control non-eDirectory server-to-server traffic, such as time synchronization. Fortunately, we have Network Time Protocol (NTP) to solve that problem.
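
To make these criteria concrete, here is a Python model of the send-or-hold decision that WTM makes before eDirectory sends server-to-server traffic. It is only a sketch. Real WTM policies are written in WTM's own policy language and applied through ConsoleOne, and this code does not reproduce that syntax. The class names, the traffic-type label, and the numeric cost scale are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SyncRequest:
    initiated_by: str   # "edirectory" for periodic events, or "admin" / "user"
    traffic_type: str   # hypothetical label, e.g. "replica_sync"
    hour: int           # local hour, 0-23
    cost: int           # hypothetical cost assigned to the destination link


@dataclass
class Policy:
    allowed_types: set
    window_start: int   # first hour traffic is allowed
    window_end: int     # hour traffic stops (exclusive); may wrap past midnight
    max_cost: int


def in_window(hour, start, end):
    if start <= end:
        return start <= hour < end
    return hour >= start or hour < end  # window wraps past midnight


def should_send(request, policy):
    # WTM does not control events started by administrators or users.
    if request.initiated_by != "edirectory":
        return True
    return (
        request.traffic_type in policy.allowed_types
        and in_window(request.hour, policy.window_start, policy.window_end)
        and request.cost <= policy.max_cost
    )


night_only = Policy({"replica_sync"}, window_start=22, window_end=6, max_cost=20)
print(should_send(SyncRequest("edirectory", "replica_sync", 23, 10), night_only))  # True
print(should_send(SyncRequest("edirectory", "replica_sync", 12, 10), night_only))  # False
print(should_send(SyncRequest("admin", "replica_sync", 12, 10), night_only))       # True
```

Non-eDirectory traffic such as time synchronization never reaches this decision at all, which matches the list above.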

WAN Traffic Manager consists of the following three components:

WTM.NLM—This resides in the SYS:SYSTEM directory on each server. Before eDirectory initiates server-to-server traffic, WTM.NLM reads a WAN traffic policy and determines whether that traffic will be sent.

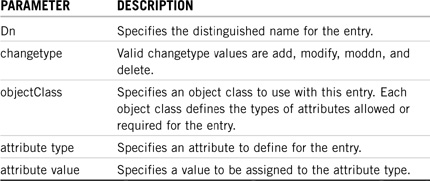

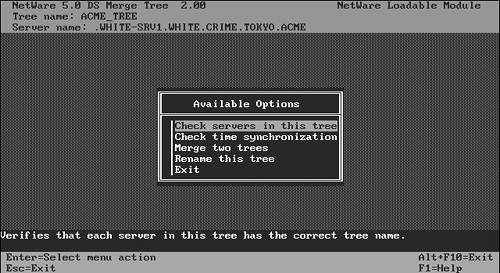

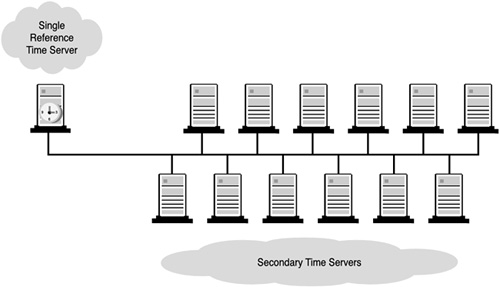

WTM ConsoleOne snap-in files—These files create an interface to the WAN Traffic Manager from within ConsoleOne. ConsoleOne enables you to create or to modify policies, to create LAN Area objects, and to apply policies to LAN Area objects or to servers. When WAN Traffic Manager is installed, the schema is extended to include a LAN Area object and three new detail pages: LAN Area Membership, WAN Policies, and Cost. A LAN Area object enables you to administer WAN traffic policies for a group of servers. If you don’t create a LAN Area object, you can still manage each server’s WAN traffic individually.