Implementing a WGAN is the same as implementing a vanilla GAN, except that the loss function differs and we need to clip the weights of the discriminator. Rather than walking through the whole implementation, we will look only at how to implement the WGAN loss function and how to clip the discriminator's weights.

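We know that the loss of the discriminator is given as:

$$L^D = -\mathbb{E}_{x}[D(x)] + \mathbb{E}_{z}[D(G(z))]$$

It can be implemented as follows, where `D_real` and `D_fake` are the discriminator's outputs on real and generated samples: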

D_loss = - tf.reduce_mean(D_real) + tf.reduce_mean(D_fake)
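As a rough numeric check of this expression, the sketch below evaluates the same formula on a small batch. The scores are made-up values, and `np.mean` is used here as a stand-in for `tf.reduce_mean`, so this is an illustration rather than part of the TensorFlow graph.

```python
import numpy as np

# Hypothetical discriminator scores for a batch of four samples (made-up numbers).
D_real = np.array([0.9, 1.1, 0.8, 1.2])     # scores on real samples
D_fake = np.array([-0.5, 0.1, -0.3, -0.1])  # scores on generated samples

# Same expression as above, with np.mean standing in for tf.reduce_mean.
D_loss = -np.mean(D_real) + np.mean(D_fake)
print(D_loss)  # approximately -1.2
```

The loss becomes more negative as the discriminator scores real samples higher than generated ones, which is what minimizing `D_loss` encourages.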

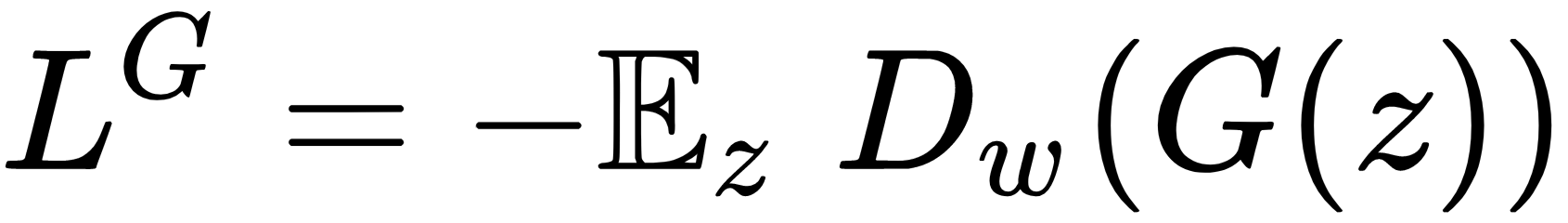

We know that the generator loss is given as:

$$L^G = -\mathbb{E}_{z}[D(G(z))]$$

It can be implemented as follows:

G_loss = -tf.reduce_mean(D_fake)
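To see how this loss behaves, the following sketch compares two hypothetical batches of discriminator scores on generated samples. The helper name `generator_loss` and all numbers are assumptions for illustration, and `np.mean` again stands in for `tf.reduce_mean`.

```python
import numpy as np

def generator_loss(d_fake):
    """Negative mean discriminator score on generated samples."""
    return -np.mean(d_fake)

# Hypothetical discriminator scores on two batches of generated samples (made-up numbers).
weak_fakes = np.array([-0.5, 0.1, -0.3, -0.1])  # scored low by the discriminator
better_fakes = np.array([0.6, 0.9, 0.7, 0.8])   # scored higher by the discriminator

loss_weak = generator_loss(weak_fakes)
loss_better = generator_loss(better_fakes)
print(loss_weak, loss_better)
```

The batch that the discriminator scores higher yields the lower loss, so minimizing `G_loss` pushes the generator toward samples the discriminator rates as more real.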

We clip the weights of the discriminator to the range [-0.01, 0.01] as follows, where `theta_D` is the list of the discriminator's parameters:

clip_D = [p.assign(tf.clip_by_value(p, -0.01, 0.01)) for p in theta_D]
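The effect of this clipping can be illustrated on plain arrays. In the sketch below, `theta_D` is a hypothetical pair of parameter arrays with made-up values, and `np.clip` plays the role of `tf.clip_by_value` followed by `assign`.

```python
import numpy as np

# Hypothetical discriminator parameters: one weight matrix and one bias vector.
theta_D = [
    np.array([[0.5, -0.002], [0.008, -0.3]]),
    np.array([0.02, -0.005]),
]

clip_value = 0.01  # the bound used in the line above

# Clip every parameter in place, mirroring p.assign(tf.clip_by_value(p, -0.01, 0.01)).
for p in theta_D:
    np.clip(p, -clip_value, clip_value, out=p)

print(theta_D[0])
print(theta_D[1])
```

Values already inside the range are left unchanged, while anything outside is moved to the nearest bound. In a TensorFlow 1.x graph, `clip_D` is a list of assign operations, so it would typically be run in the session right after each discriminator update.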