The goal of the generator is to generate fake images by learning the real data distribution. First, we sample noise $z$ from a Gaussian distribution. Then we concatenate $z$ with our conditioning variable, $\hat{c}_0$, and feed the result as input to the generator, which outputs a basic, low-resolution version of the image.
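A minimal sketch of how the generator input could be assembled, in plain Python so it runs without a deep-learning framework. The helper name `sample_generator_input`, the default noise size `z_dim=100`, the 128-dimensional conditioning variable in the usage line, and sampling $\hat{c}_0$ with the reparameterization trick ($\hat{c}_0 = \mu_0 + \sigma_0 \odot \epsilon$) under a diagonal covariance are all assumptions for illustration, not necessarily the exact implementation described here.

```python
import random

def sample_generator_input(mu, sigma, z_dim=100, rng=random):
    """Return the generator input [z ; c_hat_0] as one flat list (hypothetical helper).

    mu, sigma: per-dimension mean and standard deviation of the conditioning
    Gaussian, assumed diagonal and already computed from the text embedding phi_t.
    """
    # Noise vector z ~ N(0, I)
    z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
    # Conditioning variable via the reparameterization trick: c_hat_0 = mu + sigma * eps
    eps = [rng.gauss(0.0, 1.0) for _ in mu]
    c_hat_0 = [m + s * e for m, s, e in zip(mu, sigma, eps)]
    # Concatenate z and c_hat_0; this flat vector is what the generator receives
    return z + c_hat_0


# Usage: a 128-dimensional conditioning variable (an assumed size)
x = sample_generator_input([0.0] * 128, [1.0] * 128, rng=random.Random(0))
print(len(x))  # 228
```

Concatenation keeps the noise and the text-derived condition as separate slices of one vector, so the generator can learn to use both.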

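The loss function of the generator is given as follows:

$$L(G) = \mathbb{E}_{z \sim p_z,\, t \sim p_{\text{data}}}\left[\log\left(1 - D\left(G(z, \hat{c}_0), \varphi_t\right)\right)\right]$$
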
Let's examine this formula:

$z \sim p_z$ implies we sample $z$ from the noise prior, $p_z$.

$t \sim p_{\text{data}}$ implies we sample the text description, $t$, from the real data distribution.

$G(z, \hat{c}_0)$ implies that the generator takes the noise and the conditioning variable and returns an image. We feed this generated image to the discriminator.

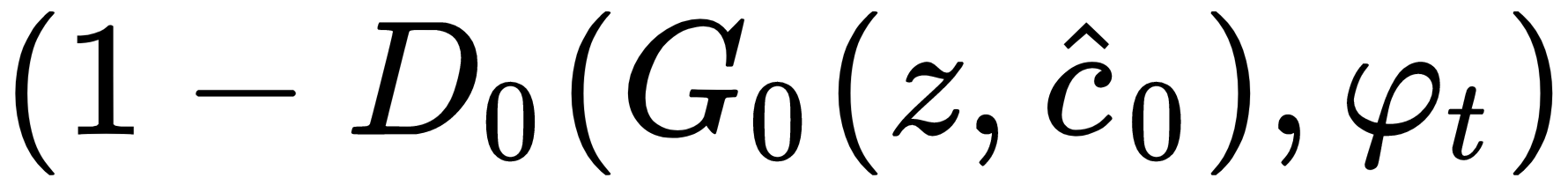

$\log\left(1 - D\left(G(z, \hat{c}_0), \varphi_t\right)\right)$ implies the log probability, as judged by the discriminator, of the generated image being fake, where $\varphi_t$ is the embedding of the text description $t$. The generator minimizes this term, so it is pushed to produce images that the discriminator accepts as real.
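A minimal sketch of how the expectation can be estimated from one batch by averaging over samples. It assumes, as an illustration, that the discriminator returns a pre-sigmoid score (logit) for each generated image paired with its $\varphi_t$; the helper names are hypothetical.

```python
import math

def log_one_minus_sigmoid(logit):
    # log(1 - sigmoid(a)) rewritten as -softplus(a) for numerical stability
    return -(max(logit, 0.0) + math.log1p(math.exp(-abs(logit))))

def generator_adversarial_loss(fake_logits):
    """Monte Carlo estimate of E_{z, t}[log(1 - D(G(z, c_hat_0), phi_t))] (hypothetical helper).

    fake_logits: the discriminator's pre-sigmoid scores for a batch of generated
    images, each scored together with the embedding phi_t of its text description.
    """
    return sum(log_one_minus_sigmoid(a) for a in fake_logits) / len(fake_logits)


# A discriminator that is unsure (logit 0, i.e. D = 0.5) gives log(0.5)
print(round(generator_adversarial_loss([0.0, 0.0]), 4))  # -0.6931
```

Because $\log(1 - \sigma(a)) = -\operatorname{softplus}(a)$, the code never forms $1 - D$ explicitly, which avoids taking $\log 0$ when the discriminator saturates.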

Along with this loss, we also add a regularizer term, $D_{KL}\left(\mathcal{N}(\mu_0(\varphi_t), \Sigma_0(\varphi_t)) \,\|\, \mathcal{N}(0, I)\right)$, to our loss function, where $\mu_0(\varphi_t)$ and $\Sigma_0(\varphi_t)$ are the mean and covariance computed from the text embedding. This term is the KL divergence between the conditioning Gaussian distribution and the standard Gaussian distribution. By keeping the conditioning distribution close to a standard Gaussian, it helps us avoid overfitting.
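If we assume the conditioning covariance is diagonal, $\Sigma_0(\varphi_t) = \operatorname{diag}(\sigma_0^2)$ (an assumption made here for illustration), the regularizer has a closed form over the $d$ dimensions of $\hat{c}_0$:

$$D_{KL}\left(\mathcal{N}(\mu_0, \operatorname{diag}(\sigma_0^2)) \,\|\, \mathcal{N}(0, I)\right) = \frac{1}{2}\sum_{j=1}^{d}\left(\mu_{0,j}^2 + \sigma_{0,j}^2 - 1 - \log \sigma_{0,j}^2\right)$$

For example, with $d = 2$, $\mu_0 = (1, 0)$ and $\sigma_0^2 = (1, 1)$, this gives $\frac{1}{2}\left[(1 + 1 - 1 - 0) + (0 + 1 - 1 - 0)\right] = \frac{1}{2}$. The term is zero only when $\mu_0 = 0$ and $\sigma_0^2 = 1$ in every dimension.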

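So, our final loss function for the generator becomes:

$$L(G) = \mathbb{E}_{z \sim p_z,\, t \sim p_{\text{data}}}\left[\log\left(1 - D\left(G(z, \hat{c}_0), \varphi_t\right)\right)\right] + \lambda\, D_{KL}\left(\mathcal{N}(\mu_0(\varphi_t), \Sigma_0(\varphi_t)) \,\|\, \mathcal{N}(0, I)\right)$$

where $\lambda$ is a hyperparameter that weights the regularizer against the adversarial term.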
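A minimal sketch of the complete generator loss for one batch, under stated assumptions: the discriminator returns logits, the conditioning Gaussian is diagonal and parameterized by its log-variance (a common choice, assumed here), and `lam=1.0` is an assumed default. The helper names are hypothetical.

```python
import math

def softplus(x):
    # Numerically stable log(1 + exp(x))
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def kl_to_standard_normal(mu, logvar):
    # Closed-form KL(N(mu, diag(exp(logvar))) || N(0, I))
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

def stage1_generator_loss(fake_logits, mus, logvars, lam=1.0):
    """Batch estimate of E[log(1 - D(G(z, c_hat_0), phi_t))] + lam * KL (hypothetical helper).

    fake_logits: discriminator pre-sigmoid scores for the generated images
    mus, logvars: one conditioning mean / log-variance vector per sample
    """
    n = len(fake_logits)
    # Adversarial term: log(1 - sigmoid(a)) == -softplus(a), averaged over the batch
    adversarial = -sum(softplus(a) for a in fake_logits) / n
    # Regularizer: KL term averaged over the batch
    kl = sum(kl_to_standard_normal(m, lv) for m, lv in zip(mus, logvars)) / n
    return adversarial + lam * kl


loss = stage1_generator_loss([0.0], [[1.0, 0.0]], [[0.0, 0.0]], lam=2.0)
print(round(loss, 4))  # 0.3069
```

Parameterizing by log-variance keeps $\sigma_0^2 = e^{\text{logvar}}$ positive without any constraint on the network output.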