Now we will see how to calculate the gradients of the loss with respect to the hidden-to-hidden layer weights, $W$, for all the gates and the candidate state.

Let's calculate the gradient of the loss with respect to $W_i$.

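Recall the equation of the input gate, which is given as follows:

$$i_t = \sigma(U_i x_t + W_i h_{t-1} + b_i)$$

Thus, by the chain rule, we can write the following:

$$\frac{\partial L}{\partial W_i} = \frac{\partial L}{\partial i_t} \cdot \frac{\partial i_t}{\partial W_i}$$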

Let's calculate each of the terms in the preceding equation.

We have already seen how to compute the first term, the gradient of the loss with respect to the input gate, $\frac{\partial L}{\partial i_t}$, in the *Gradients with respect to gates* section. Refer to equation (2).

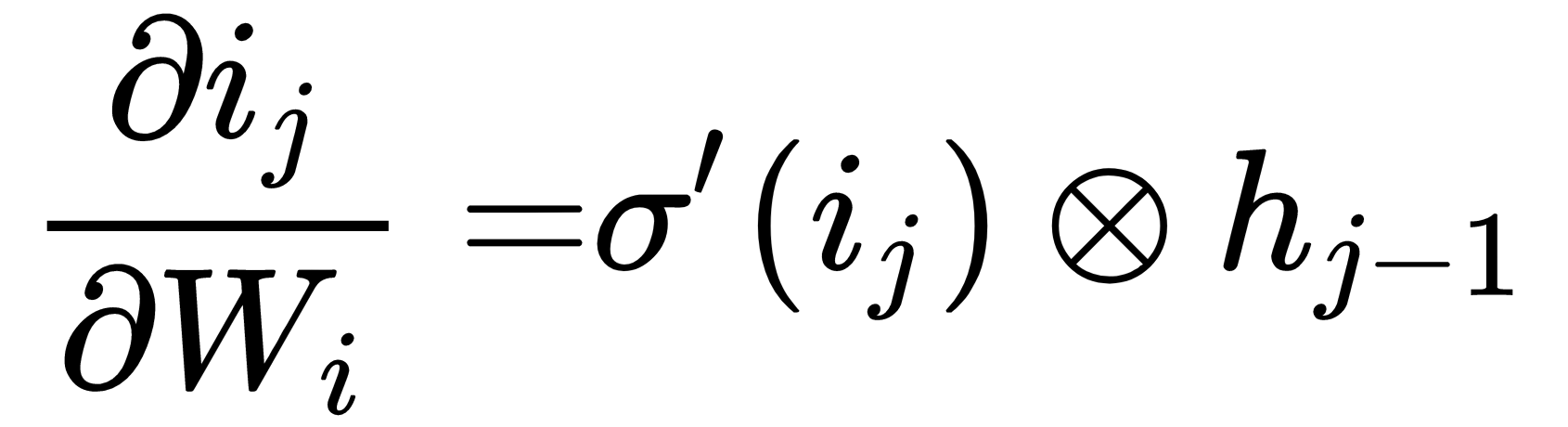

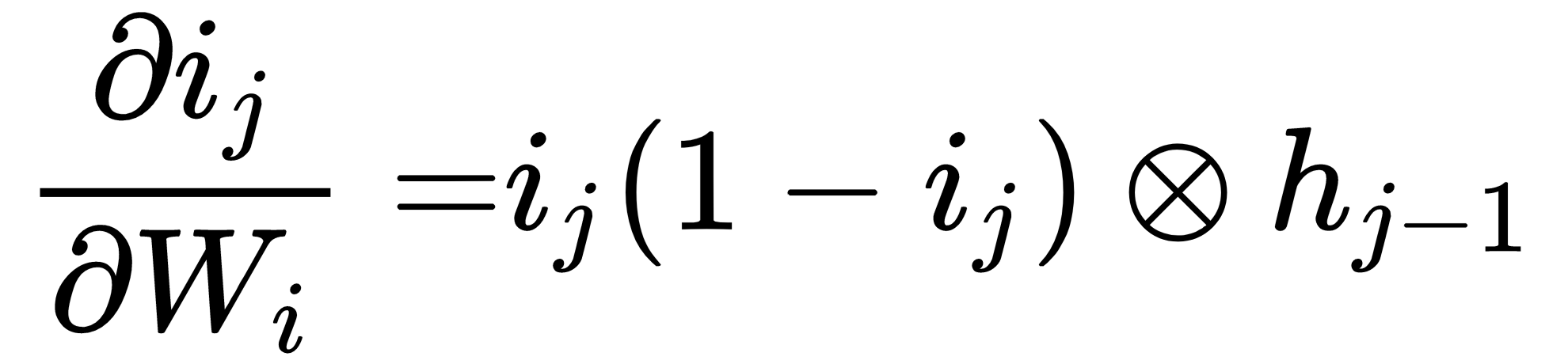

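So, let's look at the second term:

$$\frac{\partial i_t}{\partial W_i} = \sigma'(U_i x_t + W_i h_{t-1} + b_i) \otimes h_{t-1}$$

Here, $\otimes$ denotes the outer product. Since we know the derivative of the sigmoid function, $\sigma'(z) = \sigma(z)(1 - \sigma(z))$, we can write the following:

$$\frac{\partial i_t}{\partial W_i} = \sigma(U_i x_t + W_i h_{t-1} + b_i)\,\bigl(1 - \sigma(U_i x_t + W_i h_{t-1} + b_i)\bigr) \otimes h_{t-1}$$

But $i_t$ is already a result of sigmoid, that is, $i_t = \sigma(U_i x_t + W_i h_{t-1} + b_i)$, so we can just write $i_t (1 - i_t)$. Thus, our equation becomes the following:

$$\frac{\partial i_t}{\partial W_i} = i_t (1 - i_t) \otimes h_{t-1}$$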
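The substitution of $i_t (1 - i_t)$ for the sigmoid derivative can be checked numerically. The following is a minimal sketch, not part of the original derivation: the pre-activation values in `z` are made up for illustration, and the check simply compares the closed form against a central finite difference.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Illustrative pre-activation values (assumed, not taken from the text).
z = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
i_t = sigmoid(z)

# Derivative expressed through the gate output itself: i_t * (1 - i_t).
analytic = i_t * (1.0 - i_t)

# Central finite-difference estimate of d sigmoid / dz at the same points.
eps = 1e-6
numeric = (sigmoid(z + eps) - sigmoid(z - eps)) / (2.0 * eps)

max_abs_diff = float(np.max(np.abs(analytic - numeric)))
print(max_abs_diff)
```

Because the gate output is already stored during the forward pass, the derivative costs only one multiplication per element in the backward pass.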

Thus, our final equation for calculating the gradient of the loss with respect to $W_i$ becomes the following:

$$\frac{\partial L}{\partial W_i} = \frac{\partial L}{\partial i_t} \cdot i_t (1 - i_t) \otimes h_{t-1}$$
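As a sanity check on this result, the gradient for the input gate weights can be sketched in NumPy as the outer product of $\frac{\partial L}{\partial i_t} \cdot i_t (1 - i_t)$ with $h_{t-1}$. Everything concrete here is an assumption made for illustration: the sizes, the random values, the names `U_i` and `b_i` for the input-to-hidden weights and bias, and the toy loss $L = r \cdot i_t$, which stands in for the real loss so that $\frac{\partial L}{\partial i_t} = r$ rather than the value from equation (2).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def input_gate(W_i, U_i, b_i, x_t, h_prev):
    # i_t = sigmoid(U_i x_t + W_i h_{t-1} + b_i)
    return sigmoid(U_i @ x_t + W_i @ h_prev + b_i)

rng = np.random.default_rng(0)
hidden_size, input_size = 3, 2  # illustrative sizes
W_i = rng.normal(size=(hidden_size, hidden_size))
U_i = rng.normal(size=(hidden_size, input_size))
b_i = rng.normal(size=hidden_size)
x_t = rng.normal(size=input_size)
h_prev = rng.normal(size=hidden_size)

# Assumption: a toy loss L = r . i_t, so that dL/di_t is simply r.
r = rng.normal(size=hidden_size)

def toy_loss(W):
    return float(r @ input_gate(W, U_i, b_i, x_t, h_prev))

# Analytic gradient: (dL/di_t * i_t * (1 - i_t)) outer h_{t-1}.
i_t = input_gate(W_i, U_i, b_i, x_t, h_prev)
dL_di = r
dW_i = np.outer(dL_di * i_t * (1.0 - i_t), h_prev)

# Finite-difference gradient, one weight at a time.
eps = 1e-6
dW_i_numeric = np.zeros_like(W_i)
for row in range(hidden_size):
    for col in range(hidden_size):
        step = np.zeros_like(W_i)
        step[row, col] = eps
        dW_i_numeric[row, col] = (
            toy_loss(W_i + step) - toy_loss(W_i - step)
        ) / (2.0 * eps)

max_abs_diff = float(np.max(np.abs(dW_i - dW_i_numeric)))
print(max_abs_diff)
```

The result has the same shape as `W_i`, which is a quick way to confirm that the outer product is taken in the right order.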

Now, let's find the gradient of the loss with respect to $W_f$.

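Recall the equation of the forget gate, which is given as follows:

$$f_t = \sigma(U_f x_t + W_f h_{t-1} + b_f)$$

Thus, by the chain rule, we can write the following:

$$\frac{\partial L}{\partial W_f} = \frac{\partial L}{\partial f_t} \cdot \frac{\partial f_t}{\partial W_f}$$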

We have already seen how to compute $\frac{\partial L}{\partial f_t}$ in the *Gradients with respect to gates* section. Refer to equation (3). So, let's look at computing the second term. The forget gate is also a sigmoid of its pre-activation, so the same simplification applies:

$$\frac{\partial f_t}{\partial W_f} = f_t (1 - f_t) \otimes h_{t-1}$$

Thus, our final equation for calculating the gradient of the loss with respect to $W_f$ becomes the following:

$$\frac{\partial L}{\partial W_f} = \frac{\partial L}{\partial f_t} \cdot f_t (1 - f_t) \otimes h_{t-1}$$

Let's calculate the gradient of the loss with respect to $W_o$.

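Recall the equation of the output gate, which is given as follows:

$$o_t = \sigma(U_o x_t + W_o h_{t-1} + b_o)$$

So, by the chain rule, we can write the following:

$$\frac{\partial L}{\partial W_o} = \frac{\partial L}{\partial o_t} \cdot \frac{\partial o_t}{\partial W_o}$$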

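Refer to equation (1) for the first term, $\frac{\partial L}{\partial o_t}$. The second term can be computed as follows:

$$\frac{\partial o_t}{\partial W_o} = o_t (1 - o_t) \otimes h_{t-1}$$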

Thus, our final equation for calculating the gradient of the loss with respect to $W_o$ becomes the following:

$$\frac{\partial L}{\partial W_o} = \frac{\partial L}{\partial o_t} \cdot o_t (1 - o_t) \otimes h_{t-1}$$
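The input, forget, and output gates all use the sigmoid, so their hidden-to-hidden weight gradients share one form: the upstream gradient times `gate * (1 - gate)`, taken as an outer product with $h_{t-1}$. The sketch below captures that in a single hypothetical helper, `sigmoid_gate_weight_grad`, and accumulates the output gate gradient over a few time steps, since the weights are shared across steps in backpropagation through time. The arrays are random stand-ins: in a real network `dL_do` would come from equation (1), and `o` and `h` from the forward pass.

```python
import numpy as np

def sigmoid_gate_weight_grad(dL_dgate, gate, h_prev):
    """Per-time-step gradient for a sigmoid gate's hidden-to-hidden weights."""
    return np.outer(dL_dgate * gate * (1.0 - gate), h_prev)

rng = np.random.default_rng(1)
hidden_size, num_steps = 3, 4  # illustrative sizes

# Random stand-ins for quantities a real forward/backward pass would provide.
dL_do = rng.normal(size=(num_steps, hidden_size))  # upstream gradients
o = 1.0 / (1.0 + np.exp(-rng.normal(size=(num_steps, hidden_size))))  # gate values in (0, 1)
h = rng.normal(size=(num_steps + 1, hidden_size))  # h[t] is the hidden state entering step t

# The same W_o is used at every step, so per-step contributions are summed.
dW_o = np.zeros((hidden_size, hidden_size))
for t in range(num_steps):
    dW_o += sigmoid_gate_weight_grad(dL_do[t], o[t], h[t])

print(dW_o.shape)
```

The same helper would apply to $W_i$ and $W_f$ by passing the corresponding gate values and upstream gradients.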

Let's move on to calculating the gradient of the loss with respect to $W_g$.

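Recall the candidate state equation:

$$g_t = \tanh(U_g x_t + W_g h_{t-1} + b_g)$$

Thus, by the chain rule, we can write the following:

$$\frac{\partial L}{\partial W_g} = \frac{\partial L}{\partial g_t} \cdot \frac{\partial g_t}{\partial W_g}$$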

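Refer to equation (4) for the first term, $\frac{\partial L}{\partial g_t}$. The second term can be computed as follows:

$$\frac{\partial g_t}{\partial W_g} = \tanh'(U_g x_t + W_g h_{t-1} + b_g) \otimes h_{t-1}$$

We know that the derivative of tanh is $\tanh'(z) = 1 - \tanh^2(z)$, and $g_t$ is already the result of tanh, so we can write the following:

$$\frac{\partial g_t}{\partial W_g} = (1 - g_t^2) \otimes h_{t-1}$$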

Thus, our final equation for calculating the gradient of the loss with respect to $W_g$ becomes the following:

$$\frac{\partial L}{\partial W_g} = \frac{\partial L}{\partial g_t} \cdot (1 - g_t^2) \otimes h_{t-1}$$
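The candidate state gradient differs from the gate gradients only in the activation derivative, which is $1 - g_t^2$ instead of the sigmoid form. The sketch below checks that with a directional finite difference: it perturbs `W_g` along a random direction and compares the observed change in a toy loss against the change predicted by the analytic gradient. The sizes, the random values, the names `U_g` and `b_g`, and the toy loss $L = r \cdot g_t$ are all assumptions made for illustration; in the full derivation $\frac{\partial L}{\partial g_t}$ would come from equation (4).

```python
import numpy as np

rng = np.random.default_rng(2)
hidden_size, input_size = 4, 3  # illustrative sizes
W_g = rng.normal(size=(hidden_size, hidden_size))
U_g = rng.normal(size=(hidden_size, input_size))
b_g = rng.normal(size=hidden_size)
x_t = rng.normal(size=input_size)
h_prev = rng.normal(size=hidden_size)

# Assumption: a toy loss L = r . g_t, so that dL/dg_t is simply r.
r = rng.normal(size=hidden_size)

def candidate(W):
    # g_t = tanh(U_g x_t + W h_{t-1} + b_g)
    return np.tanh(U_g @ x_t + W @ h_prev + b_g)

def toy_loss(W):
    return float(r @ candidate(W))

# Analytic gradient: (dL/dg_t * (1 - g_t^2)) outer h_{t-1}.
g_t = candidate(W_g)
dW_g = np.outer(r * (1.0 - g_t ** 2), h_prev)

# Directional check: slope of the loss along a random direction in weight space.
direction = rng.normal(size=W_g.shape)
eps = 1e-6
slope_numeric = (
    toy_loss(W_g + eps * direction) - toy_loss(W_g - eps * direction)
) / (2.0 * eps)
slope_analytic = float(np.sum(dW_g * direction))

print(abs(slope_numeric - slope_analytic))
```

A directional check needs only two loss evaluations regardless of the size of `W_g`, which makes it a cheap first test before a full per-weight comparison.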