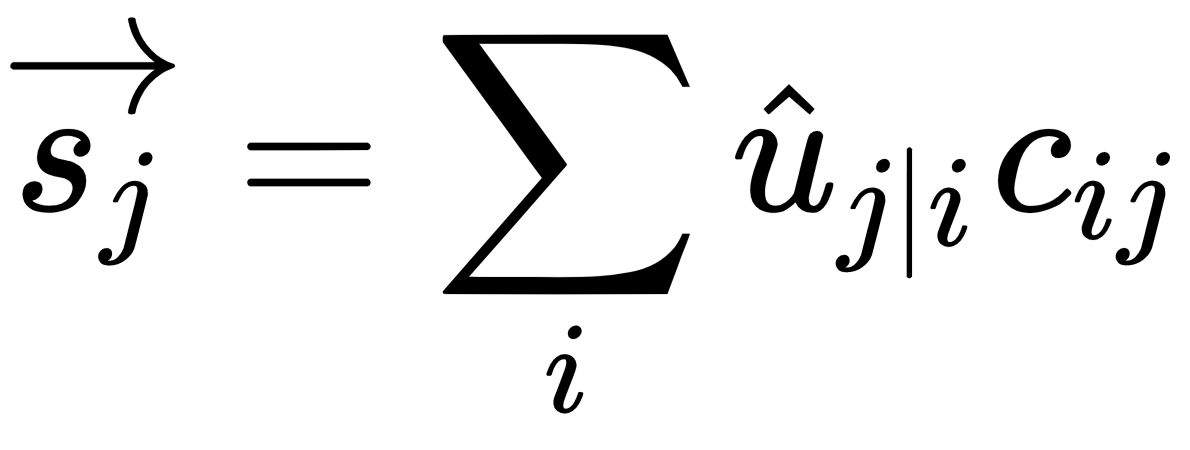

Next, we multiply the prediction vectors $\hat{u}_{j|i}$ by the coupling coefficients $c_{ij}$. A coupling coefficient exists between each capsule in the lower layer and each capsule in the higher layer. We know that capsules in the lower layer send their output to the capsules in the higher layer. The coupling coefficient helps a capsule in the lower layer decide which capsule in the higher layer it should send its output to.
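To make the idea of a coefficient per pair of capsules concrete, here is a minimal sketch. The capsule names, the second higher-layer capsule (`hand`), and all the numbers are assumptions made up for illustration; they are not learned values.

```python
import numpy as np

lower = ["eye", "nose", "finger"]  # lower-layer capsules i
higher = ["face", "hand"]          # higher-layer capsules j ("hand" is hypothetical)

# c[i, j]: assumed coupling coefficient between lower capsule i and
# higher capsule j. These values are invented for the example.
c = np.array([
    [0.9, 0.1],  # eye
    [0.8, 0.2],  # nose
    [0.1, 0.9],  # finger
])

# For each lower capsule, pick the higher capsule with the largest
# coefficient: that is where most of its output is routed.
preferred = {lower[i]: higher[int(np.argmax(c[i]))] for i in range(len(lower))}
print(preferred)
```

Each row of `c` belongs to one lower-layer capsule, so reading along a row tells us how strongly that capsule is coupled to each higher-layer capsule.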

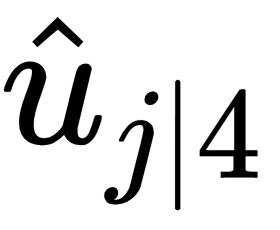

For instance, let's consider the same example, where we are trying to predict whether an image contains a face. $c_{1j}$ represents the agreement between the lower-layer capsule $u_1$ and the higher-layer capsule $j$; that is, the agreement between an eye and a face. Since we know that the eye is on the face, the $c_{1j}$ value will be increased. We know that the prediction vector $\hat{u}_{j|1}$ implies the predicted position of the face based on the eyes. Multiplying $\hat{u}_{j|1}$ by $c_{1j}$ implies that we are increasing the importance of the eyes, as the value of $c_{1j}$ is high.

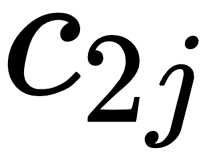

$c_{2j}$ represents the agreement between a nose and a face. Since we know that the nose is on the face, the $c_{2j}$ value will be increased. We know that the prediction vector $\hat{u}_{j|2}$ implies the predicted position of the face based on the nose. Multiplying $\hat{u}_{j|2}$ by $c_{2j}$ implies that we are increasing the importance of the nose, as the value of $c_{2j}$ is high.

Let's consider another low-level feature, say, $u_4$, which detects a finger. Now, $c_{4j}$ represents the agreement between a finger and a face, which will be low. Multiplying $\hat{u}_{j|4}$ by $c_{4j}$ implies that we are decreasing the importance of the finger, as the value of $c_{4j}$ is low.
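The effect of this multiplication can be sketched with NumPy. The prediction vectors and coupling coefficients below are invented for illustration (two-dimensional vectors, with a high coefficient for the eye and the nose and a low one for the finger); the helper name `weight_predictions` is also our own.

```python
import numpy as np

def weight_predictions(u_hat, c):
    """Scale each prediction vector (one per row) by its coupling coefficient."""
    u_hat = np.asarray(u_hat, dtype=float)
    c = np.asarray(c, dtype=float)
    # c[:, None] turns shape (n,) into (n, 1) so it broadcasts across each row.
    return c[:, None] * u_hat

# Assumed prediction vectors for the face capsule j, one per lower capsule.
u_hat = np.array([
    [0.9, 0.8],   # eye
    [0.8, 0.9],   # nose
    [-0.7, 0.1],  # finger
])

# Assumed coupling coefficients: high for eye and nose, low for finger.
c = np.array([0.45, 0.45, 0.10])

weighted = weight_predictions(u_hat, c)
for name, vec in zip(["eye", "nose", "finger"], weighted):
    print(name, vec, "length:", np.linalg.norm(vec))
```

With these made-up numbers, the finger's weighted vector is much shorter than the eye's or the nose's, which is what we mean by decreasing its importance.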

But how are these coupling coefficients learned? Unlike weights, which are learned through backpropagation, the coupling coefficients are computed during forward propagation itself, using an algorithm called dynamic routing, which we will discuss in an upcoming section.

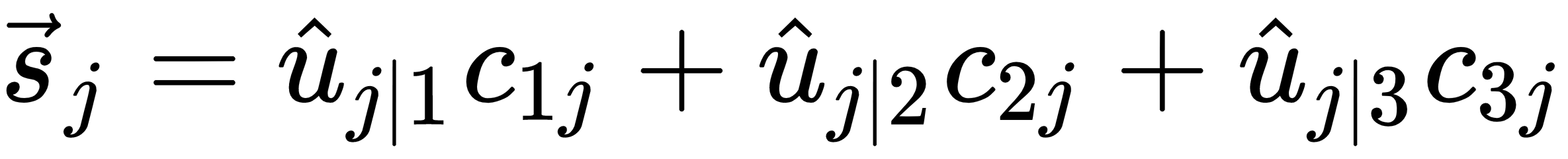

After multiplying $\hat{u}_{j|i}$ by $c_{ij}$, we sum them up, as follows:

$$s_j = c_{1j}\hat{u}_{j|1} + c_{2j}\hat{u}_{j|2} + \dots$$

Thus, we can write our equation as follows:

$$s_j = \sum_i c_{ij}\hat{u}_{j|i}$$
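The weighted sum over the lower-layer capsules, $\sum_i c_{ij}\hat{u}_{j|i}$, can be sketched in a few lines of NumPy for a single higher-layer capsule $j$. The function name `weighted_sum` and the input values are assumptions chosen for illustration only.

```python
import numpy as np

def weighted_sum(u_hat, c):
    """Return sum_i c[i] * u_hat[i] for one higher-layer capsule j.

    u_hat: prediction vectors, shape (num_lower_capsules, dim)
    c:     coupling coefficients, shape (num_lower_capsules,)
    """
    u_hat = np.asarray(u_hat, dtype=float)
    c = np.asarray(c, dtype=float)
    # Scale every prediction vector by its coefficient, then add them up.
    return (c[:, None] * u_hat).sum(axis=0)

# Made-up prediction vectors (rows: eye, nose, finger) and coefficients.
u_hat = np.array([[0.9, 0.8], [0.8, 0.9], [-0.7, 0.1]])
c = np.array([0.45, 0.45, 0.10])

s_j = weighted_sum(u_hat, c)
print(s_j)
```

Since the operation is a sum of scaled rows, it is equivalent to the matrix product `c @ u_hat`; we write it out explicitly here to mirror the equation.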