We have covered several types of GANs, and their applications are wide-ranging. We have seen how the generator learns the distribution of real data and generates new, realistic samples. We will now look at a different and innovative type of GAN called the CycleGAN.

Unlike the GANs we have seen so far, the CycleGAN maps data from one domain to another: it learns a mapping from the distribution of images in one domain to the distribution of images in another domain. To put it simply, we translate images from one domain to another.

What does this mean? Assume we want to convert a grayscale image to a colored image. The grayscale image is one domain and the colored image is another domain. A CycleGAN learns the mapping between these two domains and translates between them. This means that given a grayscale image, a CycleGAN converts the image into a colored one.

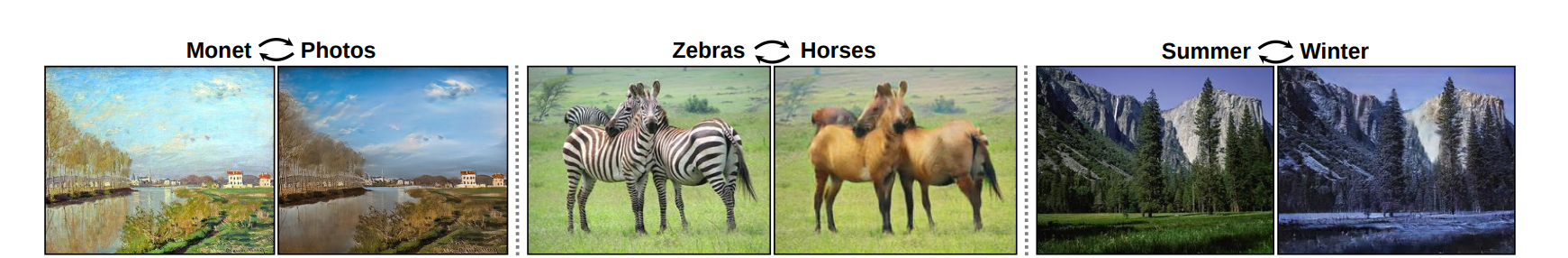

CycleGANs have numerous applications, such as converting real photos to artistic pictures, season transfer, photo enhancement, and many more. The following figure shows how a CycleGAN converts images between different domains:

But what is so special about CycleGANs? It's their ability to convert images from one domain to another without any paired examples. Let's say we are converting photos (source) to paintings (target). In a normal image-to-image translation, how do we do that? We prepare the training data by collecting some photos and their corresponding paintings in pairs, as shown in the following image:

Collecting these paired data points for every use case is an expensive task, and we might not have many records or pairs. Here is where the biggest advantage of a CycleGAN lies. It doesn't require data in aligned pairs. To convert from photos to paintings, we just need a bunch of photos and a bunch of paintings. They don't have to map or align with each other.

As shown in the following figure, we have some photos in one column and some paintings in another column; as you can see, they are not paired with each other. They are completely different images:

Thus, to convert images from any source domain to the target domain, we just need a bunch of images in both domains, and they do not have to be paired. Now let's see how CycleGANs work and how they learn the mapping between the source and the target domain.
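
To make the idea of unpaired data concrete, here is a minimal sketch of how a training batch might be drawn from two independent image collections. The function name `sample_unpaired_batch` and the file names are hypothetical and used only for illustration; a real pipeline would load and preprocess the image files.

```python
import random

def sample_unpaired_batch(photos, paintings, batch_size, rng=None):
    """Draw a batch from each domain independently, with no pairing."""
    if rng is None:
        rng = random.Random()
    # Each domain is sampled on its own, so index i in one batch has
    # no relationship to index i in the other batch.
    photo_batch = rng.sample(photos, batch_size)
    painting_batch = rng.sample(paintings, batch_size)
    return photo_batch, painting_batch

# Hypothetical file names; the two collections may differ in size.
photos = [f"photo_{i:03d}.jpg" for i in range(10)]
paintings = [f"painting_{i:03d}.jpg" for i in range(7)]

photo_batch, painting_batch = sample_unpaired_batch(
    photos, paintings, batch_size=4, rng=random.Random(0)
)
```

Note that the two collections are never zipped together or indexed jointly. That is the practical difference from a paired image-to-image dataset, where each photo would be stored alongside its matching painting.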

Unlike other GANs, the CycleGAN consists of two generators and two discriminators. Let's represent an image in the source domain by $x$ and an image in the target domain by $y$. We need to learn the mapping between $x$ and $y$.
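
As a rough sketch of this four-network layout, the snippet below wires up two generators and two discriminators as plain Python callables. It assumes the usual CycleGAN arrangement, in which each generator translates in one direction and each discriminator judges images from one domain. All names (`CycleGANComponents`, `gen_source_to_target`, and so on) are hypothetical, and the placeholder functions stand in for real neural networks.

```python
from dataclasses import dataclass
from typing import Callable, List

# A flat list of pixel intensities stands in for a real image tensor.
Image = List[float]

@dataclass
class CycleGANComponents:
    gen_source_to_target: Callable[[Image], Image]  # e.g. photo -> painting
    gen_target_to_source: Callable[[Image], Image]  # e.g. painting -> photo
    disc_source: Callable[[Image], float]  # scores source-domain images
    disc_target: Callable[[Image], float]  # scores target-domain images

# Placeholder functions (assumptions for illustration, not trained models).
def brighten(image: Image) -> Image:
    return [min(1.0, p + 0.1) for p in image]

def darken(image: Image) -> Image:
    return [max(0.0, p - 0.1) for p in image]

def mean_score(image: Image) -> float:
    return sum(image) / len(image)

model = CycleGANComponents(
    gen_source_to_target=brighten,
    gen_target_to_source=darken,
    disc_source=mean_score,
    disc_target=mean_score,
)

source_image = [0.2, 0.5, 0.8]
fake_target = model.gen_source_to_target(source_image)
# The target-domain discriminator judges the translated image.
fake_target_score = model.disc_target(fake_target)
```

In a real model, each of the four fields would be a trainable network, and the discriminator outputs would feed adversarial losses rather than a simple mean.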

Let's say we are learning to convert a real picture, $x$, to a painting, $y$, as shown in the following figure: