We learned that, in the CBOW model, we try to predict the target word given the context words, so it takes $C$ context words as input and returns one target word as output. In the CBOW model with a single context word, we have only one context word, that is, $C = 1$. So, the network takes only one context word as input and returns one target word as output.
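To make the single-context-word setup concrete, here is a minimal Python sketch that turns an example sentence into (context, target) training pairs with $C = 1$. It assumes the example sentence reads "The sun rises in the east", and the pairing rule (each word acts as the context for the word that follows it) is an illustrative assumption, not necessarily how the pairs are built in practice. The function name `single_context_pairs` is hypothetical.

```python
def single_context_pairs(sentence):
    """Hypothetical helper: pair each word (context, C = 1) with the next word (target).

    The "next word" rule is an assumption made only for illustration.
    """
    words = sentence.lower().split()
    # zip aligns word t (context) with word t + 1 (target)
    return [(context, target) for context, target in zip(words, words[1:])]


pairs = single_context_pairs("The sun rises in the east")
for context, target in pairs:
    print(f"context={context!r} -> target={target!r}")
```

Each pair feeds exactly one word into the network and expects exactly one word back, which is the input/output shape of the single-context-word CBOW model.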

Before going ahead, let's familiarize ourselves with the notation. All the unique words in our corpus are called the vocabulary. Considering the example we saw in the Understanding the CBOW model section, we have five unique words in the sentence: the, sun, rises, in, and east. These five words are our vocabulary.
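The vocabulary can be built with a few lines of Python. This sketch assumes the example sentence is "The sun rises in the east" and lowercases it so that "The" and "the" count as one word; `word_to_index` is a hypothetical name for the word-to-position mapping.

```python
sentence = "The sun rises in the east"  # assumed wording of the example sentence

# Lowercase so "The" and "the" are the same word, then keep each word once,
# in order of first appearance (dict keys preserve insertion order)
vocab = list(dict.fromkeys(sentence.lower().split()))

# Map every vocabulary word to a 0-based position
word_to_index = {word: k for k, word in enumerate(vocab)}

print(vocab)                  # ['the', 'sun', 'rises', 'in', 'east']
print(len(vocab))             # 5
print(word_to_index["east"])  # 4
```

Although the sentence has six tokens, "the" appears twice, so the vocabulary has five entries.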

Let  denote the size of the vocabulary (that is, number of words) and

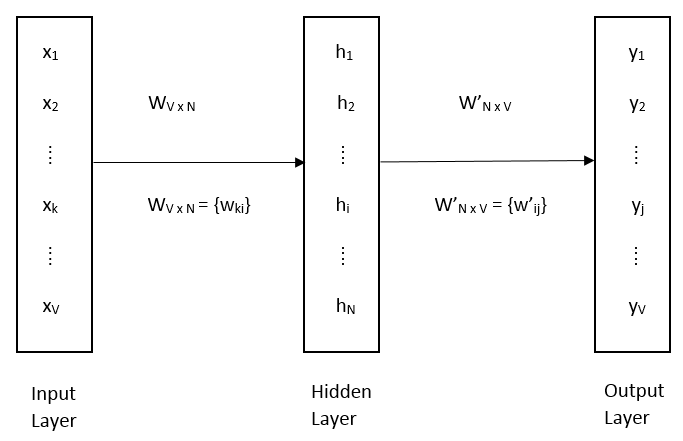

denote the size of the vocabulary (that is, number of words) and  denotes the number of neurons in the hidden layer. We learned that we have one input, one hidden, and one output layer:

denotes the number of neurons in the hidden layer. We learned that we have one input, one hidden, and one output layer:

- The input layer is represented by $X = \{x_1, x_2, x_3, \ldots, x_k, \ldots, x_V\}$. When we say $x_k$, it represents the $k^{th}$ input word in the vocabulary.
- The hidden layer is represented by $H = \{h_1, h_2, h_3, \ldots, h_i, \ldots, h_N\}$. When we say $h_i$, it represents the $i^{th}$ neuron in the hidden layer.
- The output layer is represented by $Y = \{y_1, y_2, y_3, \ldots, y_j, \ldots, y_V\}$. When we say $y_j$, it represents the $j^{th}$ output word in the vocabulary.
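A common way to realize the input layer $X$ for a single context word is a one-hot vector of length $V$: the entry for the context word is 1 and every other entry is 0. The sketch below assumes that encoding and uses NumPy; `one_hot` is a hypothetical helper. Note that Python indexes from 0, so the text's $x_k$ corresponds to `x[k - 1]`.

```python
import numpy as np

vocab = ["the", "sun", "rises", "in", "east"]
V = len(vocab)  # size of the vocabulary


def one_hot(word):
    """Build the input layer X for one context word (assumed one-hot encoding)."""
    x = np.zeros(V)
    x[vocab.index(word)] = 1.0  # only the context word's position is set to 1
    return x


x = one_hot("sun")
print(x)  # [0. 1. 0. 0. 0.]
```

The input vector has $V$ entries, matching $X = \{x_1, \ldots, x_V\}$; the hidden layer would have $N$ entries and the output layer $V$ entries.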

The dimension of the input-to-hidden layer weight matrix $W$ is $V \times N$ (that is, the size of our vocabulary $\times$ the number of neurons in the hidden layer), and the dimension of the hidden-to-output layer weight matrix $W'$ is $N \times V$ (that is, the number of neurons in the hidden layer $\times$ the size of the vocabulary). The elements of these matrices are represented as follows:
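The two weight-matrix shapes can be checked directly in NumPy. This is a minimal sketch: $V = 5$ comes from the example vocabulary, while $N = 3$ and the small random initialization are arbitrary illustrative choices, not values from the text.

```python
import numpy as np

V = 5  # vocabulary size (the, sun, rises, in, east)
N = 3  # number of hidden neurons; an arbitrary choice for illustration
rng = np.random.default_rng(0)

# Input-to-hidden weights W: one row per vocabulary word, one column per hidden neuron
W = rng.normal(scale=0.1, size=(V, N))

# Hidden-to-output weights W': one row per hidden neuron, one column per vocabulary word
W_prime = rng.normal(scale=0.1, size=(N, V))

print(W.shape)        # (5, 3)
print(W_prime.shape)  # (3, 5)
```

Because $W$ has one row per word, each row can be read as that word's $N$-dimensional vector.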

- $w_{ki}$ represents an element in the matrix $W$ between node $k$ of the input layer and node $i$ of the hidden layer.
- $w'_{ij}$ represents an element in the matrix $W'$ between node $i$ of the hidden layer and node $j$ of the output layer.
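In NumPy, the element notation maps onto array indexing: $w_{ki}$ is `W[k, i]` and $w'_{ij}$ is `W_prime[i, j]`, with indices shifted to start at 0. The sketch also shows one consequence of the shapes, assuming, as in the standard word2vec formulation, that the hidden layer is the linear product $h = W^T x$ with no bias or activation: for a one-hot input with $x_k = 1$, $h$ is simply row $k$ of $W$. The indices and random weights are arbitrary.

```python
import numpy as np

V, N = 5, 3
rng = np.random.default_rng(0)
W = rng.normal(size=(V, N))        # w_ki is stored at W[k, i] (0-based)
W_prime = rng.normal(size=(N, V))  # w'_ij is stored at W_prime[i, j] (0-based)

k, i, j = 1, 2, 4  # example indices, chosen arbitrarily
print("w_ki  =", W[k, i])          # weight from input node k to hidden node i
print("w'_ij =", W_prime[i, j])    # weight from hidden node i to output node j

# One-hot input with x_k = 1 (assumed encoding)
x = np.zeros(V)
x[k] = 1.0

# Assumed linear hidden layer h = W^T x: with a one-hot x it copies row k of W
h = W.T @ x
print(np.allclose(h, W[k]))  # True
```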

The following figure will help us to attain clarity on the notations: