We subtract the mutual information from both the discriminator loss and the generator loss. So, the final loss functions of the discriminator and the generator are given as follows:

$$L_D \leftarrow L_D - I(c; G(z, c))$$

$$L_G \leftarrow L_G - I(c; G(z, c))$$

The mutual information can be calculated as follows, where $x = G(z, c)$ is a generated sample:

$$I(c; G(z, c)) = H(c) + \mathbb{E}[\log Q(c \mid x)]$$

First, we define a prior for $c$:

c_prior = 0.10 * tf.ones(dtype=tf.float32, shape=[batch_size, 10])

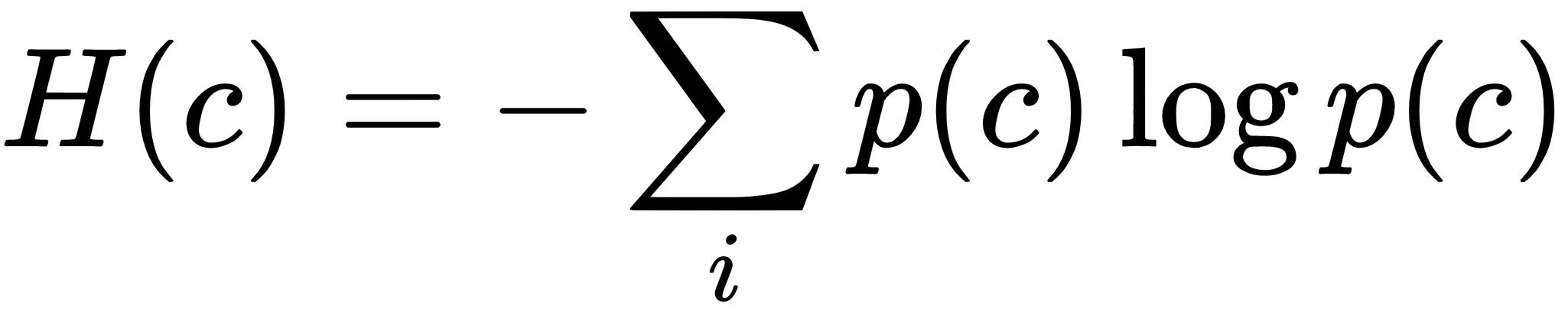

The entropy of $c$ is represented as $H(c)$. We know that the entropy is calculated as $H(c) = -\sum_i p(c_i) \log p(c_i)$:

entropy_of_c = tf.reduce_mean(-tf.reduce_sum(c * tf.log(tf.clip_by_value(c_prior, 1e-12, 1.0)), axis=-1))
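As a quick sanity check on the entropy expression, the following framework-free sketch mirrors the same arithmetic in plain Python. The helper name `entropy_of_codes` and the three-sample batch are hypothetical and used only for illustration; the sketch also assumes that `c` holds one-hot samples drawn from the uniform prior.

```python
import math

def entropy_of_codes(codes, prior, eps=1e-12):
    """Batch mean of -sum_i c_i * log(p_i), with p_i clipped to [eps, 1]."""
    per_sample = []
    for c in codes:
        total = 0.0
        for c_i, p_i in zip(c, prior):
            total += c_i * math.log(min(max(p_i, eps), 1.0))
        per_sample.append(-total)
    return sum(per_sample) / len(per_sample)

num_categories = 10
prior = [0.10] * num_categories  # same uniform prior as c_prior

# Hypothetical batch of three one-hot codes (classes 0, 3 and 7).
codes = [[1.0 if i == k else 0.0 for i in range(num_categories)]
         for k in (0, 3, 7)]

h_c = entropy_of_codes(codes, prior)
print(h_c, math.log(num_categories))
```

With a uniform prior over 10 categories, every one-hot sample contributes $-\log 0.1$, so the batch mean equals $\log 10 \approx 2.303$ nats regardless of which classes were sampled.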

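The conditional term for $c$ when $x$ is given is $\mathbb{E}[\log Q(c \mid x)]$, the expected log-likelihood of the code under the posterior $Q$. It is the negative of the conditional entropy $H(c \mid x)$ as estimated with $Q$, which is why it is added to $H(c)$ rather than subtracted. The code for the conditional term is as follows: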

log_q_c_given_x = tf.reduce_mean(tf.reduce_sum(c * tf.log(tf.clip_by_value(c_posterior_fake, 1e-12, 1.0)), axis=-1))

The mutual information is given as $I(c; G(z, c)) = H(c) + \mathbb{E}[\log Q(c \mid x)]$:

mutual_information = entropy_of_c + log_q_c_given_x
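To see how this estimate behaves, the sketch below reproduces the same sum in plain Python and evaluates it for two extreme posteriors. The function `mutual_information_estimate` and the toy batches are hypothetical; the sketch assumes one-hot codes and a posterior given as per-class probabilities, as `c_posterior_fake` appears to be.

```python
import math

def clipped_log(p, eps=1e-12):
    """log(p) with p clipped to [eps, 1], like tf.clip_by_value followed by tf.log."""
    return math.log(min(max(p, eps), 1.0))

def mutual_information_estimate(codes, posteriors, prior):
    """Batch estimate of H(c) + E[log Q(c|x)] for one-hot codes."""
    n = len(codes)
    # Entropy term: batch mean of -sum_i c_i * log p(c_i).
    entropy = sum(
        -sum(c_i * clipped_log(p_i) for c_i, p_i in zip(c, prior))
        for c in codes
    ) / n
    # Conditional term: batch mean of sum_i c_i * log Q(c_i | x).
    log_q = sum(
        sum(c_i * clipped_log(q_i) for c_i, q_i in zip(c, q))
        for c, q in zip(codes, posteriors)
    ) / n
    return entropy + log_q

num_categories = 10
prior = [0.10] * num_categories
codes = [[1.0 if i == k else 0.0 for i in range(num_categories)]
         for k in (2, 5)]

# Posterior that recovers the code exactly vs. one that ignores x.
mi_perfect = mutual_information_estimate(codes, codes, prior)
mi_uninformative = mutual_information_estimate(codes, [prior, prior], prior)
print(mi_perfect, mi_uninformative)
```

When the posterior recovers the code exactly, the conditional term is zero and the estimate reaches its maximum of $\log 10$. When the posterior simply returns the prior, the two terms cancel and the estimate is zero. Subtracting this quantity from the losses therefore rewards a generator whose outputs make $c$ easy to recover.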

The final loss of the discriminator and the generator is given as:

D_loss = D_loss - mutual_information

G_loss = G_loss - mutual_information